christian.r

Bio

Christian Ruhl, Founders Pledge

I am a Senior Researcher at Founders Pledge, where I work on global catastrophic risks. Previously, I was the program manager for Perry World House's research program on The Future of the Global Order: Power, Technology, and Governance. I'm interested in biosecurity, nuclear weapons, the international security implications of AI, probabilistic forecasting and its applications, history and philosophy of science, and global governance. Please feel free to reach out to me with questions or just to connect!

Thanks for writing this! I like the post a lot. This heuristic is one of the criteria we use to evaluate bio charities at Founders Pledge (see the "Prioritize Pathogen- and Threat-Agnostic Approaches" section starting on p. 87 of my Founders Pledge bio report).

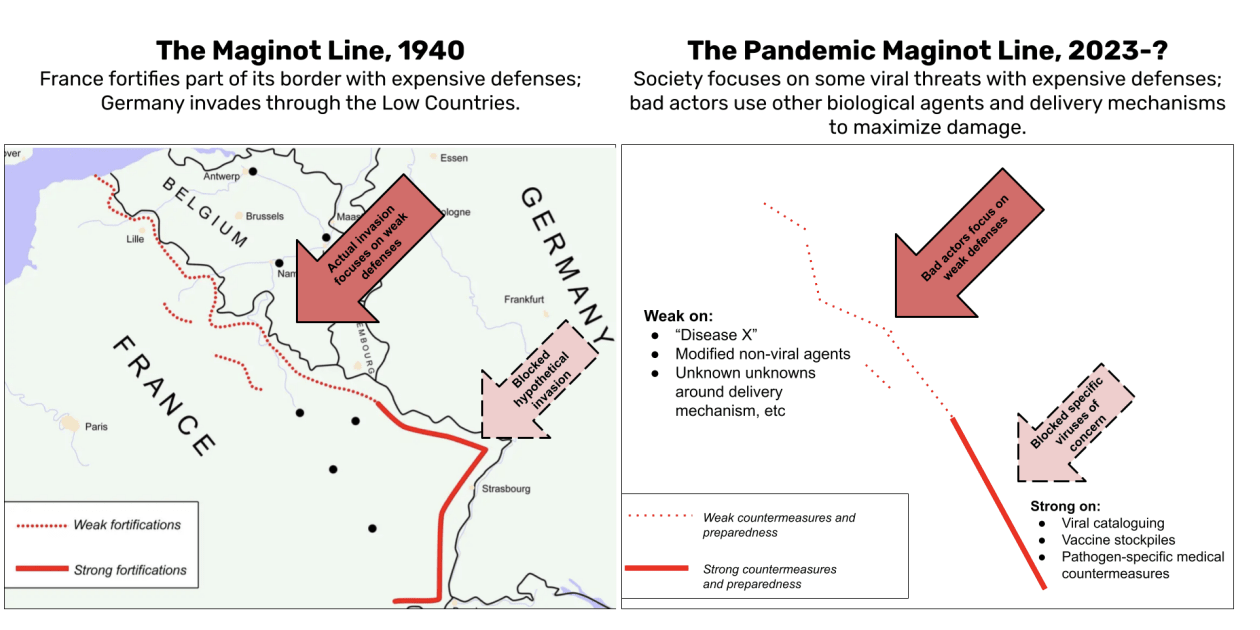

One reason I didn't see listed among your premises is the general point about hedging against uncertainty: we're very uncertain about what a future pandemic might look like and where it will come from, and the threat landscape only becomes more complex with technological advances and intelligent adversaries. One person I talked to for that report said they're especially worried about "pandemic Maginot lines."

I also like the deterrence-by-denial argument that you make...

[broad defenses] might also act as a deterrent because malevolent actors might think: "It doesn't even make sense to try this bioterrorist attack because the broad & passive defense system is so good that it will stop it anyways"

... though I think for it to work you also have to add a premise about the relative risk of substitution, right? That is, if you're pushing bad actors away from biological weapons (BW), what are you pushing them towards, and how does the risk of that new choice of weapon compare to the risk of BW? I think the most likely substitutions (e.g. chem-for-bio substitution, as with Aum Shinrikyo) would probably decrease overall risk.

Hi Ulrik, thanks for this comment! Very much agreed on the communications failures around aerosolized transmission. I wonder how much the mechanics of transmission would enter into a policy discussion around GUV, rather than a simplified "These lights can help suppress outbreaks."

An interesting quote, relevant to bio attention hazards, from an old CNAS report on Aum Shinrikyo:

"This unbroken string of failures with botulinum and anthrax eventually convinced the group that making biological weapons was more difficult than Endo [Seiichi Endo, who ran the BW program] was acknowledging. Asahara [Shoko Asahara, the founder/leader of the group] speculated that American comments on the risk of biological weapons were intended to delude would-be terrorists into pursuing this path."

Footnote source in the report: "Interview with Fumihiro Joyu (21 April 2008)."

Thanks for this post! I'm not sure cyber is a strong example here. Given how little is known publicly about the extent and character of offensive cyber operations, I don't feel able to assess the balance of offense and defense very well.

Longview’s nuclear weapons fund and Founders Pledge’s Global Catastrophic Risks Fund (disclaimer: I manage the GCR Fund). We recently published a long report on nuclear war and philanthropy that may be useful, too. Hope this helps!

Just saw reporting that one of the goals for the Biden-Xi meeting today is "Being able to pick up the phone and talk to one another if there’s a crisis. Being able to make sure our militaries still have contact with one another."

Earlier this year I wrote a Forum post about this, "Call Me, Maybe? Hotlines and Global Catastrophic Risks" (my favorite title), which has a section on U.S.-China crisis communications, in case it's of interest:

"For example, after the establishment of an initial presidential-level communications link in 1997, Chinese leaders did not respond to repeated U.S. contact attempts during the 2001 Hainan Island incident. In this incident, Chinese fighter jets got too close to a U.S. spy plane conducting routine operations, and the U.S. plane had to make an emergency landing on Hainan Island. The U.S. plane contained highly classified technology, and the crew destroyed as much of it as they could (allegedly in part by pouring coffee on the equipment) before being captured and interrogated. Throughout the incident, the U.S. attempted to reach Chinese leadership via the hotline but was unsuccessful, leading U.S. Deputy Secretary of State Richard Armitage to remark that 'it seems to be the case that when very, very difficult issues arise, it is sometimes hard to get the Chinese to answer the phone.'"

There is currently just one Track 2/Track 1.5 diplomatic dialogue between the U.S. and China that focuses on strategic nuclear issues. My rough estimate of what it would cost to start one more is ~$250K/year.

China and India. Beyond that, I'm generally excited about leveraging U.S. alliance dynamics and building global policy advocacy networks, especially for risks from technologies that seem to be getting cheaper and more accessible, e.g. in synthetic biology.

In general, I think it's a trade-off between uncertainty and leverage: GCR interventions pull bigger levers on bigger problems, but in high-uncertainty environments with little feedback. I think evaluations in GCR should probably be framed in terms of relative impact, whereas we can more easily evaluate GHD in terms of absolute impact.

This is not what you asked about, but I generally view GCR interventions as highly relevant to current-generation and near-term health and wellbeing. When we launched the Global Catastrophic Risks Fund last year, we wrote in the prospectus:

The Fund’s grantmaking will take a balanced approach to existential and catastrophic risks. Those who take a longtermist perspective in principle put special weight on existential risks—those that threaten to extinguish or permanently curtail humanity’s potential—even where interventions appear less tractable. Not everyone shares this view, however, and people who care mostly about current generations of humanity may prioritize highly tractable interventions on global catastrophic risks that are not directly “existential”. In practice, however, the two approaches often converge, both on problems and on solutions. A common-sense approach based on simple cost-benefit analysis points us in this direction even in the near-term.

I like that the GCR framing is becoming more popular, e.g. with Open Philanthropy renaming their grant portfolio:

We recently renamed our “Longtermism” grant portfolio to “Global Catastrophic Risks”. We think the new name better reflects our view that AI risk and biorisk aren’t only “longtermist” issues; we think that both could threaten the lives of many people in the near future.

FWIW, @Rosie_Bettle and I also found this surprising and intriguing when looking into far-UVC, and ended up recommending that philanthropists focus more on "wavelength-agnostic" interventions (e.g. policy advocacy for GUV generally).