brentonmayer

Comments (18)

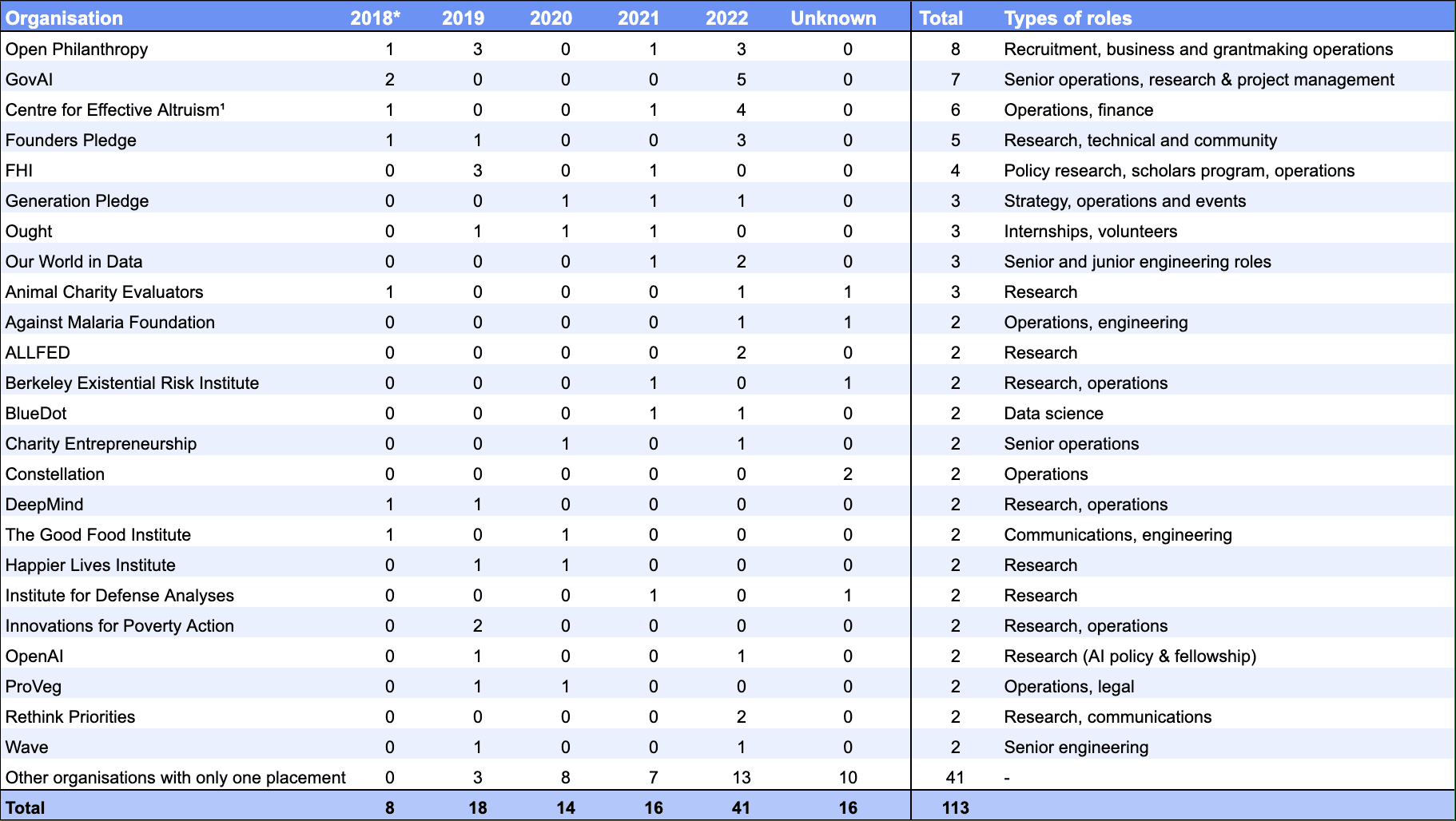

Cody's answer below and mine above give better 'overall' answers to your question, but if you'd like to see something concrete (though incomplete), you could look at this appendix of job board placements we're aware of.

Basically: these just take a really long time!

Lumping 2021 and 2022 progress together into a single public report meant that we saved hundreds of hours of staff time.

A few other things that might be worth mentioning:

- I’m not sure whether we’ll use 1 or 2 year cycles for public annual reviews in future.

- This review (14 pages + appendices) was much less in-depth and so much less expensive to produce than 2020 (42 pages + appendices) or 2019 (109 pages + appendices). If we end up thinking that our public reviews should be more like this going forward then the annual approach would be much less costly.

- In 2021, we only did a ‘mini-annual review’ internally, in which we attempted to keep the time cost of the review relatively low and not open up major strategic questions.

- We didn’t fundraise in 2021.

- I regret not publishing a blog post at the time stating this decision.

Hi Vaidehi - I'm answering here as I was responsible for 80k’s impact evaluation until late last year.

My understanding is that plan changes (previously IASPCs then DIPYs) were a core metric 80K used in previous years to evaluate impact. It seems that there has been a shift to a new metric - CBPCs (see below).

This understanding is a little off. Instead, it’s that in 2019 we decided to switch from IASPCs to DIPYs and CBPCs.

The best place to read about the transition is the mistakes page here, and I think the best places to read detail on how these metrics work are the 2019 review for DIPYs and the 2020 review for CBPCs. (There’s a 2015 blog post on IASPCs.)

~~~

Some more general comments on how I think about this:

A natural way to think about 80k’s impact is as a funnel which culminates in a single metric, which we can relate to as a for-profit company does to revenue.

I haven’t been able to create a metric which is overall strong enough to make me want to rely on it like that.

The closest I’ve come is the DIPY, but it’s got major problems:

- Lags by years.

- Takes hundreds of hours to put together.

- Requires a bunch of judgement calls - these are hard for people without context to assess and have fairly low inter-rater reliability (between people, but also the same people over time).

- Most (not all) of them come from case studies where people are asked questions directly by 80,000 Hours staff. That introduces some sources of error, including from social-desirability bias.

- The case studies it’s based on can’t be shared publicly.

- Captures a small fraction of our impact.

- Doesn’t capture externalities.

(There’s a bit more discussion on impact eval complexities in the 2019 annual review.)

So, rather than thinking in terms of a single metric to optimise, when I think about 80k’s impact and strategy I consider several sources of information and attempt to weigh each of them appropriately given their strengths and weaknesses.

The major ones are listed in the full 2022 annual review, which I’ll copy out here:

- Open Philanthropy EA/LT survey.

- EA Survey responses.

- The 80,000 Hours user survey. A summary of the 2022 user survey is linked in the appendix.

- Our in-depth case study analyses, which produce our top plan changes (last analysed in 2020). EDIT: this process produces the DIPYs as well. I've made a note of this in the public annual review - apologies, doing this earlier might have prevented you getting the impression that we retired them.

- Our own data about how users interact with our services (e.g. our historical metrics linked in the appendix).

- Our and others' impressions of the quality of our visible output.

~~~

On your specific questions:

- I understand that we didn’t make predictions about CBPCs in 2021.

- Otherwise, I think the above is probably the best general answer to give to most of these - but lmk if you have follow ups :)

Ah nice, understood!

I don't think you'll find anything from us which is directly focused on most of these questions. (It's also not especially obvious that this is our comparative advantage within the community.)

But we do have some relevant public content. Much of it is in our annual review, including its appendices.

You also might find these results of the OP EA/LT survey interesting.

Hi - thanks for taking the time to think through these, write them out and share them! We really appreciate getting feedback from people who use our services and who have a sense of how others do.

I work on 80k’s internal systems, including our impact evaluation (which seems relevant to your ideas).

I've made sure that the four points will be seen by the relevant people at 80k for each of these.

Re. #1, I'm confused about whether you're referring more to 'message testing' (i.e. what ideas/framings make our ideas appealing to which audiences) or 'long term follow up with users to see how their careers/lives have changed'. (I can imagine various combinations of these.)

Could you elaborate?

I was interested in seeing a breakdown of the endpoints, before they'd been compressed into the scales AAC uses above.

Jamie kindly pulled this spreadsheet together for me, which I'm sharing (with permission), as I thought it might be helpful to other readers too.

Through overpopulation and excessive consumption, humanity is depleting its natural resources, polluting its habitat, and causing the extinction of other species. Continuing like this will lead to the collapse of civilisation and likely our own extinction.

This one seems very common to me, and sadly people often feel fatalistic about it.

Two things that feeling might come from:

- People rarely talking about aspects of it which are on a positive trajectory (e.g. the population of whales, acid rain, CFC emissions, UN population projections).

- The sense that there are so many related things to solve - such that even if we managed to fix (say) climate change then we'd still see (say) our fisheries cause the collapse of the ocean's ecosystem.

Thanks for the thought!

You might be interested in the analysis we did in 2020. To pull out the phrase that I think most closely captures what you’re after:

~~~

We did a scrappy internal update to our above 2020 analysis, but haven’t prioritised cleaning it up / coming to agreements internally and presenting it externally. (We think that cost effectiveness per FTE has reduced, as we say in the review, but are not sure how much.)

The basic reasoning for that is:

~~~

This appendix of the 2022 review might also be worth looking at - it shows FTEs and a sample of lead metrics for each programme.