Note: in response to some (much appreciated) feedback on this post, the title has been updated from "The Case for Inspiring Disasters" in order to improve clarity. The content, however, remains unchanged.

Summary

If an endurable disaster inspires a response that reduces the chance of even greater future catastrophes or existential risk, then it may be overwhelmingly net-positive in expectation. In this sense, surprising disasters which substantially change our paradigms for risk may not be worth preventing. This should be a major factor considered in the (de)prioritization of certain cause work.

Some disasters spark new preventative work.

Amidst tragedy, there are reasons to be optimistic about the COVID-19 outbreak. An unprecedented disaster has been met with an equally unprecedented response. As of June 6, 2020, over 130,000 papers and preprints related to the virus have been submitted, and governments such as that of the USA are expected to substantially ramp up pandemic preparedness efforts for the future. As a result of COVID-19, disease-related biorisk is becoming much less neglected. This single pandemic has very likely done much more to bring attention to the dangers of disease and the fragility of our global institutions than the EA community as a whole has done in recent years. Given that pandemics are potential catastrophic or existential risks, if an endurable event like COVID-19 leads to even a modest reduction in the risk of greater pandemics in the future, its expected impact may be overwhelmingly net-positive.

This effect isn’t unique to the current pandemic. When high-profile disasters strike, calls for preventative action often follow soon after. For example, after the Second World War, the United Nations was established as a means of preventing future large-scale conflicts. Although the rest of the 20th century was far from free of conflict, the UN played a key role in avoiding wars and has been the principal global body working to prevent them ever since. While a calamity, World War II was at least endurable. But what if it had been delayed by several decades? Then it’s at least plausible that, in the latter half of the 20th century, a world without a UN would have been unable to avoid an alternate World War II, this time with widespread nuclear arms.

These disasters need not even be particularly devastating in an absolute sense. Another striking example is the September 11, 2001 terrorist attacks. In their immediate aftermath, governments worldwide introduced major new security measures, and no attack of the same type has succeeded since.

An illustrative toy model

Consider two types of disasters: endurable and terminal. Suppose that each arrives according to a Poisson process, with rates ε and τ respectively. Should a terminal disaster arrive, humanity goes extinct. Should an endurable one arrive, however, let its severity be drawn from an exponential distribution with rate parameter λ, and if that severity surpasses some threshold α for inspiring preventative future action, let ε and τ each decay toward its base rate by a factor of γ as a result of this response.
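A compact way to read the model, offered as a hedged sketch: assume that "decay toward a base rate by a factor of γ" means each rate's distance from its base rate is multiplied by γ after every inspiring disaster, and write ε_b and τ_b for the base rates, S for a disaster's severity, and n for the number of inspiring disasters so far (these labels are introduced here, not in the post). The key quantities are then:

```latex
\begin{aligned}
\Pr(\text{next disaster is terminal}) &= \frac{\tau}{\varepsilon + \tau} \\
\Pr(S > \alpha) &= e^{-\lambda \alpha}
  \qquad \left(= e^{-1} \approx 0.368 \text{ when } \lambda = \alpha = 1\right) \\
\varepsilon_n &= \varepsilon_b + \gamma^{n} (\varepsilon_0 - \varepsilon_b), \qquad
\tau_n = \tau_b + \gamma^{n} (\tau_0 - \tau_b)
\end{aligned}
```

Under this reading, two consequences follow directly. With ε_0 = 0, no endurable disasters ever occur, so mean survival is simply 1/τ_0. And if τ_0 ≥ τ_b, the terminal hazard never falls below τ_b, so mean survival can never exceed 1/τ_b, which is 50 time units for the terminal base rate of 0.02 used in the simulation.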

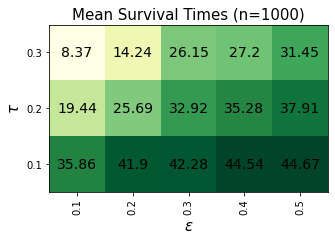

Although overly simple, this model captures why avoiding certain endurable disasters may not be good. Letting λ=α=1, γ=0.99, and using asymptotic base disaster rates of 0.05 and 0.02 for endurable and terminal disasters respectively, here are simulated mean survival times for civilizations under various values of the initial ε and τ.
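A simulation in this spirit can be sketched with a short event-driven (Gillespie-style) loop in Python. This is not the author's code and is not guaranteed to reproduce the post's exact numbers: it assumes that after an inspiring disaster each rate's distance from its base rate is multiplied by γ, and the function names `simulate_survival` and `mean_survival` are hypothetical.

```python
import random
import statistics


def simulate_survival(eps0, tau0, eps_base=0.05, tau_base=0.02,
                      lam=1.0, alpha=1.0, gamma=0.99,
                      rng=None, max_time=1e6):
    """Return the time until the first terminal disaster in one simulated history.

    Endurable and terminal disasters arrive as independent Poisson processes
    with rates eps and tau. Each endurable disaster gets an Exponential(lam)
    severity; if it exceeds alpha, both rates move toward their base rates
    (assumed rule: the distance to the base rate is multiplied by gamma).
    """
    if tau0 <= 0:
        raise ValueError("tau0 must be positive")
    if rng is None:
        rng = random.Random()
    eps, tau, t = eps0, tau0, 0.0
    while t < max_time:  # safety cap in case tau_base is 0 and tau decays toward 0
        total = eps + tau
        t += rng.expovariate(total)  # wait for the next disaster of either kind
        if rng.random() < tau / total:
            return t  # terminal disaster: extinction
        if rng.expovariate(lam) > alpha:  # severe enough to inspire a response
            eps = eps_base + gamma * (eps - eps_base)
            tau = tau_base + gamma * (tau - tau_base)
    return max_time


def mean_survival(eps0, tau0, runs=2000, seed=0, **params):
    """Monte Carlo estimate of mean survival time using a seeded RNG."""
    rng = random.Random(seed)
    return statistics.fmean(
        simulate_survival(eps0, tau0, rng=rng, **params) for _ in range(runs)
    )


if __name__ == "__main__":
    # Parameters from the post: lambda = alpha = 1, gamma = 0.99,
    # base rates 0.05 (endurable) and 0.02 (terminal).
    for tau0 in (0.05, 0.1):
        cells = [f"eps0={e}: {mean_survival(e, tau0, runs=1000):.1f}"
                 for e in (0.0, 1.0, 10.0)]
        print(f"tau0={tau0} -> " + ", ".join(cells))
```

Because both rates only ever move toward their bases, a larger initial ε (with τ_0 above its base) can only pull τ down sooner; it never raises the terminal hazard, which is the mechanism behind the claim that more endurable disasters can lengthen mean survival.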

Obviously, increasing τ consistently reduces mean survival time. But increasing ε, despite producing more endurable disasters, causes large increases in mean survival time under this model regardless of τ. So long as the wellbeing of future civilization is substantially valued and human extinction is seen as much more tragic than an endurable disaster (there is a strong case for this viewpoint), this suggests that endurable disasters which inspire action to prevent future catastrophes are highly valuable.

Implications

I argue that the potential for endurable disasters to inspire actions that can prevent more devastating disasters in the future should be a major factor in evaluating the effectiveness of working on certain causes. This perspective suggests that working to prevent these risks may be overwhelmingly net-negative in expectation. However, this perspective does not diminish the importance of work on (1) avoiding risks that are likely to be terminal (e.g. AI), (2) preventing catastrophic risks that aren’t likely to inspire successful preventative responses (e.g. nuclear war), (3) avoiding risks whose impacts are delayed with respect to their cause (e.g. climate change), and (4) coping strategies for risks which may not be preventable (e.g. natural disasters).

However, some highly speculative examples of causes for which this model suggests preventative work may actually be counterproductive include:

- Pandemic flu

- Lone-wolf terrorism with weapons of mass destruction

- Lethal autonomous weapons

- Safety failures in self-driving cars

___

Thanks to Eric Li for discussion and feedback on this post.

Maybe this wasn't your intent, but the title is a bit ambiguous about the word "inspire" - it seems as though you might be advocating for actions that inspire disasters, as opposed to making the case for allowing disasters that are themselves inspiring.

Thanks for the observation. The idea was definitely not to say that promoting disasters is a pragmatic course of action, but rather that disasters which inspire us to prevent future risks can be good. I hope that the first line of the post clears up any potential confusion.

I'm pretty averse to making major changes to a post, but for the sake of preventing possible future confusion, I opted to change 'Inspiring' to 'Inspirational' in the title.

[Update: in response to some additional feedback, another update was made. See the first line of the post.]

I think the word "inspirational" isn't ideal either, and in fact not very different from "inspiring". And I think the title matters massively for the interpretation of an article. So I think you haven't appropriately addressed David's legitimate point. I wouldn't use "inspiring", "inspirational", or similar words.

I agree that "inspirational" is still not optimal because of its positive connotation, but I think it is fair to say that stecas was trying to improve it and that the update successfully removed the possibility of understanding the title as a call to action (old title was something like "Cause Prioritization by Inspiring Disasters", where "Inspiring" was meant as an adjective, but could be understood as a gerund).

Some ideas:

I have objections to this post similar to those of Khorton & cwbakerlee. I think it shows how the limits of human reason make utilitarianism a very dangerous idea (which may nevertheless be correct), but I don’t want to discuss that further here. Rather, let’s assume for the sake of argument that you are factually & morally correct. What can we learn from disasters, and the world’s reaction to them, that we can reproduce without the negative effects of the disaster? I am thinking of anything from faking a disaster (wouldn’t conspiracy theorists love that) to increasing international cooperation. What are the key characteristics of a pandemic or a war that make the world change for the better? Is the suffering an absolute necessity?

I downvoted this because it seems uncooperative with the rest of humanity. The general vibe of the summary seems to be "let people suffer so they'll learn their lesson." If that's not what you meant, though, I'm interested to hear what you actually think.

Edit: I appreciate the updated title!

I think it's pretty safe to say that

is a strawperson for this post. This post argues that the ability of certain disasters to spark future preventative work should be a factor in cause prioritization work. If that argument cannot be properly made by discussing examples or running a simulation, then I do not know how it could be. I would be interested in how you would recommend discussing this if this post was not the right way to do so.

I share in your hope that the attention to biorisks brought about by SARS-CoV-2 will make future generations safer and prevent even more horrible catastrophes from coming to pass.

However, I strongly disagree with your post, and I think you would have done well to heavily caveat the conclusion that this pandemic and other "endurable" disasters "may be overwhelmingly net-positive in expectation."

Principally, while your core claim might hold water in some utilitarian analyses under certain assumptions, it almost definitely would be greeted with unambiguous opprobrium by other ethical systems, including the "common-sense morality" espoused by most people. As you note (but only in passing), this pandemic truly is an abject "tragedy."

Given moral uncertainty, I think that, when making a claim as contentious as this one, it's extremely important to take the time to explicitly consider it by the lights of several plausible ethical standards rather than applying just a single yardstick.

I suspect this lack of due consideration of other mainstream ethical systems underlies Khorton's objection that the post "seems uncooperative with the rest of humanity."

In addition, for what it's worth, I would challenge your argument on its own terms. I'm sad to say that I'm far from convinced that the current pandemic will end up making us safer from worse catastrophes down the line. For example, it's very possible that a surge in infectious disease research will lead to a rise in the number of scientists unilaterally performing dangerous experiments with pathogens and the likelihood of consequential accidental lab releases. (For more on this, I recommend Christian Enemark's book Biosecurity Dilemmas, particularly the first few chapters.)

These are thorny issues, and I'd be more than happy to discuss all this offline if you'd like!

Hmm, FWIW I didn’t think for one second that the author suggested inspiring a disaster, and I think it’s completely fine to post a short argument that doesn’t go full moral uncertainty. The audience is hardly unfamiliar with utilitarian reasoning, and a sketch of an original utilitarian argument shouldn’t be understood as an endorsement or call to action. No?

Thanks, cwbakerlee, for the comment. Maybe this is partly due to how much more time I've been spending on LessWrong than on the EA Forum recently, but I have been surprised by the characterization of this post as dismissive of the severity of some disasters. This isn't what I was hoping for. My mindset in writing it was one of optimism. It was inspired directly by this post plus another conversation I had with a friend about how, if self-driving cars turn out to be riddled with failures, it could lend much more credibility to AI safety work.

I didn't intend for this to be a long post, but if I wrote it again, I'd include a section on "reasons this may not be the case." But I would not soften the message that endurable disasters may be overwhelmingly net-positive in expectation. I disagree that non-utilitarian moral systems would generally dismiss the main point of this post. I think that rejecting the idea that disasters can be net-good if they prevent bigger future disasters would be pretty extreme even by commonsense standards. This post does not suggest that these disasters should be caused on purpose. To anyone who points out the inhumanity of sanctioning a constructive disaster, one can easily point out the even greater inhumanity of trading a small number of deaths for a large one. I wouldn't make appealing to viewpoints this myopic the goal of a post like this. Even considering moral uncertainty, I would discount this viewpoint almost entirely, much as I would discount the idea that pulling the lever in the trolley problem is wrong. To the extent that the inspiring-disaster thesis is right (on its own terms), it's an important consideration. And if it is, I don't find very tenable the idea that it should be too taboo to write a post about.

About COVID-19 in particular, I am not an expert, but I would probably ascribe a fairly low prior to the possibility that increased risks from ineffective containment of novel pathogens in labs would outweigh reduced risks from other adaptations in prevention, epidemiology, isolation, medical supply chains, and vaccine development. I am aware of speculation that the current outbreak originated in a laboratory in Wuhan, but my understanding is that this is not seen as well substantiated. Empirically, over the past few decades, it seems that far more deaths have been due to poorly handled "naturally" occurring disease outbreaks than to pathogens escaping labs.

When I mentioned the classic trolley problem, that was not to say that it's analogous. The analogous trolley problem would be a trolley barreling down a track that splits in two and rejoins. On the current course of the trolley there are a number of people, drawn from distribution X, who will stop the trolley if hit. But if the trolley is diverted to the other side of the fork, it will hit a number of people drawn from distribution Y. The question to ask would be: "What type of difference between X and Y would cause you to not pull the lever and instead work on finding other levers to pull?" Even a Kantian ought to agree that not pulling the lever is good if the mean of Y is greater than the mean of X.

This seems quite ungenerous. Yes, you can construe this as having a negative 'vibe'. But it's far from the only such possible 'vibe'! The idea of exposure to mild cases of a bad thing yielding future protection through behavioral change is widespread in medicine: think of vaccination with live virus changing the behavior of your immune system, or a mild heart attack causing an unhealthy young person to change their habits.

But even if the 'vibe' was bad, in general we should try to analyze things logically, not reject ideas because they pattern-match to an unpleasant-sounding idea. If it were the case that global pandemics are now less of an x-risk, owning up to it doesn't make it worse.

I think that depends a lot on framing. E.g. if this is just a prediction of future events, it sounds less objectionable to other moral systems imo b/c it's not making any moral claims (perhaps some by implication, as this forum leans utilitarian)

In the case of making predictions, I'd strongly bias to say things I think are true even if they end up being inconvenient, if they are action relevant (most controversial topics are not action relevant, so I think people should avoid them). But this might be important for how to weigh different risks against each other! Perhaps I'm decoupling too much tho

Aside: I don't necessarily think the post's claim is true, because I think certain other things are made worse by events like this which contributes to long-run xrisk. I'm very uncertain tho, so seems worth thinking about, though maybe not in a semi-public forum

Thanks for making this case, and for directly putting your idea in a concrete model. I share the intuition that humanity (unfortunately) relies way too much on recent and very compelling experience to prioritise problems.

Some thoughts:

1) Catastrophes as risk factors: humanity will be weakened by a catastrophe and less able to respond to potential x-risks for some time

2) In many cases we don't need the whole of humanity to realise the need for action (like almost everyone does with the current pandemic), but instead convincing small groups of experts is enough (and they can be convinced based on arguments)

3) Investments in field building and "practice" catastrophes might be very valuable for a cause like pandemic preparedness to get off the ground, and be worth the lack of buy-in of bigger parts of humanity

4) You may expect that, even without global catastrophes, humanity as a whole will come to terms with the prospect of x-risks in the coming decades. It might then not be worth it to accept a slight risk of fatally underestimating an unlikely x-risk.

Thanks for the comment. I think that 2 and 4 are good points, and 1 and 3 are great ones. In particular, I think that one of the more important factors that the toy model doesn't capture is the nonindependence of the arrival of disasters. Disasters that are large enough are likely to have a destabilizing effect which breeds other disasters. An example might be that WWII was in large part a direct consequence of WWI and a global depression. I agree that all of these should also be part of a more complete model.