Table of contents

- The American Empire Has Alzheimer's.

- Prediction Markets: VC money, searching for DraftKings, predatory pricing, and the race to be last.

- For skilled forecasters, crypto prediction markets are much more profitable than forecasting platforms.

- Best forecasting pieces from 2021

You can sign up for or view this newsletter on Substack, where there are already a few thoughtful comments. To some extent, this edition of the newsletter is a bit more centered on prediction markets, and more targeted to prediction market developers. But I still think it'll be interesting and valuable to EA readers.

The American Empire has Alzheimer's

It is 1964. Sherman Kent is a senior intelligence analyst. While doing some rudimentary experiments, he realizes that analysts themselves disagree about the degree of confidence that words convey. He suggests that analysts clearly state how certain they are of their conclusions, by using words that correspond to probabilities. This will allow keeping track of how analysts do, while being simple enough for less detail-focused politicians to understand. His proposal encounters deep resistance, and doesn't get implemented.

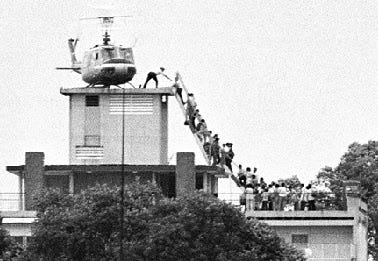

It is the 30th of April of 1975. With the fall of Saigon, the US finally pulls out of a bloody war in Vietnam. There are embarrassing images of people being flown out of the US embassy at the last moment. Biden is a newly-minted senator from Delaware.

It is 2001. The US intelligence agencies are very embarrassed by not having been able to predict the September 11 attacks. The position of the Director of National Intelligence, and an associated Office of the Director of National Intelligence, is established to coordinate all intelligence agencies to do better in the future.

At the same time, Robin Hanson pushes for a "Policy Analysis Market", which would have covered topics of geopolitical interest. This proposal becomes too controversial, and gets dropped.

It is 2008. A bunch of Nobel Prize winners and other luminaries publish a letter urging the Commodity Futures Trading Commission (CFTC) to make prediction markets more legal.

It is 2010. IARPA, an intelligence agency modeled after DARPA, which incubates high-risk, high-payoff projects, creates a tournament to find out which forecasting setups do best. Philip Tetlock had done some experiments which found that pre-selecting participants does pretty well. He repeatedly wins the IARPA tournament, and creates Good Judgment Inc to provide the services of his preselected high-performance forecasters. The US doesn't buy their services, and Good Judgment Inc survives by selling very expensive training sessions to clients that have too much money.

It is 2013. The CFTC shuts down Intrade, one of the only prediction market platforms in the US.

It is 2017 and onwards. As Ethereum and crypto more generally become more mainstream, some prediction markets on top of crypto-blockchains start to pop up, such as Augur, Omen, and later, Polymarket. As cryptocurrencies become more and more popular, the fees on the original Ethereum blockchain increase so much that placing bets on these prediction markets becomes too expensive. Polymarket survives by moving to a "layer two" blockchain, a less paranoidly secure blockchain that mimics the Ethereum blockchain, and which allows users to continue betting.

It is the summer of 2021. Biden makes incredibly overconfident assertions about the Afghan government holding on against the Taliban. It doesn't. There are images of the evacuation from Kabul, Afghanistan which look very similar to the evacuation from Saigon, Vietnam. This is all very embarrassing to the Biden administration, and his approval rating drops drastically.

“There’s going to be no circumstance where you see people being lifted off the roof of an embassy in the — of the United States from Afghanistan. [...] the likelihood there’s going to be the Taliban overrunning everything and owning the whole country is highly unlikely.” — Biden, July 08, 2021

Come Christmas of 2021, the CFTC gives Americans the gift of disappointment by shutting down Polymarket in the US, one of the few places where real money was being traded around topics of extreme interest to Americans, like US covid cases.

The picture I paint above is somewhat reductionist, and omits some important details. For instance, for a while the US had, and maybe still has, an intelligence community prediction market. More recently, the United Kingdom also has a "Cosmic Bazaar", a forecasting tournament using Cultivate Labs infrastructure. Dominic Cummings, who was Chief Adviser to Prime Minister Boris Johnson, talked of reading Scott Alexander's blogposts about Covid. There is also forecasting done with the Czech Republic, the Dutch and the OSCE. But I don't yet think that forecasters are meaningfully driving policy. The forecasting community is close-knit, and I think there would be conversations if policymakers were regularly looking at forecasts; if you disagree, please get in touch.

Still, even after adjusting for my predisposition for pessimism, I think that the broad strokes of the above overview are about right. The US government is not being a "strong optimizer", whatever that means. In fact, the US government is being fucking dumb. But it took me a while to crystallize this, and to notice how some of the dysfunctional aspects of the forecasting landscape have the same root cause. PredictIt's fees are so high (10%) because until very recently, they didn't have competition to keep them on their toes. Metaculus is, to some extent, structured around fake internet points because doing the real money version would have been bureaucratically exhausting.

Prediction Markets: VC money, searching for DraftKings, predatory pricing, and the race to be last

VC money

In the past few years, a few startups have joined the prediction market arena, chiefly Polymarket, Hedgehog Markets and Kalshi. Augur, previously a crypto project with very strong decentralization mechanisms, also sold out and spun off a more commercially oriented site, Augur Turbo, more focused on sports, crypto and entertainment. There were also a whole lot of less successful copycats, like PolkaMarkets.

These projects have gotten a fair amount of funding. Polymarket got an initial $4M investment round, and was reportedly valued at $1B in later talks. Kalshi got $30M in funding from, among others, Sequoia Capital. Augur Turbo got an investment of $1M for its liquidity program from Polygon, the network on which it and Polymarket run. And Hedgehog Markets got a $3.5M investment, as well as $500k through the sale of NFTs that allow users to participate in exclusive walled-off markets.

Searching for DraftKings

What is driving that valuation and initial investment? Well, for comparison, DraftKings, one of the biggest sports betting markets around, was valued at $20B before its stock price took a beating after an adversarial report by Hindenburg Research, a short-seller. So being a similarly large player in the nascent prediction markets field could be worth a significant fraction of that. Even being the "DraftKings of crypto", i.e., the largest and most liquid player for sports within the crypto ecosystem, could potentially be worth quite a bit.

Predatory pricing and the race to be last

But first, these startups have to capture the market. And the way they are trying to do this is by subsidizing participation. That is, they create markets whose initial probabilities are off, giving users the chance to make money by participating in the market. I'm most familiar with how Polymarket has done this; I think they have overall lost money even though they have seen tens of millions in volume. My guess is that some other platforms have likewise lost a fair bit of money subsidizing volume.

But this creates a race to be the last to subsidize one's own markets, and steal the competition's user base. Then, perhaps, the last one standing could monopolize the business and raise fees.

Alternative profit models

In short, it looks like right now there is money sloshing around, but eventually the necessary sucker at the table will tend to be the users. And this incentivizes markets on sports, NFTs, or celebrities, rather than on war, politics, or technological developments, because they have more mainstream appeal. So the core, amoral business insight here is that all of these platforms are vying for the same slice of the market: the sports and crypto markets. Or, in other words, entertainment for those newly rich off the crypto-boom.

What would an alternative business model be? Well, on the one hand, the different prediction markets could aim for different niches. Hedgehog Markets could aim to entertain people heavily into the cryptocurrency scene. Polymarket could aim to be the best at real-world predictions. Augur could return to its original vision and be the go-to place for paranoid users interested in security. And FTX could offer the best derivatives on cryptocurrency products.

Personally, the profit model that I'd like to see is one in which the prediction market platforms extract the profit not from their users, but rather from the people who are consuming the odds which the betting produces as a side-effect. For instance, a large NGO such as Open Philanthropy might be interested in a variety of geopolitical events, and could subsidize a market on them. This would involve providing both the liquidity ($300 to a few thousand per market), and some money to support the prediction market platform. As the decentralized finance ecosystem develops, instead of a central organization paying for public goods, DAOs might form for this purpose.

Under such a profit model, the prediction market platform would benefit in proportion to how much value it generates in the world. For instance, right now Polymarket creates value by producing common knowledge about sensible default probabilities to have around covid. Having sponsors which pay in proportion to how much value these markets produce, and which are willing to pay to create valuable markets, might allow platforms such as Polymarket to capture a fraction of the value they create.

That kind of a profit model could, I think, make humanity more formidable.

For skilled forecasters, crypto prediction markets are much more profitable than forecasting platforms.

A rift divides the human forecasting space. In the first corner, we have forecasting platforms, which are legal throughout the land, and which see lower volumes. Forecasters play by giving their probabilities, and checking whether these are more right than other participants'. They are often rewarded according to a proper scoring rule so that they're incentivized to give their true and honest best guess, but this often fails in amusing ways. Chief amongst these platforms are Metaculus and Good Judgment Open, which have many questions and whose communities have historically been open and welcoming. Forecasting platforms tend to have socially useful questions on, e.g., geopolitics, technology developments, or risks to society.
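To make "proper scoring rule" concrete, here is a minimal Python sketch. It is not any platform's actual scoring code: the function names are hypothetical, and it only covers a single binary question scored in isolation. It checks numerically that, under the Brier and logarithmic rules, a forecaster who believes an event has a 70% chance gets the best expected score by reporting 70%.

```python
import math

def brier_score(forecast, outcome):
    """Squared error for a binary question (outcome is 0 or 1); lower is better."""
    return (forecast - outcome) ** 2

def log_score(forecast, outcome):
    """Log of the probability assigned to what actually happened; higher is better."""
    return math.log(forecast if outcome == 1 else 1.0 - forecast)

def expected_score(score_fn, report, belief):
    """Expected score of reporting `report` when the event's true chance is `belief`."""
    return belief * score_fn(report, 1) + (1.0 - belief) * score_fn(report, 0)

def best_report(score_fn, belief, lower_is_better):
    """Grid-search the report (1% to 99%) that gives the best expected score."""
    candidates = [i / 100 for i in range(1, 100)]
    sign = 1.0 if lower_is_better else -1.0
    return min(candidates, key=lambda r: sign * expected_score(score_fn, r, belief))

belief = 0.7  # illustrative value, not taken from any real question
print(best_report(brier_score, belief, lower_is_better=True))
print(best_report(log_score, belief, lower_is_better=False))
```

Both searches land on the honest report of 0.7. The sketch says nothing about the ways incentives can still go wrong once scores are aggregated across questions or turned into rankings and prizes.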

In the opposing corner, we have prediction markets, where participants put their money where their mouth is, and earn money if they turn out to be right. The communities can also be welcoming in their own ways, though this is partially because good bettors are looking for less experienced bettors to fleece. Prediction markets are of dubious legality, mostly because some apparatchiks under the Commodity Futures Trading Commission (CFTC) have a hard-on against them. In principle, real-money prediction markets could have questions on any topic, but they tend to have questions that have more mainstream appeal, such as on sports.

In recent times, it has become noticeable that prediction markets, and in particular crypto prediction markets, are significantly more profitable to participants than forecasting platforms. So some forecasters who trained themselves on Metaculus then flocked to try their luck on Polymarket, and the best ones made significantly more money than they could have made on Metaculus.

For reference, the current monetary rewards given in forecasting platforms are roughly as follows:

- Metaculus: On the order of $1000 per tournament.

- Hypermind: On the order of $5000 per tournament.

- Replication Markets: Around $150k in total, for around 10-20 rounds of forecasting, each of which contained many questions.

- CSET-Foretell: $120k a year, or $200 per forecaster per month.

Note that these are per tournament, so if a $1000 Metaculus tournament contains around ten questions, and ten forecasters participate in it, this amounts to $10 per forecaster per question. If a forecaster spends more than an hour per question, they are then earning less than minimum wage.
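The back-of-the-envelope arithmetic above can be spelled out in a few lines of Python. This is a rough sketch that assumes the prize pool is split evenly across forecasters and questions, which real tournaments don't do (payouts are typically skewed towards the top of the leaderboard). The function names are made up for illustration.

```python
def reward_per_forecaster_question(prize_pool, n_questions, n_forecasters):
    """Even-split reward, in dollars, per forecaster per question (an assumption)."""
    return prize_pool / (n_questions * n_forecasters)

def implied_hourly_rate(prize_pool, n_questions, n_forecasters, hours_per_question):
    """Dollars per hour if each forecaster spends `hours_per_question` on each question."""
    per_question = reward_per_forecaster_question(prize_pool, n_questions, n_forecasters)
    return per_question / hours_per_question

# The example from the text: a $1000 tournament, ten questions, ten forecasters.
print(reward_per_forecaster_question(1000, 10, 10))  # 10.0 dollars per forecaster per question
print(implied_hourly_rate(1000, 10, 10, hours_per_question=2))  # 5.0 dollars per hour
```

At one hour per question the implied rate is $10 an hour, and it falls proportionally with every extra hour spent.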

In contrast, prediction markets such as PredictIt or Polymarket often see upwards of $100k traded on individual markets. So the top predictors can and do make a comfortable living betting, in a way that would be difficult or impossible to do on forecasting platforms.

For instance, one of the top earners on Replication Markets earned around $10k. But he spent significant time programming tools to automate his trading, and it seems like he put a lot of love into his forecasting. If he had invested that labor into trading on Polymarket and building tools to do so, or if he had simply sold his labor as a programmer, he would have earned significantly more money.

So the incentives are not pointing in the right direction. Capable forecasters can earn significantly more by predicting societally-useless sports stuff, or simply by arbitraging between the big European sports-houses and crypto markets. Meanwhile, the people who remain forecasting socially useful stuff on Metaculus, like whether Russia will invade Ukraine or whether there will be any new nuclear explosions in wartime, do so to a large extent out of the goodness of their heart.

I think that the clear solution to this is to either increase the overall willingness to pay forecasters, or to be willing to subsidize liquidity in prediction markets for questions that are of general value.

Best pieces on forecasting during 2021

Practice

Predicting Politics is generally worth reading, starting with How to get good, Mining the Silver Lining of the Trump Presidency, and Boring is back, baby.

Avraham Eisenberg wrote Tales from Prediction Markets, gathering a few interesting anecdotes.

Cultured meat predictions were overly optimistic. "Overall, the state of these predictions suggest very systematic overconfidence."

Charles Dillon of Rethink Priorities and SimonM looked at How does forecast quantity impact forecast quality on Metaculus? More forecasters increase forecast quality, but the effect is small beyond 10 or so forecasters.

David Friedman looked at whether past IPCC temperature projections/predictions have been accurate.

Violating the EMH — Prediction Markets gave specific examples in which prediction markets appeared to violate the efficient market hypothesis.

How I Made $10k Predicting Which Studies Will Replicate. The author started out with a simple quantitative model based on Altmejd et al. (2019), and went on from there.

Together with my coauthors Misha Yagudin and Eli Lifland, I posted a fairly thorough investigation into Prediction Markets in The Corporate Setting. The academic consensus seems to overstate their benefits and promise. Lack of good tech, the difficulty of writing good and informative questions, and social disruptiveness are likely to be among the reasons contributing to their failure. In the end, our report recommended not having company-internal prediction markets.

Charles Dillon wrote Data on forecasting accuracy across different time horizons and levels of forecaster experience, using Metaculus and PredictionBook data, and building on earlier work by niplav.

Futurism

Incentivizing forecasting via social media explored the implications of integrating forecasting functionality with social media platforms.

The Machine Intelligence Research Institute's research on agent foundations shed some light on probability theory more generally. Radical Probabilism and Reflective Bayesianism seem particularly worth highlighting, as does Probability theory and logical induction as lenses.

Daniel Kokotajlo wrote What 2026 looks like (Daniel's Median Future), extrapolating the performance of models like GPT-3 year by year. Ben Snodin wrote My attempt to think about AI timelines.

The Machine Intelligence Research Institute has published a few conversations on future AI capabilities. Of these, readers of this newsletter might be particularly interested in the Conversation on technology forecasting and gradualism.

Theory

There was some back and forth online on Kelly betting:

- Kelly isn't just about logarithmic utility

- Kelly is just about logarithmic utility

- Never Go Full Kelly

- Why the Kelly criterion kind of sucks

See also: Proebsting's paradox, a thought experiment in which naïve Kelly bettors are led to ruin; Learning Performance of Prediction Markets with Kelly Betting, which proves that prediction markets with Kelly bettors update similarly to Bayes' law; and this blog post, which illustrates that paper's point in a more approachable manner.

After reading these posts, I'm left with the conclusion that Kelly betting is an interesting yet ultimately limited tool. One encounters the limits of applicability as soon as one is exposed to many bets at once, or to the chance that the bets may change in favorable or unfavorable directions.
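For readers who haven't seen the Kelly criterion written out, here is a minimal Python sketch of the textbook single-bet case that the posts above debate. The setting is my own simplification: one binary market, a YES share bought at price `price` that pays out 1 if the event happens, no fees, and a bettor who is certain of their probability `p`. The function names are hypothetical. Under these assumptions, the fraction of bankroll that maximizes expected log growth is (p - price) / (1 - price).

```python
import math

def kelly_fraction(p, price):
    """Kelly fraction of bankroll to spend on YES shares (0 if there is no edge)."""
    if p <= price:
        return 0.0  # no edge on the YES side; the NO side is not handled in this sketch
    return (p - price) / (1.0 - price)

def expected_log_growth(fraction, p, price):
    """Expected log of (bankroll after / bankroll before) when staking `fraction`."""
    net_odds = (1.0 - price) / price  # profit per dollar staked if YES resolves
    win = math.log(1.0 + fraction * net_odds)
    lose = math.log(1.0 - fraction)
    return p * win + (1.0 - p) * lose

# Illustrative numbers: the bettor believes 60%, the market price is 50 cents.
p, price = 0.6, 0.5
print(kelly_fraction(p, price))

# Numerical check: a grid search over stakes should agree with the closed form.
grid = [i / 1000 for i in range(0, 1000)]
print(max(grid, key=lambda f: expected_log_growth(f, p, price)))
```

The closed form and the grid search both land at a stake of about 20% of bankroll. The sketch covers only one bet at a fixed price, so the limits described above, such as many simultaneous bets or odds that may move, are exactly what it leaves out.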

Alex Lawsen and I published Alignment Problems With Current Forecasting Platforms, outlining problems with the incentive mechanisms in almost all non-prediction market platforms.

The Generalized Product Rule outlines how a certain step in Cox's theorem—the step which proves that probability updating is multiplicative—can be applied to other problems as well.

Jaime Sevilla took a deep dive into aggregating forecasts.

Eli Lifland published an article on bottlenecks to more impactful forecasting. It crystallizes his knowledge from a few years of his forecasting on Metaculus, CSET-Foretell and Good Judgment Open.

I always appreciate your newsletter, and agree with your grim assessment of prediction markets' long-suffering history. Here is what I am left wondering after reading this edition:

Okay, so the USA has mostly dropped the ball on this for forty years. But what about every other country? China seems pretty ambitious and willing to make things happen in order to secure their place on the world stage -- where is the CCP-subsidized market hive-mind driving all the crucial central planning decisions? Well, maybe a prediction market doesn't play well with wanting to exert lots of top-down control and suppress free speech. Okay, what about countries in Europe? What about Taiwan or Singapore? Nobody has yet achieved some kind of Hansonian utopia, so what is the limiting factor?

Of course I would be eager to hear your thoughts on what the key limiting factor(s) might be.

In your last newsletter, you remarked, "It's kind of interesting how $40k [given away by Astral Codex Ten Grants] feels like a significant quantity of all the funding there is for small experiments in the forecasting space. This is probably suboptimal." What prediction-market experiments would you be most interested to see run?

This is a lot of questions, so no pressure to respond to everything -- I mostly intend this as food for thought. Also, I wrote this post casually, but let me know if you think it would be good to rework as a top-level post (ie, if you think these are good questions that people should be thinking more about).

Seconding Nuño's assessment that this comment is awesome. While waiting for his response I'll butt in with some quick off-the-cuff takes of my own.

On why no countries use prediction markets / forecasting to make crucial decisions:

My first reaction is "idk, but your comment already provides a really great breakdown of options that I would be excited to be turned into a top-level post."

If I had to guess I think it's some combination of universal human biases and fundamental issues with the value of prediction markets at present. On human biases, it seems like many people have a distaste for markets on important topics and quantified forecasting is an unnatural-feeling activity for many people to partake in. On fundamental issues, I'd refer to https://forum.effectivealtruism.org/posts/dQhjwHA7LhfE8YpYF/prediction-markets-in-the-corporate-setting which you mentioned and https://forum.effectivealtruism.org/posts/E4QnGsXLEEcNysADT/issues-with-futarchy for ideas.

On what things I'd like to see done overall, I'd point to the solution ideas and conclusion section of my post https://forum.effectivealtruism.org/posts/S2vfrZsFHn7Wy4ocm/bottlenecks-to-more-impactful-crowd-forecasting-2. In particular:

The question you ask about "studying forecasting to benefit EA" vs. "prediction markets as an EA cause area" is also important. I'm inclined to favor interventions closer to "studying forecasting to benefit EA" at present (though I might frame it more as "improve EA's wisdom/decision-making via various means including forecasting", h/t QURI/Ozzie for influence here) because I feel we're a relatively young and growing movement with a lot of resources (money + people) to use and not much clarity on how to do it best. Once we get better at this ourselves, e.g. forecasting platforms and prediction markets have clearly substantially improved important EA decisions, I'd feel it's more time for "prediction markets/forecasting platforms improving non-EA's decision-making" to be an EA cause area. I'm open to changing my mind on this e.g. if I see more evidence of forecasting having already improved important EA decisions. https://forum.effectivealtruism.org/posts/Ds2PCjKgztXtQrqAF/disentangling-improving-institutional-decision-making-2 and https://forum.effectivealtruism.org/posts/YpaQcARgLHFNBgyGa/prioritization-research-for-advancing-wisdom-and are good pointers on this overall question as well.

Hypotheses for the global lack of adoption of prediction markets/probabilistic methods

Of the hypotheses you outline, the ones that sound the most plausible, or that most hit the nail on the head, are:

But, to some extent, all your hypotheses have something to them. It's also not clear how one would go about differentiating between the different hypotheses. "Good judgment", sure, but we still don't really have the tools for thought to be reliably able to distinguish between these kinds of hypotheses, and I dislike punditry.

Prediction market experiments and other cool things

More on this to come in the next edition of the newsletter!

More Wagner

Yes, but I'd be more interested in getting something more comprehensive/structured; these points seem pretty scattered. To be clear, I do greatly prefer scattered thoughts over nothing.

This comment is glorious. I'll take some time to answer, though.

My general take on this space is:

Re 1: the disconnect between decision makers and forecasting platforms. I think the problem comes in two directions.

Re 2: someone saying "X has a y% chance of happening" is not (usually) especially valuable to a decision maker. (Especially since the market is already accounting for what it expects the decision maker to do). Models (even fairly poor ones) often have more use to a decision maker, since they can see how their decision might affect the outcome. [Yes, there are ideas like counterfactual markets, but none of those ideas can really capture the full space of possibilities and will also just fragment liquidity]. The best you can really do is extract a model statistically (when indicator goes up, forecast goes down, so indicator might be saying something about event).

Re 3: It would take a while for me to summarise the evidence here, but I think there's a pretty strong case that central banks (e.g. the Federal Reserve in the US) are increasingly looking at market indicators when setting monetary policy. I think CEOs and other decision makers in business look at market prices as indicators when deciding the direction of their companies. (Although it's hard to fully describe this as a prediction market as much as "looking at the competition", I think with some time I could articulate what I mean.)

Thanks for this excellent article. I found it very knowledgeable and informative.

Minor:

Fwiw, I would use more moderate language, since I think this is a bit of an unnecessary distraction.

Point taken. I've created a twitter poll here, but I'll probably use more moderate language in the future.

I think I don't quite see it this way. I agree that more money directed towards forecasters is good. However, there are different lessons one could take here.

Altruistic jobs often pay less than very selfish ones. A big reason why is because many people care about altruistic roles more, and are willing to do them for much cheaper. The "selfish" companies essentially have to pay more for people to be willing to work for them.

Tesla and SpaceX both paid less, and had worse working conditions, than other Silicon Valley companies, for example. Nonprofits often pay even less. (Some of this is unjustified, but my guess is that some of it just comes from a higher supply of workers.)

I feel like the phrase "goodness of their heart" implies that it's unusual for people to do things out of altruism or meaning. But I think it's very frequent. Basically all Wikipedia editing, and almost all open source work, is done due to a mix of interest and altruism. Much of the funding would be due to altruism, so this really doesn't seem much different.