Personal Views Only

Introduction

I thought Maxwell Tabarrok’s The Offense-Defense Balance Rarely Changes was a good contribution to the discourse, and appreciated his attempt to use various lines of data to “kick the tires” on the case that an AI could in principle defeat all humans by inventing technologies that would allow it to dominate them. I think Tabarrok is correct that this is a crux in the debate about how pessimistic to be about the possibility of humanity defending itself against a misaligned AGI,[1] and so in turn a crux about the probability of extinction or similarly bad outcomes from misaligned AI.

One thing I think Tabarrok is importantly correct about is that the idea of an “offense–defense balance” in hypothetical future human–AI conflicts is important but often undertheorized. Anyone interested in this question should be humbled by the fact that offense–defense theory and its application seem to me, as an outsider, to remain hotly contested by informed commentators, despite decades of scholarship.[2] Shorthand references to people’s intuition on the offense–defense balance between a hypothetical unaligned AGI and humanity are likely imprecise in many hidden ways, and ideally we would fix that. So Tabarrok is correct to say that this whole line of inquiry remains “on unstable ground.” Kudos to him for sparking a conversation on that.

Why I am not as reassured by the historical trends in conflict fatality rates as Tabarrok is

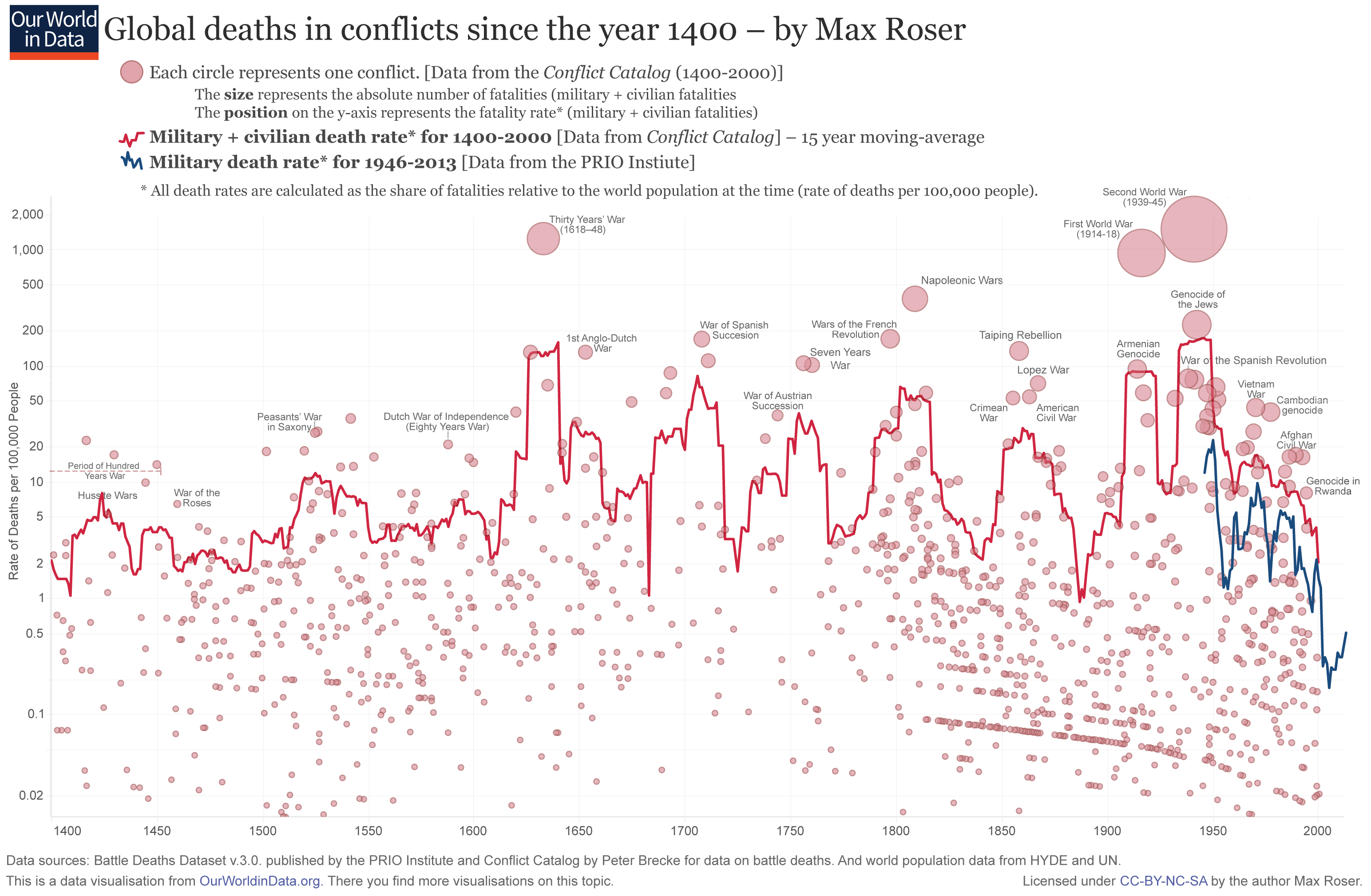

Some smaller points of agreement and disagreement were covered in the comments to that piece, including Daniel’s great comment. I want to focus mainly on the use of this graph, which I will call the “OWID Graph”:

The OWID Graph generated a lot of good discussion. I’m going to ignore here the difficulty of estimating such rates,[3] because it’s unclear in which direction that difficulty cuts, if any. I agree with Dwarkesh that the apparent stability is obscured by the use of a log scale, but with Tabarrok that the fact that there is no apparent long-run trend itself hints at an interesting form of stability.

Nevertheless, there are a few other reasons why I find this line of evidence less reassuring than Tabarrok does.

Reason 1: Deaths in conflict is not a measure of the offense–defense balance

To first set the backdrop, it’s important to remember that (per my lay understanding), the offense–defense balance is usually understood as the feature of some dyad—pairs of states, in the central case—with respect to some particular territory (again, in the central case).[4] It is not straightforwardly some ambient feature of the world, comparable to, e.g., parts per million of CO2 in the atmosphere.

The global number or proportion of deaths in combat is therefore not necessarily a measure of the offense–defense balance, though it may be a correlate thereof, since offense–defense theory predicts that there will be more conflict in offense-dominant eras.[5] (On the other hand, the theory also predicts that in defense-dominant regimes, wars will be more protracted, which could cause higher death rates. See World War I.)[6]

If we wanted to make statements about the long-run trend in offense and defense, therefore, we may want to look at long-run trends in territorial change. Some offense-defense theorists claim that in offense-dominant periods we should expect to see the number of states in a given region decrease.[7] And it does seem like the long-term net trend in human history has been ever-greater territorial control of the most powerful political units. Right now, four political entities—Russia, Canada, China, and the US—control about 30% of the world’s landmass. Even if you interpreted “political unit” and “control territory” loosely, I am 99% confident that, 100,000 years ago, the four most powerful political units cumulatively controlled much less than 1% of the world’s landmass.
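The "about 30%" figure can be sanity-checked with a quick back-of-the-envelope calculation. The sketch below uses rounded, commonly cited total-area figures that are my own assumptions rather than numbers from this post; because total area includes inland water, the land-only share would come out slightly lower.

```python
# Approximate total areas in millions of km^2. These are rounded,
# assumed figures for illustration, not numbers taken from the post.
# Total area includes inland water, so a land-only share is a bit lower.
AREA_MKM2 = {
    "Russia": 17.1,
    "Canada": 10.0,
    "China": 9.6,
    "United States": 9.8,
}
WORLD_LAND_MKM2 = 149.0  # approximate land surface of the Earth (assumed)


def combined_share(areas, world_total):
    """Return the fraction of world_total covered by the summed areas."""
    return sum(areas.values()) / world_total


share = combined_share(AREA_MKM2, WORLD_LAND_MKM2)
print(f"Combined share of world landmass: {share:.0%}")
```

Under these assumed inputs the result lands in the neighborhood of the 30% cited above; the exact value depends on which area definitions and sources are used.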

But more fundamentally, I think the question of what the long-run trend has been is not the most informative question…

Reason 2: Only a temporary period of offense-dominance may be sufficient for AI takeover.

I don’t think the pessimist case turns on whether there has been a long-run, monotonic increase in offense-dominance. Instead, what it requires is that at certain times one party in a dyad has had such an offensive advantage that they could eradicate/conquer the other. And I think it’s pretty clear that has been the case: history is replete with examples of states completely dominating or eradicating other states and societies. If you were a member of the conquered or eradicated group, and all you cared about was the survival of your group, it is of little comfort to you that in the long run the group that conquered you will have its offensive advances limited and itself need to become a defender. Your group is still permanently disempowered! Indeed, the possibility of offense-dominance being fleeting favors states rushing to offense, to capitalize on their temporary advantage.

The question is then whether there is a realistic chance that an AI could find a technology that gives it an offensive advantage over humanity, for long enough that it could permanently seize power from us, just as more powerful states have seized on their (possibly temporary!) offense-dominance to seize power over others. The history of more powerful states conquering less powerful states does not give much reason for optimism if your main concern is that you will temporarily end up in the less-powerful state’s position as against a misaligned AGI.

Reason 3: AI might not need to defend itself against humans wielding AI-made offensive weapons.

Tabarrok also correctly points at a possible reason for expecting long-run stability: attackers may need to become defenders, and so once they invent an offense-dominant technology they will probably be strongly incentivized to find defenses to it. This is especially the case because states seem to have had a hard time keeping new technologies secret from each other: a rational state should probably expect its competitor to copy its offensive technologies sooner or later. Even if a small period of offense-dominance can enable a state to totally subjugate one defender, future possible defenders will take notice and appropriate the original attacker’s offensive technologies.

But I take the pessimists’ view to be that humans are likely to have weaknesses against which an attacking AI need not defend, especially owing to humans’ dependence on biological processes. As a crude example, suppose an AI system poisoned the atmosphere to make it unbreathable. This would require humans to expend enormous amounts on defense (e.g., controlled environments) to survive as a species, which may not ultimately succeed and would likely kill, at minimum, the vast majority of people. But it seems possible that a sufficiently advanced AI would not in so doing threaten itself much, if it no longer required human labor for upkeep. It is also worth considering whether AIs could obviate the need for defending against their own inventions through other strategies, such as:

- Keeping them secret from humans for longer.

- Relying on scientific or technological insights that are not easily understood by humans.

- Inventing weapons that humans cannot operate.

- Simply permanently disempowering humans before humans can have a chance to respond by appropriating AI-derived offensive technologies.

Thus, the reasons why we might expect long-run stability in human–human conflicts may not apply to conflicts between humans and AIs.

Reason 4: The destructiveness of the most destructive conflicts is increasing

From eyeballing the OWID Graph, one salient feature is that the most destructive conflicts have gotten deadlier over time. Conflicts with over 100 deaths per 100,000 were unprecedented before 1600 but happened multiple times per century thereafter. The Thirty Years’ War was uniquely destructive for approximately 300 years, until two comparable wars happened in the 20th Century. Conflicts that have killed more than 20 people per 100,000 appear to have become steadily more common since 1600.

This is what you’d predict if there was a long-run increase in the destructiveness (which is distinct from offense-dominance) of military technology, net of defenses.

Looking at the maximum fatality rate in addition to the median or mean is important if worst-case scenarios matter much more than central cases on ethically relevant dimensions. Since, à la Parfit, many “pessimists” think that events that kill 100% of humans are orders of magnitude worse than events that kill (e.g.) 50% of humans, the upward trend in the maximum destructiveness of conflict is more salient than the lack of a long-run trend in the median or modal case: it suggests that the probability of conflicts that kill a high percentage of humans is rising, and therefore, maybe, that the probability of a conflict that kills all or almost all humans is rising. Of course, this is not a good reason to conclude that there is a high risk of such a conflict, and past conflicts have only barely breached 1% of humans, so on priors we still should not think it is likely. But a morally significant trend is real and going in the wrong direction.

[1] See also Steven Byrnes, Comment to AGI Ruin: A List of Lethalities, LessWrong (July 5, 2022), https://www.lesswrong.com/posts/uMQ3cqWDPHhjtiesc/agi-ruin-a-list-of-lethalities?commentId=Sf4caqwxDFyYHoxFZ.

[2] My understanding is that the seminal work on offense–defense balance is Robert Jervis, Cooperation Under the Security Dilemma, 30 World Pol. 167 (1978), https://perma.cc/B2MG-F3UL.

[3] H/T Sarah Shoker.

[4] See Charles L. Glaser & Chaim Kaufmann, What is the Offense-Defense Balance and Can We Measure it?, 22 Int’l Sec. 44 (1998), https://www.jstor.org/stable/2539240.

[5] See id.

[6] H/T Alan Hickey.

[7] Stephen Van Evera, Offense, Defense, and the Causes of War, in Offense, Defense, and War 227, 259 (Michael E. Brown et al. eds., 2004).

Nice piece, Cullen!

I would be curious to know whether you have any thoughts on my analysis of the chance of a war causing human extinction.

I used data from 1816 to 2014, thus arguably accommodating your contention that the destructiveness of the most destructive conflicts is increasing. However, this is not clear to me. Eyeballing the red line of OWID's graph, which is below, the maximum death rate in a 15-year period each century:

I very much doubt one would find a clear trend by running a statistical analysis. My understanding is that, since the death rate is heavy-tailed, a slight apparent increase in worst-case outcomes is not enough to confidently establish a trend. Relatedly, it is also difficult to establish a clear trend towards peace based on the absence of large conflicts since WW2. Clauset 2018 says:
