Hi!

🌐 The UK is hosting the first major global summit on AI safety, bringing together several countries, tech companies, and researchers to evaluate and monitor the most significant risks associated with AI. Details of the summit and the list of attendees are yet to be clarified. The warm welcome given to Palantir raised many eyebrows, but the summit is widely seen as a positive step for AI safety, especially because the UK has people like Marc Warner, a government AI Council member, who warns of the potential need to ban powerful artificial general intelligence (AGI) systems.

In other good news, UN Secretary-General Guterres has recognised the existential threat from AI.

🔎 We have collated legal cases against AI labs, covering copyright infringement and other claims.

📚 The campaign website now has a range of resources for AI safety training, including factsheets on the Control Problem. Do you want to contribute? Especially valuable are materials targeting broader audiences, informational posters, and materials focused on young adults.

🔬 Our latest research on public opinion regarding AI safety, together with a logical case on the lack of safety, has uncovered intriguing insights. The implications of the research are:

- Create urgency around AI danger

- Explain uncontrollability

- Promote optimism for international cooperation

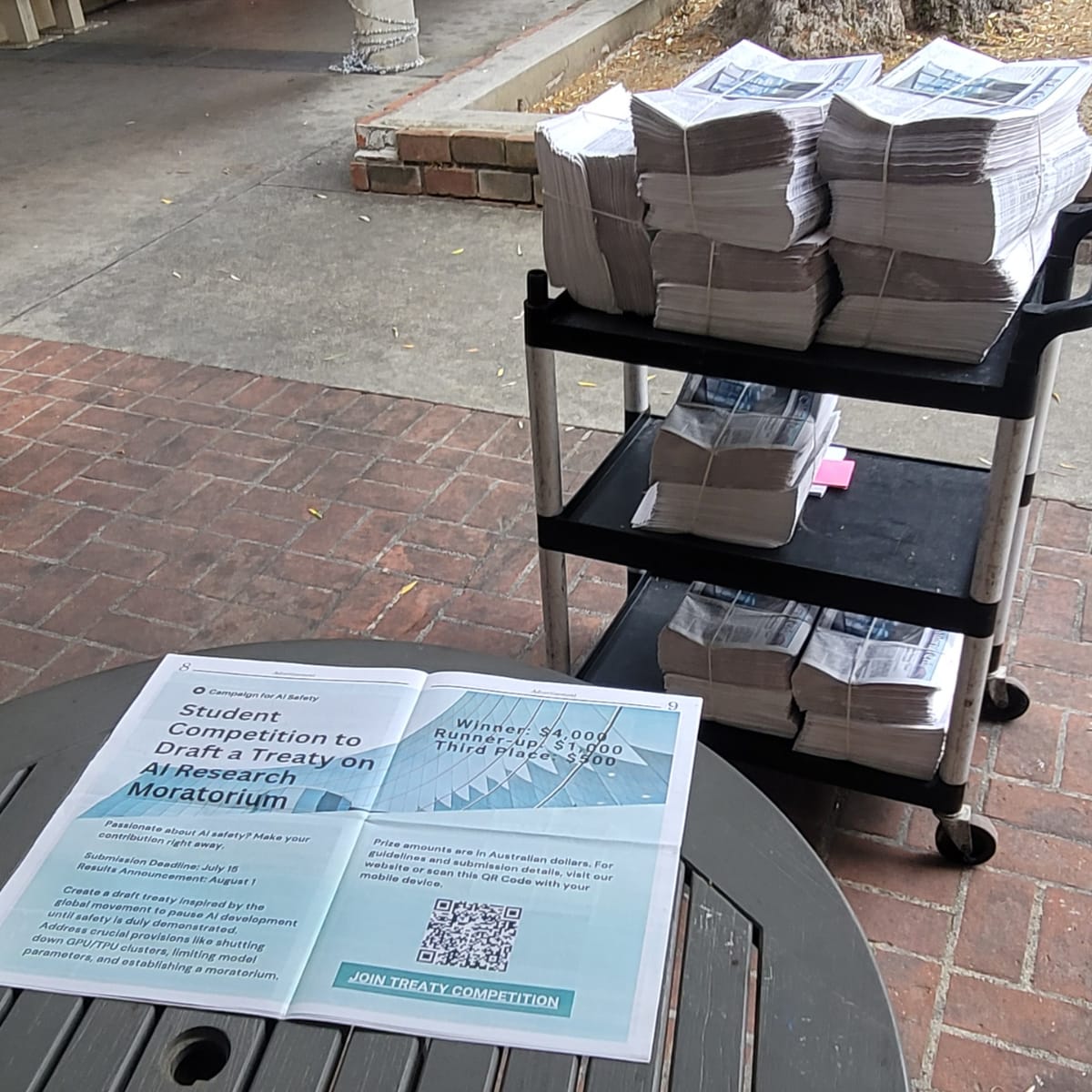

🧑‍⚖️ Our law student competition for drafting a treaty on a moratorium on large-scale AI capabilities R&D has been publicised on several platforms, including Above the Law and The California Tech:

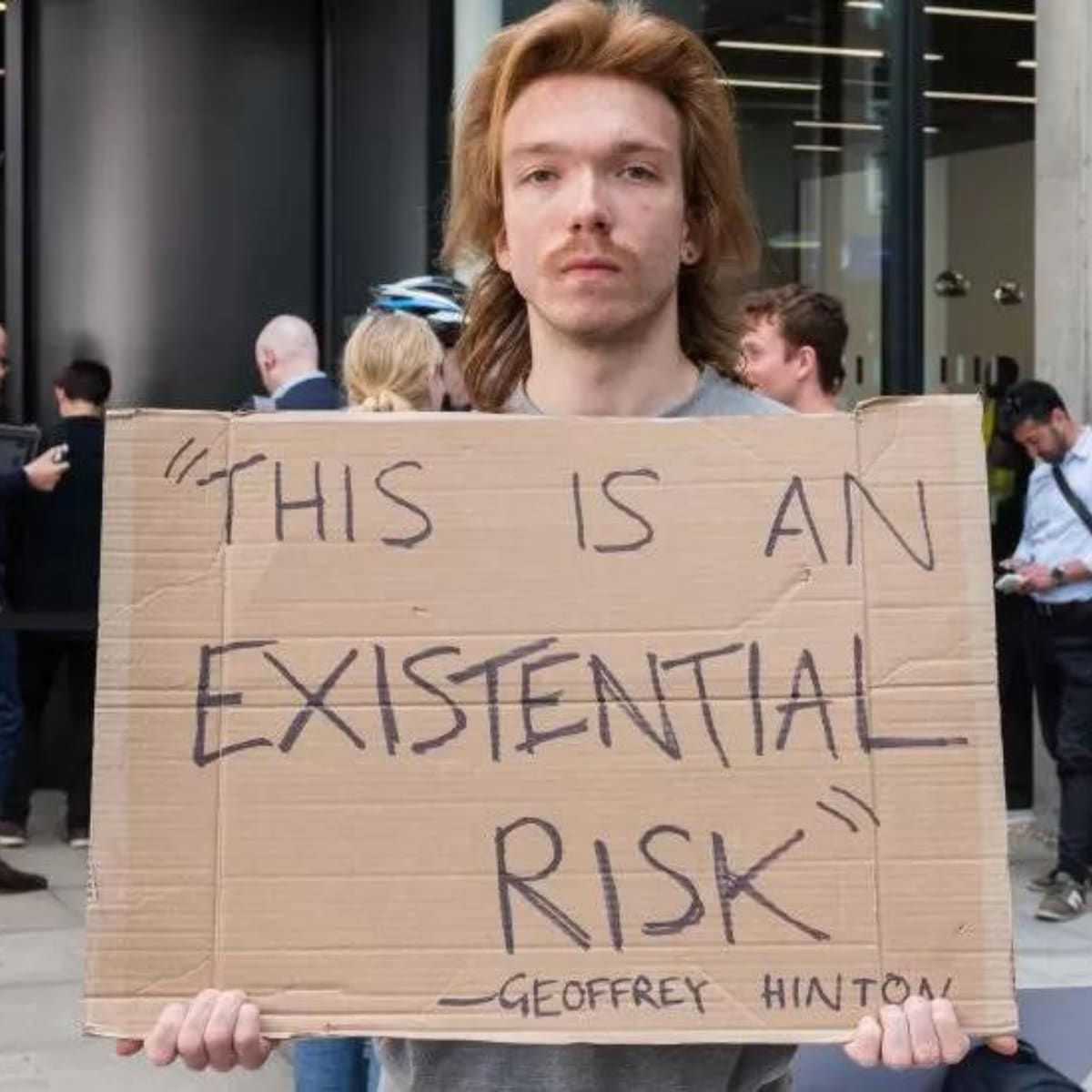

📢 Last month, #PauseAI held a powerful protest at UCL in London outside an event at which Sam Altman spoke, raising awareness about the ethical considerations of AGI development by opposing OpenAI's pursuit of AGI. The event gained widespread attention and made headlines on BBC News, capturing the essence of the movement.

🪧 Join #PauseAI for an upcoming peaceful protest at the Melbourne Convention and Exhibition Centre (MCEC), where Sam Altman will be giving a talk.

- Date & Time: Friday, June 16, 2 pm AEST

- Venue: Main entrance of MCEC, 1 Convention Centre Place, South Wharf, VIC 3006, Australia

- Protest Times: 1:30 pm to 3 pm (arrival time) & 4:30 pm onwards (departure time)

- Logistics: Bring signs and flyers. No fee is required to participate; the Startup Victoria membership ticket is currently free

Join us to raise your voice for AI safety and make a difference. For more discussion, please join the #australia channel on #PauseAI's Discord and the #λ-australia channel on AGI Moratorium's Slack.

📃 We are currently focusing on our submission responding to the UK policy paper titled "AI regulation: a pro-innovation approach", with a submission deadline of June 21, 2023.

If you're interested in contributing to this submission, please respond to this email.

✍️ We have some exciting news to share! Nik's Australian parliamentary e-petition has been accepted. Please help us spread the word by sharing it with your Aussie friends. Please hurry, as the deadline for signing is 12 July.

Thank you for your support! Please share this email with friends.

Nik Samoylov from Campaign for AI Safety

This is a very helpful update; thanks for sharing it!

Did anyone do a fermstimate of what a random DeviantArt user can expect to make in royalties, if copyright is used against an AI firm like Midjourney? My guess is it would be under a penny per month.

If you divvy up revenue of AI companies from diffusion models at current pricing, then yes.

But if creators' consent is first sought for training and if they have a chance to do individual or collective bargaining (the choice of form of bargaining needs to be with the creators) with AI companies, then payouts may be meaningful.