Spencer Greenberg wrote on Twitter:

Recently @KerryLVaughan has been critiquing groups trying to build AGI, saying that by being aware of risks but still trying to make it, they’re recklessly putting the world in danger. I’m interested to hear your thought/reactions to what Kerry says and the fact he’s saying it.

Michael Page replied:

I'm pro the conversation. That said, I think the premise -- that folks are aware of the risks -- is wrong.

[...]

Honestly, I think the case for the risks hasn't been that clearly laid out. The conversation among EA-types typically takes that as a starting point for their analysis. The burden for the we're-all-going-to-die-if-we-build-x argument is -- and I think correctly so -- quite high.

Oliver Habryka then replied:

I find myself skeptical of this.

[...]

Like, my sense is that it's just really hard to convince someone that their job is net-negative. "It is difficult to get a man to understand something when his salary depends on his not understanding it" And this barrier is very hard to overcome with just better argumentation.

My reply:

I disagree with "the case for the risks hasn't been that clearly laid out". I think there's a giant, almost overwhelming pile of intro resources at this point, any one of which is more than sufficient, written in all manner of style, for all manner of audience.[1]

(I do think it's possible to create a much better intro resource than any that exist today, but 'we can do much better' is compatible with 'it's shocking that the existing material hasn't already finished the job'.)

I also disagree with "The burden for the we're-all-going-to-die-if-we-build-x argument is -- and I think correctly so -- quite high."

If you're building a machine, you should have an at least somewhat lower burden of proof for more serious risks. It's your responsibility to check your own work to some degree, and not impose lots of micromorts on everyone else through negligence.[2]

But I don't think the latter point matters much, since the 'AGI is dangerous' argument easily meets higher burdens of proof as well.

I do think a lot of people haven't heard the argument in any detail, and the main focus should be on trying to signal-boost the arguments and facilitate conversations, rather than assuming that everyone has heard the basics.

A lot of the field is very smart people who are stuck in circa-1995 levels of discourse about AGI.

I think 'my salary depends on not understanding it' is only a small part of the story. ML people could in principle talk way more about AGI, and understand the problem way better, without coming anywhere close to quitting their job. The level of discourse is by and large too low for 'I might have to leave my job' to be the very next obstacle on the path.

Also, many ML people have other awesome job options, have goals in the field other than pure salary maximization, etc.

More of the story: Info about AGI propagates too slowly through the field, because when one ML person updates, they usually don't loudly share their update with all their peers. This is because:

1. AGI sounds weird, and they don't want to sound like a weird outsider.

2. Their peers and the community as a whole might perceive this information as an attack on the field, an attempt to lower its status, etc.

3. Tech forecasting, differential technological development, long-term steering, exploratory engineering, 'not doing certain research because of its long-term social impact', prosocial research closure, etc. are very novel and foreign to most scientists.

EAs exert effort to try to dig up precedents like Asilomar partly because Asilomar is so unusual compared to the norms and practices of the vast majority of science. Scientists generally don't think in these terms at all, especially in advance of any major disasters their field causes.

And the scientists who do find any of this intuitive often feel vaguely nervous, alone, and adrift when they talk about it. On a gut level, they see that they have no institutional home and no super-widely-shared 'this is a virtuous and respectable way to do science' narrative.

Normal science is not Bayesian, is not agentic, is not 'a place where you're supposed to do arbitrary things just because you heard an argument that makes sense'. Normal science is a specific collection of scripts, customs, and established protocols.

In trying to move the field toward 'doing the thing that just makes sense', even though it's about a weird topic (AGI), and even though the prescribed response is also weird (closure, differential tech development, etc.), and even though the arguments in support are weird (where's the experimental data??), we're inherently fighting our way upstream, against the current.

Success is possible, but way, way more dakka is needed, and IMO it's easy to understand why we haven't succeeded more.

This is also part of why I've increasingly updated toward a strategy of "let's all be way too blunt and candid about our AGI-related thoughts".

The core problem we face isn't 'people informedly disagree', 'there's a values conflict', 'we haven't written up the arguments', 'nobody has seen the arguments', or even 'self-deception' or 'self-serving bias'.

The core problem we face is 'not enough information is transmitting fast enough, because people feel nervous about whether their private thoughts are in the Overton window'.

We need to throw a brick through the Overton window. Both by adopting a very general policy of candidly stating what's in our head, and by propagating the arguments and info a lot further than we have in the past. If you want to normalize weird stuff fast, you have to be weird.

What broke the silence about artificial general intelligence (AGI) in 2014 wasn’t Stephen Hawking writing a careful, well-considered essay about how this was a real issue. The silence only broke when Elon Musk tweeted about Nick Bostrom’s Superintelligence, and then made an off-the-cuff remark about how AGI was “summoning the demon.”

Why did that heave a rock through the Overton window, when Stephen Hawking couldn’t? Because Stephen Hawking sounded like he was trying hard to appear sober and serious, which signals that this is a subject you have to be careful not to gaffe about. And then Elon Musk was like, “Whoa, look at that apocalypse over there!!” After which there was the equivalent of journalists trying to pile on, shouting, “A gaffe! A gaffe! A… gaffe?” and finding out that, in light of recent news stories about AI and in light of Elon Musk’s good reputation, people weren’t backing them up on that gaffe thing.

Similarly, to heave a rock through the Overton window on the War on Drugs, what you need is not state propositions (although those do help) or articles in The Economist. What you need is for some “serious” politician to say, “This is dumb,” and for the journalists to pile on shouting, “A gaffe! A gaffe… a gaffe?” But it’s a grave personal risk for a politician to test whether the public atmosphere has changed enough, and even if it worked, they’d capture very little of the human benefit for themselves.

Simone Sturniolo commented on "AGI sounds weird, and they don't want to sound like a weird outsider.":

I think this is really the main thing. It sounds too sci-fi a worry. The "sensible, rational" viewpoint is that AI will never be that smart because haha, they get funny word wrong (never mind that they've grown to a point that would have looked like sorcery 30 years ago).

To which I reply: That's an example of a more-normal view that exists in society-at-large, but it's also a view that makes AI research sound lame. (In addition to being harder to say with a straight face if you've been working in ML for long at all.)

There's an important tension in ML between "play up AI so my work sounds important and impactful (and because it's in fact true)", and "downplay AI in order to sound serious and respectable".

This is a genuine tension, with no way out. There legit isn't any way to speak accurately and concretely about the future of AI without sounding like a sci-fi weirdo. So the field ends up tangled in ever-deeper knots motivatedly searching for some third option that doesn't exist.

Currently popular strategies include:

1. Quietism and directing your attention elsewhere.

2. Derailing all conversations about the future of AI to talk about semantics ("'AGI' is a wrong label").

3. Only talking about AI's long-term impact in extremely vague terms, and motivatedly focusing on normal goals like "cure cancer" since that's a normal-sounding thing doctors are already trying to do.

(Avoid any weird specifics about how you might go about curing cancer, and avoid weird specifics about the social effects of automating medicine, curing all disease, etc. Concreteness is the enemy.)

4. Say that AI's huge impacts will happen someday, in the indefinite future. But that's a "someday" problem, not a "soon" problem.

(Don't, of course, give specific years or talk about probability distributions over future tech developments, future milestones you expect to see 30 years before AGI, cruxes, etc. That's a weird thing for a scientist to do.)

5. Say that AI's impacts will happen gradually, over many years. Sure, they'll ratchet up to being a big thing, but it's not like any crazy developments will happen overnight; this isn't science fiction, after all.

(Somehow "does this happen in sci-fi?" feels to people like a relevant source of info about the future.)

When Paul Christiano talks about soft takeoff, he has in mind a scenario like 'we'll have some years of slow ratcheting to do some preparation, but things will accelerate faster and faster and be extremely crazy and fast in the endgame'.

But what people outside EA usually have in mind by soft takeoff is:

I think the Paul scenario is one where things start going crazy in the next few decades, and go more and more crazy, and are apocalyptically crazy in thirty years or so?

But what many ML people seemingly want to believe (or want to talk as though they believe) is a Jetsons world.

A world where we gradually ratchet up to "human-level AI" over the next 50–250 years, and then we spend another 50–250 years slowly ratcheting up to crazy superhuman systems.

The clearest place I've seen this perspective explicitly argued for is in Rodney Brooks' writing. But the much more common position isn't to explicitly argue for this view, or even to explicitly state it. It jut sort of lurks in the background, like an obvious sane-and-moderate Default. Even though it immediately and obviously falls apart as a scenario as soon as you start poking at it and actually discussing the details.

6. Just say it's not your job to think or talk about the future. You're a scientist! Scientists don't think about the future. They just do their research.

7. More strongly, you can say that it's irresponsible speculation to even broach the subject! What a silly thing to discuss!

Note that the argument here usually isn't "AGI is clearly at least 100 years away for reasons X, Y, and Z; therefore it's irresponsible speculation to discuss this until we're, like, 80 years into the future." Rather, even giving arguments for why AGI is 100+ years away is assumed at the outset to be irresponsible speculation. There isn't a cost-benefit analysis being given here for why this is low-importance; there's just a miasma of unrespectability.

- ^

Some of my favorite informal ones to link to: Russell 2014; Urban 2015 (w/ Muehlhauser 2015); Yudkowsky 2016a; Yudkowsky 2017; Piper 2018; Soares 2022

Some of my favorite less-informal ones: Bostrom 2014a; Bostrom 2014b; Yudkowsky 2016b; Soares 2017; Hubinger et al. 2019; Ngo 2020; Cotra 2021; Yudkowsky 2022; Arbital's listing

Other good ones include: Omohundro 2008; Yudkowsky 2008; Yudkowsky 2011; Muehlhauser 2013; Yudkowsky 2013; Armstrong 2014; Dewey 2014; Krakovna 2015; Open Philanthropy 2015; Russell 2015; Soares 2015a; Soares 2015b; Steinhardt 2015; Alexander 2016; Amodei et al. 2016; Open Philanthropy 2016; Taylor et. al 2016; Taylor 2017; Wiblin 2017; Yudkowsky 2017; Garrabrant and Demski 2018; Harris and Yudkowsky 2018; Christiano 2019a; Christiano 2019b; Piper 2019; Russell 2019; Shlegeris 2020; Carlsmith 2021; Dewey 2021; Miles 2021; Turner 2021; Steinhardt 2022

- ^

Or, I should say, a lower "burden of inquiry".

You should (at least somewhat more readily) take the claim seriously and investigate it in this case. But you shouldn't require less evidence to believe anything — that would just be biasing yourself, unless you're already biased and are trying to debias yourself. (In which case this strikes me as a bad debiasing tool.)

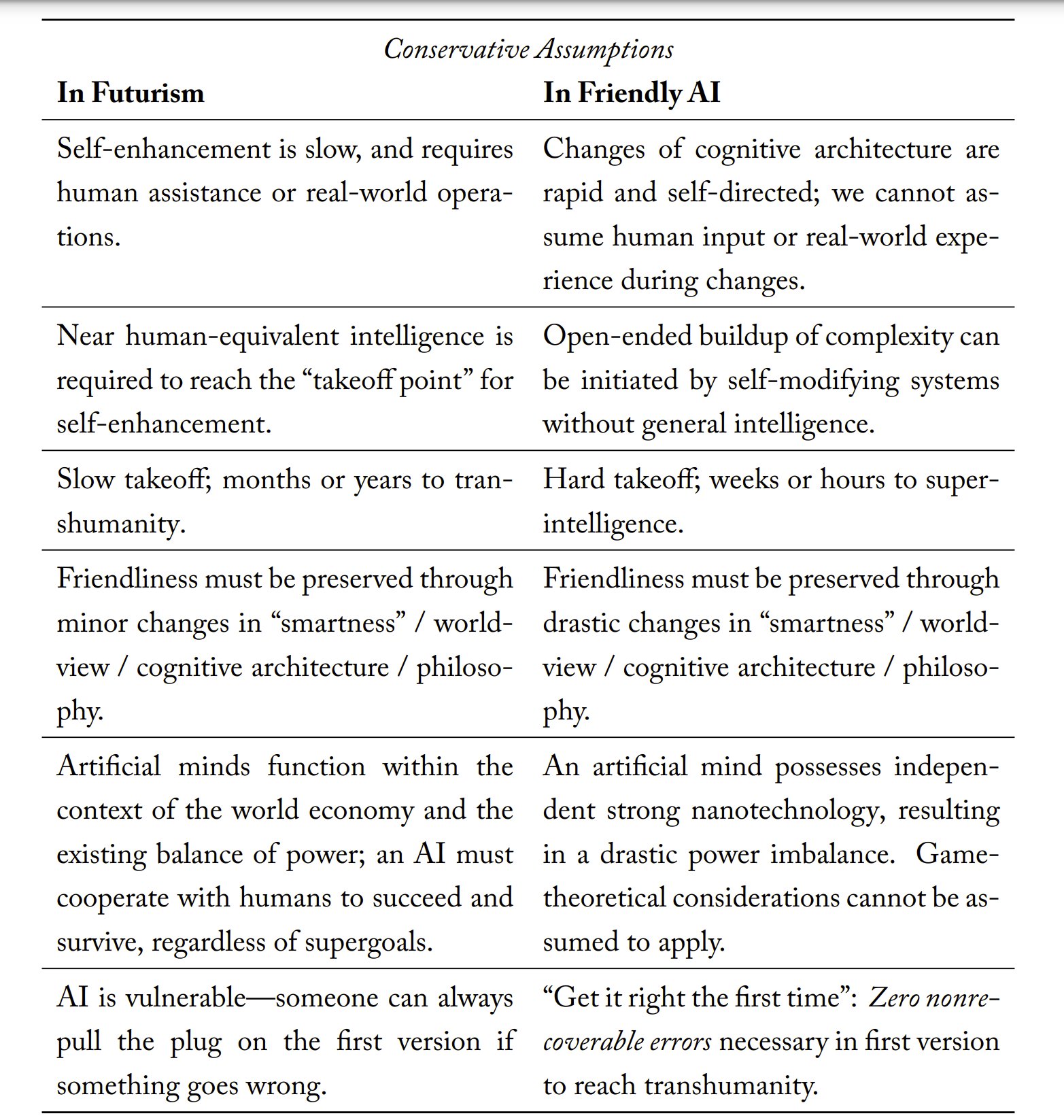

See also the idea of "conservative futurism" versus "conservative engineering" in Creating Friendly AI 1.0:

The conservative assumption according to futurism is not necessarily the “conservative” assumption in Friendly AI. Often, the two are diametric opposites. When building a toll bridge, the conservative revenue assumption is that half as many people will drive through as expected. The conservative engineering assumption is that ten times as many people as expected will drive over, and that most of them will be driving fifteen-ton trucks.

Given a choice between discussing a human-dependent traffic-control AI and discussing an AI with independent strong nanotechnology, we should be biased towards assuming the more powerful and independent AI. An AI that remains Friendly when armed with strong nanotechnology is likely to be Friendly if placed in charge of traffic control, but perhaps not the other way around. (A minivan can drive over a bridge designed for armor-plated tanks, but not vice-versa.

The core argument for hard takeoff, 'AI can achieve strong nanotech', and "get it right the first time" is that they're true, not that they're "conservative". But it's of course also true that a sane world that thought hard takeoff were "merely" 20% likely, would not immediately give up and write off human survival in those worlds. Your plan doesn't need to survive one-in-a-million possibilities, but it should survive one-in-five ones!

My experience from a big tech company: ML people are too deep in the technical and practical everyday issues that they don't have the capacity (nor incentive) to form their own ideas about the further future.

I've heard people say, that it's so hard to make ML do something meaningful that they just can't imagine it would do something like recursive self-improvement. AI safety in these terms means making sure the ML model performs as well in deployment as in the development.

Another trend I noticed, but I don't have much data for it, is that the somewhat older generation (35+) is mostly interested in the technical problems and don't feel that much responsibility for how the results are used. Vs. the generation of 25 - 35 care much more about the future. I'm noticing similarities with climate change awareness, although the generation delimitations might differ.

Thanks for posting this! I thought the points were interesting, and I would have missed the conversation since I'm not very active on twitter.