A year ago Louis Dixon posed the question “Does climate change deserve more attention within EA?”. On May 30th I will be discussing the related question “Is Climate Change Neglected Within EA?” with the Effective Environmentalism group. This post is my attempt to answer that question.

Climate change is an incredibly complex issue: rising greenhouse gas concentrations are warming the planet, with a long list of knock-on impacts including heatwaves, more intense rainfall, more intense droughts, sea level rise, increased storm surges, increased wildfires, an expanding range for tropical diseases, ocean acidification, and shifts in geopolitical power as we transition off fossil fuels.

The consequences of these impacts are happening already and will intensify over the coming decades. Predicting that future is very challenging, and our desire to have some understanding of the future makes it tempting to accept simplified models that often only look at one aspect of the greater problem. It is very difficult to have confidence in any models that we do build, as the world is heading into fundamentally uncharted territory. We have good reason to believe that the impacts of climate change will be severe, quite possibly even catastrophic, and so it is critical that the world takes action.

In this post I will argue the following:

- Due to the inherent difficulty of predicting the full impacts of climate change, there is limited evidence about exactly what these impacts will be (section 1). EA has drawn questionable conclusions from the evidence that does exist (section 2).

- EA considers Climate Change as “not neglected” based on the amount of effort already being made, rather than the results achieved (section 3).

- EA downplays the huge impacts from currently expected levels of climate change (section 4), focusing instead on whether climate change is an x-risk (section 5). This approach risks alienating many people from EA (section 6).

- Climate change has many different impacts, which makes it a poor fit for EA's approach of quantifying problems using simple models, and this leads to undervaluing of action on climate change (section 7). The EA model of prioritising between causes doesn’t work for climate change, which has a broad and effectively permanent impact (section 8).

The result of all of this is that climate change is visibly neglected and undervalued in EA publications. I will demonstrate this in two case studies: a talk from EA Global 2019 (section 9), and some key EA publications and initiatives (section 10).

EA is not unique in neglecting climate change: humanity as a whole continues to neglect to act on this urgent problem. “Don’t Even Think About It” is an excellent book about the many reasons why this is the case.

Some members of the EA community are already doing great work on climate change. However, in this post I argue that climate change is generally neglected by EA. This needs to change. EA should visibly promote climate change as one of the most important causes to work on. This should be based not just on discussion of unlikely x-risk scenarios, but also on discussion of the severe impacts of the level of climate change that is predicted to happen in the coming decades. The next 10 years will determine whether a 1.5C world is possible - now is the time for action on climate change.

“The hard part is not wrestling with how bad things could get – it’s understanding how much responsibility we still have to make things better.” - Alex Steffen

1) There is a lack of evidence for the more severe impacts of climate change, rather than evidence that the impacts will not be severe.

The UK government commissioned a 2015 risk assessment on the topic of climate change. It is relevant to quote a section of this report here.

--- (Begin quote from 2015 risk assessment)

The detailed chapters of the same report [AR5] suggest that the impacts corresponding to high degrees of temperature increase are not only relatively unknown, but also relatively unstudied. This is illustrated by the following quotes:

- Crops: “Relatively few studies have considered impacts on cropping systems for scenarios where global mean temperatures increase by 4ºC or more.”

- Ecosystems: “There are few field-scale experiments on ecosystems at the highest CO2 concentrations projected by RCP8.5 for late in the century, and none of these include the effects of other potential confounding factors.”

- Health: “Most attempts to quantify health burdens associated with future climate change consider modest increases in global temperature, typically less than 2ºC.”

- Poverty: “Although there is high agreement about the heterogeneity of future impacts on poverty, few studies consider more diverse climate change scenarios, or the potential of 4ºC and beyond.”

- Human security: “Much of the current literature on human security and climate change is informed by contemporary relationships and observation and hence is limited in analyzing the human security implications of rapid or severe climate change.”

- Economics: “Losses accelerate with greater warming, but few quantitative estimates have been completed for additional warming around 3ºC or above.”

A simple conclusion is that we need to know more about the impacts associated with higher degrees of temperature increase. But in many cases this is difficult. For example, it may be close to impossible to say anything about the changes that could take place in complex dynamic systems, such as ecosystems or atmospheric circulation patterns, as a result of very large changes very far into the future.

--- (End quote from 2015 risk assessment)

2) EA has drawn questionable conclusions from this limited evidence base

I previously reviewed two attempts to compute the cost effectiveness of action on climate change and found that both of these were based on sources which are simultaneously the best available evidence, and also deeply flawed as a basis for making any kind of precise cost effectiveness estimate. I concluded:

One of the central ideas in effective altruism is that some interventions are orders of magnitude more effective than others. There remain huge uncertainties and unknowns which make any attempt to compute the cost effectiveness of climate change extremely challenging. However, the estimates which have been completed so far don’t make a compelling case that mitigating climate change is actually order(s) of magnitude less effective compared to global health interventions, with many of the remaining uncertainties making it very plausible that climate change interventions are indeed much more effective.

This contradicts the conclusion of one of the underlying cost effectiveness analyses, which stated that “Global development interventions are generally more effective than Climate change interventions”.

3) Climate Change is deemed “not neglected” based on the amount of effort already being made, rather than the results achieved

The EA importance, tractability, neglectedness (ITN) framework discounts climate change because it is not deemed to be neglected (e.g. scoring 2/12 on 80K Hours). I have previously disagreed with this position because it ignores whether the current level of action on climate change is anywhere close to what is actually required to solve the problem (it’s not).

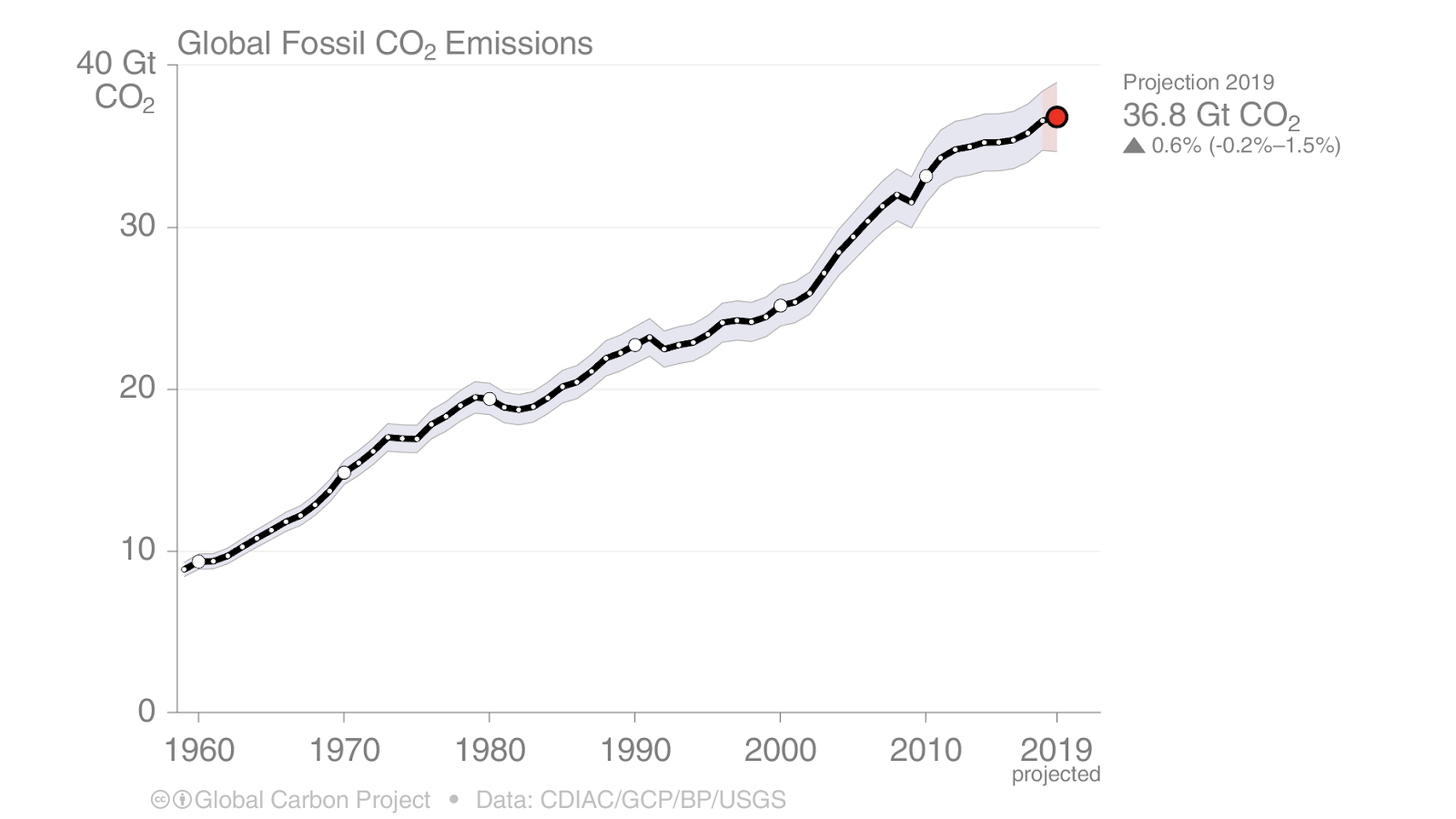

The IPCC predicts that emissions must reach net zero by 2050 to limit warming to 1.5C. However, global emissions continue to increase, apart from temporary dips during short-term economic shocks. We are a long way from achieving sustained global reductions in emissions.

Climate change is a uniquely difficult problem to tackle because it is so massively decentralised. Agriculture, transport, building standards, energy, manufacturing, etc - all need to be reinvented to work without emitting CO2 and other greenhouse gases. There’s no way of avoiding that this will require the work of a very large number of people.

It seems to me that the current application of the ITN framework is akin to arguing during a war against joining the army because there are already lots of soldiers fighting. This argument may make sense at the individual level (will one person really make much difference?) but seems obviously wrong at the population level - the war must be won.

4) EA often ignores or downplays the impact of mainstream climate change, focusing on the tail risk instead

The 80,000 hours “Climate change (extreme risks)” problem profile says:

More extreme scenarios (say, warming of 6 ºC or higher) would likely have very serious negative consequences. Sea levels would rise, crop yields could fall significantly, and there would likely be large water shortages. If we fail to adapt, hundreds of millions of people could die from shortages, conflict, or increased vulnerability to diseases, and billions of people could be displaced.

This profile is focused on these ‘tail’ risks — the chance that the planet will experience extreme warming. Though unlikely, the chance of warming over 6 ºC still seems uncomfortably high. We focus on this possibility, rather than the issue of climate change in general, because preventing the most extreme levels of warming helps prevent the worst possible outcomes.

This frames action on climate change as being focused on preventing the risk of a severe but unlikely outcome. The tail risk is a serious concern, but I think it is a mistake to neglect the avoidable harms that will be caused by the levels of climate change which we think are very likely without much more rapid global action.

The 80K Hours problem profile makes no mention of the IPCC SR15 report, our best available evidence of the incremental impact of 1.5 vs 2.0C of climate change, which predicts that hundreds of millions more people will be severely impacted. As stated previously, we have very little reliable evidence about what the quantified impact would be of warming more than 2C, and yet according to Climate Action Tracker, we are heading towards a future of 2.3C - 4.1C of warming.

In 2011 the Royal Society considered the impacts of more severe climate change in a special issue titled “Four degrees and beyond: the potential for a global temperature increase of four degrees and its implications”. This included statements that we could see 4C as soon as the 2060s:

If carbon-cycle feedbacks are stronger, which appears less likely but still credible, then 4°C warming could be reached by the early 2060s in projections that are consistent with the IPCC’s ‘likely range’.

And that a 4C world would be incredibly difficult to adapt to:

In such a 4°C world, the limits for human adaptation are likely to be exceeded in many parts of the world, while the limits for adaptation for natural systems would largely be exceeded throughout the world. Hence, the ecosystem services upon which human livelihoods depend would not be preserved. Even though some studies have suggested that adaptation in some areas might still be feasible for human systems, such assessments have generally not taken into account lost ecosystem services.

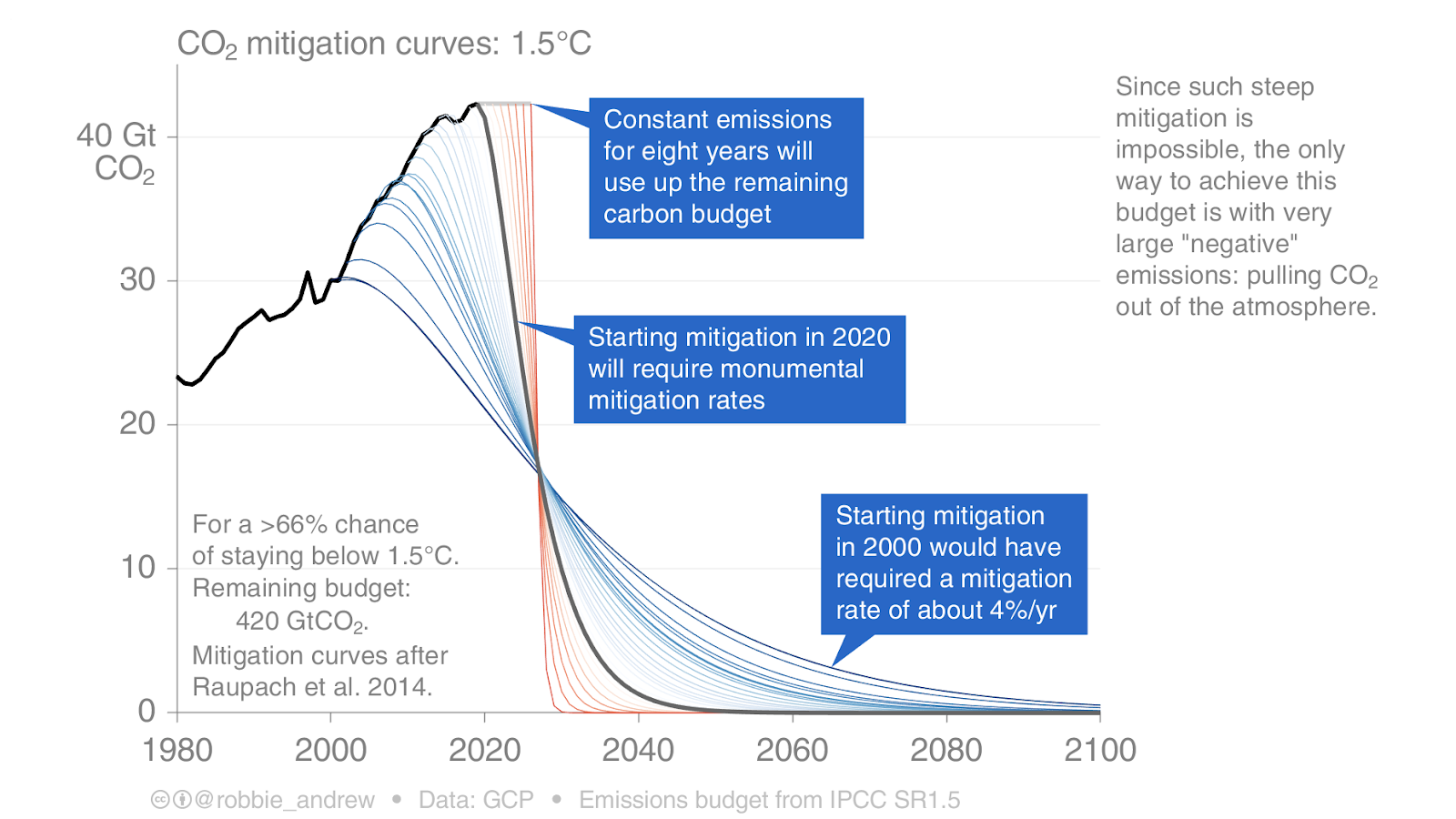

The 80K Hours problem profile makes no mention of the concept of a carbon budget - the amount of carbon which we can emit before we are committed to a particular level of warming. Since 1751 the world has emitted 1500 Gt CO2, and SR15 estimates a remaining carbon budget of 420 Gt CO2 for a 66% chance of limiting warming to 1.5C. We’re emitting ~40 Gt CO2/year, so we’ll have used this budget up before 2030, and hence committed ourselves to more than 1.5C of warming. Additionally, SR15 found that to limit warming to 1.5C, global emissions need to have actually declined by 45% from 2010 levels by 2030. Global emissions are generally continuing to grow, and yet to meet this target they must decline by ~8% a year, every year, globally.
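The budget arithmetic above can be checked with a few lines of Python. This is a back-of-the-envelope sketch, not a climate model: it assumes, as in SR15, that the 420 Gt CO2 budget is counted from the start of 2018, and it assumes emissions stay flat at ~40 Gt CO2/year. The function names are illustrative only.

```python
# Back-of-the-envelope check of the carbon budget figures in the text.
# Assumptions (illustrative only): the 420 Gt CO2 budget is counted from the
# start of 2018, and emissions stay flat at ~40 Gt CO2/year.

REMAINING_BUDGET_GT = 420.0   # SR15: 66% chance of limiting warming to 1.5C
ANNUAL_EMISSIONS_GT = 40.0    # approximate current global emissions
BUDGET_START_YEAR = 2018


def year_budget_exhausted(budget: float, annual: float, start_year: int) -> float:
    """Year in which a fixed budget runs out at a constant emission rate."""
    return start_year + budget / annual


def total_decline_after(rate: float, years: int) -> float:
    """Fractional reduction after cutting emissions by `rate` every year."""
    return 1.0 - (1.0 - rate) ** years


exhausted = year_budget_exhausted(REMAINING_BUDGET_GT, ANNUAL_EMISSIONS_GT, BUDGET_START_YEAR)
print(f"Budget exhausted around {exhausted:.1f}")  # prints 2028.5

cut = total_decline_after(0.08, 10)
print(f"An 8% cut every year for 10 years reduces emissions by {cut:.0%}")
```

Under these assumptions the budget runs out around mid-2028, and an 8% cut sustained every year for a decade compounds to a reduction of roughly 57%, more than halving annual emissions.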

A large fraction of the CO2 which is emitted is expected to persist in the atmosphere for centuries unless we can deploy negative emissions technologies at enormous scale. This means that the impacts of climate change are effectively irreversible.

5) EA appears to dismiss climate change because it is not an x-risk

EA has repeatedly asked whether climate change is an x-risk.

- 2019-12 - EA Global London - Could climate change make Earth uninhabitable for humans? [link]

- 2019-12 - Linch - 8 things I believe about climate change, including discussion of whether climate change is an x-risk [EA Forum]

- 2019-06 - Vox - Is climate change an “existential threat” — or just a catastrophic one? [link]

- 2019-04 - Tessa Alexanian, Zachary Jacobi - Rough Notes for “Second-Order Effects Make Climate Change an Existential Threat” [google doc]

- 2019-01 - ozymandias - Climate Change Is, In General, Not An Existential Risk [EA Forum]

- 2018-10 - John Halstead - Is Climate an x-risk? [google doc]

- 2018-03 - John Halstead - Climate change, geoengineering, and existential risk [EA Forum]

This is an important question for the EA community to consider. The answer so far has been that climate change is not likely to be an x-risk. However, this appears to often result in climate change being written off as a worthy cause for EA. Without the x-risk label, it gets stacked up against other more immediate causes, and the better quantified short term interventions like global health are able to provide much more straightforward evidence for supporting them.

6) EA is in danger of making itself a niche cause by loudly focusing on topics like x-risk

My first introduction to EA was reading The Life You Can Save by Peter Singer. This made a compelling case that I had the ability, and the moral responsibility, to do concrete good in the world. The first charity that I started giving regularly to was the Against Malaria Foundation.

My impression over the last few years has been that EA has loudly invested a lot of energy into researching and discussing long term causes, with a particular focus on x-risks. Working to better understand and mitigate x-risks is an important area of work for EA, but my own experience is that it feels at times like EA is not a broad movement of people who want to do the most good in whatever cause they are working on, but a narrow movement of people who want to do the most good by focusing on x-risks. This concern was expressed by Peter Singer in an interview in December 2019:

“I certainly respect those who are working on the long-term future, and existential risk and so on, and I think that is important work, it should continue. But, I’m troubled by the idea that that becomes or is close to becoming the public face of the EA movement. Because I do think that there’s only this much narrower group of people who are likely to respond to that kind of appeal.”

This concern has also been expressed before by Dylan Matthews at Vox, with a response from several other members of the EA community here.

Essentially, a reasoned focus on x-risk could limit the growth of EA as a movement to the set of people who can be convinced to be interested in x-risks.

The same arguments apply about the way that climate change is discussed within EA. At a time when many individuals and groups are talking about climate change as one of the most important issues of our time, it is striking that EA often downplays the importance of climate change. I’m certainly not the only person to have this impression of EA, as was evident in some of the comments on “Does climate change deserve more attention within EA?” from last year:

“The biggest issue I have with EA is the lack of attention to climate change. I am supporter and member of the EA but I take issue with the lack of attention to climate change. Add me to the category of people that are turned off from the community because it's weak stance of climate change.”

“I would like to offer a simple personal note that my focus and energy has turned away from EA to climate change only. I now spend all of my time and energy on climate related matters. Though I still value EA's approach to charity giving, it has begun to feel like a voice from the past, from a world that no longer exists. This is how it registers with me now.”

“I agree that I fall closer into this camp. Where the action tends to be towards climate change and that immediate threat, while I play intellectual exercises with EA. The focus on animal welfare and eating a vegan diet help the planet and fighting malaria are related to climate change. Other issues such as AI and nuclear war seem far fetched. It's hard for me to see the impacts of these threats without preying on my fears. While climate change has an impact on my daily life.”

“quickly after discovering EA (about 4 years ago) I got the impression that climate change and threats to biodiversity were underestimated, and was surprised at how little research and discussion there seems to be.”

“Yes, absolutely...the 80K podcast occasionally pays it lip service by saying "we agree with the scientific consensus", but it doesn't seem to go much further than that”

It is of course important to acknowledge that climate change was voted as the no. 2 top priority cause in the EA survey 2019. I find this result both reassuring and surprising. My surprise is due to everything that I am talking about in this post.

7) EA tries to quantify problems using simple models, leading to undervaluing of action on climate change

Every extra ton of CO2 in the atmosphere contributes to many effects, including:

- Changed weather patterns - more intense rain, more intense droughts, more heatwaves

- Increased wildfires

- Increased sea levels, higher storm surges, more intense hurricanes

- Melting glaciers, melting sea ice, reduced snowpack

- Ocean acidification

All of these effects have complex dynamics that interact with specific geographical and human population features around the world.

All of these effects have direct and indirect impacts on human lives around the world. Properly weighing up the impact of climate change would require a cost function that we don’t know.

They also have impacts beyond those on humans. SR15 predicted that at 2C of warming, 99% of all coral reefs will die. How does EA value that loss? There’s obviously not a single answer, but it is at least true that in both of the previous climate change cost effectiveness analyses, considerations like this were completely absent.

8) EA model of prioritising between causes doesn’t work for climate change which has a broad and effectively permanent impact

A choice between buying a malaria net or a deworming tablet is relatively simple to model. However, regardless of which you choose, the product you buy, and how you deliver that product to the people who need it, will have a carbon footprint. In this way, climate change does not fit neatly into an EA worldview which promotes the prioritisation of causes so that you can choose the most important one to work on. Global health interventions have a climate footprint, which I’ve never seen accounted for in EA cost effectiveness calculations.

Climate change is a problem which is getting worse with time and is expected to persist for centuries. Limiting warming to a certain level gets harder with every year that action is not taken. Many of the causes compared by EA don’t have the same property. For example, if we fail to treat malaria for another ten years, that won’t commit humanity to live with malaria for centuries to come. By contrast, within a decade, limiting warming to 1.5C will become impossible.
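To illustrate why delay makes the problem harder, here is a stylized sketch; it is a simplification for illustration, not an SR15 method. It assumes emissions stay flat at ~40 Gt CO2/year until action begins, then decline exponentially. Exponential decline at a continuous rate r from a level E emits E/r in total, so staying within the remaining budget requires r = E / (remaining budget). It ignores negative emissions and non-CO2 gases, and reuses the assumed 420 Gt CO2 budget counted from the start of 2018.

```python
import math

# Stylized model (an assumption for illustration, not from SR15): emissions
# stay flat at `annual` Gt CO2/yr for `delay_years`, then fall exponentially,
# E(t) = annual * exp(-r * t). Cumulative future emissions are annual / r,
# so staying within the remaining budget requires r = annual / remaining.


def required_decline_rate(budget: float, annual: float, delay_years: float) -> float:
    """Continuous decline rate r needed to stay within `budget` after a delay.

    Returns math.inf when the delay alone uses up the whole budget.
    """
    remaining = budget - annual * delay_years
    if remaining <= 0:
        return math.inf
    return annual / remaining


def annual_cut(rate: float) -> float:
    """Convert a continuous rate r into the equivalent fractional cut per year."""
    return 1.0 - math.exp(-rate)


if __name__ == "__main__":
    for delay in (0, 2, 5, 8, 11):
        r = required_decline_rate(420.0, 40.0, delay)
        start = 2018 + delay
        if math.isinf(r):
            print(f"Start in {start}: 1.5C budget already spent")
        else:
            print(f"Start in {start}: cut emissions ~{annual_cut(r):.0%} per year")
```

Under these assumptions the required cut rises from roughly 9% a year if action starts in 2018, to roughly 17% a year from 2023 and about a third every year from 2026; by 2029 the budget is gone. The exact figures depend on the model, but the direction does not: every year of delay raises the rate of decline required.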

9) Case study: Could climate change make Earth uninhabitable for humans?

Consider this talk from EA Global 2019. I am using it as an example; I don't think my criticisms are unique to this talk.

- As an EA talk about Climate Change, of course it focuses on whether climate change is an x-risk.

- At 1:30, the speaker presents three possible mechanisms for climate being an x-risk - (1) Making Earth Uninhabitable, (2) Increasing Risk of Other X-Risks, (3) Contributing to Societal Collapse. The speaker makes it clear that (2) and (3) are likely to be higher impact, but are less tractable to study, and so the talk will focus on (1). This is a classic EA choice, and it substantially narrows the climate risks under discussion.

- At 4:55, the speaker makes the argument that we are planning to create settlements on the Moon and Mars, and that surviving on a warmer Earth will surely be easier. I have encountered this kind of technology-based reassurance before, such as this comment which suggested that a warmer world could be made habitable by “ice vests” or “a system which burns fuel and then uses absorption chilling to cool the body”. This seems to miss the point that the current world climate makes life very comfortable and easy, and that our ability to survive in a more hostile climate doesn’t make that avoidable outcome much more acceptable.

- In the Q&A, at 21:00, the questioner said “... the mainline expectation is not so bad, is that a fair reading of your view?”. In the speaker’s answer they describe deaths from climate change as being likely to be of the same scale as the number of people who die in traffic accidents. This feels like a really trivialising comparison, and one which I’m highly sceptical about the veracity of. The speaker goes on to frame climate change as being a low priority x-risk. This is a perfect example of the way that EA downplays the severity of the mainline impacts of climate change.

- At 22:40 the questioner asked “do you feel that it is time now to be mobilising and transforming the economy, or do you feel like the jury is still out on that…” (which seems like an incredibly surprising question at this point in the climate movement - the questioner sounds a bit like a climate skeptic based on this question). In the speaker’s answer they state that we can emit as much in the future as we have emitted in the past. This makes it sound like we have plenty of time to address climate change. If we wish to limit warming to 1.5C this is definitely false - as stated above, at current emissions levels we will have spent our remaining carbon budget for a 1.5C world before 2030.

10) Case study: Climate is visibly absent or downplayed within some key EA publications and initiatives

On the front page of https://www.effectivealtruism.org/ there are seven articles listed. Climate Change isn’t mentioned, but AI, Biosecurity, and Animal Welfare are.

On the linked Read more… page there is a list of Promising Causes listed. Climate Change is not on this list.

In April 2018 Will MacAskill gave a TED talk titled “What are the most important moral problems of our time?”. The answer he presented was 1) Global Health, 2) Factory Farming, 3) Existential Risks. He mentions “Extreme Climate Change” in his list of x-risks and goes on to make the argument that none of these are very likely, but that the moral weight of extinction demands that we work on them. As detailed above, this is the classic lens through which EA views climate change: as an unlikely x-risk, rather than as a pressing problem facing the world today.

There are currently four available Effective Altruism Funds:

None of these directly fund work on climate change. The Long-Term Future fund is currently focused on cause prioritisation and AI risk.

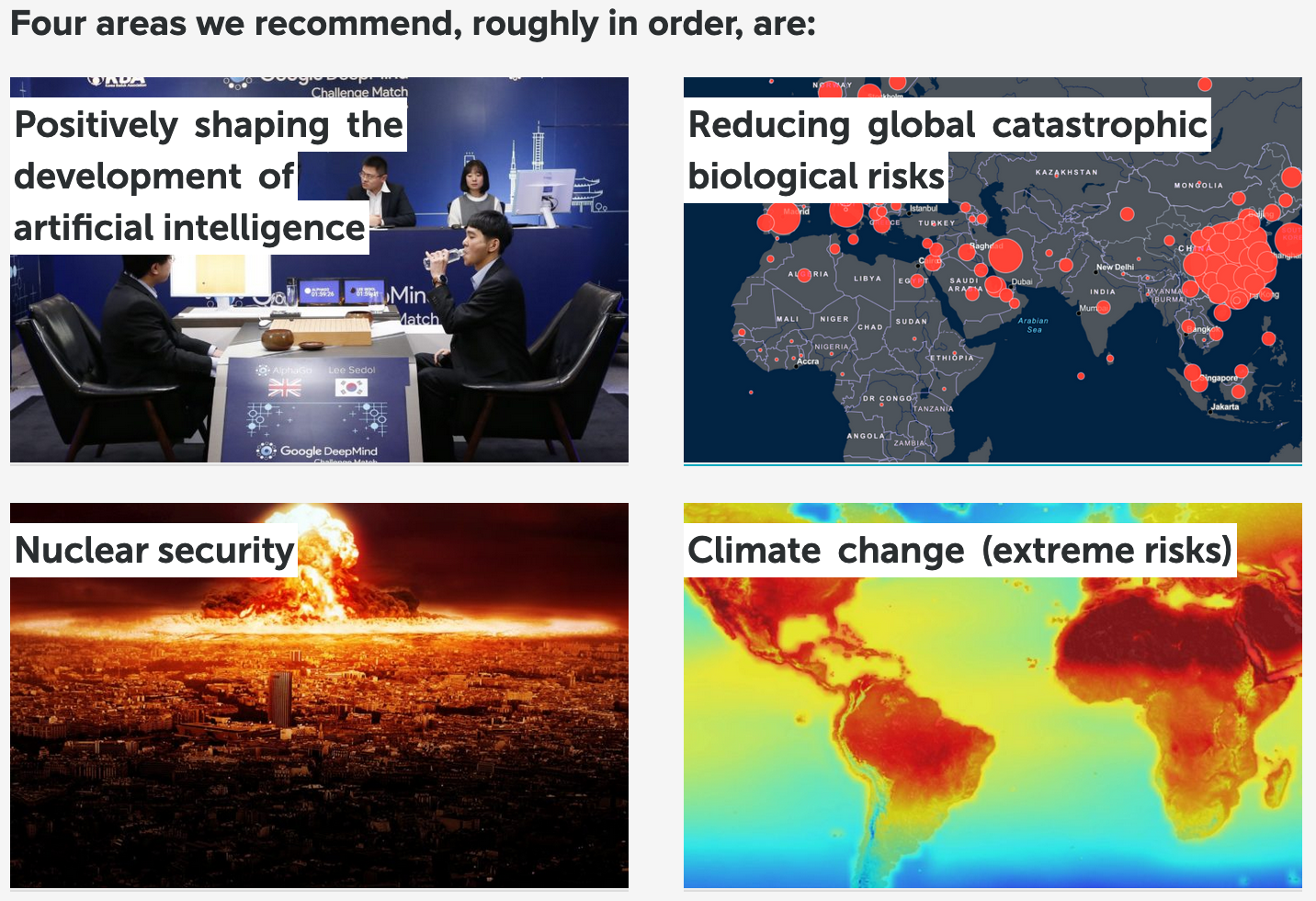

80,000 Hours has a summary of the cause areas they have studied. The first section is “Emerging technologies and global catastrophic risks” and this makes four recommendations. This is unusually good for EA as climate change makes it into the top 4 within this first section. However, as discussed earlier, the problem profile is focussed on the “extreme risks”.

Appendix 1) Timeline of climate related EA publications

In an effort to quantify how much attention climate has received within EA, I have compiled a list of relevant articles and publications from the last few years. This list is certainly not comprehensive but was compiled as part of writing this article.

- 2020-05 - 80K Hours - Climate Problem Profile [link]

- 2020-04 - Louis Dixon - Climate Shock by Wagner and Weitzman [EA Forum]

- 2020-02 - John Halstead, Johannes Ackva, FP - Climate & Lifestyle Report [pdf]

- 2020-01 - Louis Dixon - What should EAs interested in climate change do? [EA Forum]

- 2020-01 - EA Survey 2019 - Climate Change is no. 2 voted cause [EA Forum]

- 2019-12 - EA Global London - Could climate change make Earth uninhabitable for humans? [link]

- 2019-12 - Giving Green launched [link]

- 2019-12 - Linch - 8 things I believe about climate change, including discussion of whether climate change is an x-risk [EA Forum]

- 2019-12 - Vox - Want to fight climate change effectively? Here’s where to donate your money. [link]

- 2019-11 - ImpactMatters launched [link]

- 2019-11 - Taylor Sloan - Applying effective altruism to climate change [blog part 1, blog part 2]

- 2019-10 - Martin Hare Robertson - Review of Climate Cost-Effectiveness Analyses [EA Forum]

- 2019-10 - Martin Hare Robertson - Updated Climate Change Problem Profile [EA Forum]

- 2019-10 - Hauke Hillebrandt - Global development interventions are generally more effective than Climate change interventions [EA Forum]

- 2019-09 - 2019-11 - Future of Life - Not Cool, 26 episode podcast [link]

- 2019-08 - Future of Life - The Climate Crisis as an Existential Threat with Simon Beard and Haydn Belfield [podcast]

- 2019-08 - Will MacAskill - AMA Response: Do you think Climate is neglected by EA? [EA Forum]

- 2019-07 - Danny Bressler - How Many People Will Climate Change Kill? [EA Forum]

- 2019-07 - kbog - Extinguishing or preventing coal seam fires is a potential cause area [EA Forum]

- 2019-06 - Vox - Is climate change an “existential threat” — or just a catastrophic one? [link]

- 2019-05 - John Halstead + others - EA comment thread: Is biodiversity loss an x-risk? [EA Forum]

- 2019-04 - Louis Dixon - Does climate change deserve more attention within EA? [EA Forum]

- 2019-04 - Tessa Alexanian, Zachary Jacobi - Rough Notes for “Second-Order Effects Make Climate Change an Existential Threat” [google doc]

- 2019-03 - LetsFund - Clean Energy Innovation Policy [link]

- 2019-03 - EA Global London - Toby Ord Fireside chat, incl Climate Tail risk is underappreciated [link]

- 2019-01 - ozymandias - Climate Change Is, In General, Not An Existential Risk [EA Forum]

- -> 2019-08 - Pawntoe4 - Critique of Halstead’s doc [EA Forum]

- 2018-10 - John Halstead - Is Climate an x-risk? [google doc]

- 2018-05 - John Halstead/FP - Climate Change Cause Area Report [pdf]

- 2018-03 - John Halstead - Climate change, geoengineering, and existential risk [EA Forum]

- 2017-12 - CSER - Climate Change and the Worst-Case Scenario [link]

- 2017-06 - Harvard EA - HOW TO ADDRESS CLIMATE CHANGE WITH EFFECTIVE GIVING [pdf]

- 2016-07 - Future of Life - Op-Ed: Climate Change Is the Most Urgent Existential Risk [link]

- 2016-04 - 80K Hours - Climate Problem Profile [link]

- 2016-04 - Giving What We Can - Climate Problem Profile [link]

- 2016-01 - mdahlhausen - Searching for Effective Environmentalism Candidates [blog post]

- 2015-11 - Effective Environmentalism FB Group Created

- 2015-11 - Future of Life - Climate Problem Profile [link]

- 2015-04 - CSER - Climate Change and The Common Good [link]

- 2013-05 - Open Philanthropy - Anthropogenic Climate Change [link]

I disagree with the way "neglectedness" is conceptualised in this post.

Climate change is not neglected among the demographics EA tends to recruit from. There are many, many scientists, activists, lawyers, policymakers, journalists, researchers of every stripe working on this issue. It has comprehensively (and justifiably) suffused the narrative of modern life. As another commenter here puts it, it is "The Big Issue Of Our Time". The same simply cannot be said of other cause areas, despite many of those problems matching or exceeding climate change in scale.

When I was running a local group, climate change issues were far and away the #1 thing new potential members wanted to talk about / complained wasn't on the agenda. This happened so often that "how do I deal with all the people who just want to talk about recycling" was a recurring question among other organisers I knew. I'd be willing to bet that >80% of other student group organisers have had similar experiences.

This post itself argues that EA is losing potential members by not focusing on climate change. But this claim is in direct tension with claims that climate change is neglected. If there are droves of potential EAs who only want to talk about climate change, then there are droves of new people eager to contribute to the climate change movement. The same can hardly be said for AI safety, wild animal welfare, or (until this year, perhaps) pandemic prevention.

Many of the claims cited here as reasons to work on climate change could be applied equally well to other cause areas. I don't think there's any reason to think simple models capture climate change less well than they do biosecurity, great power conflict, or transformative AI: these are all complex, systemic, "wicked problems" with many moving parts, where failure would have "a broad and effectively permanent impact".

This is why I object so strongly to the "war" framing used here. In (just) war, there is typically one default problem that must be solved, and that everyone must co-ordinate on solving or face destruction. But here and now we face dozens of "wars", all of which need attention, and many of which are far more neglected than climate change. Framing climate change as the default problem, and working on other cause areas as defecting from the co-ordination needed to solve it, impedes the essential work of cause-impartial prioritisation that is fundamental to doing good in a world like ours.

Thanks for your feedback.

I think it's worth emphasizing that the title of this post is "Climate Change Is Neglected By EA", rather than "Climate Change Is Ignored By EA", or "Climate Change Is the Single Most Important Cause Above All Others". I am strongly in favor of cause-impartial prioritisation.

In "Updated Climate Change Problem Profile" I argued that Climate Change should receive an overall score of 24 rather than 20. That's a fairly modest increase.

I don't agree with this "direct tension". I'm arguing that (A) Climate Change really is more important than EA often makes it out to be, and that (B) EA would benefit from engaging with people about climate change from an EA perspective. Perhaps as part of this engagement you can encourage them to also consider other causes. However, starting out from an EA position which downplays climate change is both factually wrong and alienating to potential EA community members.

Thanks for the reply. As I recently commented on a different post, engagement with commenters is a crucial part of a post like this, and I'm glad you're doing that even though a lot of the response has been negative (and some of it has been mean, which I don't support). That isn't easy.

This sounds good to me. However, you don't actually give much indication in the post about how you think climate change stacks up compared to other cause areas. Though you do implicitly do so here:

For comparison, on 80K's website right now, AI risk, global priorities research and meta-EA are currently at 26, biosecurity and ending factory farming are at 23, and nuclear security and global health are at 21. So your implicit claim is that, on the margin, climate change is less important than AI and GPR, slightly more important than biosecurity and farmed animal welfare, and much more important than nuclear security and global health (of the bednets and deworming variety). Does that sound right to you? That isn't a gotcha, I am genuinely asking, though I do think some elaboration on the comparisons would be valuable.

I think I'm sticking to the "direct tension" claim. If oodles of smart, motivated young people are super-excited about climate change work, a decent chunk of them will end up doing climate change work. We're proposing that these are the sorts of people who would otherwise make a good fit for EA, so we can assume they're fairly smart and numerate. I'd guess they'd have less impact working on climate change outside EA than within it, but they won't totally waste their time. So if there are lots of these people, then lots of valuable climate change work will be done with or without EA's involvement. Conversely, if there aren't lots of these people (which seems false), the fact that we're alienating (some of) them by not prioritising climate change isn't a big issue.

I think you could argue that climate change work remains competitive with other top causes despite not being neglected (I'm sceptical, but it wouldn't astound me if this were the case). I think you could argue that the gains in recruitment from small increases in perceived openness to climate change work are worth it, despite climate change not being neglected (this is fairly plausible to me). But I don't think you can simultaneously argue that climate change is badly neglected, and that we're alienating loads of people by not focusing on it.

That does sound about right to me.

My claim is that EA currently (1) downplays the impact of climate change (e.g. focusing on x-risk, downplaying mainstream impacts) and (2) downplays the value of working on climate change (e.g. low neglectedness, low tractability). If you agree that (1, 2) are true, then EA is misleading its members about climate change and biasing them to work on other issues.

Perhaps I have misunderstood your argument, but I think you're saying that (1, 2) don't matter because lots of people already care about climate change, so EA doesn't need to influence more people to work on climate change. I would argue that regardless of how many people already care about climate change, EA should seek to communicate accurately about the impact and importance of work on different cause areas.

Could you elaborate a bit on why? This doesn't sound insane to me, but it is a pretty big disagreement with 80,000 Hours, and I am more sympathetic to 80K's position on this.

My claim is that the fact that so many (smart, capable) people care about climate change work directly causes it to have lower expected value (on the margin). The "impact and importance of work on different cause areas" intimately depends on how many (smart, capable) people are already working or planning to work in those areas, so trying to communicate that impact and importance without taking into account "how many people already care" is fundamentally misguided.

The claim that climate change is a major PR issue for EA, if true, is evidence that EA's position on climate change is (in at least this one respect) correct.

I'd like to extend my previous model to have three steps:

(1) EA downplays the impact of climate change (e.g. focusing on x-risk, downplaying mainstream impacts).

(2) EA downplays the value of working on climate change (e.g. low neglectedness, low tractability).

(3) EA discourages people from working on climate change in favor of other causes.

I think you are arguing that since lots of people already care about climate change, (3) is a sensible outcome for EA. To put this more explicitly, I think you are supportive of (2), likely due to you perceiving it as being not neglected. As I've stated in this post, I think it is possible to argue that climate change is not actually neglected. In fact you suggested below some good arguments for this:

But let's put that to one side for a moment, because we haven't talked about (1) yet. Even if you think the best conclusion for EA to make is (3), I still think it's important that this conclusion is visibly drawn from the best possible information about the expected impacts of climate change. Sections (1), (4), (5), and (7) in my post speak directly to this point.

I look at the way that EA talks about climate change and I think it misses some important points (particularly see section (4) of my post). These gaps in EA's approach to climate change cause me to have lower trust in EA cause prioritization, and at the more extreme end make me think "EA is the community who don't seem to care as much about climate change - they don't seem to think the impact will be so bad". I think that's a PR issue for EA.

Two things:

I feel like we're still not connecting regarding the basic definition of neglectedness. You seem to be mixing it up with scale and tractability in a way that isn't helpful to precise communication.

Thoughtful post!

I don't agree with your analysis in (3) - neglectedness to me is asking not 'is enough being done' but 'is this the thing that can generate the most benefit on the margin'.

For climate change it seems most likely not; hundreds of billions of dollars (and likely millions of work-years) are already spent every year on climate change mitigation (research, advocacy, or energy subsidies). The whole EA movement might move, what, a few hundred million dollars per year? Given the relatively scarce resources we have, both in time and money, it seems like there are places where we could do more good (the whole of the AI safety field has only a couple hundred people IIRC).

Thank you for your comments - I have some responses:

A huge amount is already spent on global health and development, and yet the EA community is clearly happy to try and find particularly effective global health and development interventions. There are definitely areas within the hugely broad field of climate change action which are genuinely neglected.

This seems pessimistic about the possible size of the EA movement. Maybe if EA didn't downplay climate change so much, it might attract more people to the movement and hence have a greater total amount of resources to distribute.

This is true. To steelman your point (and do some shameless self-promotion) - at Let's Fund we think funding advocacy for clean energy R&D funding is one such intervention, so they do exist.

Though I'm not entirely sure the comparison is fair. The kind of global poverty interventions that EAs favour (for better or for worse) tend to be near-term, low-risk, with a quick payoff. Climate change interventions are much less certain, higher-variance, and with a long payoff.

Thanks for the link, that's very interesting! I've seen that you direct donations to the Clean Energy Innovation program of the Information Technology and Innovation Foundation. How confident are you that the funds are actually fully used for the purpose? I understand that their accounting will show that all of your funds will go there, but how confident are you that they will not reduce their discretionary spending on this program as a consequence? (I glanced at some of their recent work, and they have some pieces that are fairly confrontational towards China. While this may make sense from a short-term US perspective, it might even be net harmful if one takes a broader view and/or takes into account the possibility of a military escalation between the US and China.) Did you consider the Clean Air Task Force when looking for giving opportunities?

You raise some excellent points that have a bunch of implications for estimating the value of EA funding policy research.

I'm very confident that the funds are used for their Clean Energy Innovation program.

We have an agreement with ITIF that any donations through Let’s Fund will be restricted to the Clean Energy Innovation Program, led by Professor David Hart and Dr Colin Cunliff.

We're in regular contact with their fundraising person and with David Hart.

They have recently hired an additional person and are now also hiring for another policy analyst in Brussels. We think this is very likely in part due to the Let's Fund grant.

I think it is plausible, but fairly unlikely, that this effect is massive. Programs at think tanks such as ITIF do not tend to be topped up much with discretionary spending, but there is a bit of a "market" in which the person who leads a program needs to acquire grant funding. The better the program is at receiving grant funding, the more it will be scaled up. Otherwise, there would be no incentive for people running the individual programs to apply for grants.

However, of course additional funds, even if restricted to a program will likely be good for ITIF as a whole, because it benefits from the economies of scale and more basic infrastructure (e.g. support staff, a bigger office, communications staff).

> they have some pieces that are fairly confrontational towards China

Yes, so as argued above, I think donations through Let's Fund will predominantly benefit their Clean Energy program, but a small effect on ITIF's activities as a whole can't be ruled out.

ITIF works on other issues and I haven't vetted their value in depth, but my superficial review of ITIF's overall activities leads me to believe that none of their activities are very controversial. This is in part why we selected ITIF.

ITIF is a think tank based in Washington, DC. The Global Go To Think Tank Index has ranked ITIF 1st in their “Science and Technology think tanks” category in 2017 and 2018.

They also rank quite well on the general rankings.

It's a very academic think tank with lots of their staff members holding advanced degrees and having policy experience in technocratic environments. I think they can still be described as quite centrist and nonpartisan, though ITIF's staff seems closer to the US Democrats than other parties. Also, it is fairly libertarian in terms of economic thinking.

More about ITIF here: https://itif.org/about

On their China stance in particular: I think they mostly argue against some of China's economic policies in a constructive, not confrontational, way (see everything about China here: https://itif.org/regions/china ). You could see the sign of the value of that going either way: it might be that constructive criticism is better than not talking about it, which leaves populists as the only ones who talk about some of China's anti-competitive practices.

This is all very uncertain however. People who are very worried about unintended consequences might not want to donate to ITIF for those reasons.

Yes, I read the FP report on it. I think the Clean Air Task Force is an excellent giving opportunity in climate change. The main reason why I think ITIF has higher expected value is that ITIF is more narrowly focused on increasing clean energy R&D spending, which I make the case is the best policy to push currently on the margin. However, it is perhaps more risky than the Clean Air Task Force, which is more diversified.

Thanks a lot, this is super helpful! I particularly appreciated that you took the time to explain the internal workings of a typical think tank, this was not at all clear to me.

I find this whole genre of post tedious and not very useful. If you think climate change is a good cause area, just write an actual cause prioritization analysis directly comparing it to other cause areas, and show how it's better! If that's beyond your reach, you can take an existing one and tweak it. This reads like academic turf warring, a demand that your cause area should get more prestige, instead of a serious attempt to help us decide which cause areas are actually most important.

OK, but I don't know if anyone here was previously assuming that the impacts will definitely not be severe. The EA community has long recognized the risks of more severe impact. So this doesn't seem like a point that challenges what we currently believe.

I haven't read those previous posts you've written, but the burden of argument is on showing that a cause is effective, not proving that it's ineffective. We have many causes to choose from, and the Optimizer's Curse means we must focus on ones where we have pretty reliable arguments. Merely speculating "what if climate change is worse than the best evidence suggests???" does nothing to show that we've neglected it. It just shows that further cause prioritization analysis could be warranted.

This criticism doesn't make sense to me. The mere fact that a problem will be unsolved doesn't mean it's more important for us to work on it. What matters is how much we can actually accomplish by trying to solve it.

That's fine. Marginal/social cost of carbon is the superior way to think about the problem.

I've seen EAs talk about 'mainstream' costs many times. GWWC's early analysis on climate change did this in detail. In any case, my estimate of the long-term economic costs of climate change (detailed writeup in Candidate Scoring System: http://bit.ly/ea-css ) aggregates over the various scenarios.

This phrasing suggests to me that you didn't read, or perhaps don't care, what is actually in many of the links that you're citing. We do not believe that climate change is irrelevant because it's not an x-risk. We do, however, believe that the arguments in favor of mitigating x-risks do not apply to climate change. So that provides one reason to prioritize x-risks over climate change. This is clearly a correct conclusion and you haven't provided arguments to the contrary.

If you think that people will like EA more when they see us addressing climate change, why don't you highlight all the examples of EAs actually addressing climate change (there are many examples) instead of writing (yet another, we've had many) post making the accusation that we neglect it?

Other problems have complex, far-reaching negative consequences too, so it's not obvious that simplistic modeling leads to an under-prioritization of climate change. It is very easy to think of analogous secondary effects for things like poverty.

In any case, estimating the damages of climate change upon the human economy has already been addressed by multiple economic meta-analyses. Estimating the short- and medium-term deaths has been done by GWWC. Estimating the impacts on wildlife is generally sidelined because we have no idea if they are net positive or net negative for wild animal welfare.

I briefly addressed it in Candidate Scoring System, and determined that it was very small. If you look at CO2 emissions per person and compare it to the social cost of carbon, you can see that it's not much for a person in the United States, let alone for people in (much-lower-emissions) developing countries.
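The comparison in the comment above comes down to one multiplication: a person's annual CO2 emissions times the social cost of carbon. A minimal sketch, assuming damages scale linearly with emissions at a constant social cost of carbon; the function name and the input figures are hypothetical placeholders chosen only to show the arithmetic, not the values used in Candidate Scoring System.

```python
def annual_climate_cost_per_person(tonnes_co2_per_year: float,
                                   scc_usd_per_tonne: float) -> float:
    """Annual external damage (USD) attributed to one person's emissions.

    Assumes damages are linear in emissions at a constant social cost
    of carbon (SCC). Both inputs are illustrative placeholders.
    """
    if tonnes_co2_per_year < 0 or scc_usd_per_tonne < 0:
        raise ValueError("inputs must be non-negative")
    return tonnes_co2_per_year * scc_usd_per_tonne


# Hypothetical round numbers, for illustration only (not from the source).
high_emitter = annual_climate_cost_per_person(15.0, 50.0)
low_emitter = annual_climate_cost_per_person(1.5, 50.0)
print(f"higher-emissions person: ${high_emitter:,.0f}/year")
print(f"lower-emissions person:  ${low_emitter:,.0f}/year")
```

A constant SCC is the simplest possible choice; published SCC estimates vary with the emission year and the discount rate, so a fuller model would take those as inputs rather than a single number.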

Climate change being expected to persist for centuries is conditional upon the absence of major geoengineering. But we could quite plausibly see that in the later 21st century or anytime in the 22nd century.

Failing to limit warming to a certain level is a poor way of defining the problem. If we can't stay under 1.5C, we might stay under 2.0C, which is not that much worse. The right way to frame the problem is to estimate how much accumulated damage will be caused by some additional GHGs hanging around the atmosphere for, probably, a century or more. That is indeed a long term cost.

But other cause areas also have major long-run impacts. There is plenty of evidence and arguments for long-run benefits of poverty relief, health improvements and economic growth.

Pick another cause area that's currently highlighted, compare it to climate change, and show how climate change is a more effective cause area.

I often disagree with kbog, and I think this comment was pretty harsh, but I agree with his criticisms of the post.

I concur; I disagree with the tone of kbog's comment but broadly agree with the content.

(A) Carbon budgets express an important idea about continued emissions committing us to particular levels of warming. This is particularly important when we are likely to exceed the 1.5C carbon budget in less than 10 years. (B) The 80K Hours problem profile also doesn't mention marginal/social cost of carbon. (C) Social cost of carbon is usually computed from an IAM, a practice which has been described as follows:

"IAMs can be misleading – and are inappropriate – as guides for policy, and yet they have been used by the government to estimate the social cost of carbon (SCC) and evaluate tax and abatement policies." [Pindyck, 2017, The Use and Misuse of Models for Climate Policy]

I reviewed your writeup, focusing on pages 47 - 61 and I have some questions/comments.

Short term impacts (page 56):

Long run growth (page 56, 57)

Appendix 1 lists many of the more recent EA engagements with climate change. I agree that EA has not ignored climate change. However, the point of this post was to discuss some trends which I have observed in how EA engages with climate change.

There are legitimate causes for concern about both of the sources you cite: the first relies on IAMs, and GWWC relies on a single 2014 WHO publication which has some important limitations.

I agree that this is a plausible possibility, but not one which I'd like to have to rely on.

That depends on what you count as "not that much worse". The IPCC SR15 report predicts that hundreds of millions more people will be severely impacted at 2.0C versus 1.5C.

I already did that: "Review of Climate Cost-Effectiveness Analyses". I would love to get your feedback on that post.

You can also use economists' subjective estimates ( https://policyintegrity.org/files/publications/ExpertConsensusReport.pdf ) or model cross validation ( https://www.rff.org/publications/working-papers/the-gdp-temperature-relationship-implications-for-climate-change-damages/ ) and the results are not dissimilar to the IAMs by Nordhaus and Howard & Sterner. (it's 2-10% of GWP for about three degrees of warming regardless.)

In any case I think that picking a threshold (based on what exactly??) and doing whatever it takes to get there will have more problems than IAMs do.

Nice, that looks like a good, noteworthy post. I will look at it in more detail (it would take a while). Until then I'm revising from 258,000 tons down to 40,000 (the geometric mean of their estimate and your 15,620, but biased a little towards you).

"40% of Earth’s population lives in the tropics, with 50% projected by 2050 (State of the Tropics 2014) so we estimate 6 billion people affected (climate impacts will last for multiple generations)." - The world population is expected to be ~10 billion by 2050, so 50% would be 5 billion. How are you accounting for multiple generations?

I figured many people will be wealthy and industrialized enough to generally avoid serious direct impacts, so it wasn't an estimate of how many people will live in warming tropical conditions. But looking at it now, I think that's the wrong way to estimate it because of the ambiguity that you raise. I'm switching to all people potentially affected (12 billion), with a lower average QALY loss.

Described in the "short-run, robust welfare" section of "issue weight metrics": it's the fact that increases in wealth for middle-income consumers may be net neutral or harmful in the short run because they increase their meat consumption.

Subjective guess. Do you think it is too high or too low? Severely too high, severely too low?

Arbitrary guess based on the quoted factors. Do you feel that is too low or too high?

I'm not saying to rely on it. I'm saying your estimates of climate damages cannot rely on geoengineering not happening. The chance that we see "full" geoengineering by 2100 (restoring the globe to optimal or preindustrial temperature levels) is, hmm 25%? Higher probability for less ambitious measures.

If we were in the 1980s it would be improper to write a model which assumed that cheap renewable energy would never be developed.

Based on these changes I've increased the weight of air pollution from 15.2 to 16. (It's not much because most of the weight comes from the long run damage, not the short run robust impacts. I've increased short run impact from 2.15 million QALYs to 3 million.)

Yes I will look into that and update things accordingly.

Will and Rob devote a decent chunk of time to climate change on this 80K podcast, which you might find interesting. One quote from Will stuck with me in particular:

I agree that, as a community, we should make sure we're up-to-date on climate change to avoid making mistakes or embarrassing ourselves. I also think, at least in the past, the attitude towards climate work has been vaguely dismissive. That's not helpful, though it seems to be changing (cf. the quote above). As others have mentioned, I suspect climate change is a gateway to EA for a lot of altruistic and long-term-friendly people (it was for me!).

As far as direct longtermist work, I'm not convinced that climate change is neglected by EAs. As you mention, climate change has been covered by orgs like 80K and Founders Pledge (disclaimer, I work there). The climate chapter in The Precipice is very good. And while you may be right that it's a bit naive to just count all climate-related funding in the world when considering the neglectedness of this issue, I suspect that even if you just considered "useful" climate funding, e.g. advocacy for carbon taxes or funding for clean energy, the total would still dwarf the funding for some of the other major risks.

From a non-ex-risk perspective, I agree that more work could be done to compare climate work to work in global health and development. There's a chance that, especially when considering the air pollution benefits of moving away from coal power, climate work could be competitive here. Hauke's analysis, which you cite, has huge confidence intervals which at least suggest that the ranking is not obvious.

On the one hand, the great strength of EA is a willingness to prioritize among competing priorities and double down on those where we can have the biggest impact. On the other hand, we want to keep growing and welcoming more allies into the fold. It's a tricky balancing act and the only way we'll manage it is through self-reflection. So thanks for bringing that to the table in this post!

As somewhat of an outsider, this has always been my impression. For example, I expect that if I choose to work in climate, some EAs will infer that I have inferior critical thinking ability.

There's something about the "gateway to EA" argument that is a bit off-putting. It sounds like "those folks don't yet understand that only x-risks are important, but eventually we can show them the error of their ways." I understand that this viewpoint makes sense if you are convinced that your own views are correct, but it strikes me as a bit patronizing. I'm not trying to pick on you in particular, but I see this viewpoint advanced fairly frequently so I wanted to comment on it.

Thanks for sharing that. It's good to know that that's how the message comes across. I agree we should avoid that kind of bait-and-switch which engages people under false pretences. Sam discusses this in a different context as the top comment on this post, so it's an ongoing concern.

I'll just speak on my own experience. I was focused on climate change throughout my undergrad and early career because I wanted to work on a really important problem and it seemed obvious that this meant I should work on climate change. Learning about EA was eye-opening because I realized (1) there are other important problems on the same scale as climate change, (2) there are frameworks to help me think about how to prioritize work among them, and (3) it might be even more useful for me to work on some of these other problems.

I personally don't see climate change as some separate thing that people engage with before they switch to "EA stuff." Climate change is EA stuff. It's a major global problem that concerns future generations and threatens civilization. However, it is unique among plausible x-risks in that it's also a widely-known problem that gets lots of attention from funders, voters, politicians, activists, and smart people who want to do altruistic work. Climate change might be the only thing that's both an x-risk and a Popular Social Cause.

It would be nice for our climate change message to do at least two things. First, help people like me, who are searching for the best thing to do with their life and have landed on climate because it's a Popular Social Cause, discover the range of other important things to work on. Second, help people like you, who, I assume, care about future generations and want to help solve climate change, work in the most effective way possible. I think we can do both in the future, even if we haven't in the past.

Yeah, I think many groups struggle with the exact boundary between "marketing" and "deception". Though EAs are in general very truthful, different EAs will still differ both in where they put that boundary and their actual evaluation of climate change, so their final evaluation of the morality of devoting more attention to climate change for marketing purposes will differ quite a lot.

I was arguing elsewhere in this post for more of a strict "say what you believe" policy, but out of curiosity, would you still have that reaction (to the gateway/PR argument) if the EA in question thought that climate change work was, like, pretty good, not the top cause, but decent? To me that seems a lot more ethical and a lot less patronising.

Thanks for the question as it caused me to reflect. I think it is bad to intentionally misrepresent your views in order to appeal to a broader audience, with the express intention of changing their views once you have them listening to you and/or involved in your group. I don't think this tactic necessarily becomes less bad based on the degree of misrepresentation involved. I would call this deceptive recruiting. It's manipulative and violates trust. To be clear, I am not accusing anyone of actually doing this, but the idea seems to come up often when "outsiders" (for lack of a better term) are discussed.

Thanks for your comments and for linking to that podcast.

In my post I am arguing for an output metric rather than an input metric. In my opinion, climate change will stop being a neglected topic when we actually manage to start flattening the emissions curve. Until that actually happens, humanity is on course for a much darker future. Do you disagree? Are you arguing that it is better to focus on an input metric (level of funding) and use that to determine whether an area has "enough" attention?

It seems to me that this conception of neglectedness doesn't help much with cause prioritization. Every problem EAs think about is probably neglected in some global sense. As a civilization we should absolutely do more to fight climate change. I think working on effective climate change solutions is a great career choice; better than, like, 98% of other possible options. But a lot of other factors bear on what the absolute best use of marginal resources is.

But this doesn't make any sense. It suggests that if a problem is (a) severe and (b) insuperable, we should pour all our effort into it forever, achieving nothing in the process.

The impact equation in Owen Cotton-Barratt's Prospecting for Gold might be helpful here. Note that his term for neglectedness (what he calls uncrowdedness) depends only on the amount of (useful) work that has already been done, not the value of a solution or the elasticity of progress with work (i.e. tractability). (We can generalise from "work done" to "resources spent", where effort is one resource you can spend on a problem.)
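Written out, the impact equation referred to above factors the value of extra resources into three terms. This is a sketch of the factoring as we understand it from Prospecting for Gold (the same structure 80,000 Hours uses for scale, solvability and neglectedness); the symbol $R$ for resources already spent on the problem is introduced here for illustration. The intermediate quantities cancel, so the product is simply good done per extra unit of resources:

```latex
\frac{\text{good done}}{\text{extra resources}}
  = \underbrace{\frac{\text{good done}}{\text{\% of problem solved}}}_{\text{scale}}
    \times \underbrace{\frac{\text{\% of problem solved}}{\text{\% increase in resources}}}_{\text{tractability}}
    \times \underbrace{\frac{\text{\% increase in resources}}{\text{extra resources}}}_{\text{uncrowdedness}}

% With R = resources already spent, an extra \Delta R raises resources
% by the fraction \Delta R / R, so the last factor depends on R alone:
\text{uncrowdedness} = \frac{\Delta R / R}{\Delta R} = \frac{1}{R}
```

So doubling the resources already spent on a problem halves its uncrowdedness factor, whatever the value of solving it; any argument about how valuable a solution would be belongs in the scale term instead.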

Now, you can get into the weeds here with exactly what kinds of work count for the purposes of determining crowdedness (presumably you need to downweight in inverse proportion to how well-directed the work is), but I think even under the strictest reasonable definitions the amount of work that has gone into attacking climate change is "a very great deal".

I can think of some other arguments you might make, around the shape and scale of the first two terms in Owen's equation, to argue that marginal work put into climate change is still valuable, but none of them depend on redefining neglectedness.

Thanks for clarifying! I understand the intuition behind calling this "neglectedness", but it pushes in the opposite direction of how EAs usually use the term. I might suggest choosing a different term for this, as it confused me (and, I think, others).

To clarify what I mean by "the opposite direction": the original motivation behind caring about "neglectedness" was that it's a heuristic for whether low hanging fruit in the field exists. If no one has looked into something, then it's more likely that there is low hanging fruit, so we should probably prefer domains that are less established. (All other things being equal.)

The fact that many people have looked into climate change but we still have not "flattened the emissions curve" indicates that there is not low hanging fruit remaining. So an argument that climate change is "neglected" in the sense you are using the term is actually an argument that it is not neglected in the usual sense of the term. Hence the confusion from me and others.

This post is incredibly detailed and informative. Thank you for writing it.

I work in a climate-adjacent field and I agree that climate change is an important area for some of the world's altruistically-minded people to focus on. Similar to you, I prefer to focus on problems that will clearly affect people in my lifetime or shortly afterwards.

However, even though I'm concerned about and even work on climate change, I donate to the Against Malaria Foundation. When I looked at the most recent IPCC report, one of the biggest health impacts listed was an increase in malaria. If we could reduce or eradicate malaria, we could also improve lives under climate change.

If at some point you're considering writing a follow up post, I'd be interested in a direct comparison of some climate donations or careers with some global health donations or careers. Given your ethical point of view, I don't think your posts are likely to change the minds of people who are focused on the long term future, but you might change my mind about what I should focus on.

As a side comment, I think it can make perfect sense to work on some area and donate to another one. The questions "what can I do with my money to have maximal impact" and "how do I use my skill set to have maximal impact" are very different, and I think it's totally fine if the answers land on different "cause areas" (whatever that means).

Thanks for your comments.

As I mention in my post, the issue with this is that you are fighting an uphill battle to tackle malaria while climate change continues to expand the territory of malaria and other tropical diseases.

I have previously written a post titled Review of Climate Cost-Effectiveness Analyses which reviews prior attempts to do this kind of comparison. As I mention in my post above, my conclusion was that it is close to impossible to make this kind of comparison due to the very limited evidence available. However, the best that I think can be said is that action on climate change is likely to be at least as effective as action on global health, and it is plausible that action on climate change is actually more effective.

It's definitely possible I'm misunderstanding what you're trying to do here. However, I think it is usually not the case that if you attempt to do an impartial assessment of a yes-no question, all the possible factors point in the same direction.

I mean, I don't know this for sure, but I imagine if you were to ask me to closely investigate a cause area that I haven't thought about much before (wild animal suffering, say, or consciousness research, or Alzheimer's mitigation), and I investigated 10 sub-questions, I don't think all 10 of them would point in the same direction. My intuition is that it's much more likely that I'd either find 1 or 2 overwhelming factors, or many weak arguments in favor or against, and some in the other direction.

I feel bad for picking on you here. I think it is likely the case that other EAs (myself included) have historically made this mistake, and I will endeavor to be more careful about this in the future.

That's a fair point. As per the Facebook event description, I was originally asked to discuss two posts:

I ended up proposing that I could write a new post, this post. The event was created with a title of "Is climate change neglected within EA?" and I originally intended to give this post the same title. However, I realized that I really wanted to argue a particular side of this question and so I posted this article under a more appropriate title.

You are correct to call out that I haven't actually offered a balanced argument. Climate change is not ignored by EA. As is clear in Appendix A, there have been quite a few posts about climate change in recent years. The purpose of this post was to draw out some particular trends about how I see climate change being discussed by EA.

I think I and others here would have reacted much better if this post felt more curious/exploratory and less arguments-are-soldiers.

That doesn't mean you can't come down on a side. You can definitely come down on a side. But a good post should either make it clear that it's only arguing for one side (and so is only trying to be part of a whole), or it should reflect the entirety of your position, not just the points on one side. And if the entirety of your position is only points on one side, then either your argumentation had better be iron-solid or a lot of people are going to suspect your impartiality.

Concerning x-risks, my personal point of disagreement with the community is that I feel more skeptical of the chances to optimize our influence on the long-term future "in the dark" than what seems to be the norm. By "in the dark", I mean in the absence of concrete short-term feedback loops. For instance, when I see the sort of things that MIRI is doing, my instinctive reaction is to want to roll my eyes (I'm not an AI specialist, but I work as a researcher in an academic field that is not too distant). The funny thing is that I can totally see myself from 10 years ago siding with "the optimists", but with time I came to appreciate more the difficulty of making anything really happen. Because of this I feel more sympathetic to causes in which you can measure incremental progress, such as (but not restricted to) climate change.

Climate change is often dismissed on the basis that a lot of money is already going into it. But it's not clear to me that this proves the point. For instance, it may well be that the large resources being deployed are poorly directed, in which case some effort to reallocate them could have a tremendously large effect. (E.g. supporting the Clean Air Task Force, as suggested by the Founders Pledge, may be of very high impact, especially in these times of heavy state intervention and upcoming elections in the US.) We should apply the "Importance-Neglectedness-Tractability" framework with caution. In the final analysis, what matters is the impact of our best possible action, which need not be small just because "there is already a lot of money going into this". (And, for the record, I would personally rate AI safety technical research as having very low tractability, but I think it's good that some people are working on it.)

Thanks for sharing! This does seem like an area many people are interested in, so I'm glad to have more discussion.

I would suggest considering the opposite argument regarding neglectedness. If I had to steelman this, I would say something like: a small number of people (perhaps even a single PhD student) do solid research about existential risks from climate change -> existential risks research becomes an accepted part of mainstream climate change work -> because "mainstream climate change work" has so many resources, that small initial bit of research has been leveraged into a much larger amount.

(Note: I'm not sure how reasonable this argument is – I personally don't find it that compelling. But it seems more compelling to me than arguing that climate change isn't neglected, or that we should ignore neglectedness concerns.)

Yeah, I think there are various arguments one could make within the S/T/N framework that don't depend on redefining neglectedness:

I have no idea how solid any of these are (critiques welcome), and I don't think I'd find any of them all that compelling in the climate change case without strong evidence. But these are a few ways I could imagine circumventing neglectedness concerns (other than the obvious "the scale is really really big tho", which doesn't seem to have done the job in the climate change case).

I think that more research is definitely warranted. EAs can bring a unique perspective to something like climate change, where there are so many different types of interventions which probably vary wildly in effectiveness. I don't think enough research has been done to rule out the possibility of there existing hugely effective climate change interventions that are actually neglected/underfunded, even if climate change as a whole is not. And since people who care about climate change are typically science-minded, there's a chance a significant chunk could be persuaded to fund the more effective interventions once we identify them.

What a fantastic post. Thank you! Your frustration resonates strongly with me. I think the dismissive attitude towards climate issues may well be an enormous waste of goodwill towards EA concepts.

How many young/wealthy people stumble upon 80k/GiveWell/etc. with heartfelt enthusiasm for solving climate, The Big Issue Of Our Time, only to be snubbed? How many of them could significantly improve their career/giving plans if they received earnest help with climate-related cause prioritization, instead of ivory-tower lecturing about weirdo x-risks?

Can't we save that stuff for later? "If you like working on climate, you might also be interested in ..."?

This isn't to say that EA's marginal-impact priorities are wrong; I myself work mainly on AI safety right now. But a career in nuclear energy is still more useful than one in recyclable plastic straw R&D (or perhaps it isn't?), and that's worth researching and talking about.

I've spent a good bit of time in the environmental movement and if anyone could use a heavy dose of rationality and numeracy, it's climate activists. I consider drawdown.org a massive accomplishment and step in the right direction. It's sometimes dismissed on this forum for being too narrow-minded, and that's probably fair, but then what's EA's answer, besides a few uncertain charity recommendations? Where's our GiveWell for climate?

Given that people's concern about climate change is only going up, I hope that this important conversation is here to stay. Thanks again for posting!

I am sympathetic to the PR angle (ditto for global poverty): lots of EAs, including me, got to longtermism via more conventional cause areas, and I'm nervous about pulling up that drawbridge. I'm not sure I'd be an EA today if I hadn't been able to get where I am in small steps.

The problem is that putting more emphasis on climate change requires people to spend a large fraction of their time on a cause area they believe is much less effective than something else they could be working on, and to be at least somewhat dishonest about why they're doing it. To me, that sounds both profoundly self-alienating and fairly questionable impact-wise.

My guess is that people should probably say what they believe, which for many EAs (including me) is that climate change work is both far less impactful and far less neglected than other priority cause areas, and that many people interested in having an impact can do far more good elsewhere.

I wonder how much of the assessment that climate change work is far less impactful than other work relies on the logic of "low probability, high impact", which seems to be the most compelling argument for x-risk. Personally, I generally agree with this line of reasoning, but it leads to conclusions so far from common sense and intuition that I am a bit worried something is wrong with it. It wouldn't be the first time people failed to recognize the limits of human rationality and were led astray. That error is no big deal as long as it does not have a high cost, but climate change, even if temperatures only rise by 1.5 degrees, is going to create a lot of suffering in this world.

In an 80,000 Hours podcast with Peter Singer, the question was raised whether EA should split into 2 movements: present welfare and longtermism. If we assume that concern with climate issues can grow the movement, that might be a good way to account for our longtermist bias while continuing the work on x-risk at current or even higher levels.

That sounds right to me. (And Will, your drawbridge metaphor is wonderful.)

My impression is that there already is some grumbling about EA being too elitist/out-of-touch/non-diverse/arrogant/navel-gazing/etc., and discussions in the community about what can be done to fix that perception. Add to that Toby Ord's realization (in his well-marketed book) that hey, perhaps climate change is a bigger x-risk (if indirectly) than he had previously thought, and I think we have fertile ground for posts like this one. EA's attitude has already shifted once (away from earning-to-give); perhaps the next shift is an embrace of issues that are already in the public consciousness, if only to attract more diversity into the broader community.

I've had smart and very morally-conscious friends laugh off the entirety of EA as "the paperclip people", and others refer to Peter Singer as "that animal guy". And I think that's really sad, because they could be very valuable members of the community if we had been more conscious to avoid such alienation. Many STEM-type EAs think of PR considerations as distractions from the real issues, but that might mean leaving huge amounts of low-hanging utility fruit unpicked.

Explicitly putting present-welfare and longtermism on equal footing seems like a good first step to me.

Rather than "many EAs", I would say "some EAs" believe that climate change work is both far less impactful and far less neglected than other priority cause areas.

I am not one of those people. I am currently in the process of shifting my career to work on climate change. Effective Altruism is a Big Tent.

"Some EAs" conveys very little information. The claim I'm making is stronger.

On the other hand, "many people [...] could do far more good elsewhere" is not the same as "all people". Probably some EA-minded people can have their greatest impact working on climate change. Perhaps you are one of those people.

There's a pretty important distinction between what your own best career path is and what the broader community should prioritise. I'm going to try to write more about this separately because it's important, but: if you think that working on climate change is the most impactful thing you can do, there are lots of good and bad reasons that could be, and short of a deep personal conversation or an explicit call for advice I'm not going to argue with you. I wish you all the best in your quest for impact.

But this post is a general call to change how the community as a whole regards and prioritises climate change work, and as such needs to be evaluated on a different level. I can disagree with these arguments without having an opinion on what the best thing for you to do is.

(Not that you said I couldn't do that. I just think it's important for that distinction to be explicitly there.)

EA has been a niche cause, and changing that seems harder than solving climate change. Increased popularity would be useful, but shouldn't become a goal in and of itself.

If EAs should focus on climate change, my guess is that it should be a niche area within climate change. Maybe altering the albedo of buildings?

I sometimes feel that the EA movement is starting to sound like heavy metal fans ("climate change is too mainstream"), or evangelists ("in the days after the great climate change (Armageddon), mankind will colonize the galaxy (the 2nd coming), so the important work is the one that prevents x-risk (saves people's souls)"). I say "amen" to that, and have supported AI safety financially in the past, but I remain skeptical that climate change can be ignored. What would you recommend as next steps for an EA member who wants to learn more and eventually act? What are the AMF or GD of climate change?

Nothing you've written here sounds like anything I've heard anyone say in the context of a serious EA discussion. Are there any examples you could link to of people complaining about causes being "too mainstream" or using religious language to discuss X-risk prevention?

The arguments you seem to be referring to with these points (that it's hard to make marginal impact in crowded areas, and that it's good to work toward futures where more people are alive and flourishing) rely on a lot of careful economic and moral reasoning about the real world, and I think this comment doesn't really acknowledge the work that goes into cause prioritization.

But if you see a lot of these weaker (hipster/religious) arguments outside of mainstream discussion (e.g. maybe lots of EA Facebook groups are full of posts like this), I'd be interested to see examples.

The Effective Environmentalism group maintains a document of recommended resources.