Summary: in what we think is a mostly reasonable model, the amount of impact a group has increases as the group gets larger, but so do the risks of reputational harm. Unless we believe that, as a group grows, the likelihood of scandals grows slowly (at most as quickly as a logarithmic function), this model implies that groups have an optimal size beyond which further growth is actively counterproductive — although this size is highly sensitive to uncertain parameters. Our best guesses for the model’s parameters suggest that it’s unlikely that EA has hit or passed this optimal size, so we reject this argument for limiting EA’s growth.[1] (And our prior, setting the model aside, is that growth for EA continues to be good.)

You can play with the model (insert parameters that you think are reasonable) here.

Epistemic status: reasonable-seeming but highly simplified model built by non-professionals. We expect that there are errors and missed considerations, and would be excited for comments pointing these out.

Overview of the model

- Any group engaged in social change is likely to face reputational issues from wrongdoing[2] by members, even if it avoids actively promoting harmful practices, simply because its members will commit wrongdoing at rates in the ballpark of the broader population.

- Wrongdoing becomes a scandal for the group if the wrongdoing becomes prominently known by people inside and outside the group, for instance if it’s covered in the news (this is more likely if the person committing the wrongdoing is prominent themselves).

- Let’s pretend that “scandals” are all alike (and that this is the primary way by which a group accrues reputational harm).

- Reputational harm from scandals diminishes the group’s overall effectiveness (via things like it being harder to raise money).

- Conclusion of the model: If the reputational harm accrued by the group grows more quickly than the benefits (impact not accounting for reputational harm), then at some point, growth of the group would be counterproductive. If that’s the case, the exact point past which growth is counterproductive would depend on things like how likely and how harmful scandals are, and how big coordination benefits are.

- To understand whether a point like this exists, we should compare the rates at which reputational harm and impact grow with the size of the group. Both might grow greater than linearly.

- Reputational harm accrued by the group in a given period of time might grow greater than linearly with the size of the group, because:

- The total reputational harm done by each scandal probably grows with the size of the group (because more people are harmed).

- The number of scandals per year probably grows[3] roughly linearly with the size of the group, because there are simply more people who each might do something wrong.

- These things add up to greater-than-linear growth in expected reputational damage per year as the number of people involved grows.

- The impact accomplished by the group (not accounting for reputational damage) might also grow greater than linearly with the size of the group (because more people are doing what the group thinks is impactful, and because something like network effects might help larger groups more).

- Implications for EA

- If costs grow more quickly than benefits, then at some point, EA should stop growing (or should shrink); additional people in the community will decrease EA’s positive impact.

- The answer to the question “when should EA stop growing?” is very sensitive to parameters in the model; you get pretty different answers based on plausible parameters (even if you buy the setup of the model).

- However, it seems hard to choose parameters that imply that EA has surpassed its optimal point, and much easier to choose parameters that imply that EA should grow more (at least from this narrow reputational harm perspective).

- Note that we’re not focusing on the question “how good is it for EA to grow” here, which would matter for things like the cost-effectiveness of outreach efforts.

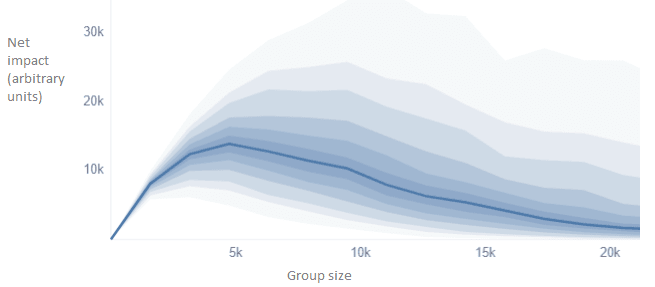

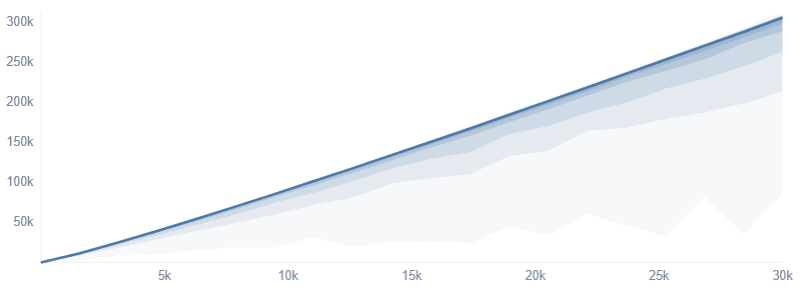

Here are two plots showing how the net impact (per year, with arbitrary units of impact) would change as a group grows — the plots are very different because the parameters are different and the model is very sensitive to that:

Technical model description

- Reputational harm assumption/setup:

- We start by assuming that each scandal (in the group) decreases the effectiveness of each person in the group by some percentage. I.e. due to a scandal in your group, the impact you would have had is multiplied by an effectiveness penalty K. If we expect X scandals in your group in a given timeframe (let’s say a year), you’re getting that penalty X times, so your impact is multiplied by a factor of K^X.

- If a group has N people (and no scandals), we can say that we’re overall getting N times the impact of the average person. (Where “impact” is “counterfactual impact caused by association with the group, not counting broad coordination benefits.” E.g. If a person would have been a doctor but decided to go into pandemic preparedness, the “impact” that we should track for this person is the difference between their positive impact in the latter and the former worlds.)

- We might also think that clustering these N people together has positive ~coordination effects for the whole group (like brand recognition making people more effective at achieving their goals, more sustained values, people supporting each other because they’re in the same group, etc. — more below). We think that this effect is probably real, but grows more slowly than linearly, so we’re semi-arbitrarily modeling it as log(N).

- We’re also assuming that coordination benefits aren’t separately harmed by scandals (outside of the fact that scandals harm individuals’ effectiveness).

- So the overall impact of a group of N people (per year) is the impact that group would have had if there were no scandals, multiplied by the effectiveness penalty factor K^X to account for reputational harm (N*impact-per-person*log(N)*K^X).

- Other simplifications/assumptions:

- We’re treating “scandal” as a binary: something either is a harmful scandal or isn’t. In reality, different scandals are bad to different extents. Someone could try to model this. Here, we’re talking about “expected number of scandals,” so we can try to account for the variation in real-world scandals when we’re setting parameters by saying that something less serious simply has a smaller chance of being a “scandal.”

- We’re assuming that everyone is equally “in the group.” This might matter if the variation in how much people are “part of the group” is somehow tied to other factors we care about (in which case it would not be ok to simply track the average).

- We list many other considerations below.

A more formal description of the model

- Let’s define some variables/functions:

- N — the variable that tracks the size of our group (this is a natural number)

- K — the effectiveness penalty factor due to reputational harm from 1 scandal (K should be a constant between 0 and 1)

- f(N) — the expected number of scandals, per year, in a group of size N

- gross_value(N) — the positive impact of a group of N people, ignoring reputational risk, per year

- net_value(N) — the overall impact of a group of size N, per year

- U — the unit of value that the average person in the group has per year, when unaffected by reputational harm.

- We model gross_value(N) as N * U * log N; each person has U impact independently, there are N people, and they also get some coordination benefits (see more on coordination benefits below) modeled as log N.

- Then the overall impact of the group is:

- net_value(N) = gross_value(N) * K^f(N) = (N * U * log N) * K^f(N)
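The definitions above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' Squiggle code: it assumes natural logarithms (the post does not fix a base), and the values of `K`, the scandal rate, and the linear form of `linear_scandals` are hypothetical placeholders.

```python
import math

def gross_value(n, u=1.0):
    """Impact per year ignoring reputational harm: N * U * log(N)."""
    return n * u * math.log(n)  # natural log is an assumption

def net_value(n, k, f, u=1.0):
    """gross_value(N) scaled by the effectiveness penalty K ** f(N)."""
    return gross_value(n, u) * k ** f(n)

def linear_scandals(n, rate=1e-4):
    """Hypothetical f(N): expected scandals per year grow linearly in N."""
    return rate * n

K = 0.95  # hypothetical: each scandal leaves 95% of effectiveness

for n in (1_000, 10_000, 100_000):
    print(n, round(net_value(n, K, linear_scandals), 1))
```

Passing `f` as a function keeps the scandal-frequency assumption swappable, which matters because the conclusions hinge on how f(N) grows.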

Getting to the implications of the model

(Note: this section uses asymptotic (Big O) notation.)

- Is there ever a point at which marginal people joining the group is harmful?

- To understand whether net_value(N) ever starts going down as N goes up — i.e. whether there’s a point at which growth of the group is harmful — we need to understand whether the gross value grows faster than the reputational effectiveness penalty gets small, i.e. we need to understand which of these grows faster:

- N * U * log(N) (gross value), or

- 1/(K^f(N)) (1/reputational penalty)

- The surprising result is that whether or not additional people in the group will ever be net-negative entirely depends on whether or not f(N) ∈ O(log N) (i.e. whether, as the group grows, the frequency of scandals grows at most logarithmically).

- A sketch explanation/proof:

- Case 1: If f(N) ∈ O(log N) (i.e. f(N) grows at most as quickly as log N), then growth is always good

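- Because 1/(K ^ (c log N)) = N ^ (c log(1/K)) is polynomial in N, and is in O(N) ⊆ O(N log N) whenever c log(1/K) ≤ 1. (For larger constants c the penalty can outpace N log N, so this sketch relies on the constant being small.)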

- Case 2: If f(N) ∈ ω(log N) (i.e. f(N) grows strictly more quickly than log N), then there will be some maximum beyond which growth is harmful

- Because N log N is dominated by 1/(K ^ g(N)) where g ∈ ω(log N)

- Is it plausible that the frequency of scandals grows at most as quickly as log? I.e. is the case where growth becomes net-negative at some point (Case 2) much more plausible than the other (Case 1)?

- We think Case 1 is actually pretty plausible, but we’re not sure which is in fact more likely. A justification for f (frequency of scandals based on group size) being sublinear can be found here.

- If there is a point past which marginal people are net negative, the exact point is extremely sensitive to model parameters.

- I.e. a wide variety of answers for “when would growth be counterproductive?” is plausible.

- Squiggle code implementing this can be found here.
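The two cases can be compared numerically. The sketch below is not the linked Squiggle model: it assumes natural logs, U = 1, K = 0.95, and two hypothetical scandal-frequency functions (`log_scandals` and `linear_scandals`, with made-up constants). It works with the logarithm of net value so that very small penalties do not underflow.

```python
import math

def log_net_value(n, k, f):
    """log of N * log(N) * K ** f(N), with U = 1 (requires n > 1)."""
    return math.log(n) + math.log(math.log(n)) + f(n) * math.log(k)

def peak_size(k, f, sizes):
    """Group size in `sizes` with the highest net value."""
    return max(sizes, key=lambda n: log_net_value(n, k, f))

def log_scandals(n):
    """Hypothetical Case 1: f(N) grows like log N."""
    return 2.0 * math.log(n)

def linear_scandals(n):
    """Hypothetical Case 2: f(N) grows linearly in N."""
    return 1e-4 * n

K = 0.95
SIZES = [2 ** i for i in range(1, 31)]  # 2 up to about one billion

print("Case 1 peak:", peak_size(K, log_scandals, SIZES))
print("Case 2 peak:", peak_size(K, linear_scandals, SIZES))
```

With these placeholder constants, the logarithmic case keeps improving all the way to the largest size on the grid, while the linear case peaks at an interior size. Changing the constants moves the peak considerably, which is the sensitivity the bullets above describe.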

Discussion of parameter choices and the model setup

How does the frequency of scandals (in expectation) grow with the size of the group? (f(N))

Remember that we defined “scandal” as “wrongdoing that becomes prominent.” Given this, our best guess here is that frequency grows sublinearly with the size of the group.

- A naïve first-order approximation is that frequency should be linear in the size of the group because each new person is similarly likely to commit wrongdoing and cause a scandal.

- However, wrongdoing might only become a scandal that affects the reputation of the group if it involves a prominent[4] member of the group. And it seems likely that the size of the “prominent people” group in a broader community grows more slowly than the size of the community overall (i.e. you’re less likely to be a prominent member of a group if the group is huge than you are if the group is small).

- E.g. sexual misconduct seems to be more of a scandal for a university if done by a famous professor than by a random staff member.

- So there should be fewer “scandals” per person in larger groups, meaning that the frequency of scandals should grow sublinearly with the size of the group.
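As a rough illustration of the argument above, suppose only prominent members generate scandals and the number of prominent members grows like N ** alpha with alpha < 1. Both the functional form and the numbers (`rate_per_prominent`, `alpha`) are hypothetical and not taken from the post.

```python
def expected_scandals(n, rate_per_prominent=0.01, alpha=0.5):
    """Hypothetical f(N): scandals come only from ~N**alpha prominent members."""
    prominent_members = n ** alpha
    return rate_per_prominent * prominent_members

for n in (100, 10_000, 1_000_000):
    total = expected_scandals(n)
    print(n, total, total / n)  # total rises, per-person rate falls
```

Note that N ** 0.5 still grows faster than log N, so a sublinear f of this particular shape would still fall under Case 2 of the sketch proof.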

Note also that we can try to account for the variation in the importance of real-world scandals when we’re setting parameters by saying that something less significant simply has a smaller chance of causing a scandal. In other words, if you think that someone will definitely cause 3 scandals in the following year, but they’re all very small, you can model this here by saying that this is actually 1 scandal in the way we’re defining scandal here. (Whereas something unusually significant might be equivalent to two scandals.)

How should we define and model “reputational harm”?

The harms we would expect to accrue from scandals are things like:

- It’s harder for people in the group to do things the group considers high-impact:

- It’s harder to raise money for impactful projects

- It’s harder to attract employees and collaborators

- It’s harder to convince people to take action on your ideas

- People in the group are generally stressed and demoralized

- Some people outside of the group might no longer want to do things the group considers high-impact:

- They’re less likely to join the group in the future

- They don’t want to do things the group endorses because the group endorses them

Our guess is that reputational harm is best modeled as a percentage decrease in impact. This fits the first point above better than it fits the second, but even for 2 (a), harms might accrue in a similar pattern: the first scandal drives off the least interested x% of people, then the second scandal drives off the x% least interested of the remainder, etc. (See evaporative cooling.)
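The compounding pattern described here (each scandal removes the same percentage of what remains) is a one-line calculation. The 10% figure below is purely illustrative.

```python
def remaining_effectiveness(penalty_per_scandal, scandals):
    """Fraction of impact left after `scandals` scandals, each removing
    `penalty_per_scandal` of whatever effectiveness remains."""
    k = 1.0 - penalty_per_scandal
    return k ** scandals

for s in range(5):
    print(s, round(remaining_effectiveness(0.10, s), 4))
```

Because the penalty multiplies, the remaining effectiveness shrinks toward zero but never becomes negative, which is one reason the multiplier model cannot represent scandals that make someone's work net harmful.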

There are some costs which arguably do not fit this model. For example, the negative perception of early cryonicists may have deterred cryobiologists (who weren’t cryonicists) from doing cryonics-related research that they otherwise would have done independently. It seems plausible that from the point of view of the group, this is better modeled as an additive cost — flat negative impact — due to the reputational issues as opposed to a multiplier penalty on the positive impact of the members of the group. (Additionally, the “effectiveness penalty multiplier” model doesn’t allow for scandals to cause someone’s work to become negatively impactful, which doesn’t seem universally true.)

Another complication might be something like splintering; it’s possible that you can’t model group size as independent of scandal rate and reputational harm, because when scandals have certain effects, the group splinters into smaller groups or simply loses members.

Still, we think the percentage decrease model is the best that we have come up with.

How much does a scandal affect each person, for a group of a given size? (K)

We want to understand: given a fixed scandal, is it more harmful per person if the group the scandal is attached to is bigger? Our best guess is that per person in the group, harm per scandal decreases with the size of the group, but we’re modeling K as a constant for simplicity.

It seems like there are some counterbalancing factors:

- Worse for bigger groups:

- Bigger groups have more people affiliated with the scandal, and therefore more people who can be harmed

- Bigger groups are more likely to be known and might be considered more newsworthy

- A random individual committing a crime is usually not worthy of any reporting, but if it is attached to some well-known group, that is more worthy of journalism

- Better for bigger groups

- The effects are also more diffuse in bigger groups

- It seems less reasonable to blame each member of a larger group as much as you would for smaller groups

- Bigger groups are more likely to have an existing reputation in people’s minds, which means that individual scandals are less likely to affect their overall view

- Many people know at least one Harry Potter fan. If a Harry Potter fan causes a scandal, that’s not that likely to affect your view of all Harry Potter fans, in part because you have a stronger prior about the group. But if a fan of some fairly niche book series causes a scandal, you might have a weaker prior and update more strongly.

- Some examples to inform intuition:

- Public perception of academia overall probably doesn't change much when a Princeton prof is accused of harassment or the like. But the perception of Princeton might change more, and if an even smaller group is well enough known (and that prof is in that group), then maybe the other people in the group are even more affected.

- But even very large groups (major religions, political parties) still seem to suffer some amount of reputational harm after scandals.

- Our best guess (fairly weak) is that, per person in the group, harm per scandal decreases with the size of the group; this would mean that we should model the per-scandal penalty (1 − K) as something like 1/log(N) instead of modeling K as a constant. But we’re going for a constant here for simplicity and because we’re quite unsure.

- Note also that, because the number of people affected as the group grows goes up linearly, overall, this means that the total reputational harm per scandal should grow with the size of the group, but sublinearly.
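One hedged way to read the last two bullets is to let the per-person harm from a scandal, 1 − K, shrink like c / log(N). The constant `c` and the exact functional form below are assumptions chosen for illustration; the post itself keeps K constant.

```python
import math

def penalty_per_scandal(n, c=0.2):
    """Hypothetical per-person harm 1 - K(N), shrinking like c / log(N)."""
    return min(1.0, c / math.log(n))

def total_harm_per_scandal(n, c=0.2):
    """Per-person harm summed over all N members."""
    return n * penalty_per_scandal(n, c)

for n in (100, 10_000, 1_000_000):
    print(n, round(penalty_per_scandal(n), 4), round(total_harm_per_scandal(n), 1))
```

Under this form the per-person penalty falls as the group grows, while total harm per scandal grows like N / log(N): still increasing, but more slowly than linearly.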

How do benefits, ignoring reputational harm, grow with the size of the group?

Our best guess is that benefits grow slightly superlinearly because of coordination benefits (but you can easily remove coordination benefits from the model).

- A naïve first-order approximation is that benefits (not accounting for reputational issues) are linear in the size of the group.

- If everyone in EA donated a constant amount of money, then getting more people into EA would linearly increase the amount of money being donated (which, for simplicity, we can say is a linear increase in impact)

- At least in some cases though, it seems like benefits are superlinear.

- Standard models of networks state that the value of groups tends to grow quadratically or exponentially

- When Ben asks people why they write for the EA Forum they often say something like “because everyone reads the Forum”; N people each writing because N people will read each thing — that’s quadratic value

- Brand recognition can help get things done, and larger groups have more brand recognition

- Other coordination benefits (e.g. a member of the group can identify and get access to people who’d be useful to coordinate with)

- On the other hand, there are also (non-reputation-related) costs of larger groups, like coordination costs

- Our tentative guess is that the benefits of groups like EA tend to grow slightly superlinearly

Parameter sensitivity

My (Ben) subjective experience of playing around with this model is that for reasonable parameter values, it seems pretty clear that groups of more than 500 people are better than smaller groups, but it's harder to get outputs that show that larger groups (of any reasonable size) are noticeably worse than smaller ones. I have to intentionally choose weird parameters to get a graph like the one above, where there is a clear peak and larger groups are worse – unless I intentionally do this, it usually seems like growth is neutral or good (although confidence intervals are often very wide). (Lizka agrees with this.)

When I try to think of scandals that plausibly decreased the effectiveness of people in EA by >5% the list feels pretty short: FTX is probably in there, but even disturbing news or incidents like the TIME article on sexual harassment seem unlikely to have caused one in 20 people to leave EA (or otherwise decreased effectiveness by >5%). And we have 10-20k person-years to have caused scandals (suggesting that the base rate of scandals per person per year is 1/20000 to 1/10000); plugging in those numbers here indicates that EA should grow vastly beyond its current size.
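The arithmetic in this paragraph can be roughly reproduced. The sketch assumes about one qualifying scandal across the 10-20k person-years, a 5% penalty per scandal (K = 0.95), a linear f(N) = rate * N, natural logs, and U = 1. These are illustrative readings of the text, not the linked parameter set.

```python
import math

def log_net_value(n, k, rate):
    """log of N * log(N) * K ** (rate * N), with U = 1."""
    return math.log(n) + math.log(math.log(n)) + rate * n * math.log(k)

def peak_size(k, rate, sizes):
    """Group size in `sizes` that maximises net value."""
    return max(sizes, key=lambda n: log_net_value(n, k, rate))

K = 0.95                                 # assumed 5% penalty per scandal
SIZES = range(1_000, 2_000_001, 1_000)   # candidate group sizes

for person_years in (10_000, 20_000):
    rate = 1 / person_years              # scandals per person per year
    print(person_years, peak_size(K, rate, SIZES))
```

Under these assumptions the net-value peak lands well above 100,000 members, and halving the base rate pushes it higher still.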

More importantly: when I try to argue backwards from the claim that EA is already too big, I have to put in numbers that seem absurd, like here.

So my guess is that if growth is bad, it's because this model is flawed (which, to be clear, is pretty likely, although the flaws might not necessarily point in the direction of making it more likely that growth is bad).

Other considerations about the parameters & model setup

- What happens if impact per person has long tails in a way that is predictably related to parameters in the model?

- I could imagine alternative models which have different results, e.g. it could be that the most impactful members are disproportionately benefited by larger groups (e.g. the best researchers disproportionately benefit from having more people to read their research)

- It’s not clear to me how this would shake out

- See also the next bullet point

- What if how much reputational harm affects someone is not independent of their impactfulness?

- Maybe the extremely committed members don’t care so much about reputational harm because they are diehards, and they are also the more impactful ones, so this model could overstate total damage

- Alternatively, we speculated above that reputation harms might disproportionately accrue to prominent members of the group, and it seems plausible that prominent members of the group are also disproportionately impactful (or are connected to disproportionately impactful people, who are affected more), meaning that this model understates total damage

- Maybe how much each new scandal affects the group’s effectiveness depends on the number of scandals that have hit the group in the past (in a way that’s hard to capture via f(N))

- E.g. maybe after 3 scandals that each harmed effectiveness by 10% (maybe via driving off 10% of the least interested people after each scandal), the group is in a new type of vulnerability, and a 4th scandal would cause significantly more damage. Meaning that we can’t keep K constant.

- See also Social behavior curves, equilibria, and radicalism

- How does this model work for multiple groups, especially when they’re overlapping?

- “Scandal” is poorly defined: scandals have a complicated relationship with brand recognition, and we’re modeling “scandal” in an extremely simplified way

- Complicated relationship with brand recognition

- At the extreme: “all press is positive press”

- This extreme seems unlikely to be true, but there are likely complex ways in which different types of scandals have different types of impact

- E.g. possibly radical tactics increase support for more moderate groups

- Not all scandals are equal

- For instance, if a scandal is related to the group’s focus (e.g. Walmart employee is involved in a scandal for doing Walmart-related things, like embezzling — vs. something in their personal life) or involves prominent members of the group, it probably has a bigger impact.

- We can try to track this by defining (expected) “scandal” in a way that forces them to have a similar impact, but this affects how we should estimate the base rates of scandals at different group sizes, and might be hard to track.

- Many other properties of groups can be at least somewhat related to group size and affect how much reputational harm a group accrues

- Some are listed below for EA.

- Does this argument prove too much? The existence of large groups seems like compelling evidence that the reputational costs of large groups can’t be that high

- But maybe there are reasons to think that existing large institutions have special circumstances that EA lacks. In particular: some institutions started before the Internet era and are seen as “part of the furniture” (e.g. major religions, political parties) and others aren’t actually trying to do anything terribly controversial (e.g. sports teams). It’s hard to think of a large group that’s trying to change the world which was started in the last 20 years that doesn’t have substantial reputational hits from the action of a minority of members.

- This model assumes that the rate of scandals is impossible to change, but that of course is not true. Projects like CEA’s Community Health and Special Projects team, the EA reform project, EA Good Governance Project, and others may reduce the incidence of issues.

- This post is intended to address the narrow question of how reputational harm scales. There are a large number of other reasons why growth might be good/bad (e.g. difficulty maintaining norms), and those are not addressed here.

EA-specific factors that this model ignores

| Factor | Possible implications |
| --- | --- |
| Probably attracts people who are more conscientious and nice than average | Decreases per-member frequency of scandals |
| The desire to make things work (rather than just compete) encourages most participants to try to get along and resolve conflicts amicably | Decreases per-member frequency of scandals |
| Some / many of the things we do are broadly regarded as good (e.g. GiveWell) | Decreases per-member frequency of scandals |
| The group isn’t super defined / is pretty decentralized; it’s not one massive organization. So e.g. someone donating to GWWC or effective charities can continue to do that as much as they could before (except maybe they’re demotivated) if someone prominent in a big animal advocacy organization is involved in a scandal | Decreases cost of scandal |
| Could be seen as a nonprofit | Unclear; nonprofits are sometimes held to higher standards (e.g. around compensation) but also have some default assumption of goodwill |
| Members tend to be from privileged demographics | Unclear; makes EA more “punchable” but also members have larger safety nets and more resources to push back |
| Is identified as powerful and allied with powerful groups (by some) | Unclear; makes EA more “punchable” but also members have larger safety nets and more resources to push back |
| A large set of different organizations with different practices any of which might be objectionable to someone | Increases per-member frequency of scandals, decreases cost of scandal |
| A large set of different geographic subcultures with different practices | Increases per-member frequency of scandals, decreases cost of scandals |
| Tells people to take big actions which can frequently go badly and are expected to backfire at some decent rate | Increases per-member frequency of scandals |
| A high level of overlap between people's professional and friend networks means that almost all aspects of someone's life can be regarded as relevant for criticism, rather than just what they do in the course of their work | Increases per-member frequency of scandals |
| The desire to make things go well gives people a reason to stick around even if dissatisfied, and feel a moral responsibility to fight other people if they think what they're doing is harmful | Increases per-member frequency of scandals |
| Nobody has the authority to impose universal rules | Increases per-member frequency of scandals; possibly decreases cost of scandals (because scandals are legitimately not caused by the group) |
| Nobody can control who identifies themselves with EA, at least for the purposes of a critical journalist | Increases per-member frequency and cost of scandals |
| A large fraction of our communication, especially by new and less professional folks, is public and able to be used against us indefinitely (c.f. corporations or government agencies) | Possibly increases per-member frequency of scandals |
| Is engaged in activity contrary to the views of some existing political alliances and interests, so has accumulated active and motivated haters (and also some motivated by the FTX association) | Increases per-member frequency of scandals |
| Disproportionately attracts young people who tend to be harder to screen, and behave more erratically, and develop new mental health problems at higher rates | Increases per-member frequency of scandals |
| Could be seen as a political movement trying to influence society, which makes it seem particularly fair game to attacks | Increases per-member frequency of scandals |

Related work and work we’d be excited to see

- Some related work:

- More work we would be excited to see (not exhaustive):

- A compilation of reasons for and against growth

- More case studies/accounting of reputational harms that EA has accrued so far. Emma and Ben tried to do one survey here, and those results don’t make Ben think that the public has a terribly negative opinion of EA, but there is a lot more work that could be done.

- Empirical analyses that can inform us about what good parameters would be for this model (e.g. base rates, analyses into other groups’ sizes and how they’re doing, etc.)

- Example from Ben: one research project I would be interested in is collecting examples of scandals that were so bad that they destroyed a community. E.g. elevatorgate is possibly one, but my subjective experience is that it's surprisingly hard to find examples (which might mean that I'm overestimating how much of a risk these scandals are).

- Improvements on the model, including alternative models

- Commentary from Ben: I wish I had a better model of how reputational costs work. I’ve previously been involved in animal rights activism, and that community has a (sometimes deserved) terrible public reputation, but animal advocates seem to be making a lot of progress despite that. I struggle to think of any group that has successfully achieved change without having a substantially negative reputation in parts of society: feminists, environmentalists, neoliberals, etc. all have substantially achieved their aims while having a ton of public criticism.

Conclusion

As with many such models, you can choose parameters to get basically any possible outcome. But the settings that seem most plausible to us result in growth being good.

One of the few takeaways from this exercise that can be said with confidence is that bigger groups are likely to have more scandals, so if EA grows, that’s something we should prepare for and mitigate against.

Contributions

The original idea for a related model was developed by a person who wishes to remain anonymous. Ben and Lizka made this more nuanced and wrote this post as well as the squiggle code. The resulting model is rough and doesn’t have fully conclusive results, but we thought it was worth sharing.

[1] Though reputation is not the only relevant consideration for thinking about whether it would be better for EA to be small.

[2] Or stigmatized or unpopular behavior.

[3] We are not actually sure about this. See the linked section.

[4] Note that “prominence” here is complicated. Arguably, the thing that matters is the prominence of someone as a member of the group. For instance, if a really famous actor happens to shop at Walmart and is involved in a widely covered scandal, it probably won’t affect Walmart’s reputation. However, if the person was also a spokesperson for Walmart, it probably would, at least a bit.

Moreover, prominence in the group might make someone’s wrongdoing newsworthy (and via news coverage, a scandal) even if they weren’t prominent outside of the group before that happened. (Imagine a relatively unknown spokesperson for Walmart committing wrongdoing.) I’m not sure how much this actually happens.

It probably also matters whether the wrongdoing in question was somehow related to the group; e.g. the group already has a reputation for something related, or the wrongdoing highlights hypocrisy from the group’s perspective, etc.

I think both exponential and quadratic are too fast, although it's still plausibly superlinear. You used N * log(N), which seems more reasonable.

Exponential seems pretty crazy (btw, that link is broken; looks like you double-pasted it). Surely we don't have the number of (impactful) subgroups growing this quickly.

Quadratic also seems unlikely. The number of people or things a person can and is willing to interact with (much) is capped, and the average EA will try to prioritize somewhat. So, when at their limit and unwilling to increase their limit, the marginal value is what they got out of the marginal stuff minus the value of their additional attention on what they would have attended to otherwise.

As an example, consider the case of hiring. Suppose you're looking to fill exactly one position. Unless the marginal applicant is better than the average in expectation, you should expect decreasing marginal returns to increasing your applicant pool size. If you're looking to hire someone with some set of qualities (passing some thresholds, say), with the extra applicant as likely to have them as the average applicant, with independent probability p and N applicants, then the probability of finding someone with those qualities is 1 − (1 − p)^N, which is bounded above by 1 and so grows even more slowly than log(N) for large enough N. Of course, the quality of your hire could also increase with a larger pool, so you could instead model this with the expected value of the maximum of iid random variables. The expected value of the max of bounded random variables will also be bounded above by the max of each. The expected value of the max of N iid uniform random variables over [0,1] is N/(N+1) (source), so pretty close to constant. For the normal distribution, it's roughly proportional to √(log(N)) (source).

It should be similar for connections and posts, if you're limiting the number of people/posts you substantially interact with and don't increase that limit with the size of the community.

Furthermore, I expect the marginal post to be worse than the average, because people prioritize what they write. Also, I think some EA Forum users have had the impression that the quality of the posts and discussion has decreased as the number of active EA Forum members has increased. This could mean the value of the EA Forum for the average user decreases with the size of the community.

Similarly, extra community members from marginal outreach work could be decreasingly dedicated to EA work (potentially causing value drift and making things worse for the average EA, and at the extreme, grifters and bad actors) or generally lower priority targets for outreach on the basis of their expected contributions or the costs to bring them in.

Brand recognition or reputation could be a reason to expect the extra applicants to EA jobs to be better than the average ones, though.

Is growing the EA community a good way to increase useful brand recognition? The EA brand seems less important than the brands of specific organizations if you're trying to do things like influence policy or attract talent.

Thanks Michael! This is a great comment. (And I fixed the link, thanks for noting that.)

My anecdotal experience with hiring is that you are right asymptotically, but not practically. E.g. if you want to hire for some skill that only one in 10,000 people have, you get approximately linear returns to growth for the size of community that EA is considering:

And you can get to very low probabilities easily: most jobs are looking for candidates with a combination of: a somewhat rare skill, willingness to work in an unusual cause area, willingness to work in a specific geographic location, etc. and multiplying these all together gets small quickly.
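The two points above can be checked numerically. The sketch below multiplies a few requirement fractions into one small probability, then compares the exact chance of finding at least one qualifying candidate, 1−(1−p)^N, with the linear approximation N·p. The specific fractions and community sizes are hypothetical, chosen only to illustrate the shape of the curve.

```python
import math


def combined_probability(fractions):
    """Probability that a candidate meets every requirement, assuming the
    requirements are independent (an assumption made for illustration)."""
    return math.prod(fractions)


def prob_at_least_one(p: float, n: int) -> float:
    """Probability that at least one of n independent candidates qualifies."""
    return 1.0 - (1.0 - p) ** n


def linearity_ratio(p: float, n: int) -> float:
    """Exact probability divided by the linear approximation n * p.
    Values near 1 mean returns to growth are still roughly linear."""
    return prob_at_least_one(p, n) / (n * p)


if __name__ == "__main__":
    # Hypothetical fractions: rare skill, cause-area fit, location fit.
    p = combined_probability([0.01, 0.1, 0.1])  # one in 10,000
    for n in (100, 1_000, 10_000, 100_000):
        print(n, round(prob_at_least_one(p, n), 4), round(linearity_ratio(p, n), 3))
```

The ratio stays close to 1 while N·p is well below 1 and falls away as N·p approaches and exceeds 1, so where the "approximately linear" regime ends depends on how small p really is.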

It does feel intuitively right that there are diminishing returns to scale here though.

I would guess that for the biggest EA causes (other than EA meta/community), you can often hire people who aren't part of the EA community. For animal welfare, there's a much larger animal advocacy movement and far more veg*ns, although probably harder to find people to work on invertebrate welfare and maybe few economists. For technical AI safety, there are many ML, CS (and math) PhDs, although the most promising ones may not be cheap. Global health and biorisk are not unusual causes at all. Invertebrate welfare is pretty unusual, though.

However, for more senior/management roles, you'd want some value alignment to ensure they prioritize well and avoid causing harm (e.g. significantly advancing AI capabilities).

Excited to see this!

Really sorry, but recently we released Squiggle 0.8, which added a few features, but took away some things (that we thought were kind of footguns) that you used. So the model is now broken, but can easily be fixed.

I fixed it here, with some changes to the plots.

2. Instead of Plot.fn(), it's now Plot.distFn() or Plot.numericFn(), depending on whether it's returning a number or a distribution. Note that these are now more powerful: you can adjust the axes scales, including adding custom symlog scales. I added a symlog yScale.

Also, in the newer version, you can specify function ranges. Like,

You have the code,

This is invalid where n=0, so I changed the range to start slightly above that. (You can also imagine other ways of doing that)

In the future, we really only plan to have breaking changes in main version numbers, and we'll watch them on Squiggle Hub. I didn't see that there were recent active Squiggle models. Sorry for the confusion, again!

Thanks for this! I think I've replaced the relevant links. (And no need to apologize.)

Also, do feel free to post the model on Squiggle Hub. I haven't formally announced it here yet, but people are welcome to begin using it.

Thanks for writing this! This is a cool model.

Is linear a good approximation here? Conventional wisdom suggests decreasing marginal returns to additional funding and people, because we'll try to prioritize the best opportunities.

I can see this being tricky, though. Of course doubling the community size all at once would hit capacities for hiring, management, similarly good projects, and room for more funding generally, but EA community growth isn't usually abrupt like this (FTX funding aside).

In the animal space, I could imagine that doing a lot of corporate chicken (hen and broiler) welfare work first is/was important for potentially much bigger wins like:

But I also imagine that marginal corporate campaigns are less cost-effective when considering only the effects on the targeted companies and the animals they use, because the targets are prioritized and the resources spent on a given campaign will have decreasing marginal returns in expectation.

GiveWell charities tend to have a lot of room for funding at given cost-effectiveness bars, so linear is probably close enough, unless it's easy to get more billionaires.

For research, the most promising projects will tend to be prioritized first, too, but with more funding and a more established reputation, you can attract people who are better fits, and can do those projects better, do projects you couldn't do without them, or identify better projects, and possibly managers who can handle more reports.

Maybe there's some good writing on this topic elsewhere?

My impression is that, while corporate spinoffs are common, mergers are also common, and it seems fairly normal for investors to believe that corporations substantially larger than EA are more valuable as a single entity than as independent pieces, giving some evidence for superlinear returns.

But my guess is that this is extremely contingent on specific facts about how the corporation is structured, and it's unclear to me whether EA has this kind of structure. I too would be interested in research on when you can expect increasing versus decreasing marginal returns.

Great read, and interesting analysis. I like encountering models for complex systems (like community dynamics)!

One factor I don't think was discussed (maybe the gesture at the possible inadequacy of f(N) encompasses this) is the duration of scandal effects. E.g. imagine that some group claiming to be the Spanish Inquisition, the Mongol Horde, or the Illuminati tried to get stuff done. I think (assuming they were taken seriously) they'd encounter lingering reputational damage more than one year after the original scandals! I'm not sure how this models out, and I'm not planning to dive into it, but it stands out to me as the 'next marginal fidelity gain' for a model like this.

Thanks Oliver! It seems basically right to me that this is a limitation of the model, in particular f(N), like you say.

Neat!