Many thanks to Rani and Lin who contributed materially to the writing of this post, as well as to several others who reviewed this post.

The 0.7% campaign on foreign aid set out to contribute to campaigning efforts to force the UK government to reverse its decision to cut annual aid spending from 0.7% of GNI to 0.5% of GNI. The UK government ended up sticking with the aid cut, so campaigning efforts as a whole did not achieve this primary aim.

However, as this post will outline, the government’s win on this point was much more hard-fought than many expected, suggesting that campaign efforts may have had some impact.

Somewhat to our surprise, our model finds that donors to our campaign may have achieved a level of impact roughly comparable to the SoGive Gold Standard / a donation to a GiveWell-recommended charity.

There are also reasons to believe that the innovations we developed may be particularly valuable for effective campaigning. This post does not put all the details of these innovations into the public domain. Because this is a self-assessment, there is a risk of bias.

We do not believe that having built a cost-effectiveness model is proof of robustness; indeed, we believe it would be legitimate for someone who carefully reviewed the assumptions to come to different conclusions (either more or less optimistic about the campaign’s impact). Despite this, we do believe such models can be useful.

The group which ran the 0.7% campaign has now merged into the Pandemic Prevention Network (formerly No More Pandemics, described in this EA Forum post), which will allow the group to leverage the experience outlined here and apply it to longtermist causes. This merger allows some of the organisational infrastructure and learning from the 0.7% campaign to survive rather than be lost; however, the Pandemic Prevention Network is operationally and strategically separate from this campaign.

For those who are interested in this work, there are opportunities to support the work of the Pandemic Prevention Network, including volunteering opportunities outlined at the end of this post.

1. Executive summary

1.1 About the campaign

In November 2020, the UK government stated in its spending review that it would go back on its earlier promise to provide 0.7% of GNI as foreign aid and it would reduce this to 0.5%.

A group of people, mostly from the EA community, came together to oppose this move, largely prompted by this post on the EA Forum.

The campaign enabled people to write emails to their MP; participants were recruited both organically and through Facebook ads.

1.2 What the model found

After the campaign was over, we performed a cost-effectiveness analysis of our work.

A naive reading of the model suggests that our campaign achieved an impact circa 20x greater than a donation to a SoGive Gold Standard / GiveWell recommended charity. Applying an adjustment to account for uncertainties in evidence still gives a 3x greater impact than the SoGive Gold Standard.
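As a rough illustration of how the two headline figures relate, the sketch below treats the evidence-quality adjustment as a single multiplier on the naive estimate. That is a simplifying assumption (the actual model may combine several factors), and the function names are hypothetical; the only inputs taken from this post are the ~20x and ~3x figures. Since both are rounded, the implied factor of about 15% is approximate.

```python
def implied_adjustment(naive_multiple: float, adjusted_multiple: float) -> float:
    """Return the single multiplicative factor that maps the naive estimate
    onto the adjusted one (assumes adjusted = naive * factor)."""
    return adjusted_multiple / naive_multiple


def apply_adjustment(naive_multiple: float, factor: float) -> float:
    """Scale a naive cost-effectiveness multiple by an evidence-quality factor."""
    return naive_multiple * factor


# Headline figures from this post, as multiples of the SoGive Gold Standard.
NAIVE = 20.0
ADJUSTED = 3.0

factor = implied_adjustment(NAIVE, ADJUSTED)
print(f"Implied evidence adjustment: {factor:.0%}")        # Implied evidence adjustment: 15%
print(f"Check: {apply_adjustment(NAIVE, factor):.1f}x")    # Check: 3.0x
```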

There are plenty of assumptions going into this model, and interested readers are warmly invited to take a copy of the model and tweak the assumptions. Several of the assumptions are discussed in the rest of this document. The main factors behind the favourable result are:

- Influencing policy can have large impacts

- The issue inspired lots of people to take action who otherwise wouldn’t have, essentially providing effort “for free”

- The model gives some credit to the effectiveness of the innovation underlying the work, supported by peer feedback

1.3 Some key assumptions

To foreground what may be the weakest areas of the model, here are some key assumptions:

- Our model includes adjustments for the quality of the evidence, i.e. to reflect internal validity / replicability and external validity / generalisability.

  - To give a flavour of how this might be calibrated, both SoGive and GiveWell apply a 95% replicability adjustment to the Against Malaria Foundation, reflecting the fact that malaria nets are very well evidenced.

  - For deworming interventions, GiveWell applies a factor of 13% for replicability and 9% for external validity or generalisability. SoGive’s review on this is not yet published; however, SoGive and GiveWell both highlight what SoGive calls the “black-box problem”: there is a mystery around some of the middle steps of the theory of change.

  - Given that there is no such mystery about the theory of change of this campaign, a higher (i.e. more favourable) adjustment may be appropriate.

  - However, given the issues with generalisability outlined in section 6.1.1 below, the adjustment factor should likely still be fairly low (i.e. unfavourable to the project's impact).

- Opportunity cost of volunteer time

  - The Slack channel included around 20-30 people, and our fortnightly meetings typically included around a dozen people.

  - The cost-effectiveness of the campaign was aided by the fact that the costs were low. The costs were so low because the work was done wholly by volunteers. This raises the question of how much value to place on the time given by the volunteers.

  - This is the opportunity cost of the volunteer time: if the volunteers had not been involved in this project, would they have done something else valuable instead? This question was not formally assessed, so the assessment here is largely based on guesswork.

  - The campaign in question had a high profile and was newsworthy; several people taking part appeared to be driven by a strong emotional pull. This suggests that for many of the participants, their work on this campaign was eating into leisure time.

  - As far as we can tell from talking to our fellow volunteers, this was mostly the case, and the model assumes that the opportunity cost of volunteer time was zero. One volunteer worked essentially full time on the technology for around 2 months; this person indicated that they were fairly unlikely to have done something high impact if the project had not existed, and perhaps gained some benefit from the experience (i.e. possibly incurred a negative time cost).

  - Note that if the assumption of close to zero opportunity cost is correct, this may allow us to draw reasonable inferences about this particular project; however, it’s hard to be confident that this level of engagement can be guaranteed for future campaigns.

- Our model grants us 3% of the credit for the impact achieved; we believe this is high for such a small campaign group, but justified based on peer comments about the quality of the implementation of our campaign. Overall, the fact that the basis for this is expert opinion (as opposed to something more directly linked to impact) is a material evidence gap for this model.

- A key part of the reason why the model grants our campaign any credit, despite the fact we lost, is that campaigning efforts caused the government’s win to be harder-fought than expected, and that this will influence the government’s attitude to aid in the future. This comes from sources who are better informed than us and who stated it with confidence. As above, the reliance on expert opinion is an evidence gap here too.
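To show how separate evidence-quality factors might combine, the sketch below multiplies them together, using GiveWell's deworming figures quoted above (13% replicability, 9% external validity). Treating the factors as compounding multiplicatively is an assumption made for illustration; this sketch does not claim to reproduce exactly how GiveWell's model or ours combines them, and the function name is hypothetical.

```python
from math import prod


def combined_evidence_adjustment(*factors: float) -> float:
    """Multiply evidence-quality factors, each expected to lie in [0, 1].

    Assumes the factors compound multiplicatively: each one discounts
    whatever value survives the previous discounts.
    """
    for f in factors:
        if not 0.0 <= f <= 1.0:
            raise ValueError(f"adjustment factor out of range: {f}")
    return prod(factors)  # prod() of no factors is 1, i.e. no discount


# GiveWell's deworming factors as quoted in this post.
deworming = combined_evidence_adjustment(0.13, 0.09)
print(f"Deworming combined factor: {deworming:.2%}")  # Deworming combined factor: 1.17%
```

A combined factor of about 1% shows how quickly two modest-looking discounts shrink an estimate, which is why the choice of adjustment matters so much for the campaign's result.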

This section is not intended to list every assumption where a reasonable reader could disagree; a careful review of the cost-effectiveness model will highlight several subjective inputs. The model includes a pessimistic and an optimistic value for each input, and the error bars are wide for several of the inputs.

If someone went through the model and entered their own assumptions, it would not be surprising if they came out with an answer that was, say, an order of magnitude different in either direction, possibly more. The conclusion should therefore not be interpreted as “this definitely outperforms the SoGive Gold Standard”, but rather as “it’s interesting that this is in the same ballpark as the SoGive Gold Standard, even though the campaign failed”.

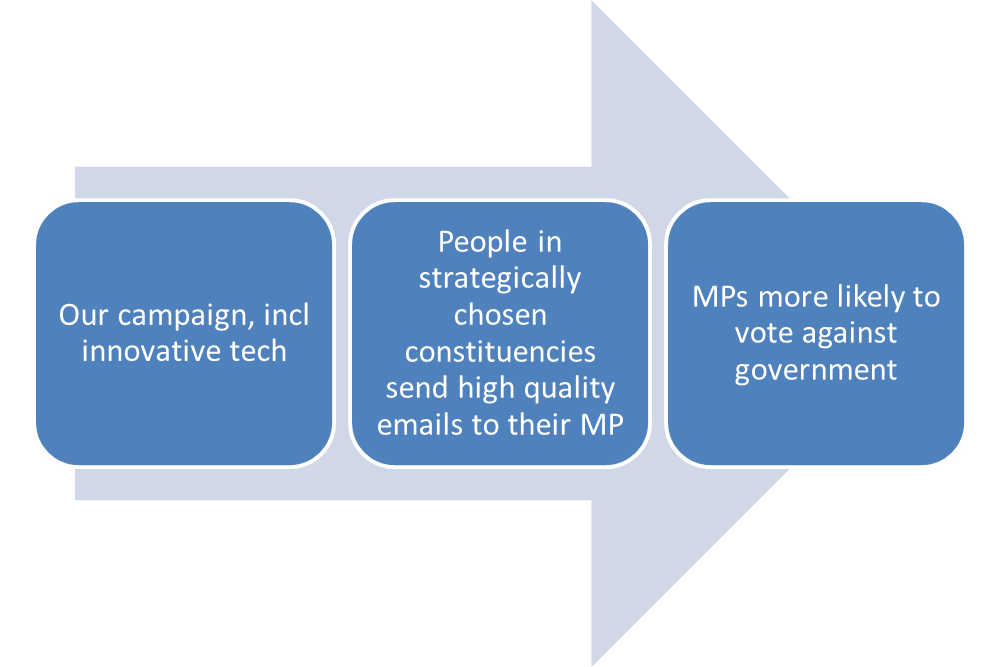

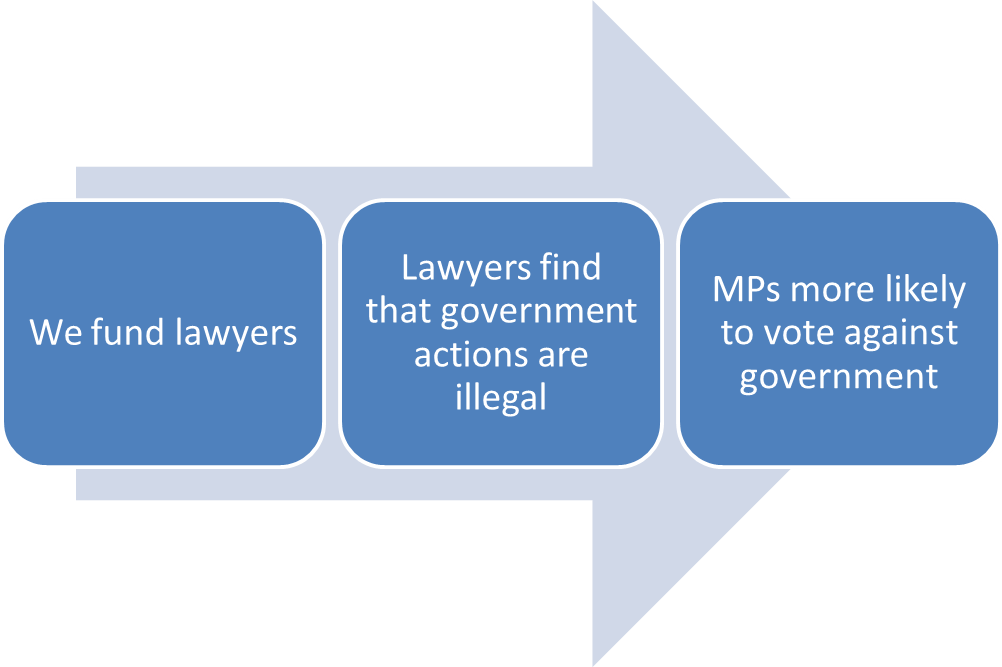

2. Intended theory of change: encourage a backbench rebellion

There were two components to the campaign:

- Email campaign: Getting constituents to send emails to backbench MPs

- Legal report: Highlighting the dubious legality of the government’s actions

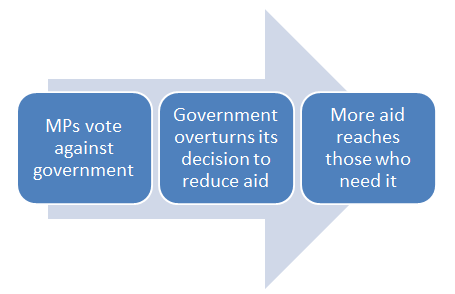

The intended next steps were:

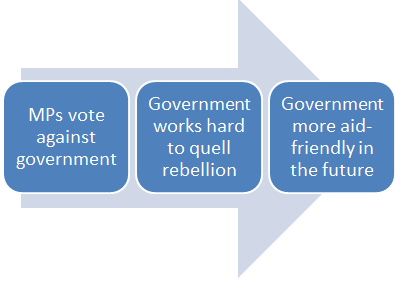

The actual next steps were:

- “Government works hard to quell rebellion” refers to the fact that it was a (surprisingly) hard-fought victory

- “Government more aid-friendly in the future” means either the government is more likely to return the aid amount back to 0.7%, or at least not reduce aid further, as a result of the fact that it was a hard-fought victory

Both actions had small financial costs:

- The email campaign cost < £10k, most of which was spent on Facebook ads

- The legal report cost < £5k

Most of the effort went into the email campaign. It is also the easier opportunity to replicate, so this write-up and the aforementioned cost-effectiveness model focus solely on the email campaign.

This is not to say that the legal report was low impact – indeed this attracted significant media attention, and likely also exerted pressure.

3. The outcome: the pro-aid lobby lost

At first glance, one might think that the campaign achieved nothing. It aimed to change the government’s actions relating to foreign aid, and this essentially did not happen.

What did happen:

- Nov 2020: Government announces that it will cut aid from 0.7% to 0.5%

- Jan 2021: Government instructs the relevant foreign aid department (the FCDO, which absorbed DfID) to implement the cuts within weeks

- Mar 2021: A legal opinion from Matrix Chambers concludes that the government acted unlawfully by implementing this change without putting it to a parliamentary vote

- Jul 2021: Parliament votes in favour of the government, with 24 Conservative rebels

Some background on the politics:

- The Conservative party is a right-leaning party which is currently in power with a majority of 80 seats

- This means that 41 Conservative party rebels would be needed to defeat the government (assuming that all opposition parties vote to keep the 0.7%, which was considered to be – and turned out to be – a safe assumption)

- Getting this many MPs to rebel is a substantial undertaking
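The figure of 41 rebels follows from simple arithmetic, sketched below under simplifying assumptions that are ours, not a model of Commons procedure: the 80-seat majority is taken as the government's margin over all other MPs combined, every other MP votes against the government, and a tie does not count as a government defeat. A rebel who votes against the government swings the margin by two; one who merely abstains swings it by one.

```python
def rebels_needed(majority: int, rebels_abstain: bool = False) -> int:
    """Minimum number of government rebels needed to defeat the government.

    Simplifying assumptions:
    - `majority` is the government's margin over all other MPs combined;
    - all other MPs vote against the government;
    - a tie is not treated as a government defeat.
    """
    swing = 1 if rebels_abstain else 2
    # Smallest r such that majority - swing * r < 0.
    return majority // swing + 1


print(rebels_needed(80))                       # 41
print(rebels_needed(80, rebels_abstain=True))  # 81
```

The abstention case shows why the campaign's aim was to get MPs to vote against the cut rather than simply stay away: abstentions alone would need roughly twice as many rebels.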

One area of uncertainty concerned the return from 0.5% to 0.7%. The government stated that the reduction in aid spending was temporary and that aid would return to 0.7% “as soon as the fiscal situation allows”, but it was not clear how this would be defined. In the motion finally voted on, the government committed to returning to spending 0.7% of GNI when, on a sustainable basis, there is a current budget surplus (i.e. the UK is not borrowing to finance day-to-day spending) and the debt-to-GDP ratio is falling. The 2021 budget allows for an increase back to 0.7% in the 2024/25 fiscal year; however, there are reasons to be concerned about whether this will happen even then.
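To make the two conditions concrete, the sketch below encodes one hypothetical reading of them. The parameter `years_required` is our invention: it stands in for “on a sustainable basis”, which the rule leaves open to interpretation. The figures are invented for illustration and are not real UK data. The sketch also does not attempt to capture how the official test is actually assessed (for example, against forecasts rather than past outturns).

```python
from typing import Sequence


def fiscal_tests_met(current_balance: Sequence[float],
                     debt_to_gdp: Sequence[float],
                     years_required: int) -> bool:
    """Hypothetical check of the two fiscal tests for returning to 0.7% of GNI.

    current_balance: yearly current budget balance (positive = surplus), oldest first.
    debt_to_gdp:     yearly debt-to-GDP ratio, oldest first.
    years_required:  consecutive recent years that must pass both tests
                     (an assumed proxy for "on a sustainable basis").
    """
    if years_required < 1:
        raise ValueError("years_required must be at least 1")
    # Need one extra debt figure so each recent year can be compared with the one before.
    if len(current_balance) < years_required or len(debt_to_gdp) < years_required + 1:
        return False
    surplus = all(b > 0 for b in current_balance[-years_required:])
    recent_debt = debt_to_gdp[-(years_required + 1):]
    debt_falling = all(later < earlier
                       for earlier, later in zip(recent_debt, recent_debt[1:]))
    return surplus and debt_falling


# Invented illustrative figures (NOT real UK data), oldest year first.
balance = [-1.0, 0.5, 0.4]          # current budget balance, % of GDP
debt = [98.5, 98.0, 97.5, 97.0]     # debt-to-GDP ratio, %

for years in (1, 2, 3):
    print(years, fiscal_tests_met(balance, debt, years))
# 1 True
# 2 True
# 3 False
```

The same data pass or fail depending only on how strictly “sustainable” is read, which is the room for discretion discussed in section 5.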

Although these points sound bleak for aid supporters, there are reasons to believe that there was some positive impact after all.

4. The government won, but it was a hard-fought victory

The government seemed highly likely to win, given its substantial 80-seat majority. Furthermore, the move was unusually popular with the electorate: YouGov research suggested that two thirds of Britons supported reducing the amount spent on foreign aid.

However, despite the reasons to believe that it could safely win this vote, the government appeared worried that it would lose. In March it abandoned plans to hold a vote on the aid cut for the current fiscal year, and pressed on with the cut without a mandate from MPs. Because cutting the aid budget without a Commons vote was of dubious legality, the government would likely not have proceeded this way if it could have avoided it. The fact that it did not seek a vote is evidence that it was not confident of winning.

We have also heard from our contacts that the Prime Minister and Chancellor both invested several days of effort to make sure that they won the aid vote in July. Both of these individuals are very senior politicians whose time is very valuable, so there must have been serious concerns about a backbench rebellion to warrant their spending time on this.

So the government won, but it was a hard-fought victory.

Specifically, it appears that it was a harder-fought victory than expected. The fact that it was harder-fought than expected is core to the impact model: if the vote had been hard-fought but only as hard-fought as expected, it would not have had any impact on politicians’ future propensity to support international aid.

Part of the reason we have this impression is because of our conversations with people close to the relevant politicians. On the one hand, this is a good, knowledgeable source of information. On the other hand, as these people have been investing effort in supporting aid, they are at risk of bias or motivated reasoning themselves.

However, even without these accounts, some of the facts above also seem to lend themselves to this claim.

Questions about the extent to which this counterfactual impact was attributable to grassroots campaigning efforts in general (or our grassroots campaigning in particular) are discussed later in this write-up.

5. How good is it that the victory was hard-fought?

One risk is that when the criteria are met (e.g. in 2024), the government may nonetheless decide not to increase aid, especially if it is still a Conservative government at that stage. This risk seems credible given that:

- The government claims that the measures to be used to decide when to return to 0.7% are clear, however they require that the measures are met “on a sustainable basis”, which is open to interpretation

- The reduction in aid which they proceeded with was of dubious legality, and it didn’t hold the government back then

They will be less likely to do this if they expect a backbench rebellion, and the more hard fought it was the previous time, the more cautious they will be next time.

Another risk is that a different party coming into power may not consider it a priority to return to 0.7%. The strength of support for maintaining 0.7% in 2021 may make such a government less likely to delay or deprioritise this.

Note that the extent to which this is good is tempered by the fact that aid in a few years’ time may be less effective than aid spent now. The reasons to believe this are:

- Preventing an aid reduction in the midst of Covid is particularly valuable, given estimates suggesting that over 100 million people will be pushed into extreme poverty by Covid.

- The UK government merged DfID (the Department for International Development) with the foreign office. Higher amounts of aid now are likely still good, since the DfID culture of effective aid spending likely won’t be eroded immediately. Over the coming years, it’s not clear how this will evolve.

6. Arguably 3% of that hard-fought-ness is down to our group

Although this is written for an audience that is already familiar with models, it’s worth reiterating that this sort of model is not to be taken literally; models only need to be good enough to be useful. So, for example, when we claim that “c.3% of the hard-fought-ness is down to our group”, we’re only claiming this is a good enough approximation to be decision-relevant.

Some notes on the process:

- In order to design and conduct our campaign, we needed, in any case, to be in touch with other campaigners on this topic, several of whom were veteran campaigners with many years of experience.

- The numbers that we derived were based on the impressions that we gathered from actively being involved in this process and from conversations with experienced campaigners, as well as conversations with relevant parliamentarians. For some of the campaigners, we showed them our breakdown, and they agreed that this would be reasonable.

- This was done by people involved in the campaign, which leads to a risk of bias or motivated reasoning. However, the cross-checking with veteran campaigners would likely have been impossible without the right relationships.

6.1 Why not substantially less than 3%?

One could imagine that the correct attribution is substantially below 1%, or indeed 0%. This might be consistent with beliefs such as “campaigning is ineffective or has very low effectiveness” or “this particular campaign was ineffective or had low effectiveness”.

6.1.1 Campaigning can be effective

James Ozden, who has been doing strategy work for Pandemic Prevention Network (the group that this campaign merged into), has written extensively on this. His review finds that campaigning can be effective. However, there is more than one way to campaign, and some of the studies in his review (notably Bugden (2020), Wouters and Walgrave (2017)) focus on protests, which are only very tenuously relevant to the 0.7% campaign.

The most relevant studies in his review, or found from other sources, are:

| Study | Notes |
|---|---|
| Does Grassroots Lobbying Work?: A Field Experiment Measuring the Effects of an e-Mail Lobbying Campaign on Legislative Behavior | Thank you to Michael Sadowsky for originally highlighting this to us. This RCT/field experiment finds that grassroots email lobbying has a substantial effect on legislative voting behaviour. Its generalisability is questionable because the study was conducted in New Hampshire, a highly unusual US state: it has a citizen legislature paid a nominal salary, and rank-and-file members have no staff, potentially making legislators more dependent on interest groups for information. Sample size: n = 71 in the control group and 72 in the treatment group. |
| Call Your Legislator: A Field Experimental Study of the Impact of a Constituency Mobilisation Campaign on Legislative Voting | This RCT/field experiment again finds a substantial effect: an approximately 12 percentage point increase in the probability of supporting the legislation after being targeted by a phone campaign. It is another US-based study, this time in Michigan, which suggests that findings on campaigning’s effectiveness may hold across locations, at least within the US. It is less directly relevant because it concerns phone calls rather than emails, but it adds further evidence that campaigning can be effective. Sample size: three treatment groups of 16, 17 and 18 legislators; control group of 97 legislators. |
| Who Listens to the Grass Roots? A Field Experiment on Informational Lobbying in the UK | This RCT/field experiment compares the effects of sending information-rich or information-poor letters to local councillors. Informational content was found not to make a difference to the response overall, but information-rich letters received higher-quality responses and encouraged councillors to pass the letter on to others. Its UK focus makes it more likely to be directly applicable to our campaign, which also encouraged people to mention key information in their emails (see next section). However, a direct comparison between letters and emails may not be possible: letters are ‘higher cost’ in that they require more time from the writer and may therefore be more likely to get a response, although a person-specific email, rather than a copy-and-paste email, is also (somewhat) higher cost. The study also targets local representatives rather than those at the national level, so it may not be generalisable. Sample size: 496, of which half received info-rich and half info-poor letters. |

6.1.2 Our campaign included highly rated features

Our campaign included some features that were considered positively by some of the seasoned, highly regarded campaigners who we spoke to.

Those features included:

- We were able to target strategically chosen constituencies

- We were able to get a disproportionately high number of Conservative-leaning people to take part, and get them to say so in their email to their MP

- We were able to get the constituents to mention tactically valuable things in their email, such as the fact that this government action is contrary to their manifesto, which we knew from our discussions with experts would be particularly likely to influence Conservative MPs

- We were able to get significant numbers of emails sent by constituents, well into the hundreds

Reasons to be doubtful about the quality of the evidence, or to doubt that this campaign was particularly effective:

- Those who were positive about the campaign overlapped significantly with the people we consulted at the start to learn what good campaigning looked like. However, some highly regarded campaigners were people we encountered only after we started campaigning, so this objection is only partially legitimate.

- The evidence for the effectiveness of our campaign is based largely on expert opinion, not on evidence directly linked to impact. An example of evidence directly linked to impact would be if we had run our campaign as a randomised controlled trial: if we had targeted only a randomly selected half of the MPs on our list and then compared the results between the treatment group and the control group, a statistically significant difference would have been stronger evidence. The main reason for not doing this was sample size. We originally had a list of around 20 MPs to target, which we thought was likely too small to yield useful insights. If we had had close to zero credence in emailing MPs in general, or in our particular expert-opinion-based approach, we might nonetheless have run this as an RCT; however, with even a small amount of credence that the work was having an impact, covering our entire target group seemed likely to outweigh the information value of the RCT.

- As this is a self-assessment, there is a risk of bias.
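To illustrate the sample-size concern, the sketch below runs a one-sided Fisher exact test on a hypothetical split of 20 MPs into two groups of 10. The outcome counts are invented for illustration and are not data from the campaign: even if 3 of 10 targeted MPs rebelled and none of 10 untargeted MPs did, the result would not reach conventional significance (p ≈ 0.105).

```python
from math import comb


def fisher_one_sided(treated_yes: int, treated_n: int,
                     control_yes: int, control_n: int) -> float:
    """One-sided Fisher exact test p-value for 'treatment rate > control rate'.

    Returns P(X >= treated_yes), where X is hypergeometric: the number of
    'yes' outcomes that would land in the treatment group if outcomes were
    allocated to groups at random with row and column totals held fixed.
    """
    total_yes = treated_yes + control_yes
    total_n = treated_n + control_n
    upper = min(total_yes, treated_n)
    # math.comb(n, k) returns 0 when k > n, so impossible tables contribute nothing.
    tail = sum(comb(treated_n, k) * comb(control_n, total_yes - k)
               for k in range(treated_yes, upper + 1))
    return tail / comb(total_n, total_yes)


# Hypothetical outcomes for two groups of 10 MPs (invented numbers).
print(f"{fisher_one_sided(3, 10, 0, 10):.3f}")  # 0.105
print(f"{fisher_one_sided(4, 10, 0, 10):.3f}")  # 0.043
```

Only a very lopsided split clears the usual 5% threshold, even one-sided, which is consistent with the judgement that a trial on around 20 MPs would have yielded little information.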

Although campaigning on this issue was not particularly neglected, we believe our own campaign had an outsized impact because we took a targeted approach to contacting MPs. Focussing on finding supporters in key constituencies with MPs who would be easy to persuade greatly increases the cost-effectiveness of campaigning. By doing this, you minimise costly attempts to get support from people whose MPs are already planning to rebel anyway, or have no chance of rebelling, or are not part of the government’s majority.

Though we know how many of the MPs we targeted rebelled against the government, this tells us little about the extent to which our campaign changed their views. Of the 47 MPs whom we targeted, 3 rebelled against the government. This is approximately the same proportion as among non-targeted MPs (we did not target 316 Conservative MPs, and of those, 21 rebelled). However, we avoided targeting many of the MPs who rebelled because we had reason to think they were sure to rebel anyway and did not need persuading. We believed that the 47 MPs we targeted were likely to vote with the government but could be persuaded. So it is possible that, in the absence of our campaign, the 47 persuadable MPs would have rebelled at a lower rate than the group of 316 MPs, some of whom were always going to rebel.
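The rates compared in the paragraph above can be checked directly from its figures; the sketch below also confirms that the two groups together account for the 24 Conservative rebels in the July 2021 vote. As noted above, the groups were not selected at random, so the raw rates say nothing causal on their own.

```python
def rebellion_rate(rebels: int, total: int) -> float:
    """Share of a group of MPs who voted against the government."""
    return rebels / total


# Figures from this section: (rebels, MPs in group).
TARGETED = (3, 47)
UNTARGETED = (21, 316)

print(f"Targeted:     {rebellion_rate(*TARGETED):.1%}")    # Targeted:     6.4%
print(f"Not targeted: {rebellion_rate(*UNTARGETED):.1%}")  # Not targeted: 6.6%
print("Total rebels:", TARGETED[0] + UNTARGETED[0])        # Total rebels: 24
```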

The rebellion-rate data are entirely consistent with the thesis underlying our models: they fit the indications we received that the overall campaigning efforts appeared effective, and that we should not focus our campaigning efforts on the MPs most likely to rebel. However, this also highlights the complications that arise when a post-hoc assessment is based on a different theory of change from the one originally envisaged.

6.1.3 Other evidence relating specifically to our campaign

We spoke to other NGOs who were also campaigning on this topic, and showed some of them our model and the assumptions around how much to attribute to our campaign. While they were not used to applying quantitative models to impact in this way, they understood, given the explanation, how the impact numbers had been reached and broadly agreed.

There were several other sources of evidence which we would ideally have liked to gather. For example, we would have liked to interview the MPs we targeted to find out whether they were influenced, in such a way that they had perfect knowledge of the extent to which the emails they received influenced them, and of course we would have liked them to be willing to share this information with us. Even getting interviews with the MPs seemed unrealistic, let alone meeting the more demanding conditions. The closest approximation is to speak with people who work in an MP’s office reading emails for the MP; we did this before the campaign.

In a Commons debate in June, Steve Brine MP cited the wishes of his constituents as an argument in favour of reinstating the 0.7% commitment (source: Hansard; we became aware of this thanks to the “Thoughtful campaigner”, see their tweet):

“I have had hundreds of contacts from constituents concerned about the changes being made to our aid programme. Not all of them agree with me, but many do. My judgment is that the people I represent, like their MP, are really proud of the support that we give around the world.”

Brine’s statements in the debate suggest that constituent pressure influenced his view and his decision to argue for it. We think this is moderately good supporting evidence that constituent pressure can make a difference to MPs’ views. Brine directly referenced the correspondence he had received from constituents as an argument in favour of higher aid spending, suggesting that MPs put some weight on what their constituents say in correspondence. However, he did not directly state that constituent views had changed or strengthened his own view, and his is just one perspective.

This section is headed as though it refers only to the credit attribution (why our group warrants c.3% of the credit); however, the comments in this section also apply to the downward adjustment our model applies to account for uncertainties in the evidence base. Another reason for the size of that downward adjustment is that the theory of change outlined in the model is not the same as the theory of change planned at the outset of the project. For more details about how the downward adjustment was calculated, see sheet 5 of the model, where the quality-of-evidence adjustment is shown alongside notes setting out the rationale for the choice.

6.2 Why not substantially more than 3%?

There is a small number of key individuals in Whitehall who, we and many others believe, are mostly responsible for the rebellion.

For example, in order to ensure that as many as 40 backbench MPs rebel, coordination becomes important. If you rebel, then you reduce the chance that you get access to promotion opportunities for e.g. ministerial positions. Rebelling and losing therefore constitutes the worst of both worlds, but if you know that there are likely to be >30 other rebels, then your willingness to rebel goes up.

Because of these important dynamics, we believe that conversations between key individuals in the corridors of power deserve the majority of the credit.

7. Addendum

This model was built before the events of early July 2022, when Boris Johnson announced that he would resign. This has some impact on the model’s output, because the model allowed for the possibility that the next government might be led by Johnson. However, the impact is modest, because the model captures two factors that pull in opposite directions: if the next government were a Johnson government, it would be more likely to remember the pain of dealing with the rebellion; if not, it would be less likely to be as strongly opposed to aid as the Johnson government is. These two effects roughly cancel each other out.

8. Summary of overall cost-effectiveness

Our model finds that donors to our campaign may have achieved a level of impact roughly comparable to the SoGive Gold Standard / a donation to a GiveWell-recommended charity, with a naive estimate finding an approximately 20x greater impact than the SoGive Gold Standard, which becomes a 3x greater impact when an adjustment for the uncertainty of evidence is applied.

While the government won and the aid reduction was maintained, the government’s victory was more hard-fought than expected. Our model attributes ~3% of this ‘hard-fought-ness’ to our campaign. As this is a self-assessment, there is a risk of bias, and we believe key individuals in Westminster talking directly to MPs deserve the majority of the credit. However, past studies show that campaigning can be effective, our campaign had highly rated features, and we consulted NGOs and experienced campaigners throughout the process. These points lend support to our model and provide some evidence for the effectiveness of our campaign.

The model included a number of assumptions and judgement calls, and it would be reasonable for someone to conclude that the cost-effectiveness was materially lower or higher than the conclusion found by this model. It is worth stressing once again that the existence of a model does not prove that the conclusion is robust, but it does help to make the assumptions explicit and easy to critique.

However, for a future campaign targeting a sufficiently high-impact outcome, the expected value would likely be substantially higher. For those who find longtermism compelling, longtermist outcomes would likely fall into this category.

9. Opportunities to get involved with our next campaign and project

Since the 0.7% campaign, we’ve focused on using our campaigning methodology to build a public supporter movement for pandemic prevention and preparedness. With our rebrand to Pandemic Prevention Network, we’re currently recruiting for the following positions:

- Co-Founder and Chief Operating Officer. Find out more about the role here. (note that we may fill this role soon, so if you’re interested you’ll need to let us know promptly)

- Volunteers to support campaign delivery:

  - Technology Officer/Software Developer

  - Supporter Engagement Lead

  - Digital Marketing Officer

  - Website Lead

  - Content Writer

Feel free to contact the CEO Jasmin Kaur (jasmin.kaur@pandemicpreventionnetwork.org) for any questions you may have about these positions.

Also, Andrew Mitchell (new UK Development Minister) is in favour of restoring the 0.7% and has been a vocal backbench critic of the original Sunak cut in the years since the pandemic. His promotion to cabinet may be an indirect commitment by Sunak to get back to 0.7%. We can hope this comes sooner rather than later. Great post here, Sanjay!

Hey! If you'd like help recruiting a software developer, please reach out!

You might also like contacting Altruistic Agency

It would be a longer piece of work to engage with the model here; intuitively, I find the estimate surprising.

However I'd just say that the fact you've undertaken this process at all is valuable, and I think both the campaign and model will be good proto-examples for the future of how EA has tried to engage with policy change work.