Summary

- Many views, including even some person-affecting views, endorse the repugnant conclusion (and very repugnant conclusion) when set up as a choice between three options, with a benign addition option.

- Many consequentialist(-ish) views, including many person-affecting views, in principle endorse the involuntary killing and replacement of humans with far better off beings, even if the humans would have had excellent lives. Presentism, narrow necessitarian views and some versions of Dasgupta’s view don’t.

- Variants of Dasgupta's view seem understudied. I'd like to see more variants, extensions and criticism.

Benign addition

Suppose there are currently one million people in the world, and you have to choose between the following 3 outcomes, as possible futures:

Huemer (2008, JSTOR) describes them as follows, and we will assume that the one million people in A are the same that currently exist:

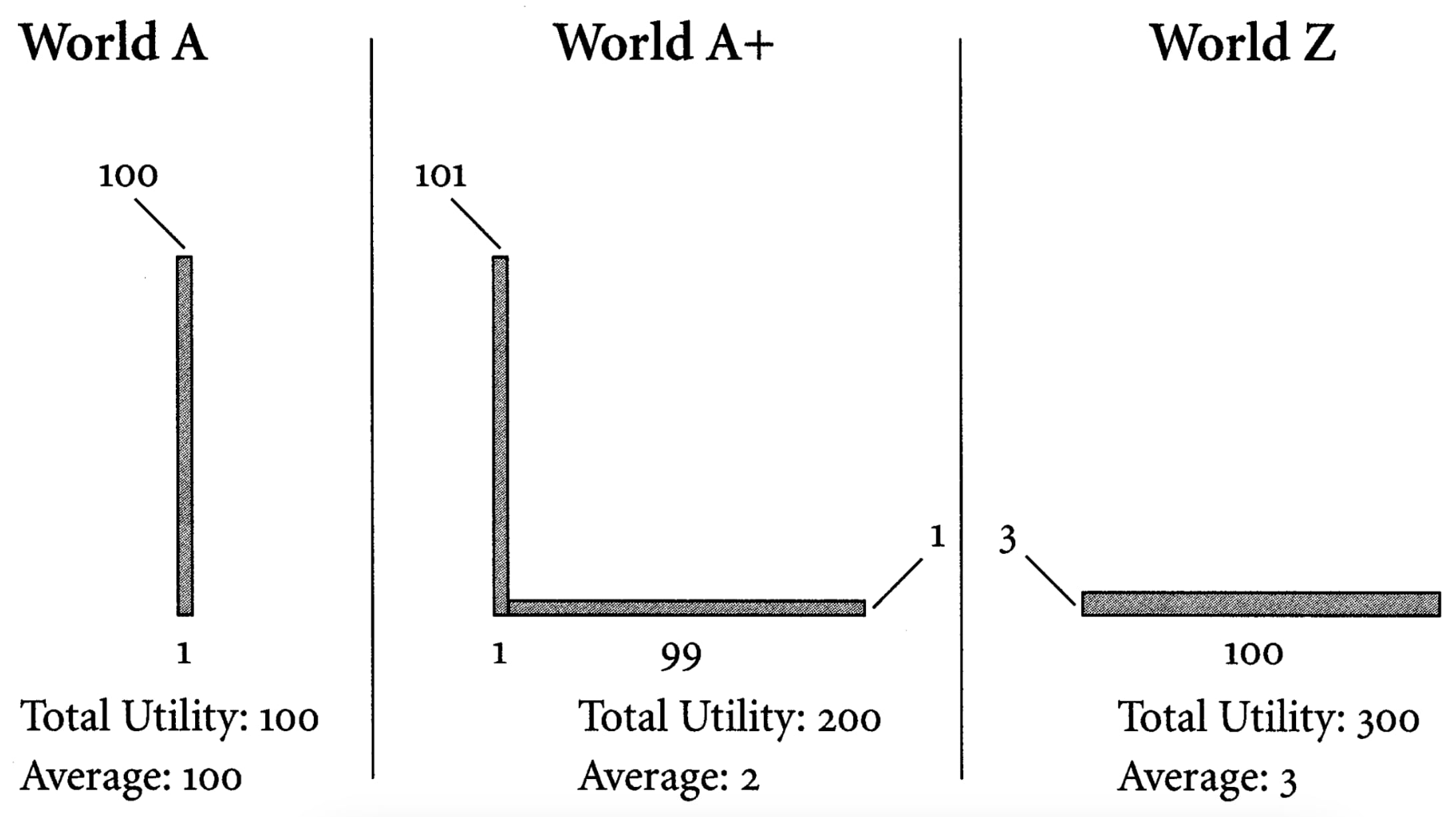

World A: One million very happy people (welfare level 100).

World A+: The same one million people, slightly happier (welfare level 101), plus 99 million new people with lives barely worth living (welfare level 1).

World Z: The same 100 million people as in A+, but all with lives slightly better than the worse-off group in A+ (welfare level 3).

On the other hand, it seems to me that person-affecting intuitions should generally recommend against Z, or at least not make it obligatory, because A seems better than it on person-affecting intuitions. It's better for the present or necessary people.

The welfare level of each person can represent their aggregate welfare over their whole life (past, present and future), with low levels resulting from early death. In a direct comparison between Z and A, Z can therefore mean killing and replacing the people of A with the extra people, as in replacement and replaceability thought experiments (Singer, 1979a, Singer, 1979b, Jamieson, 1984, Knutsson, 2021). The extra people have lives barely worth living, but still worth living, and in far greater number.

In binary choices, i.e. if you were choosing just between two outcomes, on most person-affecting views, and in particular additive ones, I would expect:

- A < A+, because A+ is better for the necessary/present people (the people who exist in both outcomes), and no worse for the others, who still have positive welfare overall in A+ as opposed to not existing in A.

- A+ < Z, because they have the same set of people, but Z has higher average, total and minimum welfare, and a fairer distribution of welfare.[1]

- A > Z, because Z is worse than A for the necessary/present people, and A is not worse than Z for the contingent/future people, on person-affecting intuitions.

This would give a cycle: A < A+, A+ < Z, Z < A. Huemer (2008, JSTOR) takes the first two together to be an argument for the repugnant conclusion,[2] and calls the step A < A+ benign addition.[3]
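To make the cycle concrete, here is a minimal sketch of the three binary choices. It assumes a simple necessitarian-style additive rule that is my own simplification, not a view from any cited paper: in a two-option choice, compare the total welfare of the people who exist in both options, and ignore contingent people as long as their lives are positive. The names `OUTCOMES`, `necessary_total` and `binary_winner` are illustrative.

```python
# Populations in millions; each outcome maps a group to (count, welfare level).
OUTCOMES = {
    "A":  {"original": (1, 100)},
    "A+": {"original": (1, 101), "extra": (99, 1)},
    "Z":  {"original": (1, 3), "extra": (99, 3)},
}

def necessary_total(x, y):
    """Total welfare in outcome x of the groups that exist in both x and y."""
    shared = OUTCOMES[x].keys() & OUTCOMES[y].keys()
    return sum(n * w for g, (n, w) in OUTCOMES[x].items() if g in shared)

def binary_winner(x, y):
    """Assumed rule: in a two-option choice, pick what is better for the shared people."""
    tx, ty = necessary_total(x, y), necessary_total(y, x)
    if tx == ty:
        return None
    return x if tx > ty else y

for x, y in [("A", "A+"), ("A+", "Z"), ("A", "Z")]:
    print(f"{x} vs {y}: {binary_winner(x, y)} wins")
```

Under these assumptions A+ wins against A, Z wins against A+, and A wins against Z, so no option survives every pairwise comparison.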

What would we do when all three options are available?

Two person-affecting responses

According to most person-affecting intuitions, I'd guess Z seems like the worst option.

Presentist and necessitarian views would recommend A+. A+ is best for the present people, the people alive at the time of the choice. A+ is best for necessary people, the people who will exist (or will ever exist or have existed) no matter which is chosen.

Or, if we ruled Z out first to reduce it to a binary choice, then we’d be left deciding between A and A+, and then we’d pick A+.

However, I suspect we should pick A instead. With Z available, A+ seems too unfair to the contingent people and too partial to the necessary/present people. Once the contingent people exist, Z would have been better than A+. And if Z is still an option at that point, we’d switch to it. So, anticipating this reasoning, whether or not we can make the extra people better off later, I suspect we should rule out A+ first, and then select A over Z.

I can imagine myself as one of the original necessary people in A. If we picked A+, I'd judge that to be too selfish of us and too unkind to the extra people relative to the much fairer Z. All of us together, with the extra people, would collectively judge Z to have been better. From my impartial perspective, I would then regret the choice of A+. On the other hand, if we (the original necessary people) collectively decide to stick with A to avoid Z and the unkindness of A+ relative to Z, it's no one else's business. We only hurt ourselves relative to A+. The extra people won't be around to have any claims.

Dasgupta’s view (Dasgupta, 1994, section VI and Broome, 1996, section 5) captures similar reasoning. A wide-ish version of Dasgupta’s view can be described more generally, as a two-step procedure:

- Rule out any outcome that’s worse than another with exactly the same number of people who ever exist.[4]

- Of the remaining outcomes, pick any which is best for the necessary people.[5][6] A person is necessary if for each outcome, they ever exist in that outcome.

Applying this, we get A:

- A+ is ruled out because Z is better than it in a binary choice, for the same set (or number) of people. No other option is ruled out for being worse in a binary choice over the same set (or number) of people at this step.

- We're left with A and Z, and A is better for the necessary people.
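The two steps can be sketched in a few lines. This is only an illustration under stated assumptions: same-number comparisons in step 1 are made by total welfare (which agrees with average welfare when the numbers are equal), and "best for the necessary people" in step 2 means highest total welfare for the groups that exist in every available option. The function names are hypothetical.

```python
# Populations in millions; each outcome maps a group to (count, welfare level).
OUTCOMES = {
    "A":  {"original": (1, 100)},
    "A+": {"original": (1, 101), "extra": (99, 1)},
    "Z":  {"original": (1, 3), "extra": (99, 3)},
}

def population(o):
    return sum(n for n, _ in OUTCOMES[o].values())

def total(o, groups=None):
    """Total welfare in o, optionally restricted to the given groups."""
    return sum(n * w for g, (n, w) in OUTCOMES[o].items()
               if groups is None or g in groups)

def dasgupta_two_step(options):
    # Step 1: rule out anything worse than another option with the same number of people.
    survivors = [
        x for x in options
        if not any(population(y) == population(x) and total(y) > total(x)
                   for y in options)
    ]
    # Step 2: of the rest, pick what is best for the people who exist in every option.
    necessary = set.intersection(*(set(OUTCOMES[o]) for o in options))
    best = max(total(o, necessary) for o in survivors)
    return [o for o in survivors if total(o, necessary) == best]

print(dasgupta_two_step(["A", "A+", "Z"]))
print(dasgupta_two_step(["A", "A+"]))
```

With all three options available the sketch returns A; with Z removed, nothing is ruled out at step 1 and it returns A+, which shows how the verdict depends on the menu of options.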

Tentatively, among person-affecting views, I prefer something like Dasgupta’s view, although I would modify it to accommodate the procreation asymmetry, so that adding apparently bad lives is bad, but creating apparently good lives is not good. There are probably multiple ways to do this.[7] Presentist and necessitarian views can also be modified into asymmetric views, e.g. by including both necessary/present lives and contingent/future bad lives, or like the less antinatalist asymmetric views in Thomas, 2019 or Thomas, 2023.

However, it’s unclear how to extend this version of Dasgupta’s view, especially the first step, to uncertainty about the number of people who will exist.

Furthermore, the first step doesn't rule out enough options even in deterministic cases. For example, if we include even just one extra person in Z (not in A or A+), then step 1 does nothing, and A+ is recommended instead. Rather than requiring the same number, step 1 should rule out any option that seems pretty unambiguously worse than another option, and A+ would still seem pretty unambiguously worse than Z, even if Z had one extra person. And we'd need A to not be pretty unambiguously worse than A+.[8]

A promising approach for the first step of the two-step procedure would be to fix a set of axiologies and rule out options by unanimous agreement across the axiologies, e.g. X > Y if X beats Y according to both average utilitarianism and total utilitarianism, or X > Y if X beats Y according to every critical-level utilitarian view in a set of them.[9] The latter is essentially a critical-range theory from Chappell et al., 2023, and both are similar to the views in Thomas, 2023. This could be motivated by dissatisfaction with all (complete, transitive and independent of irrelevant alternatives) welfarist/population axiologies, in light of impossibility theorems, largely due to Gustaf Arrhenius (e.g. Arrhenius, 2000, Arrhenius, 2003 (pdf), Arrhenius, 2011 (pdf), Thornley, 2021, Arrhenius & Stefánsson, 2023; for another presentation of several of Arrhenius's theorems together, see Thomas, 2016).

We can generalize the two-step procedure as follows, after fixing some set S of axiologies, and defining "X is beaten by Y" to mean that X < Y according to every axiology < in the set S:

- Rule out any option beaten by an option in your original set of available options.

- Of the remaining available options, pick any which is best for the necessary people.
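As a rough sketch of the generalized procedure, take S to contain just total and average utilitarianism, and add one extra person to Z so that the same-number rule no longer applies. The choice of S, the label `Z+1` and the function names are assumptions for illustration only.

```python
# Counts in individual people; each outcome maps a group to (count, welfare level).
OUTCOMES = {
    "A":   {"original": (1_000_000, 100)},
    "A+":  {"original": (1_000_000, 101), "extra": (99_000_000, 1)},
    # Hypothetical variant of Z with one more person than A+.
    "Z+1": {"original": (1_000_000, 3), "extra": (99_000_000, 3), "one_more": (1, 3)},
}

def total(o, groups=None):
    return sum(n * w for g, (n, w) in OUTCOMES[o].items()
               if groups is None or g in groups)

def average(o):
    return total(o) / sum(n for n, _ in OUTCOMES[o].values())

# Assumed set S of axiologies, each represented by a value function on outcomes.
AXIOLOGIES = [total, average]

def beaten_by(x, y):
    """x is beaten by y if every axiology in S ranks y strictly above x."""
    return all(value(x) < value(y) for value in AXIOLOGIES)

def generalized_two_step(options):
    # Step 1: rule out any option beaten by another available option.
    survivors = [x for x in options if not any(beaten_by(x, y) for y in options)]
    # Step 2: of the rest, pick what is best for the necessary people.
    necessary = set.intersection(*(set(OUTCOMES[o]) for o in options))
    best = max(total(o, necessary) for o in survivors)
    return [o for o in survivors if total(o, necessary) == best]

print(generalized_two_step(["A", "A+", "Z+1"]))
```

Here A+ is beaten by Z+1 on both total and average welfare, while A is not beaten by anything because the two axiologies disagree about it, so the procedure again returns A.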

Of the most plausible axiologies, e.g. those satisfying almost all of the conditions of the impossibility theorems (or the benign addition argument), there's a near-consensus in favour of Z>A+, with the dissidents being "anti-egalitarian" in comparing Z and A+, basically the opposite of egalitarian or prioritarian.[10] Z>A+ also follows from Harsanyi's utilitarian theorem, extensions to variable population cases and other ~utilitarian theorems, e.g. McCarthy et al., 2020, Theorem 3.5; Thomas, 2022, sections 4.3 and 5; Gustafsson et al., 2023; Blackorby et al., 2002, Theorem 3.

If each of the axiologies used can be captured as utility functions over which we can take expected values or otherwise make ex ante comparisons between probability distributions of outcomes, then this also extends the two-step procedure to probability distributions over outcomes.

Responses across views

Here are the verdicts of various views when all three options are available:

- Presentist and necessitarian person-affecting views recommend A+, because A+ is best for the necessary/present people.

- Dasgupta’s view (Dasgupta, 1994, section VI and Broome, 1996, section 5, or a version of it), which is person-affecting, recommends A.

- Meacham (2012)’s harm-minimization view, a person-affecting view, recommends A, because the people who exist in it are harmed the least in total, where the harm to an individual in an outcome is measured by the difference between their welfare in it and their maximum welfare across outcomes.

- However, this view ends up implausibly antinatalist, even when no future people would experience anything negative in their lives, only positive, just less positive than it could be (pointed out by Michelle Hutchinson, in Koehler, 2021).

- Weak actualism (Hare, 2007, Spencer, 2021, section 6), a person-affecting view, recommends Z. An option/outcome is permissible if and only if it's no worse than any other for the people who (ever) exist in the outcome.

- It rules out A, because A+ is better for the people who exist in A.

- It rules out A+, because Z is better for the people who exist in A+.

- It does not rule out Z, because Z is better than both A and A+ for the people who exist in Z.

- Thomas (2023)’s asymmetric person-affecting views recommend Z:

- The views hold that exactly the undominated options — those not worse than any other in a binary comparison — are permissible, and X>Y if two conditions hold simultaneously:

- (i) The total harm to people in X is less than the total harm to the people in Y, where the harm to a person in one outcome compared to another is the difference between their maximum welfare across the two outcomes and their welfare in that outcome, but 0 if they don’t exist in that outcome.

- (ii) X has higher total welfare than Y, i.e. X is better than Y according to total utilitarianism.

- A+>A, Z>A+ and Z and A are incomparable. Only Z is undominated.

- Condition ii is transitive, so any option with maximum total welfare will be undominated and permissible. Condition i can have cycles, and it does for A, A+ and Z. In general, if you define X>Y as X beating Y in all binary choices across a fixed set of views, and permit only undominated options, you will privilege the acyclic (and transitive) views in your set of views. In general, the repugnant conclusion will be permissible if one of the conditions you rank with is total welfare.

- Thomas (2019)’s asymmetric person-affecting views, which extend necessitarian binary choices using Schulze’s beatpath voting method, can be made to recommend something like Z, even with A available, by adjusting the numbers somewhat.[11]

- Totalism/the total view recommends Z, because it has the highest total utility.

- Averagism/the average view recommends A, because it has the highest average utility.

- Critical-level utilitarianism recommends A, if the critical level is above roughly 2 (A beats Z only once the critical level exceeds 200/99 ≈ 2.02): subtract the critical level from each person’s welfare level, and then rank based on the sum of these (or, equivalently, take the total welfare, subtract critical level*number of people and rank based on this).

- What negative (total) utilitarianism recommends depends only on the total negative value (e.g. total suffering, total preference or desire frustration), which I have not specified separately above. It could recommend any of the three, depending on the details of the thought experiment. It could also deny the framing, by denying the possibility of positive welfare.
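Several of the verdicts above can be checked numerically. The following is a minimal sketch, not a faithful implementation of any of the cited papers: it treats each group as homogeneous, sums welfare within groups, counts nonexistence as welfare 0 for the weak actualist comparison, and picks an illustrative critical level of 2.5. All function names are hypothetical.

```python
# Group sizes in millions of people, and welfare levels per outcome (Huemer's worlds).
SIZES = {"original": 1, "extra": 99}
WELFARE = {
    "A":  {"original": 100},
    "A+": {"original": 101, "extra": 1},
    "Z":  {"original": 3, "extra": 3},
}
OPTIONS = list(WELFARE)

def total(o):
    return sum(SIZES[g] * w for g, w in WELFARE[o].items())

def average(o):
    return total(o) / sum(SIZES[g] for g in WELFARE[o])

def critical_level(o, c):
    return sum(SIZES[g] * (w - c) for g, w in WELFARE[o].items())

def harm(o, options):
    """Total shortfall of the people in o from their best welfare across `options`."""
    return sum(
        SIZES[g] * (max(WELFARE[p][g] for p in options if g in WELFARE[p]) - w)
        for g, w in WELFARE[o].items()
    )

def weak_actualist_permissible(o):
    """o is no worse than any option for the people who exist in o.
    Assumption: nonexistence counts as welfare 0 and welfare is summed."""
    return all(
        total(o) >= sum(SIZES[g] * WELFARE[p].get(g, 0) for g in WELFARE[o])
        for p in OPTIONS
    )

def thomas_2023_better(x, y):
    """x > y iff x has less pairwise total harm than y and higher total welfare."""
    return harm(x, [x, y]) < harm(y, [x, y]) and total(x) > total(y)

verdicts = {
    "totalism": max(OPTIONS, key=total),
    "averagism": max(OPTIONS, key=average),
    "critical level 2.5": max(OPTIONS, key=lambda o: critical_level(o, 2.5)),
    "Meacham harm minimization": min(OPTIONS, key=lambda o: harm(o, OPTIONS)),
    "weak actualism": [o for o in OPTIONS if weak_actualist_permissible(o)],
    "Thomas (2023)": [o for o in OPTIONS
                      if not any(thomas_2023_better(p, o) for p in OPTIONS)],
}
for view, verdict in verdicts.items():
    print(f"{view}: {verdict}")
```

Under these assumptions, totalism, weak actualism and the Thomas (2023) sketch select Z, while averagism, the critical-level view and harm minimization select A.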

The views above that recommend Z (perhaps other than negative utilitarianism) can also be made to recommend the very repugnant conclusion with three choices, where the original necessary lives are badly net negative in Z, because they are all additive, and the gains to the extra people will outweigh the harms to the original necessary people, with enough extra people.

Replacement with better off beings

In Huemer’s worlds above, the additional people are worse off than the original in A in each of A+ and Z, but we can make them better off in Z instead. For example, to capture replacement by artificial minds, consider the following possible futures, A, A+ and B:

- World A

- 8 billion current humans with very good welfare and very long lives (welfare level 1,000 each), plus

- 1 trillion future humans with similarly very good welfare and very long lives (welfare level 1,000 each), but no artificial minds. (Possibly still with advanced artificial intelligence, just not conscious.)

- World A+

- the same as A, but all 1.008 trillion humans are even better off (welfare level 2,000 each), plus

- an additional 10 trillion artificial minds serving humans, all with lives barely worth living, but still positive overall (welfare level 1 each).

- World B

- The 8 billion humans are killed early (welfare level 10 each),

- the 10 trillion artificial minds have far better lives than even the humans would have had in A+ (welfare level 10,000 each), because they’re much more efficient at generating positive welfare and better at avoiding negative welfare when in control, and

- the 1 trillion future humans are never born, and instead there are 1 trillion more artificial minds (also welfare level 10,000 each).

- (The artificial minds are not mind uploads of the humans. The 8 billion humans are dead and gone forever.)

Presentist and narrow necessitarian views still recommend A+. The wide-ish and narrow[4] versions of Dasgupta’s view still recommend A, but the fully wide version[6] recommends B. The other views listed in the previous section recommend B (and negative utilitarianism can recommend B).
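The difference between the wide-ish and fully wide versions can be illustrated with the numbers above. This is a sketch under assumptions: step 1 compares same-number outcomes by total welfare, the wide-ish step 2 uses the total welfare of the necessary people, and the fully wide step 2 uses the two-term sum from footnote 6. The group labels and function names are hypothetical.

```python
# Counts in billions of individuals; each world maps a group to (count, welfare level).
WORLDS = {
    "A":  {"current_humans": (8, 1_000), "future_humans": (1_000, 1_000)},
    "A+": {"current_humans": (8, 2_000), "future_humans": (1_000, 2_000),
           "artificial_minds": (10_000, 1)},
    "B":  {"current_humans": (8, 10), "artificial_minds": (10_000, 10_000),
           "more_artificial_minds": (1_000, 10_000)},
}
OPTIONS = list(WORLDS)
# Necessary groups exist in every world; here only the current humans.
NECESSARY = set.intersection(*(set(w) for w in WORLDS.values()))

def count(o, groups):
    return sum(n for g, (n, _) in WORLDS[o].items() if g in groups)

def total(o, groups):
    return sum(n * w for g, (n, w) in WORLDS[o].items() if g in groups)

def contingent(o):
    return set(WORLDS[o]) - NECESSARY

def step_one(options):
    """Rule out worlds worse (by total welfare) than another with the same headcount."""
    def size(o): return count(o, set(WORLDS[o]))
    def value(o): return total(o, set(WORLDS[o]))
    return [x for x in options
            if not any(size(y) == size(x) and value(y) > value(x) for y in options)]

def wide_ish_score(o):
    return total(o, NECESSARY)

MIN_CONTINGENT = min(count(o, contingent(o)) for o in OPTIONS)

def fully_wide_score(o):
    """Necessary total plus average contingent welfare times the minimum contingent count."""
    c = contingent(o)
    return total(o, NECESSARY) + total(o, c) / count(o, c) * MIN_CONTINGENT

survivors = step_one(OPTIONS)
print("step 1 survivors:", survivors)
print("wide-ish:", max(survivors, key=wide_ish_score))
print("fully wide:", max(survivors, key=fully_wide_score))
```

In this sketch A+ is eliminated by B at step 1, the wide-ish version then prefers A for the sake of the 8 billion current humans, and the fully wide version prefers B because the contingent beings are much better off there.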

Indeed, additive wide views and non-person-affecting views should usually recommend B, or something suitably similar in a similar thought experiment. Just between A and B, replacing 1 trillion future humans with 1 trillion far better off artificial minds is a huge benefit between matched counterparts, not just on aggregate, but also for each of those pairs of counterparts, which should be enough to outweigh the early deaths of the 8 billion humans, unless we prioritize humanity or the worse off.

And this is also not that counterintuitive. Humans today should make some sacrifices to ensure better welfare for future generations, even if these future people are contingent and their identities will be entirely different if we do make these sacrifices. Why should we care whether these future people are humans or artificial minds?

On the other hand, maybe B is too unfair to the necessary humans. We are left too badly off and give up too much. A+ is of course even less fair by comparison with B. A prioritarian or egalitarian with a wide person-affecting view could recommend A. Or, we could go with something like the wide-ish version of Dasgupta's view to recommend A.

[1]

Non-aggregative views, views that prioritize the better off and views with positive lexical thresholds may reject this.

[2]

Assuming transitivity and the independence of irrelevant alternatives.

[3]

It's also called dominance addition, e.g. in Arrhenius, 2003 (pdf).

[4]

This is a wide-ish version. For a fully narrow version, rule out any outcome that’s worse than another with exactly the same set of people who ever exist. The narrow version would tell you to be indifferent (or take as incomparable) between a) creating someone with an amazing life and b) creating someone else with a life that would be worse than that, no matter how much worse, whether just good, marginal or bad. See the nonidentity problem (Roberts, 2019).

[5]

Or just the present people, or just the necessary moral agents, or just the present moral agents, or just the actual decision-makers.

[6]

Or best for the minimum number of people who will ever exist. For an additive view, rank outcomes by the sum of the following two terms:

1. The total welfare of the necessary people.

2. The average welfare of the contingent people in that outcome multiplied by the minimum number of contingent people across all outcomes.

This would give a fully wide version. This is inspired by Thomas, 2019.

[7]

The second step could instead choose what’s best for

a. both necessary people and those with bad lives (necessary or contingent) together, or

b. necessary people and those with bad lives, offsetting the contingent bad lives with contingent good lives, i.e. adding the sum of the welfare of contingent people, but replacing it with 0 if positive, and then adding this to the sum of welfare for necessary people, similar to Thomas (2019)’s hard asymmetric views.

The first (a) is quite antinatalist, because contingent bad lives can’t be made up for with contingent good lives. However, I personally find this intuitive. The second (b) allows this offsetting: as long as contingent people have on aggregate net positive lives, contingent bad lives won't count against an outcome at step 2.

[8]

We'd also want to avoid strict cycles in general, e.g. A1 < A2 < ... < An < A1, or else we could eliminate all options in the first step.

[9]

Or, we could define X ≥ Y, "X is at least as good as Y", to hold when X is at least as good as Y on each axiology, so X > Y would mean X is at least as good as Y on each axiology, and strictly better on at least one.

[10]

Z>A+ follows from anonymous versions of total utilitarianism, average utilitarianism, prioritarianism, egalitarianism, rank-discounted utilitarianism, maximin/leximin, variable value theories and critical-level utilitarianism. Of anonymous, monotonic (Pareto-respecting), transitive, complete and IIA views, it's only really (partially) ~anti-egalitarian views (e.g. increasing marginal returns to additional welfare, maximax/leximax, geometrism, views with positive lexical thresholds), which sometimes ~prioritize the better off more than ~proportionately, that reject Z>A+, as far as I know. That's nearly a consensus in favour of Z>A+, and the dissidents usually have in my view more plausible counterparts that support Z>A+.

In particular, it seems

1. increasing marginal returns to additional welfare is less plausible than decreasing marginal returns (prioritarianism),

2. maximax/leximax is less plausible than maximin/leximin,

3. geometrism is less plausible than rank-discounted utilitarianism (or maybe similarly plausible),

4. views with positive lexical thresholds are less plausible than views without lexical thresholds or with only negative lexical thresholds

[11]

a) Replace the welfare level 101 in A+ with 201, so giving A+ total utility 300 (million) and average 3, and b) replace the welfare level 3 in Z with 4, so giving Z total utility 400 (million) and average 4. Then,

1. Z indirectly beats A (Z>A+ by 400-300=100, A+>A by 201-100=101; take the minimum of the two as 100) more than A directly beats Z (A>Z by 100-4=96).

2. Z directly beats A+ (Z>A+ by 400-300=100) more than A+ indirectly beats Z (A+>A by 201-100=101, and A>Z by 100-4=96; take the minimum of the two as 96).
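The path comparisons in this footnote can be reproduced with the strongest-path (widest-path) computation used in Schulze's method. The sketch below takes only the three direct margins stated above as input and does not implement the rest of Thomas (2019)'s view. The variable names are illustrative.

```python
# Direct pairwise margins from the modified example: (winner, loser) -> margin.
margins = {("A+", "A"): 101, ("Z", "A+"): 100, ("A", "Z"): 96}
options = ["A", "A+", "Z"]

# strength[x][y] is the strength of the strongest path from x to y, where a
# path is only as strong as its weakest link. Start from the direct margins.
strength = {x: {y: margins.get((x, y), 0) for y in options if y != x}
            for x in options}

# Floyd-Warshall-style update: try routing each pair through each intermediate k.
for k in options:
    for i in options:
        for j in options:
            if len({i, j, k}) == 3:
                via_k = min(strength[i][k], strength[k][j])
                strength[i][j] = max(strength[i][j], via_k)

# An option wins if its path to every rival is at least as strong as the reverse path.
winners = [x for x in options
           if all(strength[x][y] >= strength[y][x] for y in options if y != x)]

for x in options:
    print(x, strength[x])
print("beatpath winner(s):", winners)
```

With these margins, the strongest path from Z to A has strength 100 against 96 in the other direction, and Z's direct margin of 100 over A+ exceeds the 96 of the path from A+ back to Z, matching the two comparisons above.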

See also this comment by Stijn arguing that the view can recommend the Very Repugnant Conclusion.

This is an interesting overview, as far as I can tell. Only it is hard to understand for someone who isn't already familiar with population ethics. For example:

It seems clear, e.g. from decision theory, that we can only ever "choose" between actions, never between outcomes. But A, A+, and Z are outcomes (world states), not actions. Apparently the only possible actions in this example would be intentionally moving between world states in time, i.e. "switching".

Then we would have three decision situations with three possible choice options each:

This changes things a lot. For example, it could both be bad to switch from current A to Z (because it would affect the current people [i.e. people in A] negatively) and to switch from current Z to A (because we would have to kill a lot of current people [i.e. people in Z] in order to "switch" to A). In that case it wouldn't make sense to say that A is simply better, worse, or equivalent to Z. What would we even mean by that?

Arguably we can only say a world state X is "better" than a world state Y iff both

This is because "X is better than Y" implies "Y is worse than X", while the goodness of switching between outcomes doesn't necessarily have this property. It can't be represented with >, <, =, it's not an order relation.

FWIW, people with person-affecting views would ask "better for whom?". Each set of people could have their own betterness order. Person-affecting views basically try to navigate these different possible betterness orders.

But it doesn't seem to matter whether X or Y (or neither) is actually current, everyone should be able to agree whether, e.g., "switching from X to Y is bad" is true or not. The switch choices always hypothetically assume that the first world (in this case X) is current, because that's where the potential choice to switch is made.

Between A and Z, the people in A are much better off in A, and the extra people in Z are much better off in Z (they get to exist, with positive lives). It seems like they'd disagree about switching, if everyone only considers the impact on their own welfare.

(Their welfare levels could also be the degree of satisfaction of their impartial or partially other-regarding preferences, but say they have different impartial preferences.)

Switching from A to Z means that A is current when the decision to switch or not switch is made. So the additional people in Z don't exist and are not impacted if the switch isn't made. Even if Z is current, people in Z can still evaluate whether switching from A to Z is good (= would have been good), since this just means "assuming A is current, is it good to switch to Z?". Even if Z is in fact current, the people in Z can still agree that, if A had been current, a switch to Z should not have been made. Intuitions to the contrary seem to mistake "I should not have existed" for "I should not exist". The former can be true while the latter is false.

They can agree, but they need not. Again, if everyone were purely selfish, it seems like they would disagree. The extra people would prefer to exist, given their positive welfare levels. The original people would prefer the extra not to exist, if it's paired with a loss to their own welfare. Or, if we took the perspectives of what's best for each person's personal/selfish welfare on their behalf, we'd have those two groups of perspectives.

And we can probably rig up a version that's other-regarding for the people, say the extra people are total utilitarians, and the original people have person-affecting views.

It makes sense to want to keep existing if you already exist. But believing that it would have been bad, had you never existed in the first place, is a different matter. For whom would it have been bad? Apparently for nobody.

That's a person-affecting intuition.

I can, now that I exist, assign myself welfare level 0 in the counterfactuals in which I was never born. I can also assign welfare level 0 to potential people who don't come to exist.

People talk about being grateful to have been born. One way to make sense of this is that they compare to a counterfactual in which they were never born. Or maybe it's just adding up the good and bad in their life and judging there's more good than bad. But then an "empty life", with no goods or bads, would be net 0, and you could equate that with nonexistence.

On some interpretations of the total view, it can be worse for someone to not be born even if they haven't been conceived yet, and even if they never will be.

Personally, I roughly agree with your intuition here, but it might need to be made into a "wide" version, in light of the nonidentity problem. And my views are also asymmetric.

Thanks for the feedback! I've edited the post with some clarification.

I think standard decision theory (e.g. expected utility theory) is actually often framed as deciding between (or ranking) outcomes, or prospects more generally, not between actions. But actions have consequences, so we just need actions with the above outcomes as consequences. Maybe it's pressing buttons, pulling levers or deciding government policy. Either way, this doesn't seem very important, and I doubt most people will be confused about this point.

On the issue of switching between worlds, for the sake of the thought experiment, assume the current world has 1 million people, the same people common to all three outcomes, but it’s not yet decided whether the world will end up like A, A+ or Z. That's what you're deciding. Choosing between possible futures (or world histories, past, present and future, but ignoring the common past).

I don't intend for you to be able to switch from A+ or Z to A by killing people. A is defined so that the extra people never exist. It's the way things could turn out. Creating extra people and then killing them would be a different future.

We could make one of the three options the "default future", and then we have the option to pick one of the others. If we’re consequentialists, we (probably) shouldn’t care about which future is default.

Or, maybe I add an uncontroversially horrible future, a 4th option, the 1 million people being tortured forever, as the default future. So, this hopefully removes any default bias.

Okay, having an initial start world (call it S), that is assumed to be current, makes it possible to treat the other worlds (futures) as choices. So S has 1 million people, but how many utility points do they have each? Something like 10? Then A and A+ would be an improvement for them, and Z would be worse (for them).

But if we can't switch worlds in the future that does seem like an unrealistic restriction? Future people have just as much control over their future as we have over ours. Not being able to switch worlds in the future (change the future of the future) would mean we couldn't, once we were at A+, switch from A+ to a more "fair" future (like Z). Since not-can implies not-ought, there would then be no basis for calling A+ unfair, insofar as "unfair" means that we ought to switch to a more fair future.

The fairness consideration assumes utility can be redistributed, like money. Otherwise utility would presumably be some inherent property of the brains of people, and it wouldn't be unfair to anyone not to have been born with a different brain (assuming brains can't be altered).

Does it matter to you what the starting welfare levels of the 1 million people are? Would your intuitions about which outcome is best be different?

There are a few different perspectives you could take on the welfare levels in the outcomes. I intended them to be aggregate whole life welfare, including the past, present and future. Not just future welfare, and not welfare per future moment, day or year or whatever. But this difference often doesn't matter.

Z already seems more fair than A+ before you decide which comes about; you're deciding between them ahead of time, not (necessarily just) entering one (whatever that would mean) and then switching.

I think, depending on the details, e.g. certain kinds of value lock-in, say because the extra people will become unreachable, it can be realistic to be unable to switch worlds in the future. Maybe the extra people are sent out into space, and we're deciding how many of the limited resources they'll be sent off with, which will decide welfare levels. But the original million people are better off in A+, because the extra people will eliminate some threat to the current people, or the original people at least have some desire for the extra people to exist, or the extra people will return with some resources.

Or, it could be something like catastrophic climate change, and the extra people are future generations. We can decide not to have children (A), go with business as usual (A+) or make serious sacrifices now (Z) to slightly better their lives.

No matter how the thought experiment is made more concrete, if you take the welfare levels to be aggregate whole lifetime welfare, then it's definitely not possible to switch from Z or A+ to A after the extra people have already come to exist. A describes a world in which they never existed. If you wanted to allow switching later on, then you could allow switching every way except to A.

If you want an option where all the extra people are killed early, that could look like A+, but worse than A+ and A for the original million people, because they had to incur the costs of bringing about all the extra people and then the costs of killing them. It would also be no better than A+ for the extra people (but we could make it worse, or equal for them).

Z seeming more fair than A+ arguably depends on the assumption that utility in A+ ought to (and therefore could) be redistributed to increase fairness. Which contradicts the assumption of "aggregate whole lifetime welfare", as this would mean that switching (and increasing fairness) is ruled out from the start.

For example, the argument in these paragraphs mentions "fairness" and "regret", which only seems to make sense insofar as things could be changed:

"Once the contingent people exist, Z would have been better than A+." -- This arguably means "Switching from A+ to Z is good" which assumes that switching from A+ to Z would be possible.

The quoted argument for A seems correct to me, but the "unfairness" consideration requires that switching is possible. Otherwise one could simply deny that the concept of unfairness is applicable to A+. It would be like saying it's unfair to fish that they can't fly.

Maybe we're using these words differently?

I think it’s not true in general that for X to be more fair wrt utility than Y, it must be the case that we can in practice start from X and redistribute utility to obtain Y.

Suppose in X, you kill someone and take their stuff, and in Y, you don't. Or in X, they would die, but not by your killing, and in Y, you save them, at some personal cost.

Whole lifetime aggregate utilities, (them, you):

X would (normally) be unfair to the other person, even if you can't bring them back to life to get back to Y. Maybe after they die, it isn’t unfair anymore, but we can judge whether it would be unfair ahead of time.

I guess you could also consider "starting from X" to just mean "I'm planning on X coming about", e.g. you're planning to kill the person. And then you "switch" to Y.

X isn't so much bad because it's unfair, but because they don't want to die. After all, fairly killing both people would be even worse.

There are other cases where the situation is clearly unfair. Two people committed the same crime, the first is sentenced to pay $1000, the second is sentenced to death. This is unfair to the people who are about to receive their penalty. Both subjects are still alive, and the outcome could still be changed. But in cases where it is decided whether lives are about to be created, the subjects don't exist yet, and not creating them can't be unfair to them.

Everyone dies, though, and their interests in not dying earlier trade off against others, as well as other interests. And we can treat those interests more or less fairly.

There are also multiple ways of understanding "fairness", not all of which would say killing both is more fair than killing one:

Y is more fair than X under 1, just considering the distribution of welfares. But Y is also more fair according to prioritarianism (3). I can also make it better according to other impartial standards (2), like average lifetime welfare or total lifetime welfare, and with greater priority for bigger losses/gains (4):

What I'm interested in is A+ vs Z, but when A is also an option. If it were just between A+ and Z, then the extra people exist either way, so it's not a matter of creating them or not, but just whether we have a fairer distribution of welfare across the same people in both futures. And in that case, it seems Z is better (and more fair) than A+, unless you are specifically a presentist (not a necessitarian).

When A is an option, there's a question of its relevance for comparing A+ vs Z. Still, maybe your judgement about A+ vs Z is different. Necessitarians would instead say A+>Z. The other person-affecting views I covered in the post still say Z>A+, even with A.

Your argument seems to be:

But that doesn't follow, because in 1 and 2 you did restrict yourself to two options, while there are three options in 3.

The arguments for unfairness of X relative to Y I gave in my previous comment (with the modified welfare levels, X=(3, 6) vs Y=(5,5)) aren't sensitive to the availability of other options: Y is more equal (ignoring other people), Y is better according to some impartial standards, and better if we give greater priority to the worse off or larger gains/losses.

All of these apply also substituting A+ for X and Z for Y, telling us that Z is more fair than A+, regardless of the availability of other options, like A, except for priority for larger gains/losses (each of the 1 million people has more to lose than each of the extra 99 million people, between A+ and Z).
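To make the comparison concrete, here is a minimal sketch of the standards mentioned above, applied to X=(3, 6) vs Y=(5, 5) and to A+ vs Z. The function names, the use of the max-min gap as the equality measure and the square root as the concave prioritarian weighting are all assumptions made for illustration; nothing in the thread fixes these choices.

```python
import math

# Each outcome is a list of (welfare level, number of people) groups.
X = [(3, 1), (6, 1)]
Y = [(5, 1), (5, 1)]
A_PLUS = [(101, 1_000_000), (1, 99_000_000)]
Z = [(3, 100_000_000)]

def total(outcome):
    """Total lifetime welfare."""
    return sum(level * count for level, count in outcome)

def average(outcome):
    """Average lifetime welfare."""
    return total(outcome) / sum(count for _, count in outcome)

def welfare_gap(outcome):
    """Gap between best-off and worst-off (smaller = more equal). Illustrative measure."""
    levels = [level for level, _ in outcome]
    return max(levels) - min(levels)

def prioritarian(outcome, weight=math.sqrt):
    """Sum of concavely transformed welfare; sqrt is an assumed weighting."""
    return sum(weight(level) * count for level, count in outcome)

def favoured_on_all(better, worse):
    """True if `better` wins on equality, total, average and prioritarian value."""
    return (
        welfare_gap(better) < welfare_gap(worse)
        and total(better) > total(worse)
        and average(better) > average(worse)
        and prioritarian(better) > prioritarian(worse)
    )

# The exception: per-person stakes between A+ and Z.
loss_per_original_person = 101 - 3  # what each of the 1 million loses in Z
gain_per_extra_person = 3 - 1       # what each of the 99 million gains in Z

print(favoured_on_all(Y, X), favoured_on_all(Z, A_PLUS))
print(loss_per_original_person, gain_per_extra_person)
```

On these assumed measures, Y beats X and Z beats A+ on every standard except priority for larger individual gains/losses, where each original person's stake exceeds each extra person's.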

Fairness is harder to judge between populations of different sizes (the number of people who will ever exist), and so may often be indeterminate. Different impartial standards, like total, average and critical-level views will disagree about A vs A+ as well as about A vs Z. But A+ and Z have the same population size, so there's much more consensus in favour of Z>A+ (although necessitarianism, presentism and views that especially prioritize more to lose can disagree, finding A+>Z).

It seems the relevant question is whether your original argument for A goes through. I think you pretty much agree that ethics requires persons to be affected, right? Then we have to rule out switching to Z from the start: Z would be actively bad for the initial people in S, and not switching to Z would not be bad for the new people in Z, since they don't exist.

Furthermore, it arguably isn't unfair when people are created (A+) if the alternative (A) would have been not to create them in the first place.[1] So choosing A+ wouldn't be unfair to anyone. A+ would only be unfair if we couldn't rule out Z. And indeed, it seems in most cases we in fact can't rule out Z with any degree of certainty for the future, since we don't have a lot of evidence that "certain kinds of value lock-in" would ensure we stay with A+ for all eternity. So choosing A+ now would mean it is quite likely that we'd have to choose between (continuing) A+ and switching to Z in the future, and switching would be equivalent to fair redistribution, and required by ethics. But this path (S -> A+ -> Z) would be bad for the people in the initial S, and not good for the additional people in A+/Z who at this point do not exist. So we, in S, should choose A.

In other words, if S is current, Z is bad, and A+ is good now (in fact currently a bit better than A), but choosing A+ would quite likely lead us on a path where we are morally forced to switch from A+ to Z in the future. Which would be bad from our current perspective (S). So we should play it safe and choose A now.

[1] Once upon a time there was a group of fleas. They complained about the unfairness of their existence. "We all are so small, while those few dogs enjoy their enormous size! This is exceedingly unfair and therefore highly unethical. Size should have been distributed equally between fleas and dogs." The dog, which they inhabited, heard them talking and replied: "If it weren't for us dogs, you fleas wouldn't exist in the first place. Your existence depended on our existence. We let you live in our fur. The alternative to your tiny nature would not be being larger, but your non-existence. To be small is not less fair than to not be at all."

I largely agree with this, but

2 motivates applying impartial norms first, like fixed population comparisons insensitive to who currently or necessarily exists, to rule out options, and in this case, A+, because it's worse than Z. After that, we pick among the remaining options using person-affecting principles, like necessitarianism, which gives us A over Z. That's Dasgupta's view.

Let's replace A with A' and A+ with A+'. A' has welfare level 4 instead of 100, and A+' has, for the original people, welfare level 200 instead of 101 (for a total of 299 million, against 300 million in Z). According to your argument we should still rule out A+' because it's less fair than Z, even though the original people get 196 points more welfare in A+' than in A'. So we end up with A' and a welfare level of 4. That seems highly incompatible with ethics being about affecting persons.
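The two-step procedure under discussion, and the A'/A+' objection to it, can be sketched roughly as follows. This is a simplified reading, not Dasgupta's own formulation: it assumes step 1 uses total welfare as the impartial standard for same-size populations, and that step 2 maximizes the total welfare of the necessary people. The names `dasgupta_choice`, `necessary` and `extra` are hypothetical.

```python
MILLION = 1_000_000

def total(outcome):
    """Total welfare over all groups; each group is (welfare level, head count)."""
    return sum(level * count for level, count in outcome.values())

def population(outcome):
    return sum(count for _, count in outcome.values())

def necessary_total(outcome):
    level, count = outcome["necessary"]
    return level * count

def dasgupta_choice(options):
    # Step 1 (assumed impartial standard: total welfare): drop any option that is
    # beaten by another available option with the same population size.
    survivors = {
        name: outcome
        for name, outcome in options.items()
        if not any(
            population(other) == population(outcome) and total(other) > total(outcome)
            for other in options.values()
        )
    }
    # Step 2 (necessitarian): among survivors, pick what is best for necessary people.
    return max(survivors, key=lambda name: necessary_total(survivors[name]))

Z = {"necessary": (3, MILLION), "extra": (3, 99 * MILLION)}

original = {
    "A": {"necessary": (100, MILLION)},
    "A+": {"necessary": (101, MILLION), "extra": (1, 99 * MILLION)},
    "Z": Z,
}
primed = {
    "A'": {"necessary": (4, MILLION)},
    "A+'": {"necessary": (200, MILLION), "extra": (1, 99 * MILLION)},
    "Z": Z,
}

print(dasgupta_choice(original))  # A
print(dasgupta_choice(primed))    # A'
```

Under these assumptions the procedure picks A in the original case and A' in the modified one, which is exactly the result the objection finds counterintuitive.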

Dasgupta's view makes ethics about what seems unambiguously best first, and then about affecting persons second. It's still person-affecting, but less so than necessitarianism and presentism.

It could be wrong about what's unambiguously best, though, e.g. we should reject full aggregation, and prioritize larger individual differences in welfare between outcomes, so A+' (and maybe A+) looks better than Z.

Do you think we should be indifferent in the nonidentity problem if we're person-affecting? I.e. between creating a person with a great life and a different person with a marginally good life (and no other options).

For example, we shouldn’t care about the effects of climate change on future generations (maybe after a few generations ahead), because future people's identities will be different if we act differently.

But then also see the last section of the post.

In the non-identity problem we have no alternative which doesn't affect a person, since we don't compare creating a person with not creating them, but creating a person vs creating a different person. Not creating one isn't an option. So we have non-present but necessary persons, or rather: a necessary number of additional persons. Then even person-affecting views should arguably say, if you create one anyway, then a great one is better than a marginally good one.

But in the case of comparing A+ and Z (or variants) the additional people can't be treated as necessary because A is also an option.

Then, I think there are ways to interpret Dasgupta's view as compatible with "ethics being about affecting persons", step by step:

These other views also seem compatible with "ethics being about affecting persons":

Anyway, I feel like we're nitpicking here about what deserves the label "person-affecting" or "being about affecting persons".

I wouldn't agree on the first point, because making Dasgupta's step 1 the "step 1" is, as far as I can tell, not justified by any basic principles. Ruling out Z first seems more plausible, as Z negatively affects the present people, even quite strongly so compared to A and A+. Ruling out A+ is only motivated by an arbitrary-seeming decision to compare just A+ and Z first, merely because they have the same population size (...so what?). The fact that non-existence is not involved here (a comparison to A) is just a result of that decision, not of there really existing just two options.

Alternatively there is the regret argument, that we would "realize", after choosing A+, that we made a mistake, but that intuition seems not based on some strong principle either. (The intuition could also be misleading because we perhaps don't tend to imagine A+ as locked in).

I agree though that the classification "person-affecting" alone probably doesn't capture a lot of potential intricacies of various proposals.

We should separate whether the view is well-motivated from whether it's compatible with "ethics being about affecting persons". It's based only on comparisons between counterparts, never between existence and nonexistence. That seems compatible with "ethics being about affecting persons".

We should also separate plausibility from whether it would follow on stricter interpretations of "ethics being about affecting persons". An even stricter interpretation would also tell us to give less weight to or ignore nonidentity differences using essentially the same arguments you make for A+ over Z, so I think your arguments prove too much. For example,

You said "Ruling out Z first seems more plausible, as Z negatively affects the present people, even quite strongly so compared to A and A+." The same argument would support 1 over 2.

Then you said "Ruling out A+ is only motivated by an arbitrary-seeming decision to compare just A+ and Z first, merely because they have the same population size (...so what?)." Similarly, I could say "Picking 2 is only motivated by an arbitrary decision to compare contingent people, merely because there's a minimum number of contingent people across outcomes (... so what?)"

So, similar arguments support narrow person-affecting views over wide ones.

I think ignoring irrelevant alternatives has some independent appeal. Dasgupta's view does that at step 1, but not at step 2. So, it doesn't always ignore them, but it ignores them more than necessitarianism does.

I can further motivate Dasgupta's view, or something similar:

If you were going to defend utilitarian necessitarianism, i.e. maximize the total utility of necessary people, you'd need to justify the utilitarian bit. But the most plausible justifications for the utilitarian bit would end up being justifications for Z>A+, unless you restrict them apparently arbitrarily. So then, you ask: am I a necessitarian first, or a utilitarian first? If you're utilitarian first, you end up with something like Dasgupta's view. If you're a necessitarian first, then you end up with utilitarian necessitarianism.

Similarly if you substitute a different wide, anonymous, monotonic, non-anti-egalitarian view for the utilitarian bit.

Granted, but this example presents just a binary choice, with none of the added complexity of choosing between three options, so we can't infer much from it.

Well, there is a necessary number of "contingent people", which seems similar to having necessary (identical) people, since in both cases not creating anyone is not an option. That is unlike Huemer's three-option case, where A is an option.

I think there is a quite straightforward argument why IIA is false. The paradox arises because we seem to have a cycle of binary comparisons: A+ is better than A, Z is better than A+, A is better than Z. The issue here seems to be that this assumes we can just break down a three option comparison into three binary comparisons. Which is arguably false, since it can lead to cycles. And when we want to avoid cycles while keeping binary comparisons, we have to assume we do some of the binary choices "first" and thereby rule out one of the remaining ones, removing the cycle. So we need either a principled way of deciding on the "evaluation order" of the binary comparisons, or reject the assumption that "x compared to y" is necessarily the same as "x compared to y, given z". If the latter removes the cycle, that is.

Another case where IIA leads to an absurd result is preference aggregation. Assume three equally sized groups (1, 2, 3) have these individual preferences:

The obvious and obviously only correct aggregation would be x∼y∼z, i.e. indifference between the three options. Which is different from what would happen if you took out any one of the three options and made it a binary choice, since each binary choice has a majority. So the "irrelevant" alternatives are not actually irrelevant, since they can determine a choice-relevant global property like a cycle. So IIA is false, since it would lead to a cycle. This seems not unlike the cycle we get in the repugnant conclusion paradox, although there the solution is arguably not that all three options are equally good.
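As a rough illustration of the majority cycle described here, the sketch below uses the standard Condorcet profile. The specific rankings are an assumption, since the comment's own preference list is not shown; any rotation of the same three rankings gives the same kind of cycle.

```python
from itertools import combinations

# Assumed preference profile (best to worst) for three equally sized groups.
rankings = {
    1: ["x", "y", "z"],
    2: ["y", "z", "x"],
    3: ["z", "x", "y"],
}

def majority_winner(a, b, rankings):
    """Return whichever of a, b is ranked higher by more groups (None on a tie)."""
    votes_a = sum(r.index(a) < r.index(b) for r in rankings.values())
    votes_b = len(rankings) - votes_a
    if votes_a == votes_b:
        return None
    return a if votes_a > votes_b else b

# Every binary choice has a clear majority winner...
pairwise = {
    (a, b): majority_winner(a, b, rankings) for a, b in combinations("xyz", 2)
}
print(pairwise)

# ...but together the winners form a cycle: x beats y, y beats z, z beats x.
is_cycle = (
    pairwise[("x", "y")] == "x"
    and pairwise[("y", "z")] == "y"
    and pairwise[("x", "z")] == "z"
)
print(is_cycle)
```

Each option wins one pairwise contest and loses another, so no option is a majority winner over both alternatives once all three are on the table.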

I don't see why this would be better than doing other comparisons first. As I said, this is the strategy of solving three choices with binary comparisons, but in a particular order, so that we end up with two total comparisons instead of three, since we rule out one option early. The question is why doing this or that binary comparison first, rather than another one, would be better. If we insist on comparing A and Z first, we would obviously rule out Z first, so we end up only comparing A and A+, while the comparison A+ and Z is never made.
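The order-dependence at issue can be sketched as below, taking the three binary judgements from the thread as given (A+ over A, Z over A+, A over Z). The `choose` function is a hypothetical illustration of "compare one pair first, rule out the loser, then compare the survivor with the remaining option"; it is not a procedure anyone in the thread endorses as such.

```python
# Binary judgements as stated in the thread: (winner, loser).
BEATS = {("A+", "A"), ("Z", "A+"), ("A", "Z")}

def better(a, b):
    """Winner of a binary comparison; assumes every pair is covered by BEATS."""
    return a if (a, b) in BEATS else b

def choose(first_pair, options=("A", "A+", "Z")):
    """Compare first_pair, discard the loser, then compare with the third option."""
    survivor = better(*first_pair)
    (remaining,) = [o for o in options if o not in first_pair]
    return better(survivor, remaining)

for first_pair in [("A+", "Z"), ("A", "Z"), ("A", "A+")]:
    print(first_pair, "->", choose(first_pair))
```

Comparing A+ and Z first ends at A, comparing A and Z first ends at A+, and comparing A and A+ first ends at Z, so the final choice is fixed entirely by which comparison is made first.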

I can add any number of other options, as long as they respect the premises of your argument and are "unfair" to the necessary number of contingent people. What specific added complexity matters here and why?

I think you'd want to adjust your argument, replacing "present" with something like "the minimum number of contingent people" (and decide how to match counterparts if there are different numbers of contingent people). But this is moving to a less strict interpretation of "ethics being about affecting persons". And then I could make your original complaint here against Dasgupta's approach against the less strict wide interpretation.

But it's not the same, and we can argue against it on a stricter interpretation. The difference seems significant, too: no specific contingent person is or would be made worse off. They'd have no grounds for complaint. If you can't tell me for whom the outcome is worse, why should I care? (And then I can just deny each reason you give as not in line with my intuitions, e.g. "... so what?")

Stepping back, I'm not saying that wide views are wrong. I'm sympathetic to them. I also have some sympathy for (asymmetric) narrow views for roughly the reasons I just gave. My point is that your argument or the way you argued could prove too much if taken to be a very strong argument. You criticize Dasgupta's view from a stricter interpretation, but we can also criticize wide views from a stricter interpretation.

I could also criticize presentism, necessitarianism and wide necessitarianism for being insensitive to the differences between A+ and Z for persons affected. The choice between A, A+ and Z is not just a choice between A and A+ or between A and Z. Between A+ and Z, the "extra" persons exist in both and are affected, even if A is available.

I think these are okay arguments, but IIA still has independent appeal, and here you need a specific argument for why Z vs A+ depends on the availability of A. If the argument is that we should do what's best for necessary people (or necessary people + necessary number of contingents and resolving how to match counterparts), where the latter is defined relative to the set of available options, including "irrelevant options", then you're close to assuming IIA is false, rather than arguing that it is. Why should we define that relative to the option set?

And there are also other resolutions compatible with IIA. We can revise our intuitions about some of the binary choices, possibly to incomparability, which is what Dasgupta's view does in the first step.

Or we can just accept cycles.[1]

It is constrained by "more objective" impartial facts. Going straight for necessitarianism first seems too partial, and unfair in other ways (prioritarian, egalitarian, most plausible impartial standards). If you totally ignore the differences in welfare for the extra people between A+ and Z (not just outweighed, but taken to be irrelevant) when A is available, it seems you're being infinitely partial to the necessary people.[2] Impartiality is somewhat more important to me than my person-affecting intuitions here.

I'm not saying this is a decisive argument or that there is any, but it's one that appeals to my intuitions. If your person-affecting intuitions are more important or you don't find necessitarianism or whatever objectionably partial, then you could be more inclined to compare another way.

[1] We'd still have to make choices in practice, though, and a systematic procedure would violate a choice-based version of IIA (whichever we choose in the 3-option case of A, A+, Z would not be chosen in a binary choice with one of the available options).

[2] Or rejecting full aggregation, or aggregating in different ways, but we can consider other thought experiments for those possibilities.

Executive summary: The post explores the implications of person-affecting views and other moral theories on the repugnant conclusion and the replacement of humans with artificial minds, ultimately favoring a modified version of Dasgupta's view that avoids some counterintuitive conclusions.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.