TLDR

We hosted a session at EAGxRotterdam during which 60 participants discussed potential reasons why there are fewer women in EA and how this could be improved. The main categories of solutions people came up with were (1) adjusting outreach strategies, (2) putting women in more visible positions, (3) making EA’s atmosphere more female-friendly, (4) pursuing strategies to empower women in EA, and (5) adjusting certain attributes of EA thought. The goal of this post is to facilitate a solution-oriented discussion within the EA community so we can make tangible progress on its currently skewed gender ratio and underlying problems.

Some notes before we start:

• Whether gender diversity is something to strive for is beyond this discussion. We will simply assume that it is and go from there. You could for example check out these posts (1, 2, 3) for a discussion on (gender) diversity if you want to read about this or discuss it.

• To keep the scope of this post and the session we hosted manageable, we focused on women specifically. However, we do not claim gender is binary and acknowledge that to increase diversity there are many more groups to focus on than just women (such as POC or other minorities).

• The views we describe in this post don’t necessarily correspond with our (Veerle Bakker's & Alexandra Bos') own but rather we are describing others’ input.

• Please view this post as crowdsourcing hypotheses from community members as a starting point for further discussion rather than as presenting The Hard Truth. You can also view posts such as these (1, 2, 3) for additional views on EA’s gender gap.

EA & Its Gender Gap

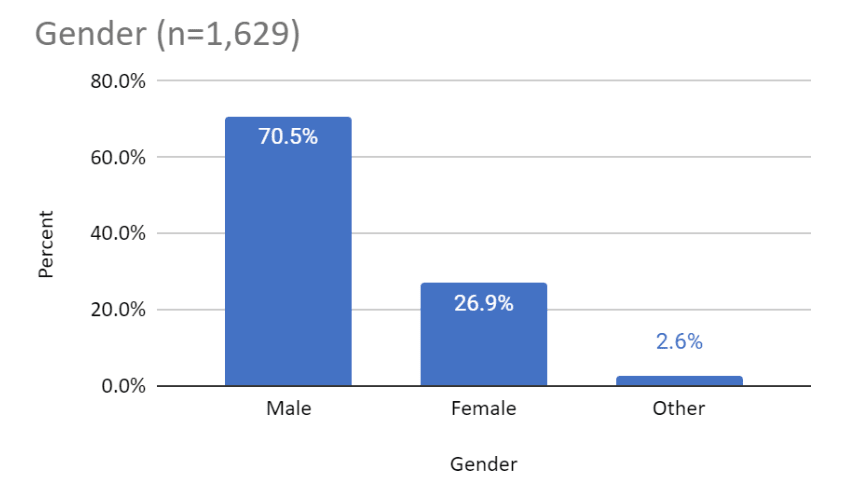

It is no secret that more men than women are involved with the EA community currently. In the last EA survey (2020), only 26.9% of respondents identified as female. This is similar to the 2019 survey.

The goal of this post is to get a solution-oriented discussion started within the wider EA community to take steps towards tangible change. We aim to do this by sharing the insights from a discussion session at EAGxRotterdam in November 2022 titled "Discussion: how to engage more women with EA". In this post, we will be going through the different problems the EAGx’ers suspected may underlie the gender gap. Each problem will be paired with the potential solutions they proposed.

Methodology

This post summarises and categorises the insights from group discussions from a workshop at EAGxRotterdam. Around 60 people attended this session, approximately 40 of whom were women. In groups of 5, participants brainstormed on both 1) what may be the reasons for the relatively low number of women in EA (focusing on the causes, 15 mins), and 2) in what ways the EA community could attract more women to balance this out (focusing on solutions, 15 mins). The discussions were based on these prompts. We asked the groups to take notes on paper during their discussions so that this could be turned into this forum post. We informed them of this in advance. If you want to take a deep dive and look at the source materials, you are welcome to take a look at the participants’ written discussion notes.

Limitations

This project has some considerable limitations. First of all, the groups’ ideas are based on short brainstorming sessions, so they are not substantiated with research or confirmed in other ways. It is also worth mentioning that not all attendees had a lot of experience with the EA community - some only knew about EA for a couple of weeks or months. Furthermore, a considerable amount of information may have gotten lost in translation because it was transferred through hasty discussion notes. Additionally, the information was then filtered through our lenses before reaching you in this post which, again, may skew it. We also likely influenced participants’ responses through the prompts that we gave them. It is also worth mentioning that in this post it is not visible which views were held more widely or were less common amongst participants, though we give some vague indicators such as 'some participants mentioned' sometimes.

Results

EA outreach strategies

One of the participants' main suspects for EA's relatively low number of women was EA community building outreach practices. They suspected the founder effect could be a potential cause for the skewed gender ratio (i.e. because there are already more men in a certain local group, they tend to bring in more men resulting in a ratio that is even more skewed than before). Participants also considered that this effect might be worsened by the study background of members of local groups (especially university groups). They reasoned that many people in EA have a STEM background and STEM is known to have an overrepresentation of men compared to women. So, reaching out to students with STEM backgrounds might contribute to an unbalanced gender ratio.

The participants brainstormed a couple of ideas of how to improve on outreach to involve more women:

- Have more diversity in outreach officers.

- Engaging more women as community builders.

- Outreach in groups with higher ratios of women.

- Learning from other fields with similar issues (don’t reinvent the wheel)

- For example, STEM programmes at university might have strategies for how to increase the percentage of female students compared to male students.

- Find best practices from other conferences with more women as speakers.

Women in visible positions

The attendees noted that there seem to be fewer female keynote speakers at EAG(x) conferences, which they linked to a lack of women in visible places in EA. Some solutions participants proposed were:

- Prioritising getting more women in leadership positions.

- Emphasising more female role models.

- Making an effort to mention women-led initiatives when talking to people.

- Writing a forum post highlighting some projects that women are working on within EA.

- Having more women in prominent and visible places, such as on the websites of EA organisations.

- Inviting more female keynote speakers at EAG(x) events.

Creating a Female-Friendly Atmosphere

Participants discussed the presence or absence of a female-friendly atmosphere in EA. They felt that, sometimes, women are not taken seriously (enough). Additionally, participants perceived men to be dominating discussions and hypothesised that men might feel a lower bar to speak than women do. Another factor brought up by participants is flirting and/or asking women out in inappropriate contexts. Some attendees in the workshop also said it can be intimidating to join a group of men, which may deter them from engaging. Moreover, someone described a sense of a ‘best epistemics competition’, which can be experienced as off-putting.

Possible solutions they proposed were:

- Publishing rules or a code of conduct to prevent sexual harassment. Some participants suggested the rule that men would not be able to ask women out but that women would be able to ask men out.

- Raising awareness about the bad experiences of women such as being hit on in inappropriate (professional) settings.

- Making clear that everyone is welcome at EA events, no matter their gender, ethnicity, class, physical capabilities etc. and to explicitly encourage women to apply for conferences, vacancies and the like to reduce self-doubt.

- Hosting workshops on the unequal gender ratio and how certain behaviours might contribute to this.

- Training facilitators to be aware of the unequal gender ratio and deal with this in appropriate ways (sensitivity training).

- Welcoming and onboarding people in a smooth, friendly way to make them feel included, especially if the rest of the group consists of mostly men but they are a woman.

- Setting up or improving feedback loops related to unwanted behaviour. So, in the case that something occurs, e.g. comments that make someone feel uncomfortable, the person who made the comment knows how that made the other feel and can learn from the situation.

Empowering women in EA

The attendees discussed a few potential actions to engage women with EA more strongly that are related to ‘empowerment’:

- Coaching/mentoring women. (Tip for women and people from other underrepresented groups: Magnify Mentoring)

- As local group organisers, setting the tone or offering resources specifically encouraging women to take more space in group settings and be more unapologetic.

- Women in EA forming small groups which get together to support and empower one another (sharing experiences, network, tools, tips, encouraging one another to apply for things, etc.) inspired by the book ‘Feminist Fight Club’ by Jessica Bennett.

Adjusting attributes of EA thought

There were a few topics attendees discussed that we categorised as ‘attributes of EA thought’. One of these topics is how EA does not focus specifically on gender inequality issues in its thinking (e.g. ‘the patriarchy’ is not a problem recommended to work on by the EA community). Another topic is the idea of one of the groups that women connect more to ethics of care (see footnote for definition).[1] They suspected that this type of ethics is not emphasised in EA and that this could be one of the reasons why EA is less popular among women compared to men. A third topic some groups mentioned was that the ‘high stakes’ in EA (lives depend on your actions) might lead to more self-doubt in women. Additionally, a group of participants discussed that it might be less clear how women-dominated fields (e.g. social sciences) can be useful in EA compared to a background in STEM.

Solutions that were mentioned in this theme were:

- To be more open to scholars of feminism, queer studies and gender studies.

- To be more open to EA cause areas ‘apart from AI’ and also target the topics that are generally more women-dominated.

- Giving more attention to issues such as the gender income gap.

Let’s discuss!

We would encourage you to use these prompts to start a conversation in the comments about how you think we could bridge the gender gap in EA.

- How could EA outreach be adjusted to attract more women?

- What EA cases/groups/events have you heard about or been a part of that had relatively more women? Why do you think this was the case? How could this be recreated elsewhere?

- What barriers have you experienced/heard of for women to join the EA community? How could these be lowered/taken away?

- What aspects of the EA community may make it less attractive to some women to stay? How could these aspects be improved upon?

- Do you think something about the EA philosophy or the way it is presented may discourage some women from engaging with it further? What exactly? (How) could this be adjusted?

- Bonus question: what could be concrete steps towards realising the proposed solutions in this post, and/or towards realising the solutions you would propose?

Discussion guidelines: We know that themes discussed in the post can be sensitive, so we would encourage everyone in the comments to be open to one another’s ideas, try to understand each others’ points of view and to give one another the benefit of the doubt. We would also encourage taking a constructive and solution-oriented approach.

Call to action

If you have any actionable ideas for projects or changes you can get moving to improve EA’s gender ratio, we encourage you to take the step to make it happen! This can range from inviting a woman to join you to an EA event, to adjusting your own behaviour or encouraging others to do so, all the way to setting up a new project or organisation.

Let’s work on solving this, (aspiring) change-makers!

Many thanks to Catherine Low for helping with shaping the discussion session, to all of the participants for sharing their views, and to Amarins Veringa & Stan van Wingerden for feedback on earlier versions of this post.

[1] Ethics of care, according to Wikipedia, is “a normative ethical theory that holds that moral action centers on interpersonal relationships and care or benevolence as a virtue. EoC is one of a cluster of normative ethical theories that were developed by some feminists and environmentalists since the 1980s.” https://en.wikipedia.org/wiki/Ethics_of_care

Outreach in groups with higher ratios of women: this is the one thing that comes to my mind every time I think about this. As long as EA is downstream of a few male-dominated disciplines/fields, it seems virtually impossible for the gender ratio to change.

Here's a diagram I made to explain my thinking. The width of the arrow represents how many people are coming from each field, and the colored surface in each figure represents the gender ratio in each field:

If correct, this picture shows that you'd need to do something against this very strong society-wide selection pressure. Short of changing the gender ratios of the disciplines that EA is downstream of (society already spending significant amounts of resources on this), you would need to change which fields EA is getting people from. So here the outreach intervention you propose is equivalent to increasing the width of the arrows that represent female-dominated fields.

I disagree, because when I looked into gender dynamics in EA London, recruitment was not the issue. EA London 2016-2018 had as many women as men attending events as first-time attendees, but the women were much less likely to keep attending after their first, second or third event.

https://forum.effectivealtruism.org/posts/2RfQT7cybfS8zoy43/are-men-more-likely-to-attend-ea-london-events-attendance

It would be helpful to know the likelihood of continued attendance broken down by combinations of gender and academic field. It's plausible to me that the breakdown of academic fields between "women coming to their first EA event" and "men coming to their first EA event" could be significantly different, and that this difference could explain much of the difference in continued attendance. It's also plausible that, after controlling for academic field, women are significantly less likely to continue attending than men (e.g., women with a STEM background are less likely to continue attending than men with a STEM background, etc.)

My hunch is that both of these dynamics play a part in explaining lower levels of continued attendance by women. My confidence in the relative magnitude of each effect is pretty low.

True, not only outreach but also sufficient engagement/retention is needed in order to reliably increase the ratio.

I would be interested in more data about the professional backgrounds of people in EA, to see whether the gender ratios differ from those of the wider (non-EA) professions. It seems plausible to me that the framing of EA (heavily data-centric, emotionally detached) could appeal more to men, whereas the focus on social impact and doing good might attract more women.

I think this is conflating the preferences of women in general with those of women from a particular class and cultural milieu[1]: highly educated urban professional-managerial class liberals[2] in the developed world.

Broad-spectrum appeals to (what are perceived as) their tastes and interests (as opposed to measures targeted at the interests of women qua women, like attempts to reduce sexual harassment):

This is not necessarily a bad choice. As something of a "liberal elite" myself, while I think the dominance of highly educated liberals within EA is both annoying and an epistemic weakness, the unfortunate fact of the matter is that EA is not a mass movement or likely to become one. Accepting a certain degree of parochialism in exchange for money and influence is probably worth it, and the PMC is probably the least-misaligned of all the patrons available.

But it is a dangerous choice, and not one that can be easily reversed. We shouldn't sleepwalk into it.

It used to be fashionable to worry about EA spiraling into quasi-religious extremism. Personally, I think a descent into characterless opportunism is far, far more likely. We'll take one more tiny step towards alignment with our chosen power base, cross some critical threshold from "involvement in EA looks good in contexts X Y and Z" to "involvement in EA is a good general-purpose elite resume builder", and that'll be that.

Or more precisely: the socially-normative views of that class and culture, which are often but not always the actual views of the majority.

AKA "coastal elites", "stuff white people like", "high cultural capital", "the brahmin left" - there are no non-controversial labels for them (ok, us) but I know you know who I'm talking about.

I definitely agree with your point that EA should avoid becoming an elite haven, and should be checking to ensure that it is not needlessly exclusionary.

However, I'm not sure that your equation of feminism with the professional managerial class actually holds up. According to this poll, 61% of American women identify with feminism "very well" or "somewhat well", including 54% of women without college degrees and 42% of women who lean Republican. This is very far from being a solely elite thing!

If you want to be welcoming to women, you have to be welcoming to feminists. That doesn't mean cancelling or excluding people over terminology disputes or minor opinions. It means listening to people, and treating their viewpoints as valid and acceptable.

There might be differences between identifying with feminism and 'being open to scholars of feminism, queer studies and gender studies' though. Most Americans probably aren't familiar enough with academia to know of its latest thinking.

And like how different people have different notions of what counts as discriminatory, racist, sexist, or not discriminatory, racist, sexist, it's possible that different people have different notions of what 'feminism' means. (Some might consider it a position supporting equal rights between the sexes - others a position supporting women's rights. They might be thinking of the second, third, or fourth wave etc.)

The supplementary document containing the survey questions suggests the question asked was "How well, if at all, do each of the following describe you?" followed by "Environmentalist", "Feminist" and "A supporter of gun rights" (in random order), which doesn't seem to specify one specific notion of 'feminist' for survey participants to consider.

Although, to be fair, maybe there's actually more agreement among Americans on the definition of feminist (in the year of the survey, 2020) than I'm expecting.

In any case, I expect the differences in preferences of elite Anglosphere/U.S. women, and not-necessarily-elite, non-Anglosphere/non-U.S. women in general (e.g., in Europe, Asia, South America) would still be quite large.

We are either open to feminist scholarship or we are not. Do you think that if EA openly declared itself hostile to scholars of feminism, that most self described feminists would not be annoyed or alienated, at least a little bit? This seems rather unlikely.

There's a similarly large gap between scholars of conservatism and the average conservative. If EA declared that conservative scholars were not welcome, do you think the average conservative would be fine with it?

"We are either open to feminist scholarship or we are not."

This doesn't make sense to me. It seems to me that a group could be openly hostile, politely unwelcoming, welcoming in name but not do anything based on the scholarship, or make it a priority, to name a few!

So in my comment I was only trying to say that the comment you responded to seemed to point to something true about the preferences of women in general vs. the preferences of women who are "highly educated urban professional-managerial class liberals in the developed world".

Such perspectives seem easy to miss for people (in general/of all genders, not just women) belonging to the elite U.S./U.S.-adjacent progressive class - a class that has disproportionate influence over other cultures, societies etc., which makes it seem worthwhile to discuss in spaces where many belong to this class.

About your other point, I guess I don't have much of an opinion on it (yet), but my initial impression is that it seems like openness comes in degrees. Compared to other movements, I also rarely observe 'EA' openly declaring itself hostile to something (e.g. "fraud is unacceptable" but there aren't really statements on socialism, conservatism, religions, culture...).

There's feminism and then there's feminism (and also feminism, feminism, and feminism). Even within the academy you have radfems (e.g. Julie Bindel) and queer theorists (e.g. Julia Serano) and liberals (e.g. Martha Nussbaum) at each other's throats - and I very much doubt that the average self-identified feminist has any of them in mind (as opposed to, say, maternal leave and abortion access) when they claim that label.

I'm seeing an argument against dogmatically enforcing particular sub-branches of feminism, but that is not at all what the OP has suggested. Being open to feminism means being open to a variety of opinions within feminism.

What stance do you actually want EA to take when it comes to this issue? Do you want to shun feminist scholars, or declare their opinions to be unworthy of serious thought?

I don't think these are sub-branches of any single coherent thing, any more than contemporary left-of-center politics is just hashing things out between factions of the Jacobin club. Feminism is at most a loose intellectual tradition, not an ideology.

The actual cluster-structure here is something like

Engagement with radical feminism would be both actively harmful and, given their well-deserved reputation for rabid transphobia, a serious reputational risk. They should be completely ignored.

EA is already quite engaged with mainstream liberalism. This is basically fine, but the fact that it's often not acknowledged as an ideological stance is very bad: many EAs have a missing gear called "other worldviews make perfect sense on their own terms and are not deformed versions of your own". No change in attitude vis a vis liberal feminism is needed, just go take some old books seriously for a while or something.

The New Left has a bit to offer on the "big ideas" front, but it has terrible epistemics, worse praxis, and is also weird and alienating to a significant fraction of the population. I think it would be a serious mistake for EA organizations to promote their frameworks. Engagement with people who already buy it seems unlikely to matter much either way - it's an almost completely incommensurable worldview.

Any good-faith engagement whatsoever with left-of-liberal politics would be nice, but I'm not holding my breath.

Regarding missing gears and old books, I have recently been thinking that many EAs (myself included) have a lot of philosophical / cultural blind spots regarding various things (one example might be postmodernist philosophy). It's really easy to develop a kind of confidence, with narratives like "I have already thought about philosophy a lot" (when it has been mostly engagement with other EAs and discussions facilitated on EA terms) or "I read a lot of philosophy" (when it's mostly EA books and EA-aligned / utilitarian / longtermist papers and books).

I don't really know what the solutions for this are. On a personal level I think perhaps I need to read more old books or participate in reading circles where non-EA books are read.

I don't really have the understanding of liberalism to agree or disagree with EA being engaged with mainstream liberalism, but I would agree that EA as a movement has a pretty hefty "pro-status quo" bias in its thinking, and quite often especially in its actions. (There is an interesting contradiction here in EA views often being pretty anti-mainstream though, like thought on AI x-risks, longtermism and wild animal welfare.)

I would suggest, as a perhaps easier on-ramp, reading writing by analytic philosophers defending the views of people from other traditions or eras. A few examples:

I am generally skeptical of people attempting to preempt criticism on a controversial topic by declaring it out of scope for the discussion. While I understand the desire to make progress on an issue, establishing that the issue is a correct one is important! You attempt to pass off this evidential burden to the linked posts, but it's worth noting that at least the first two were generally regarded as quite weak at the time they were written (I haven't seen any significant discussion of the third and comments are not enabled).

The first, 'Why & How to Make Progress on Diversity & Inclusion in EA' (41 upvotes, 231 comments) attempted to lay out the scientific evidence for why gender ratio balance was important, but did so in a really quite disappointing way. Evidence was quoted selectively, studies were mis-described, other results failed to replicate and the major objections to the thesis (e.g. publication bias in the literature) were not considered. Overall the post was sufficiently weak that I think this comment was a fair summary: 'I am disinclined to be sympathetic when someone's problem is that they posted so many bad arguments all at once that they're finding it hard to respond to all the objections.'

The second, 'In diversity lies epistemic strength' (10 upvotes, 25 comments), argues that we should try to make the community more demographically diverse because then we'd benefit from a wider range of perspectives. But this instrumental argument relies on a key premise, that "we cannot measure the diversity of perspectives of a person directly, [so] our best proxy for it is demographic diversity", which seems clearly false: there are many better proxies, like just asking people what their perspectives are and comparing to the people you have already.

I'm open to gender diversity promotion actually being a worthwhile project and cause area. But I think we should reject an argumentative strategy of relying on posts that received a lot of justified criticism in an attempt to avoid evidential burden.

In the case of this post I actually think the issue is even clearer. Some of your proposals, like adopting "the patriarchy" as a cause area, or rejecting impartiality in favour of an "ethics of care", are major and controversial changes. EA has been, up until now, dedicated to evaluating causes purely based on their cost-effectiveness on impartial grounds, and not based on how they would influence the PR or outreach for the EA movement. We have conspicuously not adopted cause areas like abortion as a focus, even though doing so might help attract extremely under-represented groups (e.g. religious people, conservatives), because people do not think it is a highly cost-effective cause area, and I think this is the right decision. Suggesting we should adopt a cause that we would not otherwise have chosen - that PR/outreach benefits should be considered in the cause area evaluation process, comparable to scope/neglectedness/tractability - requires a lot of justification. And yet the issues that we have to discuss to provide that justification (e.g. how large are the benefits of gender balance, how large are the costs, and how cost-effective are the interventions?) fall squarely within the topics you have declared verboten.

I think it's permissible/reasonable/preferable to have forum posts or discussion threads of the rough form "Conditional upon X being true, what are the best next steps?" I think it is understandable for such posters to not wish to debate whether X is true in the comments of the post itself, especially if it's either an old debate or otherwise tiresome.

For example, we might want to have posts on:

I mostly agree with this, and don't even think X in the bracketing "conditional upon X being true" has to be likely at all. However, I think this type of question can become problematic if the bracketing is interpreted in a way that inappropriately protects proposed ideas from criticism. I'm finding it difficult to put my finger precisely on when that happens, but here is a first stab at it:

"Conditional upon AI timelines being short, what are the best next steps?" does not inappropriately protect anything. There is a lively discussion of AI timelines in many other threads. Moreover, every post impliedly contains as its first sentence something like "If AI timelines are not short, what follows likely doesn't make any sense." There are also potential general criticisms like "we should be working on bednets instead" . . . but these are pretty obvious and touting the benefits of bednets really belongs in a thread about bednets instead.

What we have here -- "Conditional upon more gender diversity in EA being a good thing, what are the best next steps?" is perfectly fine as far as it goes. However, unlike the AI timelines hypo, shutting out criticisms which are based on questioning the extent to which gender diversity would be beneficial risks inappropriately protecting proposed ideas from evaluation and criticism. I think that is roughly in the neighborhood of the point @Larks was trying to make in the last paragraph of the comment above.

The reason is that the ideas that might be proposed in response to this prompt are likely to have both specific benefits and specific costs/risks/objections. Where specific costs/risks/objections are involved -- as opposed to general ones like "this doesn't make sense because AGI is 100+ years away" or "we'd be better off focusing on bednets" -- then bracketing has the potential to be more problematic. People should be able to perform a cost/benefit analysis, and here that requires (to some extent) evaluating how beneficial having more gender diversity in EA would be. And there's not a range of threads evaluating the benefits and costs of (e.g.) adding combating the patriarchy as an EA focus area, so banishing those evaluations from this thread poses a higher risk of suppressing them.

Thank you, this explanation makes a lot of sense to me.

Fwiw, I think your examples are all based on less controversial conditionals, though, which makes them less informative here. And I also think the topics that are conditioned on in your examples already received sufficient analyses that make me less worried about people making things worse* as they will be aware of more relevant considerations, in contrast to the treatment in the background discussions that Larks discussed.

*(except the timelines example, which still feels slightly different though as everything seems fairly uncertain about AI strategy)

Hmm good point that my examples are maybe too uncontroversial, so it's somewhat biased and not a fair comparison. Still, maybe I don't really understand what counts as controversial, but at the very least, it's easy to come up with examples of conditionals that many people (and many EAs) likely place <50% credence on, but are still useful to have on the forum:

But perhaps "many people (and many EAs) likely place <50% credence on" is not a good operationalization of "controversial." In that case maybe it'd be helpful to operationalize what we mean by that word.

I think the relevant consideration here isn't whether a post is (implicitly or not) assuming controversial premises, it's the degree to which it's (implicitly or not) recommending controversial courses of action.

There's a big difference between a longtermist analysis of the importance of nuclear nonproliferation and a longtermist analysis of airstrikes on foreign data centers, for instance.

Hi Larks, thank you for taking the time to articulate your concerns! I will respond to a few below:

Concern 1: passing off evidential burden

• I agree it would be preferable if we had made a solid case for why gender diversity is important in this post.

-> To explain this choice: we did not feel like we could do this topic justice in the limited time we had available for this so decided to prioritize sharing the information in this post instead. Another reason for focusing on the content of the post above is that we had a somewhat rare opportunity to get this many people's input on the topic all at once - which I would say gave us some comparative advantage for writing about this rather than writing about why/whether gender diversity is important.

• As you specifically mention that you think "relying on posts that received a lot of justified criticism" is a bad idea, do you have suggestions for different posts that you found better?

Concern 2: "Some of your proposals, like adopting "the patriarchy" as a cause area, or rejecting impartiality in favour of an "ethics of care", are major and controversial changes"

• Something I'd like to point out here: these are not our proposals. As we mention in the post, 'The views we describe in this post don’t necessarily correspond with our (Veerle Bakker's & Alexandra Bos') own but rather we are describing others’ input.' For more details on this process, I'd recommend taking a look at the Methodology & Limitations if you haven't already.

-> Overall, I think the reasons you mention for not taking on the proposals under 'Adjusting attributes of EA thought' are very fair and I probably agree with you on them.

• A second point regarding your concern: I think you are conflating the underlying reasons participants suspected are behind the gender gap with the solutions they propose.

However, saying 'X might be the cause of problem Y' is not the same as saying 'we should do the opposite of X so that problem Y is solved'.

Therefore, I don't feel that, for instance, your claim that a proposal in this post was to adopt "the patriarchy" as a cause area fairly represents the written content. What we wrote is that "One of these topics is how EA does not focus specifically on gender inequality issues in its thinking (e.g. ‘the patriarchy’ is not a problem recommended to work on by the EA community)." This is a description of a concern some of the participants raised, not a solution they proposed. The same goes for your interpretation that the proposal is "rejecting impartiality in favour of an ethics of care".

Larks, I think you're conflating 2 different things:

1) discussing whether something closer to gender parity (I think that's a more precise word than 'diversity' in this context) is desirable at all,

versus

2) discussing whether some particular step to promote it is worth the cost.

It's only the EDIT: first (originally wrote second, sorry) that the post says it's not focused on.

This is quite important because finding 'closer to gender parity is better, all things being equal' "controversial" is quite different from finding the claim that some specific level of prioritization of gender parity or some particular argument for why it is good is controversial. It's hard to tell which you are saying is "controversial" and it really affects how strongly anti-feminist what you are saying is.

Though in fairness, it is certainly hard to discuss whether any individual proposal is worth (alleged) costs, without getting into how much value to place on gender parity relative to other things.

Re: outreach to more women

In 2016-2018, EA London had as many women as men attending events as first-time attendees, but the women were much less likely to keep attending after their first, second or third event.

I'd strongly encourage people to try to replicate this with their own local groups. Are there fewer women because fewer women show interest in or hear about EA, or because they choose not to come back?

https://forum.effectivealtruism.org/posts/2RfQT7cybfS8zoy43/are-men-more-likely-to-attend-ea-london-events-attendance

Thanks for this post! Re: 'Empowering women in EA' - I am a coach and currently 80% of my clients are women in EA. I'm particularly excited to work with women who are already working (or want to work) on important problems in the world & who suspect that coaching might help them be more successful. More info here.

+

Not only are these directly contradictory (pigeonholing women into being community builders will preclude greater participation in speaking positions, which usually require a great deal of specialist knowledge), but EA is already known for shoving women into community building/operations roles (hypothesis: because they're not taken seriously as researchers/specialists).

This is going to get you nowhere without particular seats at those conferences, organizations, fellowships, etc. actually set aside for women. It's very easy to say "No, it's okay, we accept women, too!" and much harder to get a group of men to follow through with that.

I once approached a man at an EA social event who I had heard make a misogynistic comment that I found particularly reproachable. He apologized for being "unprofessional." We were playing beer pong; professionalism had nothing to do with it. There is some kind of mental disconnect making the rounds, to the effect that this behavior is only a problem in professional contexts, and men behaving poorly to women in social contexts has no ramifications on the community.

I think this is spot on. There have been many discussions on the forum proposing rules like "Never hit on women during daytime in EAG, but it's ok at afterparties". And they're all basically doing something blunt on the one hand, and not preventing people from being a**holes on the other hand.

I think the bright-line rules serve several important purposes. They are not replacements for "don't be an a**hole," but are rather complements. A norm against seeking romantic or sexual connection during EAG events, for instance, is intended in part to equalize opportunities for professional networking at a networking event (which doesn't happen if, e.g., some people are setting up 1:1s for romantic purposes).

It is easier for everyone to realize when a bright-line norm would be or has been breached. That should make it more likely that the norms won't be breached in the first place, but also should make it more likely that norm violations will be reported and that appropriate action will be taken.

So I think this is a both/and situation.

I understand the tension you are describing. A question for clarification: I am personally not familiar with your description that "EA is already known for shoving women into community building/operations roles". Where does this sense come from?

And I think that's another tangible proposal you're making here which I'd like to draw attention to and make explicit to see what others think: creating quotas for how many spots have to go to women at conferences, organizations, fellowships etc.

My sense that women in EA are shoved into community building or operations roles comes from most of the women I know in EA having specialist/technical backgrounds but being shoved into community building or operations roles.

It also comes from conversations with those women where they confirm that they share the sense that EA is known for shoving women into community building and operations roles.

It also comes from the vast majority of websites of EA organizations featuring men as directors/leaders/researchers/etc. and women disproportionately in operations roles, and from going to fellowships and conferences where women are responsible for the day-to-day operations, and from planning events where women are responsible for the operations. In a community that's roughly 2/3s men, that's kind of insane.

I'm not pretending to have done a survey on this, but in my experience this has been such a well-known/previously-observed phenomenon that that survey would be a waste of time.

Also, I assumed the idea of quotas for women was why my post's agreement karma is pretty controversial, so I hope you have better luck than I did getting feedback on that idea.

Thanks for this post – as a woman in STEM and a community builder, gender diversity is something I'm trying to improve. I don't have any answers, but I want to say that I wholeheartedly support this initiative and would love to hear any suggestions on how to appeal to and retain minority groups in EA!