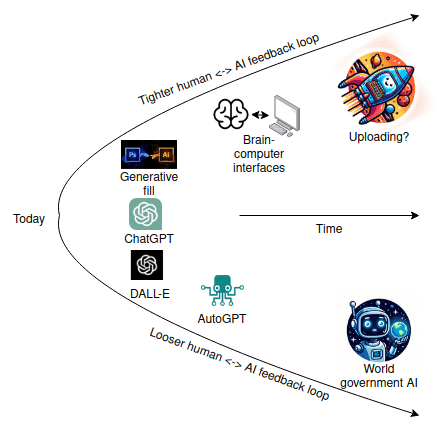

Next week for the 80,000 Hours Podcast I'm interviewing Ethereum creator Vitalik Buterin about his recent essay 'My techno-optimism', which, among other things, responded to disagreement about whether we should be speeding up or slowing down progress in AI.

I last interviewed Vitalik back in 2019: 'Vitalik Buterin on effective altruism, better ways to fund public goods, the blockchain’s problems so far, and how it could yet change the world'.

What should we talk about and what should I ask him?

And also: about the "AI race" risk, a.k.a. Moloch, a.k.a. https://www.lesswrong.com/posts/LpM3EAakwYdS6aRKf/what-multipolar-failure-looks-like-and-robust-agent-agnostic