This post was written by David Janků, with contributions from Adéla Novotná (background graphs and analyses) and useful edits by Sophie Kirkham.

Summary

- In autumn 2022, Effective Thesis held the Exceptional Research Award to encourage and recognize students conducting promising research that has the potential to significantly improve the world.

- We received 35 submissions, had 25 of them reviewed and chose 6 main prize winners and 4 commendation prize winners (see the winning submissions and winners’ profiles here).

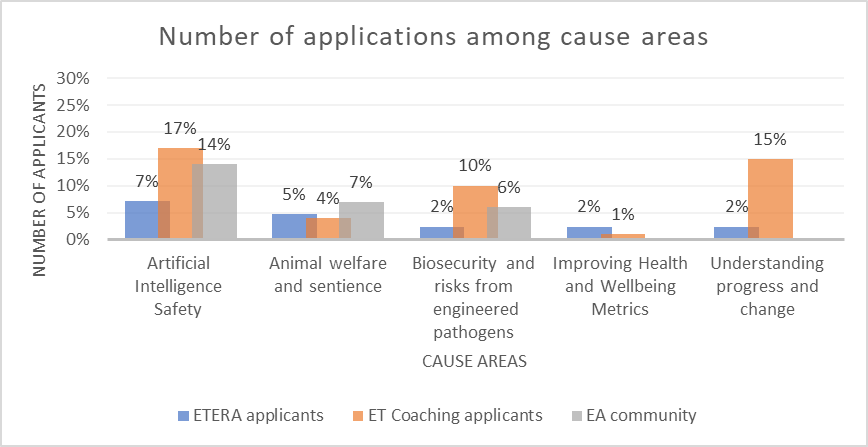

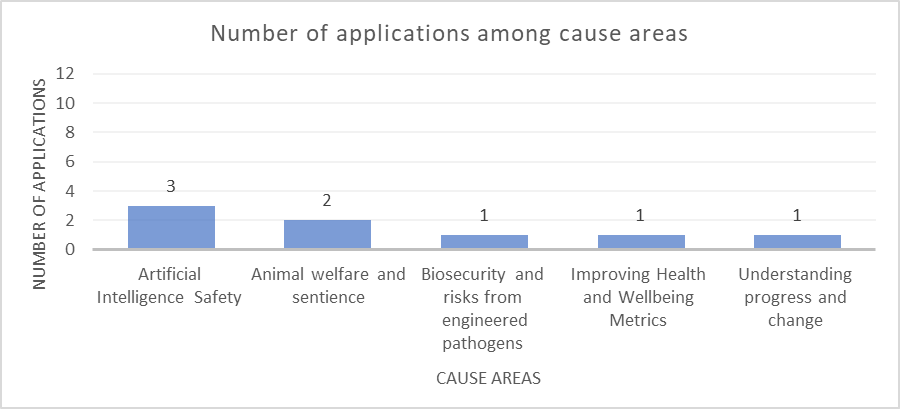

- We received applications from across a variety of study disciplines and cause areas, though causes that have traditionally been prioritised by EA leaders - such as AI alignment, biosecurity, and animal welfare - were relatively underrepresented in our sample. It is unclear to us why this is the case (e.g. whether people working on those causes already have existing cause-specific prizes to apply to; or have different publication norms - like publishing mainly via blog posts - that our award is not compatible with; or to what extent some of these directions are not bottlenecked by the creation of new research ideas and understanding but rather by execution of existing ideas). We think this represents room for improvement in the future.

- Only 57 % of all applicants and 50 % of all winners had any engagement with Effective Thesis prior to applying for the award, suggesting the award was able to attract students who are doing valuable work but who have not interacted with Effective Thesis before.

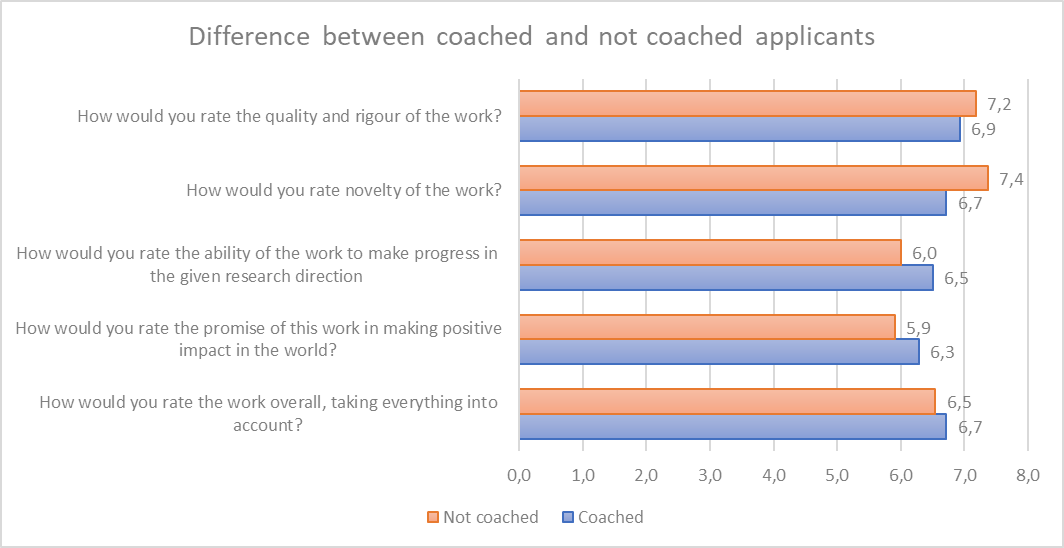

- That being said, people previously coached by Effective Thesis scored higher in the reviews on “potentially having more impact with their research” than people who were not coached, suggesting our coaching might help people arrive at better ideas for research topics.

- The review scores indicated that the quality of the application pool was fairly high. The highest scores were usually given for rigour and novelty, and the lower scores were for the ability to make progress in the research field and potential positive impact in the world. This is to be expected since achieving impact is harder than achieving rigour and novelty.

- Both reviewers and applicants (successful and unsuccessful) seemed to be happy about their participation in this award, as reflected by their willingness to be associated with the award publicly, high ratings of the usefulness of the reviews by applicants, and a number of qualitative comments we received.

What is ETERA and why did we decide to run it?

The Effective Thesis Exceptional Research Award (ETERA) was established to encourage and recognize promising research, conducted by students from undergraduate to PhD level, that has the potential to significantly improve the world. We accepted submissions of theses, dissertations and capstone papers. Entries were required to have been submitted to a university between 1st January 2021 and 1st September 2022 and to relate to one or more of the prioritised research directions on our site. We accepted entries from all countries, though all entries had to be submitted in English.

A prize of 1000 USD was offered for winning entries (and 100 USD for commendation prizes). In addition, prize-winning entries would be featured on our website and promoted by us.

We expected the award to have three primary positive impacts:

- Providing a career boost for aspiring researchers already focused on very impactful questions and producing high quality work. Winning this award would give them additional credentials and increase their chances of building a successful research career.

- Motivating additional aspiring researchers to begin exploring questions in some of the research directions that we focus on (so they could apply for the award and potentially win).

- Identifying people already doing valuable work who were not known to Effective Thesis or the Effective Altruism community before.

Evaluation process

All submissions were first pre-screened for relevance by members of the Effective Thesis team. We then searched for suitable domain experts to review the submissions that passed the pre-screen.

The review process was double-blind (i.e. the authors of submissions didn’t know the identities of the reviewers, and the reviewers didn’t know the authors’ identities, their country of residence or the university they studied at). We aimed to select reviewers who would be able to judge not only the academic quality, rigour and novelty of submissions, but also the promise of submissions in terms of the positive impact they could have on the world (inclusive of various theories of change, such as increasing academic interest, influencing policy decisions, influencing funding decisions, influencing the general public, etc.).

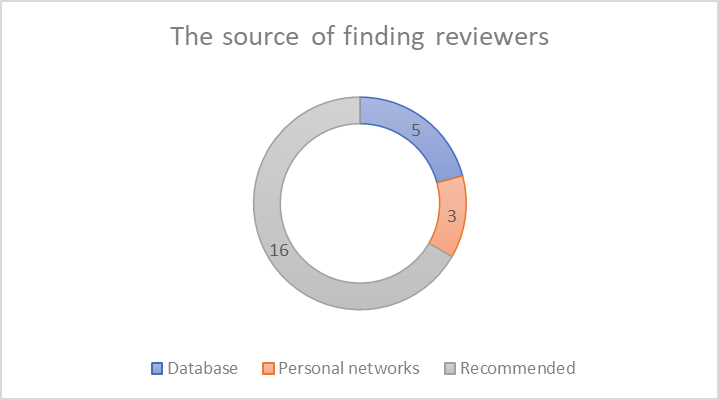

To identify reviewers, we made use of our personal connections in the EA community and field-specific research communities and our database of EA-aligned domain experts. We also asked people in our network for recommendations of suitable reviewers. We aimed to choose at least postdoc-level reviewers for PhD-level submissions and at least graduate-level reviewers for undergraduate-level submissions. In a few cases, we took into account a reviewer’s relevant research experience outside of academia.

We learned of two-thirds of the reviewers through recommendations, which is the source we preferred as our knowledge and network in the relevant fields are limited.

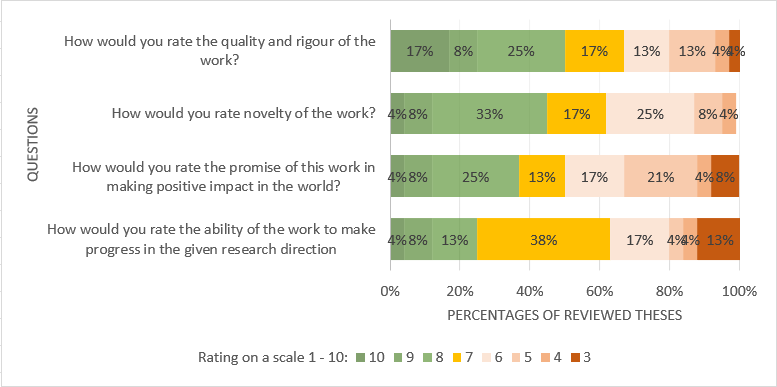

Each reviewer received one submission, which they scored on 4 scales of 1-10 points. Reviewers rated submissions on criteria of 1) quality and rigour; 2) novelty; 3) ability of the work to make progress in a given research direction; 4) promise of the work in making a positive impact in the world. Reviewers also provided a qualitative assessment of the work, which was later shared with the author of the submission in an anonymised form (see the review form here). Reviewers were asked to spend up to 4 hours reading and thinking about the submission they were reviewing and were reimbursed for their time and effort.

Finally, the same members of the Effective Thesis team who carried out the pre-screening read all the reviews and chose the award winners based on this information.

Submissions

Number of applications

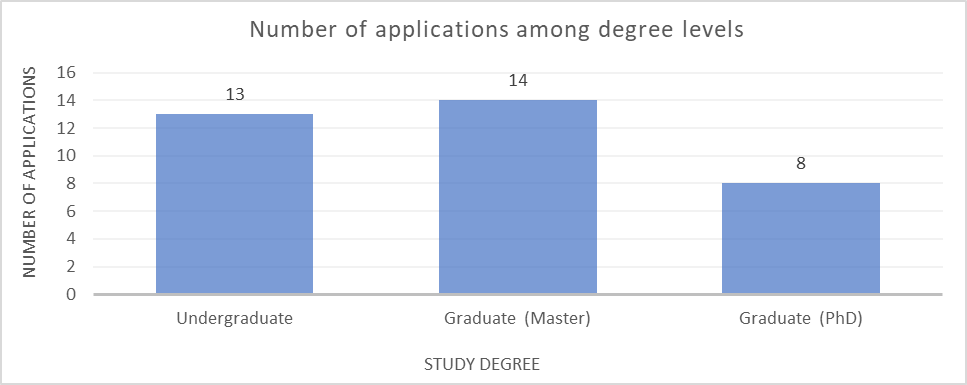

We received 35 submissions.

By degree level

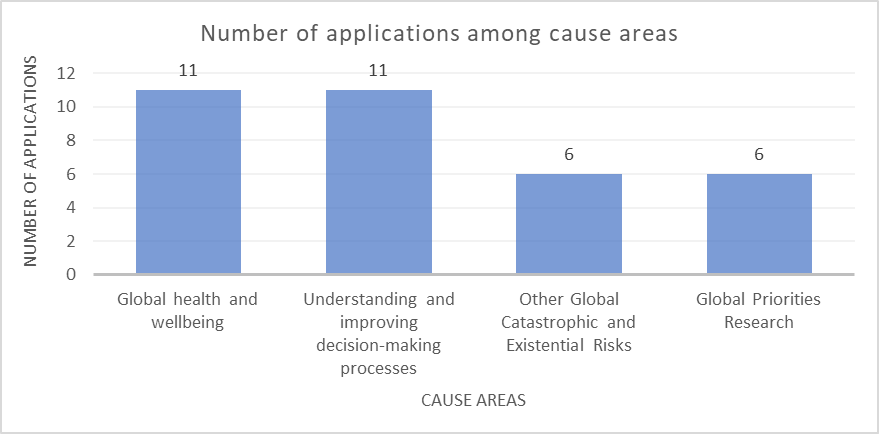

By cause areas

Note: Applicants could choose 1 or more cause areas related to their submission.

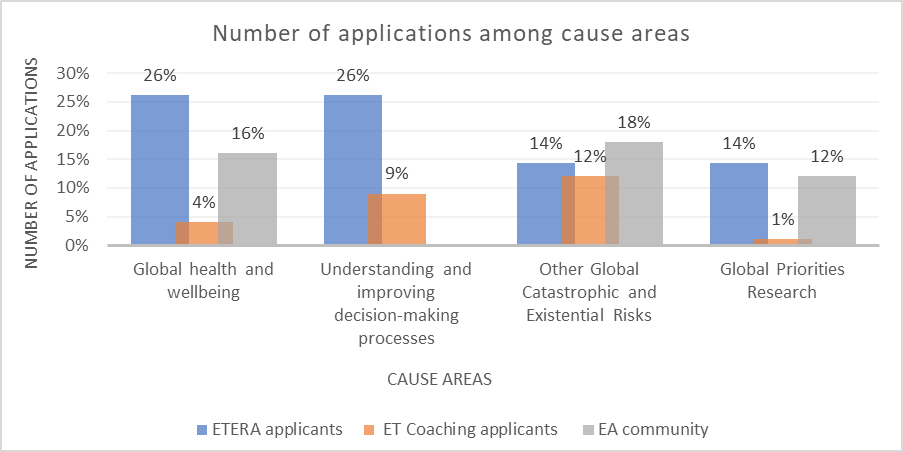

By cause area including Effective Thesis coaching applicants and EA community (sorted by ETERA applicants from highest to lowest)

Note: “ET Coaching applicants” shows the proportion of students who applied for our coaching between June 2021 and 2022 (see Impact Evaluation 2022). “EA community” shows the proportion of people who selected a given cause as “the top cause EA community should focus on” in the EA Survey 2020. Note that the EA survey categorises cause areas differently from Effective Thesis and as a result, there is no comparison data from the EA survey for categories “Understanding and Improving institutional decision-making processes”, “Improving Health and Wellbeing Metrics” and “Understanding Progress and Change”.

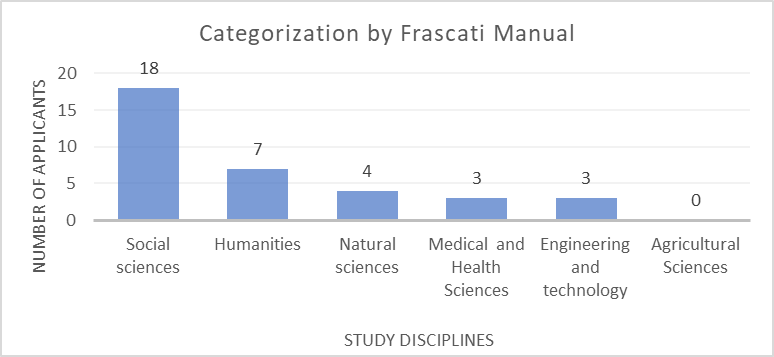

By study discipline

Note: The category “Other” consists of 12 disciplines that occurred only once. These are: Artificial Intelligence, Behavioral Science, Biology, Engineering, Chemistry, Developing and evaluating interventions, Law, Ocean Science and Technology, Psychiatry, Psychology, Public health/epidemiology, and Social Research Public Policy.

Note: Frascati Manual, wiki

Previous engagement with Effective Thesis

Engagement of all applicants

57% of the applicants used at least one service provided by Effective Thesis before applying for the award. The distribution across different services and other forms of engagement is displayed in the table below.

| Service (before 1st September 2022) | Yes | No |
| --- | --- | --- |
| Coaching | 17 | 18 |
| Published their thesis on “Finished thesis” page (only students that were coached) | 6 | 11 |
| Community (before 15th December 2022) | 6 | 29 |
| ECRON | 11 | 24 |
| Research workshop on reasoning transparency | 1 | 34 |
| Filled out our impact evaluation form | 3 | 19 |
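To make the rows of the table above easier to compare, each pair of counts can be converted into a percentage of the students that row covers. The following is a minimal Python sketch that uses only the counts from the table; the names `ENGAGEMENT_COUNTS` and `share_yes` are illustrative assumptions, not the tooling used for this report.

```python
# Counts copied from the table above as (yes, no) pairs per service.
ENGAGEMENT_COUNTS = {
    "Coaching": (17, 18),
    "Published thesis on 'Finished thesis' page (coached students only)": (6, 11),
    "Community": (6, 29),
    "ECRON": (11, 24),
    "Research workshop on reasoning transparency": (1, 34),
    "Filled out our impact evaluation form": (3, 19),
}


def share_yes(yes: int, no: int) -> float:
    """Return the percentage of 'yes' entries among all students counted in a row."""
    total = yes + no
    if total == 0:
        raise ValueError("row contains no students")
    return 100 * yes / total


if __name__ == "__main__":
    for service, (yes, no) in ENGAGEMENT_COUNTS.items():
        # Each row is divided by its own total, since the rows cover different groups.
        print(f"{service}: {share_yes(yes, no):.1f}% of {yes + no}")
```

Each row is divided by its own total because the rows do not share a denominator: the “Finished thesis” row covers only the 17 coached students, and the impact evaluation row sums to 22 rather than 35.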

Does coaching help to generate better ideas for research topics?

Submissions of non-coached students scored higher in quality, rigour, and novelty compared to coached students. On the other hand, submissions of previously coached students scored higher in the ability of the work to make progress in the given research direction, the promise of the work in making a positive impact in the world and the overall score. This might suggest that going through our coaching process helps students to come up with ideas that are more impactful. However, this is only indirect evidence - to be more confident, we could look into how helpful the coached students said the coaching was for them in our yearly evaluation.

Three applicants to the award had previously filled out the summer 2022 evaluation of Effective Thesis services, and one of them was among the winners of the prize. All of them had attended a coaching session and subscribed to the Opportunities newsletter, and some of them had also used other services. They all highlighted different benefits of cooperation with Effective Thesis. One of them learned about their thesis topic from the Effective Thesis website, a second person found coaching valuable for thinking about their topic and the third person learnt about (and later received) funding to continue with their thesis research thanks to a coaching session.

The table below shows how these three students evaluated the impact and counterfactual value of Effective Thesis on various aspects of their research and related plans. To assess the impact and counterfactual value, we used the following questions:

Impact: “How much did Effective Thesis help you in each of the following ways, with 0 meaning we didn't help you at all and 10 meaning we were extremely helpful?”

Counterfactual value: “If Effective Thesis didn’t exist, how likely is it that you would have gotten this value some other way? (i.e. what is the counterfactual value of Effective Thesis)”

We reversed the scores below such that low values (1, 2, 3…) mean “low counterfactual value” (i.e. the given person would be able to find the same help elsewhere), and high values (8, 9, 10) mean “high counterfactual value” (i.e. the given person would likely not be able to find the same help elsewhere).
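As a minimal sketch of this kind of reversal, assuming the question was answered on a 0-10 likelihood scale (the scale bounds and the function name `reverse_score` are assumptions made for illustration; the exact answer scale is not stated here), the reversed score is the scale maximum plus the scale minimum, minus the raw answer:

```python
SCALE_MIN = 0   # assumed lower bound of the raw likelihood scale
SCALE_MAX = 10  # assumed upper bound of the raw likelihood scale


def reverse_score(raw: int) -> int:
    """Flip a raw 'would have gotten this elsewhere' rating into a counterfactual value."""
    if not SCALE_MIN <= raw <= SCALE_MAX:
        raise ValueError(f"score {raw} is outside {SCALE_MIN}-{SCALE_MAX}")
    # A high likelihood of finding the help elsewhere becomes a low counterfactual value.
    return SCALE_MAX + SCALE_MIN - raw
```

Under these assumptions, a respondent who was very likely to find the same help elsewhere (raw answer 9) ends up with a counterfactual value of 1, and reversing a score twice returns the original answer.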

| | Student A: Impact | Student A: Counterfactual value | Student B: Impact | Student B: Counterfactual value | Student C: Impact | Student C: Counterfactual value |
| --- | --- | --- | --- | --- | --- | --- |
| Refining a research idea (or choosing between multiple ideas) that you originally came up with | 10 | 4 | 5 | 2 | 7 | 3 |
| Changing the general direction of your research focus | 10 | 4 | 6 | 7 | 7 | 3 |
| Helping you choose a new specific topic for your research project | 9 | 4 | 5 | 5 | 7 | 3 |
| Giving you useful career advice | 3 | 7 | 3 | 5 | 10 | 3 |
| Expanding your network of relevant people to talk to | 7 | 7 | 7 | 9 | 8 | 3 |
| Helping you find a thesis/PhD supervisor | 5 | 6 | 0 | 10 | 8 | 3 |
| Helping you find funding | 5 | 8 | 10 | 5 | 10 | 6 |
| Helping you find new research opportunities | 8 | 6 | 10 | 6 | 10 | 6 |
| Helping you test your fit for a research career | 7 | 8 | 1 | 1 | 8 | 3 |

This provides some further evidence that coaching does help students to come up with more impactful ideas, though there is a good chance that students might have received this type of help elsewhere. Because of the small sample size, we can’t draw strong conclusions.

Engagement of the winners

50% of the winners (either of a main prize or commendation prize) used at least one of Effective Thesis’ services, which is slightly less than the whole group of applicants (57%). The most popular service among main prize winners was coaching, which 4 out of 6 students received prior to submitting their thesis to our Award. Among the commendation prize winners, no service stands out.

| Service (before 1st September 2022) | Main prize winners: Yes | Main prize winners: No | Commendation prize winners: Yes | Commendation prize winners: No |
| --- | --- | --- | --- | --- |
| Coaching | 4 | 2 | 1 | 3 |
| Published their thesis on the “Finished thesis” page (only students that were coached) | 1 | 5 | 1 | 3 |
| Community (before 15th December 2022) | 0 | 6 | 0 | 4 |
| ECRON | 2 | 4 | 1 | 3 |
| Research workshop on reasoning transparency | 0 | 6 | 0 | 4 |
| Filled out our impact evaluation form | 1 | 5 | 0 | 4 |

Quality of application pool

Quality assessment from reviewers

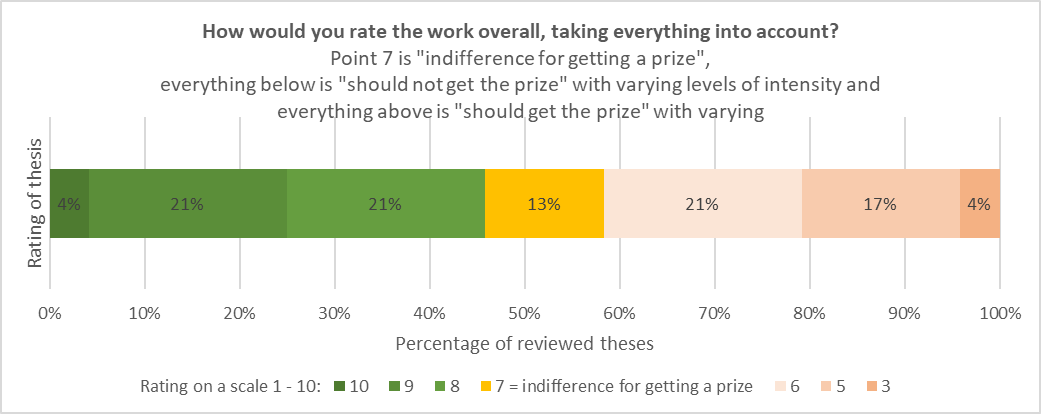

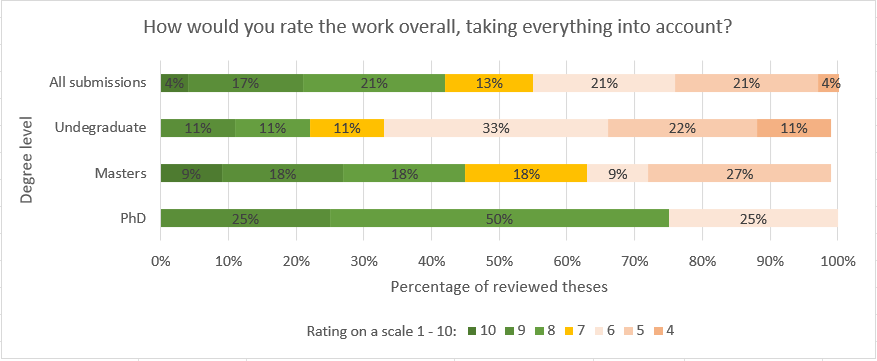

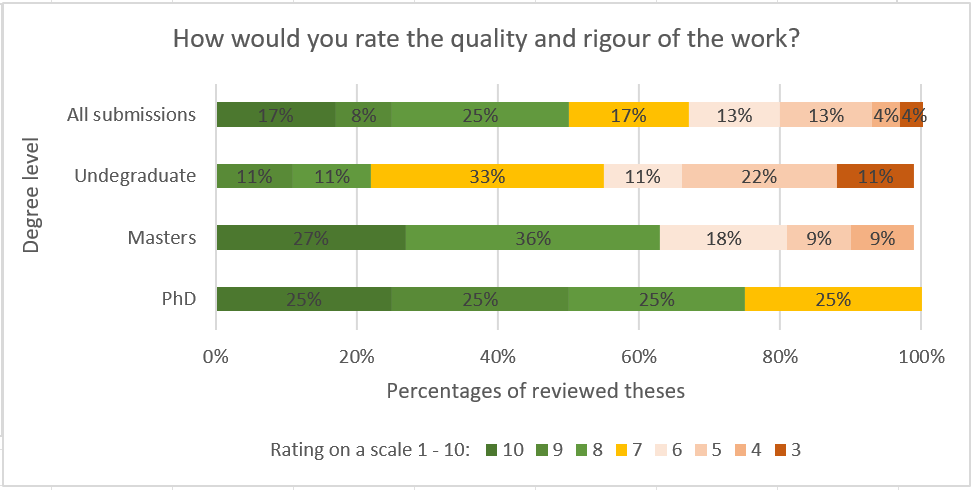

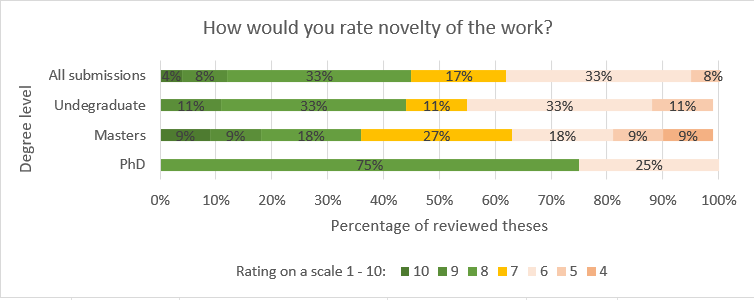

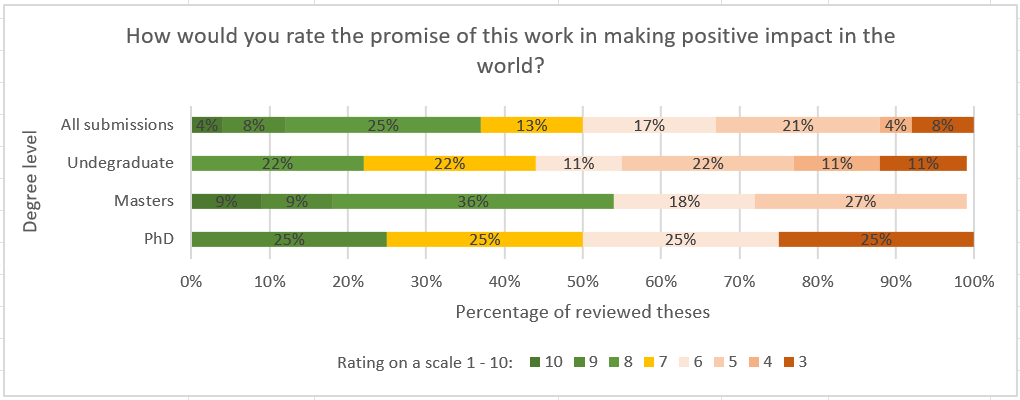

Reviewers assessed applications based on four criteria: (1) quality and rigour, (2) novelty, (3) the ability of the work to make progress in the given research direction and (4) promise of this work in making a positive impact in the world. Each reviewer also expressed the extent to which they recommended that the submission should win a prize. All reviewers were instructed to compare the thesis they reviewed with a reference group of "all undergraduate/masters/PhD theses in the top ~20 of programmes in their field".

Taking all factors into consideration, 45% of reviewed submissions were recommended for a prize by their reviewer (score 8-10), but only one received the highest possible score (10). In 13% of cases the reviewer gave a score of 7, reflecting a neutral position on whether the submission should receive a prize. In 40% of cases the reviewer recommended against the submission receiving a prize (scores below 7), but on a scale of 1-10, even these scores mostly sat around the middle (5 or 6). These results suggest that all submissions showed medium to high overall scores (5 or above; mean = 6.9) when compared to theses produced in the top ~20 programmes in a similar field.
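The banding used in this paragraph (8-10 recommended, 7 neutral, below 7 not recommended) can be sketched in Python as follows. The function names and the example scores in the usage note are hypothetical placeholders for illustration, not the actual review data.

```python
from collections import Counter


def recommendation_band(score: int) -> str:
    """Map an overall recommendation score (1-10) to the band described in the text."""
    if not 1 <= score <= 10:
        raise ValueError(f"score {score} is outside the 1-10 scale")
    if score >= 8:
        return "recommended"
    if score == 7:
        return "neutral"
    return "not recommended"


def band_shares(scores: list[int]) -> dict[str, float]:
    """Return the percentage of scores that fall into each band."""
    if not scores:
        raise ValueError("no scores given")
    counts = Counter(recommendation_band(s) for s in scores)
    return {band: 100 * n / len(scores) for band, n in counts.items()}
```

For example, with the made-up scores `[9, 8, 7, 6, 5]`, `band_shares` reports 40% recommended, 20% neutral and 40% not recommended.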

Overall, submissions were strong on the “quality and rigour” criterion (mean = 7.3; 67% of them scored 7 or higher) and on novelty (mean = 7.08; 62% of them scored 7 or higher). The weaker aspects of the submissions were their ability to make progress in the given research direction (mean = 6.5; 64% of them scored 7 or higher) and their promise in making a positive impact in the world (mean = 6.4; 50% of them scored 7 or higher).

By degree level

The overall ratings and scores for quality and rigour were generally higher for submissions at higher degree levels, but we should be aware that the distribution for the PhD degree level may be biased due to the small number of reviewed applications (4 submissions). Masters-level submissions scored best on the work's ability to make progress in the given research direction and on the promise of the work in making a positive impact in the world. PhD submissions excelled in novelty.

Winners

For more details about winners and their submissions, see the announcement page.

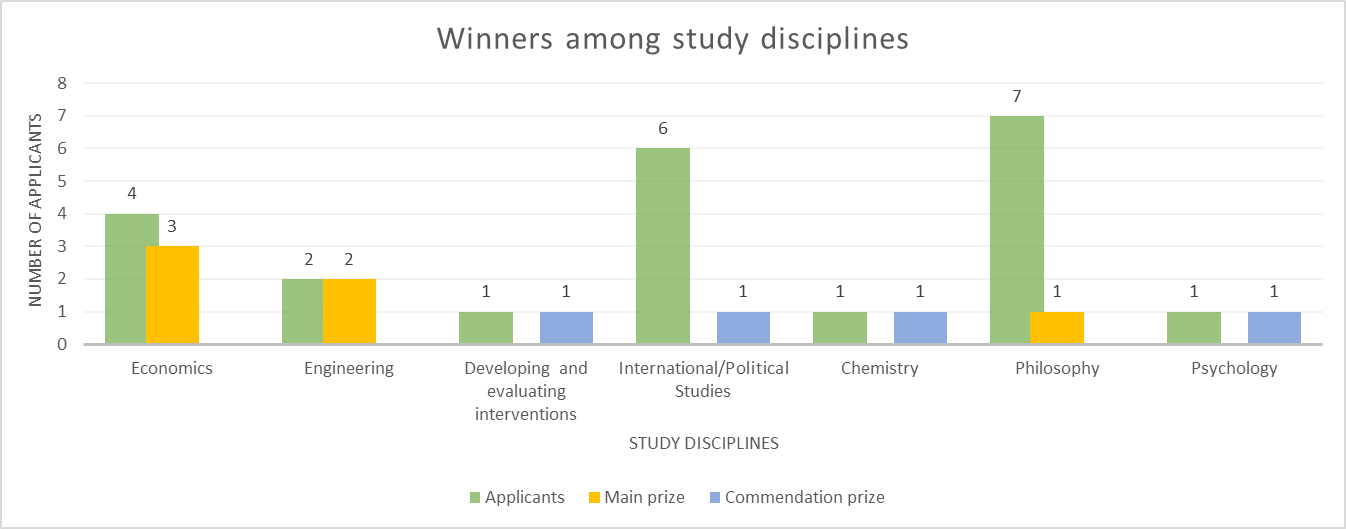

By study discipline

Out of 4 applicants with an Economics major, 3 won a main prize (2 at the Undergraduate level and 1 at the Master level), and both Engineering applicants were successful. On the other hand, only 1 Philosophy student won a prize despite Philosophy applicants being the largest group. We can see a similar pattern for students of International/Political Studies.

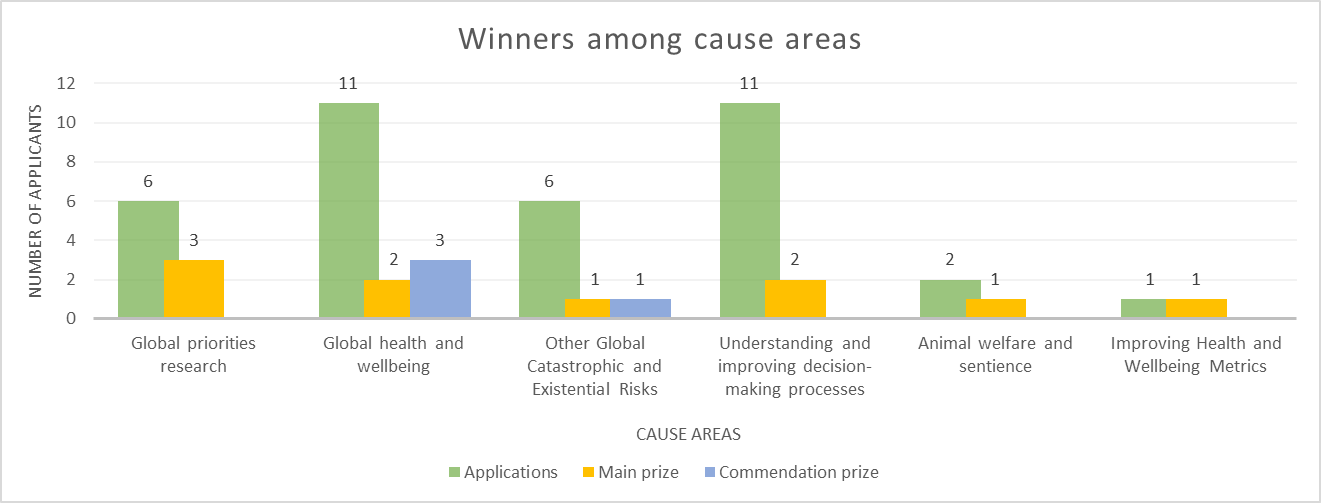

By cause area

Note: Applicants could choose 1 or more cause areas their submission is related to.

For more details about winners and their theses, see the announcement page.

Feedback

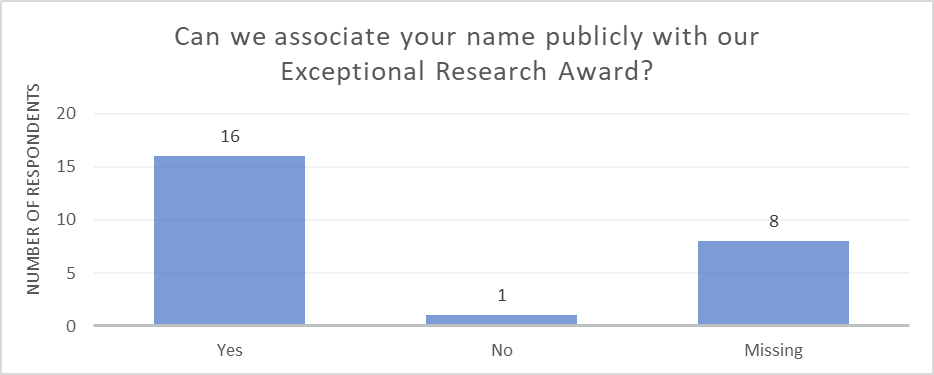

Feedback from reviewers

17 out of 24 reviewers provided feedback on our review process. The general impression we received was that reviewers were happy to have been part of the review process for our Award. This impression is supported by their high willingness to be publicly associated with the Award (see below).

Note: “Missing” category consists of reviewers who didn’t fill out the voluntary feedback form.

Regarding suggestions for improvements to the review and operational process of the Award, the factor most often mentioned was “calibration”, e.g. reviewers felt that reviewing or seeing other submissions from the same category would have made the reviews more balanced.

Feedback from participants

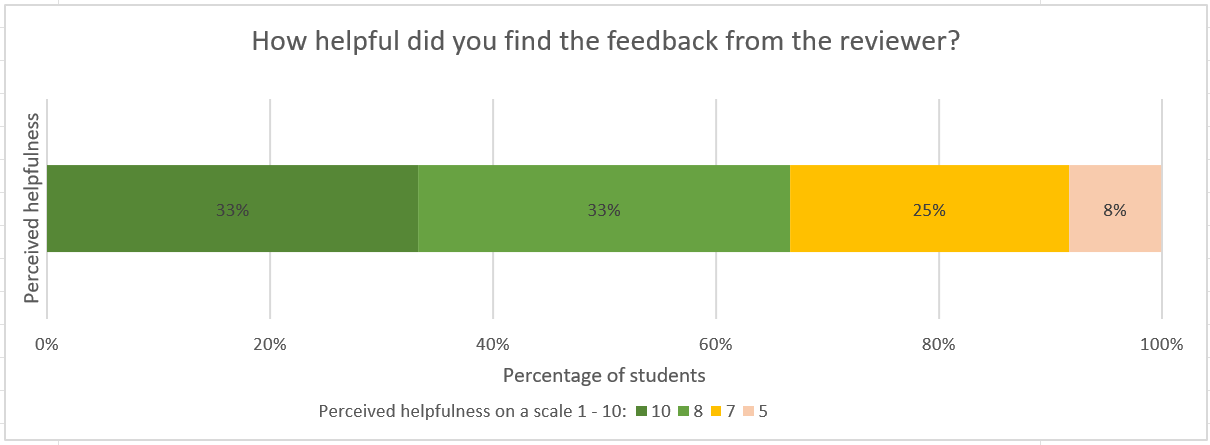

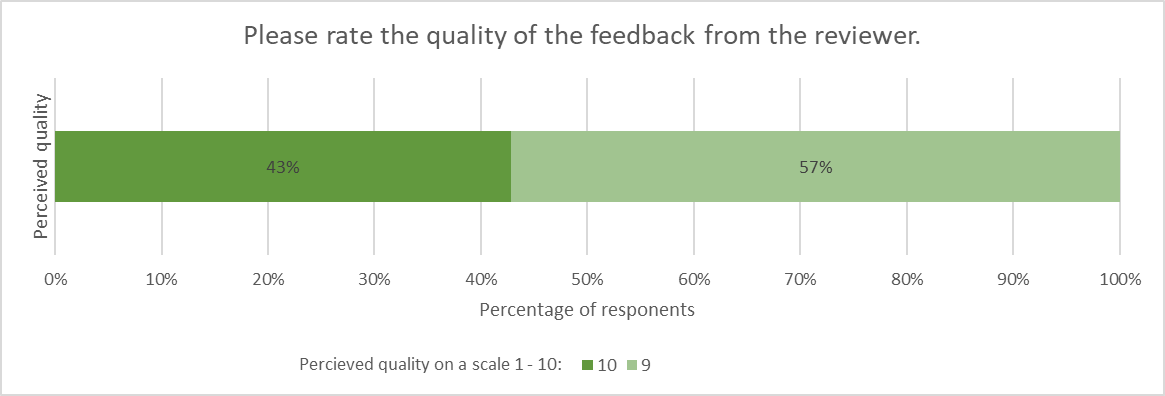

Applicants whose submission was reviewed (25) were sent the review and asked about the usefulness and quality of the review. 12 of them (approx 50 %) filled in the feedback form. Out of 10 applicants whose submission was not reviewed, no one filled in the feedback form.

Note: The second question (“Please rate the quality of the feedback from the reviewer.”) was answered only by 7 respondents because it was added to the form later, based on a suggestion from one of the participants saying that “helpfulness and quality might sometimes score differently”.

Overall, applicants reported finding the feedback from the reviewers helpful (mean = 8.2 on a 10-point scale) and of high quality (mean = 9.4 on a 10-point scale). In an open-ended question, three applicants highlighted the quality of the reviews they received, two applicants mentioned good communication with award organisers, and one respondent appreciated receiving interesting feedback even though they didn’t receive an award.

Testimonials from participants

“I am so glad that I submitted my thesis into the competition. Doing so made me think of my thesis not just as an academic credential but as a way to connect with students and professionals across the world from other disciplines who want to make the world a better place using research.”

“I've had a great experience with 'Effective Thesis' and would recommend others to submit their thesis too :)”

“I was quite satisfied with the evaluation conducted by Effective Thesis. The reviewer's feedback was very well balanced, in terms of encouragement and critique - I agree with most of their points and appreciate the time they have invested in this review.

…

On the organisational front, I was very happy with the clear, patient communication and logistics managed by David, Sophie and Effective Thesis team.

I wish I had known about the work of Effective Thesis earlier during my PhD journey, and I wish it the best in this altruistic goal of encouraging researchers working on important problems.”

“Effective Thesis helped me to explore options for a thesis with researchers that I otherwise would not have been able to engage with”

Impact

The expected impacts were three-fold:

- Provide a career boost for people who are already focused on very impactful questions, are producing high-quality work and end up winning this award (which would give them some additional credentials and increase their chances of building a successful research career)

- Motivate more aspiring researchers to start exploring questions in some of the research directions that we focus on (so they can apply for the award and potentially win)

- Identify people already doing valuable work who were not known to Effective Thesis or the Effective Altruism community before

Career boost

We found it very hard to find proxies for this effect, so unfortunately we can’t say much about it.

Motivation for topic choice

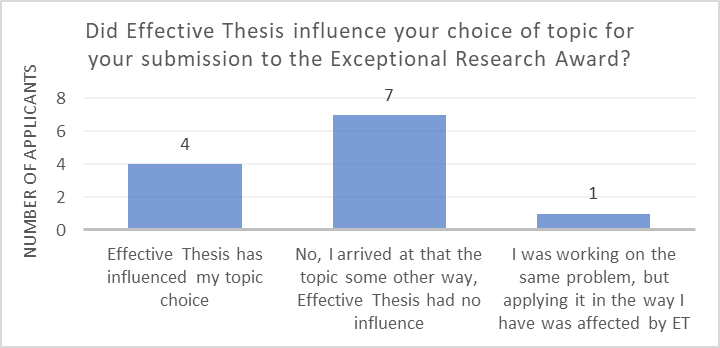

In the voluntary feedback form (shared at the time of the results announcement), we asked all students whether Effective Thesis influenced their topic choice for their submission.

Out of 12 people who filled out the form (approx ⅓ of all applicants), 4 (33%) said Effective Thesis did influence their topic choice. However, from this data it is unclear whether our Exceptional Research Award was the main driver of their topic choice, or whether other programmes we run (like coaching, content, etc.) were responsible. In the future, we will try to develop more sophisticated ways to ask about this, with an eye to also limiting potential social desirability bias in answers (i.e. people might report that they were not influenced by the prospect of receiving the Award and would have worked on the same topic anyway).

Identification of people previously unknown to us

50% of the winners (either of a main prize or commendation prize) were not known to Effective Thesis (i.e. didn't use any of our services) before (compared to 43 % of all the applicants).

Both of the undergraduate winners had already been identified by GPI, as both were working at GPI as pre-doctoral fellows prior to the submission deadline (1st September 2022). Similarly, one of the masters-level winners had already published a summary of their research findings on the EA forum, with comments from two other EA-aligned researchers. We asked the remaining three main prize winners (1 masters-level and 2 PhD-level) how engaged they were with the EA community at the time of application for the Award. One chose “considerable engagement” and two chose “no engagement”.

Similarly, one of the commendation prize winners was already working at an EA-aligned global development charity. We asked the remaining three commendation prize winners how engaged they were with the EA community at the time of application for the Award. Two chose “no” and one chose “mild” engagement.

Even if some of the winners had already been recognised and identified by other actors in the EA ecosystem, our award can perhaps still give them additional credentials by providing an extra vetting signal.

Other takeaways and learnings

Cause area profile of the submission pool

Compared to how (leaders of) the EA community prioritise cause areas, we received disproportionately few submissions relevant to the future of AI and to biosecurity, which seem to be the two main fields the longtermist/EA community is interested in. This raises the question: why?

Some hypotheses/speculations we considered are:

- Are there any other prizes which focus specifically on these areas and that people might prefer applying to for that reason?

- Is our Award not an attractive-enough venue for people focusing on these questions?

- Is research actually the main bottleneck for these cause areas? (e.g. we sometimes hear from people working in biosecurity that the main bottleneck is not coming up with new ideas, but implementing them)

- Are there any cultural/structural norms that prevent people with these interests from applying for the award? (e.g. because the relevant research usually happens outside of academia and is communicated via blog posts rather than academic journals/thesis submissions).

We plan to carry out a series of in-depth interviews with aspiring researchers focusing on these research directions to learn more about this. We would also welcome readers of this post to comment with their own hypotheses about this and ideas on how we can improve the Award going forward.

Acknowledgements

We would like to thank Rossa O'Keeffe-O'Donovan for useful input during the design of this award and for feedback on the draft of this report. Further, we would like to express deep gratitude to all reviewers, as well as the people who took the time to recommend some of the reviewers, for the help and support that made the Exceptional Research Award possible.

Why aren't you running the Effective Thesis Exceptional Research Award for 2023?