Overall reflections

- Very happy with the volume and quality of grants we’ve been making

- $600k+ newly committed across 12 projects

- Regrantors have been initiating grants and coordinating on large projects

- Independent donors have committed $35k+ of their own money!

- We plan to start fundraising soon, based on this pace of distribution

- Happy to be coordinating with funders at LTFF, Lightspeed, Nonlinear and OpenPhil

- We now have a common Slack channel to share knowledge and plans

- Currently floating the idea of setting up a common app between us…

- Happy with our experimentation! Some things we’ve been trying:

- Equity investments, loans, dominant assurance contracts and retroactive funding

- Grantathon, office hours, feedback on Discord & site comments

- Less happy with our operations (wrt feedback and response times to applicants)

- Taking longer to support individual grantees, or start new Manifund initiatives

- Please ping us if it’s been a week and you haven’t heard anything!

- Wise deactivated our account, making international payments more difficult/expensive…

- In cases where multiple regrantors may fund a project, we’ve observed a bit of “funding chicken”


Grant of the month

[$310k] Apollo Research

This is our largest grant to date! Many of our regrantors were independently excited about Apollo; in the end, we coordinated between Tristan Hume, Evan Hubinger and Marcus Abramovitch to fund this.

From Tristan:

I'm very excited about Apollo based on a combination of the track record of its founding employees and the research agenda they've articulated.

Marius and Lee have published work that's significantly contributed to Anthropic's work on dictionary learning. I've also met both Marius and Lee and have confidence in them to do a good job with Apollo.

Additionally, I'm very much a fan of alignment and dangerous capability evals as an area of research and think there's lots of room for more people to work on them.

In terms of cost-effectiveness I like these research areas because they're ones I think are very tractable to approach from outside a major lab in a helpful way, while not taking large amounts of compute. I also think Apollo existing in London will allow them to hire underutilized talent that would have trouble getting a U.S. visa.

New grants

[$112k] Jesse Hoogland: Scoping Developmental Interpretability

Jesse posted this through our open call:

We propose a 6-month research project to assess the viability of Developmental Interpretability, a new AI alignment research agenda. “DevInterp” studies how phase transitions give rise to computational structure in neural networks, and offers a possible path to scalable interpretability tools.

Though we have both empirical and theoretical reasons to believe that phase transitions dominate the training process, the details remain unclear. We plan to clarify the role of phase transitions by studying them in a variety of models combining techniques from Singular Learning Theory and Mechanistic Interpretability. In six months, we expect to have gathered enough evidence to confirm that DevInterp is a viable research program.

If successful, we expect Developmental Interpretability to become one of the main branches of technical alignment research over the next few years.

Rachel was excited about this project and considered setting up a dominant assurance contract to encourage regrants, but instead offered 10% matching; Evan took her up on this!

[$60k] Dam and Pietro: Writeup on Agency and (Dis)Empowerment

A regrant initiated by Evan:

6 months support for two people, Damiano and Pietro, to write a paper about (dis)empowerment… Its ultimate aim is to offer formal and operational notions of (dis)empowerment. For example, an intermediate step would be to provide a continuous formalisation of agency, and to investigate which conditions increase or decrease agency.

[$40k] Aaron Silverbook: Curing Cavities

Following up from Aaron’s application last month (which went viral on Hacker News), Austin and Isaak split this regrant — structured as an equity investment into Aaron’s startup. A handful of small donors contributed as well~

[$30k] Lisa Thiergart & David Dalrymple: Activation vector steering with BCI

This regrant was initiated by Marcus, and matched by Evan. Marcus says:

When I talked with Lisa, she was clearly able to articulate why the project is a good idea. Often people struggle to do this.

Lisa is smart and talented and wants to be expanding her impact by leading projects. This seems well worth supporting.

Davidad is fairly well-known to be very insightful and proposed the project before seeing the original results.

Reviewers from Nonlinear Network gave great feedback on funding Lisa for two projects she proposed. She was most excited about this one and, with rare exceptions, when a person has two potential projects, they should do the one they are most excited about.

I think we need to get more tools in our arsenal for attacking alignment. With some early promising results, it seems very good to build out activation vector steering.

[$25k] Joseph Bloom: Trajectory models and agent simulators

Marcus initiated this regrant last month; this week, Dylan Mavrides was kind enough to donate $25k of his personal funds, completing this project’s minimum funding goal!

[$10k] Esben Kran: Five international hackathons on AI safety

A $5k donation by Anton, matched by a regrant from Renan Araujo:

I’m contributing to this project based on a) my experience running one of their hackathons in April 2023, which I thought was high quality, and b) my excitement to see this model scaled, as I think it has an outsized contribution to the talent search pipeline for AI safety. I’d be interested in seeing someone with a more technical background evaluating the quality of the outputs, but I don’t think that’s the core of their impact here.

[$10k] Karl Yang: Starting VaccinateCA

A retroactive grant initiated by Austin:

I want to highlight VaccinateCA as an example of an extremely effective project, and tell others that Manifund is interested in funding projects like it. Elements of VaccinateCA that endear me to it, especially in contrast to typical EA projects:

They moved very, very quickly

They operated an object level intervention, instead of doing research or education

They used technology that could scale up to serve millions

But were also happy to manually call up pharmacies, driven by what worked well

[$6k] Alexander Bistagne: Alignment is Hard

Notable for being funded entirely by independent donors so far! Greg Colbourn weighs in:

This research seems promising. I'm pledging enough to get it to proceed. In general we need more of this kind of research to establish consensus on LLMs (foundation models) basically being fundamentally uncontrollable black boxes (that are dangerous at the frontier scale). I think this can lead - in conjunction with laws about recalls for rule breaking / interpretability - to a de facto global moratorium on this kind of dangerous (proto-)AGI.

A series of small grants that came in from our open call, funded by Austin:

[$2.5k] Johnny Lin: Neuronpedia, an AI Safety game (see also launch on LessWrong!)

[$500] Sophia Pung: Solar4Africa app development

[$500] Vik Gupta: LimbX robotic limb & other projects

Cool projects, seeking funding

AI Safety

[$5k-$10k] Peter Brietbart: Exploring AI Safety Career Pathways

[$500-$190k] Rethink Priorities: XST Founder in Residence

[$500-$38k] Lucy Farnik: Discovering Latent Goals

Other

[$25k-$152k] Jorge Andrés Torres Celis: Riesgos Catastróficos Globales

[$75k-$200k] Allison Burke: Congressional staffers' biosecurity briefings in DC

Shoutout to Anton Makiievskyi, volunteer extraordinaire

Anton has been volunteering in-person with Manifund these last two weeks. As a former poker player turned earning-to-giver, Anton analyzed how the Manifund site comes across to small and medium individual donors. He’s held user interviews with a variety of donors and grantees, solicited applications from top Nonlinear Network applicants, and donated thousands of dollars of his personal funds towards Manifund projects. Thanks, Anton!

Regrantor updates

We’ve onboarded a new regrantor: Ryan Kidd! Ryan is the co-director of SERI MATS, which makes him well connected and high context on up-and-coming people and projects in the AI safety space.

Meanwhile, Qualy the Lightbulb is withdrawing from our regranting program for personal reasons 😟

Finally, we’ve increased the budgets of two of our regrantors by $50k each: Marcus and Evan! This is in recognition of the excellent regrants they have initiated so far. Both have been reaching out to promising grantees as well as reviewing open call applications; thanks for your hard work!

Site updates

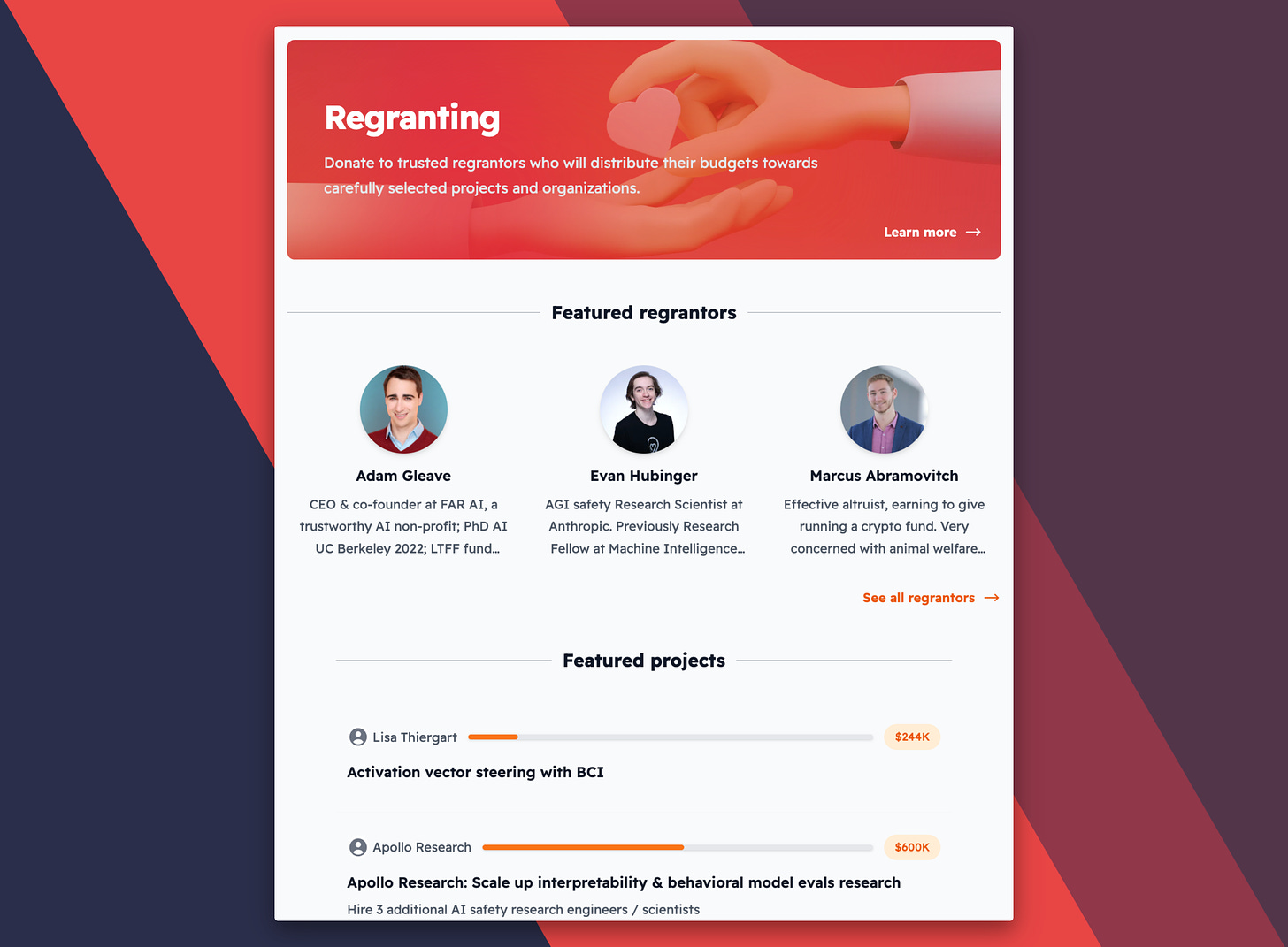

The home page now emphasizes our regrantors & top grants:

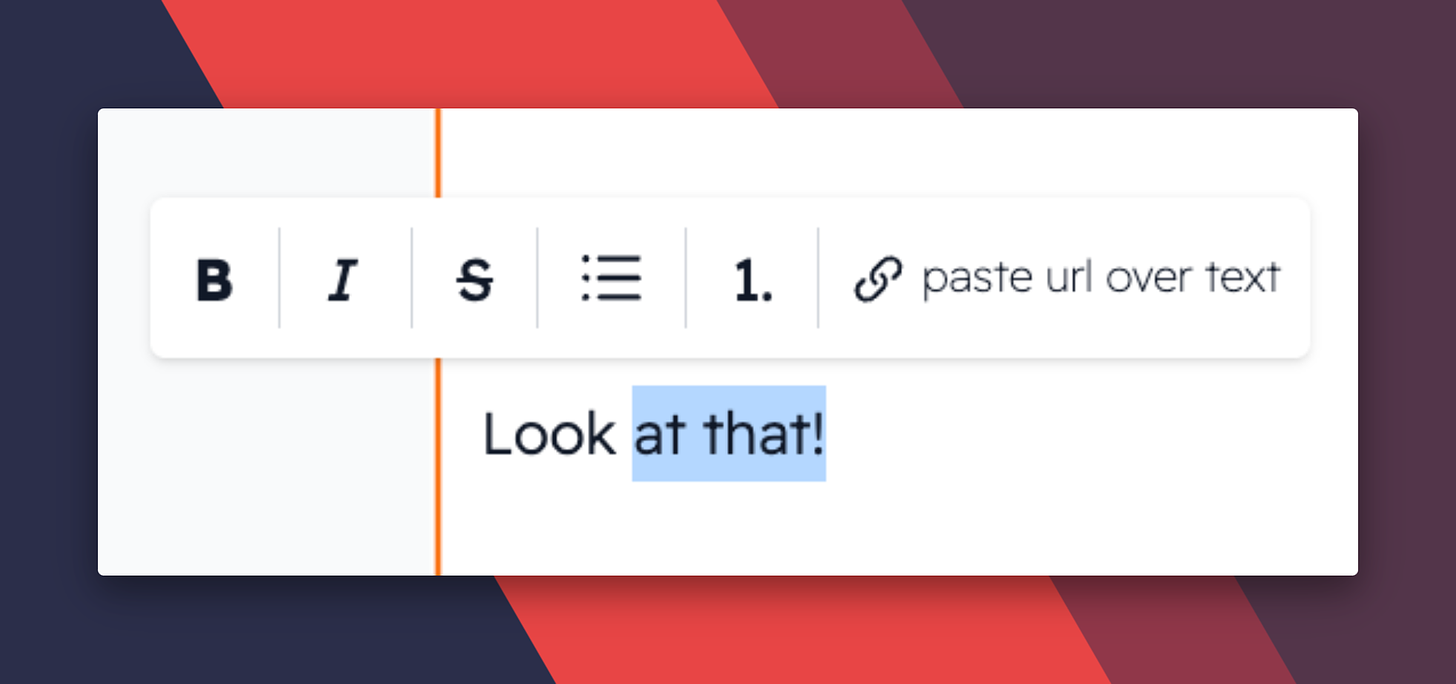

Our editor now saves changes locally, and prompts you to format your writing:

Community events

We ran a couple experiments intended to help out our regrantors and grantees:

- A grantathon (grant writing workshop) on Jul 19, where we dedicated 3 sessions of heads-down writing time mixed with 1:1 feedback sessions.

- Open office hours on Jul 30, where a few grantees and regrantors met on Discord to chat about their current projects.

We’ll continue to run events like this every so often; hop on our Discord to hear more!

Other links

- Announcing Manifest 2023, Manifold’s first conference (Sep 22-24 in Berkeley)

- LTFF posts their grant writeups and is fundraising; Asya Bergal posts her reflections

- Bet on whether Manifund will get into YCombinator…

- Manifold team offsite in Puerto Rico! Shoutout to Nonlinear for lending us their apartment!

Thanks for reading,

— Austin

Yay

Thanks for your work

It was heartwarming to read the shoutout, I appreciate it!

Wise is awful.. their customer service was not very helpful to me, setting up the account to connect to my bank is hard, and their UI is beautiful but not functional. I just use Paypal for international payments now and it is so much easier. Is there a reason you chose Wise over Paypal?

The main reason we'd prefer to use Wise is that they advertise much lower currency exchange fees; my guess is something like 0.5% compared to 3-4% on Paypal, which really adds up on large foreign grants.
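As a rough sketch of how that adds up, here is the arithmetic for a hypothetical $100k foreign grant. The grant size is made up for illustration, the rates are the guesses above rather than quoted prices, and real fees may not be a flat percentage.

```python
def exchange_fee(amount_usd: float, fee_rate: float) -> float:
    """Fee on a transfer, assuming a flat percentage rate (a simplification)."""
    return amount_usd * fee_rate


grant = 100_000  # hypothetical grant size in USD, for illustration only

wise_fee = exchange_fee(grant, 0.005)    # ~0.5%, the guess above
paypal_fee = exchange_fee(grant, 0.035)  # midpoint of the 3-4% guess above

print(f"Wise:       ${wise_fee:,.0f}")
print(f"PayPal:     ${paypal_fee:,.0f}")
print(f"Difference: ${paypal_fee - wise_fee:,.0f}")
```

Under those assumptions the gap is about $3,000 on a single grant of that size.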

That's a very good point! I just have a lot of bad experiences dealing with Wise, but I also haven't had to deal with very large grants so the tradeoff wasn't as large.

Awesome work on this @Austin and team!