Tl;dr

I’ve developed two calculators designed to help longtermists estimate the likelihood of humanity achieving a secure interstellar existence after 0 or more major catastrophes. These can be used to compare an a priori estimate with a revised estimate after counterfactual events.

I hope these calculators will allow better prioritisation among longtermists and will finally give a common currency to longtermists, collapsologists and totalising consequentialists who favour non-longtermism. This will give these groups more scope for resolving disagreements and perhaps finding moral trades.

This post explains how to use the calculators, and how to interpret their results.

Introduction

I argued earlier in this sequence that the classic concept of ‘existential risk’ is much too reductive. In short, by classing an event as either an existential catastrophe or not, it forces categorical reasoning onto fundamentally scalar questions of probability/credence. As longtermists, we are supposed to focus on achieving some kind of utopic future, in which morally valuable life would inhabit much of the Virgo supercluster for billions if not trillions of years.[1] So ultimately, rather than asking whether an event will destroy ‘(the vast majority of) humanity’s long-term potential’, we should ask various related but distinct questions:

- Contraction/expansion-related: What effect does the event have on the expected size of future civilisation? In practice we usually simplify this to the question of whether or not distant future civilisation will exist:

- Existential security-related: What is the probability[2] that human descendants (or whatever class of life we think has value) will eventually become interstellar? But this is still a combination of two questions, the latter of which longtermists have never, to my knowledge, considered probabilistically:[3]

- What is the probability that the event kills all living humans?

- What effect does the event otherwise have on the probability that we eventually reach an interstellar/existentially secure state, [4] given the possibility of multiple civilisational collapses and ‘reboots’? (where the first reboot is the second civilisation)

- Welfare-related: How well off (according to whatever axiology one thinks best) would such life be?

Reboot 1, maybe

Image credit to Yuri Shwedoff

In the last two posts I described models for longtermists to think about both elements of the existential security-related question together.[5] These fell into two groups:

- a simple model of civilisational states, which treats every civilisation as having equivalent prospects to its predecessors at an equivalent technological level,

- a family of more comprehensive models of civilisational states that a) capture my intuitions about how our survival prospects might change across multiple possible civilisations, b) have parameters which tie to estimates in existing existential-research literature (for example, the estimates of risk per year and per century described in Michael Aird’s Database of Existential Risk estimates, or similar) and c) allow enough precision to consider catastrophes that ‘only’ set us back arbitrarily small amounts of time.

Since then I’ve been working intermittently on a functional implementation of both the simple model and the full set of the latter models, and though they’re both rough around the edges, I think they’re both now in a useable state. The rest of this post is about how to use and interpret them, and an invitation to do so - and to share your results, if you’re willing.

If you’re interested, but you feel like you need help to use either, feel free to DM me. In particular, if you understand the maths but not the Python code needed for the full calculator, I’m very happy to run the code for you if you let me know what parameter values you want to give it - more on how to select those below.

I don’t claim any specialist knowledge on what these values should be, and would love to crowdsource as many views as possible for the next and final post in this sequence, which will investigate the implications of my own beliefs - and of anyone else who chooses to join in.

Who are the calculators for, and what questions do they help answer?

I see the calculators as valuable in four ways:

- to allow longtermist global priorities researchers and collapsologists to investigate which assumptions imply that longtermists should focus primarily on extinction risk and which imply that they should focus comparatively more on lesser global catastrophes. For example, if they found the probability of becoming interstellar given a nuclear war was reduced by 51%, and thought that they had 2x the probability of preventing one as of preventing extinction via AGI takeover, it would make sense to prefer work on the former - even if the chance of nuclear war causing humans to almost immediately go extinct was very low.

- to help individual totalising consequentialists to directly estimate the counterfactual long-term value of significant life choices, without having to rely so much on heuristics. For example, you can translate some career choice or large donation you might make into a difference in your calculator input and compare the counterfactual difference it makes to our ultimate fate (such as ‘I could avert tonnes of CO2e emissions via donations to a climate charity, thereby reducing the annual risk of extinction by %, the annual risk of regressing to a preindustrial state by % and the annual risk of regressing to an industrial state by %, and increasing the chance of recovery if we did revert to such states by % OR I could go into legal activism, with a % chance of successfully introducing a bill to legally restrict compute, reducing the annual risk of extinction due to AI by % but delaying our progress towards becoming multiplanetary by %’).

- to investigate which assumptions imply that the prospects of reaching a stable astronomical state are low enough to make it not worth the short term opportunity costs despite the astronomical possible value, as David Thorstad contends.

- to look for other implications of our assumptions that might either challenge or reinforce common longtermist beliefs. For example, we might find examples of actions that we believe will reduce short term extinction risk while nonetheless decreasing the probability that we eventually become interstellar (by making lesser civilisational collapses more likely, or reaching key future milestones less likely).

But won’t these numbers be arbitrary?

The inputs to both the simple and full calculators are, ultimately, pulled out of one’s butt. This means we should never take any single output too seriously. But decomposing big-butt numbers into smaller-butt numbers is essentially the second commandment of forecasting.

More importantly, these models are meant to make more explicit the numeric assumptions that the longtermism community has been implicitly making all along. If a grantmaker puts all its longtermist-oriented resources into preventing extinction events on longtermist grounds, they are tacitly assuming that <the marginal probability of successfully averting extinction events per $> is higher than <the probability of successfully averting any smaller catastrophe per $, multiplied by the amount that smaller event would reduce our eventual probability of becoming interstellar>. Similarly if a longtermist individual focuses their donations or career exclusively on preventing extinction they are implying a belief that doing so has a better chance of bringing about astronomical future value than concern with any smaller catastrophes.
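The tacit comparison above can be written out as a one-line inequality. The sketch below is only an illustration: the function name is hypothetical, and the inputs are the figures from the nuclear war example earlier in this post (a 51% relative reduction in the probability of becoming interstellar, and twice the chance of preventing the lesser catastrophe).

```python
def relative_value_of_lesser_catastrophe_work(cost_as_proportion_of_extinction,
                                              tractability_ratio):
    """Value of marginal work on a lesser catastrophe, in units where
    marginal work on preventing extinction is worth exactly 1.

    cost_as_proportion_of_extinction: how bad the catastrophe is relative
        to extinction (0.51 means it removes 51% of our current chance of
        becoming interstellar).
    tractability_ratio: P(averting the catastrophe per $) divided by
        P(averting extinction per $).
    """
    return cost_as_proportion_of_extinction * tractability_ratio


# Figures from the nuclear war example earlier in the post
relative_value = relative_value_of_lesser_catastrophe_work(0.51, 2.0)
prefer_lesser_catastrophe_work = relative_value > 1

# The break-even badness at 2x tractability: anything above this
# proportion favours working on the lesser catastrophe.
break_even_proportion = 1 / 2.0
```

An exclusive focus on extinction amounts to believing this number is below 1 for every lesser catastrophe one could work on.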

This is roughly the view Derek Parfit famously expressed in a thought experiment where he claimed that the difference in badness between 99% of people dying and 100% of people dying is greater than the difference between 0% of people dying and 99% of people dying. So we might call this sort of view Parfitian fanaticism.[6]

My anecdotal impression is that Parfitian fanaticism is widely held among longtermists - quite possibly as the majority view.[7] It is an extremely strong assumption, so my hope is we will now be able to either challenge it or justify it more rigorously.

Finally, per this recent forum post, I think having explicit and reusable models that allow sensitivity analysis, Monte Carlo simulation and similar investigation can be valuable for decision-making even if we don’t take any individual forecast too seriously. While neither calculator supports such analysis natively, a) they could be used for manual sensitivity analysis, and b) it shouldn’t be too difficult to incorporate the calculators into a program that does (see Limitations/development roadmap for some caveats).

Interpreting the calculators

A useful heuristic when interpreting the results of either calculator is to assume some effectively constant astronomically large value, V, which human descendants will achieve only if they eventually become a widely interstellar civilisation, and next to which all value we might otherwise achieve looks negligible. This is an oversimplification which could and should be improved with further development of this project[8] and others like it,[9] but I think it’s a useful starting point. For deciding whether to focus on the long term at all, we will need to estimate the actual value of V under our assumptions, but for prioritising among different interventions from within a longtermist perspective, treating it as a constant is probably sufficient.

This simplifying assumption makes it much easier to compare the expected long-term value of events: if V is our astronomical value constant and is the event that we attain that value, then our expected value from any civilisational state S is

The cost of a counterfactual extinction event is then that same expected value - i.e. simply the loss of whatever future value we currently expect:

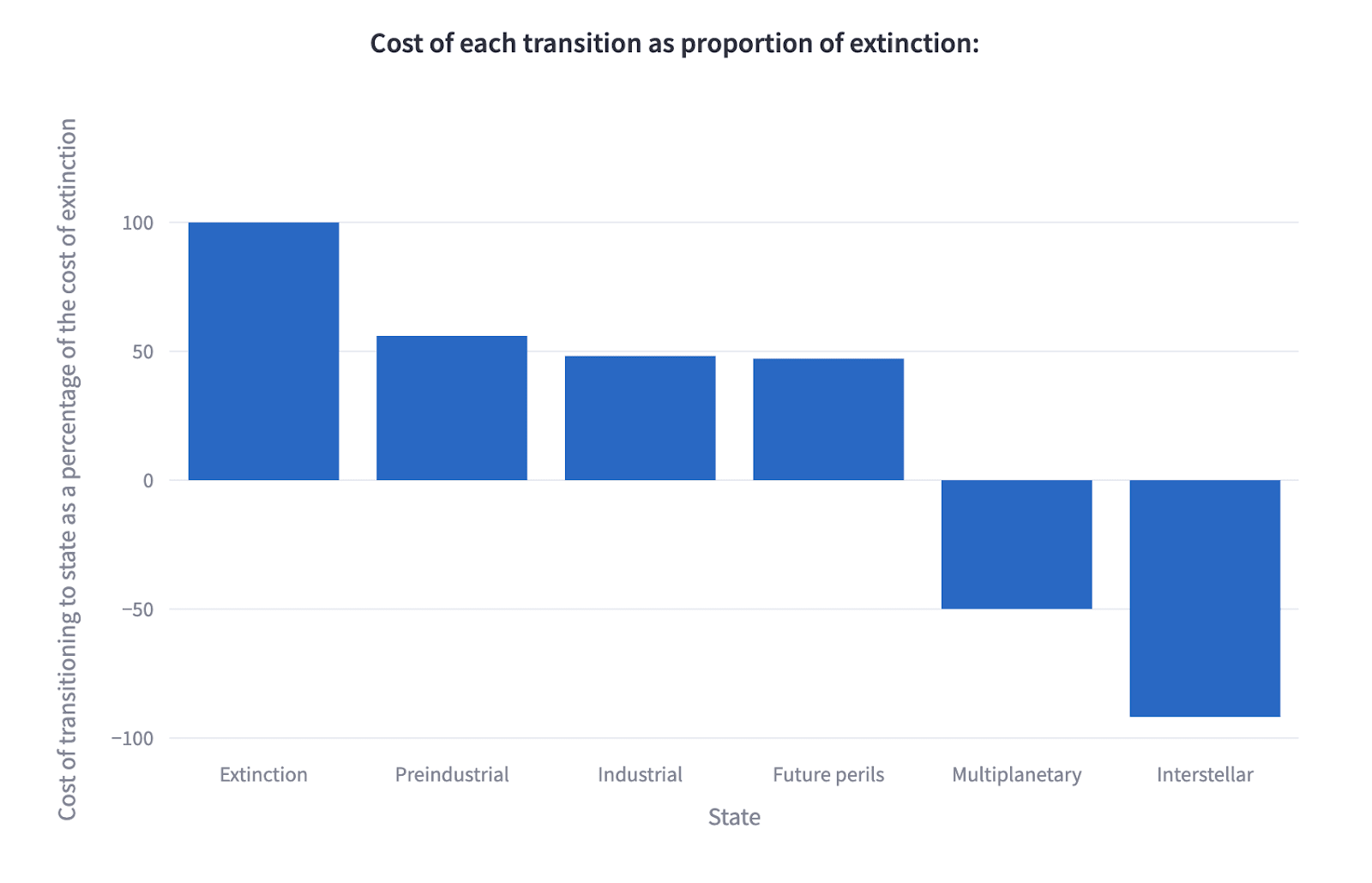

And if we generally define as some event that transitions us from our current time of perils to some other state (for example, 'nuclear war destroys all industry in the next 10 years' or 'humans develop a self-sustaining offworld settlement before 2070'), then the counterfactual expected value of in terms of V is

We can then express the expected cost of non-extinction transitions as a proportion of the cost of extinction.

This allows us to compare work on human extinction with work on other good or bad events. For example, if Event A would cause extinction with probability p/10, and Event B would cause an outcome 0.1x as bad as extinction with probability p, all else being equal, we should be indifferent between them.[10]

Similarly, ‘negative costs’ are equally as good as a positive cost is bad. So if Event C would with probability p cause an outcome with the extinction ‘cost’ -0.1x (note the minus), then we should be indifferent between effecting Event C and preventing Event A.
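The indifference claims above are just expected-value arithmetic. Here is a small sketch, in which the value of p and the function name are arbitrary choices made purely for illustration:

```python
import math


def expected_cost(probability, cost_as_proportion_of_extinction):
    """Expected cost of an event, in units of 'one extinction'."""
    return probability * cost_as_proportion_of_extinction


p = 0.2  # hypothetical probability, chosen only for illustration

event_a = expected_cost(p / 10, 1.0)  # extinction itself, with probability p/10
event_b = expected_cost(p, 0.1)       # outcome 0.1x as bad, with probability p
event_c = expected_cost(p, -0.1)      # outcome with 'cost' -0.1x, with probability p

# A and B have the same expected cost, so we are indifferent between them.
indifferent_between_a_and_b = math.isclose(event_a, event_b)

# Effecting C gains -event_c; preventing A gains event_a. These are equal.
indifferent_between_effecting_c_and_preventing_a = math.isclose(-event_c, event_a)
```

The comparisons use `math.isclose` rather than `==` because `p / 10` and `p * 0.1` can differ in the last floating-point digit.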

If that didn't make too much sense yet, hopefully it will when we see output from the simple calculator...

How to use the simple calculator

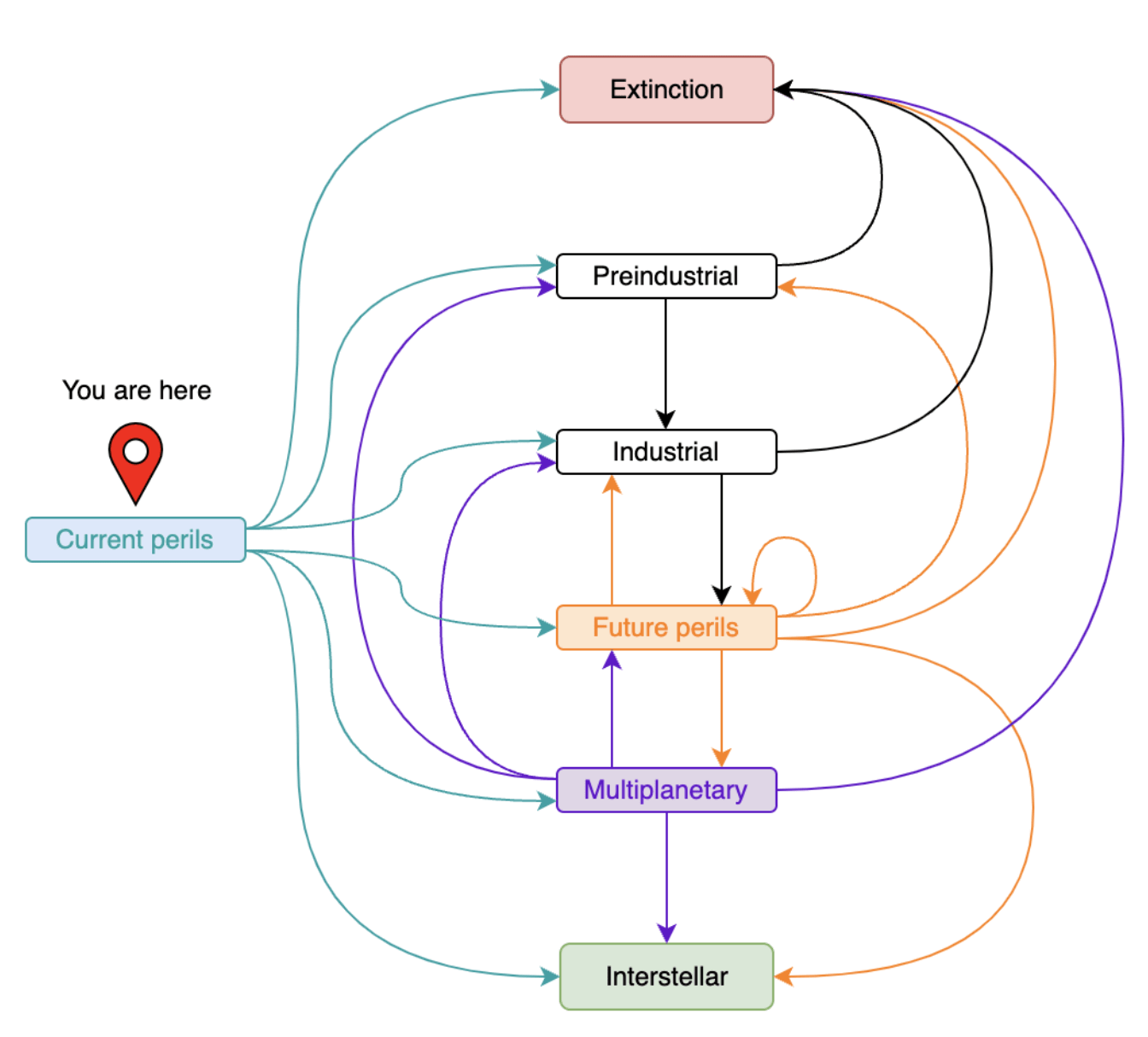

This calculator has an interactive Streamlit interface at https://l-risk-calculator.streamlit.app/. The instructions are all on the page; a quick summary is that your goal is to represent your credence for each transition in the following diagram:

Markov chain underlying the simple calculator - note the state definitions given in the previous post

As you choose the parameters, they’ll be stored in the URL, so you can share it around to people in the community, compare estimates, and see which components of your estimates are most pivotal in determining the final value. At the end you’ll be prompted to consider the effect of some change you could make in the present - this isn’t part of the estimation process per se, but allows you to use the calculator to compare the counterfactual value of a world conditional on some decision you might make.

This calculator is relatively straightforward, but a blunt instrument: the transitional probabilities one might select from it are necessarily a weighted average of each time we pass through the state, and thus are recursive - the transitional probabilities determine the number of times we expect to regress, but the number of times we expect to regress informs the weighted average that determines the transitional probabilities.
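To make the idea of a Markov chain over civilisational states concrete, here is a minimal sketch of how the probability of eventually becoming interstellar can be computed from per-state transition probabilities. The state names, the set of allowed transitions and all the numbers are placeholders I've made up for illustration. They are not the calculator's actual diagram or defaults, and this is not necessarily how the calculator itself does the computation.

```python
# Hypothetical transition probabilities; each row sums to 1.
TRANSITIONS = {
    "preindustrial": {"industrial": 0.9, "extinction": 0.1},
    "industrial": {"perils": 0.9, "preindustrial": 0.05, "extinction": 0.05},
    "perils": {"multiplanetary": 0.5, "industrial": 0.2,
               "preindustrial": 0.1, "extinction": 0.2},
    "multiplanetary": {"interstellar": 0.7, "perils": 0.2, "extinction": 0.1},
}


def interstellar_probabilities(transitions, tolerance=1e-12, max_sweeps=100_000):
    """Probability of eventually reaching 'interstellar' from each state.

    Repeatedly applies P(state) = sum over targets of
    P(state -> target) * P(target) until the values stop changing.
    """
    prob = {state: 0.0 for state in transitions}
    prob["extinction"] = 0.0    # absorbing state: no way back
    prob["interstellar"] = 1.0  # absorbing state: existentially secure
    for _ in range(max_sweeps):
        largest_change = 0.0
        for state, row in transitions.items():
            updated = sum(p * prob[target] for target, p in row.items())
            largest_change = max(largest_change, abs(updated - prob[state]))
            prob[state] = updated
        if largest_change < tolerance:
            break
    return prob


probabilities = interstellar_probabilities(TRANSITIONS)
```

Because each state has a single set of transition probabilities no matter how many times we pass through it, every reboot looks identical here, which is exactly the bluntness described above.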

Given that, I personally view this primarily as a tool to compare strong differences of opinion or to look at the directional robustness of certain choices, where precision might be less important than the broad direction your assumptions lead you in. Since it’s currently much faster to run, it might also be better for sensitivity analysis.

For forecasting, or anything where higher fidelity seems important, the full calculator might be better. That said, the simple calculator is much easier to use, so if the latter seems too daunting, this might be a good place to start.

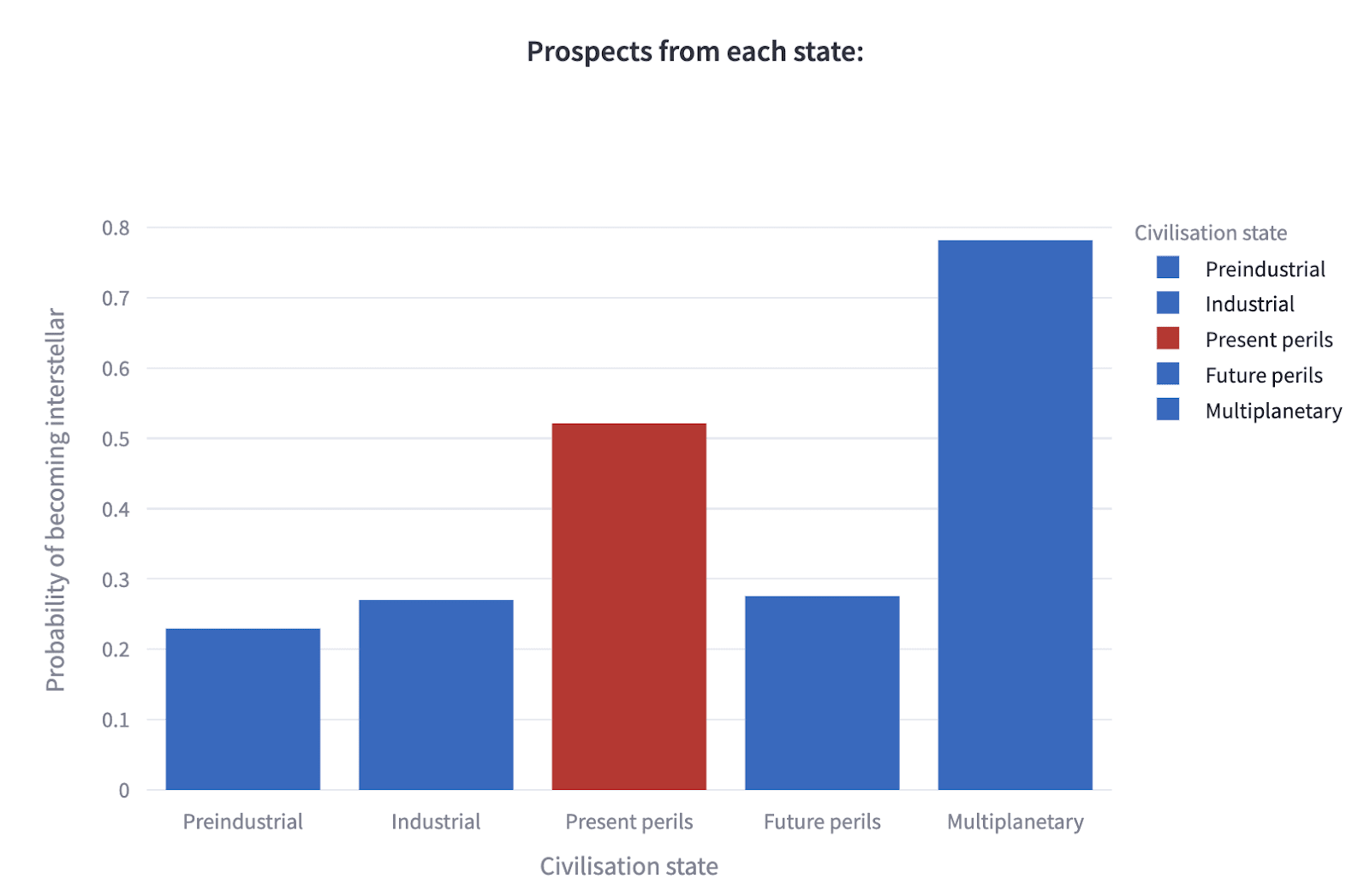

Example of output from the simple calculator

The following table is based on an early estimate of mine (not to be taken too seriously) - you can see the input that generated it on this calculator page. I’ve given a quick explanation of that input in this results document, where you can also see some other people’s estimates, which are very different to mine.

| State | Probability of becoming interstellar | Expected value (and therefore cost of extinction) from this state | Cost of transitioning to state, i.e. difference in expected value from current time of perils | Cost of transitioning to state as proportion of the cost of extinction |
| --- | --- | --- | --- | --- |
| Current time of perils | 0.52 | 0.52V | 0 | 0 |
| Preindustrial state† | 0.23 | 0.23V | 0.29V | 0.56 |
| Industrial state† | 0.27 | 0.27V | 0.25V | 0.48 |
| Future time of perils† | 0.28 | 0.28V | 0.24V | 0.47 |
| Multiplanetary state† | 0.78 | 0.78V | -0.26V | -0.50 |

† Assuming the same parameters that yielded the current time of perils estimate

Table 1: output from the simple calculator
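All the columns in this table follow from the first one. As a rough sketch (the function and variable names are mine, not the calculator's), the derivation looks like this:

```python
P_CURRENT = 0.52  # probability of becoming interstellar from our current time of perils

# Probabilities of becoming interstellar from other states, as shown in Table 1
STATE_PROBABILITIES = {
    "Preindustrial state": 0.23,
    "Industrial state": 0.27,
    "Future time of perils": 0.28,
    "Multiplanetary state": 0.78,
}


def table_row(p_state, p_current=P_CURRENT):
    """Derive the remaining columns (in units of V) from two probabilities."""
    cost = p_current - p_state  # positive = worse than where we are now
    return {
        "expected_value_in_V": p_state,
        "cost_in_V": cost,
        "cost_as_proportion_of_extinction": cost / p_current,
    }


rows = {name: table_row(p) for name, p in STATE_PROBABILITIES.items()}
for name, row in rows.items():
    print(f"{name}: cost {row['cost_in_V']:.2f}V, "
          f"proportion {row['cost_as_proportion_of_extinction']:.2f}")
```

Since the probabilities in the table are already rounded to two decimal places, recomputing from them can differ from the calculator's own output in the last digit.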

Here are the same values given by the bar charts generated by the simple calculator:

If you give the simple calculator a go and want to share your results please add a link to them (and any context you want to include) to the same document. Feel free to add any disclaimers, and don’t feel like you need to have done in-depth research - it would be really interesting to collect some wisdom of the crowds on this subject.

How to use the full calculator

You can run the full calculator by cloning this repo and following the instructions in the readme. If I’ve already lost you with that sentence, but you feel like you understand the maths below, feel free to DM me and I’ll help you out (likewise if you just have problems getting it to work).

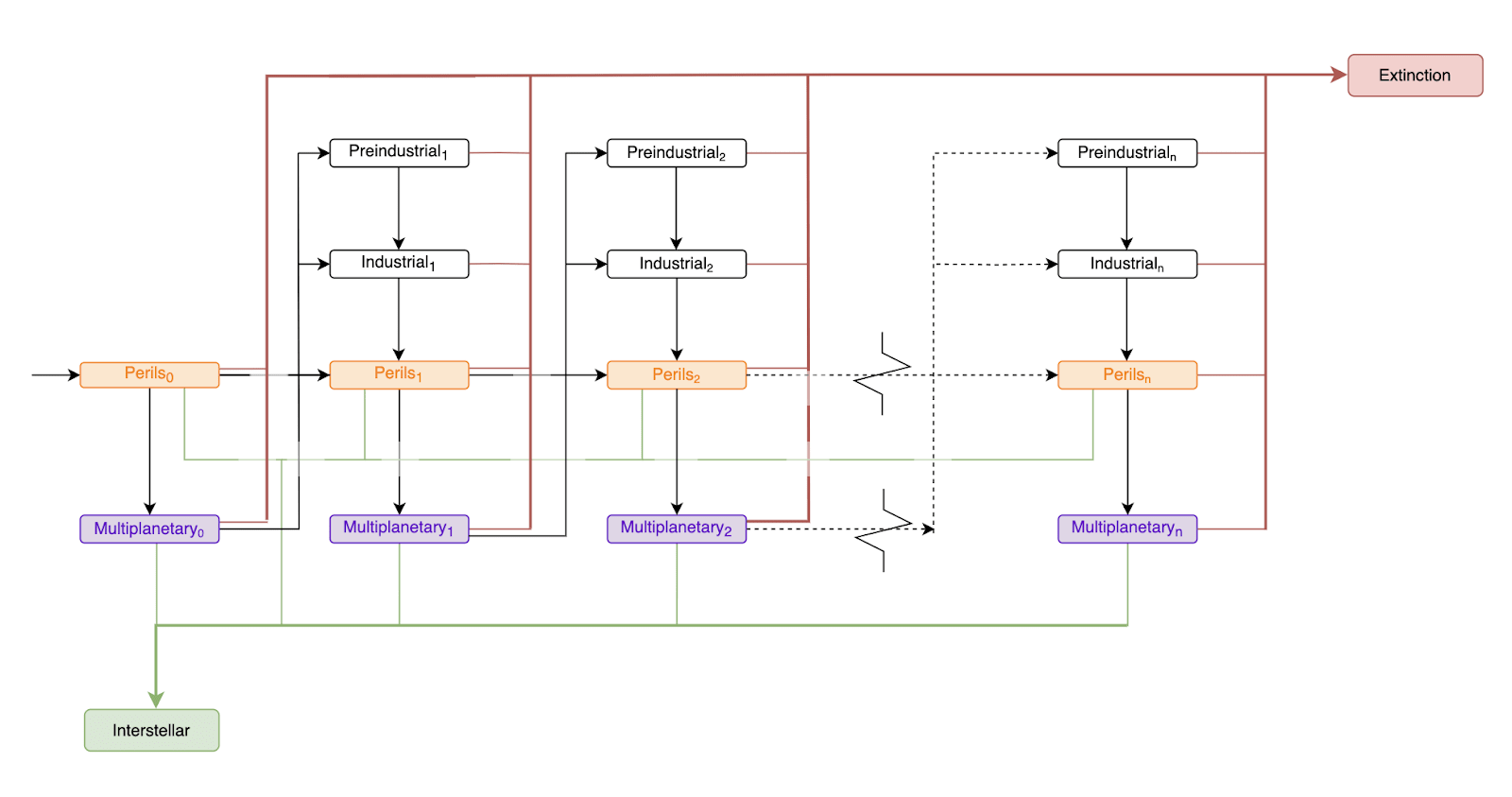

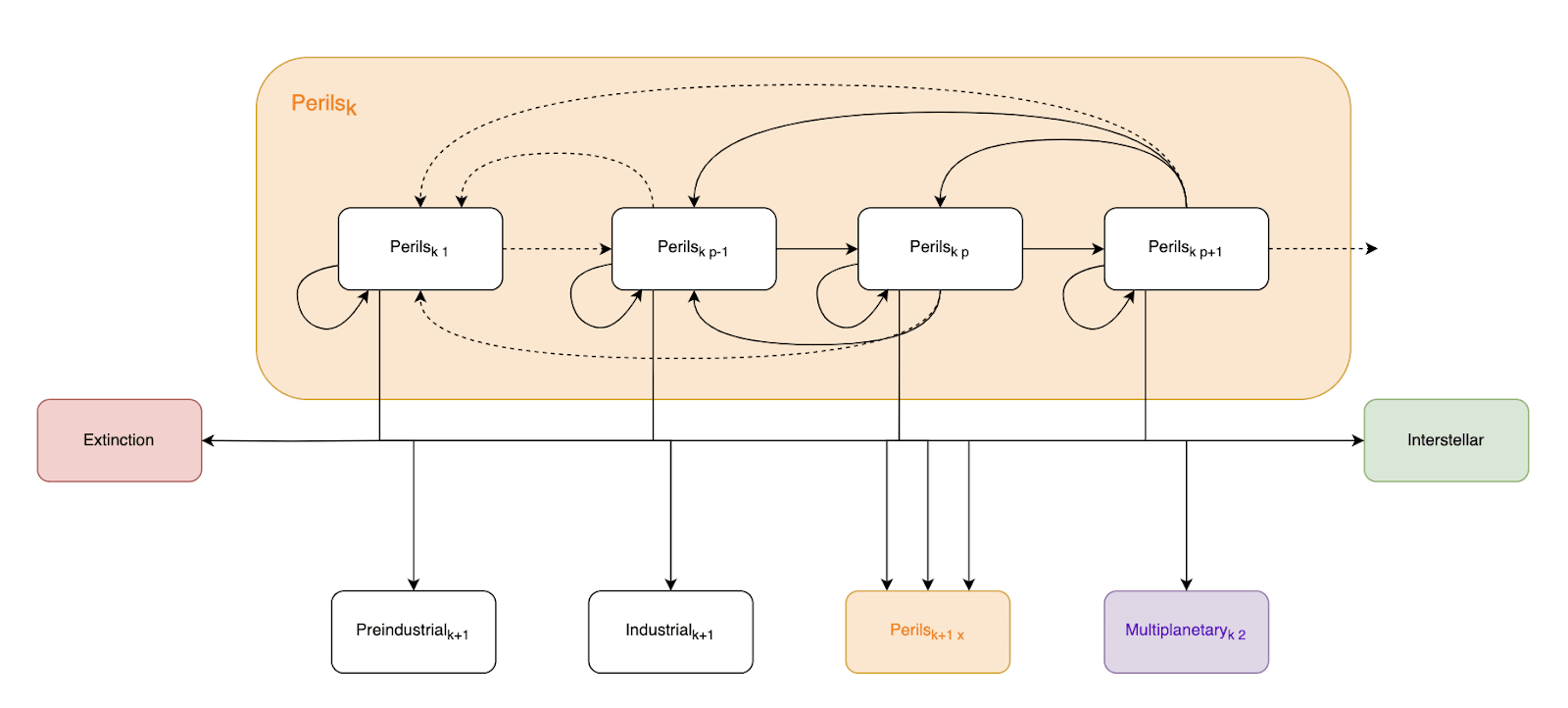

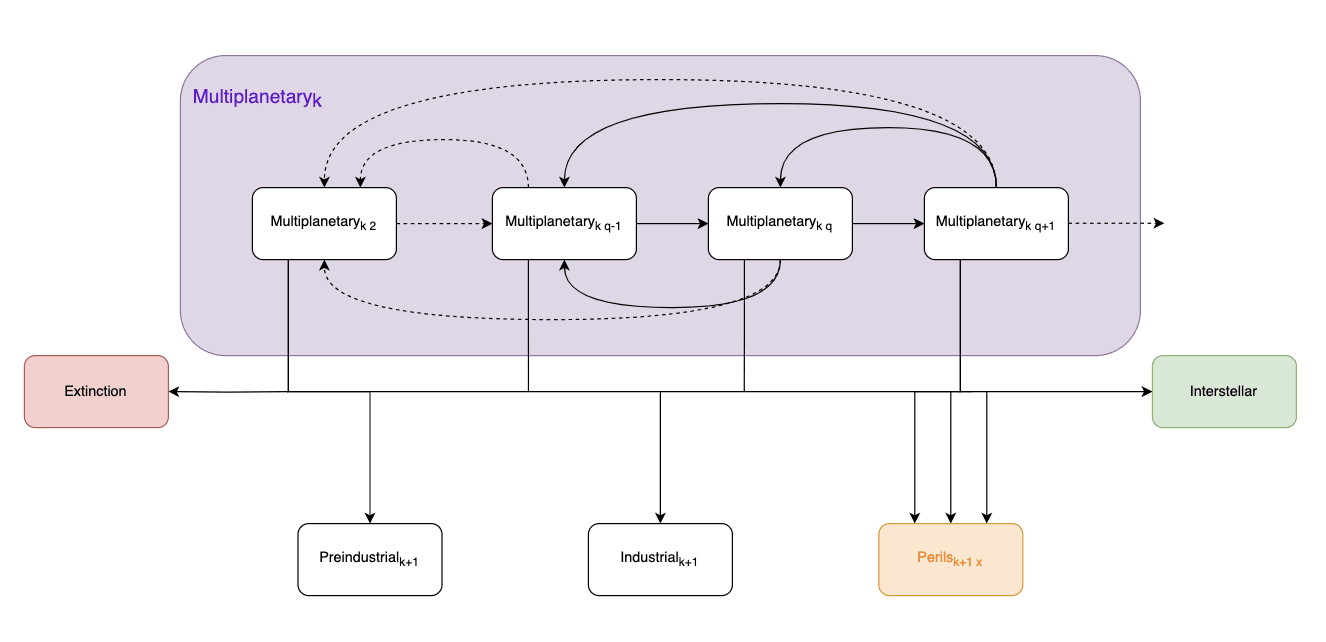

Here your goal is to fill out the transitional probabilities in the following three diagrams, using (to the extent you want) functions taking in the numbers in the numbered states (i.e. all states except extinction and interstellar):

Top level transitions[11]

Transitions within a time of perils

Transitions within a multiplanetary state

Any implementation obviously has to make strong assumptions about what to do with these parameters. These assumptions take the form of various functions that output some transitional probability per possible transition per parameterised state.[12]

These functions can be replaced to taste within the code (including with constants, as some are by default).[13] I’ll give formal definitions of them in a moment, but first it’s worth explaining the intuitions they capture:

- That the first reboot determines a number of variables for all future civilisations, since it will look very different to our own history but subsequent civilisations will look more analogous to it: technology lying around in some state of decay to learn from, residual lessons learned from the catastrophe, fossil fuels all but gone, high atmospheric CO2 concentrations, other resources depleted, etc. I imagine subsequent civilisation will magnify these effects by some amount.[14]

- That within a time of perils (see ‘Within a time of perils’ diagram above), all the transitions, good and bad, are most likely to be enabled by technology. To represent the advance of such technologies, the primary component of the transitional probability is an s-curve or diminishing returns curve representing probability-per-year (we can add some constant background risk to the per-year total of the regressive transitions).

- That at any given level of technology in a time of perils, there’s some probability which we can treat as constant of regressing to an earlier but still time-of-perilsy state. Such a regression could revert us to the technological equivalent of any earlier year, but is biased towards smaller regressions (i.e. we’re more likely to have a pandemic that sets us back 2 years than one that sets us back 20). I’m unsure how to model this bias simply, and it can make profound differences to the outcomes, so I’ve included three algorithms selectable as parameters that give some sort of upper and lower bounds to the bias:

- ‘exponential’, which gives an exponentially decaying chance of regressing increasing numbers of years and seems implausibly optimistic (regressing more than a few years through a time of perils would be vastly less likely than regressing to any earlier technological state).

- ‘linear’, which gives us a linearly decreasing chance[15] and seems implausibly pessimistic. On this algorithm, we should expect to see many multi-year regressions in our actual history, yet judging by global GDP, they’ve almost all been 0-2 years,[16] where 0 is approximately no annual change. Global GDP isn’t ‘technology’, so one could use a different basis for graphing this, but I don't know of a better simple quantitative proxy (and the exact nature of our ability to build enough nuclear warheads/rockets etc to transition between states is probably some common factor influencing both ‘GDP’ and ‘technology’).

- ‘mean’ is the mean of the above two algorithms.[17]

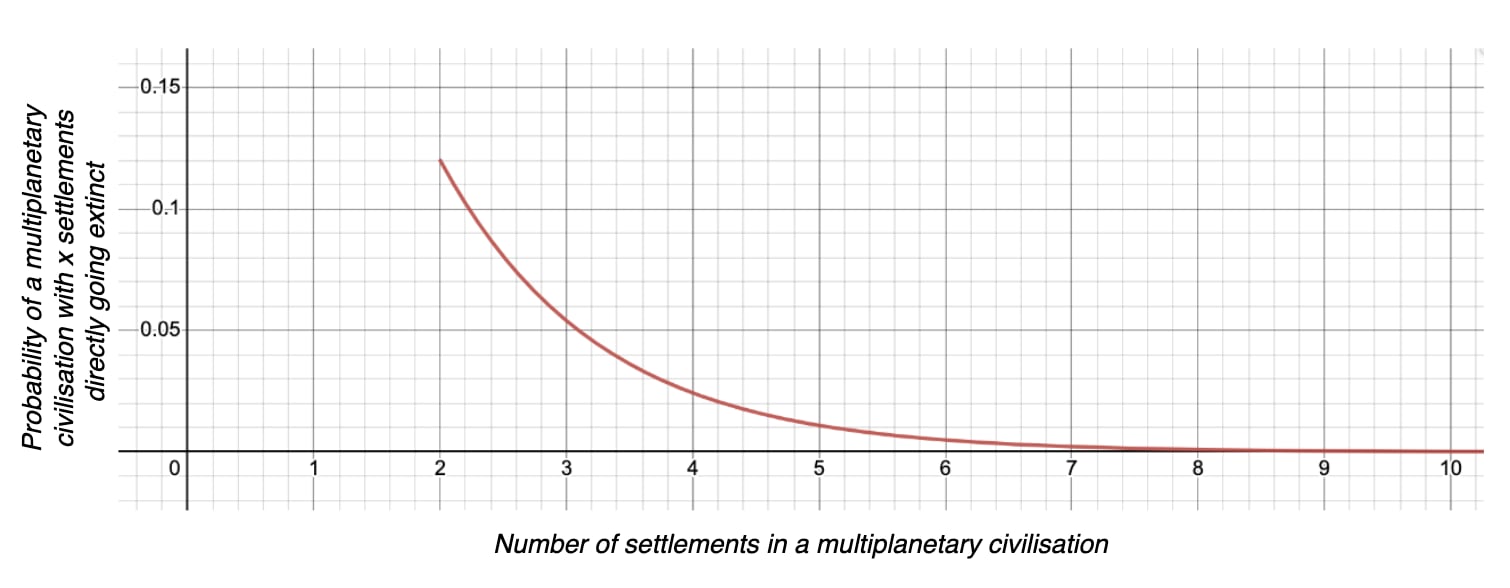

- That within a multiplanetary state, there is an exponential decay in regressive risks for each settlement added, and an s-curve representing an increasing chance of sending out interstellar colonisation missions as the number of self-sustaining settlements increases.[18] The S-curve function is used mainly for simplicity (it’s the same function as the S-curves representing transitions from the time of perils), but we can justify the high probability it implies towards the top end of the curve by the fact that, even liberally defining ‘settlements’, there’s only enough rocky mass in our solar system to have perhaps a couple of dozen really self-sustaining settlements - after that, we’ll have to look further afield.[19]

These functions need further parameters to determine their shape. For the purposes of making the calculator run straightforwardly, I’ve set a number of default parameters, which I’ve adapted as much as possible from relevant existing sources - Metaculus forecasts, global GDP history, Michael Aird’s database of existential risk estimates and other expert estimates of key criteria. There are extensive comments explaining the choice of defaults and offering some alternatives in this doc - feel free to add to the comments there.

I’ll discuss parameter choice more in the next post - but the point of the calculator is for you to replace some or all of the parameters with your own. These parameters are set in the params.yml file - so that’s what you’ll need to edit to input your own opinions.

Choosing your parameters using Desmos

The full calculator has around 65 required parameters and some optional extras that allow adjusting our current time of perils only[20], all of which can feel overwhelming. But the vast majority of these determine the shape of one of four types of graph of transitional probabilities; so you don’t need to ‘understand’ all the parameters (I don’t) to massage the graphs to a shape that looks empirically correct to you.

Given this, I strongly recommend forming your opinions with reference to the Desmos graphs linked in the following subsections. Desmos doesn’t allow named variables, so under each image below I’ve explained the mapping of the Desmos variables to those in the corresponding section of the params.yml file. So once the graphs are in a shape you think looks empirically/intuitively/historically apt, you can paste the relevant values across.

Note that for all the graphs, the x-value isn't set as a parameter anywhere, but rather the code iterates over all integer x-values up to some practical maximum that allows the program to terminate in a reasonable amount of time.

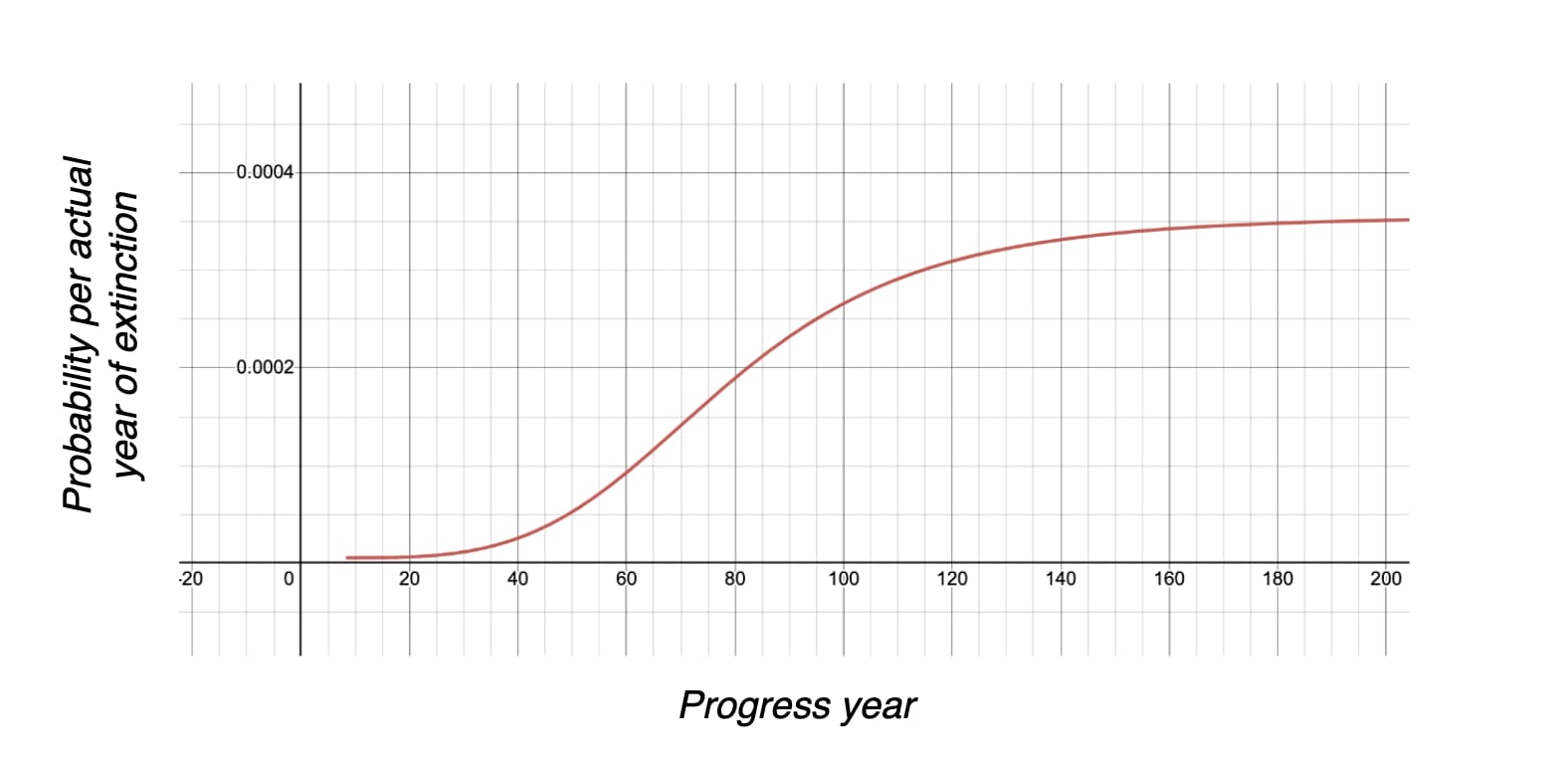

Milestone contractions and expansions[21] from post-industrial states

Example graph of the annual probability of directly going extinct in progress year x of the current time of perils (not showable in 2 dimensions: a per-civilisation multiplier on the x-scaling). An adjustable version is on Desmos, on which

- x=progress year

- a=‘y_scale’: the maximum per-year probability to which the graph asymptotes;

- b=‘x_scale’: how drawn out the curve is

- c=‘x_translation’: how many progress years after the start of the time of perils the anthropogenic risk rises above 0

- d=‘sharpness’: an abstract parameter determining the curve’s ‘S-ness’

Finally, we add the term m/n, where

- m=‘per_civilisation_background_risk_numerator’, and

- n=‘base_background_risk_denominator’: between them these parameters set a constant-per-civilisation value (if you want it to be constant across all civilisations, set the numerator to 1)
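For readers who prefer code to Desmos, here is a rough stand-in for this kind of curve. To be clear, the functional form below (a Weibull-style curve) is my assumption for illustration, not necessarily the function used in the calculator or on Desmos, and the example values are arbitrary. The parameter names follow the list above.

```python
import math


def annual_transition_probability(x, y_scale, x_scale, x_translation, sharpness,
                                  background_numerator=0.0,
                                  background_denominator=1.0):
    """Assumed stand-in for the per-year transition probability in progress year x.

    sharpness > 1 gives an S-shaped curve; sharpness <= 1 gives a
    diminishing-returns curve. The background term is the m/n described above.
    """
    background = background_numerator / background_denominator
    if x <= x_translation:
        # Technology-driven risk hasn't risen above 0 yet
        return background
    progress = (x - x_translation) / x_scale
    technological = y_scale * (1 - math.exp(-(progress ** sharpness)))
    return min(1.0, technological + background)


# Arbitrary illustrative values, not the calculator's defaults
curve = [
    annual_transition_probability(x, y_scale=0.002, x_scale=60, x_translation=10,
                                  sharpness=2.5, background_numerator=1,
                                  background_denominator=100_000)
    for x in range(0, 301)
]
```

Plotting `curve` against the progress year should give a shape you can compare against the Desmos graph, adjusting the four shape parameters until it matches your expectations.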

The same function, with different default values, describes annual probability of transitioning from our current time of perils to a preindustrial, industrial, multiplanetary†, and interstellar (existentially secure) state - though I gave the latter transition 0 probability by default so it has no default-values graph.

The same function is also used to represent the probability of becoming interstellar from a multiplanetary state†, though in that graph x=number of self-sustaining settlements, and x_translation is always 2 (since the definition of a multiplanetary state is 2 or more self-sustaining settlements).

† For these transitions we assume no ‘background risk’, so no m or n values.

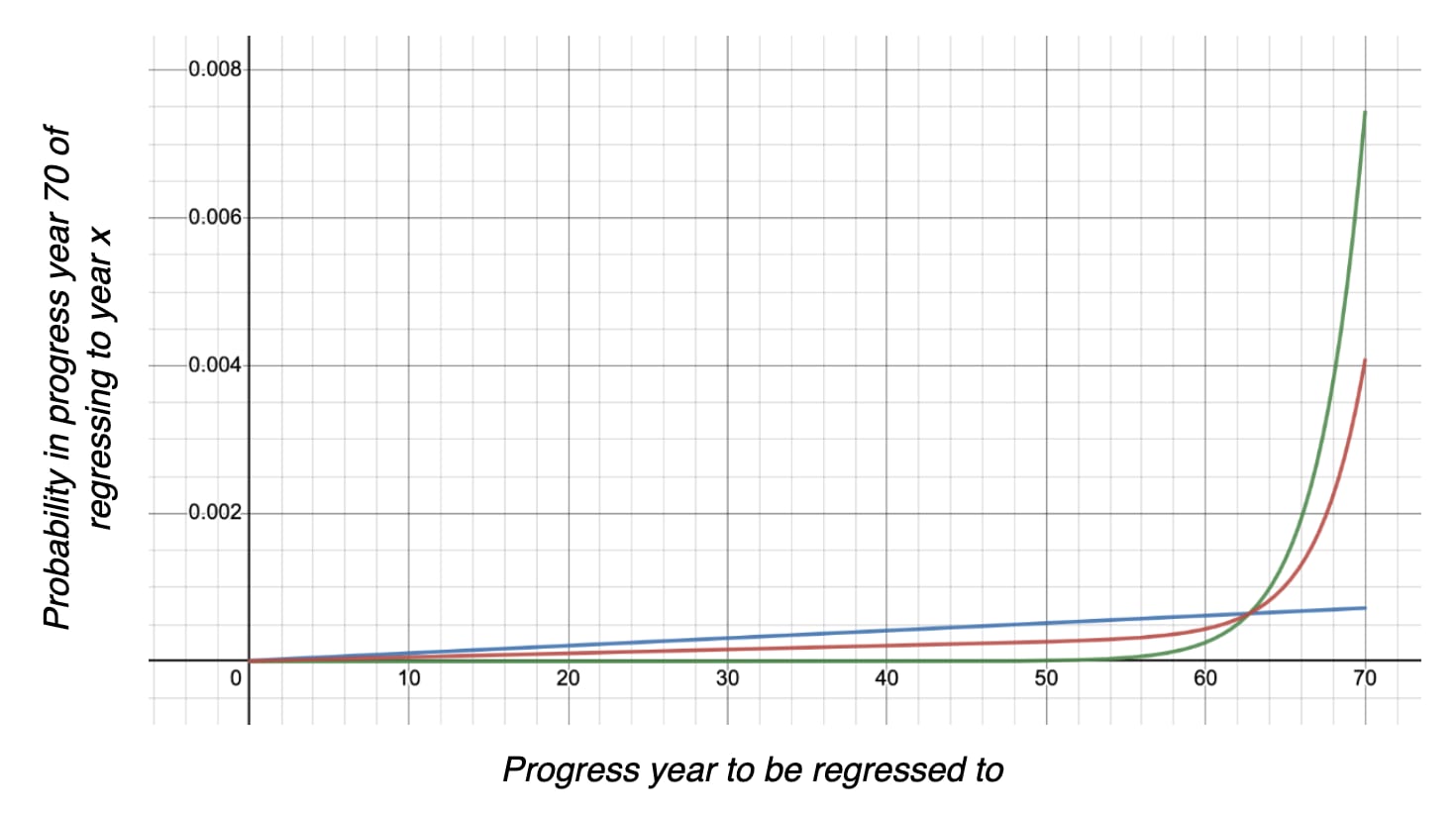

Regressing within a post-industrial state

Example graph of the probability of transitioning to progress year x from our current progress year (assumed to be p=70 in this diagram). The green line represents the ‘exponential’ algorithm described above, the blue line represents the ‘linear’ algorithm, and the red line is the ‘mean’ algorithm. An adjustable version is on Desmos, on which

- x=the progress year to which we might transition

- p=our current progress year (like x, the code iterates through these)

- a=‘any_regression’: total annual probability of an intraperils regression of any size,

- n=‘regression size skew’: determines the level of bias towards small regressions
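The three algorithms can be sketched roughly as follows. The exact weightings here are my own simplified stand-ins, chosen only to show the qualitative difference between the algorithms, so they may not match the Desmos graph or the calculator's code. In all three cases the probabilities over all earlier progress years sum to ‘any_regression’.

```python
def regression_distribution(current_year, any_regression, skew, algorithm="mean"):
    """Return {target_year: probability} of regressing to each earlier progress year.

    Assumed weightings (illustrative only):
      exponential: weight shrinks by a factor of `skew` per extra year lost
      linear:      weight falls in equal steps as the regression gets bigger
      mean:        the average of the two distributions
    """
    targets = range(current_year)  # progress years 0 .. current_year - 1

    def normalise(weights):
        total = sum(weights.values())
        return {x: any_regression * w / total for x, w in weights.items()}

    # A regression to year x loses (current_year - x) years; the smallest loss is 1.
    exponential = normalise({x: skew ** -(current_year - x - 1) for x in targets})
    linear = normalise({x: x + 1 for x in targets})

    if algorithm == "exponential":
        return exponential
    if algorithm == "linear":
        return linear
    if algorithm == "mean":
        return {x: (exponential[x] + linear[x]) / 2 for x in targets}
    raise ValueError(f"unknown algorithm: {algorithm}")


# Arbitrary illustrative values: progress year 70, 3% annual chance of any regression
exponential = regression_distribution(70, 0.03, 1.4, "exponential")
linear = regression_distribution(70, 0.03, 1.4, "linear")
mean = regression_distribution(70, 0.03, 1.4, "mean")
```

With these illustrative values, the exponential version puts far more weight on a one-year setback than the linear version does, and far less on a twenty-year one.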

Milestone regressions and expansions from a multiplanetary state

Example graph of the probability of a civilisation in a multiplanetary state with x settlements (defined as x>=2) directly going extinct. See adjustable version, on which

- x=number of self-sustaining settlements

- a=‘two_planet_risk’: the maximal risk, given when there are two planets

- b=‘decay_rate’: the proportion by which risk for an n-planet civilisation decreases if it becomes an (n+1)-planet civilisation

- c=‘min_risk’: the risk to which this value asymptotes
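As a sketch of how these three parameters might combine, the function below assumes that it's the excess risk above ‘min_risk’ that shrinks by ‘decay_rate’ with each added settlement. That form is my assumption based on the parameter descriptions, and the example values are arbitrary rather than the calculator's defaults.

```python
def multiplanetary_extinction_risk(settlements, two_planet_risk, decay_rate, min_risk):
    """Assumed form of the extinction risk for a civilisation with x settlements.

    Starts at two_planet_risk when there are 2 settlements and decays
    towards min_risk as settlements are added.
    """
    if settlements < 2:
        raise ValueError("a multiplanetary state has at least 2 settlements")
    excess = two_planet_risk - min_risk
    return min_risk + excess * (1 - decay_rate) ** (settlements - 2)


# Arbitrary illustrative values, not the calculator's defaults
risks = [
    multiplanetary_extinction_risk(x, two_planet_risk=0.1, decay_rate=0.4, min_risk=0.001)
    for x in range(2, 25)
]
```

A negative ‘decay_rate’ makes the risk grow with each settlement rather than shrink.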

For simplicity I treat the probability of transitioning to preindustrial or industrial states from a multiplanetary state as 0 (it seems like an extremely precise level of destruction), so I don’t have separate example graphs for them. You can play with the above link separately with each of them in mind.

I use a combination of the above two functions (exclusively with the exponential variant of the first function) as a basis to calculate the probability of regressing from x to q settlements within a multiplanetary state. This reverses the emphasis from the intra-perils regressions graph above, which displays the probability of regressing to progress year x. For consistency with that graph, you might want to view it as the probability of regressing from q to x settlements, though I think that’s a less intuitive visualisation for an intra-multiplanetary regression. On that graph we optionally multiply by a further function that allows the risk of any regression to change as the number of settlements increases. This allows the view that over long time periods humans will become less - or more - prone to blowing ourselves up, or just develop better or worse defensive/expansive technologies than we do weapons. The parameters for that function:

- a=‘two_planet_risk’: the base probability of any regression in this state, given for a civilisation with two planets (since that’s the defined minimum number to be in this state)

- b=‘decay_rate’: how much does the probability of any regression drop per planet (negative rates express a greater tendency to blow ourselves up over time. For small negative rates, we can still reach an uncomfortable existential security through ‘backup planets’ still being settled faster than our tendency to blow them up. For large negative rates, RIP longtermism)

- c=‘min_risk’: the lowest the chance of regressing 1+ planets can ever go

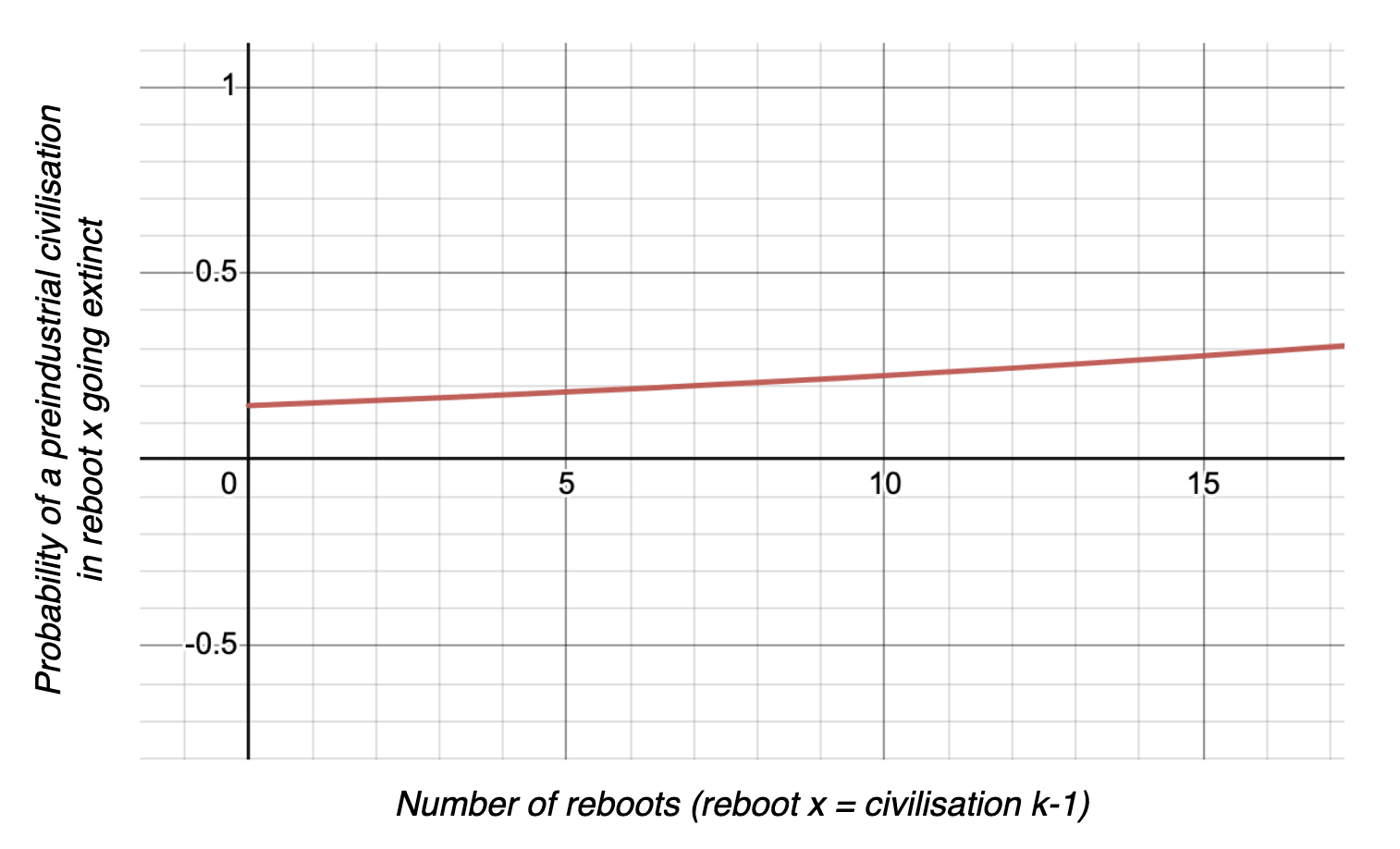

Going extinct from a pre-perils state

Example graph of the probability of directly going extinct from a preindustrial state in the xth reboot (assuming the only two possibilities are that or advancing to an industrial state). See adjustable version, on which

- x=number of reboots

- a=‘per_civilisation_annual_extinction_probability_multiplier’: the amount we multiply annual extinction probability for each reboot

- b=‘annual_extinction_probability_denominator’: such that $a^x / b$ is our annual extinction probability in reboot x

- c=‘base_expected_time_in_years’: expected number of years to recover industry

- d=‘stretch_per_reboot’: a per-reboot multiplier on expected number of years to recover industry.

Almost the same function is used to describe the probability of going extinct from an industrial state, but where c and d refer to reaching a time of perils, and with a further parameter g describing the reduced annual risk of extinction due to more advanced (and not-yet-civilisation-threatening) technology.

Choosing non-graphical parameters

The two required parameters that aren’t captured on the above graphs are

- ‘current_progress_year’ - given that there have been 79 actual years since 1945, do you assume we’re in progress year 79 now? Or do you assume that (for example) some years of stagnant or slightly negative economic growth mean our technology has fallen some number of years behind the ‘ideal’ trajectory?

- ‘stretch_per_reboot’ for every time of perils graph. There aren’t enough dimensions to show this on the graphs above: how much of a stretch or compression should there be to the x-axis of any given graph per reboot?

Finally, there’s a set of optional ‘current_perils_<standard parameter>’ (e.g. ‘current_perils_x_stretch’) parameters for every time of perils transition. These allow you to imagine changes to the current era which won’t be reflected in the graphs of any future times of perils. These are useful to investigate counterfactuals - they could be used to represent e.g. you working on a political or social change which wouldn’t be expected to persist through a civilisational collapse, or to express a belief that we’ve somehow done exceptionally well or exceptionally badly this time around.

Examples of output from the full calculator

Examples 1 & 2: my pessimistic and optimistic scenarios

The output of the full calculator goes into a bit more detail than the simple calculator. We can look at the probability of becoming interstellar from any specific civilisation’s time of perils, preindustrial state etc. In the table below, we look at the prospects from the first reboot, which should give us a better insight than the prospects from the average reboot that the simple calculator provides.

The table also compares outputs based on comparatively pessimistic and comparatively optimistic scenarios I envisage. Parameters for the optimistic base estimate are on row 2 of this spreadsheet, and parameters for the pessimistic view are on row 2 of this one. In the more pessimistic case, I'm loosely imagining the probability of regressing to an industrial state is relatively high, due to some kind of fragile world (for example, depleting resources within our current era making energy returns on investment susceptible to a single sufficiently large global shock[22]). In the more optimistic case, I’m loosely imagining something more like the view longtermists anecdotally seem to hold, that rebuilding would be comparatively ‘easy’, at least the first time.

For what it’s worth, the pessimistic estimate is closer to my best guess, and uses inputs that at the time of writing are given as defaults in the calculator; this document contains my reasoning for these default parameters (feel free to leave comments and criticism on it). But both sets of values are rough estimates for which I don’t claim any specialist insight, so please don’t overindex on them:

| State (P = pessimistic scenario, O = optimistic scenario) | Probability of becoming interstellar (P) | Probability of becoming interstellar (O) | Expected value (and therefore cost of extinction) from this state (P) | Expected value (and therefore cost of extinction) from this state (O) | Cost of transitioning to state, i.e. difference in expected value from current time of perils (P) | Cost of transitioning to state, i.e. difference in expected value from current time of perils (O) | Cost of transitioning to state as proportion of the cost of extinction (P) | Cost of transitioning to state as proportion of the cost of extinction (O) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Current time of perils | 0.38 | 0.70 | 0.38V | 0.70V | 0 | 0 | 0 | 0 |
| Preindustrial state in first reboot† | 0.22 | 0.59 | 0.22V | 0.59V | 0.17V | 0.12V | 0.44 | 0.17 |
| Industrial state in first reboot† | 0.25 | 0.69 | 0.25V | 0.69V | 0.14V | 0.01V | 0.35 | 0.02 |
| Multiplanetary state in our current civilisation† | 0.57 | 0.72 | 0.57V | 0.72V | -0.19V | -0.02V | -0.50 | -0.03 |

† Assuming otherwise the same parameters that yielded the current time of perils estimates

Table 2: Direct output from the full calculator (pessimistic and optimistic scenarios)

In general, the more pessimistic one is about our prospects of either recovery from civilisational collapse or of successfully navigating through a time of perils, the higher the significance of relatively minor advancements and regressions, both in terms of absolute probability and (especially) relative to the cost of extinction. To put it another way, the more likely we are to eventually go extinct from regressed states, the more regressing to them approximates our eventual extinction - especially from a very long-term perspective.

Because of the more detailed input to the full calculator, we can simulate more specific counterfactual events that take place within our current time of perils. With a bit of creativity, we can simulate various such events allowing us to think both of their value in terms of V and as a proportion of the cost of a counterfactual extinction event. You can see how I generated the results on rows 3–6 of the same two spreadsheets as used for the previous table - basically I started with the same params as the pessimistic/optimistic scenario above, with some slight tweak to represent the counterfactual difference.

These are very naive estimates of ways to think of the events in question, meant primarily as simple examples of what you could do:

| | Cost of event (i.e. difference in expected value from current time of perils) | | Cost of event as proportion of the cost of extinction | |

| P(essimistic) or O(ptimistic) scenario | P | O | P | O |

| Non-nuclear great power conflict (based on opportunity cost = counterfactual technological regression narrative)† | ||||

| Non-nuclear great power conflict (based on narrative of differentially accelerating progress of harmful technologies)† | ||||

| Counterfactually having averted the Covid pandemic† | ||||

| Counterfactually saving one person’s life† | ||||

† Assuming the same parameters that yielded the current time of perils estimate

Table 3: Derived output from the full calculator (pessimistic and optimistic scenarios)

Note the two different example ways of representing non-nuclear great power conflict, though I’m unsure whether these are best thought of as alternatives or as additive outcomes.

Consistent with the above, the significance of still smaller events tends to be higher the more pessimistic the base estimate (though they can be quite sensitive to the parametric source of that pessimism).

Example 3: David Denkenberger’s assessment

David Denkenberger was kind enough to give a set of estimates of his own when reviewing this post (Denkenberger is the co-founder of ALLFED, though the opinions here are his own), which formed a middlingly optimistic alternative scenario (his parameters are on row 2 of this sheet):

| | Probability of becoming interstellar | Expected value (and therefore cost of extinction) from this state | Cost of transitioning to state, i.e. difference in expected value from current time of perils | Cost of transitioning to state as proportion of the cost of extinction |

| Current time of perils | 0.62 | 0.62V | 0 | 0 |

| Preindustrial state in first reboot† | 0.45 | 0.45V | 0.17V | 0.27 |

| Industrial state in first reboot† | 0.57 | 0.57V | 0.06V | 0.09 |

| Multiplanetary state in our current civilisation† | 0.73 | 0.73V | -0.1V | -0.16 |

† Assuming the same parameters that yielded the current time of perils estimates

Table 4: Direct output from the full calculator (David Denkenberger's scenario)

We can also compare what his estimate would imply about the significance of the same naively represented intra-perils counterfactual events:

| | Cost of event (i.e. difference in expected value from base estimate) | Cost of event as proportion of the cost of extinction |

| Non-nuclear great power conflict (based on opportunity cost = counterfactual technological regression narrative)† | ||||

| Non-nuclear great power conflict (based on narrative of differentially accelerating progress of harmful technologies)† | ||||

| Counterfactually having averted the Covid pandemic† | ||||

| Counterfactually saving one person’s life† | ||||

† Assuming the same parameters that yielded the current time of perils estimates

Table 5: Derived output from the full calculator (David Denkenberger's scenario)

I’ve been uploading results of a series of test runs to this Google sheet, though they might change substantially as I develop my views - if you do use the full calculator, please consider adding your own results to the sheet, no matter how ill-informed you feel. You can always add caveats in the notes column.

Limitations/development roadmap

I have a few concerns about the calculators, some of which point to ways I would like to see them improved if I developed them further. The simple calculator is largely finished, though it could doubtless do with UI improvements - most of the limitations listed below apply primarily to the full calculator.

1) Model uncertainty

As an implementation of the model in the previous post, most of that model’s limitations apply. Some of these are partially addressable in the user’s parameter choices (e.g. run separate simulations with more vs less optimistic parameters for modern eras to represent the possibilities of civilisations taking short vs long amounts of time to regain technology), others would need substantial adjustments - or a whole different model - to incorporate.

There’s one I would particularly like to explicitly deal with…

2) AGI

The future trajectory of AI development seems unique among threats. Nuclear weapons, biotechnology and other advanced weaponry seem likely to pose an ongoing threat to civilisation, albeit one that might diminish exponentially as our civilisation expands. Also they could cause our civilisation to contract multiple times in much the same way each time.

By contrast, AGI seems likely to quickly lead to one of three outcomes: extinction, existential security, or business-as-usual with a new powerful albeit weaponisable tool. The first two aren’t ongoing probabilities from having the technology - they’re something that will presumably happen very quickly or not at all once we develop it (or, if creating a friendly AGI doesn’t mean the risk of an unfriendly one killing us reduces to near-0, either there is some similar ‘secure AGI’ that does, or eventually an AGI will inevitably kill us, meaning we don’t have any chance of a long-term future).[23]

To work this intuition into the model, I would like to introduce a separate class of states partitioning the whole of civilisation into pre- and post- development of AGI states. After AGI has been developed, subject to it not making us extinct, the risk of extinction from AGI during all future times of perils and multiplanetary states will be much lower. Also, subject to it not making us existentially secure, the probability of going directly from a time of perils to an ‘interstellar’ state (which only makes sense via gaining existential security from a benevolent AGI) will be approximately 0.

For the first release I've omitted AI as a separate consideration. To incorporate it in the meantime, you might

- Add a combination of high mid-late-perils extinction risk for current perils only (using the optional parameter), where we might expect to face the majority of AGI risk (since on most estimates of the people most concerned about AGI, we’re likely to develop it if nothing else terribly bad happens in the next few decades if not sooner) and/or

- Increase your base estimate for extinction risk at least at the start of a multiplanetary state and/or

- Try running the simulation two or more times with the same parameters except for an increase to extinction risk in the mid-late period of all times of perils and early multiplanetary states in one sim, and attribute to AGI whatever you think seems like an appropriate proportion of the difference in results and/or

- If feeling slightly more ambitious, add a boolean to the ‘parameterised_decaying_transition_probability’ function that checks if k==0 (i.e. if we’re looking at the multiplanetary state of our current civilisation) and upweights extinction risk at the start of that state, for the same reason as in 1.
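The last of these options might look something like the sketch below. It doesn't reproduce the real ‘parameterised_decaying_transition_probability’ function, whose signature I'm not assuming here; instead it wraps an arbitrary base risk. Every name and default value in it (agi_upweighted_extinction_risk, agi_multiplier, early_planet_cutoff) is a hypothetical placeholder.

```python
def agi_upweighted_extinction_risk(
    base_risk: float,
    k: int,
    planets: int,
    agi_multiplier: float = 3.0,   # hypothetical upweighting factor
    early_planet_cutoff: int = 3,  # hypothetical definition of the 'start' of the state
) -> float:
    """Upweight extinction risk early in the multiplanetary state of our current civilisation.

    base_risk: the extinction risk the unmodified function would have returned.
    k:         civilisation index, where 0 means our current civilisation.
    planets:   number of settled planets in the multiplanetary state.
    """
    in_current_civilisation = (k == 0)  # the boolean check described in the text
    if in_current_civilisation and planets <= early_planet_cutoff:
        # Cap at 1 so the result is still a valid probability
        return min(1.0, base_risk * agi_multiplier)
    return base_risk
```

Later civilisations (k > 0) and later stages of the multiplanetary state pass through unchanged, so the rest of a simulation would behave as before.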

3) Usability

The functions governing transitional probability are fairly complex, and I wonder whether they could be simpler without losing much of the control over the graph’s shape, perhaps to a decreasing derivative formula, or logistic function with artificially limited domain (though these would lose the intuitive property the current one has of letting you explicitly set maximum transitional risk per year), or to a piecewise linear function.
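To make the piecewise linear option concrete, here is a minimal sketch. The function name, the breakpoint format and the example numbers are all hypothetical and not taken from the calculator's code. Unlike the logistic alternative, this form would keep the property of stating the maximum annual risk explicitly, since that is just the largest probability among the breakpoints.

```python
def piecewise_linear_probability(
    year: float, breakpoints: list[tuple[float, float]]
) -> float:
    """Linearly interpolate an annual transition probability between breakpoints.

    breakpoints: (year, probability) pairs with strictly increasing years.
    Years outside the covered range are clamped to the nearest end point.
    """
    first_year, first_p = breakpoints[0]
    if year <= first_year:
        return first_p
    # Walk through consecutive pairs of breakpoints until we find the enclosing segment
    for (x0, y0), (x1, y1) in zip(breakpoints, breakpoints[1:]):
        if year <= x1:
            return y0 + (y1 - y0) * (year - x0) / (x1 - x0)
    return breakpoints[-1][1]


# Hypothetical shape: risk climbs to a maximum of 0.002 per year by year 100, then tapers off
example_breakpoints = [(0, 0.0), (100, 0.002), (400, 0.0005)]
print(piecewise_linear_probability(50, example_breakpoints))
```

The trade-off is that the graph has kinks at each breakpoint, and the number of parameters grows with the number of segments.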

Perhaps more importantly, the full calculator currently is a Python script which users will need to run locally. In an ideal world it would have a browser-based UI - though the practicality of that might be limited by the runtime issues described below. If nothing else, I might be able to put a version of it on Google Colab that runs with minimal setup.

4) More detailed output

At the moment, the outputs of a simulation are just the probabilities of success from various states, and the derived values in the examples above. I think there’s some other interesting data we could pluck out, such as ‘expected number of civilisational reboots on a happy/unhappy path’, ‘number of years that implies between now and success/extinction’, and more. This would also allow some investigation on the question of how positive the future would be, if we imagine for example that we got lucky with modern moral sensibilities developing as they did, or just that more resource-constrained civilisations might have less - or more - incentive to cooperate. It would also make it easier to plug into a model like Arvo’s, in which we might assess the possible future value of humanity at various other milestones, and so need some way to estimate how long we had taken to reach them.

There are probably also some interesting further derived values - for example if the number of expected years on the typical happy path is high enough, we might want to take seriously the cost of expansion of the universe in the meantime or the probability of alien civilisations emerging first.
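For a sense of how such quantities could be extracted, the toy example below computes the expected number of visits to each non-terminal state of a small absorbing Markov chain, by solving the standard linear system for expected visits. The two states and their transition probabilities are invented for illustration and bear no relation to the calculator's actual state space or to my estimates.

```python
def solve_linear_system(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def expected_visits(q: list[list[float]], start: int) -> list[float]:
    """Expected number of visits to each transient state, starting from `start`.

    q[i][j] is the probability of moving from transient state i to transient state j;
    the probability left over in each row goes to the absorbing states
    (here, extinction or becoming interstellar).
    """
    n = len(q)
    # Row `start` of the fundamental matrix N = (I - Q)^-1 solves (I - Q)^T x = e_start
    a = [[(1.0 if i == j else 0.0) - q[j][i] for j in range(n)] for i in range(n)]
    b = [1.0 if i == start else 0.0 for i in range(n)]
    return solve_linear_system(a, b)


# Toy chain: state 0 = time of perils, state 1 = preindustrial (made-up probabilities)
toy_q = [
    [0.0, 0.3],  # a time of perils ends in a regression to preindustrial 30% of the time
    [0.8, 0.0],  # a preindustrial state recovers to a new time of perils 80% of the time
]
visits = expected_visits(toy_q, start=0)
print(f"expected times of perils: {visits[0]:.2f}, expected reboots: {visits[1]:.2f}")
```

This gives expectations over all paths together. Splitting them into happy and unhappy paths, or converting visits into years, would need further steps that this sketch doesn't attempt.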

5) Runtime

Because we have to model a potentially very large number of states, depending on how much precision we opt for, the current runtime of the calculator can be several minutes or longer on my 2019 laptop. This isn’t a huge problem for generating a few individual estimates, but ideally it would someday be able to run a Monte Carlo simulation or several successive sims for sensitivity analysis. With the current runtimes the former would be effectively impossible for most users and the latter very slow.

Most of this runtime comes from the implementation of the times of perils as having potentially thousands of progress years, each year a state to which you could theoretically transition from any other year in the same era. I think this improves fidelity enough to be valuable, but a future version of the calculator could allow an alternate and simpler version of the time of perils for simulation purposes, or implement various algorithmic improvements.
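To illustrate what a Monte Carlo sensitivity analysis could look like if runtimes allowed, here is a sketch over a deliberately tiny stand-in model rather than the calculator itself. The two-state model, its closed-form solution, the uniform parameter ranges and all names are assumptions made up for this example.

```python
import random
import statistics


def p_success(s: float, r: float, q: float) -> float:
    """Probability of eventual success in a toy two-state model.

    s: chance that a time of perils ends in success.
    r: chance that it ends in a regression (the remainder, 1 - s - r, is extinction).
    q: chance of recovering from a regression to a new time of perils.
    Solves P = s + r * q * P for P.
    """
    return s / (1.0 - r * q)


def monte_carlo(n_samples: int, seed: int = 0) -> list[float]:
    """Sample the toy parameters from hypothetical uniform ranges; return sorted outputs."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    results = []
    for _ in range(n_samples):
        s = rng.uniform(0.2, 0.6)
        r = rng.uniform(0.1, 0.4)   # with these ranges s + r never exceeds 1
        q = rng.uniform(0.5, 0.95)
        results.append(p_success(s, r, q))
    return sorted(results)


samples = monte_carlo(10_000)
print(
    f"median {statistics.median(samples):.2f}, "
    f"5th-95th percentile {samples[500]:.2f}-{samples[9500]:.2f}"
)
```

Each sample here is a single division, which is why ten thousand of them are cheap. The full calculator would need to be orders of magnitude faster than it is now for the same approach to be practical.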

6) Manual function selection

Whatever functions one picks for transitional probabilities will be oversimplifications, and ideally it would be simple to try alternatives with different emphases. The choice of functions straddles the boundary between ‘model’ and ‘parameter’ in a way that makes me wonder if there couldn’t be a way of giving alternatives, perhaps from a pre-determined list, as input in the parameters file (there’s already one option to do so as described above, for intra-perils regressions) - but without careful implementation this could get quite confusing, since different functions would require different parameters.
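One way this could work is a registry that maps names in the parameters file to functions and checks that the supplied parameters match what the chosen function expects. The sketch below is hypothetical throughout: the registry, the two example functions and the config layout are invented for illustration and are not how the calculator currently reads its parameters.

```python
import inspect
import math


def exponential_decay(x: float, rate: float) -> float:
    """Example shape: starts at 1 and decays exponentially."""
    return math.exp(-rate * x)


def linear_decline(x: float, slope: float, floor: float) -> float:
    """Example shape: starts at 1 and declines linearly to a floor."""
    return max(floor, 1.0 - slope * x)


# Hypothetical pre-determined list of selectable functions
FUNCTION_REGISTRY = {
    "exponential_decay": exponential_decay,
    "linear_decline": linear_decline,
}


def build_transition_function(config: dict):
    """Look up the named function and bind its parameters, failing loudly on a mismatch."""
    func = FUNCTION_REGISTRY[config["function"]]
    expected = set(inspect.signature(func).parameters) - {"x"}
    supplied = set(config["params"])
    if expected != supplied:
        raise ValueError(
            f"{config['function']} expects {sorted(expected)}, got {sorted(supplied)}"
        )
    return lambda x: func(x, **config["params"])


# Hypothetical entry, as it might look after loading a parameters file
transition = build_transition_function(
    {"function": "linear_decline", "params": {"slope": 0.1, "floor": 0.2}}
)
print(transition(3))
```

Checking parameter names against the function signature is one way of reducing the confusion mentioned above, since a mismatch between function and parameters fails immediately rather than silently producing odd results.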

7) Better options to explore present counterfactuality

At the moment the calculator has partial functionality for testing various alternative parameters and comparing them to some base scenario. Nonetheless, I think there’s a lot of scope for making this kind of exploration more flexible, e.g. by changing the trajectory of a graph from some particular year (at the moment you can only change the whole graph for the current time of perils), or adjusting the probability of some transition within a particular time range, and so on.

8) Minimal automated testing 😔

This was just due to time restrictions - I would love to set up more tests to make the code easier to modify.

Contribute/submit feature requests

If you have any feature requests for these calculators, whether or not they were described above, please let me know. I won’t have a lot of time to work on them in the near future, but I do hope to gradually improve them if they see much use.

Relatedly, if you like the project and have enough Python knowledge to contribute, please ping me - I’d love to get critique on the existing code, or support in implementing any of the above ideas, or other feature requests.

Share your results!

The upcoming and final post in this series will detail some interesting results from my own use of the full calculator - but I’m not a researcher in the field. I’d love to incorporate some wisdom of the crowd - or wisdom of specialist researchers - from anyone who wants to try their hand at using either the simple or full calculators. At the risk of repeating myself, please consider

- linking to your estimates in the comments or in the open document if you use the simple calculator.

- opening a PR on Github with your row added to the autogenerated results.csv file, or just pasting your row onto this worksheet (though note that changing the parameter structure will mess up the columns for your entry). Please ping me if you want some guidance through the process - I’m happy to help you navigate the rough edges, and it would be very useful to me to see people’s actual usage and UI pain points.

- posting your own parameters here or DMing them to me if you want me to run the calculator on your behalf (feel free to just post filled-in Desmos graphs if that’s easier, though be careful to paste the correct URL, from the ‘Share Graph’ button in the top right, once you’re done adjusting them).

In the next and final post, I’ll do some digging for surprising implications of my own estimates and of anyone who’s submitted their own.

Happy calculating!

Acknowledgements

I owe thanks to Siao Si Looi, Derek Shiller, Nuño Sempere, Rudolf Ordoyne, Nia, Arvo Muñoz Morán, Justis Mills, David Manheim, Christopher Lankhof, Mohammad Ismam Huda, Ulrik Horn, John Halstead, Charlie Guthmann, Vasco Grilo, Michael Dickens, David Denkenberger and Agustín Covarrubias for invaluable comments and discussion on this post, the code and/or the project as a whole. Any remaining mistakes, whether existentially terminal or merely catastrophic, are all mine.

- ^

While I don’t want to sidetrack the main discussion, it might be worth a tangent into the two distinct moral reasons for pursuing such an intergalactic future. If you already lean towards this view, you can skip this extended footnote.

The first is that if we assume a totalising population axiology (the normal basis for longtermism), for whatever it is we value, more is more. That is, no matter how good we could make things on Earth - even if we could eliminate suffering and almost perfectly convert resources into whatever form we consider most valuable - we can presumably make them comparably good for life elsewhere. Then we can get vastly more of that goodness by expanding into space (back of the envelope: access to ~ times more rocky matter over the course of ~years rather than ~ years would give us ~ times more of whatever we value; optimistically, we might reach numbers that would dwarf even that.).

The second reason, which doesn’t require a totalising axiology, is existential security. I think a naive but reasonable calculation is to treat the destruction of life in each settlement as at least somewhat independent, more so the further apart they are. That would make extinction risk in such a state some kind of exponential decay function of number of self-sustaining settlements, such that the probability of extinction might be , where a is some constant or function representing the risk of a single-planet civilisation going extinct, b is some decay rate such that (where 1/2 implies the probabilities of each settlement going extinct are completely independent) and p is the number of self-sustaining settlements in your multiplanetary civilisation.

Three counterpoints to the latter argument are

* Aligned AGI might fix all our problems and make us existentially secure without needing the security of an interstellar state

* An unaligned AGI would always be able to kill an arbitrarily large civilisation

* Some other universe-destroying event would always be possible to trigger

If you think the first of these is the only way to existential security, then in models that follow you can assign 0 probability of reaching a ‘multiplanetary’ state, and suppose that we will either transition directly from a time of perils to an existentially secure state or we won’t become existentially secure.

If you think either the second or third counterpoint is true, then this project and longtermism are both irrelevant - eventually the threat in question will kill everyone, and we should perhaps focus on the short term.

But if AGI doesn’t perennially remain an existential threat and no universe-destroying events are possible (see e.g. soft/no take-off scenarios linked in this thread), the value of this risk function would quickly approach 0.

For more on the existential security argument, see Christopher Lankhof’s Security Among the Stars.

This all presumes the future isn’t net negative in expectation. If you believe that it is, then this project is probably not relevant to you, unless the different pathways of how we might get there seem useful to explore. For example if you think our values might get worse (or better) following civilisational collapse, you might be able to plug this in to some model of that process.

- ^

Strictly speaking the ‘probabilities’ discussed in this post are more like extrapolated credences, but since - in common with typical longtermist methodology - I apply these credences to probabilistic models, I refer to them as probabilities when it seems more intuitive to do so.

- ^

The closest thing I know to such an attempt is Luisa Rodriguez’s post What is the likelihood that civilizational collapse would cause technological stagnation? (outdated research), in which she gives some specific probabilities of the chance of a preagricultural civilisation recovering industry based on a grid of extinction rates and scenarios which, after researching the subject, she found reasonably plausible. But this relates only to a single instance of trying to do this (on my reading, specifically the first time, since she imagines the North Antelope Rochelle Coal Mine still having reserves), and only progresses us approximately as far as early 19th century England. Also, per the title’s addendum, she now considers the conclusion too optimistic, but doesn’t feel comfortable giving a quantified update.

[Edit: David Denkenberger also published some relevant risk estimates of the probability of collapse and recovery in Should we be spending no less on alternate foods than AI now?]

- ^

My inclination is to consider ‘interstellar’ to be close enough to ‘existentially secure’ as to be functionally equivalent; and since ‘interstellar’ is more specific it’s the term I’ve used in the code and elsewhere. But if you think existential security could be reached without becoming interstellar you can mentally replace ‘interstellar’ with ‘existential security’ throughout and set your parameters accordingly.

If you’re concerned that we might not be existentially secure even after we become interstellar, the calculators don’t explicitly address that concern - you could represent it through either

* A high MAX_PLANETS constant in the code along with low probability of becoming ‘interstellar’ from relatively small numbers of planets; or

* Simply plugging in the output of the calculator to some further estimate of p(existential security | humanity becoming interstellar)

- ^

This isn’t because I think welfare questions are unimportant; they’re just outside the current scope of this project (though a future version could incorporate such questions - see the limitations/development roadmap section, lower down).

- ^

While I disagree with this view, I mean ‘fanaticism’ in the descriptive sense as used in a different context here, rather than as a pejorative. In this sense it means something like 'tendency to favour risk-neutral maximisation of some function': in this case the function being (1 - <probability of near-term extinction>).

- ^

In practice longtermist grantmakers often split their grants across extinction-related and smaller catastrophes - e.g. OP and Founders Pledge both have ‘global catastrophic risk’ buckets to cover both. But it’s unclear to what extent they do this on longtermist grounds, and to what extent they justify it by putting ‘smaller global catastrophes’ in a different bucket, and do in fact only prioritise in terms of extinction risk.

If mainly the latter (different bucket), then the grantmaker is still effectively expressing Parfitian fanaticism. If mainly the former (giving to smaller catastrophes on longtermist grounds), then the grantmaker is tacitly expressing the sort of credences which these calculators explicitly deal with - and therefore hopefully make more accurate.

- ^

On it oversimplifying number of future people: strictly speaking, as Bostrom observes, the expansion of the universe means we lose a huge amount of value for any substantive delay to our spreading our cosmological wings, but that huge loss looks negligible even over millions of years, compared to even relatively minor changes in the probability of eventually achieving V. This disparity is why longtermists generally focus on safety rather than speed.

On it oversimplifying average value per person: it seems to me that, unlike with technological progress, there are no predictable patterns that let us imagine how values would evolve across multiple civilisations. This might simplify things in practice: you could imagine we have some level of moral development , and the average for other civilisations is some other level . Then you could convert ‘moral development’ into some per-person coefficient. Finally, we can let be our probability of achieving V without any regressions, and be our probability of achieving V after at least one regression. This would allow you to compare

to

- ^

I somehow only discovered Arvo Morán’s How bad would human extinction be? while writing this post, and it relates closely to the question of how much V would change over time. I’m still digesting the overlap between our work, but I think that a future version of the full calculator could incorporate something like the branching process he describes in this section if treating V as a constant seems to be a simplification too far.

- ^

To emphasise, this is assuming an abstract longtermist view. In practice we might lean towards averting whichever event caused the most expected short-term suffering, for many other reasons. This runs contrary to the ‘holy shit, x-risk’ philosophy of emphasising 0.1% probability outcomes in which literally everyone dies over outcomes in which (say) merely 50% of people die, which might be much more likely.

- ^

I dropped the ‘survival’ state that I originally described two posts ago because a) Luisa’s estimates suggested it had a very low risk of extinction, and b) my sense was that an event that killed >99.99% and <100% of the population was an extremely narrow target, and therefore c) its overall effect on the outcome seemed tiny. I do wonder whether I should reinstate it as a ‘hunter gatherer’ state distinct from agricultural, as a couple of people have suggested.

- ^

If you don't use the civilisation count for top level transitions, then the top level will be functionally more or less equivalent to the simple calculator (except for having a finite number of possible civilisations).

- ^

The code is somewhat modular, though less so than I’d like. Let me know if you want some help with inserting your own functions. Or, if you’re interested in helping make the process easier, see the Contribute/submit feature requests section below.

- ^

This magnification can be different in different civilisational states - for example, you might think the increased resource scarcity would be a minor impediment in advancing from a preindustrial state through to a time of perils, a major impediment in a time of perils, and no impediment at all in a multiplanetary state.

One could also fairly easily put conditionals in the code to give special treatment to one or two reboots: for example, to express the view that the first time around we’d still have enough coal reserves to make a substantial difference, and that the second reboot would be much harder, but in reboots after that no other resource would deplete enough to make nearly as large a difference if it did.

In theory, these magnifications could either increase or decrease our prospects after a catastrophe. In my own simulations, though, I assume that the natural economics of each civilisation using up the most valuable resources available at the time will lead to prospects inevitably declining over most reboots.

Even so, in some cases, our prospects seem to improve slightly if we reboot to a second or third time of perils (imagine e.g. a scenario where an economy powered by renewables is comparably as easy to build as a fossil fuel economy - at least in early reboots before we deplete key minerals - and the detritus of the previous civilisations make things even easier by serving as blueprints for many key technological advancements, perhaps more so for benign than destructive technologies).

But to reach such a scenario we might have to get through some post-catastrophe state from which our prospects would be substantially worse - so one would have to be cautious advocating for apocalypse, even under such assumptions.

- ^

This leads to the awkwardly titled notion of a ‘linear regression algorithm’ in this programme, which has nothing to do with the statistical model of the same name.

- ^

Given our lack of historical context for this, one could instead use GDP of individual nations to inform this view if you thought they would give a more nuanced picture.

- ^

An arguably simpler way to represent ‘small but not minuscule chance of regressing further through a time of perils’ might have been a Zipf distribution - essentially a discrete-valued Pareto distribution. I will probably add this as an option at some point, but it turns out to produce similar enough values to an equivalently parametered exponential algorithm, as evidenced on this graph (blue is Zipf, green is exponential), that I think it would have very little effect on the calculator’s output vs the exponential algorithm. And for my taste, we know so little about how far relatively minor shocks might cause us to unravel that the somewhat more pessimistic mean algorithm captures my intuition better.

- ^

If you think a multiplanetary state is irrelevant (e.g. you think AI will lock us in one way or the other), you can set the maximum transition probability to that state as 0 and raise the probability of transitioning directly from a time of perils to an existential security/interstellar state above its default 0 value.

- ^

Rocky mass in the form of planetoids isn’t strictly a hard limit. A very advanced civilisation could theoretically construct something like O’Neill cylinders - but by the time even those were self-sustaining, it seems likely that we would both have started colonising other solar systems and be about as existentially secure as we would be likely to get.

- ^

Strictly speaking there are three further parameters in ./calculators/full_cache/runtime_constants.py, but these determine the level of approximation of the potentially infinite Markov chains, and you can ignore them unless you want to adjust the trade-off between precision and run-time.

- ^

Using the terminology I suggested here.

- ^

The Wikipedia page describes fossil fuels as having an EROI of ~30, nuclear energy around 75-80, and most renewables below 20 (with photovoltaics between 4-7). This seems to be a highly contentious topic, with at least one paper claiming that EROI is actually higher for renewables. This question is outside the scope of this work, but seems urgent for longtermists to answer if they believe in either a relatively low risk of direct extinction or a relatively high risk of smaller technological regression since it will heavily influence both the number of times we’d be able to re-reach a time-of-perils-level technology and the length of time we’d have to spend in the time of perils before reaching safer states if we did.

Corentin Biteau’s Great Energy Descent series imagines an extreme version of the pessimistic view, in which the decline in EROI is inexorable and irreversible. I assert no insight here, except that a very much weaker version of this claim could still suggest a fragile world, or a world which will be fragile unless/until certain precautions are taken.

- ^

There’s also the possibility that AGI replaces us with some entity (such as itself) that has consciousness, or some other trait that the user might consider to have moral value. It’s up to you when you choose the parameters to decide whether to account for this possibility in parameters that increase the probability of ‘extinction’, of ‘existential security’, or (perhaps less plausibly) of ‘business-as-usual’.

I think this is a very valuable project.

I also have not seen analyses of multiple reboots. But in terms of recovery from one loss of civilization, What We Owe the Future touches on it some. Also, my original cost-effectiveness analysis for the long-term future for nuclear war explicitly modeled recovery from collapse. However, then I realized that there were other mechanisms to long-term future impact, such as making global totalitarianism more likely or resulting in worse values in AGI, so I moved to reduction in long-term future value associated with nuclear war or other catastrophes. I like that you are breaking this up into more terms and more reboots, because I think that will result in more accurate modeling.

Thanks for the kind words, David. And apologies - I'd forgotten you'd published those explicit estimates. I'll edit them in to the OP.

My memory of WWOtF is that Will talks about the process, but other than giving a quick estimate of '90% chance we recover without coal, 95% chance with' he doesn't do as much quantifying as you and Luisa.

Also Lewis Dartnell talked about the process extensively in The Knowledge, but I don't think he gives any estimate at all about probabilities (the closest I could find was in an essay for Aeon where he opined that 'an industrial revolution without coal would be, at a minimum, very difficult').

Thanks for the calculator.

I was wondering about the welfare part of the equation, and it's not obvious how people get their welfare estimates in the calculator, from what I see in the post.

Are we talking about the welfare of just humans? Animals (farmed or wild)? Artificial sentience? How do we reconcile all of these when we're not sure today whether global welfare is net positive?

Of course, this depends on very important questions that are hard to assess. What are the consequences of bringing wild animal suffering to our planet? Is factory farming going to continue for a long time, especially given that longtermists are very optimistic about technology replacing all forms of animal farming, when that's not so obvious? Are artificial sentiences going to have lives worth living? How are we going to impact animals on other planets?

So overall, what should we include in the 'welfare' part of the calculator?

Hey Corentin,

The calculators are intentionally silent on the welfare side, on the thought that in practice it's much easier to treat as a mostly independent question. That's not to say it actually is independent, and ideally I would like the output to include more information about the pathways to either extinction or an interstellar state, so that people can apply some further function to the output. I do think it's reasonable, even on a totalising view, to prioritise improving future welfare conditional on it existing and largely ignore the question of whether it will - but that's not a question the calculators can help with except inasmuch as you condition on the pathway.

Even if they gave pathways, they would be agnostic on whose welfare qualified. Personally I'm interested in maximising total valence (I have an old essay still waiting for its conclusion on the subject), so every sentient being's mental state 'counts', but you could use these with a different perspective in mind. Primarily empirical questions about e.g. the duration of factory farming, and animal suffering in terraformed systems seem like they'd need their own research projects.

Thanks for all your work on this series, and sharing a draft of the post in advance! I commented around 1.5 years ago that I thought it was a pretty valuable series, but I am now much less optimistic:

You assume the value of the future given extinction is negligible. In order for this to make sense to me, I have to interpret the extinction as not involving TAI, and involving not only the loss of all humans, but also a significant part of our past evolutionary path (to ensure a low chance of full recovery). I suppose the annual probability of such extinction is lower than 10^-8, and therefore do not see tail risk mitigation as an especially promising path to avoid it. I guess indirect ways of decreasing extinction risk like boosting economic growth (relatedly) become more attractive for lower levels of risk.

Hey Vasco, thanks for the in-depth reply, and thanks again for trawling over this behemoth :)

Let me take these points in order:

I'm highly sceptical of point probability estimates for events for which we have virtually no information - that's exactly why I made these tools. Per Dan Schwarz's recent post, it seems much more important to me to give an interactive model into which people can put their own credences, so that we can then debate the input rather than the output.

I'm now reading through your nuclear war article, and have some pushback, but I don't want to get sidetracked into it here (I'll try and post it as a comment there, which is probably more helpful anyway), and I don't think it would increase my credence enough to materially affect your point.

More importantly, much of the point of the calculators is that one can still have very low credence of direct extinction from any of the sources you mentioned and still believe that such events substantially reduce the chance of us becoming interstellar by two basic mechanisms:

If by 'recovery' you mean 'reaching modern technology' this is compatible with what I've just written above - it might turn out to be relatively trivial to rereach modern technology, but increasingly implausible that we can ever progress beyond it.

If I understand you right, you're getting most of your confidence in recovery from situations where other intelligent species evolve? If so then this scenario seems like something longtermists shouldn't view too positively, though we could still use these calculators plus some other tools to model it:

Based on the dramatic changes to the climate that precede the oceans evaporating, I suspect Earth will become uninhabitable to intelligent life in more like 100 million to 500 million years. If we're relying on reevolution of intelligent life, that might meaningfully cut down the number of chances we get.

I explicitly aimed to capture this concern in the OP description 'human descendants (or whatever class of life we think has value)'. If you think TAI replacing humans would be as good or better, you can treat scenarios where it does so as transitioning directly from whatever state we'd be in at the time to an interstellar/existentially secure state.

Fwiw in the Matthew Barnett post you linked to, I replied that I strongly support that position philosophically - I think my take was even more pro-conscious-AI than his.

Thanks for the follow up! I strongly upvoted it.

I mentioned point/mean probability estimates, but my upper bounds (e.g. 90th percentile) are quite close, as they are strongly limited by the means. For example, if one's mean probability is 10^-10, the 90th percentile probability cannot be higher than 10^-9: otherwise the top 10 % of the distribution alone would contribute more than 10^-10 (= (1 - 0.90)*10^-9) to the mean, pushing it above 10^-10. So my point remains as long as you think my point/mean estimates are reasonable.
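The bound in this comment is an instance of Markov's inequality for a non-negative quantity. A minimal sketch of the arithmetic follows; the function name `percentile_upper_bound` and the `cap` parameter are illustrative assumptions, not anything taken from the calculators.

```python
import math


def percentile_upper_bound(mean: float, q: float, cap: float = math.inf) -> float:
    """Largest value the q-quantile of a non-negative quantity can take,
    given only its arithmetic mean (Markov's inequality).

    If the q-quantile is x, at least a fraction (1 - q) of the mass sits at
    or above x, so mean >= (1 - q) * x, i.e. x <= mean / (1 - q).
    `cap` is a hypothetical extra ceiling, e.g. 1.0 when the quantity is a
    probability.
    """
    if not 0.0 <= q < 1.0:
        raise ValueError("q must be in [0, 1)")
    if mean < 0.0:
        raise ValueError("mean must be non-negative")
    return min(cap, mean / (1.0 - q))


# Mean probability of 10^-10: the 90th percentile is at most about 10^-9.
p90_bound = percentile_upper_bound(1e-10, 0.90, cap=1.0)
# The same mean allows a 99th percentile of at most about 10^-8.
p99_bound = percentile_upper_bound(1e-10, 0.99, cap=1.0)
print(p90_bound, p99_bound)
```

The bound only constrains the arithmetic mean of the distribution; it says nothing about which summary statistic one should use as a best guess.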

Makes sense. I liked that post. I think my comment was probably overly critical, and not related specifically to your series. I was not clear, but I meant to point to the greater value of using standard cost-effectiveness analyses (relative to using a model like yours) given my current empirical beliefs (astronomically low non-TAI extinction risk).

Fair! I suspect the number of lives saved, maybe weighted by the reciprocal of the population size, would still be a good proxy for the benefits of interventions affecting civilisational collapse. When I tried to look into this quantitatively in the context of nuclear war, improving worst case outcomes did not appear to be the driver of the overall expected value. So I am guessing using standard cost-effectiveness analyses, based on a metric like lives saved per dollar, would continue to be a fair way of assessing interventions.

In any case, I assume your model would still be useful for people with different views!

I meant full recovery in the sense of reaching the same state we are in now, with roughly the same chances of becoming a benevolent interstellar civilisation going forward.

For my estimate of a probability of 0.0513 % of not fully recovering, yes, because I was assuming human extinction in my calculation. If the disaster is less severe, my probability of not fully recovering would be even lower.

If one thinks the probability of extinction or permanent collapse without TAI is astronomically low (as I do), the probability of a double catastrophe is astronomically low to the power of 2, i.e. it presents negligible risk. So I believe a single catastrophe has to be somewhat plausible for the possibility of further catastrophes to matter.
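As a rough illustration of the "to the power of 2" reasoning, the sketch below multiplies per-catastrophe probabilities. It assumes the catastrophes are independent and equally likely, which is exactly the assumption under dispute in this thread, and the input value is a placeholder rather than an estimate from either commenter's model.

```python
import math


def chained_probability(step_probabilities):
    """Probability that every step in a sequence occurs,
    assuming the steps are independent of one another."""
    return math.prod(step_probabilities)


# Placeholder per-catastrophe probability (illustrative only).
single = 1e-8
double = chained_probability([single, single])
print(double)
```

If an earlier catastrophe raises the chance of a later one, the second factor would be larger than the first and the product would no longer be a simple square.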

I think considerations related to the astronomical waste argument are applicable not only to saving lives in catastrophes, but also in normal times. To bring everything into the same framework in a simple way, I would run a standard cost-effectiveness analysis, but weighting saving lives by a function of the population size (e.g. 1/"population size" as I suggested above).

On priors, I would say one should expect the values of a new similarly capable species to be as good as those of humans.

I imagine different theories make significantly different predictions about the difficulty of going from e.g. monkeys to humans, but I have the impression there is often little data to validate them, and therefore think significant weight should be given to a prior simply informed by how long a given transition took. This is why I got my estimate for the probability of not fully recovering relying on the time since the last mass extinction.

Even assuming we could only recover with pretty high likelihood once, we would need 2 events with astronomically low chances to go from where we are to extinction or permanent collapse. So the overall risk of these would be negligible if one agrees with my estimates. At the same time, I think you are raising great points. I do not think they have much strength, but this is just because of my view that catastrophes plausibly leading to extinction or global collapse are very unlikely.

Makes sense. I think human extinction caused by TAI would be bad if it happened in the next few years, as I suppose there would not be enough time to plan a good transition in this case. Nevertheless, in a future dominated by sentient benevolent advanced AIs, human extinction would not be obviously bad.

Yeah, it sounds like this might not be appropriate for someone with your credences, though I'm confused by what you say here:

I'm not sure what you mean by this. What are you taking the mean of, and which type of mean, and why? It sounds like maybe you're talking about the arithmetic mean? If so that isn't how I would think about unknown probabilities fwiw. IMO it seems more appropriate to use a geometric mean to express this kind of uncertainty, or explicitly model the distribution of possible probabilities. I don't think either approach should limit your high-end credences.

Yeah, fair enough :)

Have you written somewhere about why you think permanent collapse is so unlikely? The more I think about it, the higher my credence seems to get :\

I'm not saying the sexual selection theory is strongly likely to be correct. But it seems to be taken seriously by evolutionary psychologists, and if you're finding that other theories of human intelligence give ultra-high credence of a new species evolving, it seems like that credence should be substantially lowered by even a modest belief in the plausibility of such theories.

Yes, I was referring to the arithmetic mean of a probability distribution. To illustrate, if I thought the probability of a given event was uniformly distributed between 0 and 1, the mean (best guess) probability would be 50 % (= (0 + 1)/2).

I agree the median, geometric mean, or geometric mean of odds are usually better than the mean to aggregate forecasts[1]. However, if we aggregated multiple probability distributions from various models/forecasters, we would end up with a final probability distribution, and I am saying our final point estimate corresponds to the mean of this distribution. Jaime Sevilla illustrated this here.
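To make the difference between these aggregation methods concrete, here is a minimal sketch comparing them on three forecasts. The forecast values are hypothetical and chosen only to span several orders of magnitude; `geometric_mean_of_odds` is an illustrative helper, not a function from any forecasting library.

```python
import math
import statistics


def geometric_mean_of_odds(probabilities):
    """Pool probabilities by averaging their log-odds, then convert back."""
    log_odds = [math.log(p / (1.0 - p)) for p in probabilities]
    pooled_odds = math.exp(sum(log_odds) / len(log_odds))
    return pooled_odds / (1.0 + pooled_odds)


# Hypothetical forecasts of the same event from three sources.
forecasts = [1e-6, 1e-4, 1e-2]

arithmetic = statistics.fmean(forecasts)          # dominated by the largest forecast
geometric = statistics.geometric_mean(forecasts)  # averages orders of magnitude
median = statistics.median(forecasts)
pooled = geometric_mean_of_odds(forecasts)

print(arithmetic, geometric, median, pooled)
```

With inputs this spread out, the arithmetic mean lands within a factor of three of the highest forecast, while the geometric mean and the geometric mean of odds both stay near the middle one, which is why the choice of aggregation matters so much for tail-risk estimates.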

Maybe it helps to think about this in the context of a distribution which is not over a probability. If we have a distribution over possible profits, and our expected profit is 100 $, it cannot be the case that the 90th percentile profit is 1 M$, because in this case the expected profit would be at least 100 k$ (= (1 - 0.90)*1*10^6), which is much larger than 100 $.

You may want to check Joe Carlsmith's thoughts on this topic in the context of AI risk.

No, at least not in any depth. I think permanent collapse would require very large population and infrastructure losses, but I see these as very unlikely, at least in the absence of TAI. I estimated a probability of 3.29*10^-6 of the climatic effects of nuclear war before 2050 killing 50 % of the global population (based on the distribution I defined here for the famine death rate). Pandemics would not directly cause infrastructure loss. Indirectly, there could be infrastructure loss due to people stopping maintenance activities out of fear of being infected, but I guess this requires a level of lethality which makes the pandemic very unlikely.

Besides more specific considerations like the above, I have consistently ended up arriving at tail risk estimates much lower than canonical ones from the effective altruism community. So, instead of regarding these as a prior as I used to do, now I immediately start from a lower prior, as I should not expect my risk estimates to go down/up[2]. For context on me arriving at lower tail risk estimates, you can check the posts I linked at the start of my 1st comment. Here are 2 concrete examples I discussed elsewhere:

Relatedly, my extinction risk estimates are much lower than Toby Ord's existential risk estimates given in The Precipice.

I aggregated probabilities using the median to estimate my prior extinction risk for wars and terrorist attacks, and using the geometric mean to obtain my nuclear war extinction risk.

If I expected my best guess to go up/down, I should just update my best guess now to the value I expect it will converge to.

Xia 2022 predicts a shortfall of 7.0 % for 5 Tg without adaptation (see last row of Table S2).

Woah, this goes way over my head, so I'm gonna keep on re-reading it until I understand it (hopefully). Thanks for this post!

I'm happy to talk you through using it if you're finding it confusing.

If you (or anyone else) reading this wants to catch me for some support, I'm on the EA Gather Town as much as possible (albeit currently in New Zealand time), so you can log in there and ping me :)

Can't find the EA Gather Town via this link or on the Gather app. Can you give its exact handle/label? Thanks.

Hm, the link works ok for me. What happens when you open it? It can be a bit shonky on mobile phones - maybe try using it on a laptop/desktop if you haven't.

It's called 'EA coworking and lounge', if that helps.

Executive summary: Two new calculators allow longtermists to explicitly model and compare the value of reducing existential risk versus the value of mitigating lesser catastrophes, based on the user's assumptions about civilizational trajectories and prospects for recovery.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.