Overview

I have previously written about the importance of making it as easy as possible for EAs to make a fully-informed decision on preferred cause area, given the potentially astronomical differences in value between cause areas. Whilst one piece of feedback was sceptical about the claim that these vast expected value differences exist, generally feedback agreed that the idea of making cause prioritisation easier, for example by highlighting key considerations that have the biggest effect on choice of preferred cause area, could be high impact.

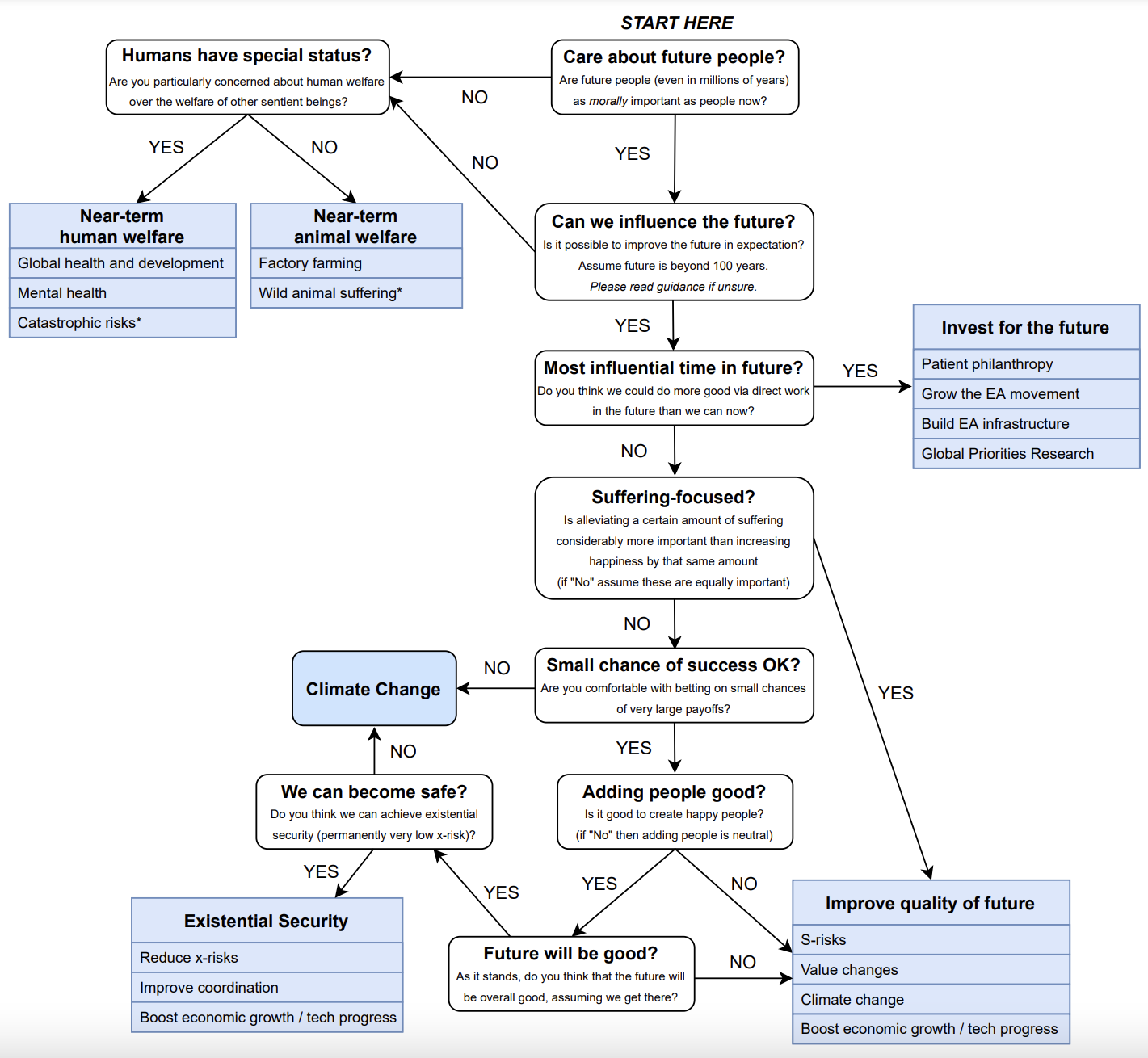

In light of this I have decided to progress this idea by putting together a first draft cause prioritisation flowchart designed to guide people through the process of cause prioritisation. The flowchart would ideally be accompanied by guidance that helps people make informed decisions at each step. I haven’t finalised this guidance, although I present a sample for one particular decision in the flowchart. At this point I am attempting a proof of concept rather than delivering a final product, and so would welcome feedback on both the idea and the preliminary attempt.

Introducing the flowchart

In the following section you can see my draft flowchart. The flowchart asks individuals ethical and empirical questions that I view as most important in determining which cause area they should focus on. Only cause areas that are accepted as important by a non-negligible proportion of the EA community are included in the flowchart. In addition, some foundational assumptions common to EA are made, including a consequentialist view of ethics in which wellbeing is what has intrinsic value.

A key component of a final flowchart would be accompanying guidance to help individuals make informed decisions as they progress through the flowchart. I have not put together all of this guidance at this stage. However, as part of the proof of concept I have attempted to illustrate what this might look like for the “Can we influence the future?” question. My vision for a final flowchart is that one could click on each box and be guided to easily-digestible reading enabling an informed choice on how to proceed.
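To make the "click on a box, see the guidance" idea concrete, here is a minimal sketch of how such a flowchart could be represented as a small decision tree. The class names, the `walk` helper, and the particular wiring of questions to outcomes are all hypothetical and purely illustrative; they are not the actual structure of the draft flowchart.

```python
from dataclasses import dataclass, field
from typing import Dict, Union


@dataclass
class Outcome:
    """A leaf of the flowchart: a suggested cause area."""
    cause_area: str


@dataclass
class Question:
    """A box in the flowchart: a question, its guidance, and where each answer leads."""
    text: str
    guidance: str  # what clicking on the box would display
    branches: Dict[str, Union["Question", Outcome]] = field(default_factory=dict)


def walk(node, answers):
    """Follow the given answers from `node`; return the outcome and the guidance shown."""
    answers = iter(answers)
    shown = []
    while isinstance(node, Question):
        shown.append(node.guidance)
        node = node.branches[next(answers)]
    return node, shown


# Illustrative wiring only (an assumption, not the real flowchart).
future_good = Question(
    text="Is the future likely to be good?",
    guidance="Placeholder guidance text.",
    branches={
        "yes": Outcome("Placeholder: a longtermist cause area"),
        "no": Outcome("Improving quality of the future"),
    },
)
root = Question(
    text="Can we influence the future?",
    guidance="Key reading: The case for strong longtermism (Section 4)",
    branches={
        "yes": future_good,
        "no": Outcome("Placeholder: a near-term cause area"),
    },
)

outcome, shown = walk(root, ["yes", "no"])
print(outcome.cause_area)
```

Keeping the guidance on the same node as the question means a reader never has to leave the flowchart to find the relevant reading.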

In my view, some strengths of this flowchart compared to the main previous attempt include:

- It includes an up-to-date set of cause areas: the previous attempt that I have come across is, in my opinion, slightly out of date in that it excludes options that have fairly recently entered the mainstream of EA, such as investing for the future. The previous attempt also includes some cause areas that aren’t typically considered high impact by EAs today, such as education or human rights.

- It includes more nuances relevant to longtermist cause areas: my flowchart considers nuances such as the consideration of whether or not one thinks that the future is likely to be good. It has been suggested/hinted that not believing that the future is likely to be good may lead one away from longtermism as one would not want to prolong a bad future. However, there is a class of cause areas that could be said to broadly sit under “Improving quality of the future” that would remain robust to this view, and indeed other views that some people think invalidate longtermism such as a person-affecting view of population ethics.

- It includes (very incomplete) guidance that assists with making decisions along the flowchart: my vision for a final flowchart is one that is accompanied by guidance helping one make an informed decision throughout the flowchart. This feels like an essential part of any flowchart - without it one is just making gut judgements on very complex questions for which much discussion has already taken place. This is my main bone to pick with the previous attempt.

I would like to note that I don’t see my flowchart as clearly better than the previous attempt and I certainly don’t see it as final or even close to final. I think it is likely that substantial improvements can be made on my attempt.

The (draft) flowchart

Sample Guidance

Can we influence the future?

Is it possible to improve the future (beyond 100 years) in expectation?

Key reading:

- The case for strong longtermism (June 2021) by MacAskill and Greaves (Section 4)

Whilst it might at first seem unrealistic that we can positively influence the far future (say more than 100 years from now) in expectation, many EAs believe that there are a variety of ways in which we can do so.

One class of interventions that aim to influence the far future in a positive way consists of those that involve trying to ensure we stay or end up in “persistent states” of the world that are better than others. A persistent state is a state of the world which is likely to last for a very long time (even millions of years) if entered. If we can do things to increase the probability that we end up in a better persistent state rather than a worse one, we will then have influenced the far future for the better in expectation on account of how long the world is likely to stay in that better state.
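As a rough illustration of this reasoning (the symbols and simplifications here are my own assumptions, not taken from the key reading): suppose an intervention raises the probability of ending up in the better persistent state by $\Delta p$, the better and worse states deliver average value $v_G$ and $v_B$ per year, and whichever state is entered lasts for $T$ years. Then the expected gain from the intervention is approximately

$$\Delta \mathrm{EV} \approx \Delta p \,(v_G - v_B)\, T.$$

Because $T$ may be extremely large, even a very small $\Delta p$ can correspond to a large gain in expectation.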

There are a number of real world examples of attempting to steer into better rather than worse persistent states:

- Mitigating risks of premature human extinction: Human extinction is a persistent state as it would be very unlikely for humanity to re-evolve once extinct. The existence of humanity is also a persistent state: while we face risks of premature extinction, humanity’s expected persistence is vast. If we expect future lives to be net positive and adopt a total utilitarian view of population ethics in which the goodness of a state of the world depends on the total sum of welfare, then extinction would be a vastly worse persistent state. In this case, doing things to reduce the chance of premature human extinction would have high expected value. This could include the detection and potential deflection of asteroids, or reducing the risks of extinction-level pandemics.

- Influencing the choice among non-extinction persistent states: There are also potential persistent states of the world that do not rely on extinction. Many EAs are concerned about risks of artificial superintelligence (ASI). ASI could be developed once we have built human-level AI which could then recursively self-improve, designing ever-better versions of itself, quickly becoming superintelligent. From there, in order to better achieve its aims, it could try to gain resources, and try to prevent threats to its survival. It could therefore be incentivised to take over the world and eliminate or permanently suppress human beings. Alternatively, if an authoritarian country were the first to develop ASI, with a sufficient lead, they could use this technological advantage to achieve world domination and quash any competition. In either of these scenarios, once power over civilisation is in the hands of an ASI, this could persist as long as civilisation does. Different versions of the ASI-controlled futures are therefore persistent states with significantly differing expected value, and working to ensure ASI is aligned to our interests could therefore improve the far future in expectation.

Outside of the class of interventions that involve steering between persistent states, one can look to speed up progress to improve every time period in the future:

- Speeding up sustainable progress: Provided value per unit time doesn’t plateau at a modest level, bringing forward the march of progress could have long-lasting beneficial effects compared to the status quo, as each time period would be better off than it would have been otherwise. Therefore boosting economic growth or speeding up technological progress could have very large positive effects. Under this scenario, one would want to ensure that such progress is sustainable, i.e. that it can reliably continue for a long time period. This can be achieved by tackling climate change and ensuring that we can progress in a way that doesn’t negatively impact the environment or biodiversity on which our progress relies.

There are also a number of “meta” options to improve the far future:

- Global priorities research: It may be the case that further research can uncover interventions that would significantly improve the far future, that we aren’t aware of at the moment. Provided that subsequent governments or philanthropists would take due note of the results, this ‘meta-option’ could easily have much greater far-future expected benefits than the best available near-future expected benefits, since it could dramatically increase the expected effectiveness of future governmental and philanthropic action.

- Investing for the future: It might be that we are not living at the most influential time now as we may have a better idea about how to do good in the future, including on how to improve the very far future. In this case we may want to invest for these more influential periods, for example by movement building now, or investing financially. Investing financially may also be high impact on the far future due to investment returns meaning the pot of money grows over time, even in real terms.
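The point about financial returns in the last bullet can be sketched with simple compounding. This is a minimal illustration only: the function name, the 3% real return, and the starting amount are hypothetical assumptions rather than forecasts.

```python
def real_value(initial: float, real_return: float, years: int) -> float:
    """Value of a pot after compounding at a constant real (inflation-adjusted) rate."""
    return initial * (1 + real_return) ** years


# Hypothetical numbers: a pot of 1,000 growing at an assumed 3% real return per year.
for years in (10, 50, 100):
    print(years, round(real_value(1_000, 0.03, years)))
```

The longer the horizon, the more the growth is dominated by compounding, which is why the timing of the "most influential" period matters for this option.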

There are therefore a number of potential ways to impact the far future that have been put forward by EAs. If you think any of the above have serious potential to impact the far future in a positive way, you should answer “Yes” at this point.

Next steps

At this point I would welcome feedback on:

- The general idea of having a cause prioritisation flowchart with guidance: my (ambitious) vision would be for such a flowchart to be used widely by new EAs to help them make an informed decision on cause area, ultimately improving the allocation of EAs to cause areas.

- The flowchart itself: are there any key considerations that have been missed? Are there any other cause areas that should be included? It is very difficult if not impossible to put together a flowchart including all relevant nuances but a final flowchart should include all key considerations.

- The sample guidance: Is this roughly the right length, detail and difficulty to guide someone through the flowchart?

I would also be interested to hear if anyone else would be interested in collaborating on such a flowchart given that there is more work to be done. I should say however that I may abandon this project if feedback is lukewarm/negative and it doesn’t look like pursuing it would be high impact.

"future people are as morally important as those alive now" seems like a very high bar for longtermism. If e.g. you think future people are 0.1% as important, but there's no time discount for when (as long as they don't exist yet), this doesn't prevent you from concluding the future is hugely important. Similarly for some exponential discounts (though they need to be extremely small).

Absolutely agree with that.

My idea of a guided flowchart is that nuances like this would be explained in the accompanying guidance, but not necessarily alluded to in the flowchart itself which is supposed to stay fairly high-level and simple. It may be however that that box can be reworded to something like "Are future people (even in millions of years) of non-negligible moral worth" or something like that.

Ideally someone would read the guidance for each box to ensure they are progressing through the flowchart correctly.

I think if you present a simplified/summarised thing along with more detailed guidance you should assume that almost nobody will read the guidance.

Almost nobody? I'd imagine at least some people are interested in making an informed decision on cause area and would be interested in learning.

You might be right though. I'm not getting a huge amount of positive reception on this post (to put it lightly) so it may be that such a guided flowchart is a doomed enterprise.

EDIT: you could literally make it that you click on a box and guidance pops up so it could theoretically be very easy to engage with it.

Interesting idea, thanks for doing this! I agree it's good to have more approachable cause prioritization models, but there're also associated risks to be careful about:

Also, I think the decision-tree-style framework used here has some inherent drawbacks:

A more powerful framework than decision trees might be favored, though I'm not sure what a better alternative would be. One might want to look at ML models for candidates, but one thing to note is that there's likely a tradeoff between expressiveness and interpretability.

And lastly:

I think there have been some discussions going on about EA decoupling from consequentialism, which I consider worthy. Might be good to include non-consequentialist considerations too.

Thanks for this, you raise a number of useful points.

I guess this risk could be mitigated by ensuring the model is frequently updated and includes disclaimers. I think this risk is faced by many EA orgs, for example 80,000 Hours, but that doesn't stop them from publishing advice which they regularly update.

I like that idea and I certainly don't think my model is anywhere near final (it was just my preliminary attempt with no outside help!). There could be a process with engagement with prominent EAs to finalise a model.

Also fair. However it seems that certain EA orgs such as 80,000 Hours do adopt certain views, naturally excluding other views (for which they have been criticised). Maybe it would make more sense for such a model to be owned by an org like 80,000 Hours which is open about their longtermist focus for example, rather than CEA which is supposed to represent EA as a whole.

As I said to alexjrl, my idea for a guided flowchart is that nuances like this would be explained in the accompanying guidance, but not necessarily alluded to in the flowchart itself which is supposed to stay fairly high-level and simple.

I don't think a flowchart can be 100% prescriptive and final; there are too many nuances to consider. I just want it to raise key considerations for EAs to consider. For example, I think it would be fine for an EA to end up at a certain point in the flowchart and then think to themselves that they should actually choose a different cause area because there is some nuance that the flowchart didn't consider that means they ended up in the wrong place. That's fine - but it would still be good to have a systematic process in my opinion that ensures EAs consider some really key considerations.

Feedback like this is useful and could lead to updating the flowchart itself. I have to say I'm not sure why the most influential time being in the future wouldn't imply investing for that time though - I'd be interested to hear your reasoning.

Fair point. As I said before if an org like 80,000 Hours owned such a model perhaps they wouldn't have to go beyond consequentialism. If CEA did I would suspect that they should.

Thanks for the reply, your points make sense! There is certainly a problem of "degree" to each of the concerns I wrote about in the comment, so arguments both for and against it should be taken into account. (To be clear, I wasn't raising my points to dismiss your approach; instead, they're things that I think need to be taken care of, if we're to take such an approach.)

Caveat: I haven't spent much time thinking about this problem of investing vs direct work, so please don't take my views too seriously. I should have made this clear in my original comment, my bad.

My first consideration is that we need to distinguish between "this century is more important than any given century in the future" and "this century is more important than all centuries in the future combined". The latter argues strongly against investing for the future, but the former doesn't seem to, as by investing now (patient philanthropy, movement building, etc.) you can potentially benefit many centuries to come.

The second consideration is that there're many more factors than "how important this century is". The need of the EA movement is one (and is a particularly important consideration for movement building), personal fit is another, among others.

Quick thoughts:

Thanks for this work, I like your approach! It is visually appealing and easy to follow. It is helpful for me but a little incomplete as I'd like to change some parts.

I think that it could be a good idea to treat this as a project, e.g., 'The EA priorities flowchart (template) project'. You could put the current template in an easily editable/accessible format (draw.io) and share it in occasional updates as you develop it.

IMHO, more people are likely to be receptive to the idea of working together on 'flow-charting how to prioritise by building on your template' than to following whatever prioritisation approach you develop/recommend in a specific flowchart.

While you can make each template based on your/your team's opinions and attempts to prioritise, I think you should recommend that users/readers take it and build their own versions rather than just adopt your perspective. I'd also refer them to relevant resources.

Hope this helps!

I'd be interested in an extended flowchart to prioritize among x-risks and s-risks, with questions like:

Thanks for making this! The idea, reasoning, and initial draft all seem promising/reasonable to me.

Some quick thoughts:

Why is climate change the result of answering "no" to "We can become safe?" and "Small chance of success OK?"

Just to clarify this was just my first attempt with no outside review and it is far from final, so I'm open to the possibility that there are problems with the flowchart itself.

Also, as I have said to other commenters my idea of a guided flowchart is that nuances and explanations would be in the accompanying guidance, but not necessarily alluded to in the flowchart itself which is supposed to stay fairly high-level and simple.

On your specific question, my thinking was:

I guess my main point though is that this flowchart is far from final and there are certainly improvements that can be made! Also that accompanying guidance would be essential for such a flowchart.

Great chart! Another minor wording thing: I don’t know whether to interpret “Most influential time in future” as “[This is the] most influential time in future” or “The most influential time is still to come.” From the context, I think it’s the second, but my first reading was the first. :-)

I think "Speeding up sustainable progress" is presented here substantially too positively, or more specifically that some very important counterpoints aren't raised but should be. More discussion can be found at https://forum.effectivealtruism.org/tag/speeding-up-development . And I think (from memory) the Greaves & MacAskill paper cited either doesn't mention or argues against a focus on speeding up development?

Any update here? Did you refine the flow chart further or is it still the same as above?

No update. Interest seemed to be somewhat limited.