Epistemic Status

Related Posts

- Where The Falling Einstein Meets The Rising Mouse (Slatestarcodex)

- The range of human intelligence (AI Impacts)

Preamble: Why Does This Matter?

This question matters for building intuitions about takeoff dynamics. The breadth of the human cognitive spectrum (in an absolute sense) determines how much time we have between AI capable enough to be economically impactful and AI capable enough to be existentially dangerous.

Our beliefs about this question inform our beliefs about:

- The viability of iterative alignment strategies

- The feasibility of attaining alignment escape velocity

- How robust to capability amplification alignment techniques need to be

Introduction

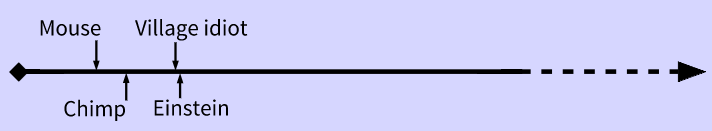

The Yudkowsky-Bostrom intelligence chart often depicts the gap between a village idiot and Einstein as very miniscule (especially compared to the gap between the village idiot and a chimpanzee):

Challenge

However, this claim does not seem to me to track reality very well, nor to have been borne out empirically. For many cognitive tasks, the median practitioner often seems much[1] closer to a beginner (completely unskilled, or performing at the level of random noise) than to the best in the world ("peak human"):

- Mathematics

- Theoretical physics

- Theoretical research in general

- Fictional writing

- Writing in general

- Programming

- Music

- Art

- Creative pursuits in general

- Starcraft

- Games in general

- Invention/innovation in general

- Etc.

Median practitioners being much closer to beginners than to the best in the world may be the norm rather than the exception.

Furthermore, for some tasks (e.g. chess), peak human seems to be closer to optimal performance than to the median human.

I also sometimes get the sense that, for some activities, median humans are closer to an infant, a chimpanzee, a rock, or an ant (in that they cannot do the task at all) than to peak human. For example, I think the median human basically cannot:

- Invent general relativity before 1920

- Solve the Millennium Prize Problems

- Solve major open problems in physics/computer science/mathematics/other quantitative fields

And this incapability goes so far that they cannot usefully contribute to these problems at all[2].

Lacklustre Empirical Support From AI's History

I don't think the history of AI has necessarily provided empirical validation for the Yudkowsky intelligence spectrum. In many domains, AI seems to take quite a long time (several years to decades) to go from parity with dumb humans to exceeding peak human. This was the case for Checkers, Chess, and Go, and it looks like it will be the case for driving as well[3].

Generative art may be one domain in which AI quickly went from subhuman to vastly superhuman.

Conclusions

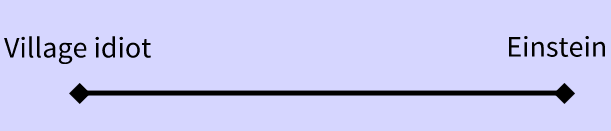

The traditional intelligence spectrum seems to track reality better for many domains:

[1]

My intuitive sense is that the difference in scale between the two gaps is often not 2x or 3x but is measured in orders of magnitude.

I.e., the gap in chess ability between Magnus Carlsen and the median human is 10x to 1000x the gap between the median human and a dumb human.

[2]

I wouldn't necessarily claim that the median human is closer to infants than to peak humans in those domains, but the claim doesn't seem obviously wrong to me either.

[3]

I'm looking at the time from the first artificial system reaching some minimal cognitive level in the domain until an artificial system becomes superhuman. So I do not count AlphaZero/MuZero surpassing humans after however many hours of self-play as validation, given that the first Chess/Go systems to reach dumb-human level came decades earlier.

That said, the self-play leap at Chess/Go may be more relevant to forecasting how quickly transformative systems would cross the human frontier.

Note that this is still a tiny amount of time, historically speaking.