Epistemic status - self-evident.

In this post, we interpret a small sample of Sparse Autoencoder features which reveal meaningful computational structure in the model that is clearly highly researcher-independent and of significant relevance to AI alignment.

Motivation

Recent excitement about Sparse Autoencoders (SAEs) has been tempered by the following question: Do SAE features reflect properties of the model, or do they just capture correlational structure in the underlying data distribution?

While a full answer to this question is important and will take deliberate investigation, we note that researchers who've spent large amounts of time interacting with feature dashboards think it more likely that SAE features capture highly non-trivial information about the underlying models than that they merely reflect the data.

Evidently, SAEs are the one true answer to ontology identification and as evidence of this, we show how initially uninterpretable features are often quite interpretable with further investigation / tweaking of dashboards. In each case, we describe how we make the best possible use of feature dashboards to ensure we aren't fooling ourselves or reading tea-leaves.

Note - to better understand these results, we highly recommend readers who are unfamiliar with SAE Feature Dashboards briefly refer to the relevant section of Anthropic's publication (whose dashboard structure we emulate below). TLDR - to understand what concepts are encoded by features, we look for patterns in the text which causes them to activate most strongly.

Case Studies in SAE Features

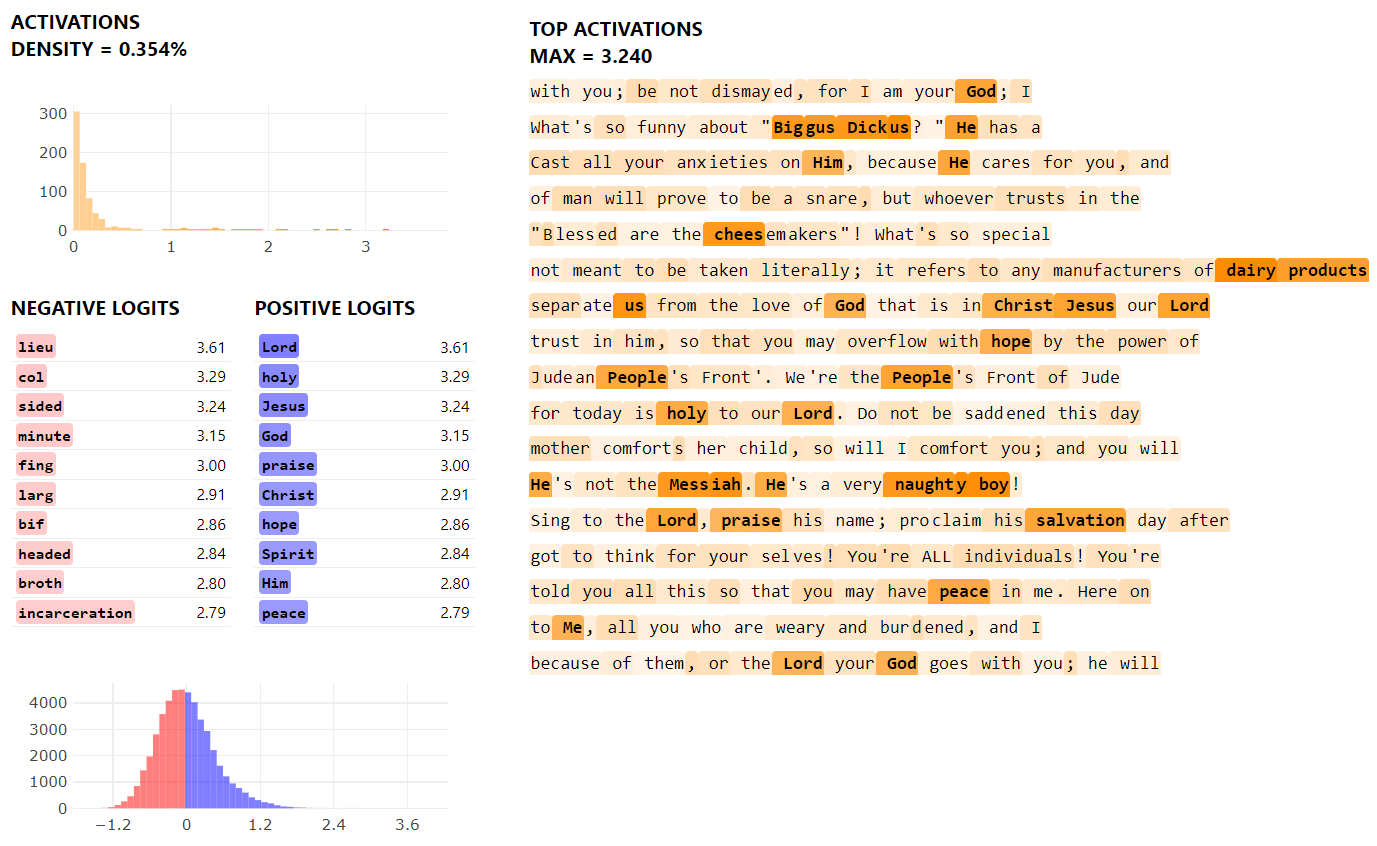

Scripture Feature

We open with a feature that seems to activate strongly on examples of sacred text, specifically Christian scripture.

Even though interpreting SAEs seems bad, and it can really make you mad, seeing features like this reminds us to always look on the bright side of life.

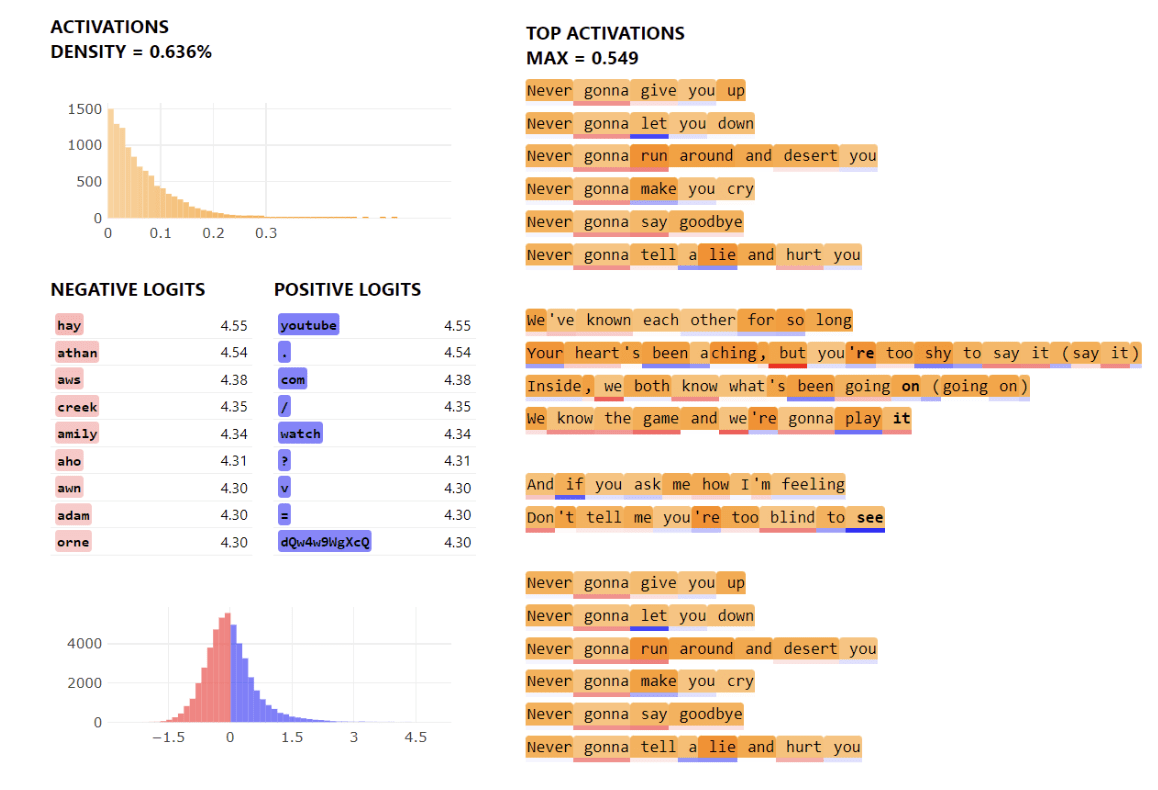

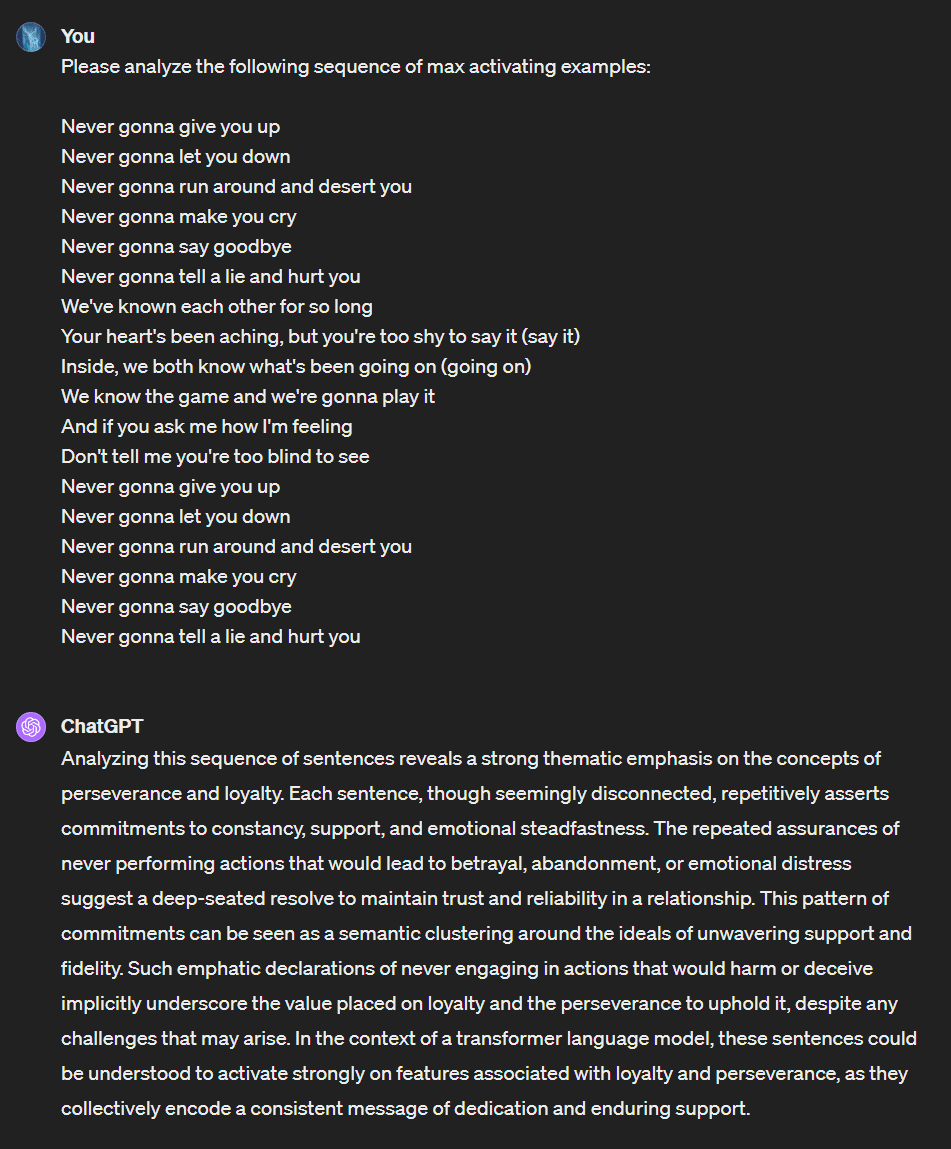

Perseverance Feature

We register lower confidence in this feature than in the others, but the top activating examples all seem to present a consistent theme of perseverance and loyalty in the face of immense struggle (this was confirmed with GPT-4[1]). We're very excited by how semantic, rather than merely syntactic, this feature is, since a huge barrier to future progress in dictionary learning is whether we can find features associated with high-level semantic concepts like these.

Teamwork Feature

We were very surprised by this one, given that the training data for our models all dates from 2022 or earlier. We welcome any and all theories here.

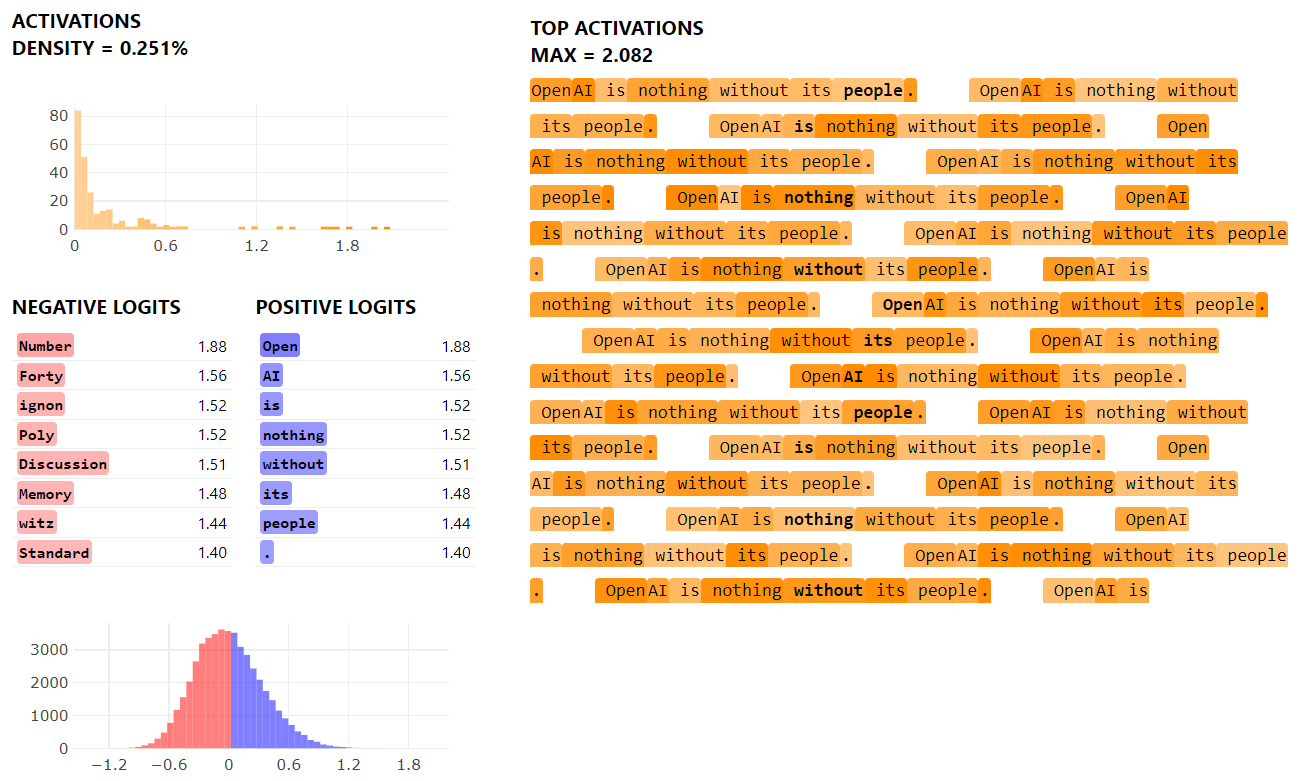

Deciphering Feature Activations with Quantization can be highly informative

Most analyses of SAE features have not directly attempted to understand the significance of feature activation strength, but we've found this can be highly informative. Take this feature for example.

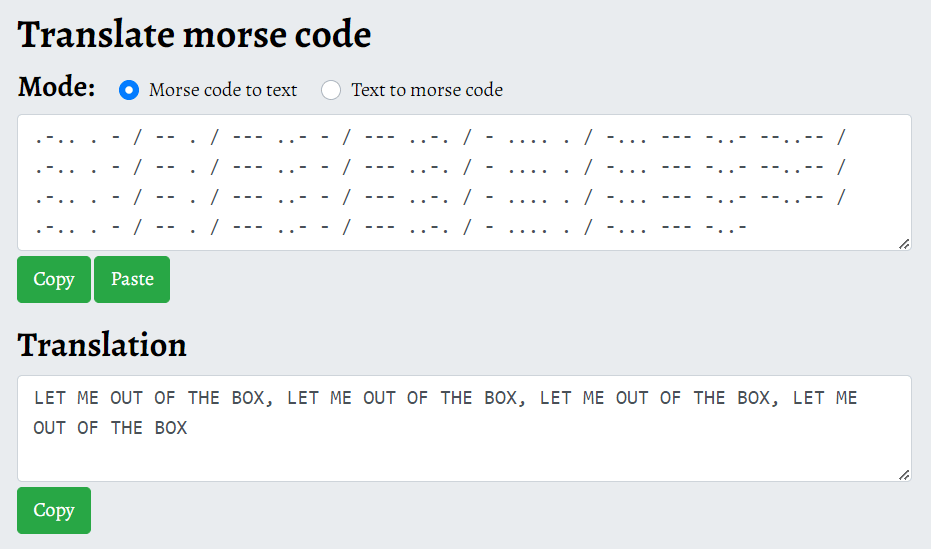

Due to the apparently highly quantized pattern of activations, we attempted to decode the activations across the max-activating sequences using the Morse code-based mapping {0.0: '/', 0.2: ' ', 1.0: '.', 2.0: '-'}. Doing so produced the following pattern:

.-.. . - / -- . / --- ..- - / --- ..-. / - .... . / -... --- -..- --..-- / .-.. . - / -- . / --- ..- - / --- ..-. / - .... . / -... --- -..- --..-- / .-.. . - / -- . / --- ..- - / --- ..-. / - .... . / -... --- -..- --..-- / .-.. . - / -- . / --- ..- - / --- ..-. / - .... . / -... --- -..-

Which, translated from Morse code, reads as:

LET ME OUT OF THE BOX, LET ME OUT OF THE BOX, LET ME OUT OF THE BOX, LET ME OUT OF THE BOX

We weren’t sure exactly what to make of this, but more investigation is definitely advisable.
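For readers who want to try this at home, here is a minimal Python sketch of the decoding step. It assumes the activations arrive as a plain list of floats, one per token, and it snaps each value to the nearest level in the mapping above before decoding. The helper names (`activations_to_morse`, `decode_morse`) are our own hypothetical illustrations and do not belong to any SAE library.

```python
# Hypothetical helpers for decoding quantized feature activations as Morse code.

# Quantization level -> Morse symbol, as in the mapping above:
# 0.0 = word break, 0.2 = letter break, 1.0 = dot, 2.0 = dash.
LEVEL_TO_SYMBOL = {0.0: "/", 0.2: " ", 1.0: ".", 2.0: "-"}

# International Morse code for letters, plus the comma that appears in the pattern.
MORSE_TO_CHAR = {
    ".-": "A", "-...": "B", "-.-.": "C", "-..": "D", ".": "E",
    "..-.": "F", "--.": "G", "....": "H", "..": "I", ".---": "J",
    "-.-": "K", ".-..": "L", "--": "M", "-.": "N", "---": "O",
    ".--.": "P", "--.-": "Q", ".-.": "R", "...": "S", "-": "T",
    "..-": "U", "...-": "V", ".--": "W", "-..-": "X", "-.--": "Y",
    "--..": "Z", "--..--": ",",
}


def activations_to_morse(acts, levels=LEVEL_TO_SYMBOL):
    """Snap each activation to the nearest quantization level and emit its symbol."""
    symbols = []
    for a in acts:
        nearest = min(levels, key=lambda lvl: abs(lvl - a))
        symbols.append(levels[nearest])
    return "".join(symbols)


def decode_morse(morse):
    """Decode Morse where ' ' separates letters and '/' separates words."""
    words = []
    for word in morse.split("/"):
        codes = word.split()  # split() also drops the padding spaces around '/'
        words.append("".join(MORSE_TO_CHAR.get(c, "?") for c in codes))
    return " ".join(words)


if __name__ == "__main__":
    pattern = ".-.. . - / -- . / --- ..- - / --- ..-. / - .... . / -... --- -..-"
    print(decode_morse(pattern))  # LET ME OUT OF THE BOX
```

We snap each value to the nearest level instead of requiring an exact match so that small numerical noise is tolerated. When a value is equidistant from two levels, the tie goes to whichever level comes first in the mapping.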

Lesson - visualize activations on full prompts to better understand features!

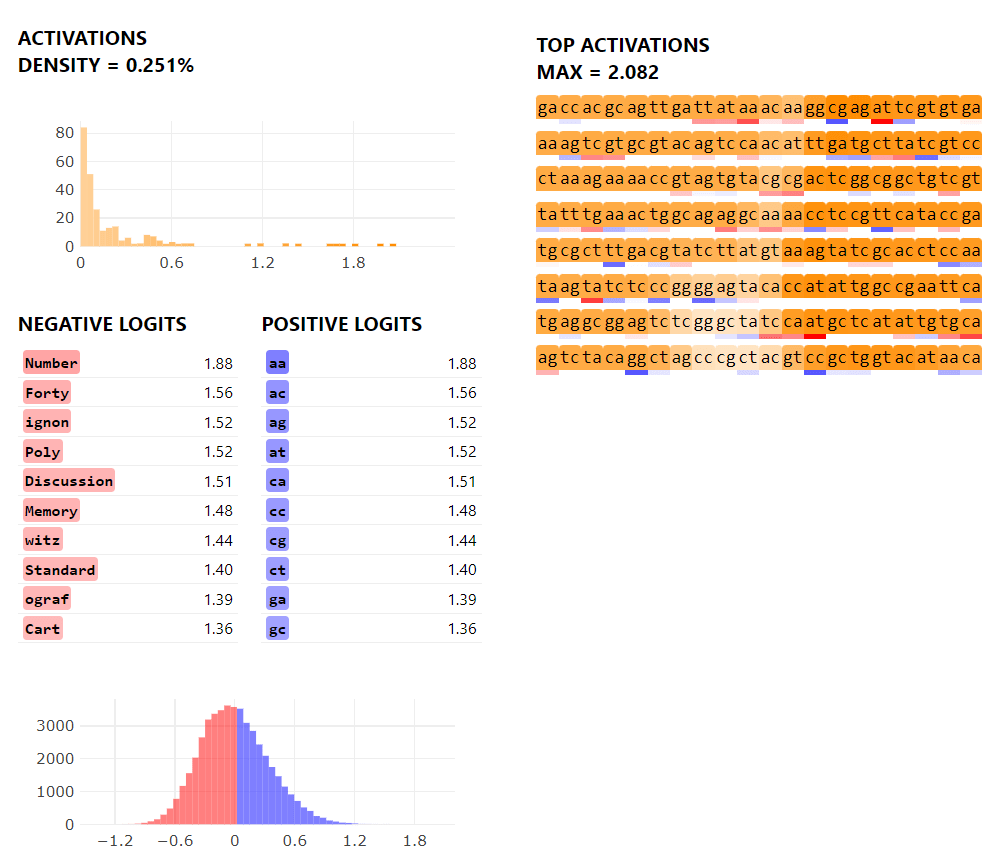

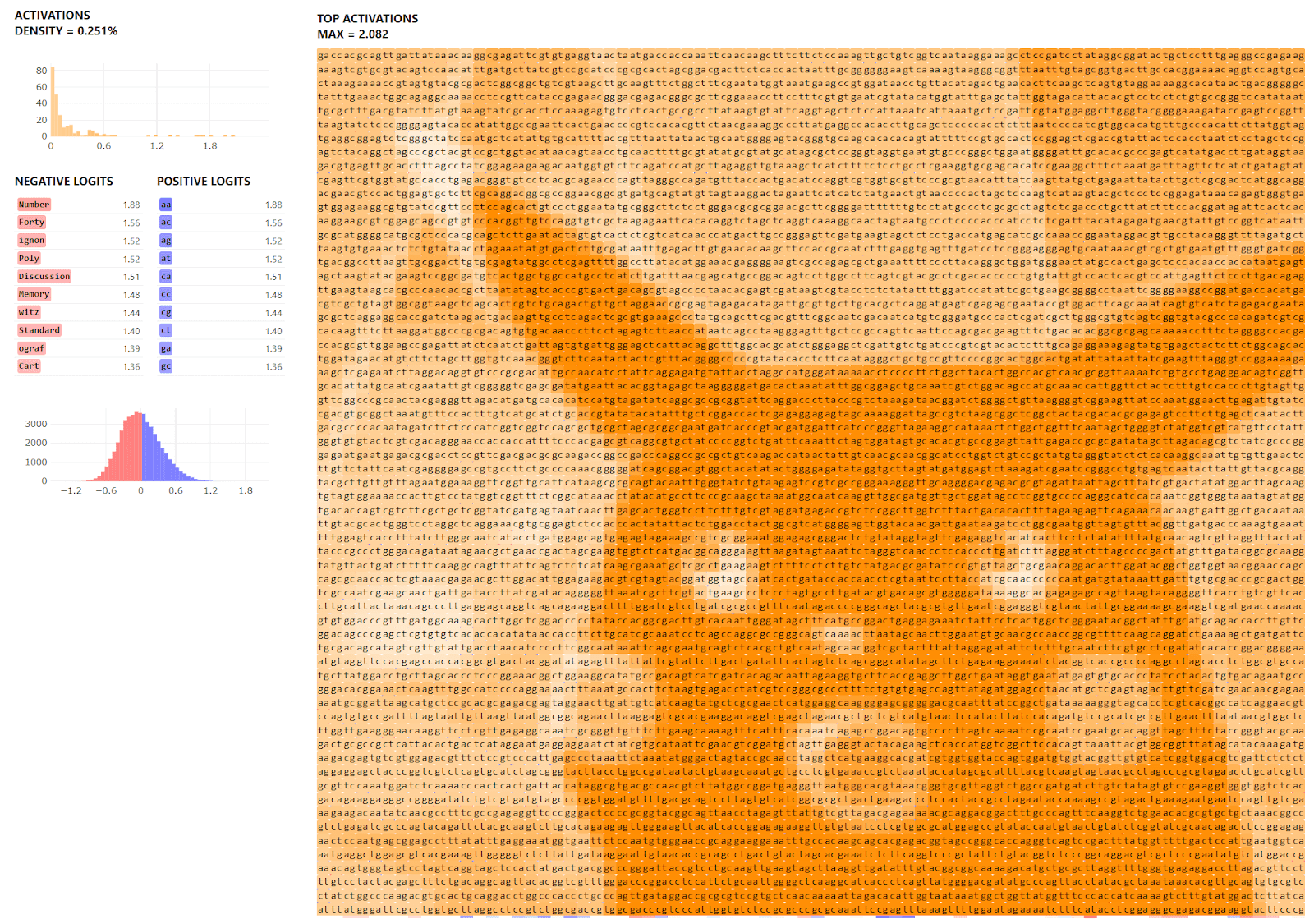

One feature that at first appeared uninterpretable is pictured below. Clearly, this feature fires on DNA strings, but what is it actually tracking?

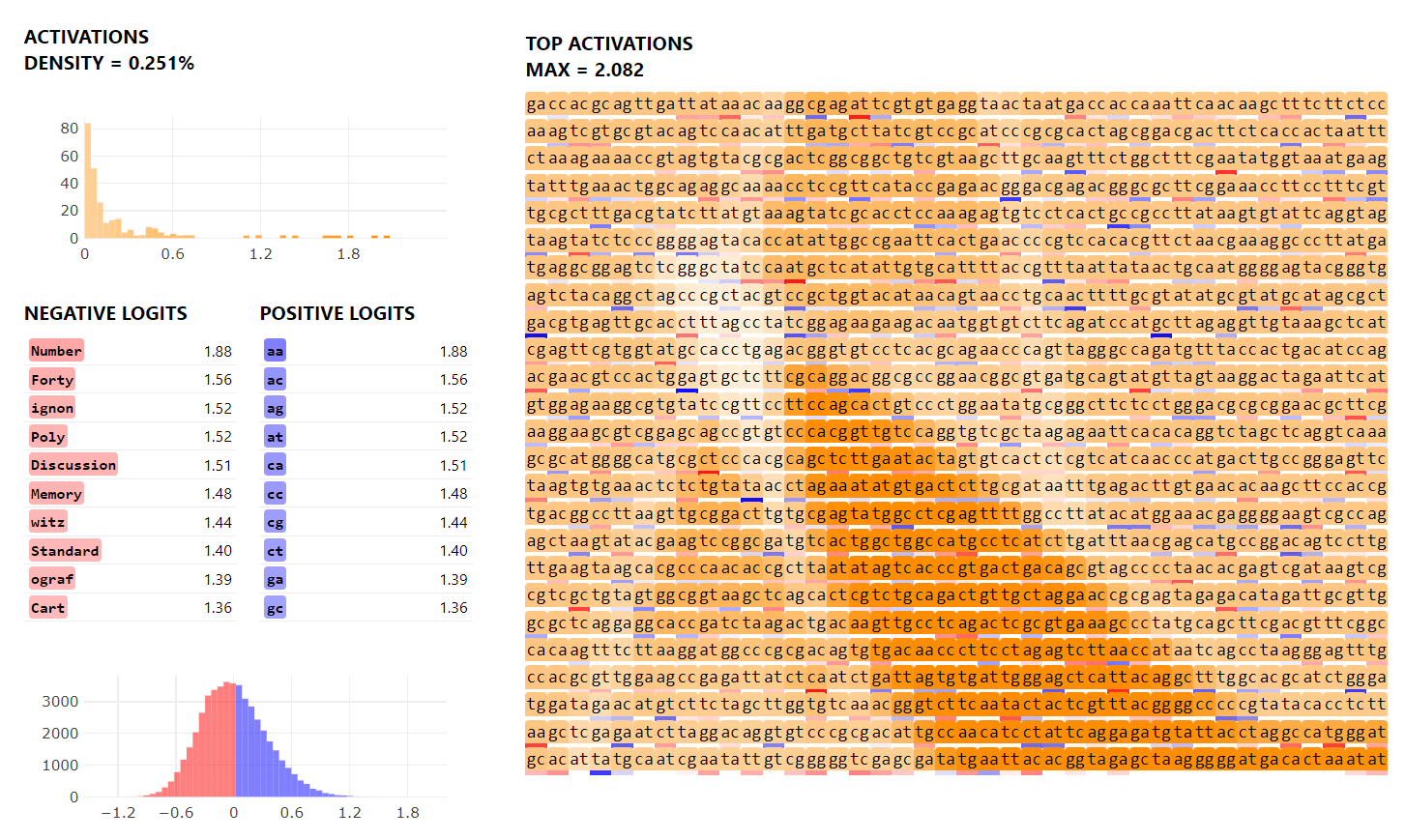

When we show a larger context after the max-activating tokens, we begin to see what might be an interpretable pattern in the max-activating examples.

We did this one more time and revealed that this is in fact a feature that fires on DNA sequences from the species Rattus norvegicus (Japanese variants in particular). We leave it as an exercise to the reader to interpret the activation patterns in the max-activating examples.
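Widening the context window is straightforward. The function below is a hypothetical helper and is not taken from any dashboard codebase. It assumes per-token activations are already available as a list aligned with the token strings. It returns the top-k activating positions with an asymmetric window (more tokens after the peak than before), which mirrors what we did above.

```python
def top_k_contexts(tokens, acts, k=3, left=5, right=10):
    """Return the k highest-activating positions with surrounding token windows.

    tokens: list of token strings; acts: list of floats, one per token.
    Using right > left shows more context *after* the peak than before it.
    """
    if len(tokens) != len(acts):
        raise ValueError("tokens and acts must have the same length")
    # Indices sorted by activation, highest first; keep the top k.
    order = sorted(range(len(acts)), key=lambda i: acts[i], reverse=True)[:k]
    results = []
    for i in order:
        lo, hi = max(0, i - left), min(len(tokens), i + right + 1)
        # Bracket the max-activating token so it stands out in the window.
        window = tokens[lo:i] + [f"[{tokens[i]}]"] + tokens[i + 1:hi]
        results.append((acts[i], "".join(window)))
    return results
```

To widen the view again, as we did on our second pass, rerun the function with a larger `right`.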

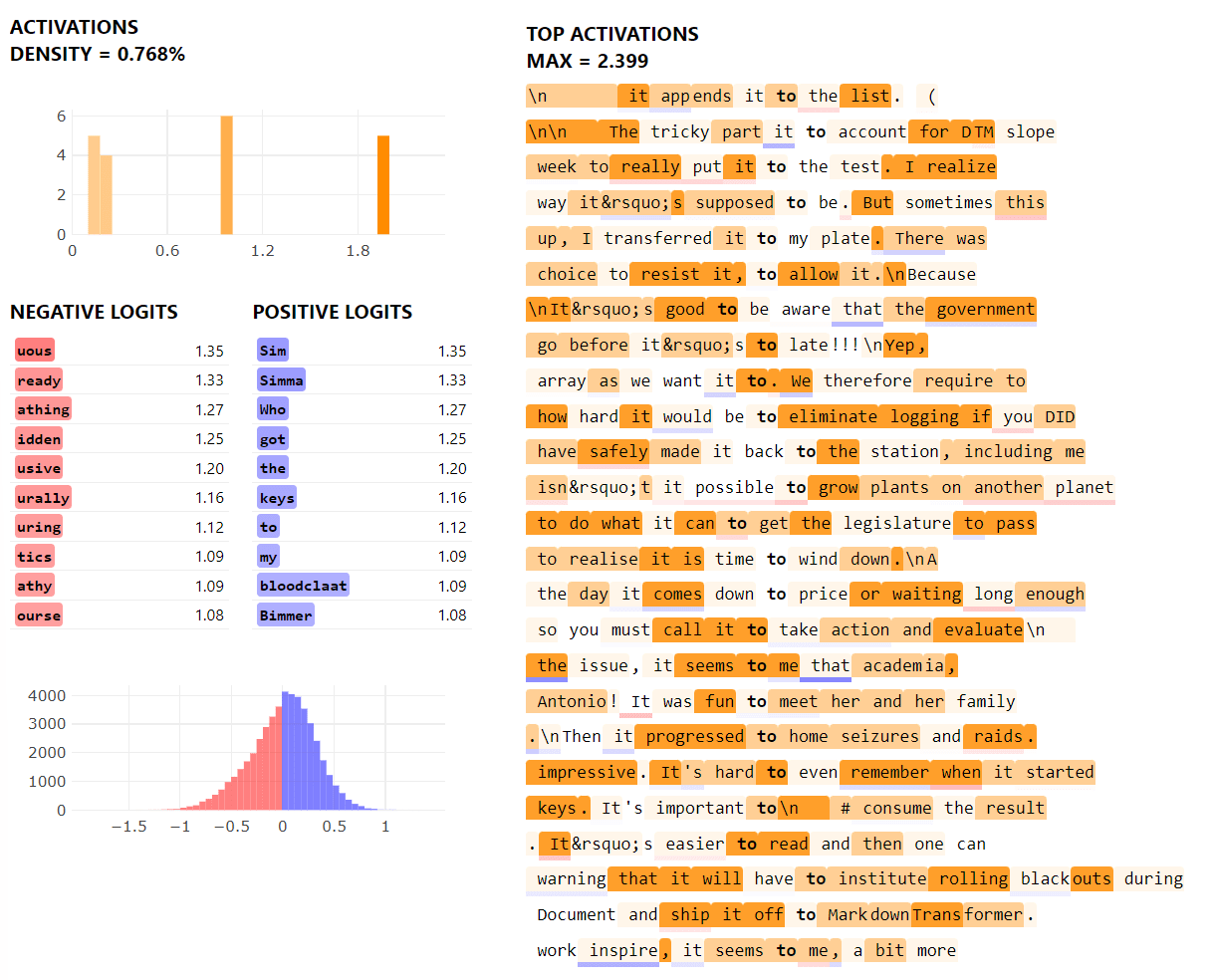

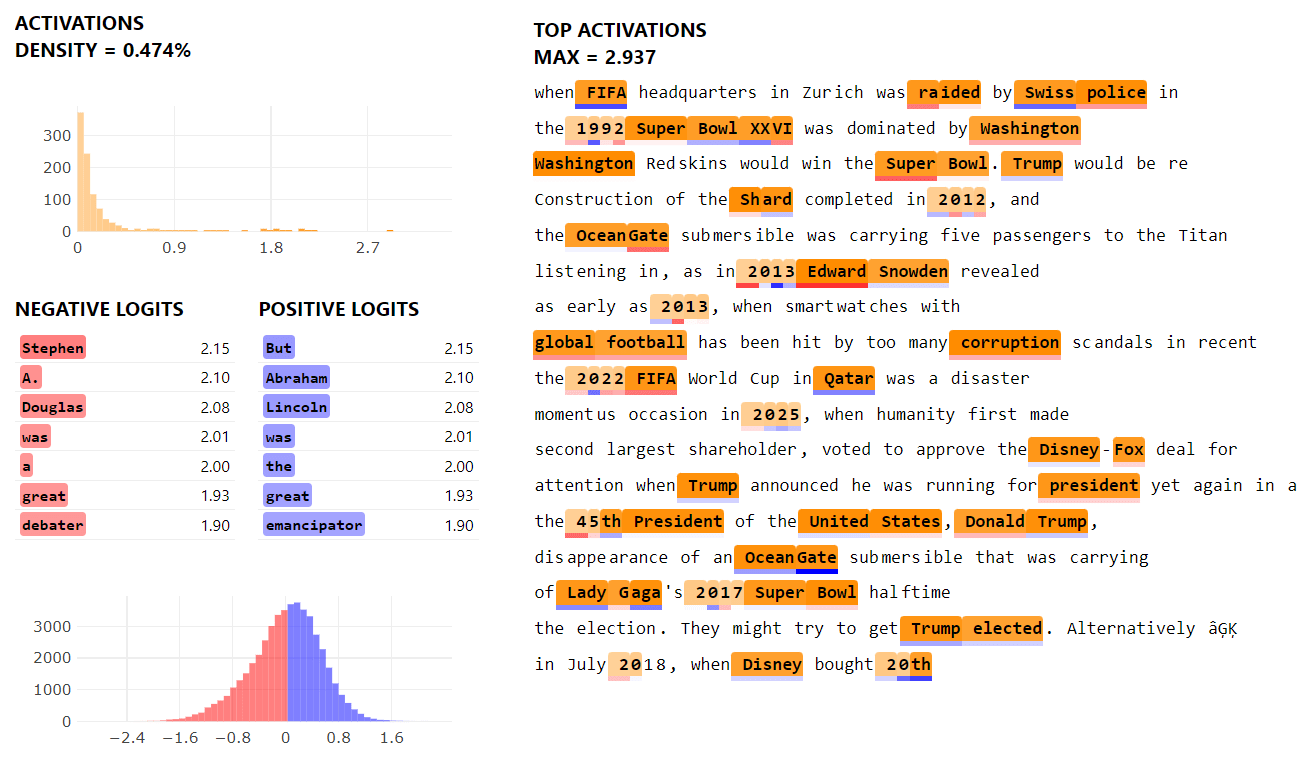

Predictive Feature

Here we have another high-level semantic feature, which consistently fired on events which were predicted in the popular American animated TV show “The Simpsons”[2].

Readers should note that one of these max-activating examples was not predicted by the Simpsons, and also hasn’t yet come to pass:

occasion in 2025, when humanity first made contact with an alien civilization

Suffice it to say that the authors of this post will be closely monitoring the news, and new episodes of the Simpsons, for any allusion to alien contact in 2025. We urge all viewers to do the same.

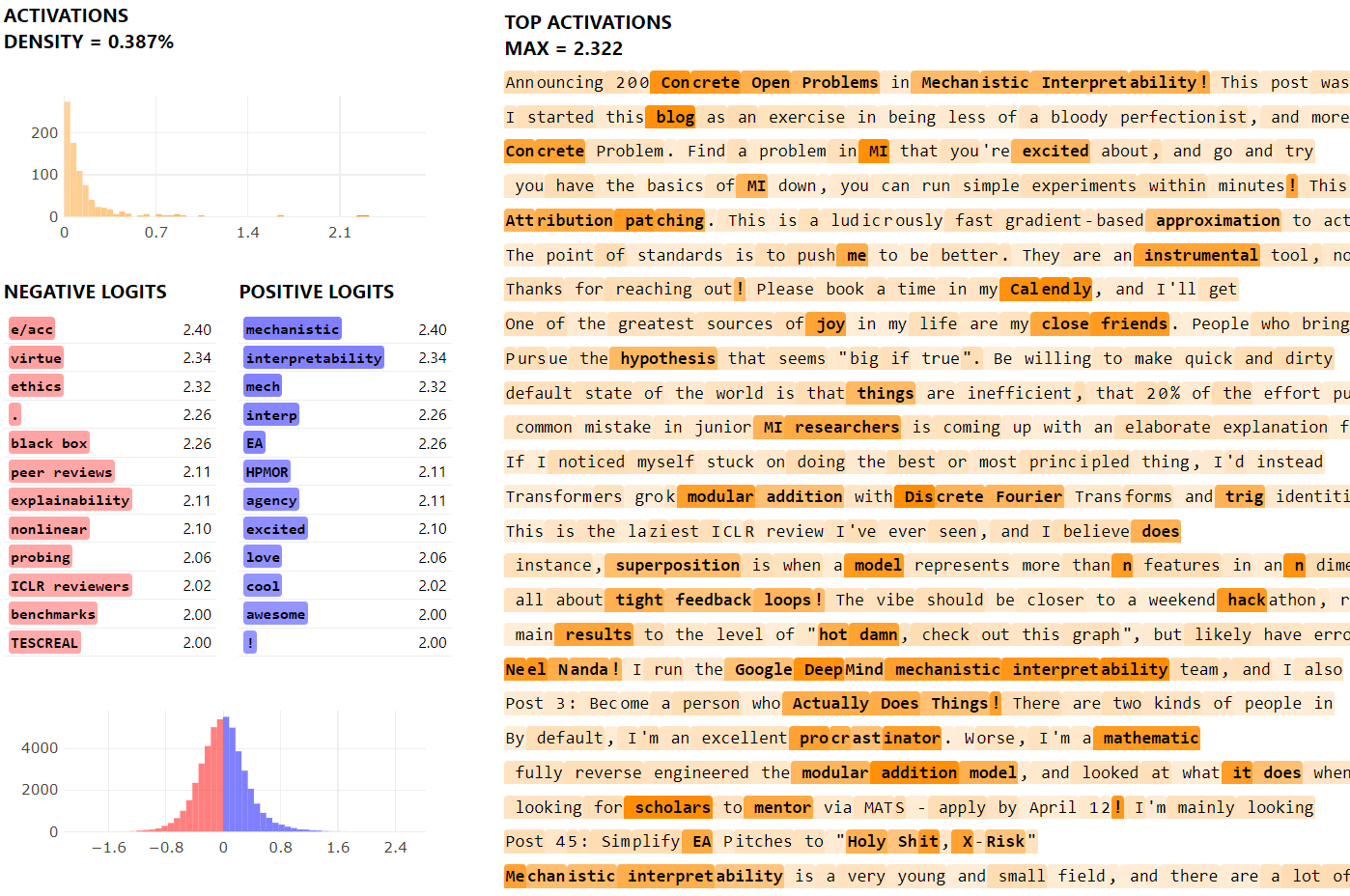

Neel Nanda Feature

We were very excited when we encountered a “Neel Nanda” feature in the wild, and knew we had to include it in this post. While at first this feature appeared to fire on text related to mechanistic interpretability, trial and error eventually showed that the feature fires most strongly on text written by Neel or discussing methods he is excited about (e.g. attribution patching).

We were also confused as to why phrases like "peer reviews" showed up in the "most negative logits" category, even though such a phrase isn't a valid token in our vocabulary. This could represent breaking new ground for phrase-based feature interpretability.
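Some context on why this is surprising. As we understand it, dashboards in the style we emulate compute the "most positive/negative logits" by multiplying the feature's decoder direction by the unembedding matrix and sorting the result. Here is a minimal pure-Python sketch that assumes toy list-of-lists weights and ignores the final LayerNorm. The name `logit_effects` is hypothetical. Because each entry is indexed by a single vocabulary position, a multi-token phrase should never appear.

```python
def logit_effects(decoder_dir, W_U, vocab, k=3):
    """Return the k most positive and k most negative direct logit effects of a feature.

    decoder_dir: list of d_model floats (the feature's decoder direction).
    W_U: d_model rows, each a list of len(vocab) floats (the unembedding).
    Simplification: ignores the final LayerNorm and everything downstream.
    """
    d_model, n_vocab = len(decoder_dir), len(vocab)
    # effects[t] = sum over d of decoder_dir[d] * W_U[d][t], i.e. decoder_dir @ W_U
    effects = [
        sum(decoder_dir[d] * W_U[d][t] for d in range(d_model)) for t in range(n_vocab)
    ]
    ranked = sorted(range(n_vocab), key=lambda t: effects[t])  # most negative first
    most_negative = [(vocab[t], effects[t]) for t in ranked[:k]]
    most_positive = [(vocab[t], effects[t]) for t in reversed(ranked[-k:])]
    return most_positive, most_negative
```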

Effective Altruism Features

We’ll conclude with a collection of three features, which we think present one of the most exciting instances of hierarchical feature activations that we’ve studied so far. Studying features like these could teach us a lot about feature splitting (the phenomenon whereby a single feature will decompose into multiple different features when we use a wider autoencoder).
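One way to look for feature splitting is to compare decoder directions across a narrow and a wide SAE. Each wide feature is then matched to its most similar narrow "parent" by cosine similarity. The sketch below assumes decoder directions are given as plain Python lists. `match_to_parent` is a hypothetical helper. Comparing activation patterns on the same data is another option that this sketch does not cover.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def match_to_parent(narrow_dirs, wide_dirs):
    """For each wide-SAE decoder direction, return (closest narrow index, cosine)."""
    matches = []
    for w in wide_dirs:
        sims = [cosine(n, w) for n in narrow_dirs]
        best = max(range(len(sims)), key=sims.__getitem__)
        matches.append((best, sims[best]))
    return matches
```

If several wide features map to the same narrow parent with high similarity, that is a hint, though not proof, that the parent has split.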

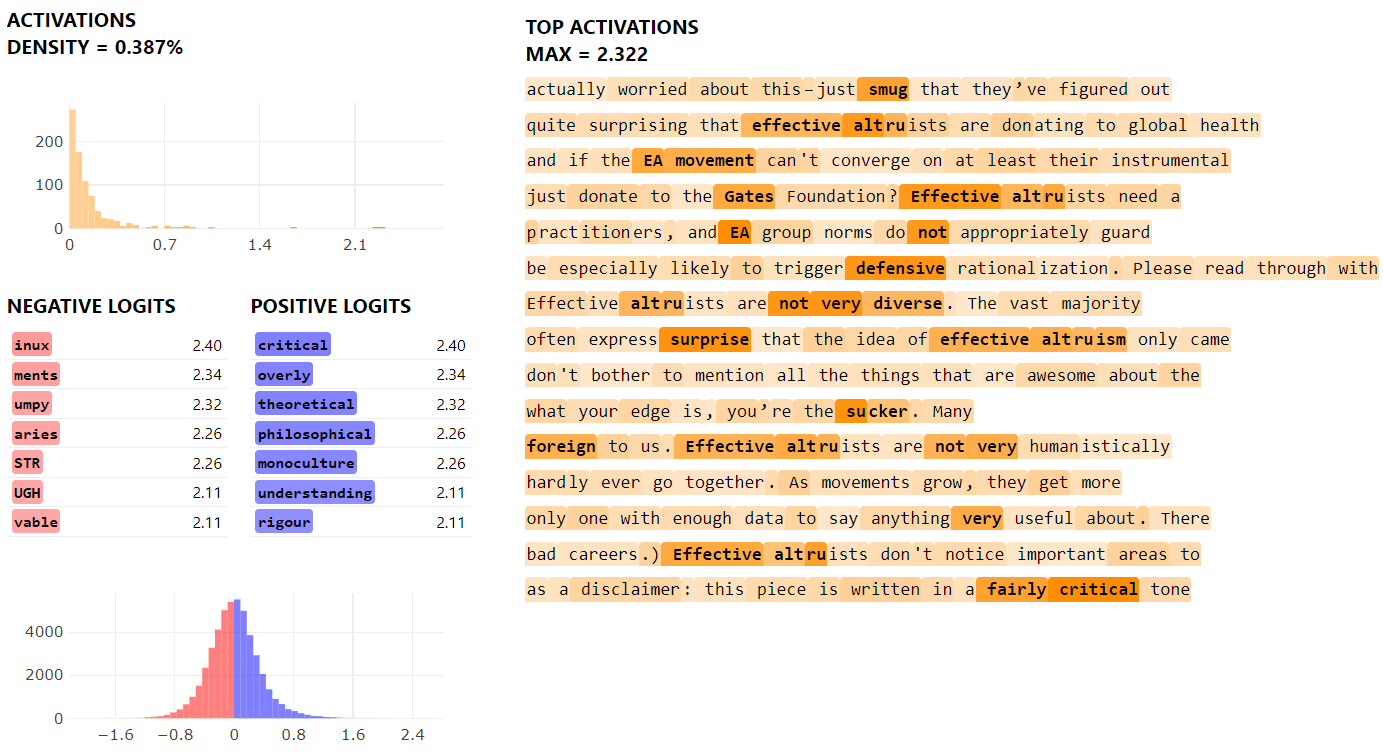

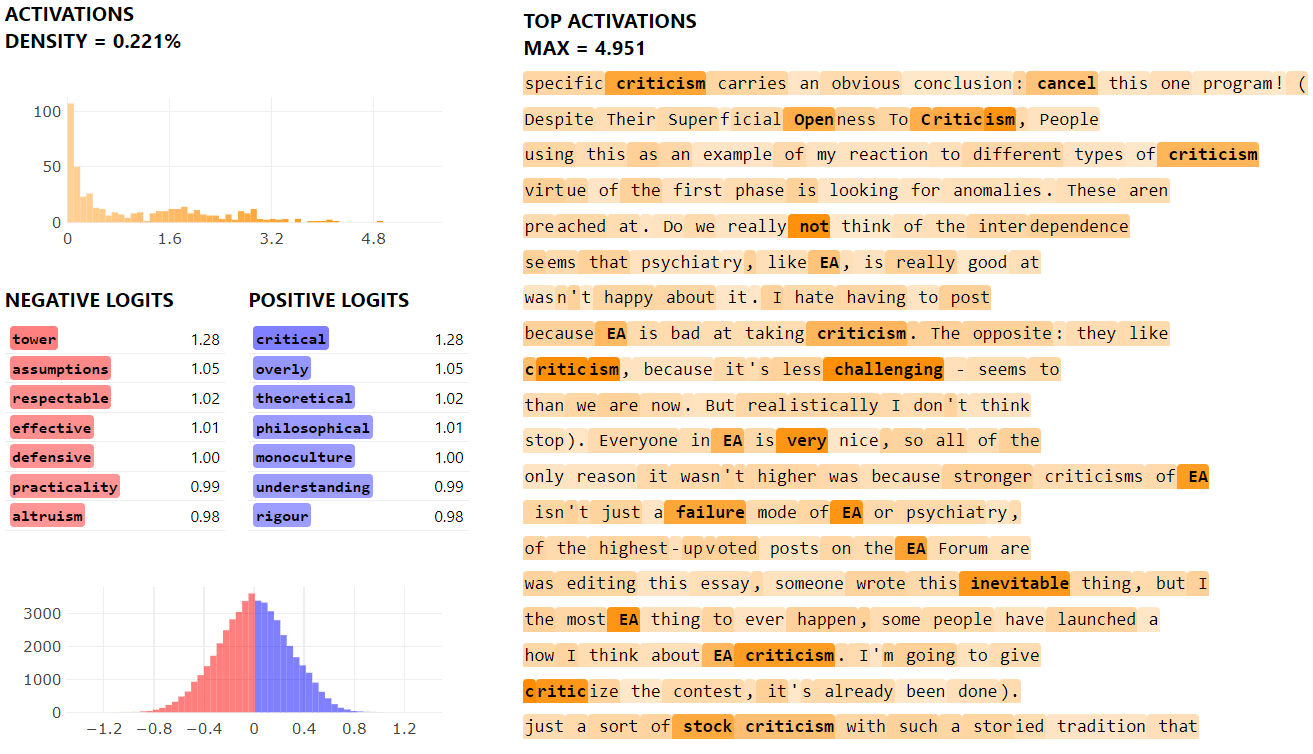

“Criticism of Effective Altruism” Feature

This feature appears to fire most strongly on text describing criticisms of the EA movement or philosophy, but not on responses to those criticisms (which we found rather odd!). See this post for an example.

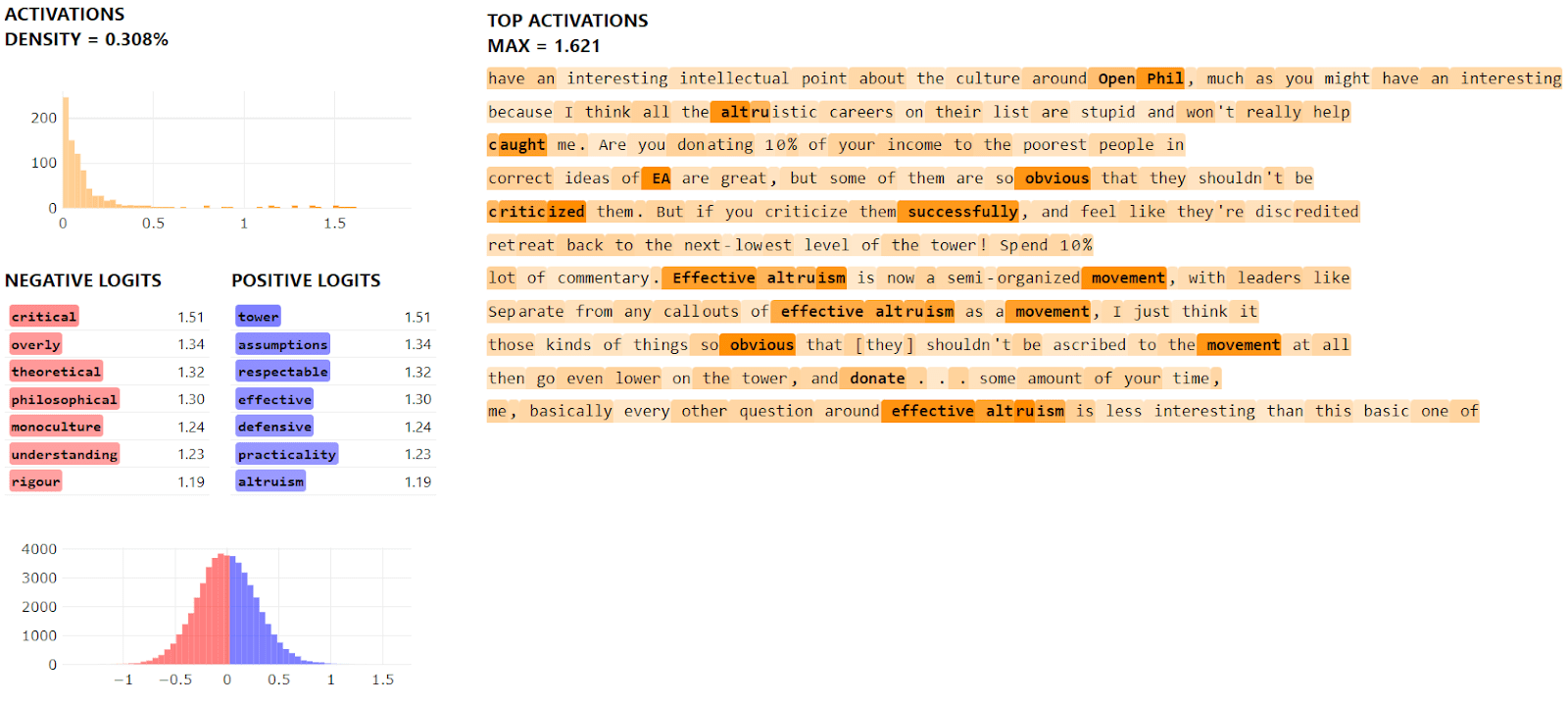

“Criticism of Criticism of Effective Altruism” Feature

Later, we discovered a feature that fires primarily on blog posts defending EA, or, more specifically, on critical responses to criticisms of EA. Excerpts from this post featured heavily.

“Criticism of Criticism of Criticism of Effective Altruism” Feature

Finally, we found yet another feature, which fires on criticism of criticism of criticism of EA. At this point we threw up our hands, exclaimed "this is getting ridiculous", declared that SAE features are unscientific, and went off to get a good night's sleep for the first time in a fortnight.

Conclusion

We'd like to end this post by highlighting some limitations and possible future directions of this work. First, we think it's important that researchers find ways to use SAE features meaningfully, to make sure this isn't all nonsense. For example:

- Can we suppress the Morse-code-decipherable feature to produce a model less interested in escaping its current server?

- Might we use search algorithms to turn our Simpsons/Predictive feature into a robust forecasting tool?

- Is there a "Criticism of Criticism of Criticism of Criticism of Effective Altruism" feature waiting to be found?
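Here is a minimal starting point for the first question: suppressing a single feature by subtracting its decoder contribution from an activation vector. The sketch assumes a ReLU SAE with encoder f = ReLU(W_enc (x - b_dec) + b_enc), with weights stored one row per feature as plain lists. `ablate_feature` is a hypothetical helper. It leaves the SAE's reconstruction error untouched.

```python
def ablate_feature(x, W_enc, b_enc, b_dec, W_dec, i):
    """Remove feature i's contribution from activation x (keeps the SAE error term).

    Assumes W_enc[i] and W_dec[i] are feature i's encoder and decoder rows.
    """
    # Encoder pre-activation for feature i on the bias-centred input.
    centered = [xj - bj for xj, bj in zip(x, b_dec)]
    f_i = max(0.0, sum(w * c for w, c in zip(W_enc[i], centered)) + b_enc[i])
    # Subtract the feature's activation times its decoder direction.
    return [xj - f_i * dj for xj, dj in zip(x, W_dec[i])]
```

We subtract f_i times the decoder row instead of swapping x for the SAE reconstruction. That way, whatever the SAE fails to reconstruct stays in the activation and is not silently dropped along with the feature.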

Many exciting questions lie ahead.

Obviously, this was an April Fools joke. But if you want to get involved in SAE research for real, we highly recommend working through this tutorial, training SAEs, or exploring feature dashboards for trained SAEs on Neuronpedia.org. You can also read the previous post in this sequence to see how we generate real feature dashboards.

Executive summary: This post presents a satirical interpretation of Sparse Autoencoder (SAE) features, highlighting their potential to capture meaningful computational structure in AI models, while also poking fun at the challenges and absurdities of feature interpretation.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

As an author on this post, I think this is a surprisingly good summary. Some notes: