TLDR: Group leaders! Please get in touch with us if you have done any outreach testing, or if you are even a little bit interested in doing so at any point in the future, at any scale.

Introduction

I am working with David Reinstein, the EA Market Testing Team (EAMT) and several collaborators to organize and expand EA university and city groups’ outreach testing. This forum post offers an overview of the EAMT project as a whole. The public Gitbook also has more information, as well as our data, progress, information, and resources. The University/City Groups section specifically addresses this topic.

Objective

We want to promote systematic testing, analysis, and sharing evidence about ‘what works best’ in outreach, intake, and retention. Groups are an important part of growing the EA community, and University groups offer a unique opportunity for redirecting people towards solving the world's most pressing problems. Many students who are sympathetic to EA ideas simply haven’t heard of EA yet. We want to maximize the ability of groups to not only reach new EAs but also create stronger EAs.

To the best of our knowledge, there hasn’t been a sustained effort across universities to consolidate data or do substantial tests (randomized trials) spanning multiple years. Preliminary efforts suggest that many groups have pockets of knowledge, resources, and tools that aren’t being effectively shared, such as…

- Independent strategy documents

- Anecdotal and descriptive evidence

- Data from small-scale independent testing (with minimal analysis)

- Plans, methods, and survey instruments for testing

- Lists of the relevant contacts

Our Reasoning and Methodology

Benefits of Collaboration

Working autonomously helps each group find methods that suit it, but much of this work overlaps between groups. Where we can, we want to combine these efforts and have universities test the same things. This can help them save time and money, improve testing, and help disseminate ideas that raise student interest. By putting these efforts in one place, we can see what works best. Groups can then use these ideas, avoid repeating work, and make sure that organizations and universities are working together well.

Should every group work on its own? This ‘autonomy’ could make sense if each university EA group had different goals, or operated in a very different context. It might be necessary if communication between groups was difficult, or if there were big ‘free-rider’ problems between groups. We don’t think this is the case. Although universities differ, there is plenty of overlap. People are in EA precisely because they want to work towards shared goals.

This collaboration enables economies of scope and scale. Student groups don’t need to individually waste hours designing their own materials to test, planning experimental designs, writing surveys to measure engagement, getting access to survey and promotion platforms, and analyzing the results. These can largely be done and set centrally (while allowing each group to adapt the materials to their particular circumstances).

Stronger Trials

It’s also often difficult for an individual group to cleanly conduct a test. If they want to compare two approaches they may need to (e.g.) run two versions of a fellowship at the same time. This duplication can be costly, and it also risks ‘contamination’ (students in Fellowship A are likely to talk to those in Fellowship B, making it difficult to cleanly infer the relative impact). With an organized collaboration, the ‘randomization of treatment assignments’ (e.g., which fellowship material to use) could be done between different universities. [It could also be done between different university-years and units, e.g., with A-B vs B-A designs.] We also get the benefit of larger sample sizes [as well as comparisons across contexts (‘random effects’)], increasing statistical power and robustness.
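As a rough illustration of what randomizing between universities with an A-B vs B-A design could look like, here is a minimal Python sketch. The function name `assign_crossover`, the placeholder group names, and the two-period structure are assumptions made for this example; they are not EAMT's actual procedure.

```python
import random


def assign_crossover(universities, seed=0):
    """Randomly split groups into two sequences: A-then-B and B-then-A.

    Hypothetical helper: each university runs one fellowship version in
    period 1 and the other in period 2, so no campus runs both at once.
    """
    rng = random.Random(seed)   # fixed seed so the assignment can be audited
    shuffled = list(universities)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2   # with an odd count, the extra group gets B-A
    schedule = {}
    for i, uni in enumerate(shuffled):
        schedule[uni] = ("A", "B") if i < half else ("B", "A")
    return schedule


# Placeholder group names, for illustration only.
groups = ["Group 1", "Group 2", "Group 3", "Group 4", "Group 5", "Group 6"]
for uni, (first, second) in sorted(assign_crossover(groups, seed=42).items()):
    print(f"{uni}: period 1 = {first}, period 2 = {second}")
```

Because each group runs only one version at a time, students within a group cannot compare notes across versions in the same period, which is the contamination concern raised above.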

The benefits are not only from large, careful randomized controlled trials. Small amounts of meaningful data can lead to important Bayesian updating. We can also learn from careful consideration of descriptive observation. If many university groups report the same patterns and insights, these are likely to be meaningful. Bringing these together into shared resources will yield value.
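To make the point about small samples concrete, here is a minimal sketch of a Beta-Binomial update in Python. The prior and the counts are invented for illustration and are not real EAMT data; the function names are likewise hypothetical.

```python
def beta_binomial_update(prior_alpha, prior_beta, successes, trials):
    """Return the posterior Beta parameters after observing binomial data."""
    return prior_alpha + successes, prior_beta + (trials - successes)


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Assumed prior: we expect roughly 20% of students approached at a fair
# to sign up, held weakly (Beta(2, 8)).
prior = (2, 8)

# Made-up observation from one small group: 6 sign-ups out of 20 approached.
posterior = beta_binomial_update(*prior, successes=6, trials=20)

print(f"prior mean:     {beta_mean(*prior):.3f}")      # 0.200
print(f"posterior mean: {beta_mean(*posterior):.3f}")  # 0.267
```

Even 20 observations move the estimate noticeably when the prior is weak, which is why pooling modest results from many groups can be informative.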

Sustained Effort

Centralizing and professionalizing this effort also increases the chances of success through ‘continuity’. Most groups are run by students, who face unpredictable study pathways and life paths. A larger project, linked to professionals involved with the EAMT, increases the chances of a sustained project. This overcomes common failure modes for very promising projects (a pivotal student loses interest, finds a different very shiny object, moves to a new school, etc.).

Outline of Current Work

The UGAP handbook contains most of the current information on best practices for outreach. These have been summarised from different kinds of evidence: some formal testing, some anecdotal and some intuitive. UGAP is currently the best central body for accessing information regarding outreach methods for groups, but we think we can expand upon their efforts. UGAP also focuses on groups within the program, whereas we want to conduct testing as broadly as possible.

This is not to detract from UGAP’s efforts. We are actively collaborating with them as they are currently the most active organisation in this area.

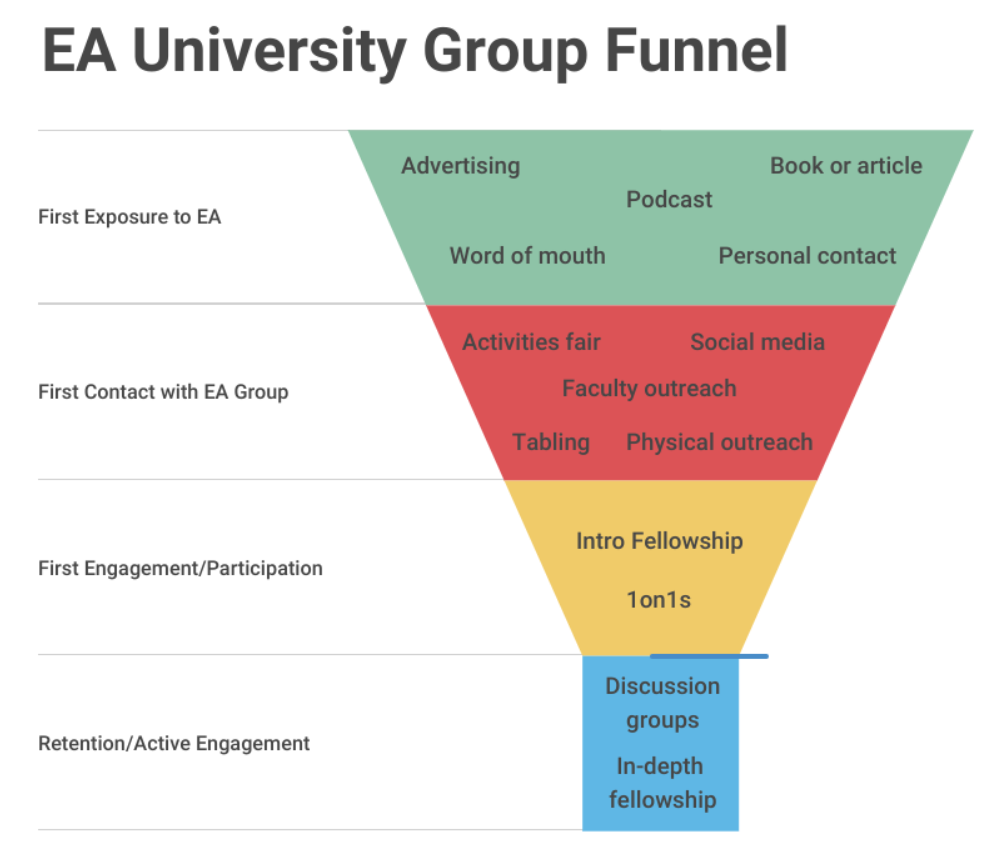

This funnel map is our basic understanding of the processes used to draw in new members to EA university groups. Each stage gives us grounds for testing through the different variations of these approaches. This is not just about testing which methods work for attracting the highest number of new members (i.e., which Call to Action to use at activity fairs, etc.), but also increasing engagement and developing high-level EAs (i.e., fellowship programme alternatives, discussion group topics, etc.).

In the Gitbook you’ll also see the current work being done by individual groups. A lot of this is preliminary but offers the foundation for formal testing. Many of these tests were run by individual groups, but they give us a great platform to build on. This is the beginning of efforts to centralise testing ideas, methods and results from a diverse set of groups and students who are actively engaged in outreach. If you’re aware of any other relevant testing being done, please let us know or put us in touch with the relevant people. We want to incorporate as much as we can into the Gitbook.

Summary

We see universities as an integral component of the EA community. Outreach methods can be further refined by coordinated and rigorous testing to maximise student intake and create more engaged, high-level EAs.

EAMT will function in two ways in this space. It will act as a multiplier for groups, increasing the impact of their independent research by giving them a platform. Perhaps more importantly, it can coordinate large-scale testing between groups, but we need to make group leaders aware of EAMT to make this happen.

If you’re a group leader or organiser doing any form of independent testing, informal or formal, we want to hear from you. If you’re interested in doing any of this in the future, we also want to hear from you. Eventually, this will transform into formalised testing (limited to/based on group capacity) and ultimately improve outreach methods for EA groups.

Thank you to David Reinstein for his help with this post and thank you to the early collaborators in this space such as Dave Banerjee, Max Gehred, Robert Harling and Jessica McCurdy.

Hi Kynan, thanks for writing this post.

It is great to see other people looking into more rigorous community building work! I really like the objective and methodology you set out, and do think that there are huge inefficiencies and losses in how information is currently transferred between groups.

I think one thing I am worried about with doing this on a large scale is the loss of qualitative nuance behind quantitative data. It seems difficult to really develop good models of why things work and what the key factors to consider are, without actually visiting groups or taking the time to properly understand the culture and people there. I would guess that processing the raw numbers is useful for being able to roll out products/outreach methods that are better in expectation than current methods, but I would still expect there to be lots of variance in outcomes without developing a richer model that groups can then adapt.

I am one of the full-time organisers of EA Oxford and am currently looking at doing some better coordination and community building research with other full-time organisers in England. I would be keen to chat if you would like to talk more about this!

Thanks for being in touch (and I enjoyed our conversation).

One thing to note is that some of the trials we are considering could be considered trials in 'what general paths and approaches to recommend', rather than narrowly-defined specific scripts.

E.g., "reach out to a broad group of students" vs "focus on a small number of likely high-potential students." This could be operationalized, e.g., through which 'paths to involvement' (through fellowship completion or through attending meetings and events), or through 'which courses/majors to reach out to'.

However, every university group could still have the flexibility to adopt the recommended guidelines in a manner that aligns with their unique culture and surroundings.

We could then try to focus on some generally agreed 'aggregate outcome measures'. This could then be considered a test of 'which recommended general approach works better' (or, if we can have subgroup analysis, 'which works better where').

Agreed on all points. An important consideration is heavily involving group leaders and organisers in this process to preserve the qualitative aspects of 'what works' in outreach. Keeping those involved with implementing the methods engaged throughout the research process is vital for ensuring these methods transfer into the real world. Whilst some of the nuances are inevitably going to be lost through large-scale testing, we can counteract this by knowing where to allow room for flexibility and where rigidity is worthwhile.

I'll be in touch, thanks!

Thanks for the post and interested to see the results! Is EAMT considering doing a similar future project with professionals? My current sense is that we have, as a community, a far weaker model of what works for outreach and retention among professionals than students. Feel free to reach out if you’d be interested in discussing further.

Thanks Sarah, I would be interested in this. We have already established connections with local groups, not just limited to the university ones, and they could be included in this research.

There might be a case to make that outreach and retention among professionals, whether associated with a group or not, could be even more valuable. This is an area that has not been explored much. I have talked about this with HIP, but it would be great to have someone who can take the lead or co-lead this project and focus on it more.