‘We are in favour of making people happy, but neutral about making happy people’

This quotation from Jan Narveson seems so intuitive. We can’t make the world better by just bringing more people into it...can we? It’s also important. If true, then perhaps human extinction isn’t so concerning after all...

I used to hold this ‘person-affecting’ view and thought that anyone who didn’t was, well...a bit mad. However, all it took was a very simple argument to completely change my mind. In this short post I want to share this argument with those who may not have come across it before and demonstrate that a person-affecting view, whilst perhaps not dead in the water, faces a serious issue.

Note: This is a short post and I do not claim to cover everything of relevance. I would recommend reading Greaves (2017) for a more in-depth exploration of population axiology.

The (false) intuition of neutrality

There is no single ‘person-affecting view’; instead, there are a variety of formulations that all capture the intuition that an act can only be bad if it is bad for someone. Similarly, something can be good only if it is good for someone. Therefore, according to standard person-affecting views, there is no moral obligation to create people, nor any moral good in creating people, because if someone is never created, "there is never a person who could have benefited from being created".

As noted in Greaves (2017), the idea can be captured by the following:

Neutrality Principle: Adding an extra person to the world, if it is done in such a way as to leave the well-being levels of others unaffected, does not make a state of affairs either better or worse.

Seems reasonable, right? Well, let’s dig a bit deeper. If adding the extra person makes the state of affairs neither better nor worse, what does it do? Let’s suppose that it leaves the state of affairs exactly as good as the original state of affairs.

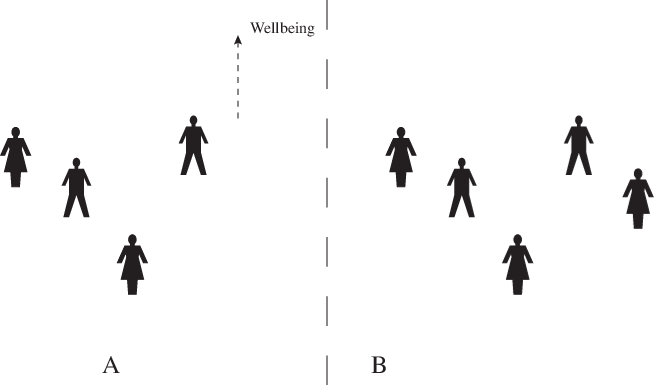

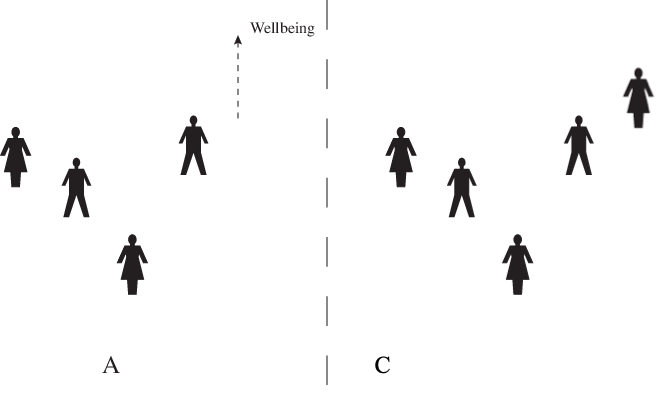

In this case we can say that states A and B below are equally good. A has four people, and B has the same four people with the same wellbeing levels, plus an additional fifth person with (let’s say) a positive welfare level.

We can also say that states A and C are equally good. C again has the same people as A, plus an additional fifth person with positive welfare.

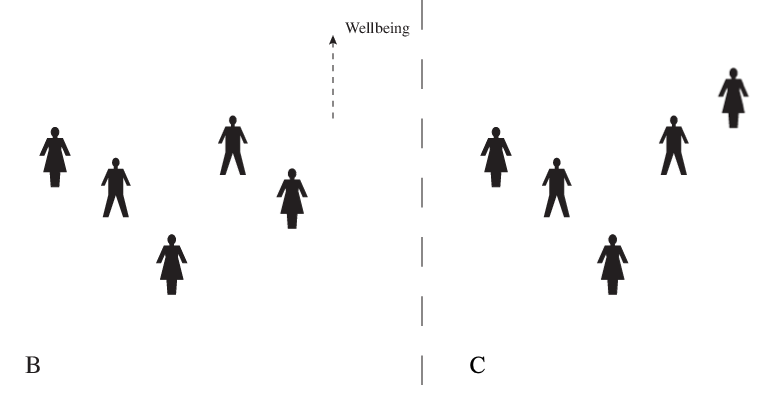

So A is as good as B, and A is as good as C. Therefore, surely B should be as good as C (invoking a reasonable property called transitivity). But now let’s look at B and C next to each other.

Any reasonable theory of population ethics must surely accept that C is better than B. C and B contain all of the same people, but one of them is significantly better off in C (with all the others equally well off in both cases). Yet the person-affecting view, combined with transitivity, implies that B and C are equally good, and this is clearly wrong.
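To make the structure of the argument concrete, here is a minimal Python sketch. The welfare numbers are hypothetical (they are not read off Broome's diagrams), `None` marks a person who never exists, and the helper names are illustrative. It encodes just two principles, the Neutrality Principle read as "equally good" and a Pareto comparison when the same people exist, and then searches for triples where transitivity fails.

```python
from itertools import permutations

# Hypothetical welfare levels; None means the fifth person never exists.
A = (10, 10, 10, 10, None)
B = (10, 10, 10, 10, 2)   # fifth person exists with low positive welfare
C = (10, 10, 10, 10, 8)   # fifth person exists with higher positive welfare


def neutral_addition(x, y):
    """Neutrality Principle (read as 'equally good'): the worlds differ only
    in whether the fifth person exists, with everyone else unaffected."""
    return x[:4] == y[:4] and (x[4] is None) != (y[4] is None)


def pareto_better(x, y):
    """Same people exist in both; nobody is worse off in x and someone is better off."""
    if any((a is None) != (b is None) for a, b in zip(x, y)):
        return False
    pairs = [(a, b) for a, b in zip(x, y) if a is not None]
    return all(a >= b for a, b in pairs) and any(a > b for a, b in pairs)


def relation(x, y):
    """Return '>', '<', '=' or '?' (no verdict) for world x versus world y."""
    if pareto_better(x, y):
        return ">"
    if pareto_better(y, x):
        return "<"
    if x == y or neutral_addition(x, y):
        return "="
    return "?"


def transitivity_failures(worlds):
    """Triples (x, y, z) with x = y and y = z, but x not judged equal to z."""
    failures = []
    for x, y, z in permutations(worlds, 3):
        if relation(worlds[x], worlds[y]) == "=" and relation(worlds[y], worlds[z]) == "=":
            verdict = relation(worlds[x], worlds[z])
            if verdict != "=":
                failures.append((x, y, z, verdict))
    return failures


print(transitivity_failures({"A": A, "B": B, "C": C}))
# [('B', 'A', 'C', '<'), ('C', 'A', 'B', '>')]
```

The two triples it finds are the same B/A/C chain read in each direction: B is as good as A, A is as good as C, yet B is worse than C.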

You might be able to save the person-affecting view by rejecting the requirement of transitivity. For example, you could just say that yes… A is as good as B, A is as good as C, and C is better than B! Well...this just seemed too barmy to me. I’d sooner divorce my person-affecting view than transitivity.

Where do we go from here?

If the above troubles you, you essentially have two options:

1. Try to save person-affecting views in some way.

2. Adopt a population axiology that doesn’t invoke neutrality (or at least one which says bringing a person into existence can only be neutral if that person has a specific “zero” welfare level).

To my knowledge, no one has really achieved number 1 yet, at least not in a particularly compelling way. That’s not to say it can’t be done, and I look forward to seeing whether anyone can make progress.

Number 2 seemed to me like the best route. As noted by Greaves (2017), however, a series of impossibility theorems have demonstrated that, logically, any population axiology we can think up will violate one or more of a number of initially very compelling intuitive constraints. One’s choice of population axiology then appears to be a choice of which intuition one is least unwilling to give up.

For what it’s worth, after some deliberation I have begrudgingly accepted that the majority of prominent thinkers in EA may have it right: total utilitarianism seems to be the ‘least objectionable’ population axiology. Total utilitarianism just says that A is better than B if and only if total well-being in A is higher than total well-being in B. So bringing someone with positive welfare into existence is a good thing, and bringing someone with negative welfare into existence is a bad thing. Bringing someone into existence with “zero” welfare is neutral. Pretty simple, right?
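As a rough illustration, total utilitarianism can be sketched as ranking worlds by a single number. This sketch assumes each person's welfare can be summarised as one cardinal number, and it uses hypothetical welfare values for states A, B and C (`None` marks a person who never exists). Because every world gets one number, the ranking is automatically transitive.

```python
# Hypothetical welfare levels; None means that person never exists.
A = (10, 10, 10, 10, None)
B = (10, 10, 10, 10, 2)
C = (10, 10, 10, 10, 8)


def total_welfare(world):
    """Total utilitarianism: add up the welfare of everyone who exists."""
    return sum(w for w in world if w is not None)


def at_least_as_good(x, y):
    """x is at least as good as y iff its total welfare is at least as high."""
    return total_welfare(x) >= total_welfare(y)


# Ranking by one number cannot be intransitive: here A < B < C.
ranking = sorted({"A": A, "B": B, "C": C}.items(), key=lambda kv: total_welfare(kv[1]))
print([name for name, _ in ranking])                     # ['A', 'B', 'C']

# Adding someone at exactly zero welfare is neutral; adding a negative life is bad.
print(total_welfare(A[:4] + (0,)) == total_welfare(A))   # True
print(total_welfare(A[:4] + (-5,)) < total_welfare(A))   # True
```

Unlike the neutrality-based judgements, this says B is better than A, so the chain "A = B, A = C" never gets started.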

Under total utilitarianism, human extinction becomes a dreadful prospect, as it would mean that perhaps trillions of lives that would otherwise have existed never come into existence. Of course, we have to assume these lives would have positive welfare for avoiding extinction to be desirable.

It may be a simple axiology, but total utilitarianism runs into some arguably ‘repugnant’ conclusions of its own. To be frank, I’m inclined to leave that can of worms unopened for now...

References

Broome, J., 2004. Weighing Lives. Oxford: Oxford University Press. (I got the diagrams from here, although I slightly edited them.)

Greaves, H., 2017. Population axiology. Philosophy Compass, 12(11), e12442.

Narveson, J., 1973. Moral problems of population. The Monist, 57(1), pp. 62–86.

It's great to have a short description of the difficulties for person-affecting intuitions!

That's a good argument. Still, I find person-affecting views underrated because I suspect that many people have not given much thought to whether it even makes sense to treat population ethics in the same way as other ethical domains.

Why do we think we have to be able to rate all possible world states according to how impartially good or bad they are? Population ethics seems underspecified on exactly the dimension where many moral philosophers derive "objective" principles from: others’ interests. It’s the one ethical discipline where others’ interests are not fixed. The principles that underlie preference utilitarianism aren’t sufficiently far-reaching to specify what to do with newly created people. And preference utilitarianism is itself incomplete, because of the further question: What are my preferences? (If everyone's preference was to be a preference utilitarian, we'd all be standing around waiting until someone has a problem or forms a preference that's different from selflessly adhering to preference utilitarianism.)

Preference utilitarianism seems like a good answer to some important question(s) that fall(s) under the "morality" heading. But it can't cover everything. Population ethics is separate from the rest of ethics.

And there's an interesting relation between how we choose to conceptualize population ethics and how we then come to think about "What are my life goals?"

If we think population ethics has a uniquely correct solution that ranks all world states without violations of transitivity or other, similar problems, we have to think that, in some way, there's a One Compelling Axiology telling us the goal criteria for every sentient mind. That axiology would specify how to answer "What are my life goals?"

By contrast, if axiology is underdetermined, then different people can rationally adopt different types of life goals.

I self-identify as a moral anti-realist because I'm convinced there's no One Compelling Axiology. Insofar as there's something fundamental and objective to ethics, it's this notion of "respecting others' interests." People's life goals (their "interests") won't converge.

Some people take personal hedonism as their life goal, some just want to Kill Bill, some want to have a meaningful family life and die from natural causes here on earth, some don't think about the future at all and live the party life, some discount any aspirations of personal happiness in favor of working toward positively affecting transformative AI, some want to live forever but also do things to help others realize their dreams along the way, some want to just become famous, etc.

If you think of humans as the biological algorithm we express, rather than the things we come to believe and identify with at some particular point in our biography (based on what we've lived), then you might be tempted to seek a One Compelling Axiology with the question "What's the human policy?" ("Policy" in analogy to machine learning.) For instance, you could plan to devote the future's large-scale simulation resources to figuring out the structure of what different humans come to value in different simulated environments, with different experienced histories. You could do science about this and identify general patterns. But suppose you've figured out the general patterns and tell the result to the Bride in Kill Bill. You tell her "the objective human policy is X." She might reply "Hold on with your philosophizing, I'm going to have to kill Bill first. Maybe I'll come back to you and consider doing X afterwards." Similarly, if you tell a European woman with husband and children about the arguments to move to San Francisco to work on reducing AI risks, because that's what she ended up caring about on many runs of simulations of her in environments where she had access to all the philosophical arguments, she might say "Maybe I'd be receptive to that in another life, but I love my husband in this world here, and I don't want to uproot my children, so I'm going to stay here and devote less of my caring capacity to longtermism. Maybe I'll consider wanting to donate 10% of my income, though." So, regardless of questions about their "human policy," in terms of what actual people care about at given points in time, life goals may differ tremendously between people, and even between copies of the same person in different simulated environments. That's because life goals also track things that relate to the identities we have adopted and the for-us meaningful social connections we have made.

If you say that population ethics is all-encompassing, you're implicitly saying that all the complexities in the above paragraphs count for nothing (or not much), and that people should just adopt the same types of life goals, no matter their level of novelty-seeking, achievement striving, prosociality, embeddedness in meaningful social connections, views on death, etc. You're implicitly saying that the way the future should ideally go has almost nothing to do with the goals of presently existing people. To me, that stance is more incomprehensible than some problem with transitivity.

Alternatively, you can say that maybe all of this can't be put under a single impartial utility function. If so, it seems that you're correct that you have to accept something similar to the violation of transitivity you describe. But is it really so bad if we look at it with my framing?

It's not "Even though there's a One Compelling Axiology, I'll go ahead and decide to do the grossly inelegant thing with it." Instead, it's "Ethics is about life goals and how to relate to other people with different life goals, as well as asking what types of life goals are good for people. Probably, different life goals are good for different people. Therefore, as long as we don't know which people exist, not everything can be determined. There also seems to be a further issue about how to treat cases where we create new people: that's population ethics, and it's a bit underdetermined, which gives more freedom for us to choose what to do with our future lightcone."

So, I propose considering a more limited role for population ethics than the one it is typically given. We could maybe think of it as: A set of appeals or principles by which beings can hold accountable the decision-makers that created them. This places some constraints on the already existing population, but it leaves room for personal life projects (as opposed to "dictatorship of the future," where all our choices about the future light cone are predetermined by the One Compelling Axiology, and so have no relation to which exact people are actually alive and care about it).

To give a few examples of population-ethical principles:

(Note that the first principle is about objecting to the fact of being created, while the latter two principles are about objecting to how one was created.)

We can also ask: Is it ever objectionable to fail to create minds – for instance, in cases where they’d have a strong interest in their existence?

(From a preference-utilitarian perspective, it seems left open whether the creation of some types of minds can be intrinsically important. Satisfied preferences are good because satisfying preferences is just what it means to consider the interests of others. Also counting the interests of not-yet-existent beings is a possible extension of that, but a somewhat peculiar one. The choice looks underdetermined, again.)

Ironically, the perspective I have described becomes very similar to how non-philosophers commonly think about the ethics of having children:

Universal principles fall out of considerations about respecting others' interests. Personal principles fall out of considerations about "What are my life goals?"

Personal principles can be inspired by considerations of morality, i.e., they can be about choosing to give stronger weight to universal principles and filling out underdetermined stuff with one's most deeply held moral intuitions. Many people find existence meaningless without dedication to something greater than oneself.

Because there are different types of considerations at play in all of this, there's probably no super-elegant way to pack everything into a single, impartially valuable utility function. There will have to be some messy choices about how to make tradeoffs, but there isn't really a satisfying alternative. Just like people have to choose some arbitrary-seeming percentage of how much caring capacity they dedicate toward self-oriented life goals versus other-regarding ones (insofar as the separation is clean; it often isn't so clean), we have to also somehow choose how much weight to give to different moral domains, including the considerations commonly discussed under the heading of population ethics, and how they relate to my life goals and those of other existing people.

Thanks. There's a lot to digest there. It's an interesting idea that population ethics is simply separate to the rest of ethics. That's something I want to think about a bit more.

I don't assign much credence to neutrality, because I think adding bad lives is in fact bad. I prefer the procreation asymmetry, which might be stated this way:

Also, you can give up the independence of irrelevant alternatives instead of transitivity. This would mean that which of two options is better might depend on what alternatives there are available to you, i.e. the ranking of outcomes depends on available options. I actually find this a fairly intuitive way to avoid the repugnant conclusion.

A few papers taking this approach to the procreation asymmetry and which avoid the repugnant conclusion:

I also have a few short arguments for asymmetry here and here in my shortform.

Hey Michael,

Thanks for this, I suspected you might make a helpful comment! The procreation asymmetry is my long lost love. It's what I used to believe quite strongly but ultimately I started to doubt it for the same reasons that I've outlined in this post.

My intuition is that giving up IIA is only slightly less barmy than giving up transitivity, but thanks for the suggested reading. I certainly feel like my thinking on population ethics can evolve further and I don't rule out reconnecting with the procreation asymmetry.

For what it's worth my current view is that the repugnant conclusion may only seem repugnant because we tend to think of 'a life barely worth living' as a pretty drab existence. I actually think that such a life is much 'better' than we intuitively think. I have a hunch that various biases are contributing to us overvaluing the quality of our lives in comparison to the zero level, something that David Benatar has written about. My thinking on this is very nascent though and there's always the very repugnant conclusion to contend with which keeps me somewhat uneasy with total utilitarianism.

I think giving up IIA seems more plausible if you allow that value might be essentially comparative, and not something you can just measure in a given universe in isolation. Arrow's impossibility theorem can also be avoided by giving it up. And standard intuitions when facing the repugnant conclusion itself (and hence similar impossibility theorems) seem best captured by an argument incompatible with IIA, i.e. whether or not it's permissible to add the extra people depends on whether or not the more equal distribution of low welfare is an option.

It seems like most consequentialists assume IIA without even making this explicit, and I have yet to see a good argument for IIA. At least with transitivity, there are Dutch books/money pump arguments to show that you can be exploited if you reject it. Maybe there was some decisive argument in the past that led to consensus on IIA and no one talks about it anymore, except when they want to reject it?
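The money-pump point can be made concrete for the exact pattern in the post (A as good as B, A as good as C, C better than B). Here is a minimal Python sketch under a contestable assumption: the agent accepts any swap it judges equally good at no charge, and pays a small fee for a swap it judges strictly better. The fee, the offer sequence and the function names are hypothetical.

```python
# Hypothetical encoding of the judgements in the post: A ~ B, A ~ C, C > B.
# VERDICT[(new, old)] says how the agent rates `new` relative to what it holds.
VERDICT = {
    ("A", "B"): "=", ("B", "A"): "=",
    ("A", "C"): "=", ("C", "A"): "=",
    ("C", "B"): ">", ("B", "C"): "<",
}


def run_money_pump(start, offers, fee, cycles):
    """Offer the agent a fixed sequence of swaps, `cycles` times over.

    Assumes the agent accepts any swap it judges equally good for free,
    pays `fee` for a swap it judges strictly better, and refuses anything worse.
    """
    holding, money = start, 0
    for _ in range(cycles):
        for new in offers:
            if new == holding:
                continue
            verdict = VERDICT[(new, holding)]
            if verdict == "=":
                holding = new          # indifferent: swaps for free
            elif verdict == ">":
                holding = new          # strictly better: pays the fee
                money -= fee
    return holding, money


# Starting with C: C -> A (free), A -> B (free), B -> C (pays), repeated.
print(run_money_pump("C", ["A", "B", "C"], fee=1, cycles=3))   # ('C', -3)
```

After every cycle the agent is back where it started, one fee poorer.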

Another option to avoid the very repugnant conclusion but not the repugnant conclusion is to give (weak or strong) lexical priority to very bad lives or intense suffering. Center for Reducing Suffering has a few articles on lexicality. I've written a bit about how lexicality could look mathematically here without effectively ignoring everything that isn't lexically dominating, and there's also rank-discounted utilitarianism: see point 2 in this comment, this thread, or papers on "rank-discounted utilitarianism".
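One simple way to picture strong lexical priority is to compare outcomes first by how much welfare lies below some "very bad" threshold, and only then by total welfare. This is a hypothetical sketch, not the formulation in the linked writing; the threshold and all numbers are illustrative assumptions. Python compares tuples lexicographically, which does the prioritising.

```python
# Hypothetical threshold below which a life counts as "very bad".
THRESHOLD = -50


def lexical_key(world):
    """Compare first by welfare below the threshold (closer to 0 is better),
    then by total welfare. Tuples compare lexicographically in Python."""
    severe = sum(w for w in world if w < THRESHOLD)
    return (severe, sum(world))


def lexically_better(x, y):
    return lexical_key(x) > lexical_key(y)


# Very repugnant conclusion setup (hypothetical numbers):
A = [100] * 10                       # 10 extremely happy lives
B = [-100] * 10 + [1] * 1_000_000    # same 10 people miserable, plus many barely-positive lives
Z = [1] * 2_000                      # many lives barely worth living, nobody very badly off

print(sum(B) > sum(A))          # True: total utilitarianism prefers B
print(lexically_better(A, B))   # True: the lexical view prefers A however many lives are added
print(lexically_better(Z, A))   # True: but the (ordinary) repugnant conclusion remains
```

This matches the shape described above: the very repugnant conclusion is blocked, while the repugnant conclusion is not.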

Thanks for all of this. I think IIA is just something that seems intuitive. For example it would seem silly to me for someone to choose jam over peanut butter but then, on finding out that honey mustard was also an option, think that they should have chosen peanut butter. My support of IIA doesn't really go beyond this intuitive feeling and perhaps I should think about it more.

Thanks for the readings about lexicality and rank-discounted utilitarianism. I'll check it out.

I think the appeal of IIA loses some of its grip when one realizes that a lot of our ordinary moral intuitions violate it. Pete Graham has a nice case showing this. Here’s a slightly simplified version:

Suppose you see two people drowning in a crocodile-infested lake. You have two options:

Option 1: Do nothing.

Option 2: Dive in and save the first person’s life, at the cost of one of your legs.

In this case, most have the intuition that both options are permissible — while it’s certainly praiseworthy to sacrifice your leg to save someone’s life, it’s not obligatory to do so. Now suppose we add a third option to the mix:

Option 3: Dive in and save both people’s lives, at the cost of one of your legs.

Once we add option 3, most have the intuition that only options 1 and 3 are permissible, and that option 2 is now impermissible, contra IIA.

Thinking back on this, rather than violating IIA, couldn't this just mean your order is not complete? Option 3 > Option 2, but neither is comparable to Option 1.

Maybe this violates a (permissibility) choice function definition of IIA, but not an order-based definition?
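The incompleteness suggestion can be checked with a small sketch. Assume (hypothetically) that "permissible" means "not strictly beaten by any available option", with option 3 better than option 2 and option 1 incomparable with both. This fixed partial order then reproduces the crocodile intuitions. In Sen's choice-function terms, the resulting pattern satisfies the contraction condition (property α) but violates the expansion condition (property β). That is one way to spell out the difference between a choice-function reading and an order-based reading of IIA.

```python
from itertools import combinations

# Hypothetical encoding of the suggestion above: option 3 beats option 2,
# and option 1 is incomparable with both.
STRICTLY_BETTER = {(3, 2)}


def permissible(menu):
    """Permissible = not strictly beaten by any other option on the menu."""
    return {x for x in menu if not any((y, x) in STRICTLY_BETTER for y in menu)}


def violates_contraction(small, large):
    """Sen's property alpha: whatever is chosen from `large` and is still
    available in the subset `small` must also be chosen from `small`."""
    return not ((permissible(large) & set(small)) <= permissible(small))


def violates_expansion(small, large):
    """Sen's property beta: if x and y are both chosen from `small`, a subset
    of `large`, then x is chosen from `large` exactly when y is."""
    chosen = permissible(large)
    return any((x in chosen) != (y in chosen)
               for x, y in combinations(permissible(small), 2))


print(permissible({1, 2}), permissible({1, 2, 3}))   # {1, 2} {1, 3}
print(violates_contraction({1, 2}, {1, 2, 3}))        # False
print(violates_expansion({1, 2}, {1, 2, 3}))          # True
```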

Thanks, this is an interesting example!

I think if you are a pure consequentialist then it is just a fact of the matter that there is a goodness ordering of the three options, and IIA seems compelling again. Perhaps IIA potentially breaks down a bit when one strays from pure consequentialism, I’d like to think about that a bit more.

Yeah, for sure. There are definitely plausible views (like pure consequentialism) that will reject these moral judgments and hold on to IIA.

But just to get clear on the dialectic, I wasn’t taking the salient question to be whether holding on to IIA is tenable. (Since there are plausible views that entail it, I think we can both agree it is!)

Rather, I was taking the salient question to be whether conflicting with IIA is itself a mark against a theory. And I take Pete’s example to tell against this thought, since upon reflection it seems like our ordinary moral judgments violate the IIA. And so, upon reflection, IIA is something we would need to be argued into accepting, not something that we should assume is true by default.

Taking a step back: on one way of looking at your initial post against person-affecting views, you can see the argument as boiling down to the fact that person-affecting views violate IIA. (I take this to be the thrust of Michael’s comment, above.) But if violating IIA isn’t a mark against a theory, then it’s not clear that this is a bad thing. (There might be plenty of other bad things about such views, of course, like the fact that they yield implausible verdicts in cases X, Y and Z. But if so, those would be the reasons for rejecting the view, not the fact that it violates IIA.)

I think IIA is more intuitive when you're considering only the personal (self-regarding) preferences of a single individual like in your example, but even if IIA holds for each individual in a group, it need not for the group, especially when different people would exist, because these situations involve different interests. I think this is also plausibly true for all accounts of welfare or interests (maybe suitably modified), even hedonistic, since if someone never exists, they don't have welfare or interests at all, which need not mean the same thing as welfare level 0.

If you find the (very) repugnant conclusion counterintuitive, this might be a sign that you're stretching your intuition from this simple case too far.

I thought the person-affecting view only applies to acts, not states of the world. I don't hold the PAV but my impression was that someone who does would agree that creating an additional happy person in World A is morally neutral, but wouldn't necessarily say that Worlds B and C aren't better than World A.

Also, in my view, a symmetric total view applied to preference consequentialism is the worst way to do preference consequentialism (well, other than obviously absurd approaches). I think a negative view/antifrustrationism or some mixture with a "preference-affecting view" is more plausible.

I think this because, rather than satisfying your existing preferences, it can be better to create new preferences in you and satisfy them, against your wishes. This undermines the appeal of autonomy and subjectivity that preference consequentialism had in the first place. If, on the other hand, new preferences don't add positive value, then they can't compensate for the violation of preferences, including the violation of preferences to not have your preferences manipulated in certain ways.

I discuss these views a bit here.

Thanks that's interesting. I have more credence in hedonistic utilitarianism than preference utilitarianism for similar reasons to the ones you raise.

I dispute this, at least if we interpret the positive-welfare lives as including only happiness (of varying levels) but no suffering. If a life contains no suffering, such that additional happiness doesn't play any palliative role or satisfy any frustrated preferences or cravings, I'm quite comfortable saying that this additional happiness doesn't add value to the life (hence B = C).

I suspect the strength of the intuition in favor of judging C > B comes from the fact that in reality, extra happiness almost always does play a palliative role and satisfies preferences. But a defender of the procreation asymmetry (not the neutrality principle, which I agree with Michael is unpalatable) doesn't need to dispute this.

Thanks, an interesting view, although not one I immediately find convincing.

I have higher credence in hedonistic utilitarianism than preference utilitarianism so would not be concerned by the fact that no frustrated preferences would be satisfied in your scenario. Improving hedonistic welfare, even if there is no preliminary suffering, still seems to me to be a good thing to do.

For example we could consider someone walking around, happy without a care in the world, and then going home and sleeping. Then we could consider the alternative that the person is walking around, happy without a care in the world, and then happens upon the most beautiful sight they have ever seen filling them with a long-lasting sense of wonder and fulfilment. It seems to me that the latter scenario is indeed better than the former.

Maybe your intuition that the latter is better than the former is confounded by the pleasant memories of this beautiful sight, which could remove suffering from their life in the future. Plus the confounder I mentioned in my original comment.

Of course one can cite confounders against suffering-focused intuitions as well (e.g. the tendency of the worst suffering in human life to be much more intense than the best happiness). But for me the intuition that C > B when all these confounders are accounted for really isn't that strong - at least not enough to outweigh the very repugnant conclusion, utility monster, and intuition that happiness doesn't have moral importance of the sort that would obligate us to create it for its own sake.

This might depend on how you define welfare. If you define it to be something like "the intrinsic goodness of the experience of a sentient being" or something along those lines, then I would think C being better than B can't really be disputed.

For example if you accept a preference utilitarian view of the world, and under the above definition of welfare, the fact that the person has higher welfare must mean that they have had some preferences satisfied. Otherwise in what sense can we say that they had higher welfare?

If we have this interpretation of welfare I don't think it makes any sense to discuss that C might not be better than B. What do you think?

Under this interpretation I would say my position is doubt that positive welfare exists in the first place. There's only the negation or absence of negative welfare. So to my ears it's like arguing 5 x 0 > 1 x 0. (Edit: Perhaps a better analogy, if suffering is like dust that can be removed by the vacuum-cleaner of happiness, it doesn't make sense to say that vacuuming a perfectly clean floor for 5 minutes is better than doing so for 1 minute, or not at all.)

Taken in isolation I can see how counterintuitive this sounds, but in the context of observations about confounders and the instrumental value of happiness, it's quite sensible to me compared with the alternatives. In particular, it doesn't commit us to biting the bullets I mentioned in my last comment, doesn't violate transitivity, and accounts for the procreation asymmetry intuition. The main downside I think is the implication that death is not bad for the dying person themselves, but I don't find this unacceptable considering: (a) it's quite consistent with e.g. Epicurean and Buddhist views, not "out there" in the history of philosophy, and (b) practically speaking every life is entangled with others so that even if my death isn't a tragedy to myself, it is a strong tragedy to people who care about or depend on me.
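Here is a minimal sketch of the view described in this comment. It makes a simplifying assumption for illustration only: each life can be summarised by one welfare number, and only its negative part counts. With hypothetical values for states A, B and C, it ranks B and C equally. Because it still ranks worlds by a single number, it cannot violate transitivity. Adding a life with negative welfare makes things worse, which matches the procreation asymmetry.

```python
# Hypothetical welfare levels; None means that person never exists.
A = (10, 10, 10, 10, None)
B = (10, 10, 10, 10, 2)
C = (10, 10, 10, 10, 8)


def suffering_only_value(world):
    """Only negative welfare counts; positive welfare contributes nothing."""
    return sum(min(w, 0) for w in world if w is not None)


print([suffering_only_value(w) for w in (A, B, C)])   # [0, 0, 0]: A = B = C
print(suffering_only_value(A[:4] + (-5,)))            # -5: adding a bad life is bad
```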

The intransitivity problem that you address is very similar to the problem of simultaneity or synchronicity in special relativity. https://en.wikipedia.org/wiki/Relativity_of_simultaneity

Consider three space-time points (events) P1, P2 and P3. The point P1 has a future and a past light cone. Points in the future light cone are in the future of P1 (i.e. at a later time according to all observers). Suppose P2 and P3 are outside of the future and past light cones of P1. Then it is possible to choose a reference frame (e.g. a non-accelerating rocket) such that P1 and P2 have the same time coordinate and hence are simultaneous space-time events: the person in the rocket sees the two events happening at the same time according to his personal clock. It is also possible to perform a Lorentz transformation towards another reference frame, e.g. a second rocket moving at constant speed relative to the first rocket, such that P1 and P3 are simultaneous (i.e. the person in the second rocket sees P1 and P3 at the same time according to her personal clock). But... it is possible that P3 is in the future light cone of P2, which means that all observers agree that event P3 happens after P2 (at a later time according to all clocks).

So, special relativity involves a special kind of intransitivity: P2 is simultaneous with P1, P1 is simultaneous with P3, and P3 happens later than P2. This does not make space-time inconsistent or irrational, nor does it make the notion of time incomprehensible. The same goes for person-affecting views. In the analogy, the time coordinate corresponds to a person's utility level: a later time means a higher utility. You can formulate a person-affecting axiology that is 'Lorentz invariant' just like in special relativity.
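The relativity example can be checked numerically. Here is a minimal sketch with hypothetical event coordinates, in units where the speed of light is 1. It uses the standard Lorentz transformation of the time coordinate, t' = γ(t − vx) with γ = 1/√(1 − v²). It only reproduces the physics of the analogy; it does not formalise the 'Lorentz invariant' person-affecting axiology, which the comment does not spell out.

```python
import math


def boosted_time(t, x, v):
    """Time coordinate of event (t, x) in a frame moving at velocity v (units with c = 1)."""
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return gamma * (t - v * x)


def simultaneity_velocity(p, q):
    """Velocity of the frame in which events p and q happen at the same time.

    Only possible for spacelike-separated events (|dt| < |dx|), since |v| < 1.
    """
    dt, dx = q[0] - p[0], q[1] - p[1]
    if abs(dt) >= abs(dx):
        raise ValueError("events are not spacelike separated")
    return dt / dx


# Hypothetical events as (t, x): P2 and P3 lie outside P1's light cones,
# while P3 lies inside P2's future light cone (dt = 1.0 > dx = 0.5).
P1, P2, P3 = (0.0, 0.0), (0.5, 2.0), (1.5, 2.5)

v12 = simultaneity_velocity(P1, P2)   # frame where P1 and P2 are simultaneous
v13 = simultaneity_velocity(P1, P3)   # frame where P1 and P3 are simultaneous

for v in (v12, v13):
    t1, t2, t3 = (boosted_time(t, x, v) for t, x in (P1, P2, P3))
    print(f"v={v}: t1={t1:.3f} t2={t2:.3f} t3={t3:.3f}")
```

In both frames P3 comes after P2, even though each is simultaneous with P1 in one of them.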

My favorite population ethical theory is variable critical level utilitarianism (VCLU).

https://stijnbruers.wordpress.com/2020/04/26/a-game-theoretic-solution-to-population-ethics/

https://stijnbruers.files.wordpress.com/2018/02/variable-critical-level-utilitarianism-2.pdf

In many EA-relevant cases (e.g. dealing with X-risks), this theory is equivalent to total utilitarianism, except that it avoids the very repugnant conclusion: situation A involves N extremely happy people, situation B involves the same N people, now extremely miserable (very negative utility), plus a huge number M of extra people with lives barely worth living (small positive utility). According to total utilitarianism, situation B would be better if M is large enough. I'm willing to bite the bullet of the repugnant conclusion, but this very repugnant conclusion is for me one of the most counterintuitive conclusions in population ethics. VCLU can easily avoid this.
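To show the arithmetic behind the very repugnant conclusion as described here, a minimal sketch with hypothetical integer welfare numbers computes how many barely-positive lives total utilitarianism needs before it prefers situation B. It does not implement VCLU, whose details are in the linked posts.

```python
def total(world):
    """Total utilitarianism: the value of a world is its summed welfare."""
    return sum(world)


def extra_lives_needed(n, happy, miserable, barely):
    """Smallest M for which situation B (the N people at `miserable`, plus M
    extra people at `barely`) has a higher total than situation A (the N people
    at `happy`). Assumes integer welfare levels with barely > 0."""
    loss = n * (happy - miserable)   # welfare the original N people lose
    return loss // barely + 1        # smallest M with M * barely > loss


# Hypothetical numbers: 10 people at +100 in A, the same 10 at -100 in B.
m = extra_lives_needed(n=10, happy=100, miserable=-100, barely=1)
situation_a = [100] * 10
situation_b = [-100] * 10 + [1] * m
print(m, total(situation_a), total(situation_b))   # 2001 1000 1001
```

However miserable the original N people become, some finite M makes B come out ahead on the total view.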

Thanks, I'll check out your writings on VCLU!

I go back and forth between person-affecting (hedonic) consequentialism and total (hedonic) utilitarianism on about a six-monthly basis, so I sure understand what you're struggling with here.

I think there's a stronger intuition that can be used to argue for a person-affecting view, though, which is that the idea of standing 'outside of the universe' and judging between worlds A, B & C is entirely artificial and impossible. In reality, no moral choices that impact axiological choices can be made outside of a world where agents already exist, so it's possible to question the fundamental assumption that comparisons between possible worlds are even legitimate, rather than just comparisons between 'the world as it is' and 'the world as it would be if these different choices were made'. From that perspective, it's possible to imagine a world with one singular happy individual and a world with a billion happy individuals just being literally incomparable.

I ultimately don't buy this, as I do think divergence between axiology and morality is incoherent and that it is possible to compare between possible worlds. I'm just very uncomfortable with its implications of an 'obligation to procreate', but I'm less uncomfortable with that than the claim that a world with a billion happy people is incomparable to a world with a singular happy person (or indeed a world full of suffering).