I started the student group at the University of Illinois this semester, and after I talked to about a dozen student group organizers at the Boston and London conferences, a few people encouraged me to post about what our group is like. This is probably the first of three posts: I'll follow it with a post about the way I've used/adapted the intro materials and a post about ideology/evangelism/AI safety/longtermism stuff. [1]

TLDR:

- Our new student group's relationship to EA is closely related to the funding situation of the CEA/OpenPhil/FTX Fund/etc constellation, which we've dubbed "Big EA." One of my primary activities is assuring people that it's not suspect that Big EA provides us so much money.

- There's a dynamic where the typical club member is sympathetic to EA but would not do direct work except for the money, and might even see this behavior as a kind of "grifting" even though I and Big EA wouldn't.

- But I think this is fine and that concerns about EA spending described in recent posts are overrated.

The Prometheus Science Bowl

I'm involved in the national high school/college quiz bowl circuit. In January, a friend who worked with me on a business where we ran online quiz bowl tournaments after the COVID lockdowns heard about an online science bowl tournament run by Devansh with no entry fee and $28,000 in prizes. Quiz bowl tournaments don't typically offer cash prizes, and science bowl (which I'm not well acquainted with) only offers prizes like this for the national championships.

Having run many online quiz bowl tournaments, I scheduled a call to give some advice about preventing cheating and some leads on finding volunteer readers or teams, only to discover that the readers wouldn't be volunteering but would instead make $25/hr (quiz bowl tournaments usually give a stipend for lunch at most). I told Devansh that he could double the field size goal with this kind of money, and I remember replying to one question with "normally yes, but you have an infinite money printer."

The UIUC guys probably perceived the staff payment as decadent spending, because the tournament could have reached its staffing goals at half the wage, and the OpenPhil investment as overly generous, because the tournament director was a high school student.

The way it was explained to me is that OpenPhil values a switch to an AI safety research career at +$20M in expected value, so a $100k science bowl tournament only needs a 0.5% chance of convincing at least one of the ~800 participants to make a career change in order to break even. By this logic Devansh clearly exceeded reasonable metrics, and the tournament ran very well. It was just a novelty for our first interaction with EA to be this hilarious leviathan with more money than God.
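The break-even arithmetic is just cost divided by the value of one switch. Here is a minimal sketch, assuming the recalled $20M figure and a round $100k cost (both are inputs from the conversation above, not official numbers, and the $20M figure is questioned in the comments). The function name is hypothetical, and it simplifies by crediting the tournament with exactly one switch's worth of value.

```python
# Break-even check for the tournament arithmetic described above.
# Both figures are assumptions taken from a recalled conversation, not official numbers.
VALUE_PER_CAREER_SWITCH = 20_000_000  # assumed EV of one switch to AI safety research, USD
TOURNAMENT_COST = 100_000             # approximate cost of the tournament, USD


def break_even_probability(cost: float, value_per_switch: float) -> float:
    """Probability of causing a career switch at which expected value equals cost.

    Simplification: the tournament is credited with exactly one switch's worth
    of value, ignoring the chance of causing several switches.
    """
    return cost / value_per_switch


p = break_even_probability(TOURNAMENT_COST, VALUE_PER_CAREER_SWITCH)
print(f"Break-even chance of a career switch: {p:.1%}")  # prints 0.5%
```

Anything above that probability makes the tournament positive-EV under these assumptions.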

EAGx Boston

The contract work for the tournament brought EA back to the front of my mind. A friend and I had considered starting an EA group here in fall 2020 and I even contacted CEA/some nearby student groups but we were ultimately occupied with other things.

I learned that CEA funds student groups for things like food, books, posters, and T-shirts. I had trouble seeing the value proposition of books and posters, so I just asked for money to buy food for our weekly meetings. Enthusiasm for the club was low enough that nobody would have attended the meetings without free food, or if I had moved them to a building on the other side of campus. A typical view was "This EA stuff is good (except AI safety) and someone should be doing it, but not me, I have my career at Amazon/Nvidia/academia to attend to."

Then I brought 7 members of the group to the Boston conference, and their view of EA might actually have gone down slightly overall from exposure to AI safety stuff.[2] At the conference they also heard about the retreats, which they universally considered decadent.

After the conference, a bunch of them effectively said "Arjun, if EA is so talent-constrained and not funding-constrained, you should go and find some technical projects and get grants from Big EA to pay us $100/hr or whatever to complete these projects." We had been thinking about an idea like this for some time and I think its EV overwhelms the cost spent by CEA in funding our pizza and Boston trips (which kept the club activity at the level needed to produce the idea).

So among stories of undergrads mostly hanging out, or professionals doing networking unrelated to EA, I can cite this as an example of the money delivering +EV.

"Grifting"

Anecdotally, I’ve spoken to several organisers who aren’t convinced of longtermism but default to following the money nevertheless. I’ve even heard (joking?) conversations about whether it’s worth 'pretending' to be EA for the free trip.

The message is out. There’s easy money to be had. And the vultures are coming. On many internet circles, there’s been a worrying tone. “You should apply for [insert EA grant], all I had to do was pretend to care about x, and I got $$!” Or, “I’m not even an EA, but I can pretend, as getting a 10k grant is a good instrumental goal towards [insert-poor-life-goals-here]” . . . Or, “All you have to do is mouth the words community building and you get thrown bags of money.”

The weird part of this dynamic is when club members think of themselves as grifting even though Big EA and I don't:

Toy example: Big EA pays EA UIUC $100/hr to build some project which we think is altruistically worth less than $50/hr but which Big EA thinks is worth $200/hr.
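To make the asymmetry in the toy example concrete, here is a minimal sketch that subtracts each party's own valuation of the work from the wage. The numbers are the toy ones above and the function name is hypothetical. It deliberately ignores the club members' personal gain from the wage, which is what makes the trade worthwhile to them anyway.

```python
# Hourly figures from the toy example above (USD); purely illustrative.
WAGE = 100              # what Big EA pays EA UIUC per hour
CLUB_VALUATION = 50     # altruistic value of the work as the club sees it
FUNDER_VALUATION = 200  # altruistic value of the work as Big EA sees it


def perceived_overpayment(wage: float, valuation: float) -> float:
    """Wage minus a party's own estimate of the work's altruistic value.

    Positive means that party thinks the payment exceeds what the work is worth.
    """
    return wage - valuation


club_view = perceived_overpayment(WAGE, CLUB_VALUATION)      # +50/hr: feels like "grifting"
funder_view = perceived_overpayment(WAGE, FUNDER_VALUATION)  # -100/hr: looks like a bargain
print(club_view, funder_view)
```

Both sides still accept the deal; they just tell different stories about who is getting the better end of it.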

Real Example: I'm planning to spend a lot of time competing for one of the $100,000 blog prizes. I'm not fully convinced that the altruistic value of the contestants' output justifies funding the prize—maybe it does—but when you add the personal value in expected earnings from a chance at winning the prize and improvements to my writing skill or network or whatever else, I'm happy to compete.

There's nothing wrong with this (this is how trade normally works), but it's weird because the Big EA position on the expected value is usually less commonsensical, so new people wandering into the club or spectating in the vicinity encounter a bunch of people apparently profiting from the areas where their skills and interest align with a wealthy benefactor who is spending money in silly ways. This is bad optics.

You could divide the grifter spectrum into groups:

- True believers think it's worthwhile to effect the most utility possible and are personally interested in pursuing this by convincing Big EA to help them work on the most important problems.

  - This can be divided into people who are confident in their evaluations of what's most effective and try to convince Big EA of their conclusions, and people who trust Big EA to support the most important causes/ideas without always agreeing with its reasoning.

- Coincidental allies are people with a personal interest that coincidentally overlaps with areas that Big EA is interested in funding. A member of our club often talks about his pet interest in securing the electrical grid or something and was pleased to report that FTX thinks this is important.[3]

- EA sympathizers think it's worthwhile to effect the most utility possible but aren't personally interested in pursuing this opportunity, given the money/status/intrinsic interest of the work; they will, however, do the work for money. This describes many members of EA UIUC, who mostly have technical backgrounds.

- Literal grifters try to get money by misrepresenting their beliefs or making fraudulent claims about the work they're doing.

And I think all the non-grifters are fine. I only see two downsides:

1. Since coincidental allies and sympathizers have motivations other than EA, new discoveries about the nature of impact could leave their plans incompatible with EA goals.

For example, if Big EA no longer thinks that electrical grid stuff is worth funding, but building a university is worth funding, my electrical grid friend isn't going to work on the university even if he has a comparative advantage from an altruistic perspective. This might imply that funding for general career skills is best spent on true believers but not much else.

2. Literal grifters could masquerade as sympathizers.

This would create barriers to legitimate funding since grantmakers would have to adjust for the risk of grifting and honest grant-seekers might have to engage in wasteful signaling games. Things like this certainly take place:

I can speak of one EA institution, which I will not name, that suffers from this. Math and cognitive science majors can get a little too far in EA circles just by mumbling something about AI Safety, and not delivering any actual interfacing with the literature or the community.

- quinn, commenting on "The Vultures Are Circling"

Beyond deliberate misleading, motivated reasoning is always easy. As someone without any strong position on longtermism yet, I get a very strong impression that it would be in my material/social interest to support it.

That said, grantmakers' reputation for distinguishing genuine applicants from frauds seems good, and my prior is that people tend to be over-concerned with fraud in cases like these (e.g. in American politics it's widely believed that people fixate on welfare or voter fraud beyond their actual importance). Tightening the purse strings to excess is also a failure mode.

My impression of the Boston and London conferences is that most of the people there are "coincidental allies" by this framing. They'd be in their chosen careers regardless of whether it was an EA cause area.

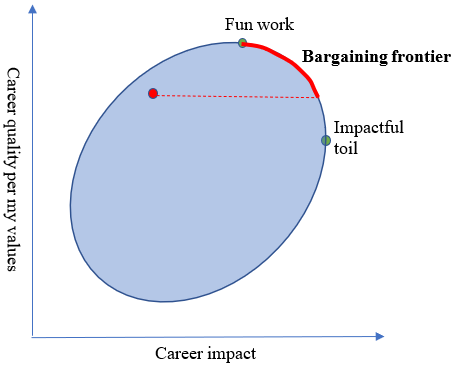

An even better model is probably something like Eric Neyman's in "My bargain with the EA Machine."

I think realistically for the UIUC club members (and myself) it's better to think of thresholds of money/status/career capital, especially career capital that hedges against a risk like EA collapsing.

A moment that struck me as exciting at Boston: I found some crypto people to talk to a few UIUC guys who had taken an interest, and when one of them mentioned a mutual acquaintance undertaking a project to "mint new EA crypto millionaires," another immediately replied, "what's the point?" I was reminded of the Sam Altman quote:

A common criticism of people in Silicon Valley ... are people who say some version of the following sentence. My life’s work is to build rockets, so ... I’m going to make a hundred million dollars in the next ... four years, trading crypto currency with my crypto hedge fund, because I don’t want to think about the money problem anymore, and then I’m going to build rockets. They never do either. I believe if these people would just pick one thing or the other, you know, I’m obsessed with money, so I’m going to go make a hundred million dollars, however long that takes, but with a long enough time horizon that’s reasonable. I’m just going to build rockets right now. I believe they would succeed at either.

In fact, I pitch EA to my friends mostly by listing cause areas in which Big EA takes an interest and asking if they've had any career aspirations or moonshot ideas in those areas. Ultimately I assume that most people have somewhere in their hearts a romantic notion of a life spent in service of mankind that they give up in exchange for material security or status, or to avoid risk, and a huge value of EA is presenting an opportunity to overcome those obstacles and just build philanthropic rockets.

[1] A bunch of the organizers I spoke to were interested just by my descriptions of the experiences other organizers had described in earlier conversations. There's probably an audience for narratives of what you've been up to even if you don't think of what you're doing as very interesting.

[2] Will be treated in the next post.

[3] He'll probably post about this in the near future, but I can give you his contact information now if you take an interest in the topic.

Very important perspective from someone on the front lines of recruiting new EAs. Thanks for sharing!

I'm one of the characters here (the electrical-grid guy and the friend who considered starting it with him in Fall 2021, not 2020). 10/10 blog post, and I agree with most things here (both that they are correct and that the general anecdotes apply to me personally). There are a few points I'd like to highlight.

I'm skeptical of longtermism (particularly the AGI threat mentioned above). I'm afraid that a combination of:

de-facto p-hacks the analyses of the average person within EA spheres. There may very well be extreme humanity-scale downsides, but (if these patterns manifest themselves as they may) the p-hacking applies not only to the possible downside but also to the effectiveness of current actions. If the AGI downside analysis is correct and the tools that exist are de-facto worthless (which a fair number of AI-alignment folks have mentioned to us as a possibility), then it makes more sense to pivot towards "direct action", "apolitical" or "butlerian jihad accelerationism". These conclusions are quite uncomfortable (and often discarded for good reason, IMO), and the associated downsides make other x-risk areas a much stronger consideration instead. This is not because the downside is larger per se, but because we have a reasonable grasp of the mechanisms at play as they exist today and as they will probably exist in the future: nuclear issues have been around for the past 80 years, and pandemics have been around since the beginning of civilization and animal domestication. I believe this conclusion (i.e. that the effectiveness of current mitigation strategies is massively overestimated, NOT that the other solutions ought to be pursued) has been discounted because:

I know at least five people at the University of Illinois who have refused associations with EA because of (what they see as) a disproportionate focus on AI alignment (for the reasons I've listed above and more). All of these people have a solid background in either AI or formal verification of AI and non-AI systems. I'm not here to say whether the focus on AGI is valid, but I am here to say that it is polarizing for a large number of people who would otherwise contribute, and this isn't considered in how EA appears to these high-EV potential contributors.

The downside of no longer being EA-aligned is probably less severe than that of no longer being VC-aligned, which is the more common case in these high-risk, high-reward industries. EA not taking an equity stake in something that might (depending on the context) be revenue-generating minimizes the incentive-alignment downsides, because there is no authority to force change other than withholding money (which is a conscious decision on the part of the founder/leader and not a forced circumstance like being fired by a board vote).

I'd love to build philanthropic rockets with the communities here (and I've reached out to multiple industry people at EAGxBoston and beyond). EA and its systems are a great positive for the world, but in a space where effectiveness is gauged in relative terms and "ill-posed problems", it's difficult to be aware of biases as they happen. Hopefully the AGI-extinction sample size remains zero, so it'll remain an open question unless somebody finally explains Infrabayesianism to me :)

Great writeup!

Is there an OpenPhil source for "OpenPhil values a switch to an AI safety research career as +$20M in expected value"? It would help me a lot in addressing some concerns that have been brought up in local group discussions.

Update: I think he actually said "very good" AI safety researcher or something and I misremembered. The conversation was in January and before I knew anything much about the EAverse.

Thanks for the update!

I’m surprised by that figure. $1 billion would only lead to 50 AI safety researchers, and they seem to have only about $10 billion.

And there's only what, 100 AI safety researchers in the world? That's a huge increase relative to the size of the field. But I think what they've actually said is that the average value is more like $3M, and it could be $20M for someone spectacular.

I think there's some misunderstanding of the figure. The figure is an EV that's probably benchmarked off of cash transfers (i.e. GiveDirectly). The logic being: if OpenPhil can recruit an AI researcher for anything less than $20 million USD, they have made more impact than by donating it to GiveDirectly. Not that they intend to spend $20 million on each counterfactual career change.

It's a bit surprising, but not THAT surprising. 50 more technical AI safety researchers would represent somewhere from a 50-100% increase in the total number, which could be a justifiable use of 10% of OpenPhil's budget.
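The figures traded in this thread can be checked with a few lines of arithmetic. This is a minimal sketch, assuming the recalled $20M-per-researcher figure, a ~$10B budget, and a current field of roughly 50 to 100 researchers, all taken from the comments rather than from any official source. Per the GiveDirectly-benchmark reading, $20M is a ceiling on what a switch is worth paying, so the spend here is an upper bound, not a plan.

```python
# Back-of-the-envelope check of the figures in this thread.
# All inputs are assumptions quoted from the comments, not official OpenPhil numbers.
VALUE_PER_RESEARCHER = 20_000_000  # recalled EV of one AI safety career switch, USD
NEW_RESEARCHERS = 50
OPENPHIL_BUDGET = 10_000_000_000   # rough total mentioned in the thread, USD

spend = NEW_RESEARCHERS * VALUE_PER_RESEARCHER  # upper bound: $1 billion
budget_share = spend / OPENPHIL_BUDGET          # fraction of the total budget

# Relative growth of the field for the range of current sizes mentioned (~50-100 researchers).
for current_field_size in (50, 100):
    growth = NEW_RESEARCHERS / current_field_size
    print(f"field of {current_field_size}: +{growth:.0%}, budget share {budget_share:.0%}")
```

This reproduces the "50-100% increase" and "10% of OpenPhil's budget" claims under those assumptions.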

This is what I remember Devansh (whom I pinged about your comment; I'll update when he replies) telling me when I first called him. I might have misremembered.

I feel like this is falsifiable, perhaps by handing out surveys or conducting interviews asking participants about their career paths and how they've updated over time.

I suspect what you stated is true for many "median engaged EAs" but not for highly engaged EAs. For me personally, my career direction is radically different as a result of becoming an Aspiring EA / being a member of my local group.

Not sure if my visualization of a median-engaged EA is the same as yours but what percentage of Boston conference attendees do you estimate you would call "highly-engaged"?

I am sort of making a number up, since I didn't attend EAGxBoston, but I would guess 30-50%. If they've been around for some years, have been donating some fraction of their income, and have changed things in their lives because of EA, then they're probably highly engaged.