In March 2020, I wondered what I’d do if - hypothetically - I continued to subscribe to longtermism but stopped believing that the top longtermist priority should be reducing existential risk. That then got me thinking more broadly about what cruxes lead me to focus on existential risk, longtermism, or anything other than self-interest in the first place, and what I’d do if I became much more doubtful of each crux.[1]

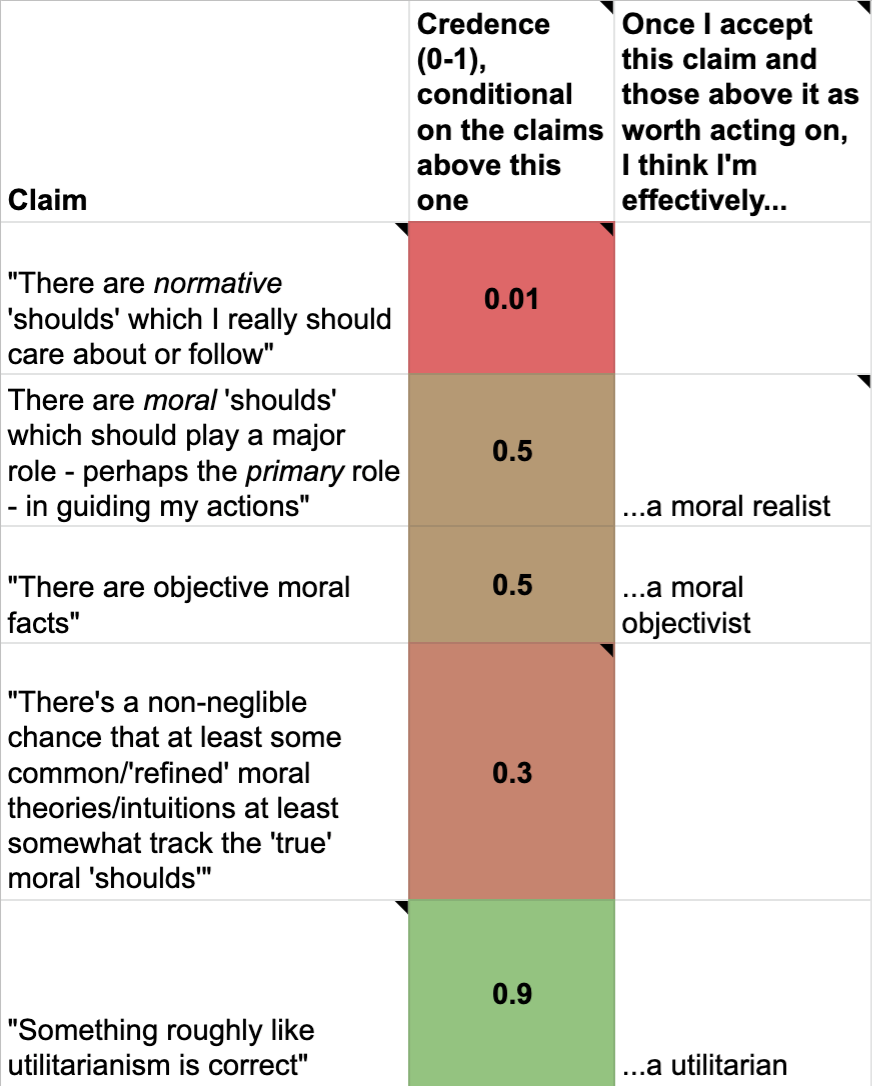

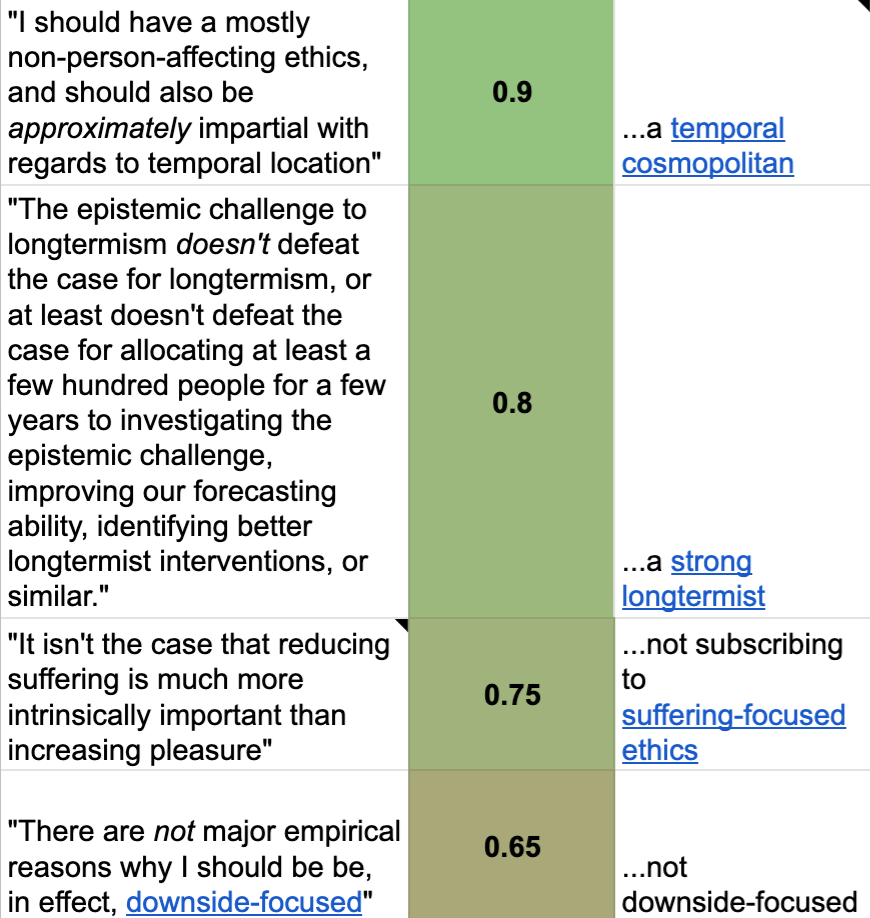

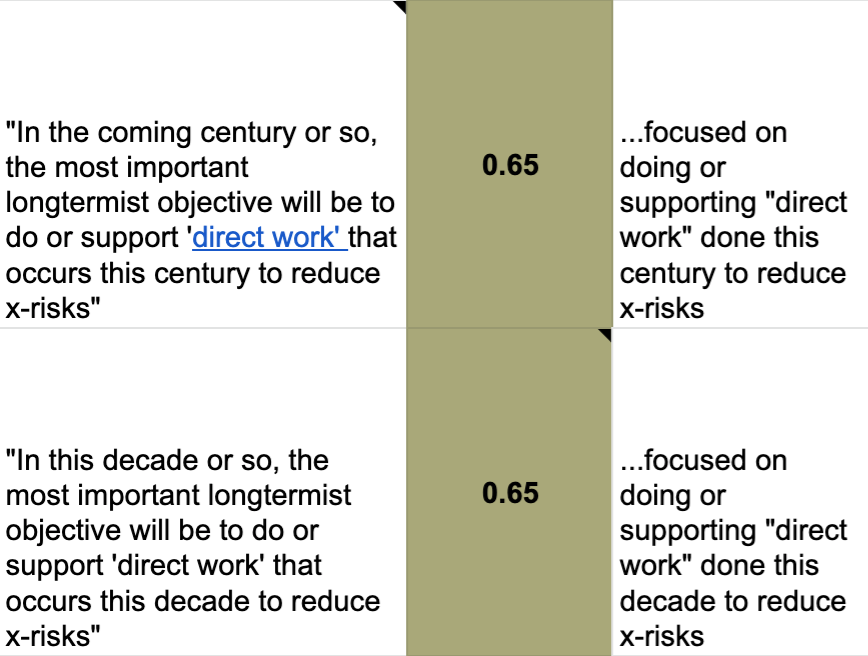

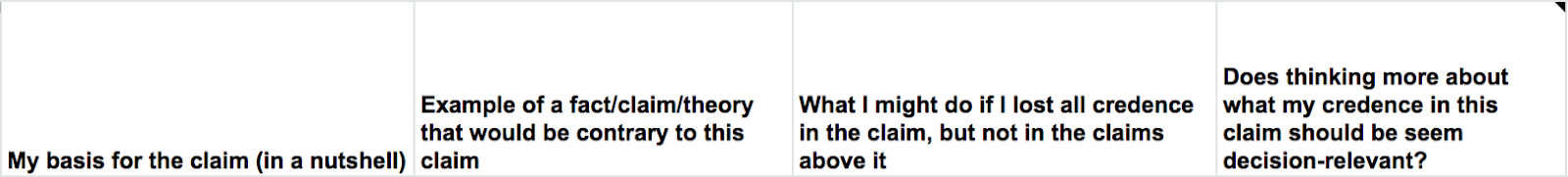

I made a spreadsheet to try to capture my thinking on those points, the key columns of which are reproduced below.

Note that:

- It might be interesting for people to assign their own credences to these cruxes, and/or to come up with their own sets of cruxes for their current plans and priorities, and perhaps comment about these things below.

- If so, you might want to avoid reading my credences for now, to avoid being anchored.

- I was just aiming to honestly convey my personal cruxes; I’m not aiming to convince people to share my beliefs or my way of framing things.

- If I were making this spreadsheet from scratch today, I might’ve framed things differently or provided different numbers.

- As it was, when I re-discovered this spreadsheet in 2021, I just lightly edited it, tweaked some credences, added one crux (regarding the epistemic challenge to longtermism), and wrote this accompanying post.

The key columns from the spreadsheet

See the spreadsheet itself for links, clarifications, and the following additional columns:

Disclaimers and clarifications

- All of my framings, credences, rationales, etc. should be taken as quite tentative.

- The first four of the above claims might use terms/concepts in a nonstandard or controversial way.

- I’m not an expert in metaethics, and I feel quite deeply confused about the area.

- Though I did try to work out and write up what I think about the area in my sequence on moral uncertainty and in comments on Lukas Gloor's sequence on moral anti-realism.


- This spreadsheet captures just one line of argument that could lead to the conclusion that, in general, people should in some way do/support “direct work” done this decade to reduce existential risks. It does not capture:

- Other lines of argument for that conclusion

- Perhaps most significantly, as noted in the spreadsheet, it seems plausible that my behaviours would stay pretty similar if I lost all credence in the first four claims

- Other lines of argument against that conclusion

- The comparative advantages particular people have

- Which specific x-risks one should focus on

- E.g., extinction due to biorisk vs unrecoverable dystopia due to AI

- See also

- Which specific interventions one should focus on


- This spreadsheet implicitly makes various “background assumptions”.

- E.g., that inductive reasoning and Bayesian updating are good ideas

- E.g., that we're not in a simulation or that, if we are, it doesn't make a massive difference to what we should do

- For a counterpoint to that assumption, see this post

- I’m not necessarily actually confident in all of these assumptions

- If you found it crazy to see me assign explicit probabilities to those sorts of claims, you may be interested in this post on arguments for and against using explicit probabilities.

Further reading

If you found this interesting, you might also appreciate:

- Brian Tomasik’s "Summary of My Beliefs and Values on Big Questions"

- Crucial questions for longtermists

- In a sense, this picks up where my “Personal cruxes” spreadsheet leaves off.

- Ben Pace's "A model I use when making plans to reduce AI x-risk"

I made this spreadsheet and post in a personal capacity, and it doesn't necessarily represent the views of any of my employers.

My thanks to Janique Behman for comments on an earlier version of this post.

Footnotes

[1] In March 2020, I was in an especially at-risk category for philosophically musing into a spreadsheet, given that I’d recently:

- Transitioned from high school teaching to existential risk research

- Read the posts My personal cruxes for working on AI safety and The Values-to-Actions Decision Chain

- Had multiple late-night conversations about moral realism over vegan pizza at the EA Hotel (when in Rome…)

Thank you for sharing! I generally feel pretty good about people sharing their personal cruxes underlying their practical life/career plans (and it's toward the top of my implicit "posts I'd love to write myself if I can find the time" list).

I must confess it seems pretty wild to me to have a chain of cruxes like this start with one in which one has a credence of 1%. (In particular one that affects one's whole life focus in a massive way.) To be clear, I don't think I have an argument that this epistemic state must be unjustified or anything like that. I'm just reporting that it seems very different from my epistemic and motivational state, and that I have a hard time imagining 'inhabiting' such a perspective. E.g. to be honest I think that if I had that epistemic state I would probably be like "uhm I guess if I was a consistent rational agent I would do whatever the beliefs I have 1% credence in imply, but alas I'm not, and so even if I don't endorse this on a meta level I know that I'll mostly just ~ignore this set of 1% likely views and do whatever I want instead".

Like, I feel like I could understand why people might be relatively confident in moral realism, even though I disagree with them. But this "moral realism wager" kind of view/life I find really hard to imagine :)

I'd have guessed that:

Am I wrong about that (admittedly vague) claim?

Or maybe what seems weird about my chain of cruxes is something like the fact that one that's so unlikely in my own view is so "foundational"? Or something like the fact that I've only identified ~12 cruxes (which is far from writing out all the assumptions implicit in my specific priorities, such as the precise research projects I work on), and yet already one of them was deemed so unlikely by me?

(Maybe here it's worth noting that one worry I had about posting this was that it might be demotivating, since there are so many uncertainties relevant to any given action, even though in reality it can still often be best to just go ahead with our current best guess because any alternative - including further analysis - seems less promising.)

Hmm - good question if that would be true for one of my 'cruxes' as well. FWIW my immediate intuition is that it wouldn't, i.e. that I'd have >1% credence in all relevant assumptions. Or at least that counterexamples would feel 'pathological' to me, i.e. like weird edge cases I'd want to discount. But I haven't carefully thought about it, and my view on this doesn't feel that stable.

I also think the 'foundational' property you gestured at does some work for why my intuitive reaction is "this seems wild".

Thinking about this, I also realized that there may be a relevant distinction between "what it feels like if I just look at my intuition" and "what my all-things-considered belief/'betting odds' would be after I take into account outside views, peer disagreement, etc.". The example that made me think about this was startup founders, or other people embarking on ambitious projects that, based on their reference class, are very likely to fail. [Though idk if 99% is the right quantitative threshold for cases that appear in practice.] I would guess that some people with that profile might say something like "sure, in one sense I agree that the chance of me succeeding must be very small - but it just does feel like I will succeed to me, and if I felt otherwise I wouldn't do what I'm doing".

Weirdly, for me, it's like:

I think that this is fairly different from the startup founder example, though I guess it ends up in a similar place of it being easy to feel like "the odds are good" even if on some level I believe/recognise that they're not.

Actually, that comment - and this spreadsheet - implied that my all-things-considered belief (not independent impression) is that there's a ~0.5-1% chance of something like moral realism being true. But that doesn't seem like the reasonable all-things-considered belief to have, given that it seems to me that:

So maybe actually my all-things-considered belief is (or should be) closer to 50% (i.e., ~100 times as high as is suggested in this spreadsheet), and the 0.5% number is somewhere in-between my independent impression and my all-things-considered belief.

That might further help explain why it usually doesn't feel super weird to me to kind-of "act on a moral realism wager".

But yeah, I mostly feel pretty confused about what this topic even is, what I should think about it, and what I do think about it.

I'm actually not very confident that dropping the first four claims would affect my actual behaviours very much. (Though it definitely could, and I guess we should be wary of suspicious convergence.)

To be clearer on this, I've now edited the post to say:

Here's what I say in the spreadsheet I'd do if I lost all my credence in the 2nd claim:

And here's what I say I'd do if I lost all my credence in the 4th claim:

This might look pretty similar to reducing existential risk and ensuring a long reflection can happen. (Though it also might not. And I haven't spent much time on cause prioritisation from the perspective of someone who doesn't act on those first four claims, so maybe my first thoughts here are mistaken in some basic way.)

That seems like a fair comment.

FWIW, mostly, I don't really feel like my credence is that low in those claims, except when I focus my explicit attention on that topic. I think on an implicit level I have strongly moral realist intuitions. So it doesn't take much effort to motivate myself to act as though moral realism is true.

If you'd be interested in my attempts to explain how I think about the "moral realism wager" and how it feels from the inside to kind-of live according to that wager, you may want to check out my various comments on Lukas Gloor's anti-realism sequence.

(I definitely do think the wager is at least kind-of weird, and I don't know if how I'm thinking makes sense. But I don't think I found Lukas's counterarguments compelling.)

This is a super interesting exercise! I do worry how much it might bias you, especially in the absence of equally rigorously evaluated alternatives.

Consider the multiple stage fallacy: https://forum.effectivealtruism.org/posts/GgPrbxdWhyaDjks2m/the-multiple-stage-fallacy

If I went through any introductory EA work, I could probably identify something like 20 claims, all of which must hold for the conclusions to have moral force. It would then feel pretty reasonable to assign each of those claims somewhere between 50% and 90% confidence.

That all seems fine, until you start to multiply it out. 70%^20 is 0.08%. And yet my actual confidence in the basic EA framework is probably closer to 50%. What explains the discrepancy?
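As a rough sketch of that arithmetic (in Python, assuming the 20 claims are independent, or equivalently that each 70% is already conditional on the claims before it; the function name is just illustrative):

```python
import math

def conjunctive_probability(credences):
    """Probability that every claim in a chain holds.

    Assumes each credence is independent of (or already conditional on)
    the claims before it, so the chain probability is just the product.
    """
    return math.prod(credences)

# 20 claims, each held with 70% confidence, as in the comment above.
print(f"{conjunctive_probability([0.7] * 20):.2%}")  # prints 0.08%

# The two ends of the 50%-90% range mentioned above.
for p in (0.5, 0.9):
    print(f"{p:.0%} each -> {conjunctive_probability([p] * 20):.4%}")
```

This is the naive multiplication the multiple-stage-fallacy post warns about: if the claims are positively correlated, the probability that they all hold can be much higher than the product of the individual credences.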

I think the real question is, selfishly speaking, how much more do you gain from playing video games than from working on longtermism? I play video games sometimes, but find that I have ample time to do so in my off hours. Playing video games so much that I don't have time for work doesn't sound pleasurable to me anyway, although you might enjoy it for brief spurts on weekends and holidays.

Or consider these notes from Nick Beckstead on Tyler Cowen's view: "his own interest in these issues is a form of consumption, though one he values highly." https://drive.google.com/file/d/1O--V1REGe1-PNTpJXl3GHsUu_eGvdAKn/view

3. I shouldn't just ask what I should do selfishly speaking

It could be that that's the only question I have to ask. That would happen if I work out what seems best to do from an altruistic perspective, then from a self-interested perspective, and I notice that they're identical.

But that seems extremely unlikely. It seems likely that there's a lot of overlap between what's quite good from each perspective (at least given my current knowledge), since evolution and socialisation and such have led me to enjoy being and feeling helpful, noble, heroic, etc. But it seems much less likely that the very best thing from two very different perspectives is identical. See also Beware surprising and suspicious convergence.

Another way that that could be the only question I have to ask is if I'm certain that I should just act according to self-interest, regardless of what's right from an altruistic perspective. But I see no good basis for that certainty.

This is part of why I said "it seems plausible that my behaviours would stay pretty similar if I lost all credence in the first four claims", rather than making a stronger claim. I do think my behaviours would change at least slightly.

2. Credence in X vs credence I should roughly act on X

I think what you mean, or perhaps what you should mean, is that your actual confidence that you should, all things considered, act roughly as if the EA framework is correct is probably closer to 50%. And that's what's decision-relevant. This captures ideas like those you mention, e.g.:

I think a 50% confidence that the basic EA framework is actually correct[2] seems much too high, given how uncertain we should be about metaethics, consequentialism, axiology, decision theory, etc. But that uncertainty doesn't mean acting on any other basis actually seems better. And it doesn't even necessarily mean I should focus on reducing those uncertainties, for reasons including that I think I'm a better fit for reducing other uncertainties that are also very decision-relevant (e.g., whether people should focus on longtermism or other EA cause areas, or how to prioritise within longtermism).

So I think I'm much less than 50% certain that the basic EA framework is actually correct, but also that I should basically act according to the basic EA framework, and that I'll continue doing so for the rest of my life, and that I shouldn't be constantly stressing out about my uncertainty. (It's possible that some people would find it harder to put the uncertainty out of mind, even when they think that, rationally speaking, they should do so. For those people, this sort of exercise might be counterproductive.)

[2] By the framework being "actually correct", I don't just mean "this framework is useful" or "this framework is the best we've got, given our current knowledge". I mean something like "the claims it is based on are correct, or other claims that justify it are correct", or "maximally knowledgeable and wise versions of ourselves would endorse this framework as correct or as worth acting on".

tl;dr

I'll split that into three comments.

But I should note that these comments focus on what I think is true, not necessarily what I think it's useful for everyone to think about. There are some people for whom thinking about this stuff just won't be worth the time, or might be overly bad for their motivation or happiness.

1. Where I agree with your comment

Am I correct in thinking that you mean you worry how much conducting this sort of exercise might affect anyone who does so, in the sense that it'll tend to overly strongly make them think they should reduce their confidence in their bottom-line conclusions and actions? (Because they're forced to look at and multiply one, single, conjunctive set of claims, without considering the things you mention?)[1]

If so, I think I sort-of agree, and that was the main reason I considered never posting this. I also agree that each of the things you point to as potentially "explaining the discrepancy" can matter. As I note in a reply to Max Daniel above:

And as I note in various other replies here and in the spreadsheet itself, it's often not obvious that a particular "crux" actually is required to support my current behaviours. E.g., here's what I say in the spreadsheet I'd do if I lost all my credence in the 2nd claim:

This is why the post now says:

And this is also why I didn't include in this post itself my "Very naive calculation of what my credence "should be" in this particular line of argument" - I just left that in the spreadsheet, so people will only see that if they actually go to where the details can be found. And in my note there, I say:

[1] Or you might've meant other things by the "it", "you", and "bias" here. E.g., you might've meant "I worry how much seeing this post might bias people who see it", or "I worry how much seeing this post or conducting this exercise might cause a bias towards anchoring on one's initial probabilities."

Thank you for writing this! I'm currently drafting something similar and your post gave me some new ideas on how to structure it so it would be easy to follow.

Glad to hear that!

I'll be interested to see yours once it's out, partly just because this sort of thing seems interesting in general and partly in case the structure you land on is better than mine.

(I expected some confusion to be created by the fact that my approach just shows a single, conjunctive chain, and by the fact that much of the clarifications and justifications and implications are tucked away in the spreadsheet itself. And comments so far have indicated that those concerns may indeed have been justified.)

Interesting!

By x-risks, do you mean primarily extinction risks? Suffering-focused and downside-focused views, which you cover after strong longtermism, still support work to reduce certain x-risks, specifically s-risks. Maybe placing the x-risk crux before the suffering-focused and downside-focused cruxes would say more about practical implications? On the other hand, if you say you should focus on x-risks, but then find that there are deep tradeoffs between x-risks important to downside-focused views compared to upside-focused views, and you have deep/moral uncertainty or cluelessness about this, maybe it would end up better to not focus on x-risks at all.

In practice, though, I think current work on s-risks would probably look better than non x-risk work even for upside-focused views, whereas some extinction risk work looks bad for downside-focused views (by increasing s-risks). Some AI safety work could look very good to both downside- and upside-focused views, so you might find you have more credence in working on that specifically.

It also looks like you're actually >50% downside-focused, conditional on strong longtermism, just before suffering-focused views. This is because you gave "not suffering-focused" 75% conditional on previous steps and "not downside-focused" 65% conditional on that, so 48.75% neither suffering-focused nor downside-focused, and therefore 51.25% suffering-focused or downside-focused, but (I think) suffering-focused implies downside-focused, so this is 51.25% downside-focused. (All conditional on previous steps.)
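A minimal Python sketch of that calculation, using the two conditional credences quoted above (the variable names are illustrative). Reading the final figure as "downside-focused" relies on the assumption that suffering-focused views imply downside-focused ones, which is itself contestable.

```python
# Credences from the comment above, each conditional on the earlier steps.
p_not_suffering_focused = 0.75
p_not_downside_given_not_sf = 0.65  # conditional on not being suffering-focused

# Chain rule: P(neither) = P(not SF) * P(not downside | not SF)
p_neither = p_not_suffering_focused * p_not_downside_given_not_sf
# Complement: suffering-focused OR downside-focused
p_sf_or_downside = 1 - p_neither

print(f"neither: {p_neither:.2%}")                # prints neither: 48.75%
print(f"SF or downside: {p_sf_or_downside:.2%}")  # prints SF or downside: 51.25%
```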

I don't think this is quite right.

The simplest reason is that I think suffering-focused does not necessarily imply downside-focused. Just as a (weakly) non-suffering-focused person could still be downside-focused for empirical reasons (basically the scale, tractability, and neglectedness of s-risks vs other x-risks), a (weakly) suffering-focused person could still focus on non-s-risk x-risks for empirical reasons. This is partly because one can have credence in a weakly suffering-focused view, and partly because one can have a lot of uncertainty between suffering-focused views and other views.

As I note in the spreadsheet, if I lost all credence in the claim that I shouldn't subscribe to suffering-focused ethics, I'd:

That said, it is true that I'm probably close to 50% downside-focused. (It's even possible that it's over 50% - I just think that the spreadsheet alone doesn't clearly show that.)

And, relatedly, there's a substantial chance that in future I'll focus on actions somewhat tailored to reducing s-risks, most likely by researching authoritarianism & dystopias or broad risk factors that might be relevant both to s-risks and other x-risks. (Though, to be clear, there's also a substantial chunk of why I see authoritarianism/dystopias as bad that isn't about s-risks.)

This all speaks to a weakness of the spreadsheet, which is that it just shows one specific conjunctive set of claims that can lead me to my current bottom-line stance. This makes my current stance seem less justified-in-my-own-view than it really is, because I haven't captured other possible paths that could lead me to it (such as being suffering-focused but thinking s-risks are far less likely or tractable than other x-risks).

And another weakness is simply that these claims are fuzzy, and I place fairly little faith in my credences even strongly reflecting my own views (let alone being "reasonable"). So one should be careful about simply multiplying things together. That said, I do think that doing so can be somewhat fruitful and interesting.
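To illustrate why a single conjunctive chain can understate support for a conclusion that several routes lead to, here is a toy Python sketch. All the credences in it are hypothetical, and treating the two paths as independent is a simplifying assumption; real paths usually share premises, which would shrink the gain.

```python
import math

def chain_probability(credences):
    # One conjunctive chain: each credence is treated as conditional on the
    # earlier steps, so the chain's probability is the product.
    return math.prod(credences)

def at_least_one_path(path_probs):
    # P(at least one path holds), ASSUMING the paths are independent.
    # Real paths usually share premises, so this overstates the gain.
    return 1 - math.prod(1 - p for p in path_probs)

# Hypothetical credences, purely for illustration.
path_a = chain_probability([0.8, 0.7, 0.6])  # e.g. the single chain a spreadsheet shows
path_b = chain_probability([0.5, 0.6])       # e.g. suffering-focused, but s-risks judged less tractable

print(f"single chain: {path_a:.1%}")
print(f"either path:  {at_least_one_path([path_a, path_b]):.1%}")
```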

It seems confusing for a view that's suffering-focused not to commit you (or at least the part of your credence that's suffering-focused, which may compromise with other parts) to preventing suffering as a priority. I guess people include weak NU/negative-leaning utilitarianism/prioritarianism in (weakly) suffering-focused views.

What would count as weakly suffering-focused to you? Giving 2x more weight to suffering than you would want to in your personal tradeoffs? 2x more weight to suffering than pleasure at the same "objective intensity"? Even less than 2x?

FWIW, I think a factor of 2 is probably within the normal variance of judgements about classical utilitarian pleasure-suffering tradeoffs, and there probably isn't any objective intensity or at least it isn't discoverable, so such a weakly suffering-focused view wouldn't really be distinguishable from classical utilitarianism (or a symmetric total view with the same goods and bads).

It sounds like part of what you're saying is that it's hard to say what counts as a "suffering-focused ethical view" if we include views that are pluralistic (rather than only caring about suffering), and that part of the reason for this is that it's hard to know what "common unit" we could use for both suffering and other things.

I agree with those things. But I still think the concept of "suffering-focused ethics" is useful. See the posts cited in my other reply for some discussion of these points (I imagine you've already read them and just think that they don't fully resolve the issue, and I think you'd be right about that).

I think this question isn't quite framed right - it seems to assume that the only suffering-focused view we have in mind is some form of negative utilitarianism, and seems to ignore population ethics issues. (I'm not saying you actually think that SFE is just about NU or that population ethics isn't relevant, just that that text seems to imply that.)

E.g., an SFE view might prioritise suffering-reduction not exactly because it gives more weight to suffering than pleasure in normal decision situations, but rather because it endorses "the asymmetry".

But basically, I guess I'd count a view as weakly suffering-focused if, in a substantial number of decision situations I care a lot about (e.g., career choice), it places noticeably "more" importance on reducing suffering by some amount than on achieving other goals "to a similar amount". (Maybe "to a similar amount" could be defined from the perspective of classical utilitarianism.) This is of course vague, and that definition is just one I've written now rather than this being something I've focused a lot of time on. But it still seems a useful concept to have.

(Minor point: "preventing suffering as a priority" seems quite different from "downside-focused". Maybe you meant "as the priority"?)

I think my way of thinking about this is very consistent with what I believe are the "canonical" works on "suffering-focused ethics" and "downside-focused views". (I think these may have even been the works that introduced those terms, though the basic ideas preceded the works.) Namely:

The former opens with:

And the latter says:

I think another good post on this is Descriptive Population Ethics and Its Relevance for Cause Prioritization, and that that again supports the way I'm thinking about this. (But to save time / be lazy, I won't mine it for useful excerpts to share here.)

FWIW, I agree with both of these points, and think they're important. (Although it's still the case that I'm not currently focused on s-risks or AI safety work, due to other considerations such as comparative advantage.)

I'm unsure of my stance on the other things you say in those first two paragraphs.

No, though I did worry people would misinterpret the post as meaning extinction risks specifically. As I say in the post:

Other relevant notes from the spreadsheet itself, from the "In the coming century or so" cell:

And FWIW, here's a relevant passage from a research agenda I recently drafted, which is intended to be useful both in relation to extinction risks and in relation to non-extinction existential risks:

Note that that section is basically about what someone who hasn't yet specialised should now specialise to do, on the margin. I'm essentially quite happy for people who've already specialised for reducing extinction risk to keep on with that work.

Thanks for telling me about this post!

I answered Brian’s questions at one point, which struck me as an interesting exercise. I suppose most of your questions here are semi-empirical questions to me thanks to evidential cooperation, and ones whose answers I don’t know. I’m leaning in different directions from you on some of them at the moment.

Thank you for this interesting post! In the spreadsheet with additional info concerning the basis for your credences, you wrote the following about the first crucial crux about normativity itself: "Ultimately I feel extremely unsure what this claim means, how I should assess its probability, or what credence I should land on." Concerning the second question in this sentence, about how to assess the probability of philosophical propositions like this, I would like to advocate a view called 'contrastivism'. According to this view, the way to approach the claim "there are normative shoulds" is to consider the reasons that bear on it. What reasons count towards believing that there are normative shoulds, and what reasons are there to believe that there aren't? The way to judge the question is to weigh the reasons up against each other, instead of assessing them in isolation. According to contrastivism, reasons have comparative weights, but not absolute weights. Read a short explanatory piece here, and a short paper here.

When I do this exercise, I find that the reasons to believe there are moral shoulds are slightly more convincing than the reasons against so believing. I think this means that the rational metaethical choice for me is realism, and that I should believe this fully (unless I have reason to believe that there is further evidence that I haven't considered or don't understand). If you were to consider the issue this way, do you still think that your credence for normative realism would be 0.01?

Are you saying you should act as though moral realism is 100% likely, even though you feel only slightly more convinced of it than of antirealism? That doesn't seem to make sense to me? It seems like the most reasonable approaches to metaethical uncertainty would involve considering not just "your favourite theory" but also other theories you assign nontrivial credence to, analogous to the most reasonable-seeming approaches to moral uncertainty.

Cool! Thank you for the candid reply, and for taking this seriously. Yes, for questions such as these I think one should act as though the most likely theory is true. That is, my current view is contrary to MacAskill's view on this (I think). However, I haven't read his book, and there might be arguments there that would convince me if I had.

The most forceful considerations driving my own thinking on this come from sceptical worries in epistemology. In typical 'brain in a vat' scenarios, there are usually some slight considerations that tip in favor of realism about everything you believe. Similar worries appear in the case of conspiracy theories, where the mainstream view tends to have more and stronger supporting reasons, but in some cases it isn't obvious that the conspiracy is false, even though, all things considered, one should believe that it is. These theories/propositions, as well as metaethical propositions, are sometimes called hinge propositions in philosophy, because entire worldviews hinge on them.

So empirically, I don't think that there is a way to act and believe in accordance with multiple worldviews at the same time. One may switch between worldviews, but it isn't possible to inhabit many worlds at the same time. Rationally, I don't think that one ought to act and believe in accordance with multiple worldviews, because they are likely to contradict each other in multiple ways, and would yield absurd implications if taken seriously. That is, absurd implications relative to everything else you believe, which is the ultimate ground on which you judged the relative weights of the reasons bearing on the hinge proposition to start with. Thinking in this way is called epistemological coherentism in philosophy, and is a dominant view in contemporary epistemology. That does not mean it's true, but it does mean that it should be taken seriously.

Hmm, I guess at first glance it seems like that's making moral uncertainty seem much weirder and harder than it really is. I think moral uncertainty can be pretty usefully seen as similar to empirical uncertainty in many ways. And on empirical matters, we constantly have some degree of credence in each of multiple contradictory possibilities, and that's clearly how it should be (rather than us being certain on any given empirical matter, e.g. whether it'll rain tomorrow or what the population of France is). Furthermore, we clearly shouldn't just act on what's most likely, but rather do something closer to expected value reasoning.

There's debate over whether we should do precisely expected value reasoning in all cases, but it's clear for example that it'd be a bad idea to accept a 49% chance of being tortured for 10 years in exchange for a 100% chance of getting a dollar - it's clear we shouldn't think "Well, it's unlikely we'll get tortured, so we should totally ignore that risk."
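A toy Python sketch of the contrast between acting on the most likely outcome and doing expected value reasoning, for the torture-for-a-dollar gamble above. The numeric values (10 years of torture scored as -1,000,000, a dollar as +1) are hypothetical placeholders; any sufficiently large disvalue for torture gives the same verdict.

```python
def expected_value(outcomes):
    # outcomes: list of (probability, value) pairs for one option
    return sum(p * v for p, v in outcomes)

def most_likely_value(outcomes):
    # The rule argued against above: consider only the most probable outcome.
    return max(outcomes, key=lambda pv: pv[0])[1]

# Hypothetical values: 10 years of torture = -1,000,000; one dollar = +1.
accept = [(0.49, -1_000_000 + 1), (0.51, 1)]  # the dollar arrives either way
decline = [(1.0, 0)]

for rule in (most_likely_value, expected_value):
    choice = "accept" if rule(accept) > rule(decline) else "decline"
    print(rule.__name__, "->", choice)
# prints:
# most_likely_value -> accept
# expected_value -> decline
```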

And I don't think it feels weird or leads to absurdities or incoherence to simultaneously think I might get a job offer due to an application but probably won't, or might die if I don't wear a seatbelt but probably won't, and take those chances of upsides or downsides into account when acting?

Likewise, in the moral realm, if I thought it was 49% likely that a particular animal is a moral patient, then it seems clear to me that I shouldn't act in a way that would create suffering for the animal (if it is a moral patient) in exchange for just a small amount of pleasure for me.

Would you disagree with that? Maybe I'm misunderstanding your view?

I'm a bit less confident of this in the case of metaethics, but it sounded like you were against taking even just moral uncertainty into account?

You might enjoy some of the posts tagged moral uncertainty, for shorter versions of some of the explanations and arguments, including my attempt to summarise ideas from MacAskill's thesis (which was later adapted into the book).

So I agree with you that we should apply expected value reasoning in most cases. The cases in which I don't think we should use expected value reasoning are those involving hinge propositions: the propositions on which entire worldviews stand or fall, such as fundamental metaethical propositions or scientific paradigms. The reason these are special is that the grounds for belief in these propositions are also affected by believing them.

I think we should apply expected value reasoning in ethics too. However, I don't think we should apply it to hinge propositions in ethics. The hinginess of a proposition is a matter of degree. The question of whether a particular animal is a moral patient does not seem very hingy to me, so if it was possible to assess the question in isolation I would not object to the way of thinking about it you sketch above.

However, logic binds questions like these into big bundles through the justifications we give for them. On the issue of animal moral patiency, I tend to think that there must be a property in human and non-human animals that justifies our moral attitudes towards them. Many think that this should be the capacity to feel pain, and so, if I think this, and think there is a 49% chance that the animal feels pain, then I should apply expected value reasoning when considering how to relate to the animal. However, the question of whether the capacity to feel pain is the central property we should use to navigate our moral lives is hingier, and I think that it is less reasonable to apply expected value reasoning to this question (because this and reasonable alternatives lead to contradicting implications).

I am sorry if this isn't expressed as clearly as one would hope. I'll have a proper look into your and MacAskill's views on moral uncertainty at some point; then I might try to articulate all of this more clearly, and revise on the basis of the arguments I haven't considered yet.

I haven't read those links, but I think that that approach sounds pretty intuitive and like it's roughly what I would do anyway. So I think this would leave my credence at 0.01. (But it's hard to say, both because I haven't read those links and because, as noted, I feel unsure what the claim even means anyway.)

(Btw, I've previously tried to grapple with and lay out my views on the question Can we always assign, and make sense of, subjective probabilities?, including for "supernatural-type claims" such as "non-naturalistic moral realism". Though that was one of the first posts I wrote, so is lower on concision, structure, and informed-ness than my more recent posts tend to be.)

(Also, just a heads up that the links you shared don't work as given, since the Forum made the punctuation after the links part of the links themselves.)

Ah, good! Hmm, then this means that you really find the arguments against normative realism convincing! That is quite interesting; I'll delve into those links you mentioned sometime to have a look. As is often the case in philosophy, though, I suspect the low credence is explained not so much by the strength of the arguments as by the understanding of the target concept or theory (normative realism), especially in this case, as you say that you are quite unsure what it even means. There are concepts of normativity that I would give a 0.01 credence to as well, but then there are also concepts of normativity which I think imply that normative realism is trivially true. It seems to me that you could square your commitments and restore coherence to your belief set by some good old-fashioned conceptual analysis of the very notion of normativity itself. That is, anyway, what I would do in this epistemic state. I myself think that you can get most of the ethics in the column with quite modest concepts of normativity that are quite compatible with a modern scientific worldview!

I updated the links, thanks!