This is a thread for displaying your probabilities of an existential catastrophe that causes extinction or the destruction of humanity’s long-term potential.

Every answer to this post should be a forecast showing your probability of an existential catastrophe happening at any given time.

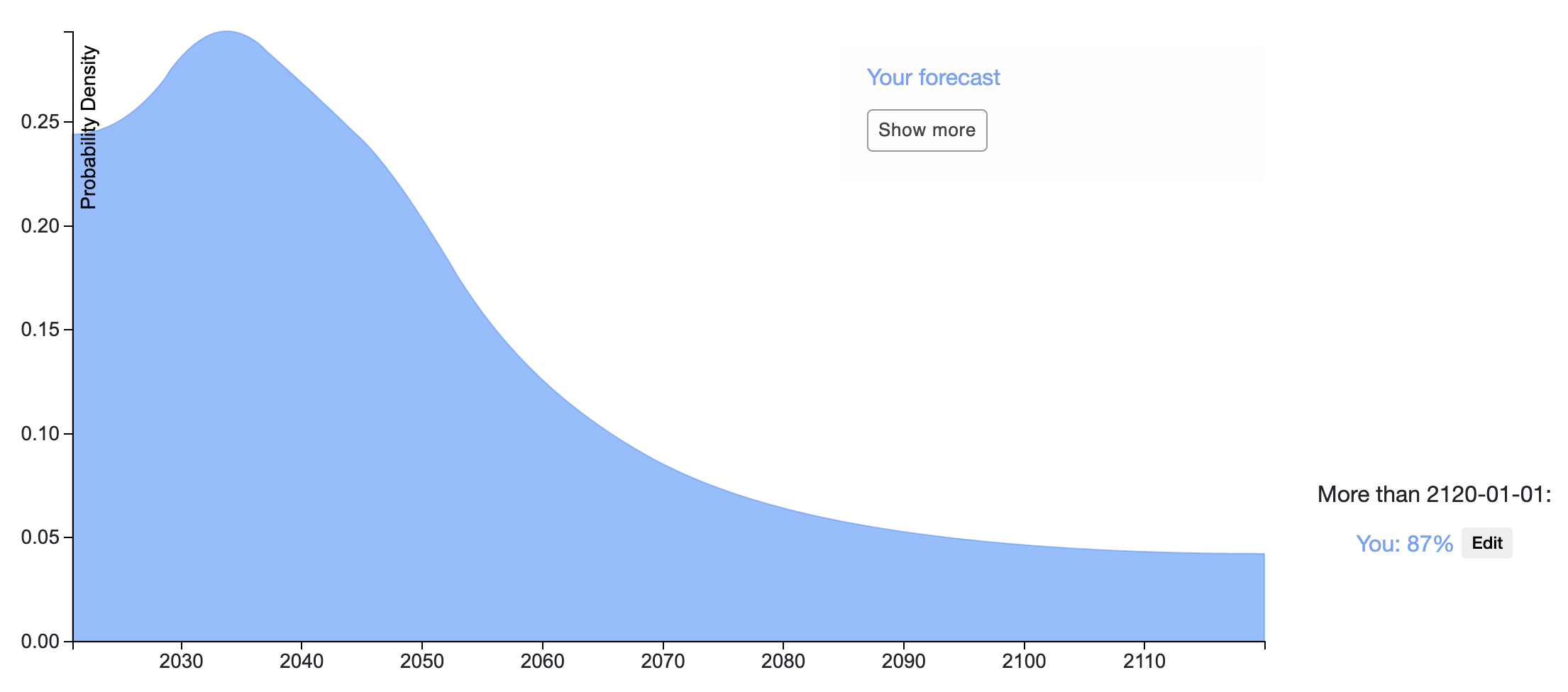

For example, here is Michael Aird’s timeline:

The goal of this thread is to create a set of comparable, standardized x-risk predictions, and to facilitate discussion on the reasoning and assumptions behind those predictions. The thread isn’t about setting predictions in stone – you can come back and update at any point!

How to participate

- Go to this page

- Create your distribution

- Specify an interval using the Min and Max bin, and put the probability you assign to that interval in the probability bin.

- You can specify a cumulative probability by leaving the Min box blank and entering the cumulative value in the Max box.

- To put probability on never, assign probability above January 1, 2120 using the edit button to the right of the graph. Specify your probability for never in the notes, to distinguish this from putting probability on existential catastrophe occurring after 2120.

- Click 'Save snapshot' to save your distribution to a static URL

- A timestamp will appear below the 'Save snapshot' button. This links to the URL of your snapshot.

- Make sure to copy it before refreshing the page, otherwise it will disappear.

- Click ‘Log in’ to automatically show your snapshot on the Elicit question page

- You don’t have to log in, but if you do, Elicit will:

- Store your snapshot in your account history so you can easily access it.

- Automatically add your most recent snapshot to the x-risk question page under ‘Show more’. Other users will be able to import your most recent snapshot from the dropdown.

- We’ll set a default name that your snapshot will be shown under – if you want to change it, you can do so on your profile page.

- If you’re logged in, your snapshots for this question will be publicly viewable.

- Copy the snapshot timestamp link and paste it into your LessWrong comment

- You can also add a screenshot of your distribution in your comment using the instructions below.
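As a rough illustration of how the interval, cumulative, and "never" inputs above relate, here is a minimal Python sketch. It is not part of Elicit and uses no Elicit API. The bin values, the names `bins`, `p_after_2120`, `p_never`, and the helper functions are all hypothetical and chosen only for illustration.

```python
from datetime import date

# Hypothetical interval bins: (min date, max date, probability).
# These numbers are placeholders, not anyone's actual forecast.
bins = [
    (date(2020, 1, 1), date(2050, 1, 1), 0.05),
    (date(2050, 1, 1), date(2120, 1, 1), 0.10),
]

# Mass placed above January 1, 2120 covers two different things,
# which is why the post asks you to state "never" in the notes.
p_after_2120 = 0.03  # catastrophe occurs, but after 2120 (assumed value)
p_never = 0.82       # catastrophe never occurs (assumed value)


def cumulative(interval_bins):
    """Return (max date, cumulative probability up to that date) pairs."""
    total = 0.0
    result = []
    for _start, end, prob in sorted(interval_bins):
        total += prob
        result.append((end, round(total, 10)))
    return result


def mass_above_2120(p_after, p_never_value):
    """Probability to enter above January 1, 2120 in the tool."""
    return p_after + p_never_value


def is_normalized(interval_bins, p_after, p_never_value, tol=1e-9):
    """Check that all probability mass sums to 1."""
    total = sum(prob for _, _, prob in interval_bins) + p_after + p_never_value
    return abs(total - 1.0) <= tol


if __name__ == "__main__":
    for end, cum in cumulative(bins):
        print(f"P(catastrophe before {end.isoformat()}) = {cum:.2f}")
    print(f"Mass above 2120 = {mass_above_2120(p_after_2120, p_never):.2f}")
    print(f"Sums to 1: {is_normalized(bins, p_after_2120, p_never)}")
```

Each cumulative value is what you would enter in the Max box with Min left blank. The split of the above-2120 mass into "after 2120" and "never" is the part that only your notes can communicate.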

How to add an image to your comment

- Take a screenshot of your distribution

- Then do one of two things:

- If you have beta-features turned on in your account settings, drag-and-drop the image into your comment

- If not, upload it to an image hosting service like imgur.com, then write the following markdown syntax for the image to appear, with the URL appearing where it says ‘link’: `![](link)`

- If it worked, you will see the image in the comment before hitting submit.

If you run into any bugs or technical issues, reply to Ben from the LW team or Amanda (me) from the Ought team in the comment section, or email me at amanda@ought.org.

Questions to consider as you're making your prediction

- What definitions are you using? It’s helpful to specify them.

- What evidence is driving your prediction?

- What are the main assumptions that other people might disagree with?

- What evidence would cause you to update?

- How is the probability mass allocated amongst x-risk scenarios?

- Would you bet on these probabilities?

(Just want to mention that I'm guessing it's best if people centralise their forecasts and comments on the LW thread, and just use this link post as a pointer to that. Though Amanda can of course say if she disagrees :) )

The one thing I will say here, just in case anyone sees my example forecast here but doesn't follow the link, is that I'd give very little weight to both my forecast and my reasoning. Reasons for that include that:

So I'm mostly just very excited to see other people's forecasts, and even more excited to see how they reason about and break down the question!

Good note, agreed that it's better to centralize forecasts on the LW thread!

I thought that was exactly what Metaculus was established for. In what way is this different?

I think this is a good point. I think people probably underrate the costs of duplicate/redundant work. That said:

1) You can't see detailed predictions of other individual people on Metaculus, only the aggregated prediction by one of Metaculus's favored weightings.

2) The commenting system on Metaculus is more barebones than the EA Forum or LessWrong (e.g., you can't attach pictures, and there's no downvote functionality).

3) The userbases are different.