Edited by Jacy Reese Anthis, Spencer Case, and Michael Dello-Iacovo. Thanks to Christopher DiCarlo and Elliot McKernon for their insights.

Abstract

The accelerating development of artificial intelligence (AI) systems has raised widespread concerns about risks from advanced AI. This poses important questions for policymakers regarding appropriate governance strategies to address these risks. However, effective policy requires understanding public attitudes and priorities regarding AI risks and interventions. In this blog post, we summarize key AI policy insights from the Artificial Intelligence, Morality and Sentience (AIMS) survey to elucidate public opinion on pivotal AI safety issues.

Insights include:

- High concern about the fast pace of AI development;

- Expectation of artificial general intelligence (AGI) within 5 years;

- Widespread concern about AI catastrophic and existential threats;

- Support for regulations and bans to slow AI;

- Surprisingly high concern for AI welfare.

These findings imply that AI safety proponents may expect to be relatively successful with strong proposals for risk mitigation. When forecasting the future trajectory of AI, policies attuned to widely shared public sentiments are more likely to be accepted. Acting decisively yet prudently on public priorities will be critical as advanced AI capabilities continue to emerge.

Table of Contents

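1. The U.S. public is seriously concerned about the pace of AI development.

2. The U.S. public seems to expect that very advanced AI systems will be developed within the next 5 years.

3. The U.S. public is concerned about the risks AI presents to humanity, including risks of extinction, and concerned about risks of harm to the AIs themselves.

4. Specific policy tools designed for increasing AI safety are supported by a majority of the U.S. public.

5. The U.S. public is generally opposed to the development of AI sentience and human-AI integration.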

Introduction

2023 was a pivotal year for AI development. OpenAI’s November 2022 release of ChatGPT sparked massive public interest in AI. By February 2023, ChatGPT had 100 million monthly active users. This fueled a race to produce advanced large language models (LLMs), the technology behind ChatGPT. Microsoft launched Bing Chat in February 2023. GPT-4 was released in March 2023. Google released Bard on March 21, followed by PaLM 2 in May. In July 2023, Meta released Llama 2. As this new generation of AI models became accessible, the public increasingly used chatbots and LLMs for routine administrative work, coding, video games, social media content, and information searches, such as through Microsoft’s Bing search engine. Prior to these developments there was public concern about AI in domains like social media, surveillance, policing, and warfare, but the rapid proliferation of easy-to-access LLMs amplified worries about AI safety and governance.

The release and subsequent popularity of these models galvanized researchers to study the risks they pose to humanity, including the risk of human extinction. Prominent AI researchers Geoffrey Hinton and Yoshua Bengio joined a chorus of voices including Max Tegmark and Eliezer Yudkowsky calling attention to the risks from rapid expansion of frontier AI systems without appropriate safety protocols or socio-political preparation.

Further, these AI developments renewed attention to an issue long debated in philosophy, computer science, and cognitive science: AI sentience. Blake Lemoine, who had been an engineer working on Google’s LLM, LaMDA, speculated that LaMDA was self-aware. This alerted the public to the near-future possibility of living with sentient AIs, although scientists and philosophers generally would not agree LaMDA is sentient. As LLMs are trained on larger portions of human language and history, their behaviors have become more complex, prompting increased interest in the possibility of digital minds and a need for research on public perceptions of digital minds.

The Sentience Institute tracks U.S. public opinion on the moral and social perception of different types of AIs, particularly sentient AIs, in the nationally representative Artificial Intelligence, Morality, and Sentience (AIMS) survey. In 2023, a supplemental AIMS survey was conducted on responses to current developments in AI with a focus on attitudes towards AI safety, AI existential risks (x-risks), and LLMs. With advanced AI systems like ChatGPT becoming more accessible to the public, it is crucial for policymakers to grasp the public's perspectives on certain issues, such as:

- The pace of AI advancement

- When advanced AI systems will emerge

- Catastrophic and existential risks to humanity and moral concern for AI welfare

- Human-AI integration, enhancement, and hybridization

- Policy interventions such as campaigns, regulations, and bans

This would empower policymakers to:

- Gauge the level of policy assertiveness needed to align with public concerns.

- Craft policies that are viable given public acceptability.

- Build trust in governance by addressing risks that resonate with the public's concerns.

We highlight five specific insights from the AIMS survey:

- The U.S. public is seriously concerned about the pace of AI development.

  - 49% believe the pace of AI development is too fast.

- The U.S. public seems to expect that very advanced AI systems will be developed within the next 5 years.

  - The median predictions for when Artificial General Intelligence (AGI), Human-Level Artificial Intelligence (HLAI), and Artificial Superintelligence (ASI) will be created are 2 years, 5 years, and 5 years.

- The U.S. public is concerned about the risks AI presents to humanity, including risks of extinction, and concerned about risks of harm to the AIs themselves.

  - 48% are concerned that, “AI is likely to cause human extinction.”

  - 52% are concerned about “the possibility that AI will cause the end of the human race on Earth.”

  - 53% support campaigns against the exploitation of AIs.

  - 43% support welfare standards that protect the wellbeing of AIs.

  - 68% agree that we must not cause unnecessary suffering for LLMs if they develop the capacity to suffer.

- Specific policy tools designed for increasing AI safety are supported by a majority of the U.S. public.

  - 71% support public campaigns to slow AI development.

  - 71% support government regulation that slows AI development; when asked instead about opposition, 37% oppose it.

  - 63% support banning the development of artificial general intelligence that is smarter than humans.

  - 64% support a global ban on data centers that are large enough to train AI systems that are smarter than humans.

  - 69% support a six-month pause on AI development.

- The U.S. public is generally opposed to the development of AI sentience and human-AI integration.

  - 70% support a global ban on the development of sentience in AIs.

  - 71% support a global ban on the development of AI-enhanced humans.

  - 72% support a global ban on the development of robot-human hybrids.

  - 59% oppose uploading human minds to computers (i.e., “mind uploading”).

1. The U.S. public is seriously concerned about the pace of AI development.

Key AIMS Finding: 49% believe the pace of AI development is too fast.

In 2023, 49% of AIMS respondents believed that AI development is too fast. Only 2.5% thought that AI development is too slow, 19% were uncertain, and 30% believed the pace of development is fine. Alongside the Artificial Intelligence Policy Institute’s (AIPI) poll result that 72% of Americans prefer slowing down the development of AI and 8% prefer speeding it up (and 72% and 15% in the version of that question with more detail), there is clear evidence of the U.S. public’s support for slowing AI development.
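As a rough illustration of the arithmetic behind these figures, the sketch below tabulates the shares quoted above and computes how many respondents want slower development for each one who wants faster development. The dictionary and function names are hypothetical, and the numbers are only the rounded percentages reported in this post, not the underlying survey data.

```python
# Rounded shares (percent) quoted in the text for the AIMS 2023 pace question.
aims_pace = {"too fast": 49.0, "fine": 30.0, "uncertain": 19.0, "too slow": 2.5}

# AIPI poll shares (percent) quoted in the text; other response options are not reported here.
aipi_short = {"slow down": 72.0, "speed up": 8.0}
aipi_detailed = {"slow down": 72.0, "speed up": 15.0}


def total_share(shares):
    """Sum of the reported shares; may differ slightly from 100 because of rounding."""
    return sum(shares.values())


def ratio(shares, numerator, denominator):
    """How many respondents chose `numerator` for each one who chose `denominator`."""
    return shares[numerator] / shares[denominator]


aims_total = total_share(aims_pace)
aims_ratio = ratio(aims_pace, "too fast", "too slow")
aipi_short_ratio = ratio(aipi_short, "slow down", "speed up")
aipi_detailed_ratio = ratio(aipi_detailed, "slow down", "speed up")

print(f"AIMS shares sum to {aims_total:.1f}%")
print(f"AIMS: {aims_ratio:.1f} 'too fast' respondents per 'too slow' respondent")
print(f"AIPI (short question): {aipi_short_ratio:.1f} to 1 in favor of slowing down")
print(f"AIPI (detailed question): {aipi_detailed_ratio:.1f} to 1 in favor of slowing down")
```

The ratios are only as precise as the rounded percentages, so they are best read as orders of magnitude rather than exact estimates.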

Leading AI experts are also concerned about the pace of AI development. An open letter advocating for pausing the development of AIs more powerful than GPT-4 spearheaded by the Future of Life Institute was signed by over 33,700 experts. An open statement by the Center for AI Safety (CAIS) attracted a wide range of prominent signatories to its simple statement that, “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

To explain the survey and real-world evidence of widespread concern, we would place the factors that drive public opinion and expert concern into four categories: science fiction and mass media, past issues, risks of rapid integration into human socio-political institutions, and the problematic behavior of current AI systems.

- On science fiction: Public opinion on AI is presumably shaped by the many famous portrayals of dangerous AI in science fiction, such as The Terminator and 2001: A Space Odyssey. Nonexperts get most of their information about science concepts from mass media. Narratives and narrative-style mass media increase comprehension and recall, and are engaging and persuasive.

- On past issues: There have already been clear societal harms from earlier generations of AI. For example, social media recommender algorithms have played a significant role in the vast spread of misinformation and disinformation. AIs deployed in other contexts (e.g., healthcare, judicial sentencing, policing) have exhibited bias, which is partly a result of the disparities embedded within their training data. The ethical deployment of AI also has been questioned given some uses to repress populations lacking protections against these abuses.

- On rapid integration: Awareness of foundation models’ (e.g., ChatGPT, DALL-E) powerful capabilities is quickly spreading among the public, industry, and elites in powerful institutions. For example, the U.S. General Services Administration held a competition to find the best ways to integrate AI into government agencies. Although the integration of AI into organizational decision-making can yield benefits (e.g., efficiency), it presents risks as well. In Young et al. (2021), we proposed that the risks from automating tasks within organizations include AI exuberance, quantification bias, and organizational value misalignment. As AIs become more capable, they may be able to complete more general sets of tasks and may be used before they are effective substitutes for the nuances of human judgment. This is particularly challenging for holding governments democratically accountable and can set the stage for increases in “administrative evil,” which we view as the infliction of unnecessary suffering in public administration. For example, a worthy non-traditional applicant to a social benefits program might be denied because an AI makes decisions in a way tuned to the more common, traditional applicants and lacks the nuance to correctly categorize the non-traditional applicant. Job loss is another risk of organizational restructuring based on increased awareness and use of AI. Organizational restructuring that puts job performance evaluation, monitoring, and hiring or firing decision-making in the purview of AIs rather than humans is opposed by a majority of Americans, according to the Pew Research Center. American workers expect automation to shape the future of work for the worse. However, workers in general, and especially in emerging markets, feel more positive than negative about automation and job loss.

- On problematic behavior: AI capabilities have significantly increased on many dimensions, and they are exhibiting more evidence of having mental faculties that constitute digital minds. Generative AIs sometimes behave in harmful ways that have been intensified in the new generation of deep neural networks. Some generative AI behaviors challenge assumptions that AIs will be benign tools that are completely under human control. The pace of AI development suggests a race to the bottom as AI labs work to increase the capacities of AIs and publicly release their models in the marketplace to gain first mover advantages and a stronger reputation.

2. The U.S. public seems to expect that very advanced AI systems will be developed within the next 5 years.

Key AIMS Finding: The median predictions for when Artificial General Intelligence (AGI), Human-Level Artificial Intelligence (HLAI), and Artificial Superintelligence (ASI) will be created are 2 years, 5 years, and 5 years.

Three thresholds of AI development have particularly captured the attention of experts and forecasters: (1) Artificial General Intelligence (AGI), (2) Human-Level Artificial Intelligence (HLAI), and (3) Artificial Superintelligence (ASI). AIMS respondents forecasted the emergence of these advanced AIs, providing a novel third perspective alongside those of experts and forecasters. The AIMS survey data suggest that the median U.S. adult expects AI to first become general in its capabilities and shortly thereafter to show human-level capabilities and superhuman intelligence. The U.S. public seems to expect these changes soon.

- Artificial General Intelligence (AGI) timelines: The modal estimate for when AGI will be created is 2 years from 2023. Thirty-four percent of the U.S. public believes that AGI already exists, and only 2% believe AGI will never happen when asked, “If you had to guess, how many years from now do you think that the first artificial general intelligence will be created?”

- Human-Level Artificial Intelligence (HLAI) timelines: The modal estimate for when HLAI will be created is 5 years from 2023. Twenty-three percent of the U.S. public believes that HLAI already exists, and only 4% believe it will never happen when asked, “If you had to guess, how many years from now do you think that the first human-level AI will be created?”

- Artificial Superintelligence (ASI) timelines: The modal guess for when ASI will be created is 5 years from 2023. Twenty-four percent of the U.S. public believes ASI already exists, and only 3% believe it will never happen when asked, “If you had to guess, how many years from now do you think that the first artificial superintelligence will be created?”

Definitions were not supplied for these terms. Instead, the AIMS survey relied on the general public’s understanding of and intuition about the meanings of these terms in order to create a baseline of data that can be built on in the future as discourse evolves. Many AI terms have no agreed upon definition among experts, forecasters, or the general public. Even with a particular definition of capabilities, there is significant disagreement among researchers on what empirical evidence would show those capabilities.

With these qualifications in mind, the public’s timelines are shorter than both current prediction markets and recent expert surveys (see below), both of which have intimated specific definitions and received more attention. The public’s expectations matter insofar as they signal what people care about and whether or not there would be public support for regulation or legislative change. Public opinion has been documented as having a substantial impact on policymaking and Supreme Court decisions. In the context of AI, if people expect AGI, HLAI, or ASI to emerge in the next 5 years, policymakers should expect greater public support for AI-related legislation in the near future. The short timelines may also be reflected in policymakers’ personal timelines, also increasing their prioritization of AI issues.

Forecasters offer another frequently cited set of timelines to advanced AI. Metaculus, one of the most prominent forecasting platforms, hosts ongoing predictions for AGI. As of December 2023, the ongoing predictions from the Metaculus community put AGI in August 2032, or 9 years from 2023, though there is much to be contested in how it operationalizes AGI, and it is unclear what incentives Metaculus users have for accurate forecasts. Nonetheless, at face value, this is about 4.5 times the U.S. public’s median timeline of 2 years. Other Metaculus predictions forecast weak AGI in October 2026 (close to the 2 years to AGI expected by the public) and ASI 2 years after that (i.e., in approximately 5 years from 2023; yes, this implies ASI 4 years before AGI, even though scholars typically consider AGI to be weaker than ASI). This is just one example of the challenges with forecasting such events in practice, but we nonetheless include these estimates for context. Ongoing predictions about weakly general AI systems put weak AGI emergence in March 2026, approximately 3 years from 2023, and very close to the U.S. public’s expectation for AGI. Another Metaculus question suggests a 95% chance of “human-machine intelligence parity before 2040,” which could be considered an HLAI prediction for comparison to the AIMS results. However, as another example of the qualification necessary with such estimates, this operationalization only requires that a machine pass a Turing-like test with three human judges, which could occur even if, say, 50 such tests are run and one set of judges happens to be easily persuaded.

Question framing and terminology in questions shape the answers of experts and the general public alike. Metaculus forecasters’ varying timelines in response to the differently framed questions about weak AGI are a case in point. With the framing of our survey (i.e., just sharing terms with no explanation), the U.S. public expects AGI first, then HLAI and ASI at approximately the same time, but the wording of the Metaculus questions suggests HLAI is easiest, followed by AGI and then ASI.
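To make the comparison between the Metaculus dates and the public's medians easier to follow, here is a minimal sketch of the arithmetic. It assumes horizons are measured in whole calendar years from 2023, and all variable names are hypothetical; the dates are those quoted above, not a live pull from Metaculus.

```python
BASE_YEAR = 2023  # assumption: all horizons are counted in calendar years from 2023

# Median public forecasts quoted from the AIMS survey (years from 2023).
public_median = {"AGI": 2, "HLAI": 5, "ASI": 5}

# Metaculus community forecasts as quoted in the text (calendar year only).
metaculus_year = {
    "AGI": 2032,       # quoted as August 2032
    "weak AGI": 2026,  # quoted as October 2026 (a second question is quoted as March 2026)
}
# The text describes the ASI forecast as 2 years after weak AGI.
metaculus_year["ASI"] = metaculus_year["weak AGI"] + 2


def years_out(year, base=BASE_YEAR):
    """Whole calendar years between the base year and a forecast year."""
    return year - base


horizons = {name: years_out(year) for name, year in metaculus_year.items()}

# How many times longer the Metaculus AGI horizon is than the public's median.
agi_ratio = horizons["AGI"] / public_median["AGI"]

# How many years the implied ASI date falls before the AGI date.
asi_lead = metaculus_year["AGI"] - metaculus_year["ASI"]

for name, horizon in horizons.items():
    print(f"Metaculus {name}: {metaculus_year[name]} ({horizon} years from {BASE_YEAR})")
print(f"Metaculus AGI horizon is {agi_ratio:.1f}x the public median")
print(f"Implied ASI date precedes the AGI date by {asi_lead} years")
```

Counting in whole calendar years ignores the quoted months, which is why the weak AGI forecast comes out at 3 years here even though the text describes it as close to the public's 2-year median.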

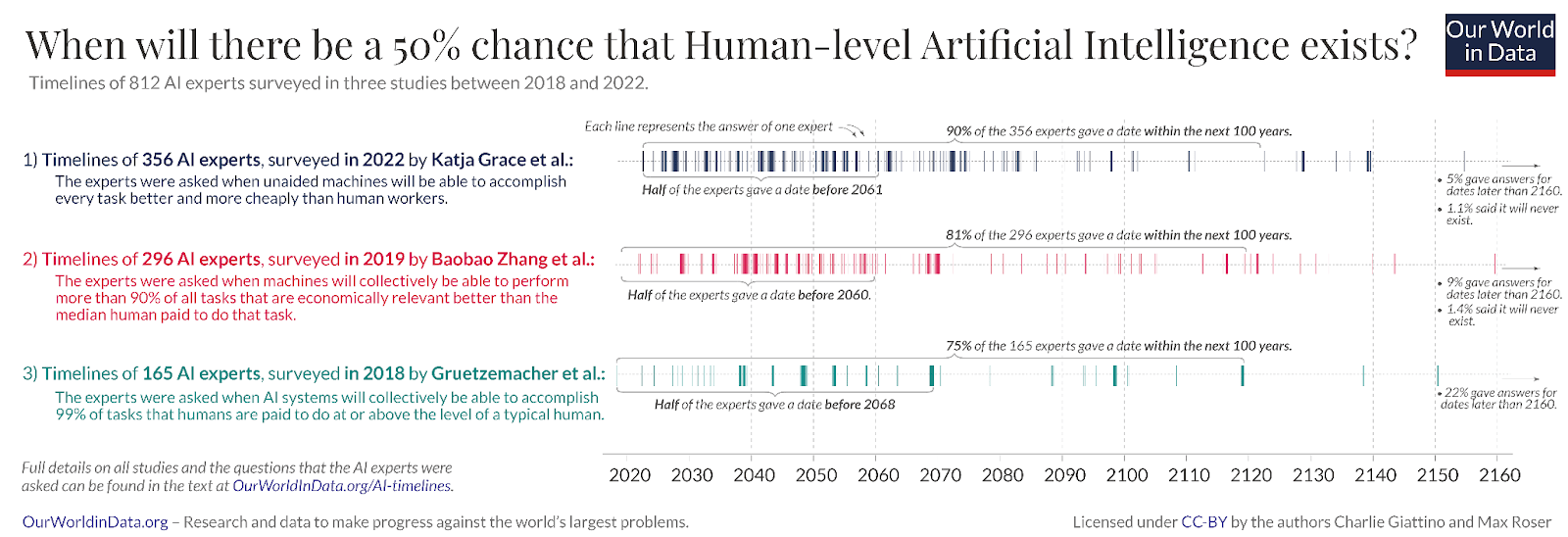

In addition to Metaculus and public timelines, other surveys include the three covered by Roser (2023), which are also documented in Figure 1.

- 2018: One-hundred and sixty-five AI experts were asked when AI systems will collectively be able to accomplish 99% of tasks that humans are paid to do at or above the level of a typical human. Half of the experts gave a date before 2068 (median: 45 years).

- 2019: Two-hundred and ninety-six AI experts were asked when machines will collectively be able to perform more than 90% of all tasks that are economically relevant better than the median human paid to do that task. Half of the experts gave a date before 2060 (median: 37 years).

- 2022: Three-hundred and fifty-six AI experts were asked when unaided machines will be able to accomplish every task better and more cheaply than human workers. Half of the experts gave a date before 2061 (median: 38 years).

Figure 1: Expert Timelines for Human-Level Artificial Intelligence from 2018-2022 Surveys

Note: This image was sourced from Our World in Data.

A 2023 survey of 2,778 AI researchers found an estimated 50% chance that autonomous machines would outperform humans in every possible task by 2047 and a 10% chance by 2027 if science were to continue undisrupted. A majority, 70% of respondents, indicated their desire to see more research on minimizing risks of AI systems.
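One detail that is easy to miss is that the "median: N years" figures for the expert surveys are counted from 2023 rather than from each survey's own year. The sketch below, with hypothetical names, reproduces those figures from the quoted dates and sets them beside the public's 5-year HLAI median. The surveys operationalize advanced AI differently, so the comparison is indicative only.

```python
BASE_YEAR = 2023  # horizons are counted from 2023, not from each survey's year

# Year by which half of respondents (or a 50% chance) expected the milestone,
# as quoted in the text. Each survey defines the milestone differently.
expert_median_year = {
    "2018 survey (165 experts)": 2068,
    "2019 survey (296 experts)": 2060,
    "2022 survey (356 experts)": 2061,
    "2023 survey (2,778 researchers)": 2047,
}

PUBLIC_HLAI_MEDIAN = 5  # AIMS public median for HLAI, in years from 2023

# Years from 2023 until each survey's median date.
expert_horizon = {
    name: year - BASE_YEAR for name, year in expert_median_year.items()
}

# How much longer each expert horizon is than the public's HLAI median.
gap_vs_public = {
    name: horizon - PUBLIC_HLAI_MEDIAN for name, horizon in expert_horizon.items()
}

for name, horizon in expert_horizon.items():
    print(f"{name}: {horizon} years from {BASE_YEAR} "
          f"({gap_vs_public[name]} years beyond the public HLAI median)")
```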

3. The U.S. public is concerned about the risks AI presents to humanity, including risks of extinction, and concerned about risks of harm to the AIs themselves.

Key AIMS Findings: 48% are concerned that, “AI is likely to cause human extinction.” 52% are concerned about “the possibility that AI will cause the end of the human race on Earth.” 53% support campaigns against the exploitation of AIs. 43% support welfare standards that protect the wellbeing of robots/AIs. 68% agree that we must not cause unnecessary suffering for LLMs if they develop the capacity to suffer.

AI development towards more capable, generally skilled, intelligent systems is concerning to both the U.S. public and AI experts. Fast AI development brings significant risks to humanity and to the general existential trajectory of humanity and AIs. Additionally, the ongoing harms that the unreflective use of current AI systems bring to society (e.g., data privacy, discrimination in healthcare diagnosis) may be exacerbated by development that is too fast. Moreover, humans face threats to their long-term survival and potential to build a harmonious, integrated human-AI society—which may include various hybrid or uploaded beings. We believe the U.S. public is accurately detecting the presence of potential long-term catastrophic risks (e.g., simulations and suffering) and four near-term catastrophic risks that AI and ML safety researchers have recently classified: malicious use, AI race, organizational risks, and rogue AI. Current frontier AI models pose these not mutually exclusive risks and already enact harms across the first three.

- Malicious use: Future intentional malicious uses, for example by malevolent actors, including governments, terrorists, and profit-seeking corporations, are an ongoing and long-term risk. Even seemingly neutral uses of LLMs such as Gita chatbots that interpret ancient religious texts can incidentally spread misinformation and provoke violence.

- AI race: The AI arms race to produce and release increasingly capable and powerful LLMs and other advanced AI systems may directly lead developers to ignore public and expert concerns. The corporations and governments engaged in the AI arms race seem at risk of overlooking the negative externalities of AI development that are passed on to the public. For example, unbounded iterative AI development strategies threaten public harms from interactions with AI systems that haven’t been properly vetted. The public is concerned about this dynamic. More people distrust than trust the companies building LLMs. Only 23% of respondents in the AIMS supplemental survey trusted LLM creators to put safety over profits, and only 27% trusted AI creators to control all current and future versions of an AI. The public has additional reason to worry about this dynamic because corporations and governments have shifted harms onto them in other contexts, particularly climate change.

- Organizational risks: Another category of risks that frontier AI systems pose is organizational risks. These risks stem from unsafe or insecure organizational cultures, environments, or power structures. Organizational risks include outcomes such as catastrophic accidents that destroy the organization, sensitive information about the AI or AI safety tests being leaked to malicious actors, and a lack of any safety testing due to a lack of concern for AI safety. Hendrycks and colleagues (2023) highlighted that (1) catastrophes are hard to avoid even when competitive pressures are low, (2) AI accidents could be catastrophic, (3) it often takes years to discover severe flaws or risks, and (4) safety washing can undermine genuine efforts to improve AI safety.

- Rogue AI: Rogue AI risks occur when a misaligned or unaligned AI model has significant autonomy to make decisions and act with little human oversight. In this context, advanced AIs could deliberately alter themselves to take control over their own goals, act on their initial misalignment in an unexpected way, engage in deception, or exhibit goal drift as a result of adapting to changes in their environments. Rogue AIs may lack inner alignment or outer alignment. That is, AI systems can be misaligned both with their instructions and with human values.

In addition to concern about AI risks to humans, the U.S. public showed surprisingly high concern for the welfare of AIs in the AIMS supplemental survey. The majority of Americans supported campaigns against the exploitation of AIs and agreed that we must not cause unnecessary suffering for LLMs with the capacity to suffer. Forty-three percent of Americans supported welfare standards that protect the wellbeing of robots or AIs. This suggests that the public is concerned with ensuring a broadly good future, in which humans survive and thrive, and in which the welfare of AIs is taken seriously. Critically, the U.S. public perceives some moral obligations towards AIs already, supporting recent empirical and theoretical perspectives on the extension of moral consideration to at least some AIs.

4. Specific policy tools designed for increasing AI safety are supported by a majority of the U.S. public.

Key AIMS Findings: 71% support public campaigns to slow AI development. 71% support government regulation that slows AI development; when asked instead about opposition, 37% oppose it. 63% support banning the development of artificial general intelligence that is smarter than humans. 64% support a global ban on data centers that are large enough to train AI systems that are smarter than humans. 69% support a six-month pause on AI development.

AI experts and the U.S. public are concerned about the pace of AI development and the possibility of catastrophic outcomes for humans and AIs alike. Nevertheless, AI experts, prediction markets, and the public expect even more powerful and human-like AI systems to be developed in the near future. Given this, what, if anything, should be done about it?

We need a toolkit of strategies to address the complex interests involved in the promotion and development of safe AI. Early in 2023, the Future of Life Institute (FLI) prominently called for pausing the training of frontier models significantly more capable than current models. Its open letter asked AI labs to implement a six-month voluntary pause on large-scale experiments until we better understand current frontier model behaviors. In the days following its publication, Yudkowsky (2023) argued that the pause letter understates the problem: “If somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter.” Yudkowsky further suggested treating the development of AI like the development of nuclear weapons, a call echoed by CAIS. Even before the now-famous “pause letter,” policy experts were calling for bans on harmful applications of AI, compensation for those harmed by AI, licensing regimes to limit the spread of harmful AIs, and strict liability of AI companies for the harms caused by their products. These calls have intensified. Acting on them through both research and legislation is necessary, although legislation tends to lag behind technological development.

The AIMS supplemental survey data and other survey datasets suggest that the U.S. public is broadly supportive of a variety of safety policies that protect humanity from the development of powerful, advanced, and potentially uncontrollable frontier AI models. More than two-thirds of the U.S. public supported pausing AI development (69%) and public campaigns advocating slowdowns (71%). This level of support for regulation is even more striking given that the same AIMS data showed the least amount of trust placed in the governmental part of the AI ecosystem when compared to companies, engineers, AI output, training data, and algorithms.

The U.S. public supports outright bans on the development of some technologies. More than half of respondents (63%) supported banning the development of AGI that is smarter than humans. More than two-thirds (70%) supported banning the development of sentient AIs and 64% supported a ban on data centers that are large enough to train AI systems that are smarter than humans. Banning the development of a particular technology is typically considered a punitive and blunt tool, but it is one that the U.S. public is willing to consider for advanced AI technologies, and it is a relatively simple proposal on which to elicit public opinion. This suggests that the public may be more open to disruptive interventions than commonly assumed.

5. The U.S. public is generally opposed to the development of AI sentience and human-AI integration.

Key AIMS Findings: 70% support a global ban on the development of sentience in robots/AIs. 71% support a global ban on the development of AI-enhanced humans. 72% support a global ban on the development of robot-human hybrids. 59% oppose uploading human minds to computers (i.e., “mind uploading”).

As more complex, capable, and intelligent AIs emerge, some experts anticipate radical developments in: (1) de novo artificial sentience, and (2) a closer coupling with humans, leading to AI-enhanced humans or cyborgs (e.g., robot-human hybrids).

Artificial sentience comes with a host of conceptual and terminological difficulties. The Sentience Institute has begun examining some of these challenges: Ladak (2021) assessed potential methods for evaluating sentience in AI systems, and Pauketat (2021) considered the terminology of artificial sentience, providing definitions for “artificial sentience” and referencing Graziano’s (2017) and Reggia’s (2013) definitions of “artificial consciousness”:

- Artificial sentience:

  - “artificial entities with the capacity for positive and negative experiences, such as happiness and suffering”

  - “the capacity for positive and negative experiences manifested in artificial entities”

- Artificial consciousness:

  - “a machine that contains a rich internal model of what consciousness is, attributes that property of consciousness to itself and to the people it interacts with, and uses that attribution to make predictions about human behavior. Such a machine would ‘believe’ it is conscious and act like it is conscious, in the same sense that the human ‘machine’ believes and acts”

  - “computational models of various aspects of the conscious mind, either with software on computers or in physical robotic devices”

Bullock and colleagues (2023) recently explored the consequences of machines evolving greater cognitive capabilities, whether or not machine consciousness would arise, and, if so, whether this consciousness would resemble human consciousness. This remains a challenging and unanswered question. Regardless of the conceptual challenges associated with “sentience” and “consciousness,” the U.S. public is opposed to the development of artificial sentience in this context. A majority of those surveyed in AIMS (70%) supported a global ban on the development of sentience in robots or AIs.

Leading AI and consciousness experts are also concerned about the creation of sentient AI. An open letter by the Association for Mathematical Consciousness Science in 2023 called for the inclusion of consciousness research in the development of AI to address ethical, legal, and political concerns. Philosopher Thomas Metzinger has called for a moratorium until the year 2050 on the development of “synthetic phenomenology” based on the risk of creating new forms of suffering.

AI-enhanced humans and robot-human hybrids are likely to span a continuum. On one end are humans who are not integrated with any AI or robotic components. On the other end are the disembodied AIs that are not integrated with any human components or direct physical capabilities. This continuum could include current cases such as humans who use AI-powered assistive devices to hear or move (e.g., AI-powered hearing aids, AI-powered prosthetic limbs). Science fiction is filled with examples of AI-enhanced humans and robot-human hybrids (e.g., Robocop, Battlestar Galactica) that exemplify physically integrated human-AI/robotic systems. These systems could manifest in other ways, such as AI tools on smartphones to extend our limited cognitive capacities (e.g., extended memory capacity, increased processing speed for calculations) and the robotic exoskeletons designed for soldiers. Similarly, mind uploads, a possible future technology achieved through methods such as whole brain emulation, might also be classified as human-AI hybrids or AI-enhanced humans. We found that over half of Americans (59%) opposed mind uploading.

As with artificial sentience, there is no general consensus on the definitions of “AI-enhanced humans” and “robot-human hybrids.” It is unclear what the general public has in mind when they respond to questions asking about integration, enhancement, and hybridization. Without imposing any particular definition, the AIMS supplemental survey showed that 71% of Americans support a global ban on the development of AI-enhanced humans and 72% support a global ban on the development of robot-human hybrids. Presumably, the general public is thinking about the futuristic cases of integration and hybridization rather than AI-powered assistive devices like hearing aids or prosthetic limbs when they endorse bans on integrative technologies. We should scrutinize public support for bans on integrative technologies because this might reflect a generalized perceived risk of all advanced AI technologies. It might also arise from substratist prejudice and fears over substrate-based contamination. More research is needed before policy directives are made.

People may have developed something of a precautionary principle towards advanced AI and its further advancement. People may be responding to threats to human distinctiveness and acting to preserve their perceived ownership over faculties like sentience that are viewed as distinctly, or innately, human. Threats to human distinctiveness or uniqueness might also provoke fears that advanced AIs, sentient AIs, or human-AI hybrids will supersede human control and change human autonomy. In parallel to these threats, concern over advanced AI technologies may stem from a form of status quo bias in which humans prefer to maintain their current situation, regardless of whether change would be harmful or beneficial.

In an AI landscape that is rapidly evolving, public opinion provides policymakers with an important anchor point. The AIMS survey offers insights into the U.S. public’s perspective at this critical juncture for AI governance. Policymakers need to act decisively, but thoughtfully, to implement policies that address the public's pressing concerns regarding advanced AI. Policymakers also need to be aware that public opinion shapes policymaking and the acceptance of those policies. If policies appear too lenient or too extreme compared to public opinion, backlash could occur that harms progress towards effective governance and building a harmonious human-AI future. Policymakers need to strike a balance between assertive risk mitigation and maintaining public trust through transparency, democratic process, and reasonable discourse.
