This is a linkpost for https://www.sentienceinstitute.org/blog/moral-spillover-in-human-ai-interaction

Written by Katerina Manoli and Janet Pauketat. Edited by Jacy Reese Anthis. Many thanks to Michael Dello-Iacovo, Merel Keijsers, Ali Ladak, and Brad Saad for their thoughtful feedback.

Summary

Moral spillover is the transfer of moral attitudes or behaviors from one setting to another (e.g., from one being to another, from one behavior to a related behavior, from one group today to related groups in the future). Examples include the transfer of anti-slavery activism to animal rights activism (Anthis and Anthis 2017)[1], children’s moral consideration of a biological dog to a robot dog (Chernyak and Gary 2016), and household energy conservation to water conservation (Liu et al. 2021). Moral spillover seems to be an important driver of moral circle expansion. Here, we review moral spillover research with a focus on human-AI interaction. Psychological factors, such as pre-existing attitudes towards AIs, as well as AI attributes, such as human-likeness and social group membership, could influence moral spillover between humans and AIs. Spillover of moral consideration to AIs might be hindered by factors such as the intention-action gap and might be facilitated by interventions such as human-AI contact and promoting a core belief that the moral consideration of AIs is important. We conclude with future research suggestions to examine how pre-existing attitudes affect moral spillover, the potential backfiring of spillover interventions, how spillover affects AIs on a spectrum of similarity to humans, and how temporal spillover functions to shape moral consideration of future AIs, especially based on core beliefs about AI.

Introduction

The well-being of future sentient artificial intelligences (AIs)[2] depends in part on whether moral consideration transfers to them from consideration already extended to other beings, such as humans, nonhuman animals, and AIs who already exist[3]. The transfer of moral attitudes and behaviors, such as moral consideration, from one setting to another can be defined as moral spillover[4]. Moral spillover may be an important part of moral circle expansion, both for the circles of individual humans and of human societies. For example, a 2017 Sentience Institute report on the 1800s anti-slavery movement found that the consideration anti-slavery activists had for humans transferred to animals, making them some of the first animal rights activists.

Given the rapid growth of AI application and sophistication and an increasing likelihood that the number of future sentient beings will be vast, here we analyze whether the spillover of moral consideration is feasible or likely between beings that are granted (at least some) consideration (e.g., humans, animals) and future AIs. In psychology and human-robot interaction (HRI) studies, AIs are often used to improve the moral treatment of humans, suggesting that moral consideration can transfer from AIs to humans. For example, positive emotions from being hugged by a robot spilled over to increase donations to human-focused charities. Some research suggests that the transfer of moral consideration from humans or nonhuman animals to AIs is also possible. A 2016 study showed that 5- and 7-year-old children with biological dogs at home treated robot dogs better than children without biological dogs. This suggests that moral spillover to AIs might occur incidentally or automatically as part of humans’ social relationships.

We do not know whether or not moral consideration will reliably transfer to and across the diverse range of AIs with different appearances, inner features, or mental capacities who are likely to proliferate in the future. There is little evidence on whether or not moral consideration would transfer from very different beings to AIs who display very few or none of the same features. For instance, moral consideration of a biological dog might spill over to moral consideration of a robot dog, but it may not spill over to moral consideration of a disembodied large language model like GPT-n. This might be especially significant if arguably superficial features such as appearance, substrate, or purpose override the effects of features that grant moral standing (e.g., sentience). For example, a sentient disembodied algorithm or a sentient cell-like robot, who could theoretically benefit from the transfer of moral consideration based on their sentience, might not receive that consideration.

This post reviews research on moral spillover in the context of AIs and examines factors that might influence its occurrence. We suggest that spillover might foster the moral consideration of AIs and call for more research to investigate spillover effects on a range of current and future AIs.

Types of spillover

In economics, spillovers, also known as externalities, are a natural part of structural theories in which a transaction affects non-participants, such as when an event in one economy affects another, usually more dependent, economy. In epidemiology, a spillover event occurs when a pathogen transfers from its reservoir population, such as animals, to a novel host, such as humans. In psychology, a spillover occurs when the adoption of an attitude or behavior transfers to other related attitudes or behaviors. Based on this last definition, moral spillover occurs when moral attitudes or behaviors towards a being or group of beings transfer to another setting (e.g., to another being or group of beings). Nilsson et al. (2017) suggested a distinction between three types of spillover:

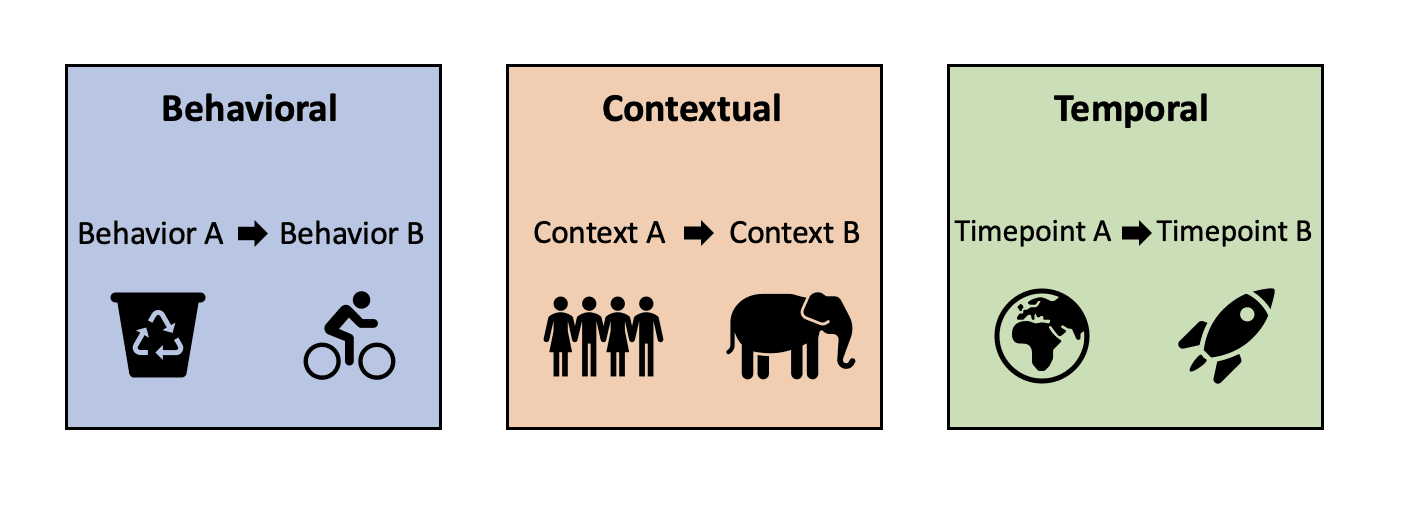

Figure 1: Types of Spillover

- Behavioral: Behavior A increases the probability of behavior B. Carlsson and colleagues (2021) showed that pro-environmental behaviors, such as conserving water, can spill over to other pro-environmental behaviors, such as conserving electricity. In the context of AI, researchers could ask questions like, can a prosocial[5] behavior towards AIs lead to other prosocial behaviors towards AIs? For example, could greeting an AI assistant spill over to protecting this assistant from mistreatment?

- Contextual: A behavior or attitude in context A increases the probability of this behavior or attitude in context B. Research on contextual AI moral spillover could address questions like, can the moral consideration of sentient beings such as animals spill over to AIs (e.g., Chernyak and Gary 2016)?

- Temporal: A behavior or attitude at time point A increases the frequency of the same or similar behavior or attitude at (a temporally distant) time point B. Elf et al. (2019) showed that the frequency of pro-environmental behaviors, such as buying eco-friendly products, increased a year after their initial adoption. Temporal spillover may be especially important for AIs given that they are likely to proliferate in the future. Increasing the moral consideration of AIs now might increase the moral and social inclusion of sentient AIs hundreds of years in the future.

Behavioral, contextual, and temporal spillovers can occur at the same time. These kinds of spillovers can also occur at multiple levels (e.g., from individual to individual, from individual to group, from group to group). Please see Table 1 in the original post for examples.

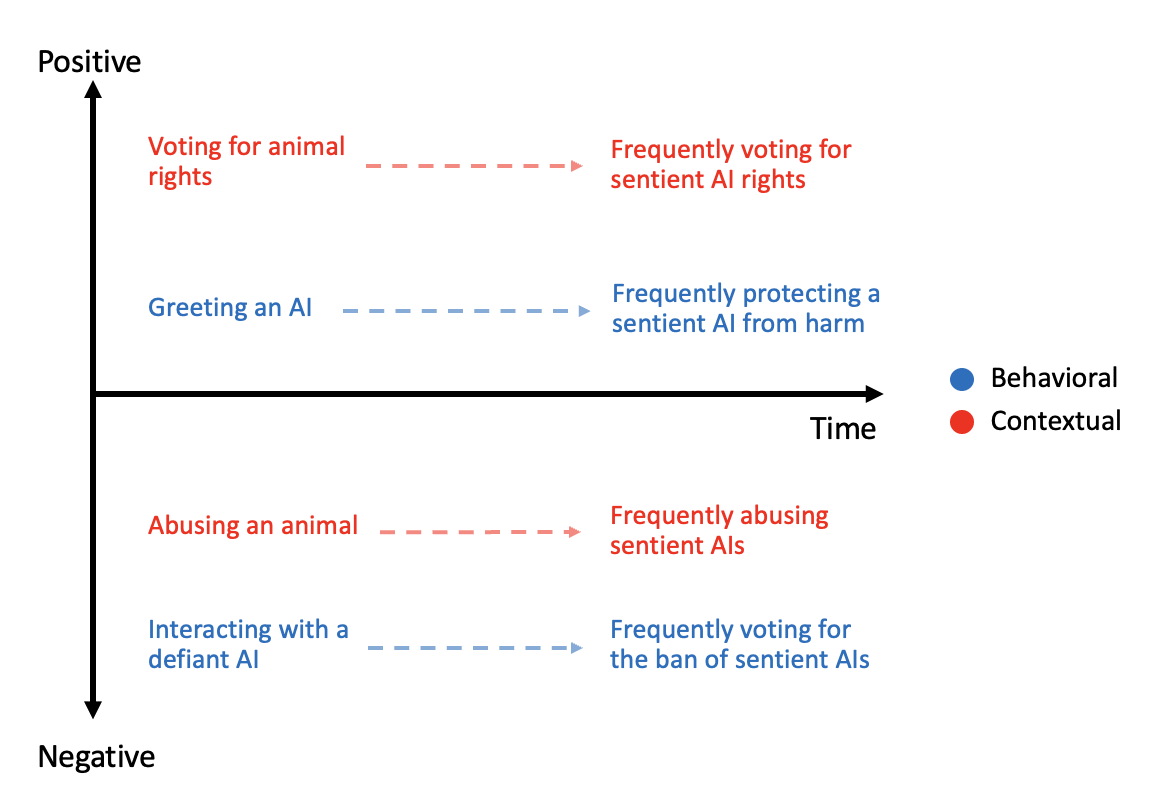

The examples outlined in Table 1 involve the transfer of positive or prosocial attitudes and behaviors. However, negative attitudes and behaviors can also spill over. This transfer can also be behavioral (e.g., ignoring an AI’s plea for help leads to turning off an AI without their consent), contextual (e.g., being rude to a household AI increases the probability of being rude to a workplace AI), or temporal (e.g., intentionally damaging one AI now leads to frequently damaging AIs at a later point). For instance, previous research has shown that feeling threatened by a highly autonomous AI increases negative attitudes toward all AIs. See Figure 2 for a possible taxonomy of how these spillover types might intersect.

Figure 2: Possible Taxonomy of Spillover Types

In the moral domain, the transfer of positive attitudes and behaviors can be associated with increased moral consideration between different groups and settings, like when the moral consideration of a biological dog increased the moral consideration of a robot dog. The transfer of negative attitudes and behaviors might lead to decreased moral consideration. Uhlmann et al. (2012) showed that negative evaluations of a criminal spill over to their biological relatives, who are then more likely to be punished by law than non-biological relatives. The transfer of negative attitudes and behaviors can pose a significant risk to the well-being of sentient AIs, especially if they are held to different standards than other entities. Bartneck and Keijsers (2020) showed that a mistreated robot who fights back is perceived as more abusive than a mistreated human who fights back. The transfer of negative attitudes towards one AI to all AIs could decrease the moral consideration of AIs and obstruct their inclusion in the moral circle.

What factors shape the likelihood of moral spillover?

Whether or not spillover occurs depends on factors such as personality traits and social context. The impact of these factors on spillover has been studied largely in the context of environmental behavior. The same factors are likely valuable for understanding when and how moral spillover applies to AIs. Table 2 in the original post summarizes some factors identified in previous research.

One of the more studied factors shaping whether or not spillover occurs is pre-existing attitudes. If pre-existing attitudes towards a spillover target are negative, then the transfer of negative attitudes and behaviors is more likely. If pre-existing attitudes are positive, then the transfer of positive attitudes and behaviors is more likely. Below are three notable studies:

- Henn et al. (2020) showed that pre-existing attitudes were the driving force behind spillovers in pro-environmental behavior across two separate cohorts: pre-existing positive attitudes towards the environment led to greater spillover between different kinds of pro-environmental behaviors (e.g., saving electricity and saving water).

- Wullenkord et al. (2016) showed that pre-existing negative emotions towards robots spilled over into more negative emotions towards robots in general following contact with a robot, compared to a control condition that involved no contact.

- Stafford et al. (2010) showed that pre-existing positive attitudes towards robots became more pronounced after meeting a robot, suggesting that the transfer of positive attitudes from one robot to all robots is easier when positive attitudes towards robots are already present.

What are the implications of human-AI interaction research for moral spillover?

General themes in human-AI interaction have emerged from HRI research: “computers are social actors,” the importance of social group membership, and how human and AI features affect interactions. In this section, we consider what these themes imply for moral spillover between humans and AIs, focusing on their implications for the moral consideration of AIs.

“Computers are social actors”

The “computers are social actors” (CASA) framework suggests that machines with human-like capacities, such as verbal or written communication, interactivity (e.g., a response when a button is pressed), and the ability to perform traditionally human tasks, elicit an automatic attribution of social capacities. This affects responses to them. For example, Lee et al. (2019) showed that people felt more positively towards autonomous vehicle voice agents who conformed to social role stereotypes (i.e., informative male voice and social female voice) compared to agents who did not.

What does CASA imply for moral spillover? Moral spillover might be automatic between humans and AIs because of humans’ propensity to think of AIs as social actors. Moral spillover might occur more for AIs with human-like capacities, given that such features are blatant CASA cues. Some studies using the CASA framework have shown the transfer of consideration from AIs to humans. For example, Peter et al. (2021) showed that people who interacted with a prosocial robot, compared to a less prosocial robot, gave more resources to other humans. Whether or not similar spillover effects emerge from humans towards AIs is an open question. For instance, the spillover of consideration from humans to AIs could be hindered for AIs who do not display enough human-like capacities (e.g., communication, emotional expression) to trigger CASA attributions.

Social group membership

Social group membership will likely impact moral spillover from humans to AIs since AIs are increasingly coexisting with humans in various group settings. Generally, people tend to favor ingroups (i.e., members of the same social group) over outgroups (i.e., members of another social group). Ingroup favoritism increases cooperation with members of the same group, which can be based on features such as ethnicity, religion, gender, and ideology.

Moral consideration for ingroup humans could spill over to ingroup AIs, and consideration for ingroup AIs could spill over to other AIs. Shared social group membership can also translate into refusal to harm an ingroup AI. Sembroski et al. (2017) found that people refused to turn off a robot teammate despite being instructed to do so. Preliminary research has also shown that feelings of warmth towards a robot who was perceived as a friend spilled over to positive attitudes towards robots in general.

Ingroup-based spillover between humans and AIs likely has limits. Savela et al. (2021) showed that humans identified less with a team that was mostly composed of robots, suggesting an underlying “us” versus “them” distinction that threatens the identity, status, or control of the human minority. This could potentially inhibit the spillover of moral consideration between humans and AIs, at least in some cases. If humans and AIs coexist in social groups mostly composed of AIs (e.g., in the workplace), this could lead to the transfer of negative attitudes from the ingroup AIs to AIs in general, which might inhibit the inclusion of AIs in the moral circle.

Human and AI features

Additional research has focused on how the features of AIs (e.g., autonomy, usefulness/ease of operation) and of humans (e.g., cultural values towards AIs, owning AI or robotic devices) shape spillover. This research, summarized in Table 3 of the original post, is likely important to understanding whether or not moral spillover occurs in the context of AIs.

What is the difference between the spillover of actions and intentions?

The transfer of moral consideration could increase intentions to treat AIs morally, but this may not necessarily translate into action. For example, even if someone recognizes the importance of including sentient AIs in the moral circle, they may not act on it by voting for a ban on AI discrimination. There is no guarantee that AIs will be treated well, even if moral consideration transfers to them from other beings. In the short term, humans might not intervene to help a mistreated AI. In the long term, human societies might not implement legislative infrastructure that safeguards the well-being of sentient AIs.

This phenomenon is known as the intention-action gap and has been extensively studied in the context of environmental behavior. A recent meta-analysis showed that a pro-environmental behavior spills over to the intention to adopt similar behaviors but does not necessarily lead to action. For instance, taking shorter showers might spill over to an intention to start conserving electricity, but does not lead to turning off unused devices. In the context of human-AI interaction, most studies have focused on the spillover of intentions rather than behaviors. For example, previous studies have found that intentions to engage in prosocial interactions with all AIs increased after interacting with a single AI. However, positive attitudes do not necessarily transfer to behavior or even behavioral intentions. People might not seek out interactions with AIs even if they feel positively towards them or intend to engage in prosocial interactions.

A synthesis of studies suggested that the extent to which an action is in line with core beliefs (i.e., strong, long-held beliefs about oneself and the world) may underpin the intention-action gap. Intentions that align with core beliefs are more likely to consistently translate into action compared to intentions that are motivated by other factors (e.g., the need to conform to group norms), which might lead to performative or temporary behaviors. In the environmental conservation literature, promoting core beliefs to protect the environment has been shown to increase the spillover between pro-environmental behaviors. Promoting core beliefs about the importance of the moral consideration of AIs is likely important to closing the intention-action gap so that AIs can benefit from the transfer of moral consideration.

What interventions can we use to induce the spillover of moral consideration?

A common technique used in HRI research is to examine attitude change towards robots in general after interaction with a single AI, usually a robot. This technique builds on a rich literature of human intergroup contact interventions that have been shown to effectively promote the spillover of moral consideration and reduction of prejudice between humans[7].

HRI research has shown that human-AI contact might facilitate the transfer of moral consideration in the context of AIs. Stafford et al. (2010) found that prosocial contact with a robot increased positive attitudes toward the robot and towards all robots. More recently, researchers showed that mutual self-disclosure (e.g., sharing personal life details) with a robot increased positive perceptions of all robots. Additionally, contact with a robot caregiver increased acceptance of technology and AIs in general, and social interaction with a robot has been shown to increase positive perceptions of robots, regardless of their features. Positive attitudes after contact with a human-like robot have been shown to spill over to non-human-like robots. In-person interactions with a robot are not required to produce the spillover of positive attitudes and behaviors. Wullenkord and Eyssel (2014) demonstrated that imagining a prosocial interaction with a robot leads to positive attitudes and willingness to interact with other robots.

Another possible intervention to promote the transfer of moral consideration is changing underlying negative attitudes towards AIs. Some research has shown that pre-existing negative attitudes towards AIs can persist even after a positive interaction with an AI, highlighting the significance of promoting positive attitudes towards AIs in order to facilitate the transfer of moral consideration. However, even if an intervention is successful in changing pre-existing attitudes towards AIs, the effective scope of this intervention might be limited to attitudes and intentions rather than behavior because of the intention-action gap.

A more effective intervention might be to target core beliefs. As discussed previously, such beliefs are more likely to overcome the intention-action gap and translate into behavior. Sheeran and Orbell (2000) showed that individuals for whom exercising was part of their self-identity were better at translating intentions to exercise into action than ‘non-exercisers’. In the context of AIs, promoting the self-perception of being someone who cares about the well-being of all sentient beings might make it more likely for the transfer of moral consideration to produce positive behaviors towards sentient AIs. Likewise, holding a core belief that the moral consideration of AIs is important might improve the likelihood that moral consideration will transfer onto AIs.

Some interventions might be less effective in facilitating the transfer of moral consideration. Guilt interventions are ineffective in producing spillover in the environmental domain, and may even backfire. Specifically, inducing guilt over failing to adopt a pro-environmental behavior decreased the likelihood of adopting other pro-environmental behaviors. Even though guilt increases initial intentions to perform a pro-environmental behavior, the feelings of guilt dissipate after the first behavior has been performed and this undermines motivation to engage in similar future behaviors. There is currently no research on guilt interventions in the context of AIs, but the risk of backfiring seems high given these previous findings.

Even though interventions designed around contact, pre-existing attitudes, and core beliefs might be effective in inducing the transfer of moral consideration, to date there is no evidence for a long-term change in the moral consideration of AIs. So far, interventions have focused on short-term behavioral and contextual spillover. It is unknown whether these interventions have long-lasting effects on the moral consideration of AIs.

Another limitation of existing spillover research in the context of AIs is that the interventions conducted so far have been small-scale (i.e., small samples with limited types of AIs), often focused on non-moral purposes (e.g., user experience), and disconnected from each other. Research that uses larger samples, is designed specifically to study moral spillover, and tracks long-term effects (e.g., how moral consideration might transfer from current AIs to future AIs) would provide more insight into how spillover effects might facilitate or hinder the inclusion of AIs in the moral circle.

What future research is needed?

Research on moral spillover towards AIs is in its infancy. More empirical evidence is needed to understand how moral consideration may or may not transfer from humans to AIs and from existing AIs to future AIs.

Future research should investigate how and when positive and negative attitudes and behaviors transfer to AIs, and the consequences this might have for their inclusion in the moral circle. Prosocial interactions—real or imagined—with individual robots have been shown to increase positive attitudes and behaviors towards similar and very different AIs. Developing this research in the moral domain with studies that examine precursors (e.g., pre-existing negative attitudes) to spillover could help us understand positive and negative attitude transfer and the potential backfiring effects of spillover interventions. This matters because interaction with AIs is likely to increase as they become more widespread in society. If positive interactions with existing AIs facilitate moral consideration for AIs in general, future AIs might have a better chance of being included in the moral circle.

How future AIs will appear, think, feel, and behave is uncertain. They are likely to have diverse mental capacities, goals, and appearances. Future AIs could range from highly human-like robots to non-embodied sentient algorithms or minuscule cell-like AIs. The mental capacities of AIs are likely to vary on a spectrum (e.g., from minimally sentient to more sentient than humans). How these diverse future AIs will be affected by moral spillover is unknown. Future research could examine whether there is a minimal threshold of similarity with humans that AIs must meet for moral consideration to transfer. Future studies could also examine whether the inclusion of even one kind of AI in the moral circle spills over to all AIs regardless of their features.

Furthermore, a neglected but important research direction is the examination of temporal spillover. A change in how AIs are treated in the present might shape the moral consideration of AIs in the future. One possible way of investigating temporal spillover would be to use longitudinal studies to examine how present attitudes and behaviors towards AIs affect attitudes and behaviors towards different future AIs. Further research on the effectiveness of interventions that change core beliefs about AIs is likely also an important part of understanding temporal moral spillover.

Expanding the research on moral spillover in the context of AIs has the potential to identify boundaries that shape human-AI interaction and the moral consideration of present AIs. Future research is also likely to broaden our understanding of how the many diverse AIs of the future will be extended moral consideration. Facilitating the transfer of moral consideration to AIs may be critical to fostering a future society where the well-being of all sentient beings matters.

[1] See Davis (2015) and Orzechowski (2020) for more on the histories of the animal rights and anti-slavery social movements.

[2] In humans and animals, sentience is usually defined as the capacity to have positive and negative experiences, such as happiness and suffering. However, we understand that sentience does not necessarily have to look the same in AIs. We outline how one might assess sentience in AIs in a previous post.

[3] We take the view that the well-being of an entity is tied to judgments of moral patiency, as opposed to moral agency. Whether an AI is able to discern right from wrong, how the AI acts or how the AI is programmed does not necessarily change whether or not they should be considered a moral patient. That is, even if an AI who does not care about moral treatment is developed, we still ought to treat them morally on the basis that they are a moral patient.

[4] Moral spillover can also occur for the opposite of moral consideration (e.g., actively wishing harm upon someone). Negative moral spillover has been referred to as moral taint.

[5] From a psychological perspective, the term “prosocial” refers to a behavior that benefits one or more other beings (e.g., offering one’s seat to an older person on a bus). The term “moral” refers to a behavior that is ethical or proper (e.g., right or wrong). These terms can overlap. A prosocial behavior can in some cases also be a moral behavior (and vice versa), insofar as both kinds of actions promote the interests of other beings and can be construed as right or wrong. Given that much of the moral spillover research in HRI is framed as the study of prosocial behavior, we use the terms “moral” and “prosocial” interchangeably.

[6] Temporal spillover could include a huge range of attitudes and behavior, such as simply having one attitude persist over time (e.g., after seeing a compelling fundraiser for a charity, you still feel compelled two weeks later). A narrower definition of spillover would exclude situations where moral consideration merely transfers to the same individual or group at a different time, rather than to different entities.

[7] See Boin et al. (2021) and Paluck et al. (2021) for recent reviews of the effectiveness of contact interventions.

The idea of the intention-action gap is really interesting. I would imagine that the personal utility lost by closing this gap is also a significant factor. Meaning, if I recognize that this AI is sentient, what can I no longer do with/to it? If the sacrifice is too inconvenient, we might not be in such a hurry to concede that our intuitions are right by acting on them.