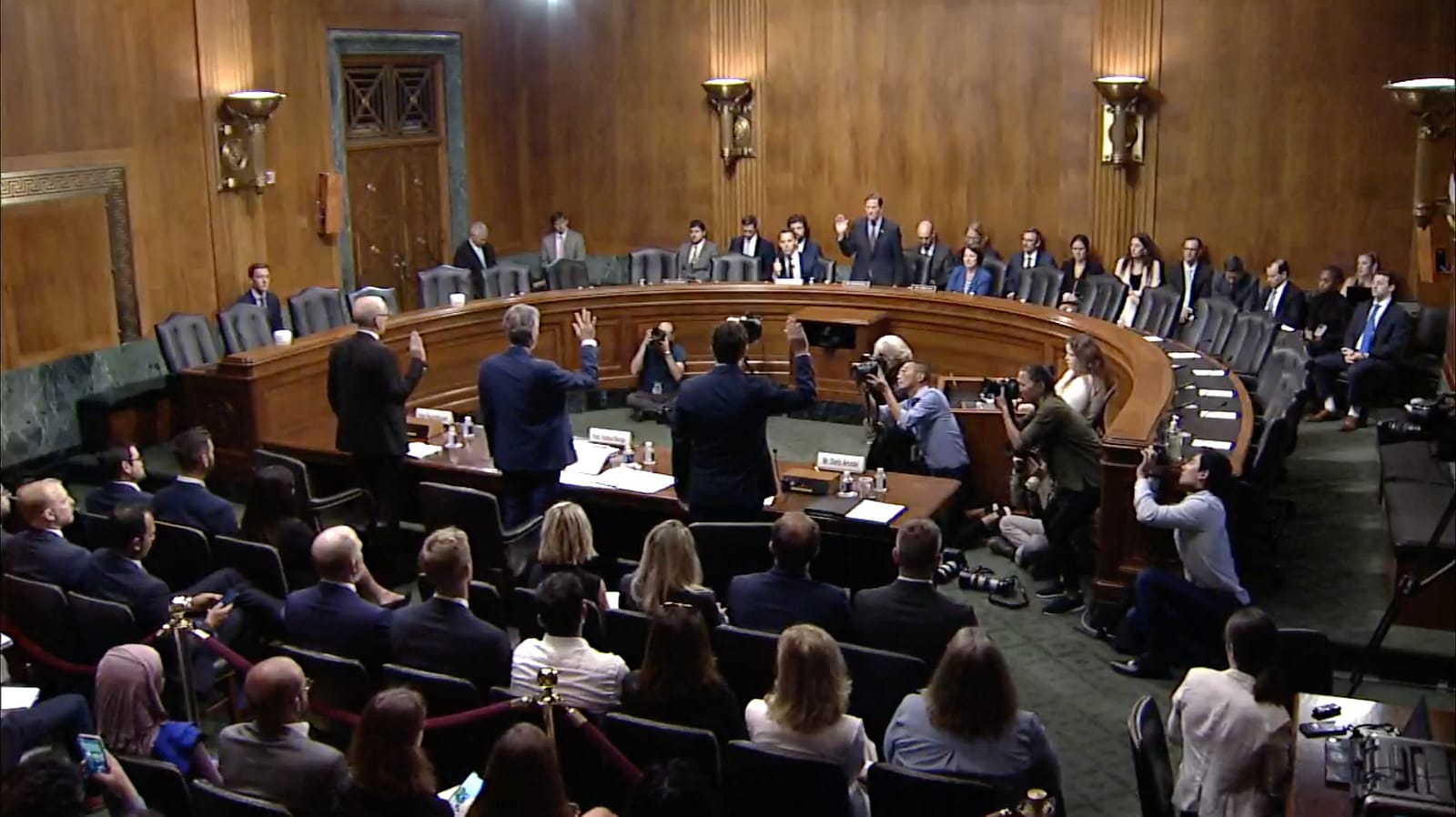

On Tuesday, the US Senate Judiciary Subcommittee on Privacy, Technology and the Law held a hearing on AI. The hearing involved 3 witnesses – Dario Amodei (CEO of Anthropic), Yoshua Bengio (Turing Award winner, and the second-most-cited AI researcher in the world), and Stuart Russell (Professor of CS at Berkeley, and co-author of the standard textbook for AI).

The hearing wound up focusing a surprising amount on AI X-risk and related topics. I originally planned on jotting down all the quotes related to these topics, thinking it would make for a short post of a handful of quotes, which is something I did for a similar hearing by the same subcommittee 2 months ago. Instead, this hearing focused so much on these topics that I wound up with something that’s better described as a partial transcript.

All the quotes below are verbatim. Text that is bolded is simply stuff I thought readers might find particularly interesting. If you want to listen to the hearing, you can do so here (it’s around 2.5 hours). You might also find it interesting to compare this post to the one from 2 months ago, to see how the discourse has progressed.

Opening remarks

Senator Blumenthal:

What I have heard [from the public after the last AI hearing] again and again and again, and the word that has been used so repeatedly is ‘scary.’ ‘Scary’… What rivets [the public’s] attention is the science-fiction image of an intelligence device, out of control, autonomous, self-replicating, potentially creating diseases — pandemic-grade viruses, or other kinds of evils, purposely engineered by people or simply the result of mistakes… And, frankly, the nightmares are reinforced in a way by the testimony that I’ve read from each of you…

I think you have provided objective, fact-based views on what the dangers are, and the risks and potentially even human extinction — an existential threat which has been mentioned by many more than just the three of you, experts who know first hand the potential for harm. But these fears need to be addressed, and I think can be addressed through many of the suggestions that you are making to us and others as well.

I’ve come to the conclusion that we need some kind of regulatory agency, but not just a reactive body… actually investing proactively in research, so that we develop countermeasures against the kind of autonomous, out-of-control scenarios that are potential dangers: an artificial intelligence device that is in effect programmed to resist any turning off, a decision by AI to begin nuclear reaction to a nonexistent attack.

The White House certainly has recognized the urgency with a historic meeting of the seven major companies which made eight profoundly significant commitments… but it’s only a start… The urgency here demands action.

The future is not science fiction or fantasy — it’s not even the future, it’s here and now. And a number of you have put the timeline at 2 years before we see some of the biological most severe dangers. It may be shorter because the kinds of pace of development is not only stunningly fast, it is also accelerated at a stunning pace, because of the quantity of chips, the speed of chips, the effectiveness of algorithms. It is an inexorable flow of development…

Building on our previous hearing, I think there are core standards that we are building bipartisan consensus around. And I welcome hearing from many others on these potential rules:

Establishing a licensing regime for companies that are engaged in high-risk AI development;

A testing and auditing regimen by objective 3rd parties or by preferably the new entity that we will establish;

Imposing legal limits on certain uses related to elections… related to nuclear warfare — China apparently agrees that AI should not govern the use of nuclear warfare;

Requiring transparency about the limits and use of AI models — this includes watermarking, labeling, disclosure when AI is being used, and data access…

I appreciate the commitments that have been made by Anthropic, OpenAI, and others at the White House related to security testing and transparency last week — it shows these goals are achievable and that they will not stifle innovation, which has to be an objective… We need to be creative about the kind of agency, or entity, the body… I think the language is less important than its real enforcement power and the resources invested in it.

Senator Hawley:

I want to start by thanking the Chairman, Senator Blumenthal, for his terrific work on these hearings, it’s been a privilege to get to work with him. These have been incredibly substantive hearings… I have expressed my own sense of what our priorities ought to be when it comes to legislation… workers, kids, consumers, and national security. As AI develops, we’ve got to make sure that we have safeguards in place that will ensure this new technology is actually good for the American people… I’m less interested in the corporations’ profitability; in fact, I’m not interested in that at all…

You wanna talk about a dystopia? Imagine a world in which AI is controlled by one or two or three corporations that are basically governments unto themselves, and then the United States government and foreign entities…

And I think the real question before Congress is, ‘will Congress actually do anything?’ … Will the leadership in both parties, both parties, will it actually be willing to act? … I think if the urgency of the new generative AI technology does not make that clear to folks, then you’ll never be convinced.

Senator Klobuchar:

I do agree with both Senator Blumenthal and Senator Hawley — this is the moment, and the fact that this has been bipartisan so far in the work that Senator Schumer, Senator Young are doing, the work that is going on in this subcommittee… I actually think that if we don’t act soon we could decay into not just partisanship, but inaction.

Dario Amodei:

Anthropic is a public-benefit corporation that aims to lead by example in developing and publishing techniques to make AI systems safer and more controllable and by deploying these safety techniques in state-of-the-art models. Research conducted by Anthropic includes constitutional AI… early work on red-teaming… and foundational work in AI interpretability… While we’re the first to admit that our measures are still far from perfect, we believe they’re an important step forward in a race to the top on safety…

My written testimony covers three categories of risks: short-term risks that we face right now, such as bias, privacy, misinformation; medium-term risks related to misuse of AI systems as they become better at science and engineering tasks; and long-term risks related to whether models might threaten humanity as they become truly autonomous…

In these short remarks I want to focus on the medium-term risks… Specifically, Anthropic is concerned that AI could empower a much larger set of actors to misuse biology… Today, certain steps in bioweapons production involve knowledge that can’t be found on Google or in textbooks… We found that today’s AI tools can fill in some of these steps… however, a straightforward extrapolation of today’s systems to those we expect to see in 2 to 3 years suggests a substantial risk that AI systems will be able to fill in all the missing pieces, enabling many more actors to carry out large-scale biological attacks…

We have instituted mitigations against these risks in our own deployed models, briefed a number of US government officials — all of whom found the results disquieting, and are piloting a responsible disclosure process with other AI companies to share information on this and similar risks. However, private action is not enough — this risk and many others like it requires a systemic policy response.

We recommend three broad classes of actions:

First, the US must secure the AI supply chain in order to maintain its lead while keeping these technologies out of the hands of bad actors — this supply chain runs from semiconductor manufacturing equipment to chips and even the security of AI models stored on the servers of companies like ours.

Second, we recommend the testing and auditing regime for new and more powerful models… new AI models should have to pass a rigorous battery of safety tests before they can be released to the public at all, including tests by 3rd parties and national security experts in government.

Third, we should recognize that the science of testing and auditing for AI systems is in its infancy… thus it is important to fund both measurement and research on measurement to ensure a testing and auditing regime is actually effective…

Responsible supply chain policies help give America enough breathing room to impose rigorous standards on our own companies without ceding our national lead to adversaries and funding measurement in turn makes these rigorous standards meaningful.

Yoshua Bengio:

While this [ongoing AI] revolution has the potential to enable tremendous progress and innovation, it also entails a wide range of risks, from immediate ones like discrimination, to growing ones like disinformation, and even more concerning ones in the future like loss of control of superhuman AIs…

[Recent] advancements have led many top AI researchers, including myself, to revise our estimates of when human-level intelligence could be achieved. Previously thought to be decades or even centuries away, we now believe it could be within a few years, or decades. The shorter timeframe — say 5 years — is really worrisome, because we’ll need more time to effectively mitigate the potentially-significant threats…

These severe risks could arise either intentionally (because of malicious actors using AI systems to achieve harmful goals) or unintentionally (if an AI system develops strategies that are misaligned with our values and norms).

I would like to emphasize 4 factors that governments can focus on in their regulatory efforts to mitigate all AI harms and risks:

First, access, limiting who has access to powerful AI systems…

Second, alignment, ensuring that AI systems will act as intended in agreement with our values and norms.

Third, raw intellectual power, which depends on the level of sophistication of the algorithms and the scale of computing resources and of datasets.

And fourth, scope of actions — the potential for harm an AI system can affect, indirectly, for example through human actions, or directly, for example through the internet…

I firmly believe that urgent efforts, preferably in the coming months, are required in the following 3 areas:

First, the coordination of highly-agile national and international regulatory frameworks and liability incentives that bolster safety, this would require licenses for people and organizations with standardized duties to evaluate and mitigate potential harm, allow independent audits, and restrict AI systems with unacceptable levels of risk.

Second, because the current methodologies are not demonstrably safe, significantly accelerate global research endeavors focused on AI safety, enabling the informed creation of essential regulations, protocols, safe AI methodologies, and governance structures.

And third, research on countermeasures to protect society from potential rogue AIs, because no regulation is going to be perfect. This research in AI and international security should be conducted with several highly-secure and decentralized labs, operating under multilateral oversight, to mitigate an AI arms race… We must… allocate substantial additional resources to safeguard our future — at least as much as we are collectively, globally, investing in increasing the capabilities of AI.

Stuart Russell:

[The field of AI’s] stated goal is general-purpose artificial intelligence, sometimes called ‘AGI’ or ‘artificial general intelligence’ — machines that match or exceed human capabilities in every relevant dimension…

For most of [the last 80 years] we created systems whose internal operations we understood… Over the last decade, that has changed… the dominant approach has been end-to-end training of circuits with billions or trillions of adjustable parameters… Their internal principles of operation remain a mystery — this is particularly true for the large language models…

Many researchers now see AGI on the horizon… If we succeed, the upside could be enormous — I’ve estimated a cash value of at least fourteen quadrillion dollars for this technology… On the other hand, Alan Turing, the founder of computer science, warned in 1951 that once AI outstrips our feeble powers, we should have to expect the machines to take control. We have pretty much completely ignored this warning — it’s as if an alien civilization warned us by email of its impending arrival, and we replied ‘humanity is currently out of the office’.

Fortunately, humanity is now back in the office and has read the email from the aliens. Of course, many of the risks from AI are well-recognized already, including bias, disinformation, manipulation, and impacts on employment — I’m happy to discuss any of these. But most of my work over the last decade has been on the problem of control — how do we maintain power, forever, over entities more powerful than ourselves?

The core problem we have studied comes from AI systems pursuing fixed objectives that are misspecified — the so-called ‘King Midas problem’… But with LLMs, we don’t even know what their objectives are…

This committee has discussed ideas such as 3rd party testing, licensing, national agency, an international coordinating body — all of which I support. Here are some more [ideas I support]:

First, an absolute right-to-know if one is interacting with a person or a machine.

Second, no algorithms that can decide to kill human beings, particularly when attached to nuclear weapons.

Third, a kill switch that must be activated if systems break into other computers or replicate themselves.

Fourth, go beyond the voluntary steps announced last Friday — systems that break the rules must be recalled from the market…

Eventually… we will develop forms of AI that are provably safe and beneficial, which can then be mandated — until then we need real regulation and a pervasive culture of safety.

Senator Blumenthal:

We have to be back in the office to answer that email that is in fact a siren blaring for everyone to hear and see: AI is here and beware of what it will do if we don’t do something to control it, and not just in some distant point in the future, but as all of you have said, with a time horizon that would have been thought unimaginable just a few years ago.

On Superintelligent AI

Senator Blumenthal:

Superhuman AI — I think all of you agree, we’re not decades away, we’re perhaps just a couple of years away. And you describe it… in terms of biologic effects — the development of viruses, pandemics, toxic chemicals, but superhuman AI evokes for me, artificial intelligence that could on its own develop a pandemic virus, on its own decide Joe Biden shouldn’t be our next president, on its own decide that the water supply of Washington DC should be contaminated with some kind of chemical, and have the knowledge to do it through public utilities systems…

I think your warning to us has really graphic content and it ought to give us impetus with that kind of urgency to develop an entity that can not only establish standards and rules but also research on countermeasures that detect those misdirections, whether they’re the result of malign actors or mistakes by AI, or malign operation of AI itself.

Do you think those countermeasures are within our reach as human beings and is that a function for an entity like this one to develop?

Dario Amodei:

Yes… this is one of the core things that, whether it’s the biorisks from models that… are likely to come in 2 to 3 years, or the risks from truly autonomous models, which I think are more than that, but might not be a whole lot more than that.

I think this idea of being able to even measure that the risk is there is really the critical thing; if we can’t measure, then we can put in place all of these regulatory apparatus, but it’ll all be a rubber stamp. And so funding for the measurement apparatus and the enforcement apparatus… our suggestion was NIST and the National AI Research Cloud which can help allow a wider range of researchers to study these risks and develop countermeasures…

I’m worried about our ability to do this in time, but we have to try.

Yoshua Bengio:

I completely agree. About the timeline, there’s a lot of uncertainty… It could be a few years, but it could also be a couple of decades…

Regulation, liability — they will help a lot. My calculations is we could reduce the probability of a rogue AI showing up by maybe a factor of 100 if we do the right things in terms of regulation… But it’s not gonna bring those risks to zero, and especially for bad actors that don’t follow the rules anyways.

So we need that investment in countermeasures and AI is gonna help us with that, but we have to do it carefully so that we don’t create the problem that we’re trying to solve in the first place… The organizations that are going to [provide governance] in my opinion shouldn’t be for profit — we shouldn’t mix the objective of making money… with the objective which should be single-minded of defending humanity against a potential rogue AI.

Also I think we should be very careful to do this with our allies in the world and not do it alone. There is first, we can have a diverse set of approaches, because we don’t know how to really do this… and we also need some kind of robustness against the possibility that one of the governments involved in this kind of research isn’t democratic anymore for some reason… We need a resilient system of partners, so that if one of them ends up being a bad actor, the others are there.

Stuart Russell:

I completely agree that if there is a body that’s set up, that it should be enabled to fund and coordinate this type of research, and I completely agree with the other witnesses that we haven’t solved the problem yet.

I think there are a number of approaches that are promising — I tend towards approaches that provide mathematical guarantees, rather than just best-effort guarantees, and we’ve seen that in the nuclear area, where originally, the standard, I believe was you could have a major core accident every 10,000 years, and you had to demonstrate that your system design met that requirement, then it was a million years, and now it’s 10 million years. And so that’s progress, and it comes from actually having a real scientific understanding of the materials, the designs, redundancy, etcetera. We are just in the infant stages of a corresponding understanding of the AI systems that we’re building.

I would also say that no government agency is going to be able to match the resources that are going into the creation of these AI systems — the numbers I’ve seen are roughly 10 billion dollars a month going into AGI startups… How do we get that resource flow directed towards safety? I actually believe that the involuntary recall provisions… would have that effect…

[If systems are recalled for violating rules] the company can go out of business, so they have a very strong incentive to actually understand how their systems work, and if they can’t, to redesign their systems so that they do understand how they work…

I also want to mention on rogue AI… we may end up needing a very different kind of digital ecosystem… Right now, to a first approximation, a computer runs any piece of binary code that you load into it — we put layers on top of that that say ‘okay, that looks like a virus, I’m not running that.’ We actually need to go the other way around — the system should not run any piece of binary code unless it can prove to itself that this is a safe piece of code to run… With that approach, I think we could actually have a chance of preventing bad actors from being able to circumvent these controls.

On Supply Chains and China

Senator Hawley:

Mr. Amodei… your first recommendation is ‘the United States must secure the AI supply chain,’ and then you mention immediately as an example of this chips used for training AI systems. Where are most of the chips made now?

Dario Amodei:

There are certain bottlenecks in the production of AI systems. That ranges from semiconductor manufacturing equipment to chips, to the actual produced systems which then have to be stored on a server somewhere, and in theory could be stolen or released in an uncontrolled way. So I think compared to some of the more software elements, those are areas where there are substantially more bottlenecks.

Senator Hawley:

Understood… Do you know where most of them are currently manufactured?

Dario Amodei:

There are a number of steps in the production process… an important player on the making the base fabrication side would be TSMC, which is in Taiwan, and then within companies like NVIDIA…

Senator Hawley:

As part of securing our supply chain… should we consider limitations… on components that are manufactured in China?

Dario Amodei:

I think we should think a little bit in the other direction of, are things that are produced by our supply chain, do they end up in places that we don’t want them to be.

So we’ve worried a lot about that in the context of models — we just had a blog post out today about AI models saying ‘hey, you might have spent a large number of millions of dollars… to train an AI system — you don’t want some state actor or criminal or rogue organization to then steal that and use it in some irresponsible way that you don’t endorse.’

Senator Hawley:

Let’s imagine a hypothetical in which the [CCP] decides to launch an invasion of Taiwan, and let’s imagine… that they are successful… Just give me a back-of-the-envelope forecast — what might that do to AI production?

Dario Amodei:

A very large fraction of the chips are indeed somewhere go through the supply chain in Taiwan…

Stuart Russell:

There are already plans to diversify away from Taiwan. TSMC is trying to create a plant in the US, Intel is now building some very-large plants in the US and in Germany I believe. But it’s taking time.

If the invasion that you mentioned happened tomorrow, we would be in a huge amount of trouble. As far as I understand it, there are plans to sabotage all of TSMC operations in Taiwan if an invasion were to take place, so it’s not that all that capacity would then be taken over by China.

Senator Hawley:

What’s sad about that scenario is that would be the best case scenario, right? If there’s an invasion of Taiwan, the best we could hope for is, maybe all of their capacity, or most of it, gets sabotaged and maybe the whole world has to be in the dark for however long — that’s the best case scenario…

Your point Mr. Amodei about securing our supply chains is absolutely critical, and thinking very seriously about strategic decoupling efforts I think is absolutely vital at every point in the supply chain that we can… I think we’ve got to think seriously about what may happen in the event of a Taiwan invasion.

Dario Amodei:

I just wanted to emphasize Professor Russell’s point even more strongly that we are trying to move some of the chip fab production capabilities to the US, but that needs to be faster. We’re talking about 2 to 3 years for some of these very scary applications, and maybe not much longer than that for truly autonomous AI… I think the timelines for moving these production facilities look more like 5 years, 7 years, and we’ve only started on a small component of them.

On a Pause in AI

Senator Blumenthal:

For those who want to pause, and some of the experts have written that we should pause AI development — I don’t think it’s gonna happen. We right now have a gold rush, literally much like the gold rush that we had in the Wild West where in fact there are no rules and everybody is trying to get to the gold without very many law enforcers out there preventing the kinds of crimes that can occur.

So I am totally in agreement with Senator Hawley [who had just criticized the outsourcing and labor practices of the AI industry] in focusing on keeping it in America, made in America when we’re talking about AI.

On Leading Countries and International Coordination

Senator Blumenthal:

Who are our competitors among our adversaries and our allies? … Are there other adversaries out there that could be rogue nations… whom we need to bring into some international body of cooperation?

Stuart Russell:

I think the closest competitor we have is probably the UK in terms of making advances in basic research, both in academia and in DeepMind in particular…

I’ve spent a fair amount of time in China, I was there a month ago talking to the major institutions that are working on AGI, and my sense is that we’ve slightly overstated the level of threat that they currently present — they’ve mostly been building copycat systems that turn out not to be nearly as good as the systems that are coming out from Anthropic and OpenAI and Google. But the intent is definitely there…

The areas where they are actually most effective… state security… voice recognition, face recognition [etc]. Other areas like reasoning and so on, planning, they’re just not really that close. They have a pretty good academic sector that they are in the process of ruining, by forcing them to meet numerical publication targets and things like that.

Senator Blumenthal:

It’s hard to produce a superhuman thinking machine if you don’t allow humans to think.

Stuart Russell:

Yup. I’ve also looked a lot at European countries… I don’t think anywhere else is in the same league as those 3. Russia in particular has been completely denuded of its experts and was already well behind.

Yoshua Bengio:

On the allied side, there are a few countries… that have really important concentration of talent in AI. In Canada we’ve contributed a lot… there’s also a lot of really good European researchers in the UK and outside the UK.

I think that we would all gain by making sure we work with these countries to develop these countermeasures as well as the improved understanding of the potentially dangerous scenarios and what methodologies in terms of safety can protect us… A common umbrella that would be multilateral — a good starting place could be Five Eyes or G7…

Senator Blumenthal:

And there would probably be some way for our entity, our national oversight body doing licensing and registration, to still cooperate — in fact I would guess that’s one of the reasons to have a single entity, to be able to work and collaborate with other countries.

Yoshua Bengio:

Yes… The more we can coordinate on this the better… There [are] aspects of what we have to do that have to be really broad at the international level.

I think… mandatory rules for safety should be something we do internationally, like, with the UN. We want every country to follow some basic rules… Viruses, computer or biological viruses, don’t see any border…

We need to agree with China on these safety measures as the first interlocutor, and we need to work with our allies on these countermeasures.

On off-switches

Senator Blumenthal:

On the issue of safety, I know that Anthropic has developed a model card for Claude that essentially involves evaluation capabilities, your red teaming considered the risk of self-replication or a similar kind of danger. OpenAI engaged in the same kind of testing…

Apparently you [Dario] share the concern that these systems may get out of control. Professor Russell recommended an obligation to be able to terminate an AI system… When we talk about legislation would you recommend that we impose that kind of requirement as a condition for testing and auditing the evaluation that goes on when deploying certain AI systems? …

An AI model spreading like a virus seems a bit like science fiction, but these safety brakes could be very very important to stop that kind of danger. Would you agree?

Dario Amodei:

Yes. I for one think that makes a lot of sense… Precisely because we’re still getting good at the science of measurement, probably [particularly dangerous outcomes] will happen, at least once… We also need a mechanism for recalling things or modifying things.

Senator Blumenthal:

I think there’s been some talk about AutoGPT. Maybe you can talk a little bit about how that relates to safety brakes.

Dario Amodei:

AutoGPT refers to use of currently deployed AI systems, which are not designed to be agents, which are just chatbots, commandeering such systems for taking actions on the internet.

To be honest, such systems are not particularly effective at that yet, but they may be a taste of the future and the kinds of things we’re worried about in the future. The long-term risks… where we’re going is quite concerning to me.

Senator Blumenthal:

In some of the areas that have been mentioned, like medicines and transportation, there are public reporting requirements. For example, when there’s a failure, the FAA’s system has an accident and incident report…

It doesn’t seem like AI companies have an obligation to report issues right now… Would you all favor some kind of requirement for that kind of reporting?

Yoshua Bengio:

Absolutely.

Senator Blumenthal:

Would that inhibit creativity or innovation?

Dario Amodei:

There are many areas where there are important tradeoffs — I don’t think this is one of them. I think such requirements make sense… A lot of this is being done on voluntary terms… I think there’s a lot of legal and process infrastructure that’s missing here and should be filled in.

Stuart Russell:

To go along with the notion of an involuntary recall, there has to be that reporting step happening first.

On investing in safety measures

Senator Blumenthal:

The government has an obligation to… invest in safety… because we can’t rely on private companies to police themselves… Incentivizing innovation and sometimes funding it to provide the airbags and the seatbelts and the crash-proof kinds of safety measures that we have in [the] automobile industry — I recognize that the analogy is imperfect, but I think the concept is there.

Top Recommendations

Senator Hawley:

If you could give us your one or at most two recommendations for what you think Congress ought to do right now — what should we do right now?

Stuart Russell:

There’s no doubt that we’re going to have to have an agency. If things go as expected, AI is going to end up being responsible for the majority of economic output in the United States, so it cannot be the case that there’s no overall regulatory agency…

And the second thing… systems that violate a certain set of unacceptable behaviors are removed from the market. And I think that will have not only a benefit in terms of protecting the American people and our national security, but also stimulating a great deal of research on ensuring that the AI systems are well understood, predictable, controllable.

Yoshua Bengio:

What I would suggest, in addition to what Professor Russell said, is to make sure either through incentives to companies but also direct investment in nonprofit organizations that we invest heavily, so totalling as much as we spend on making more capable AIs… in safety.

Dario Amodei:

I would again emphasize the testing and auditing regime, for all the risks ranging from those we face today, like misinformation came up, to the biological risks that I’m worried about in 2 or 3 years, to the risks of autonomous replication that are some unspecified period after that.

All of those can be tied to different kinds of tests… that strikes me as a scaffolding on which we can build lots of different concerns… I think without such testing, we’re blind…

And the final thing I would emphasize is I don’t think we have a lot of time… To focus people’s minds on the biorisks — I would really target 2025, 2026, maybe even some chance of 2024 — if we don’t have things in place that are restraining what can be done with AI systems, we’re gonna have a really bad time.

On the specifics mattering for red teaming

Senator Blumenthal:

A lot of the military aircraft we’re building now basically fly on computers… They are certainly red-teamed to avoid misdirection and mistakes. The kinds of specifics… are where the rubber hits the road… where the legislation will be very important.

President Biden has elicited commitments to security, safety, transparency, announced on Friday, an important step forward, but this red teaming is an example of how voluntary, non-specific commitments are insufficient. The advantages are in the details… And when it comes to economic pressures, companies can cut corners.

On open source

Senator Blumenthal:

On the issue of open source — you each raised the security and safety risk of AI models that are open source or are leaked to the public… There are some advantages to having open source as well, it’s a complicated issue…

Even in the short time that we’ve had some AI tools… they have been abused… Senator Hawley and I, as an example of our cooperation, wrote to Meta about an AI model that they released to the public… they put the first version of LLaMa out there with not much consideration of risk… the second version had more documentation of its safety work, but it seems like Meta or Facebook’s business decisions may have been driving its agenda.

Let me ask you about that phenomenon.

Yoshua Bengio:

I think it’s really important, because when we put open source out there for something that could be dangerous, which is a tiny minority of all the code that’s open source, essentially we’re opening the door to all the bad actors.

And as these systems become more capable, bad actors don’t need to have very strong expertise, whether it’s in bioweapons or cybersecurity, in order to take advantage of systems like this. And they don’t even need to have huge amounts of compute either…

I believe that the different companies that committed to these measures last week probably have a different interpretation of what is a dangerous system. And I think it’s really important that the government comes up with some definition, which is gonna keep moving, but makes sure that if future releases are gonna be very carefully evaluated for that potential before they are released.

I’ve been a staunch advocate of open source for all my scientific career… but as Geoff Hinton… was saying ‘if nuclear bombs were software, would you allow open source of nuclear bombs?’

Senator Blumenthal:

And I think the comparison is apt. I’ve been reading the most recent biography of Robert Oppenheimer. Every time I think about AI — the specter of quantum physics, nuclear bombs, but also atomic energy, both peaceful and military purposes, is inescapable.

Yoshua Bengio:

I have another thing to add on open source. Some of it is coming from companies like Meta, but there’s also a lot of open source coming out of universities.

Usually these universities don’t have the means of training the kind of large systems that we’re seeing in industry. But the code could be then used by a rich, bad actor and turned into something dangerous.

So, I believe that we need ethics review boards, in universities, for AI, just like we have for biology and medicine… We need to move into a culture where universities… adopt these ethics reviews with the same principles that we’re doing for other sciences where there’s dangerous output, but in the case of AI.

Dario Amodei:

I strongly share Professor Bengio’s view here…

I think in most scientific fields, open source is a good thing… and I think even within AI there’s room for models on the smaller and medium side… And I think to be fair, even up to the level of open source models that have been released so far, the risks are relatively limited… But I’m very concerned about where things are going… I think the path that things are going, in terms of the scaling of open source models — I think it’s going down a very dangerous path.

Stuart Russell:

I agree with everything the other witnesses said…

It’s not completely clear where exactly the liability should lie, but to continue the nuclear analogy, if a corporation decided they wanted to sell a lot of enriched uranium in supermarkets, and someone decided to take that enriched uranium and buy several pounds of it and make a bomb, wouldn’t we say that some liability should reside with the company that decided to sell the enriched uranium? They could put an advisory on it saying ‘do not use more than 3 ounces of this in one place’ or something, but no one’s gonna say that absolved them from liability.

On bipartisanship

Senator Blumenthal:

The last point I would make… what you’ve seen here is not all that common, which is bipartisan unanimity, that we need guidance from the federal government. We can’t depend on private industry, we can’t depend on academia.

The federal government has a role that is not only reactive and regulatory, it is also proactive in investing in research and development of the tools that are needed to make this fire work for all of us.

Thanks for putting this together! Super helpful.

You might want to rename this with the title of the hearing (Oversight of A.I.: Principles for Regulation) because I couldn't find this easily and almost repeated your great work!