A response to Puzzles for Everyone.

Richard Yetter Chappell argues that problems in decision theory and population ethics are not just puzzles for utilitarians, but puzzles for everyone. I disagree. I think that the puzzles Richard raises are problems for people tempted by very formal theories of morality and action, which come with a host of auxiliary assumptions one may wish to reject.

Decision Theory

Richard’s post cites two examples: one from decision theory, and one from population ethics.

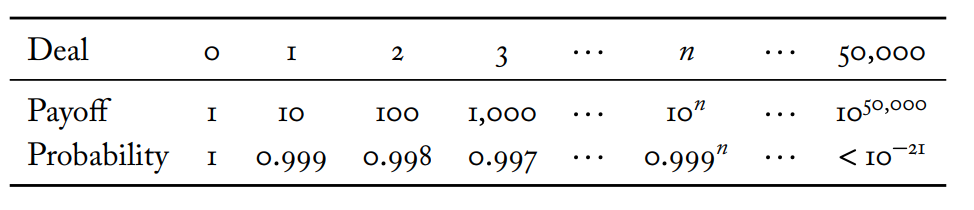

To support his decision-theoretic case, Richard references this paper, in which you’re faced with the following payoff table:

The case is nicely summarized by Tomi Francis:

“You have one year left to live, but you can swap your year of life for a ticket that will, with probability 0.999, give you ten years of life, but which will otherwise kill you immediately with probability 0.001. You can also take multiple tickets: two tickets will give a probability 0.999² of getting 100 years of life, otherwise death, three tickets will give a probability 0.999³ of getting 1000 years of life, otherwise death, and so on. It seems objectionably timid to say that taking n+1 tickets is not better than taking n tickets. Given that we don’t want to be timid, we should say that taking one ticket is better than taking none; two is better than one; three is better than two; and so on.”
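To make the numbers in the quoted case concrete, here is a minimal Python sketch that tabulates, for n tickets, the survival probability 0.999^n, the payoff of 10^n years if you survive, and the resulting expected life-years. It assumes the payoffs exactly as stated in the quote and treats life-years as the only quantity of interest; the function name `ladder` is purely illustrative.

```python
# Illustrative sketch of the ticket ladder from the quoted case.
# Assumption: each ticket multiplies the survival probability by 0.999
# and the lifespan-if-you-survive by 10, exactly as stated in the quote.
SURVIVAL_PER_TICKET = 0.999
YEARS_MULTIPLIER = 10


def ladder(n: int) -> tuple[float, int, float]:
    """Return (survival probability, years if you survive, expected years) for n tickets.

    n = 0 means keeping the one year you already have, with certainty.
    Expected years are computed as a float, so very large n (roughly n > 300)
    would overflow; this sketch is only meant for modest n.
    """
    p_survive = SURVIVAL_PER_TICKET ** n
    years_if_survive = YEARS_MULTIPLIER ** n
    return p_survive, years_if_survive, p_survive * years_if_survive


if __name__ == "__main__":
    for n in range(6):
        p, years, expected = ladder(n)
        print(f"{n} tickets: P(survive)={p:.6f}, payoff={years} years, "
              f"expected={expected:.2f} years")
```

Each extra ticket multiplies expected life-years by 0.999 × 10 = 9.99, which is why a rule that looks only at expected years says to keep taking tickets, even as the survival probability keeps shrinking toward zero.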

Sure, fine, we don’t want to be timid.

… But, if we’re not timid, the end-point of this series of decisions looks objectionably reckless. If you act in line with a non-timid decision procedure telling you to take each ticket, you end up in a situation where you’re taking (potentially arbitrarily unlikely) moonshot bets for astronomically high payoffs. In their paper, Beckstead and Thomas argue that, alas, we’re caught in a bind — any possible theory for dealing with uncertain prospects will be one of: timid, reckless, or intransitive.

1.1.

In many ways I’m sympathetic to the motivations behind Chappell's post, because I’ve encountered a lot of unfair derision of others who sincerely attempt to deal with problematic implications of their values. And, often, the mockery does appear to come from people who “haven’t actually thought through any sort of systematic alternative” to the views they're deriding.

Still, I think his post is wrong. So what do I actually do in a situation like this?

I’ll get to that. But first, I want to discuss some basic setup required for Beckstead and Thomas’ paper. While their paper doesn’t explicitly rely on expected utility theory (EUT), I think their conception of deontic theory (i.e., the theory of right action) shares the following structure with EUT: we begin with an agent with some ends (represented by some numerical quantity) and a decision-context (represented by a subjective probability distribution over some exogenously given state space).

Now, back to the gamble. What would I actually do in that situation? In practice, I think I’d probably decide to take actions via the following decision-procedure:

STOCHASTIC: Decide whether to take the next bet via a stochastic decision procedure, where you defer your decision to take each successive bet to some procedure which outputs Take with probability p, and Decline with probability (1-p), for some 0 < p < 1.

So, for each bet, I defer to some random process. For simplicity, let's say I draw (with replacement) from an urn in which a fraction p of the balls are black: I take Ticket 1 if I draw a black ball, and decline otherwise. If the ball is black, I iterate the process for the next ticket, until I draw a non-black ball. If I draw k black balls before the first non-black one, I take k tickets. This process, STOCHASTIC, determines the number of tickets I will take.
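The urn procedure can be sketched in Python as follows. This is a minimal illustration, assuming draws are made with replacement so that every draw comes up black with the same probability p; the function names `stochastic_tickets` and `prob_exactly` are hypothetical. Under that assumption the number of tickets taken follows a geometric distribution: k black draws followed by one non-black draw.

```python
import random


def stochastic_tickets(p: float, rng: random.Random) -> int:
    """Simulate STOCHASTIC once and return the number of tickets taken.

    Each draw is black with probability p (drawing with replacement).
    A black ball means "take the next ticket and draw again";
    the first non-black ball means "decline and stop".
    """
    if not 0 < p < 1:
        raise ValueError("STOCHASTIC requires 0 < p < 1")
    tickets = 0
    while rng.random() < p:  # black ball: take another ticket
        tickets += 1
    return tickets


def prob_exactly(k: int, p: float) -> float:
    """Probability of taking exactly k tickets: k black draws, then a non-black one."""
    return p ** k * (1 - p)


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [stochastic_tickets(0.5, rng) for _ in range(10_000)]
    print("mean tickets (simulated):", sum(samples) / len(samples))
    print("mean tickets (p / (1 - p)):", 0.5 / (1 - 0.5))
```

Because p < 1, the process stops after finitely many draws with probability 1, and the expected number of tickets is p / (1 - p); in this sketch, p is the only knob controlling how many tickets are taken on average.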

I look at this strategy, and it seems nice. It's a bit clunky, but I was interested in decision theory because it offered a particular promise: the promise of a framework telling agents how to do better by their own values. And, here, I feel as though STOCHASTIC serves my ends better than the alternatives I can imagine. Thus, absent some convincing argument that this decision-procedure leads me awry in some way I’m unaware of, I’m happy to stick with it. I have my ends. And, relative to my ends, I endorse STOCHASTIC in this decision-context. For me, it strikes an appropriate balance of risk and reward.

1.2.

Of course, if you insist on viewing each action I take as expressing my attitude towards expected consequences, then my actions won’t make sense. If I accepted gamble n, why would I now refuse gamble n+1? After all, gamble n+1 is just gamble n, but strictly better.

But, well, you don’t have to interpret my actions as expressing attitudes towards expected payoffs. I mean this literally. You can just … not do that. Admittedly, I grant that my performing any action whatsoever could, in principle, be used to generate some (probability function, utility function) weighting which recovers my choice behavior. But I don’t see that anyone has established that whatever weighting you use to represent my behavior contains the probability function that I must use to guide my behavior going forward. I don’t think you get that from Dutch Book arguments, and I don’t think you get it from representation theorems.

You may still say that deferring to a randomization process to determine my actions looks weird, but it’s a weird situation! I don't find it all that puzzling to believe, when confronted with a weird situation, that the best thing to do looks a little weird. STOCHASTIC is a maxim telling me how to behave in a weird situation. And, given that I'm in an unusual decision-context, deferring to an unusual decision-procedure doesn't feel all that counter-intuitive. I don’t have to refer back to a formal theory which tells me what to do; I can instead refer back to the reasons I have for performing actions in this particular decision-context, given my ends. And, at bottom, my reasons for acting in this way include a desire for life-years, and some amount of risk-aversion. I don’t see why I’m required to treat every local action I take as expressing my attitude towards expected consequences, when doing so does globally worse by my ends.

If you prefer some alternative maxim for decision-making, then, well, good for you. Life takes all sorts. But if you do prefer some other procedure for decision-making, then I don’t think Beckstead and Thomas’ paper is really a puzzle for you, either.[1] Either you’re genuinely happy with recklessness (or timidity), or else you have antecedent commitments to the methodology of decision theory, such as a commitment to viewing every action you take as expressing your attitude to expected consequences. But I take it (per Chappell) that this was meant to be a puzzle for everyone, and most people don’t share that commitment. I don’t even think those who endorse utilitarian axiologies need that commitment!

1.3.

If you’re committed to a theory which views actions as expressing attitudes towards expected consequences, then this is a puzzle for you. But I’m not yet convinced, from my reading of the decision-theoretic puzzles presented, that these puzzles are puzzles for me. Onto ethics.

Population Ethics

When discussing population ethics, Chappell alludes to the complicated discussions surrounding Arrhenius’ impossibility proof for welfarist axiologies.

Chappell's post primarily responds to a view offered by Setiya, and focuses on the implications of a principle called ‘neutrality’. That said, I interpret Richard to be making a more general point: we know, from the literature in population axiology, that any consistent welfarist axiology has to bite some bullet. Setiya (so claims Chappell) can’t avoid the puzzles, for any plausible moral theory has some role for welfare, and impossibility theorems are problems for all such theories.

Chappell is right to say that the puzzles of population axiology are not just puzzles for utilitarians, but rather (now speaking in the authorial voice) puzzles for theorists who admit well-defined, globally applicable notions of intrinsic utility. I certainly think that some worlds are better than others, and I also believe that there are contexts under which it makes sense to say that some population has higher overall welfare than another. But I’m not committed (and nor do I see strong arguments for being committed) to the existence of a well-defined, context-independent, and impartial aggregate welfare ranking.

2.1.

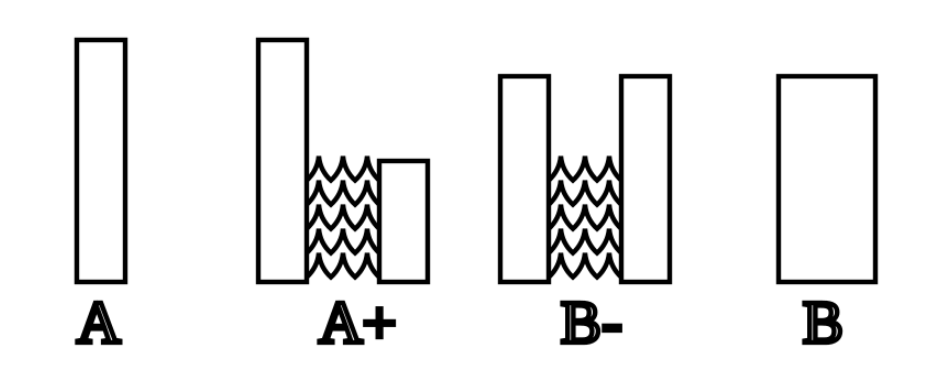

Parfit is the originator of the field of population ethics. One of his famous cases is the Mere Addition Paradox. It starts with the bars below, and proceeds through argumentative steps well summarized on Wikipedia. We’ll recount it briefly here, but feel free to skip to 2.2 if you already know the deal.

Start with World A, full of loads and loads (let’s say 10 billion) of super happy people. Then, someone comes along and offers to create a disconnected world full of merely very happy people. They’re happier than anyone alive today, but still far short of the super happy people. But no one has any resentment, and the worlds don’t interact. Call the result A+. A+, we may think, is surely no worse than A. We’ve simply added a bunch of people supremely grateful to be alive.

Now someone comes along and says: “hey, how about we shift from A+ to B-”, where B- has higher total welfare, and also higher average welfare. You don’t actively hate equality, so it seems that B- is better than A+. Then, we merge the groups in B- into a single group in B. That’s surely no worse. So B > A.

You iterate these steps. We end up with some much larger world, Z, full of people with lives that are worth living, but only just. If you endorsed all the previous steps, it seems that Z > A. That is, there’s some astronomically large population, Z, full of lives that are barely worth living — arrived at through a series of seemingly plausible steps — that you have to say is better than Utopia. Lots of people don’t like this, hence the name: the repugnant conclusion.
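The arithmetic behind the first cycle of steps (A to A+ to B- to B) can be checked with a toy Python sketch. The welfare numbers below are hypothetical, chosen only to reproduce the structure described above (populations in billions, welfare on an arbitrary per-person scale); they are not Parfit’s figures, and the sketch presupposes exactly the kind of aggregate welfare measure questioned in 2.2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class World:
    """A world as a list of groups: (population in billions, welfare per person)."""
    name: str
    groups: tuple[tuple[float, float], ...]

    def population(self) -> float:
        return sum(pop for pop, _ in self.groups)

    def total(self) -> float:
        return sum(pop * welfare for pop, welfare in self.groups)

    def average(self) -> float:
        return self.total() / self.population()


# Hypothetical numbers, chosen only to mirror the structure of the steps.
chain = [
    World("A", ((10, 100),)),             # super happy people
    World("A+", ((10, 100), (10, 60))),   # add a disconnected, merely very happy group
    World("B-", ((10, 82), (10, 82))),    # more equal, higher total and average than A+
    World("B", ((20, 82),)),              # merge the two groups into one
]

if __name__ == "__main__":
    for w in chain:
        print(f"{w.name:>2}: pop={w.population():g}bn "
              f"total={w.total():g} avg={w.average():g}")
```

With these numbers, B has a higher total (1640) but a lower average (82) than A (1000 and 100); repeating the same pattern of addition, equalization, and merging is what drives the sequence toward Z.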

2.2.

I have two qualms with the basic setup. First, I’m not convinced that you can universally make these small, iterative differences to aggregate welfare levels in a way that gets you to the repugnant conclusion, because I’m not convinced that I’m committed to a concept of aggregate welfare which has a property Arrhenius calls finite fine-grainedness. That is, the incremental steps in the Mere Addition Paradox (like the move from A+ to B) assume that it's always possible to make slight, incremental changes to aggregate welfare levels.

Second, even if I were committed to a (universally applicable) concept of aggregate welfare, Parfit’s initial presentation put forth bars of varying widths and heights, which are supposed to correspond to some set of creatures with valenced experiential states. I care about these creatures, and their experiences. However, in order to derive any practical conclusion from the bars of varying widths and heights, I need to have some idea of what mapping I’m meant to have between imaginable societies and the aforementioned bars of varying widths and heights.

I’ve not yet encountered someone who can show me, relative to a mapping between societies and bars (numbers, whatever) that I find plausible, that I’m in fact committed to some counterintuitive consequence which is meant to beset every plausible normative theory. I simply have judgments about the ranking of various concretely described worlds, and (largely implicit) decision procedures for what to do when presented with the choice of various worlds. The various impossibility proofs assume that I already possess a global aggregate welfare ranking, defined in every context, and then present axioms which are meant to apply to this global welfare ranking. I'm much more inclined to think that models which assume an impartial aggregate welfare ordering are useful in some contexts, but I find myself unpersuaded that the assumption of an impartial aggregate welfare ordering is anything more than a convenient modeling assumption.

My conception of ethics is admittedly unsystematic, but I don’t see that as a failing by itself. I see the virtues in coherency, for if I’m not coherent, then I don’t take myself to be saying anything at all about what I take to be better or worse. But, currently, I think that I am coherent. You can show that people are incoherent when you can show that they are jointly committed to two principles which are collectively inconsistent. And, I think, you can show that people have implicit commitments by showing that something follows from one of their more explicit commitments, or by showing that they engage in an activity which can only be justified if they endorse some other commitment. But, as far as I’m aware, no one has shown me that.

If someone shows me that I’m incoherent, I’ll reconsider my principles. But, absent arguments showing me that I am committed to the principles required to get population ethics off the ground, I remain happy in claiming that Chappell poses puzzles for some, but not for me.

[1] Thanks to Richard for nudging me to say something about whether I avoid the puzzles, rather than just constructing a solution I personally find satisfying.

Thanks for writing this! Thinking on it some more, I wonder about a possible tension between two of your big-picture claims here:

(1) "I think that the puzzles Richard raises are problems for people tempted by very formal theories of morality and action, which come with a host of auxiliary assumptions one may wish to reject."

(2) "I simply have judgments about the ranking of various concretely described worlds, and (largely implicit) decision procedures for what to do when presented with the choice of various worlds."

The first passage makes it sound like the problem is specifically with a narrow band of "very formal" theories, involving questionable "auxiliary assumptions". This leaves open that we could still secure a complete ethical theory, just one that isn't on board with all the specific "assumptions" made by the "very formal" theories. (E.g. Someone might hope that appeal to incommensurability or other ways of understanding value non-numerically might help here.)

But the second passage, which maybe fits better with the actual argument of the post, suggests to me that what you're really recommending is that we abandon ethical theory (as traditionally understood), and embrace the alternative task of settling on a decision procedure that we're happy to endorse across a fairly wide range of circumstances.

E.g. I take it that a central task of decision theory is to provide a criterion that specifies which gambles are or aren't worth taking. When you write, "What would I actually do in that situation? In practice, I think I’d probably decide to take actions via the following decision-procedure..." it seems to me that you aren't answering the same question that decision theorists are asking. You're not giving an alternative criterion; instead you're rejecting the idea that we need one.

So, do you think it's fair to interpret your post as effectively arguing that maybe we don't need theory? "Puzzles for everyone" was arguing that these were puzzles for every theory. But you could reasonably question whether we need to engage in the project of moral/decision theory in the first place. That's a big question! (For some basic considerations on the pro-theory side, see 'Why Do We Need Moral Theories?' on utilitarianism.net.)

But if I'm completely missing the boat here, let me know.

[ETA: on the object-level issues, I'm very sympathetic to Alex's response. I'd probably go with "take exactly N" myself, though it remains puzzling how to justify not going to N+1, and so on.]

Interesting post! But I’m not convinced.

I’ll stick to addressing the decision theory section; I haven’t thought as much about the population ethics but probably have broadly similar objections there.

(1) What makes STOCHASTIC better than the strategy “take exactly N tickets and then stop”?

I get that you’re trying to avoid totalizing theoretical frameworks, but you also seem to be saying it’s better in some way that makes it worth choosing, at least for you. But why?
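One way to make this question concrete is to compare what each strategy implies about the number of tickets taken and the chance of surviving. The sketch below is an illustration only, assuming the per-ticket survival probability of 0.999 from the case; `fixed_n` and `stochastic` are hypothetical names, and the STOCHASTIC survival figure is the geometric-series sum of (1 - p)(0.999p)^k over all ticket counts k.

```python
SURVIVAL_PER_TICKET = 0.999  # assumption taken from the quoted case


def fixed_n(n: int) -> dict[str, float]:
    """'Take exactly n tickets': the ticket count is deterministic."""
    return {"mean_tickets": float(n), "p_survive": SURVIVAL_PER_TICKET ** n}


def stochastic(p: float) -> dict[str, float]:
    """STOCHASTIC with continuation probability p (0 < p < 1).

    The ticket count K is geometric, P(K = k) = p**k * (1 - p), so
      E[K]       = p / (1 - p)
      P(survive) = sum_k (1 - p) * (0.999 * p)**k = (1 - p) / (1 - 0.999 * p)
    """
    if not 0 < p < 1:
        raise ValueError("p must lie strictly between 0 and 1")
    return {
        "mean_tickets": p / (1 - p),
        "p_survive": (1 - p) / (1 - SURVIVAL_PER_TICKET * p),
    }


if __name__ == "__main__":
    # Both strategies below take 3 tickets on average.
    print("take exactly 3:", fixed_n(3))
    print("STOCHASTIC, p = 0.75:", stochastic(0.75))
```

Matching the mean ticket count does not make the two strategies equivalent, since STOCHASTIC spreads its outcomes over every possible ticket count, including occasional long runs; the sketch only makes the trade-off visible and does not say which strategy is better.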

(2) In response to

I’m having trouble interpreting this more charitably than “when given a choice, you can just … choose the option with the worse payoff.” Sure, you can do that. But surely you’d prefer not to? Especially if by “actions” here, we’re not actually referring to what you literally do in your day-to-day life, but a strategy you endorse in a thought-experiment decision problem. You’re writing as if this is a heavy theoretical assumption, but I’m not sure it’s saying anything more than “you prefer to do things that you prefer.”

(3) In addition to not finding your solution to the puzzle satisfactory,[2] I’m not convinced by your claim that this isn’t a puzzle for many other people:

To me, the point of the thought experiment is that roughly nobody is genuinely happy with extreme recklessness or timidity.[3] And as I laid out above, I’d gloss “commitment to viewing every action you take as expressing your attitude to expected consequences” here as “commitment to viewing proposed solutions to decision-theory thought experiments as expressing ideas about what decisions are good” — which I take to be nearly a tautology.

So I’m still having trouble imagining anyone the puzzles aren’t supposed to apply to.

The only case I can make for STOCHASTIC is if you can’t pre-commit to stopping at the N-th ticket, but can pre-commit to STOCHASTIC for some reason. But now we’re adding extra gerrymandered premises to the problem; it feels like we’ve gone astray.

Although if you just intend for this to be solely your solution, and make no claims that it’s better for anyone else, or better in any objective sense then ... ok?

This is precisely why it's a puzzle -- there's no outcome (always refuse, always take, take N, stochastic) that I can see any consistent justification for.

Interesting comment! But I’m also not convinced. :P

… or, more precisely, I’m not convinced by all of your remarks. I actually think you’re right in many places, though I’ll start by focusing on points of disagreement.

(1) On Expected Payoffs.

In summary: Beckstead and Thomas’ case provides me with exogenously given payoffs, and then invites me to decide, in line with my preferences over payoffs, on a given course of action. I don’t think I’m deciding to act in a way which, by my lights, is worse than some other act. My guess is that you interpret me as choosing an option which is worse by my own lights because you interpret me as having context-independent preferences over gambles, and then choosing an option I disprefer.

(2) On STOCHASTIC More Specifically

I think you’re right that some alternative strategy to STOCHASTIC is preferable, and you’re probably right that taking exactly N tickets is preferable. I’ll admit that I didn’t think through a variety of other procedures; STOCHASTIC was just the first thought that came to mind.

One final critical response.

Thanks for the helpful pushback, and apologies for the late reply!

Regarding decision theory: I responded to you on Substack. I'll stand by my thought that real-world decisions don't allow accurate probabilities to be stated, particularly life-or-death decisions. Even if some person offered to play a high-stakes dice game with me, I'd wonder if the dice are rigged, if someone were watching us play and helping the other player cheat, etc.

Separately, it occurred to me yesterday that a procedure to decide how many chances to take depends on how many will meet a pre-existing need of mine, and what costs are associated with not fulfilling that need. For example, if I only need to live 10 more years, or else something bad happens (other than my death), and the chances that I don't live that extra 10 years are high unless I play your game (as you say, I only have 1 year to live and your game is the only game in town that could extend my life), then I will choose an N of 1. This argument can be extended to however many years. There are plausible futures in which I myself would need to live an extra 100 years, but not an extra 1000, etc. That allows me to select a particular N to play for in accordance with my needs.
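The first step of this rule of thumb, picking the smallest N whose payoff covers a pre-existing need, can be sketched in Python. This is a hypothetical illustration assuming the case’s payoffs (N tickets pay 10^N years with survival probability 0.999^N); the function names are made up, and the sketch does not model the later comparison between the cost of losing and the cost of an unmet need.

```python
SURVIVAL_PER_TICKET = 0.999  # assumption taken from the quoted case


def tickets_for_need(need_years: int) -> int:
    """Smallest number of tickets n whose payoff, 10**n years, meets need_years.

    Zero tickets means keeping the one year you already have.
    Integer arithmetic keeps this exact even for very large needs.
    """
    n, payoff = 0, 1
    while payoff < need_years:
        n += 1
        payoff *= 10
    return n


def survival_probability(n: int) -> float:
    """Chance of surviving the gamble when taking n tickets."""
    return SURVIVAL_PER_TICKET ** n


if __name__ == "__main__":
    for need in (1, 10, 100, 150):
        n = tickets_for_need(need)
        print(f"need {need} years -> N = {n}, P(survive) = {survival_probability(n):.6f}")
```

A need of 10 more years gives N = 1, matching the example above; a need of 10^50000 years would give N = 50000, with a survival probability of 0.999^50000, roughly 1.9 × 10^-22.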

Let's say, though, for the sake of argument, that I need to live the entire 10^50000 years or something bad will happen. In that case, I'm committed to playing your game to the bitter end if I want to play at all. In which case, if I choose to play, it will only be because my current one year of life is essentially worthless to me. All that matters is preventing that bad thing from happening by living an extra 10^50000 years.

Alternatively, suppose my only need for life extension is to live longer than 10^50000 years. If I don't, something bad will happen, or a need of mine won't be met. In that case, I will reject your game, since it won't meet my need at all.

This sort of thinking might be clearer in the case of money, instead of years of life. If I owed a debt of 10^50000 dollars, and I only have 1 dollar now, and if in fact, the collector will do something bad to me unless I pay the entire debt, well, then, the question becomes whether your game is the only game in town. If so, then the final question is whether I would rather risk a large chance of dying to try to pay my debt, or whether I would rather live through the collector's punishment because the money I have already (or earned from playing your game to less than the end) is short of the full repayment amount. If death were preferable to the collector's punishment and your game was the only game in town, then I would go with playing your game even though my chances of winning are so pitifully small.

Similar thinking applies to smaller amounts of debt owed, and commensurate choice of N sufficient to pay the debt if I win. I will only play your game out to high enough N to earn enough to pay the debt I owe.

Regardless of the payoff amount's astronomically large size as the game progresses, there is either a size past which the amount is greater than my needs, or my needs are so great that I cannot play to meet my needs (so why play at all), or only playing to the end meets my needs. Then the decision comes down to comparing the cost of losing your game to the cost of not meeting my pre-existing needs.

Oh, you could say, "Well, what if you don't have a need to fulfill with your earnings from playing the game? What if you just want the earnings but would be ok without them?" My response to that is, "In that case, what am I trying to accomplish by acquiring those earnings? What want (need) would those earnings fulfill, in what amount of earnings, and what is the cost of not acquiring them?"

Whether it's money or years of life or something else, there's some purpose(s) to its use that you have in mind, no? And that purpose requires a specific amount of money or years. There's not an infinitude of purposes, or if there are, then you need to claim that as part of proposing your game. I think most people would disagree with that presumption.

What do you all think? Agree? Disagree?