Update 2022: Looking back on younger Remmelt's writing on decision-making, it is useful but cocky. It explained what I now call 'monitoring values' for carving out states of the world to decide between, but not how choice arises from 'deep embedded values'.

---

This post contains:

1. an exposition of a high-level model

2. some claims on what this might mean strategically for the EA community

Effective Altruism is challenging. Some considerations require you to zoom out to take an eagle-eye view across a vast landscape of possibility (e.g. to research moral uncertainty), while other considerations require you to swoop in to see the details (e.g. to welcome someone new). The distance from which you're looking down at a problem is the construal level you're thinking at.

People involved in the EA community can gain a lot from improving their grasp of construal levels – the levels they or others naturally incline towards and even the level they're operating at any given moment (leading, for instance, to less disconnect in conversations). A lack of construal-level sense combined with a lack of how-to-interact-in-large-social-networks sense has left a major blind spot in how we collectively make decisions, in my view.

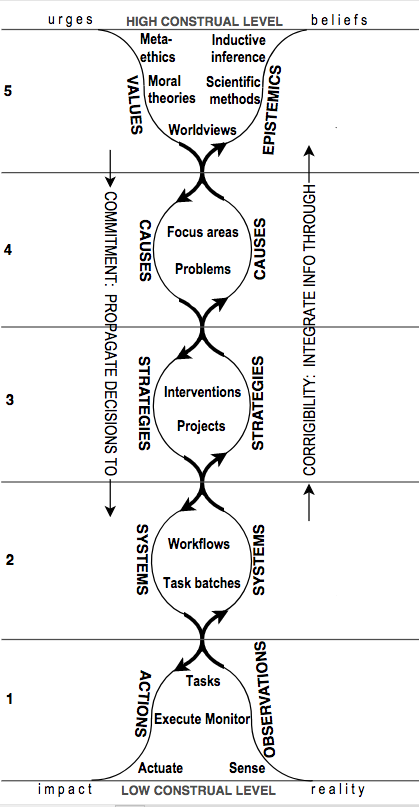

The Values-to-Actions Decision Chain (in short: 'decision chain' or V2ADC) is an approach for you to start solving the awe-inspiring problem of 'doing the most good' by splitting it into a series of decisions you will make from high to low construal levels (creating, in effect, a hierarchy of goals). It is also a lens through which you can more clearly see your own limits to doing this and compensate by coordinating better with others. Beware though that it’s a blurry and distorted lens – this post itself is a high construal-level exercise and many important nuances have been eliminated in the process. I'd be unsurprised if I ended up revising many of the ideas and implications in here after another year of thinking.

Chain up

To illustrate how to use the V2ADC (see diagram below):

Suppose an ethics professor decided that...

- ...from the point of view of the universe... (meta-ethics)

- ...hedonistic utilitarianism made sense... (moral theories)

- ...and that therefore humans in developing countries should not suffer unnecessarily... (worldview)

- ...leading him to work on reducing global poverty... (focus area)

- ...by reducing a variety of easily treatable diseases... (problems)

- ...by advocating for citizens of rich countries to pledge 1% of their income... (intervention)

- ...by starting a non-profit... (project)

- ...where he works with a team of staff members... (workflow)

- ...to prepare his TED talk... (task batches)

- ...to cover the decision to donate for guide dogs vs. treating trachoma... (task)

- ...which he mentions... (execute)

- ...by vibrating his vocal cords (actuate)

The Values-to-Actions Decision Chain. Note that the categorisation here is somewhat arbitrary and open to interpretation; let me know if something's unclear or should be changed (or if you disagree with the diagram on some fundamental level). Also see the headings on either side of the diagram; it could also be called the Observations-to-Epistemics Integration Chain but that's less catchy.
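The professor example can also be written down as an ordered data structure, which makes the 'hierarchy of goals' reading explicit. This is only a minimal Python sketch: the names `DECISION_CHAIN`, `describe` and `levels_between` are hypothetical, and the 1-to-12 numbering is an assumption that may not match the level numbers in the diagram.

```python
# Hypothetical illustration: the professor example as an ordered chain,
# listed from the highest construal level down to the lowest.
DECISION_CHAIN = [
    ("meta-ethics", "the point of view of the universe"),
    ("moral theories", "hedonistic utilitarianism"),
    ("worldview", "humans in developing countries should not suffer unnecessarily"),
    ("focus area", "reducing global poverty"),
    ("problems", "easily treatable diseases"),
    ("intervention", "advocating for a 1% income pledge"),
    ("project", "starting a non-profit"),
    ("workflow", "working with a team of staff members"),
    ("task batches", "preparing a TED talk"),
    ("task", "covering guide dogs vs. treating trachoma"),
    ("execute", "mentioning the comparison"),
    ("actuate", "vibrating vocal cords"),
]


def describe(chain):
    """Return one line per level, numbered from high to low construal level.

    The numbering is an assumption made for this sketch.
    """
    return [
        f"{depth:>2}. {level}: {decision}"
        for depth, (level, decision) in enumerate(chain, start=1)
    ]


def levels_between(chain, high, low):
    """Return the level names from `high` down to `low`, inclusive."""
    names = [level for level, _ in chain]
    return names[names.index(high): names.index(low) + 1]


if __name__ == "__main__":
    print("\n".join(describe(DECISION_CHAIN)))
```

Calling `levels_between(DECISION_CHAIN, "problems", "workflow")` picks out a contiguous slice of the chain, which is one way to talk about a band of construal levels rather than a single one.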

It should be obvious at this point that the professor isn't some lone ranger battling out to stop world suffering by himself. Along the way, the professor studies classical moral philosophers and works with an executive director, who in turn hires a website developer who creates a donation button, which a donor clicks to donate money to a malaria prevention charity, which the charity's treasurer uses to pay out the salary of a new local worker, who hands out a bednet to a mother of three children, who hangs the net over the bed in the designated way.

It takes a chain of chains to affect those whose lives were originally intended to be improved. As you go down from one chain to the next, the work a person focuses on also gets more concrete (lower construal level).

However, the chain of chains can easily be broken if any person doesn't pay sufficient attention to making decisions at other construal levels (although some are more replaceable than others). For example, if instead of starting a charity, the philosopher decided to be content with voicing his grievances at a lecture, probably little would have come out of it. Or if the executive director or website developer decided that working at any foreign aid charity was fine. Or if the donor decided not to read into the underlying vision of the website and instead donated to a local charity. And so on.

Making the entire operation happen requires tight coordination between numerous people who are able to both skilfully conduct their work, and see the importance of more abstract or concrete work done by others they are interacting with. If instead, each relied on the use of financial and social incentives to motivate others, it would be a most daunting endeavour.

Cross the chasm

Imagine someone visiting an EA meetup for the first time. If the person stepping through the doorway was an academic (or LessWrong addict), they might be thrilled to see a bunch of nerdy people engaging in intellectual discussions. But an activist (or social entrepreneur) stepping in would feel more at home seeing agile project teams energetically typing away at their laptops. Right now, most local EA groups emphasise the former format, I suspect in part due to CEA's deep engagement stance, and in part because it's hard to find volunteer projects that have sufficient direct impact.

If the academic and activist happened to strike up a conversation, both sides could have trouble seeing the value produced by the other, because each is stuck at an entirely different level of abstraction. An organiser could help solve this disconnect by gradually encouraging the activist to go meta (chunking up) and the academic to apply their brilliant insights to real-life problems (chunking down).

As a community builder, I've found that this chasm seems especially wide for newcomers. Although the chasm gets filled up over time, people who've been involved in the EA community for years still encounter it, with some emphasising work on epistemics and values, and others emphasising work to gather data and get things done. The middle area seems neglected (i.e. deciding on the problem, intervention, project, and workflow).

Put simply, EAs tend to emphasise one of two categories:

- Prioritisation (high level/far mode): figuring out in what areas to do work

- Execution (low level/near mode): getting results on the ground

Note: I don't base this on any psychological studies, which seems like the weakest link of this post. I'm curious to get an impartial view from someone well-versed in the literature.

Those who instead appreciate the importance of making decisions across all levels tend to do more good. For example, in my opinion:

- Peter Singer is exceptionally impactful not just because he is an excellent utilitarian philosopher, but because he argues for changes in foreign aid, factory farm practices, and so on.

- Tanya Singh is exceptionally impactful not just because she was excellent at operations at an online shopping site, but because she later applied to work at the Future of Humanity Institute.

Commit & correct

The common thread so far is that you need to link up work done at various construal levels in order to do more good.

To make this more specific:

Individuals who increase their impact the fastest tend to be

- corrigible – quickly integrate new information into their beliefs from observations through to epistemics

- committed – propagate the decisions they make from high-level values down to the actions they take on the ground (put another way: actually pursue the goals they set for themselves)

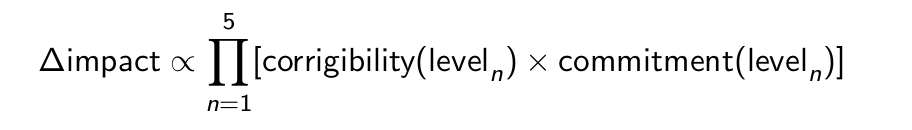

To put this into pseudomaths:

Where level refers to the level numbers as in the diagram.

Note: This multiplication between levels assumes that if you change decisions on higher levels (e.g. by changing what charity organisation you work at), you are able to transfer the skills and know-how you've acquired on lower levels (this works well with e.g. operations skills but not as well with e.g. street protest skills). Also, though the multiplication of traits in this formula implies that the impact of some EAs is orders of magnitude higher than others, it's hard to evaluate these traits as an outsider.
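One way to see why multiplying traits across levels produces such large differences is a small numerical sketch. Everything here is an assumption made for illustration and not the formula from this post: the 0-to-1 scoring scale, the function name `relative_impact`, the choice of 12 levels and the example scores.

```python
from math import prod


def relative_impact(traits_per_level):
    """Multiply (corrigibility, commitment) scores across all levels.

    Hypothetical model: each level contributes the product of two scores
    in (0, 1], and the levels themselves multiply together.
    """
    return prod(corrigible * committed for corrigible, committed in traits_per_level)


# Assumed example: 12 levels, with the same scores at every level.
NUM_LEVELS = 12
person_a = [(0.9, 0.9)] * NUM_LEVELS  # strong on both traits at each level
person_b = [(0.5, 0.5)] * NUM_LEVELS  # middling on both traits at each level

ratio = relative_impact(person_a) / relative_impact(person_b)

if __name__ == "__main__":
    print(f"A's relative impact is about {ratio:,.0f} times B's")
```

Under these made-up numbers, a modest per-level difference compounds into a gap of several orders of magnitude. The same sketch also shows the fragility the note points at: a single level with a score near zero drags the whole product down.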

Specialise & transact

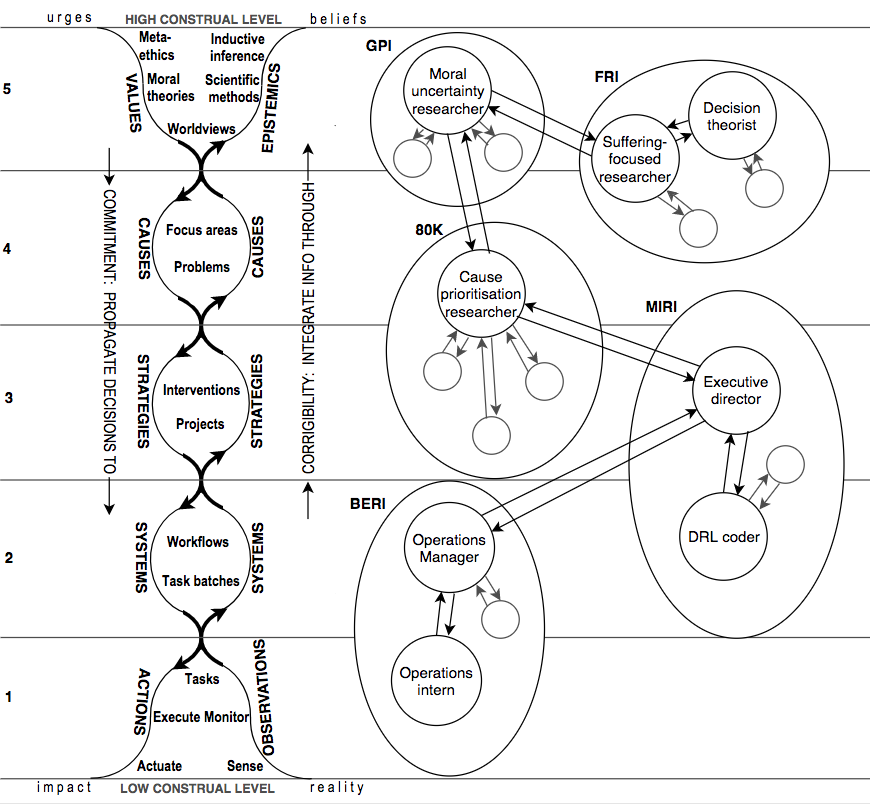

Does becoming good at all construal levels mean we should all become generalists? No, I actually think that as a growing community we’re doing a poor job at dividing up labour compared to what I see as the gold standard – decentralised market exchange. This is where we can use V2ADC to zoom out over the entire community:

The circles illustrate network clusters of people that exchange a lot with each other. Interestingly, a cluster of agents working toward (shared) goals can be seen as a super-agent with resulting super-goals, as can one person with sub-goals be seen as being the amalgamation of sub-agents interacting with each other.

A more realistic but messy depiction of our community's interactions would look like this (+ line weights to denote how much people exchange). Also, people tend to actually cluster at each construal level – e.g. there are project team clusters, corporate animal welfare outreach clusters, factory farming reduction clusters, animal welfare clusters, and so on. Many of these clusters contain people who are not (yet) committed to EA, which makes sense both for our ability to do good together and for introducing new people to the principles of EA.

See here for a great academic intro to social networks.

As more individuals become connected within the EA network, each should specialise at construal levels in a role they excel at. They can then transact with others to acquire other needed information and delegate remaining work (e.g. an operations staff member can both learn from a philosopher and take over organisational tasks that are too much for them). Exceptions: leaders and professional networkers often need to have a broad focus across all of these levels as they function as hubs – judiciously relaying information and work requests from one network cluster to another.

By a transaction, I mean a method of giving resources to someone (financial, human, social, and temporal capital) in return for progress on your goals (i.e. to get something back that satisfies your preferences).

Here are three transactions common to EAs:

- Collaborations involve giving resources to someone whom you trust, who has the same goals and who is capable of using your resources to make progress on those goals. When this is the case, EAs will tend to transact more and cluster in groups to capture the added value (the higher alignment in collaborations reduces principal-agent problems).

- Reciprocal favours involve giving up a tiny amount of your resources to help someone make disproportionate progress on goals that are unaligned with yours (e.g. connect someone working on another problem with a colleague), with an implicit expectation of that person returning the favour at some point in the future (put another way, it increases your social capital). It's a practical alternative to moral trade (instead of e.g. signing contracts, which is both time-consuming and socially awkward). The downside of reciprocal favours is that you often won't be able to offer a specific resource that the other party wants. This is where a medium of exchange comes in useful instead:

- Payments involve giving someone money based on the recipient's stated intent of what they will do with that money.

EAs are never perfectly (mis)aligned – they will be more aligned at some levels and less aligned at others. For example:

- You can often collaborate with other EAs on shared goals if you pick the levels right. For one, most EAs strongly value internal consistency and rigour, so it's easy to start a conversation as a high-level collaboration to get closer to 'the truth'. To illustrate, though Dickens and Tomasik clearly disagreed here on moral realism vs. anti-realism, they still collaborated on understanding the problem of AI alignment better.

- Where goals diverge, however, reciprocal favours can create shared value. This can happen when e.g. a foreign aid policy maker types out a quick email for an animal charity director to connect her with a colleague (though if the policy maker made the decision because he's uncertain whether global poverty is the best focus area, it's a collaboration). But in a fundamental way, we're all unaligned: EAs, like other humans, have an intrinsic drive to show off their altruistic deeds through signalling. The recipients of these signals have the choice whether or not to encourage them to make these decisions again by giving back compliments, gifts and other tokens of social status. This in turn influences the community's norms at large.

- Although employers who pay money to cover the salaries tend to roughly agree with employees on e.g. what problems and interventions to work on, the employees also have sub-goals (such as feeling safe, comfortable and admired by others) that are personal to them.

By 'transacting' with others you're able to compensate for your personal limitations in achieving your goals: the fact that you'll never be able to acquire all required knowledge yourself nor be able to do all work as skilfully as the few things you can become particularly capable at.

Integrate the low-level into the high-level

Most of this post has been about pushing values down into actions, which implies that people doing low-construal level work should merely follow instructions from above. Although it's indeed useful for those people to use prioritisation advice to decide where to do work, they also fulfill the essential function of feeding back information that can be used to update overarching models.

We face a major risk of ideological rust in our community. This is where people who are working out high-level decisions either don't receive enough information from below or no longer respond to it. As a result, their models separate from reality and their prioritisation advice becomes misguided. To illustrate this…

At a Strategies level, you find that much of AI alignment research is built on paradigms like 'the intelligence explosion' and 'utility functions' that arose from pioneering work done by the Future of Humanity Institute and Machine Intelligence Research Institute. Fortunately, leaders within the community are aware of the information cascades this can lead to, but the question remains whether they're integrating insights on machine learning progress fast enough into their organisations' strategies.

At a Causes level, a significant proportion of the EA community champions work on AI safety. But then there's the question: how many others are doing specialised research on the risks of pandemics, nanotechnology, and so on? And how much of this gets integrated into new cause rankings?

At a Values level, it is crazy how one person's worldview leads them to work on safeguarding the existence of future generations, another on preventing their suffering and another to work on neither. This reflects actual moral uncertainty – to build up a worldview, you basically need to integrate most of your life experiences into a workable model. Having philosophers and researchers explore diverse worldviews and exchange arguments is essential in ensuring that we don't rust in our current conjecture.

Now extend it to organisational structure:

We should also use the principle of decentralised experimentation, exchange and integration of information more in how we structure EA organisations. There has been a tendency to concentrate resources (financial, human and social capital) within a few organisations like the Open Philanthropy Project and the Centre for Effective Altruism who then set the agenda for the rest of the community (i.e. push decisions down their chains).

This seems somewhat misguided. Larger organisations do have less redundancy and can divide up tasks better internally. But a team of 24 staff members is still at a clear cognitive disadvantage at gathering and processing low-level data compared to a decentralised exchange between 1000 committed EAs. By themselves, they can't zoom in closely on enough details to update their high-level decision models appropriately. In other words, concentrated decision-making leads to fragile decision-making – just as it has done for central planning.

Granted, it is hard to find people you can trust to delegate work to. OpenPhil and CEA are making headway in allocating funding to specialised experts (e.g. OpenPhil's allocation to CEA, which in turn allocated to EA Grants) and collaborating with organisations who gather and analyse more detailed data (e.g. CEA's individual outreach team working with the Local Effective Altruism Network). My worry is that they're not delegating enough.

Given the uncertainty they are facing, most of OpenPhil's charity recommendations and CEA's community-building policies should be overturned or radically altered in the next few decades. That is, if they actually discover their mistakes.

This means it's crucial for them to encourage more people to do local, contained experiments and then integrate their results into more accurate models.

EDIT: see these comments on where they could create better systems to facilitate this:

Private donors who have the time and aptitude to research specialised problem niches and feed up their findings to bigger funders should do so (likewise, CEA should actively discourage these specific donors from donating to EA Funds). Local community builders should test out different event formats and feed up outcomes to LEAN. And so on.

However, if bigger organisations would hardly use this information, most of the exploration value would get lost. Understandably, this outpouring of data is too overwhelming for any human (tribe) to manually process.

We therefore need to build vertically integrated data analysis platforms that separate the signal from the noise, update higher-level models, and then share those models with relevant people. On these platforms, people can then upload data, use rigorous data mining techniques and share the results through targeted channels like the PriorityWiki.

I introduced this decision chain in a previous post, which I revised after chatting with thoughtful people at two CEA retreats. Thanks to Kit Harris for his incisive feedback on the categorisation, Siebe Rozendal and Fokel Ellen for their insightful text corrections, and Max Dalton, Victor Sint Nicolaas and all the other people who bore with me last year when I was still forming these ideas.

If you found this analysis valuable, consider making a donation or emailing me at remmelt@effectiefaltruisme.nl (also if you want to avoid transaction costs through a bank transfer). My student loan payments are stopping in August so you're close to funding my work at peak marginal returns (note: I have also recently applied to EA Grants to extend my financial runway).

Also, if you're at EAGx Netherlands, feel free to grill me at my workshop. :-)

Cross-posted on LessWrong.

I appreciate you mentioning this! It’s probably not a minor point because if taken seriously, it should make me a lot less worried about people in the community getting stuck in ideologies.

I admit I haven’t thought this through systematically. Let me mull over your arguments and come back to you here.

BTW, could you perhaps explain what you meant with the “There are other causes of an area...” sentence? I’m having trouble understanding that bit.

And with ‘on-reflection moral commitments’ do you mean considerations like population ethics and trade-offs between eudaimonia and suffering?

Sorry for being unclear. I've changed the sentence to (hopefully) make it clearer. The idea was there could be other explanations for why people tend to gravitate to future stuff (group think, information cascades, selection effects) besides the balance of reason weighs on its side.

I do mean considerations like population ethics etc. for the second thing. :)