Posts tagged community

Recent discussion

Edit: so grateful and positively overwhelmed with all the responses!

I am dealing with a repetitive strain injury and don’t foresee being able to respond to many comments extensively (I’m surprised at myself that I wrote all of this without twitching forearms lol!...

Like others, I just want to say I'm so sorry that you had this experience. It isn't one I recognise from my own journey with EA, but that doesn't invalidate what you went through, and I'm glad you're moving in a direction that works for you as a person and for your values. You are valuable, your life and perspective are valuable, and I wish you all the best in your future journey.

Indirectly, I'm going to second @Mjreard below - I think EA should be seen as beyond a core set of people and institutions. If you are still deeply driven by the ideals EA was inspi...

TL;DR: We need technology and infrastructure specialists. You don't need to specialise in cybersecurity to have an impactful career addressing AI risk, or even to improve cybersecurity.

I've been providing advice and mentoring to EAs on cybersecurity and IT careers for a few years now, mostly at conferences. I've regularly made the case (often to the relief of the mentee) that people on cybersecurity and other IT career pathways should consider staying the course rather than retraining as machine learning researchers.

This year, after increased community focus on information security in relation to AI risk, I am now often asked how to retrain into a cybersecurity specialisation. In response, I'm making the case that an oversupply of cybersecurity professionals is not optimal and, possibly counterintuitively, is not ideal for cybersecurity either.

Organisations working on AI risk, as well as other...

Summary

- Where there’s overfishing, reducing fishing pressure or harvest rates (roughly the share of the population or biomass caught in a fishery per fishing period) actually allows more animals to be caught in the long run.

- Sustainable fishery management policies
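The overfishing claim in the first bullet can be illustrated with the textbook Schaefer (logistic) surplus-production model. This is a minimal sketch under loudly stated assumptions, not the post's own model: the stock grows logistically with a hypothetical intrinsic growth rate `r` and carrying capacity `k`, a constant fraction `h` of the biomass is caught each period, and we look only at the long-run equilibrium. Under those assumptions the long-run catch is `h * k * (1 - h / r)`, which peaks at `h = r / 2`; above that rate, cutting the harvest rate raises the long-run catch.

```python
def equilibrium_yield(h: float, r: float, k: float) -> float:
    """Long-run catch per period at a constant harvest rate h (Schaefer model sketch).

    Assumes logistic growth dB/dt = r*B*(1 - B/k) - h*B and reports the catch h*B*
    at the equilibrium biomass B*. All parameters are hypothetical illustrations.
    """
    if h >= r:
        return 0.0  # harvesting at or above the regrowth rate drives the stock to collapse
    biomass = k * (1 - h / r)  # equilibrium biomass where regrowth exactly offsets the catch
    return h * biomass


R, K = 0.4, 1000.0  # hypothetical growth rate and carrying capacity, not from the post

# Step the harvest rate down from an overfished level (above R / 2 = 0.2) and past it.
for rate in (0.35, 0.30, 0.25, 0.20, 0.15):
    print(f"harvest rate {rate:.2f}: long-run catch {equilibrium_yield(rate, R, K):.1f}")
```

With these made-up numbers, lowering the harvest rate from 0.35 toward 0.20 increases the long-run catch, while lowering it further below `R / 2` starts to reduce it again, which is why the bullet's claim is conditioned on there being overfishing.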

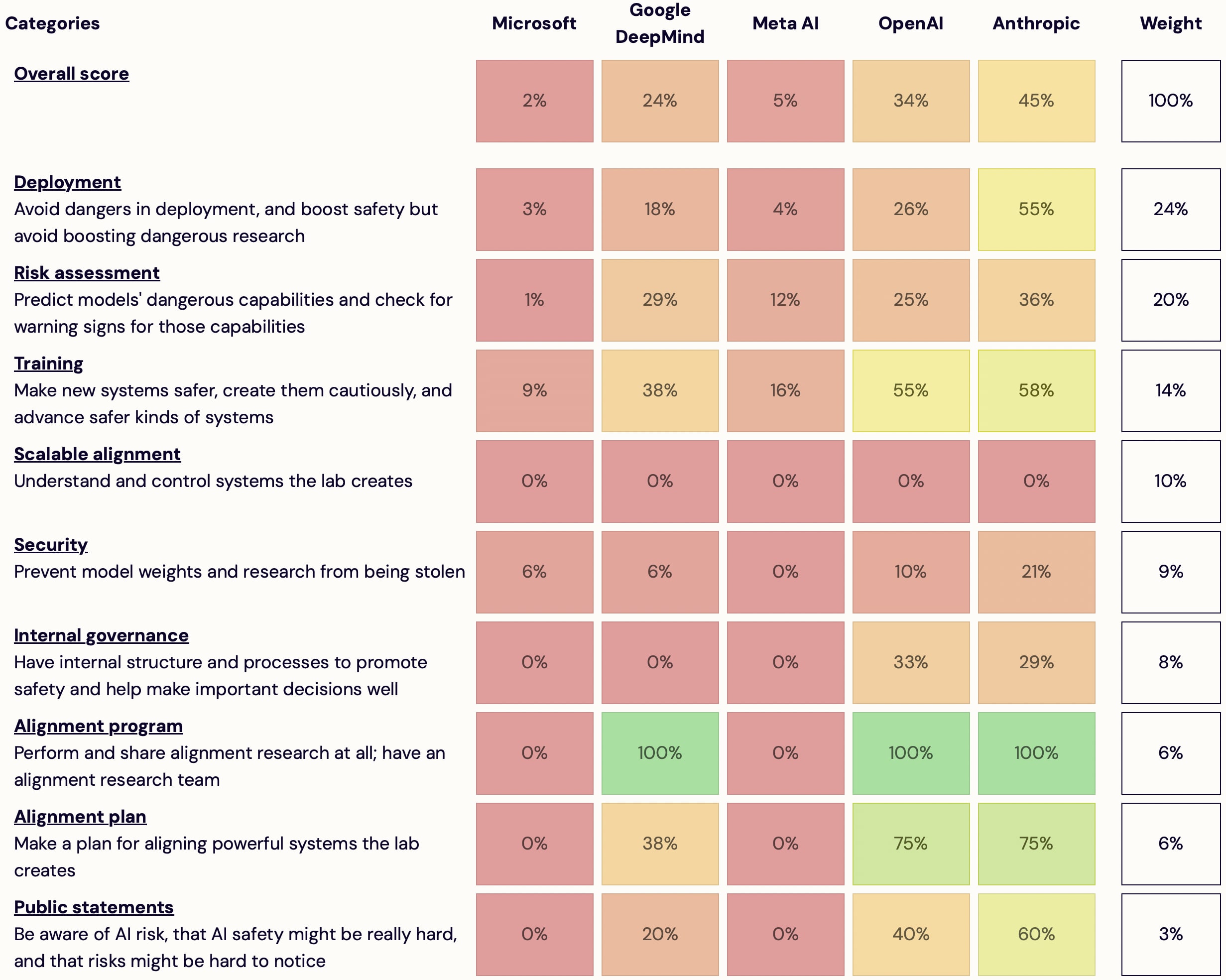

I'm launching AI Lab Watch. I collected actions for frontier AI labs to improve AI safety, then evaluated some frontier labs accordingly.

It's a collection of information on what labs should do and what labs are doing. It also has some adjacent resources, including a list...

I agree such commitments are worth noticing, and I hope OpenAI and other labs make such commitments in the future. But this commitment is not huge: it's just "20% of the compute we've secured to date" (in July 2023), to be used "over the next four years." It's unclear how much compute this is, and with compute use increasing exponentially it may be quite small by 2027. Possibly you have private information, but based on public information, the minimum consistent with the commitment is quite little.

It would be great if OpenAI or others committed 20% of their compute to safety! Even 5% would be nice.
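The point about exponential growth can be made concrete with a small calculation. This is a hedged sketch under hypothetical assumptions, not a claim about OpenAI's actual compute: it treats the commitment as a fixed pool equal to 20% of the compute available in July 2023, spent evenly over four years, while the lab's total compute grows by an assumed constant factor each year. The growth factor of 3 is purely illustrative.

```python
def committed_share_by_year(commit_fraction: float, years: int, growth: float) -> list[float]:
    """Share of each year's total compute covered by a fixed commitment.

    Hypothetical model: the committed pool equals commit_fraction of the starting
    compute, spent evenly over `years`, while total compute grows by `growth` per year.
    """
    per_year = commit_fraction / years  # committed compute per year, in units of starting compute
    return [per_year / growth**t for t in range(years)]  # year t's total compute is growth**t


# Illustrative inputs: 20% over four years, with an assumed 3x annual growth in compute.
for year, share in enumerate(committed_share_by_year(0.20, 4, 3.0), start=1):
    print(f"year {year}: {share:.2%} of that year's compute")
```

Under these assumptions the commitment covers 5% of compute in the first year but well under 1% by the fourth, which is the sense in which a fixed 2023-denominated pool can become quite small as total compute grows.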

Does anyone know the latest estimate of what percentage of US/Western charity goes to poverty broadly and international poverty specifically?

A 2013 Dylan Matthews piece in WaPo cites a 2007 estimate. Googling isn't helping much.

I may be writing an opinion piece for...

Perhaps it’s in the Giving USA Annual Survey? You need a subscription to access it though.

I am writing this post in response to a question that was raised by Nick a few days ago,

1) as to whether the white sorghum and cassava that our project aims to process will be used in making alcohol, 2) whether the increase in production of white sorghum and cassava...

Hi Roddy, thanks very much too for this message. Here are my answers to these questions:

1) Currently, our farmers are within a radius of about 40km from the UCF, and because the volume of their sorghum is still a bit small, it's the UCF team itself that gathers all these farmers' sorghum using a motorbike and brings it to the UCF, from where we take it to Kampala.

We also have a dump truck at the UCF, and whenever the load we are going to carry is a bit big, we use this truck instead. Right now, all these costs (fuel, transport etc) are covered by t...

GPT-5 training is probably starting around now. It seems very unlikely that GPT-5 will cause the end of the world. But it’s hard to be sure. I would guess that GPT-5 is more likely to kill me than an asteroid, a supervolcano, a plane crash or a brain tumor. We can predict...

I know of one that is less widely reported; I'm not sure if it's counted among the two less widely reported ones that Joseph Miller knows of, or if it's separate.

This announcement was written by Toby Tremlett, but don’t worry, I won’t answer the questions for Lewis.

Lewis Bollard, Program Director of Farm Animal Welfare at Open Philanthropy, will be holding an AMA on Wednesday 8th of May. Put all your questions for him on this thread...

What percent of farmed animal welfare advocacy does Open Phil fund? How valuable would it be for the cause area to have additional major funders?

Going to quickly share that I'm going to take a step back from commenting on the Forum for the foreseeable future. There are a lot of ideas in my head that I want to work into top-level posts to hopefully spur insightful and useful conversation amongst the community. While I'll still be reading and engaging, I do have a limited amount of time I want to spend on the Forum, and I think it'd be better for me to move that focus to posts rather than comments for a bit.[1]

If you do want to get in touch about anything, please reach out and I'll try my very best to respond. Also, if you're going to be in London for EA Global, then I'll be around and very happy to catch up :)

[1] Though if it's a highly engaged/important discussion and there's an important viewpoint that I think is missing, I may weigh in.