David_Moss

Bio

I am the Principal Research Director at Rethink Priorities. I lead our Surveys and Data Analysis department and our Worldview Investigation Team.

The Worldview Investigation Team previously completed the Moral Weight Project and CURVE Sequence / Cross-Cause Model. We're currently working on tools to help EAs decide how they should allocate resources within portfolios of different causes, and how to use a moral parliament approach to allocate resources given metanormative uncertainty.

The Surveys and Data Analysis Team primarily works on private commissions for core EA movement and longtermist orgs, where we provide:

- Private polling to assess public attitudes

- Message testing / framing experiments, testing online ads

- Expert surveys

- Private data analyses and survey / analysis consultation

- Impact assessments of orgs/programs

Formerly, I also managed our Wild Animal Welfare department and I've previously worked for Charity Science, and been a trustee at Charity Entrepreneurship and EA London.

My academic interests are in moral psychology and methodology at the intersection of psychology and philosophy.

How I can help others

Survey methodology and data analysis.

Posts 41

Comments 506

Thanks Cameron!

It is not particularly surprising to me that we are asking people meaningfully different questions and getting meaningfully different results...

the main question of whether these are reasonable apples-to-apples comparisons.)

We agree that our surveys asked different questions. I'm mostly not interested in assessing which of our questions are the most 'apples-to-apples comparisons', since I'm not interested in critiquing your results per se. Rather, I'm interested in what we should conclude about the object-level questions given our respective results (e.g. is the engaged EA community lukewarm on longtermism, with a preference for global poverty and animal welfare, or is the community divided on these views, with the most engaged more strongly prioritising longtermism?).

in my earlier response, I had nothing to go on besides the 2020 result you have already published, which indicated that the plots you included in your first comment were drawn from a far wider sample of EA-affiliated people than what we were probing in our survey, which I still believe is true. Correct me if I'm wrong!)

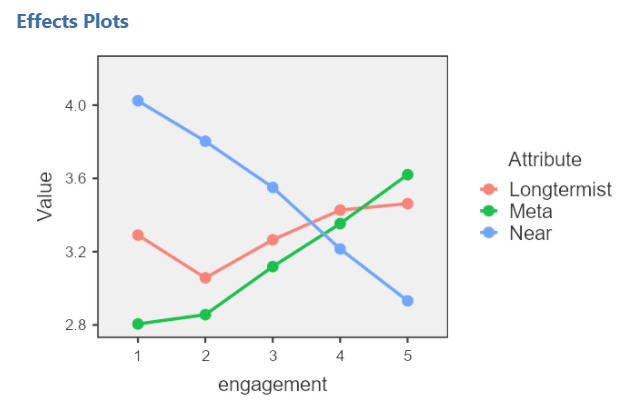

I would just note that in my original response I showed how the results varied across the full range of engagement levels, which I think offers more insight into how the community's views differ across groups than just looking at one sub-group.

One further question/hunch I have in this regard is that the way we are quantifying high vs. low engagement is almost certainly different (is your sample self-reporting this/do you give them any quantitative criteria for reporting this?), which adds an additional layer of distance between these results.

The engagement scale is based on self-identification, but the highest engagement level is characterised with reference to "helping to lead an EA group or working at an EA-aligned organization". You can read more about our different measures of engagement and how they cohere here. Crucially, I also presented results specifically for EA org employees and people doing EA work so concerns about the engagement scale specifically do not seem relevant.

The most recent data you have that you mention briefly at the end of your response seems far more relevant in my view. It seems like both of the key results you are taking issue with here (cause prioritization and lukewarm longtermism views) you found yourself to some degree in these results

I respond to these two points below:

Your result 1:

The responses within the Cause Prioritization category which did not explicitly refer to too much focus on AI, were focused on insufficient attention being paid to other causes, primarily animals and GHD.

We specifically find the exact same two cause areas, animals and GHD, as being considered the most promising to currently pursue.

I don't think this tells us much about which causes people think are most promising overall. The result you're referring to is looking only at the 22% of respondents who mentioned Cause prioritization as a reason for dissatisfaction with EA and were not one of the 16% of people who mentioned excessive focus on x-risk as a cause for dissatisfaction (38 respondents, of which 8 mentioned animals, 4 mentioned Global poverty and 7 mentioned another cause; the rest mentioned something other than a specific cause area).
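To make the size of this subgroup concrete, here is a minimal Python sketch of the shares implied by the counts above. It assumes each respondent falls into exactly one bucket (as "the rest" suggests); the variable names and the "No specific cause" label are illustrative, not taken from the survey.

```python
# Counts quoted above for the 38 respondents who cited cause prioritization
# but did not cite an excessive focus on x-risk.
subgroup_size = 38
mentions = {"Animal Welfare": 8, "Global Poverty": 4, "Another cause": 7}

# Assumption: buckets are mutually exclusive, so "the rest" is the remainder.
mentions["No specific cause"] = subgroup_size - sum(mentions.values())

# Share of the subgroup in each bucket, as a percentage rounded to one decimal.
shares = {cause: round(100 * n / subgroup_size, 1) for cause, n in mentions.items()}

for cause, pct in shares.items():
    print(f"{cause}: {mentions[cause]} of {subgroup_size} ({pct}%)")
```

On these assumptions, half of this already small subgroup did not name a specific cause at all, which is why it says little about overall cause rankings.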

Our footnote mentioning this was never intended to indicate which causes are overall judged most promising, just to clarify how our 'Cause prioritization' and 'Excessive focus on AI' categories differed. (As it happens, I do think our results suggest Global Poverty and Animal Welfare are the highest rated non-x-risk cause areas, but they're not prioritised more highly than all x-risk causes).

Your result 2 (listed as the first reason for dissatisfaction with the EA community):

Focus on AI risks/x-risks/longtermism: Mainly a subset of the cause prioritization category, consisting of specific references to an overemphasis on AI risk and existential risks as a cause area, as well as longtermist thinking in the EA community.

Our results show that, among people dissatisfied with EA, Cause prioritisation (22%) and Focus on AI risks/x-risks/longtermism (16%) are among the most commonly mentioned reasons.[1] I should also emphasise that 'Focus on AI risks/x-risks/longtermism' is not the first reason for dissatisfaction with the EA community, it's the fifth.

I think both our sets of results show that (at least) a significant minority believe that the community has veered too much in the direction of AI/x-risk/longtermism. But I don't think that either set of results shows that the community overall is lukewarm on longtermism. I think the situation is better characterised as division between people who are more supportive of longtermist causes[2] (whose support has been growing), and those who are more supportive of neartermist causes.

I don't think there is any strong reason to ignore or otherwise dismiss out of hand what we've found here—we simply sourced a large and diverse sample of EAs, asked them fairly basic questions about their views on EA-related topics, and reported the results for the community to digest and discuss.)

I certainly agree that my comments here have only addressed one specific set of results to do with cause prioritisation, and that people should assess the other results on their own merits!

[1] And, to be clear, these categories are overlapping, so the totals can't be combined.

[2] As we have emphasised elsewhere, we're using "longtermist" and "neartermist" as a shorthand, and don't think that the division is necessarily explained by longtermism per se (e.g. the groupings might be explained by epistemic attitudes towards different kinds of evidence).

I think your sample is significantly broader than ours: we were looking specifically for people actively involved (we defined as >5h/week) in a specific EA cause area...

In other words, I think our results do not support the claim that

[it] isn't that EAs as a whole are lukewarm about longtermism: it's that highly engaged EAs prioritise longtermist causes and less highly engaged more strongly prioritise neartermist causes.

given that our sample is almost entirely composed of highly engaged EAs.

I don't think this can explain the difference, because our sample contains a larger number of highly engaged / actively involved EAs, and when we examine results for these groups (as I do above and below), they show the pattern I describe.

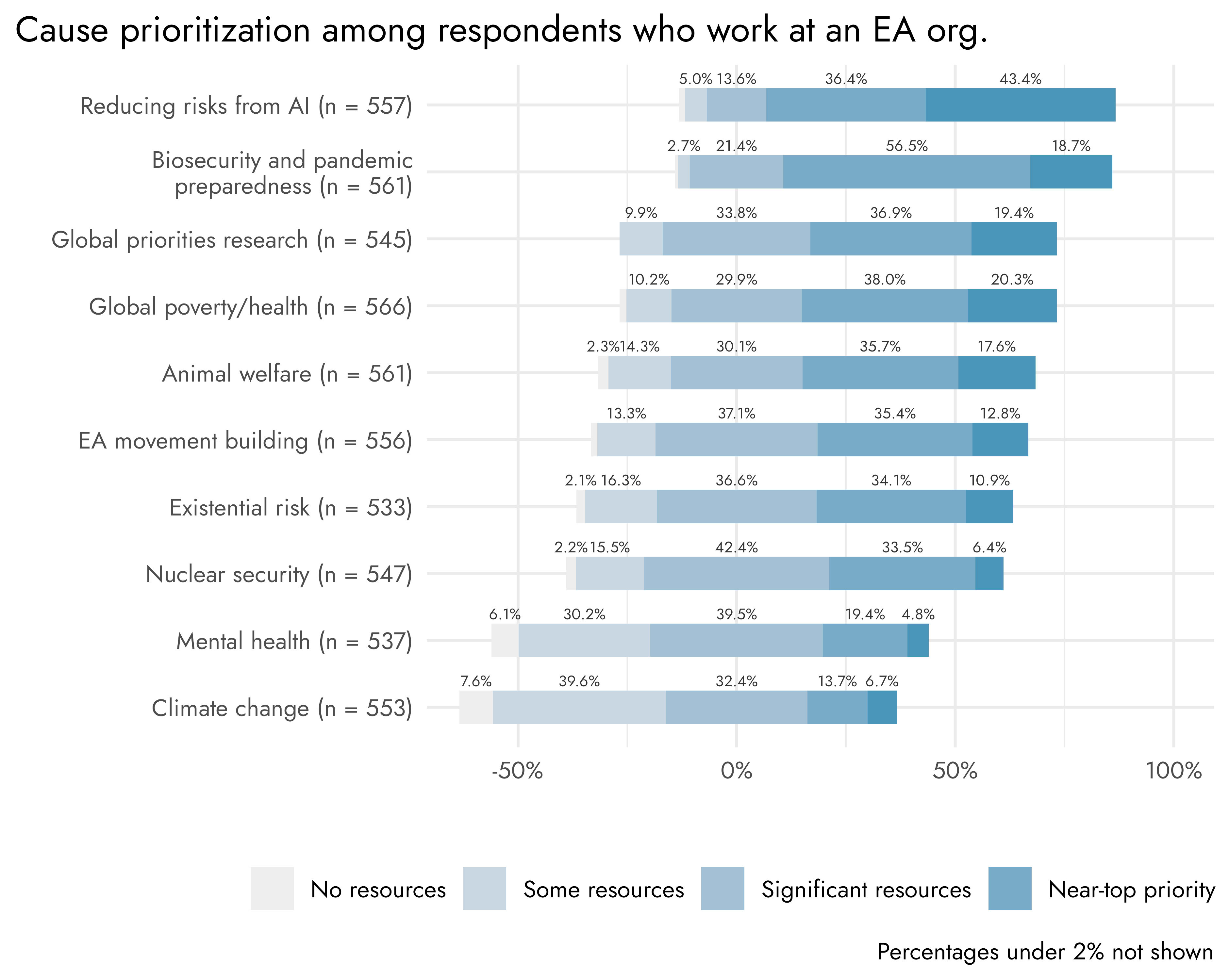

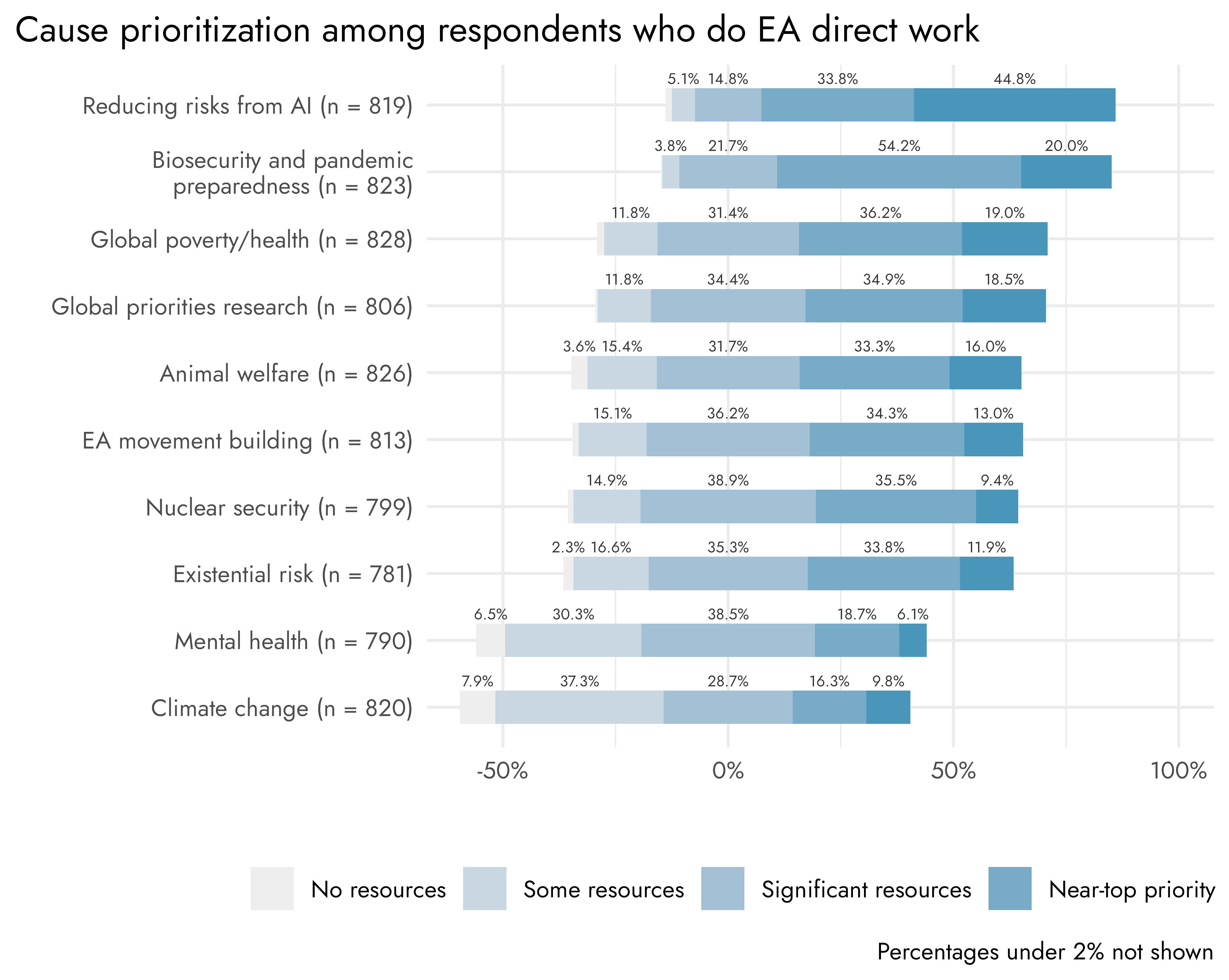

These are the results from people who currently work for an EA org or are currently doing direct work (for which we have >500 and 800 respondents respectively). Note that the EA Survey offers a wide variety of ways we can distinguish respondents based on their involvement, but I don't think any of them change the pattern I'm describing.

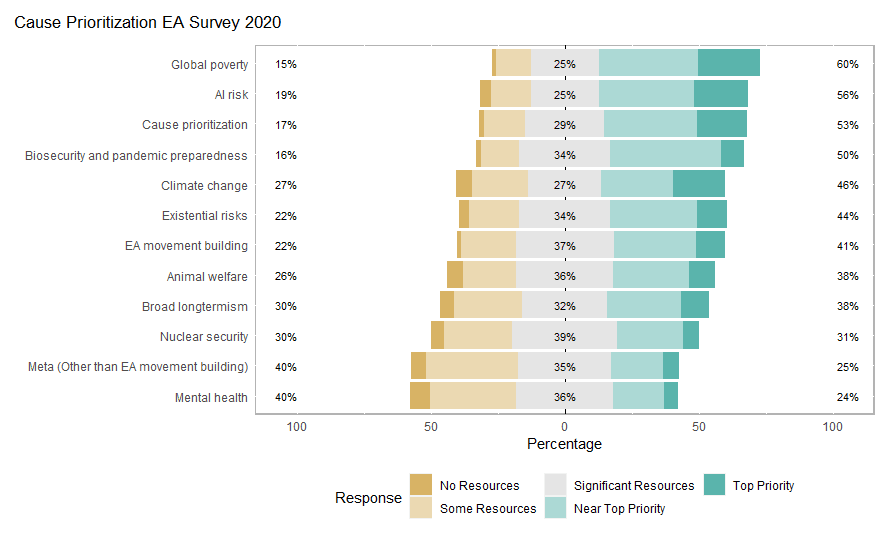

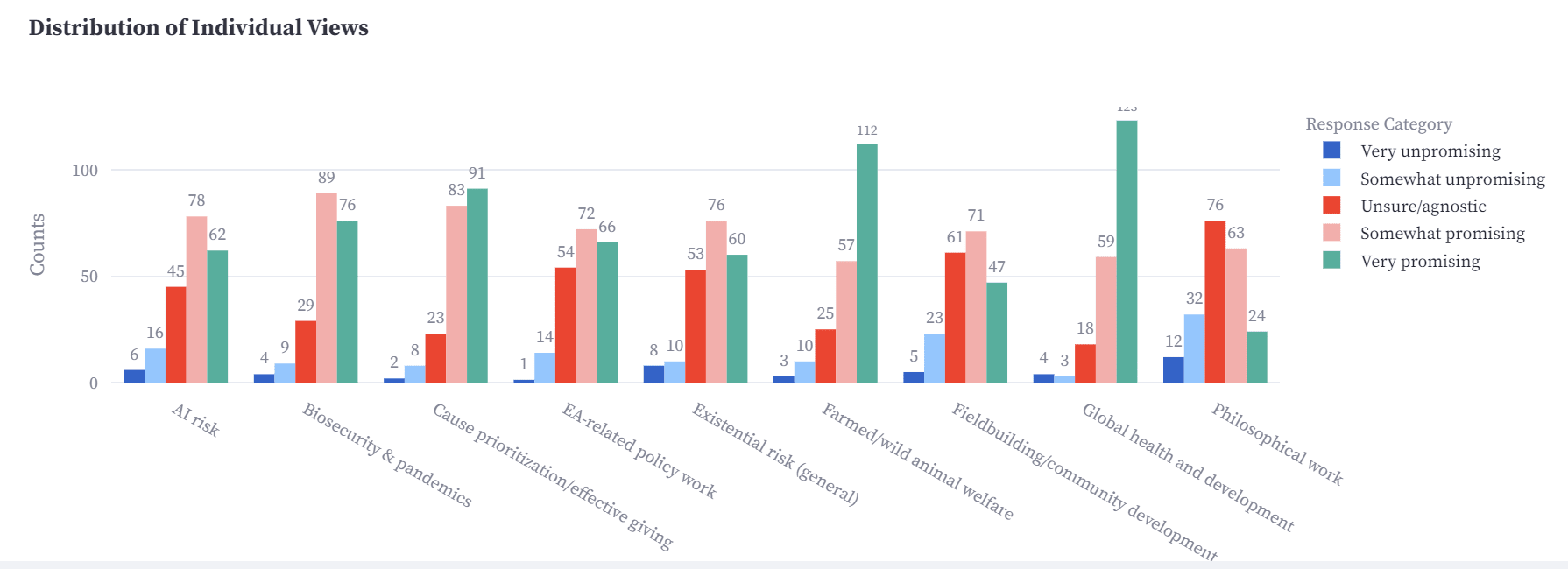

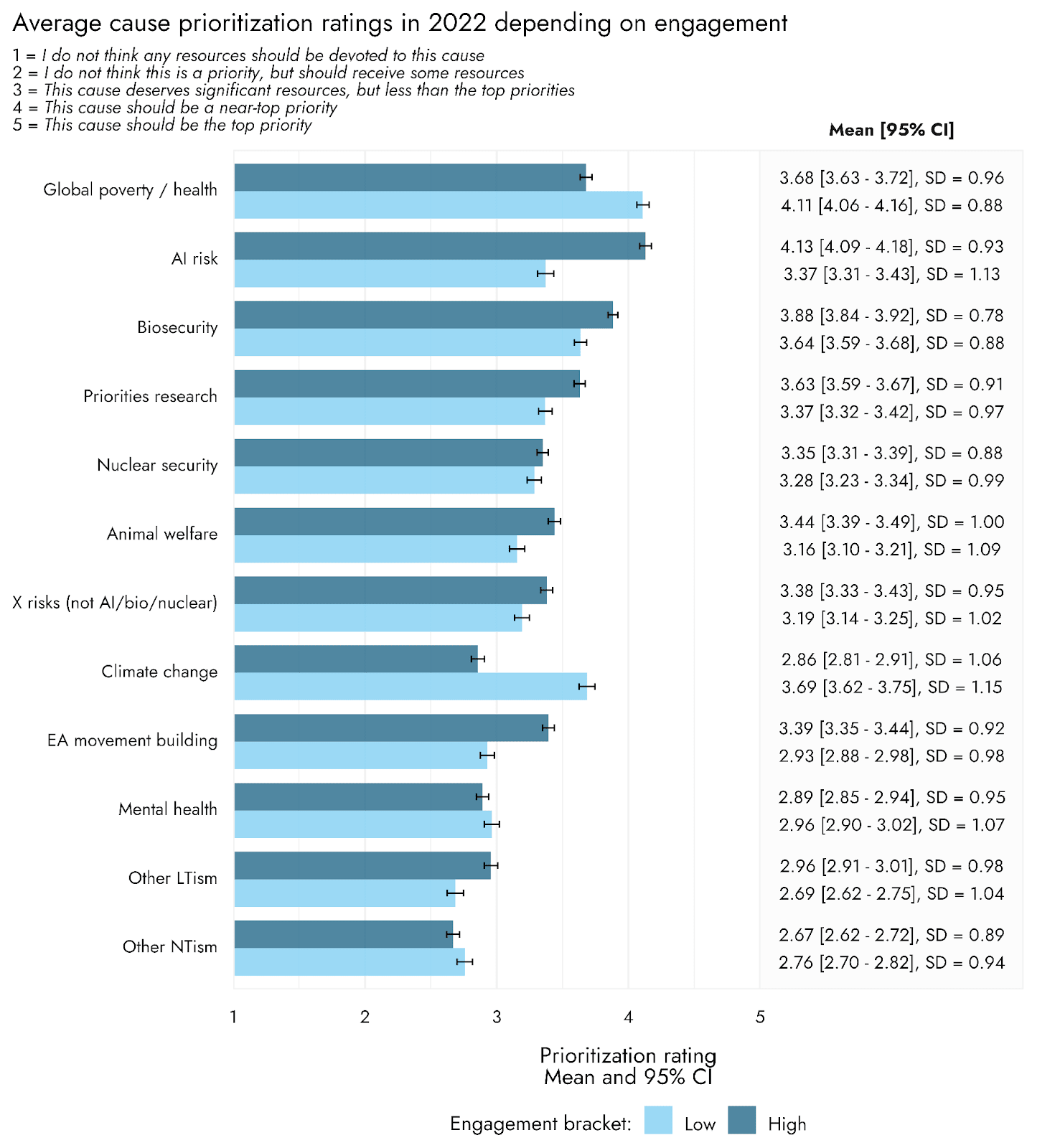

Both show that AI risk and Biosecurity are the most strongly prioritized causes among these groups. Global Poverty and Animal Welfare retain respectable levels of support, and it's important not to neglect that, but are less strongly prioritised among these groups.

To assess the claim of whether there's a divergence between more and less highly engaged EAs, however, we need to look at the difference between groups, not just a single group of somewhat actively involved EAs. Doing this with 2022 data, we see the expected pattern of AI Risk and Biosecurity being more strongly prioritised by highly engaged EAs and Global Poverty less so. Animal Welfare notably achieves higher support among the more highly engaged, but still lower than the longtermist causes.[1]

Note that we are also measuring meaningfully different things related to cause area prioritization between the 2020 analysis and this one: we simply asked our sample how promising they found each cause area, while you seemed to ask about resourced/funded each cause area should be... respondents could have validly responded 'very promising' to all of the cause areas we listed

I agree that this could explain some of the differences in results, though I think that how people would prioritize allocation of resources is more relevant for assessing prioritization. I think that promisingness may be hard to interpret both given that, as you say, people could potentially rate everything highly promising, and also because "promising" could connote an early or yet to be developed venture (one might be more inclined to describe a less developed cause area as "promising", than one which has already reached its full size, even if you think the promising cause area should be prioritized less than the fully developed cause areas). But, of course, your mileage may vary, and you might be interested in your measure for reasons other than assessing cause prioritization.

Finally, it is worth clarifying that our characterization of our sample of EAs seemingly having lukewarm views about longtermism is motivated mainly by these two results:

["I have a positive view of effective altruism's overall shift towards longtermist causes" and "I think longtermist causes should be the primary focus in effective altruism"]

Thanks, I think these provide useful new data!

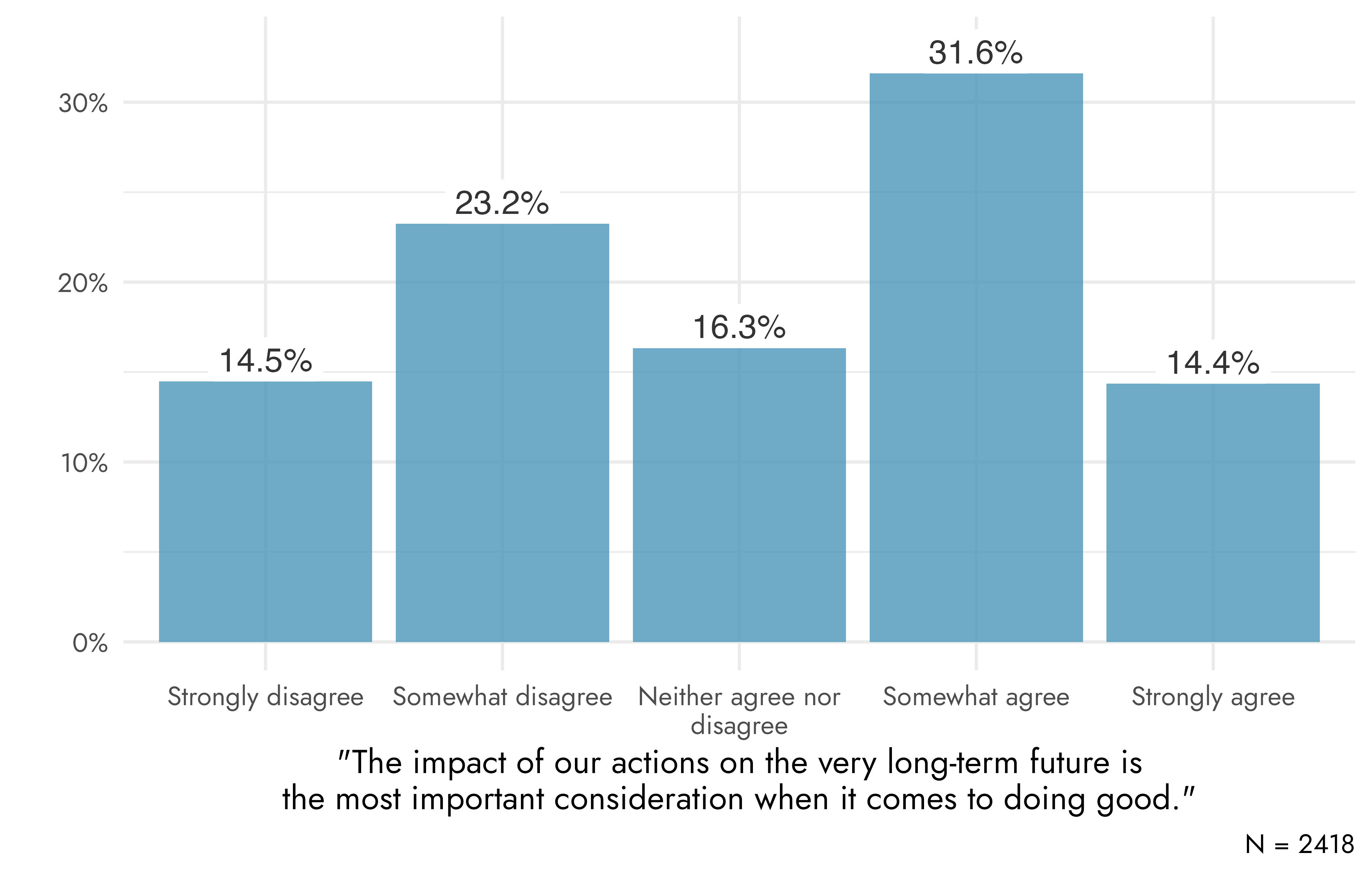

It's worth noting that we have our own, similar, measure concerning agreement with an explicit statement of longtermism: "The impact of our actions on the very long-term future is the most important consideration when it comes to doing good."

As such, I would distinguish 3 things:

- What do people think of 'longtermism'? [captured by our explicit statement]

- What do people think about allocations to / prioritisation of longtermist causes? [captured by people's actual cause prioritization]

- What do people think of EA's shift more towards longtermist causes? [captured by your 'shift' question]

Abstract support for (quite strong) longtermism

Looking at people's responses to the above (rather strong) statement of abstract longtermism we see that responses lean more towards agreement than disagreement. Given the bimodal distribution, I would also say that this reflects less a community that is collectively lukewarm on longtermism, and more a community containing one group that tends to agree with it and a group which tends to disagree with it.

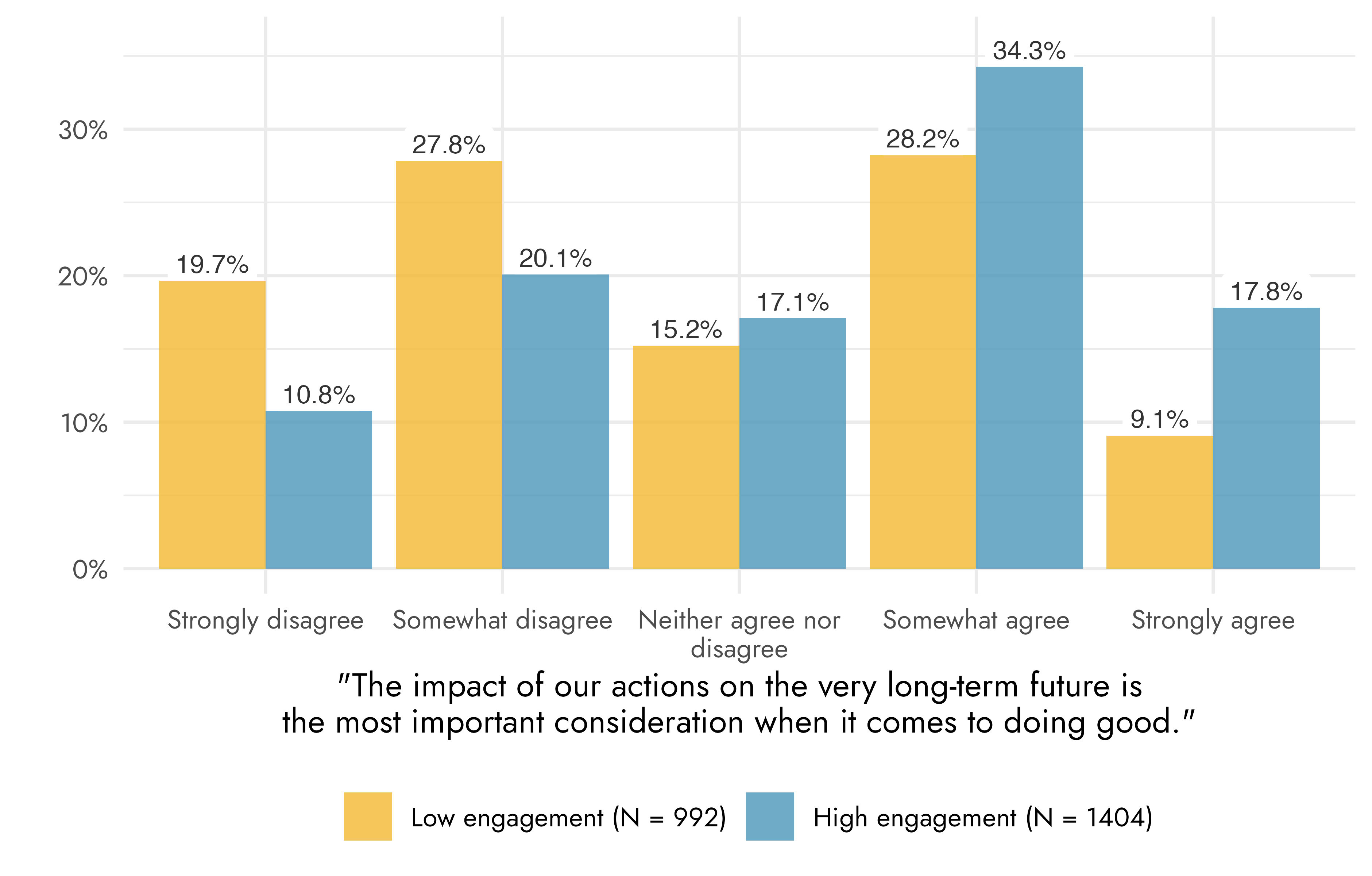

Moreover, when we examine these results split by low/high engagement we see clear divergence, as in the results above.

Concrete cause prioritization

Moreover, as noted, the claim that it is "the most important consideration" is quite strong. People may be clearly longtermist despite not endorsing this statement. Looking at people's concrete cause prioritization, as I do above, we see that two longtermist causes (AI Risk and Biosecurity) are among the most highly prioritized causes across the community as a whole and they are even more strongly prioritised when examining more highly engaged EAs. I think this clearly conflicts with a view that "EAs have lukewarm views about longtermism... EAs (actively involved across 10+ cause areas) generally seem to think that AI risk and x-risk are less promising cause areas than ones like global health and development and animal welfare" and rules out an explanation based on your sample being more highly engaged.

Shift towards longtermism

Lastly, we can consider attitudes towards the "shift" towards longtermism, where your results show no strong leaning one way or the other, with a plurality being Neutral/Agnostic. It's not clear to me that this represents the community being lukewarm on longtermism, rather than, whatever their own views about cause prioritization, people expressing agnosticism about the community's shift (people might think "I support longtermist causes, but whether the community should is up to the community" or some such). One other datapoint I would point to regarding the community's attitudes towards the shift, however, is our own recent data showing that objection to the community's cause prioritization and perception of an excessive focus on AI / x-risk causes are among the most commonly cited reasons for dissatisfaction with the EA community. Thus, I think this reflects a cause for dissatisfaction for a significant portion of the community, even though large portions of the community clearly support strong prioritization of EA causes. I think more data about the community as a whole's views about whether longtermist causes should be prioritized more or less strongly by community infrastructure would be useful and is something we'll consider adding to future surveys.

[1] Animal Welfare does not perform as a clear 'neartermist' cause in our data, when we examine the relationships between causes. It's about as strongly associated with Biosecurity as Global Poverty, for example.

Many people mentioned comms as the biggest issue facing both AI safety and EA. EA has been losing its battle for messaging, and AI safety is in danger of losing its too (with both a new powerful anti-regulation tech lobby and the more left-wing AI ethics scene branding it as sci-fi, doomer, cultish and in bed with labs).

My sense is that more could be done here (in the form of surveys, experiments and focus groups / interviews) pretty easily and cheaply relative to the size of the field. I'm aware of some work that's been done in this area, but it seems like there is low-hanging fruit, such as research like this, which could easily be replicated in quantitative form (rather than a small-scale qualitative form), to assess which objections are most concerning for different groups. That said, I think both qualitative research (like focus groups and interviews) and quantitative work (e.g. more systematic experiments to assess how people respond to different messages and what explains these responses) are lacking.

Thanks for putting together these results!

EAs have lukewarm views about longtermism

- Result: EAs (actively involved across 10+ cause areas) generally seem to think that AI risk and x-risk are less promising cause areas than ones like global health and development and animal welfare

This seems over-stated. Our own results on cause prioritisation,[1] which are based on much larger sample sizes drawn from a wider sample of the EA community, find that while Global Poverty tends to be marginally ahead of AI Risk, considering the sample as a whole, Animal Welfare (and all other neartermist causes) are significantly behind both.

But this near-parity of Global Poverty and AI risk considering the sample as a whole obscures the underlying dynamic in the community, which isn't that EAs as a whole are lukewarm about longtermism: it's that highly engaged EAs prioritise longtermist causes and less highly engaged more strongly prioritise neartermist causes.

Overall, I find your patterns of results for people's own cause prioritisation and their predicted cause prioritisation quite surprising. For example, we find ratings of Animal Welfare, and most causes, to be quite normally distributed, but yours are extremely skewed (almost twice as many give Animal Welfare the top rating as give it the next highest rating and more than twice as many give it the second to highest rating as the next highest).

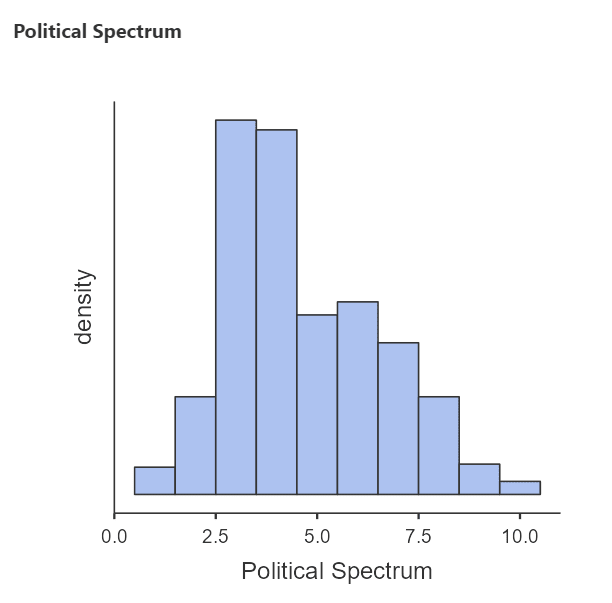

So I am actually perhaps less familiar with the distribution of political beliefs in EAs specifically and I'm thinking about rationalist-adjacent communities more at large

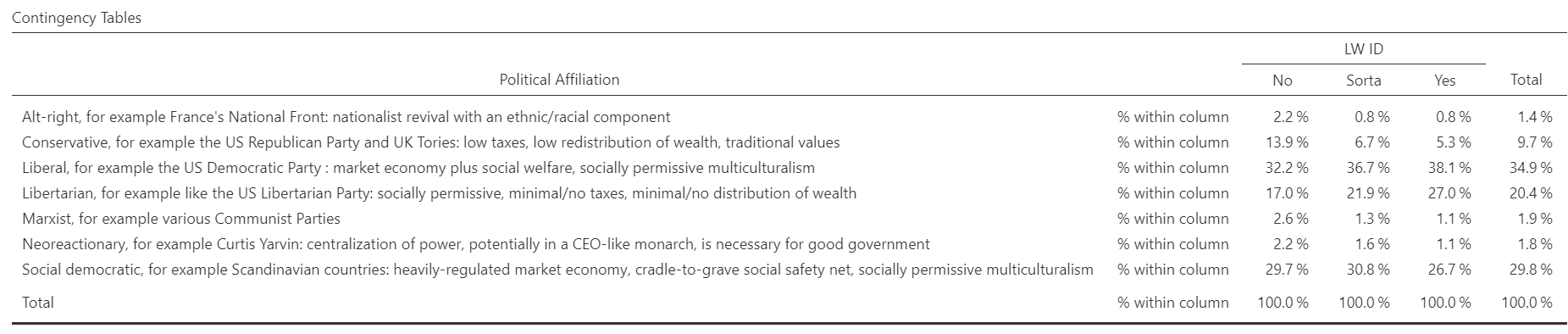

The results of the ACX survey just came out and allow us to examine political affiliation and alignment both across the whole sample and based on LW / EA ID.

First, the overall sample.

This is a left-right scale: nearly 70% were on the left side of the spectrum.

Political affiliation: mostly liberal, social democratic and libertarian (in that order).

Now looking at LW ID to assess rationalist communities:

Quite similar, but LW ID'd people lean a bit more to the left than the general readership.

In terms of political affiliation, LWers are substantially less conservative, less neo-reactionary, less alt-right and much more both liberal and libertarian.

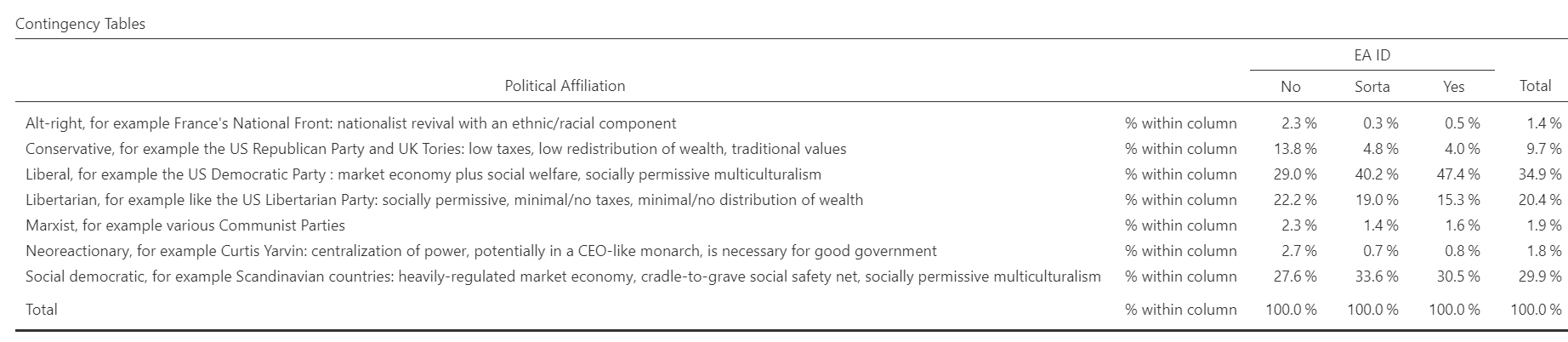

Now looking at EA ID (though I would not expect EA ID'd ACX respondents to reflect the EA community as a whole: they should be expected to be more ACX and rationalist leaning):

EAs are more left-leaning: 16.4% are on the right side of the spectrum, though 9.7% are in the category immediately right of centre and 13.5% are in one of the two most centre-right categories; only 2.9% are more right-leaning than that. (That's still more right-leaning than the general EA Survey sample, which I would expect to be less skewed, and which found 2.2% center-right and 0.7% right.)

In terms of political affiliation, EAs are overwhelmingly liberal (almost 50% of the sample) followed by social democratic (another 30.5%), with 15.3% libertarians. There are 4% Conservatives and <1% for each of alt-right or neo-reactionary (for context, 3 and 5 respondents respectively), so definitely into lizardman territory.

Thanks Vasco!

These are numbers from the most recent full EA Survey (end of 2022), but they're not limited only to people who joined EA in the most recent year. Slicing it by individual cohorts would reduce the sample size a lot.

My guess is that it would also increase the support for neartermist causes among all recruits (respondents tend to start out neartermist and become more longtermist over time).

That said, if we do look at people who joined EA in 2021 or later (the last 3 years seems decently recent to me, I don't have the sense that 80K's recruitment has changed so much in that time frame, n=1059), we see:

- Global Poverty
  - 80,000 Hours
    - 35.8% rated Global Poverty 4/5
    - 34.2% rated Global Poverty 5/5
  - All respondents
    - 40.2% rated Global Poverty 4/5
    - 33.2% rated Global Poverty 5/5
- Animal Welfare
  - 80,000 Hours
    - 26.8% rated Animal Welfare 4/5
    - 8.9% rated Animal Welfare 5/5
  - All respondents
    - 29.7% rated Animal Welfare 4/5
    - 13.7% rated Animal Welfare 5/5

Thanks Vasco.

We actually have a post on this forthcoming, but I can give you the figures for 80,000 Hours specifically now.

- Global Poverty:
  - 80,000 Hours:
    - 37.3% rated Global Poverty 4/5
    - 28.6% rated Global Poverty 5/5
  - All respondents:
    - 39.3% rated Global Poverty 4/5
    - 28.7% rated Global Poverty 5/5
  - So the difference is minimal, but this also neglects the fact that the scale of 80K's recruitment swamps any differences in % supporting different causes. Since 80K recruits so many, it is still the second highest source of people who rate Global Poverty most highly (12.5% of such people) after only personal contacts.
- Animal Welfare:
  - 80,000 Hours:
    - 26.0% rated Animal Welfare 4/5
    - 9.6% rated Animal Welfare 5/5
  - All respondents:
    - 30.1% rated Animal Welfare 4/5
    - 11.8% rated Animal Welfare 5/5
  - Here the difference is slightly bigger, though 80,000 Hours remains among the top sources of recruits rating Animal Welfare highest (specifically, after personal contact, the top sources are 'Other' (11.2%), 'Book, article or blog' (10.7%), 80,000 Hours (9%)).
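For readers who want to compare the groups at a glance, here is a minimal Python sketch that sums the 4/5 and 5/5 shares quoted above into a single figure per group and reports the gap in percentage points. The combined measure and the function names are illustrative assumptions, not something reported in the EA Survey itself.

```python
# Percentages quoted above: share of each group giving a 4/5 or 5/5 rating.
ratings = {
    "Global Poverty": {
        "80,000 Hours": {"4/5": 37.3, "5/5": 28.6},
        "All respondents": {"4/5": 39.3, "5/5": 28.7},
    },
    "Animal Welfare": {
        "80,000 Hours": {"4/5": 26.0, "5/5": 9.6},
        "All respondents": {"4/5": 30.1, "5/5": 11.8},
    },
}

def top_two_share(group):
    """Combined share (in %) rating the cause 4/5 or 5/5."""
    return round(group["4/5"] + group["5/5"], 1)

def gap(cause):
    """Percentage-point gap: all respondents minus 80,000 Hours recruits."""
    groups = ratings[cause]
    return round(
        top_two_share(groups["All respondents"]) - top_two_share(groups["80,000 Hours"]), 1
    )

for cause in ratings:
    print(cause, gap(cause))
```

Note that these are within-group percentages only; they say nothing about the absolute number of recruits from each source, which is the point about scale made above.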

To further elaborate on what I think might be a crux here:

I think that where the job requirements are clearly specified, predictive proxies like having a PhD may have no additional predictive power above what is transparent in the job requirements and transparent to the applicants themselves in terms of whether they have them or not.

For example:

- Knowing programming language X may be necessary for a job and may be most common among people who studied computer science. But if 'knowing X' is listed in the job ad and the applicant knows they know X, then knowing they have a computer science degree and knowing the % successful applicants with such a degree adds no additional predictive power.

- Having a degree in Y may be necessary for a job and because more men than women have degrees in Y, being a man may thereby be predictive of success. But if you are a woman and know you have a degree in Y, then you don't gain any additional predictive power from knowing the % successful female applicants.

My supposition is that possession of a PhD is mostly just a case like the above for many EA roles (though I'm sure it varies by org, role and proxy). But I imagine those who want the information about PhDs to be revealed think they are likely to be proxies for latent qualities of the applicant, which the applicants themselves don't know and which aren't transparent in the job ad.
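The "no additional predictive power" point can be illustrated with a toy example. The Python sketch below uses entirely hypothetical applicant counts in which hiring depends only on knowing X: having the degree predicts success overall, but once you condition on knowing X, the degree adds nothing. The numbers and the `success_rate` helper are made up for illustration.

```python
from fractions import Fraction

# Hypothetical counts: (has_degree, knows_x) -> (applicants, hires).
# By construction, hiring depends only on knowing X (1 in 5 are hired).
applicants = {
    (True, True): (80, 16),
    (True, False): (20, 0),
    (False, True): (20, 4),
    (False, False): (80, 0),
}

def success_rate(has_degree=None, knows_x=None):
    """Hire rate among applicants matching the given (optional) conditions."""
    total = hired = 0
    for (degree, skill), (n, k) in applicants.items():
        if has_degree is not None and degree != has_degree:
            continue
        if knows_x is not None and skill != knows_x:
            continue
        total += n
        hired += k
    return Fraction(hired, total)

# Marginally, the degree looks predictive of success.
print(success_rate(has_degree=True), success_rate(has_degree=False))

# Conditional on knowing X, the degree makes no difference.
print(success_rate(has_degree=True, knows_x=True),
      success_rate(has_degree=False, knows_x=True))
```

If a proxy like a PhD instead tracked some latent quality that applicants cannot observe in themselves, the conditional rates would differ, and publishing the proxy's success rate would be informative.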

I think it is very clear that 80,000 hours have had a tremendous influence on the EA community... so references to things like the EA survey are not very relevant. But influence is not impact... 80,000 hours prioritises AI well above other cause areas. As a result they commonly push people off paths which are high-impact per other worldviews.

Many of the things the EA Survey shows 80,000 Hours doing (e.g. introducing people to EA in the first place, helping people get more involved with EA, making people more likely to remain engaged with EA, introducing people to ideas and contacts that they think are important for their impact, helping people (by their own lights) have more impact), are things which supporters of a wide variety of worldviews and cause areas could view as valuable. Our data suggests that it is not only people who prioritise longtermist causes who report these benefits from 80,000 Hours.

Thanks again for the detailed reply Cameron!

I don't think our disagreement is to do with the word "lukewarm". I'd be happy for the word "lukewarm" to be replaced with "normal but slightly negative skew" or "roughly neutral, but slightly negative" in our disagreement. I'll explain where I think the disagreement is below.

Here's the core statement which I disagreed with:

The first point of disagreement concerned this claim:

If we take "promising" to mean anything like prioritise / support / believe should receive a larger amount of resources / believe is more impactful etc., then I think this is a straightforward substantive disagreement: I think whatever way we slice 'active involvement', we'll find more actively involved EAs prioritise X-risk more.

As we discussed above, it's possible that "promising" means something else. But I personally do not have a good sense of in what way actively involved EAs think AI and x-risk are less promising than GHD and animal welfare.[1]

Concerning this claim, I think we need to distinguish (as I did above), between:

Regarding the first of these questions, your second result shows slight disagreement with the claim "I think longtermist causes should be the primary focus in effective altruism". I agree that a reasonable interpretation of this result, taken in isolation, is that the actively involved EA community is slightly negative regarding longtermism. But taking into account other data, like our cause prioritisation data which shows actively engaged EAs strongly prioritise x-risk causes, or the result suggesting slight agreement with an abstract statement of longtermism, I'm more sceptical. I wonder if what explains the difference is people's response to the notion of these causes being the "primary focus", rather than their attitudes towards longtermist causes per se.[2] If so, these responses need not indicate that the actively involved community leans slightly negative towards longtermism.

In any case, this question largely seems to me to reduce to the question of what people's actual cause prioritisation is + what their beliefs are about abstract longtermism, discussed above.

Regarding the question of EA's attitudes towards the "overall shift towards longtermist causes", I would also say that, taken in isolation, it's reasonable to interpret your result as showing that actively involved EAs lean slightly negative towards EA's shift towards longtermism. Again, our cause prioritisation results suggesting strong and increasing prioritisation of longtermist causes by more engaged EAs across multiple surveys give me pause. But the main point I'll make (which suggests a potential conciliatory way to reconcile these results) is to observe that attitudes towards the "overall shift towards longtermist causes" may not reflect attitudes towards longtermism per se. Perhaps people are Neutral/Agnostic regarding the "overall shift", despite personally prioritising longtermist causes, because they are Agnostic about what people in the rest of the community should do. Or perhaps people think that the shift overall has been mishandled (whatever their cause prioritisation). If so, the results may be interesting regarding EAs' attitudes towards this "shift", but not regarding their overall attitudes towards longtermism and longtermist causes.

Thanks again for your work producing these results and responding to these comments!

[1] As I noted, I could imagine "promising" connoting something like new, young, scrappy cause areas (such that an area could be more "promising" even if people support it less than a larger established cause area). I could sort of see this fitting Animal Welfare (though it's not really a new cause area), but it's hard for me to see this applying to Global Health/Global Poverty which is a very old, established and large cause area.

[2] For example, people might think EA should not have a "primary focus", but remain a 'cause-neutral' movement (even though they prioritise longtermist causes most strongly and think they should get most resources). Or people might think we should split resources across causes for some other reason, despite favouring longtermism.