Video Link: AI ‘race to recklessness’ could have dire consequences, tech experts warn in new interview

Highlights

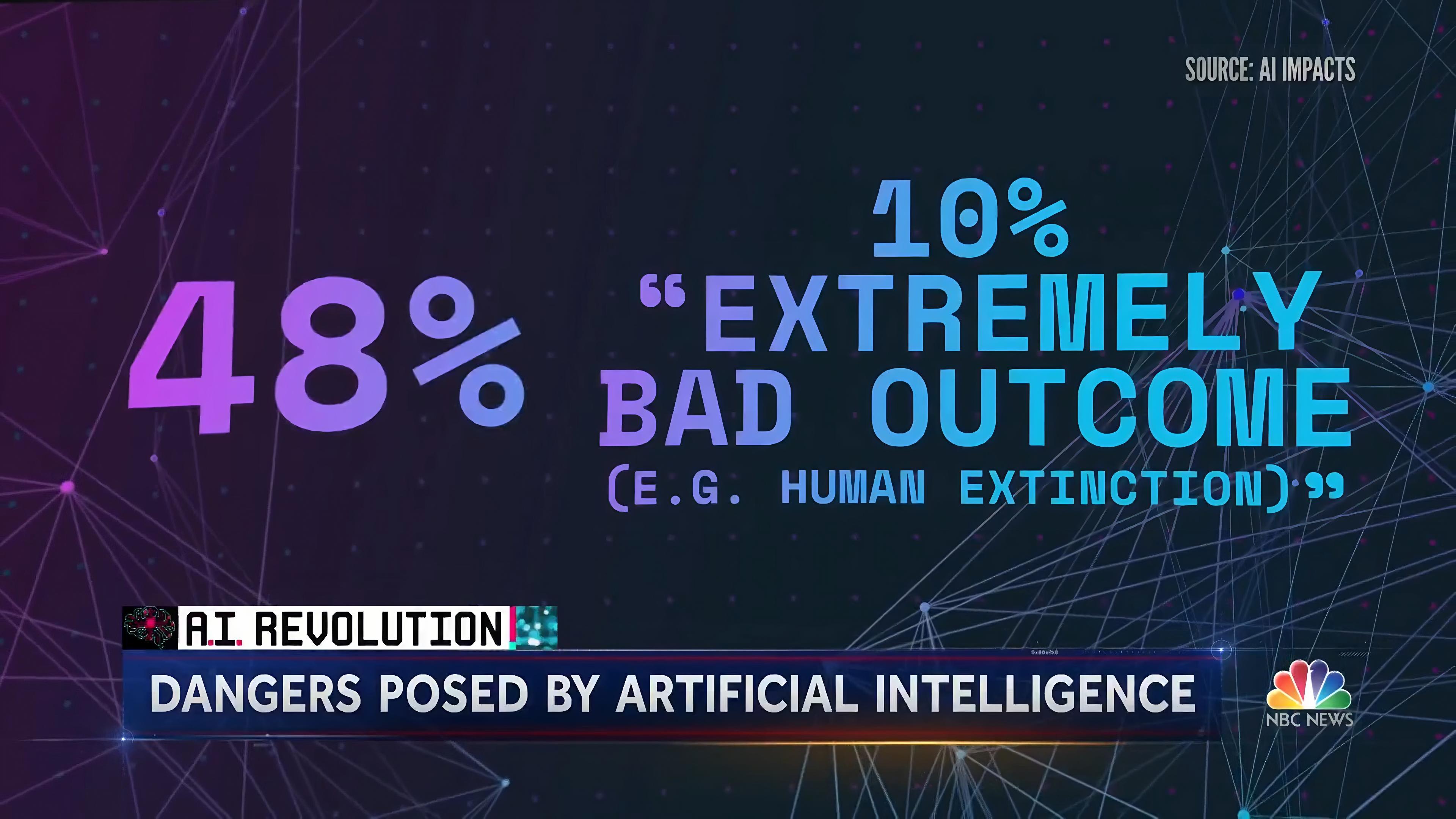

- AI Impacts' Expert Survey on Progress in AI cited: "Raskin points to a recent survey of AI researchers, where nearly half said they believe there's at least a 10% chance AI could eventually result in an extremely bad outcome like human extinction."

- Airplane crash analogy:

- Raskin: "Imagine you're about to get on an airplane and 50% of the engineers that built the airplane say there's a 10% chance that their plane might crash and kill everyone."

- Holt: "Leave me at the gate!"

- Tristan Harris on there being an AI arms race: "The race to deploy becomes the race to recklessness. Because they can't deploy it that quickly and also get it right."

- Holt: "So what would you tell a CEO of a Silicon Valley company right now? 'So yeah, you don't want to be last, but can you take a pause?' Is that realistic?"

Transcript

Lester Holt: Recent advances in artificial intelligence now available to the masses have both fascinated and enthralled many Americans. But amid all the "wows" over AI, there are some saying "Wait!" including a pair of former Silicon Valley insiders who are now warning tech companies there may be no returning the AI genie to the bottle. I sat down with them for our series A.I. Revolution.

Holt: It's hard to believe it's only been four months since ChatGPT launched, kicking the AI arms race into high gear.

Tristan Harris: That was like firing the starting gun. That now, all the other companies said, 'If we don't also deploy, we're going to lose the race to Microsoft.'

Holt: Tristan Harris is Google's former Design Ethicist. He co-founded the Center for Humane Technology with Aza Raskin. Both see in AI welcome possibilities.

Harris: What we want is AI that enriches our lives, that is helping us cure cancer, that is helping us find climate solutions.

Holt: But will the new AI arms race take us there? Or down a darker path?

Harris: The race to deploy becomes the race to recklessness. Because they can't deploy it that quickly and also get it right.

Holt: In the 2020 Netflix doc The Social Dilemma, they sounded the alarm on the dangers of social media.

Harris: We built these things and we have the responsibility to change it.

Holt: But tonight they have an even more dire warning about ignoring the perils of artificial intelligence.

Harris: It would be the worst of all human mistakes to have ever been made. And we literally don't know how it works and we don't know all the things it will do. And we're putting it out there before we actually know whether it's safe.

Holt: Raskin points to a recent survey of AI researchers, where nearly half said they believe there's at least a 10% chance AI could eventually result in an extremely bad outcome like human extinction.

Holt: Where do you come down on that?

Aza Raskin: I don't know!

Holt: That's scary to me you don't know.

Raskin: Yeah, well here's the point. Imagine you're about to get on an airplane and 50% of the engineers that built the airplane say there's a 10% chance that their plane might crash and kill everyone.

Holt: Leave me at the gate!

Raskin: Yeah, right, exactly!

Holt: AI tools can already mimic voices, ace exams, create art, and diagnose diseases. And they're getting smarter every day.

Raskin: In two years, by the time of the election, human beings will not be able to tell the difference between what is real and what is fake.

Holt: Who's building the guardrails here?

Harris: No one is building the guardrails and this has moved so much faster than our government has been able to understand or appreciate. It's important to note the CEOs of the major AI labs, they've all said we do need to regulate AI.

Holt: There's always that notion that well maybe these companies can police themselves. Does that work?

Harris: No. No.

Holt: Self policing doesn't work?

Harris: No. It cannot work.

Holt: But doesn't a person ultimately control it? Can I simply just pull the plug?

Harris: Unfortunately this is being decentralized into more and more hands so the technology isn't just run inside of one company that you can just say I want to pull the plug on Google. Also think about how hard it would be to just pull the plug on Google or pull the plug on Microsoft.

Holt: So what would you tell a CEO of a Silicon Valley company right now? 'So yeah, you don't want to be last, but can you take a pause?' Is that realistic?

Harris: Yeah, you're right. It's not realistic to ask one company. What we need to do is get those companies to come together in a constructive positive dialogue. Think of it like the nuclear test ban treaty. We got all the nations together saying can we agree we don't want to deploy nukes above ground.

Holt: The stakes they say are impossibly high.

Harris: But when we're in an arms race to deploy AI to every human being on the planet as fast as possible with as little testing as possible, that's not an equation that's going to end well.

Holt: We reached out to some of the companies on the forefront of AI development. Microsoft told us "AI has the potential to help solve some of humanity's biggest problems" and they believe "It's important to make AI tools available... with guardrails." Google said they're committed to responsible development including "rigorous testing and ethics reviews."

More

A further clip of the interview, beyond the edit above, was published here: Artificial intelligence is rapidly developing, but how does it work? Experts explain. I didn't find this additional content as noteworthy.

Thanks for the transcript and sharing this. The coverage seems pretty good, and the airplane crash analogy seems pretty helpful for communicating - I expect to use it in the future!