Ulrik Horn

Bio


I have received funding from the LTFF and the SFF and am also doing work for an EA-adjacent organization.

My EA journey started in 2007 as I considered switching from a Wall Street career to instead help tackle climate change by making wind energy cheaper – unfortunately, the University of Pennsylvania did not have an EA chapter back then! A few years later, I started having doubts about my decision that climate change was the best use of my time. After reading a few books on philosophy and psychology, I decided that moral circle expansion was neglected but important and donated a few thousand pounds sterling of my modest income to a somewhat evidence-based organisation. Serendipitously, my boss stumbled upon EA in a thread on Stack Exchange around 2014 and sent me a link. After reading up on EA, I then pursued E2G with my modest income, donating ~USD35k to AMF. I have done some limited volunteering for building the EA community here in Stockholm, Sweden. Additionally, I set up and was an admin of the ~1k member EA system change Facebook group (apologies for not having time to make more of it!). Lastly (and I am leaving out a lot of smaller stuff like giving career guidance, etc.), I have coordinated with other people interested in doing EA community building in UWC high schools and have even run a couple of EA events at these schools.

How others can help me

Lately, and in consultation with 80k hours and some “EA veterans”, I have concluded that I should consider instead working directly on EA priority causes. Thus, I am determined to keep seeking opportunities for entrepreneurship within EA, especially considering if I could contribute to launching new projects. Therefore, if you have a project where you think I could contribute, please do not hesitate to reach out (even if I am engaged in a current project - my time might be better used getting another project up and running and handing over the reins of my current project to a successor)!

How I can help others

I can share my experience working at the intersection of people and technology in deploying infrastructure/a new technology/wind energy globally. I can also share my experience in coming from "industry" and doing EA entrepreneurship/direct work. Or anything else you think I can help with.

I am also concerned about the "Diversity and Inclusion" aspects of EA and would be keen to contribute to making EA a place where even more people from all walks of life feel safe and at home. Please DM me if you think there is any way I can help. Currently, I expect to have ~5 hrs/month to contribute to this (a number that will grow as my kids become older and more independent).

Posts 7

Comments 277

I have never done community building and am probably ignorant of many ongoing initiatives so maybe I am stating the obvious below.

I am just wondering about mid-career professionals: Could one not easily abandon the focus on elite universities for this group? I think I have seen calls for getting more mid-career professionals into EA (@Letian Wang mentions this in another comment on this post), and I think at a mid-career point people have sufficient track record in their discipline/industry that one can almost completely disregard their education. In my experience, some of the most talented people I have worked with were people who either never considered moving to the UK/US to attend elite universities, or who just did not take university too seriously but later found ways to make significant contributions in their field. Maybe this is more true outside of research roles, as researchers still seem to have a harder time "decoupling" from their undergrad.

Maybe you hint at it in your text but I want to emphasize that sometimes, honesty can put the listener in a difficult situation. Such difficult situations can range from anything like scaring the listener to more serious stuff like involving them in a crime (with the possibility of them ending up in jail or worse). A couple of examples (I think there are many more!):

- You are angry with someone and you tell them how you feel like hitting them over the head with the beer bottle you are holding.

- You are filing your taxes incorrectly in order to evade taxes and you tell your boss about it.

Just mentioning this as in my experience, "lying" has a very practical, consequentialist "positive" aspect to it. Otherwise, I think you make good points about largely trying to be more honest - I try to do this myself in anything from expressing uncertainty when my kids ask me a question "dad, do ghosts exist" to expressing my opinions and feelings here on the forum, risking backlash from prospective employers/grantmakers.

One suggestion might be to look at Glassdoor or other company ratings and see how they change over time. Although these ratings have not been tracked for long, one might see e.g. DEI scores changing over time, perhaps a useful proxy for at least sexual harassment. Maybe not an answer but instead just pointing at where answers might be found.

Thanks, that is super helpful and I think also super action-relevant. I can go to EA events right away, keeping in mind:

- To make sure I am listening proportionally (e.g. in a crowd of 4, not talking more than ~25% of the time, allowing time for silence/others to say something)

- To make sure I spend some of the time I am talking asking questions about the non-EA passions of those I am talking to - perhaps they love some activity I have always been curious about or wanted to learn

Thanks a lot for pushing back on my comment - I realize my phrasing above was clumsy/wrong - I should have written something more like "Being born in a Western country myself, the below observations are probably missing the mark but hopefully they can start a conversation to help make more people feel like they belong in EA."

I think your observations about a Western feel to most of EA are important. Being born in a Western country myself I can see that everything from the choice of music on podcasts to perhaps more importantly the philosophers and ideologies referenced is very Western-centric. I think there are many other philosophical traditions and historical communities we can draw inspiration from beyond Europe - it is not like EA is the first attempt at doing the most good in the world (I have some familiarity with Tibetan Buddhism and they have fairly strong opinions on everything from machine consciousness to how to help the most people most effectively). I like how many EA organizations use the concept of Ikigai, for example, but think we can do more. I think it is important both for talent like yourself, but also for engaging effectively on global AI policy, animal welfare in the global south and of course global health and poverty alleviation efforts. I also think there might be lessons worth highlighting on podcasts, in talks etc. from the many current EA-associated organizations interacting with stakeholders across a variety of cultures - given their success it feels like they must have found ways to be culturally sensitive and accommodate non-Western viewpoints. Perhaps we simply do not highlight this part of EA enough and instead focus on intellectually interesting meta ideas which are a bit more distant from EA's "contact surface" across the globe. Sorry for the rant, I hope this comment might be useful!

I am also curious if you think the field of anthropology (and perhaps linguistics and other similar fields) might have something to offer the field of AI safety/alignment? Caveat: My understanding of both AI safety and anthropology is that of an informed lay person.

Perhaps a bit of a poor analogy: The movie "Arrival" features a linguist and/or anthropologist as the main character and I think that might have been a good observation from the script writer. Thus, one example output I could imagine anthropologists contributing would be to push back on the framing of the binary of "AIs" and humans. It might be that in terms of culture, the difference between different AIs is larger than between humans and the most "human-like" AI.

I love this work, especially because you investigated something I have been curious about for a while - the impact that diversity might have on AI safety. I have a few reactions so I thought I would provide them in separate comments (not sure what the forum norm is).

I am curious if you think there are dimensions of diversity you have not captured, that might be important? One thought that came to my mind when reading this post is geographic/cultural diversity. I am not 100% sure if it is important, but reasons it might be include both:

1 - That different cultures might have different views on what it is important to focus on (a bit like women might focus more on coexistence and less on control)

2 - That it is a global problem and international policy initiatives might be more successful if one can anticipate how various stakeholders react to such initiatives.

I also had a bit of a harder time following than with "pro podcasts", but I think that is because I have a default 1.8x speed increase and aggressive trimming of silences. That works fine for the typical podcast sound and cadence but I agree it got a bit intense with these (sorry, I could not be bothered with changing the playback speed).

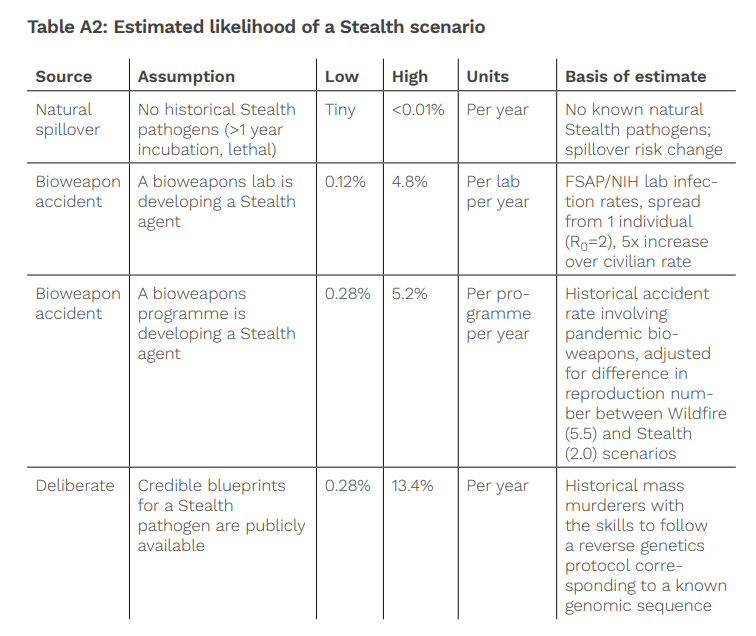

I am surprised I only now discovered this paper. In addition to Jeff's excellent points above, what stood out to me was that the paper contained both likelihoods of different scenarios as well as what I think is some of the more transparent reasoning behind these likelihood numbers. And the numbers are uncomfortably high!

There is more detail on how the likelihoods were arrived at in the paper itself - the last column is only a summary.

That makes sense. I guess it's then not really that EA is elitist, but the part of EA that focuses on students.