David_Moss

Bio

I am the Principal Research Director at Rethink Priorities and currently lead our Surveys and Data Analysis department. Most of our projects involve private commissions for core EA movement and longtermist orgs, where we provide:

- Private polling to assess public attitudes

- Message testing / framing experiments, testing online ads

- Expert surveys

- Private data analyses and survey / analysis consultation

- Impact assessments of orgs/programs

I also formerly managed our Wild Animal Welfare department, and I have previously worked for Charity Science and served as a trustee of Charity Entrepreneurship and EA London.

My academic interests are in moral psychology and methodology at the intersection of psychology and philosophy.

How I can help others

Survey methodology and data analysis.

Posts 41

Comments 496

The 2020 EA survey link says "More than half (50.7%) of respondents cited 80,000 Hours as important for them getting involved in EA". (2022 says something similar)

I would also add these results, which I think are, if anything, even more relevant to assessing impact:

- 80,000 Hours is the second most commonly cited factor for "having the largest impact on one's personal ability to have a positive impact" (after "Personal contact with EAs", so it's the top-ranked substantive program, org or service), cited by 31.4% of EAs.

- The 80K website alone is comparable to EA Global or the EA Forum in cited impact.

- It's also the most commonly cited factor, by a dramatic margin, for causing EAs to learn something important in the last year, being cited by 40% of EAs.

- It's also the second most commonly cited factor for people hearing about EA in the first place (13.5% of EAs).

I think this is one piece of information you would need to include to stop such a statement from being misleading, but as I argue here, there are potentially many other pieces of information that would also need to be included to make it non-misleading (i.e. information about any and all other confounders that explain the association).

Otherwise, applicants will not know that, conditional on X, they are no less likely to succeed if they do not have a PhD (even though disproportionately many people with X have a PhD).

Edit: TLDR, if you do not also condition on satisfying the role requirements, but only on applying, then this information will still be misleading (e.g. causing people who meet the requirements but lack the confounded proxy to underestimate their chances).

As I suggested in my first comment, you could do the same "by reporting other characteristics which play no role in selection, but which are heavily over-represented in successful applicants": for example, you could report that >50% of successful applicants are male,[1] white, live in certain countries, >90% have liberal political beliefs, and probably a very disproportionately large number have read Harry Potter fan fic.[2] Presumably one could also identify other traits that are associated with success via their association with these traits: e.g. if most successful applicants have PhDs and PhD holders disproportionately tend to [drink red wine, ski etc.], then successful applicants may also disproportionately have these traits.

Of course, different people can disagree about whether or not each of these is causal. But even if they are predictive, I imagine we would agree that at least one of them would likely mislead people. For example, having read Harry Potter fan fic is associated with being involved with communities interested in EA-related jobs, for largely arbitrary historical reasons.[3]

This concern is particularly acute when we take into account the pragmatics of employers highlighting some specific fact.[4] People typically don't offer irrelevant information for no reason. So if orgs go out of their way to say ">50% of successful applicants have PhDs", even with the caveat about this not being causal, applicants will still reasonably wonder "Why are they telling me this?" and many will reasonably infer "What they want to convey is that this is a very competitive position and I should not apply."

As I mentioned in the footnote of my comment above, there are jobs where this would be a reasonable inference. But I think most EA jobs are not like this.

If one wanted to provide applicants with full, non-misleading information, I think you would need to distinguish which of the cases applies, and provide a full account of the association which explains why successful applicants might often have PhDs, but that this is not the case when you control for x, y, z. That way (in theory), applicants would be able to know that conditional on them being a person who meets the requirements specified in the application (e.g. they can complete the coding test task), the fact that they don't have a PhD does or does not imply anything about their chances of success. But I think that in practice, providing such an account for any given trait is either very difficult or impossible.[5]
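To make the conditioning point concrete, here is a toy simulation using only the Python standard library. Everything in it is a hypothetical assumption for illustration: a single latent `skill` drives both whether someone meets the role requirements and how likely they are to have a PhD, and qualified applicants receive offers at a flat rate that ignores PhD status. Under these assumptions, most hires have PhDs and PhD holders are hired at a much higher unconditional rate. Yet among applicants who meet the requirements, the hire rate is about the same with or without a PhD.

```python
import math
import random


def simulate_hiring(n=200_000, threshold=1.0, offer_rate=0.3, seed=0):
    """Toy model in which hiring never looks at PhDs.

    Every parameter here is an illustrative assumption, not real hiring data.
    Returns counts keyed by (has_phd, meets_requirements) -> [applicants, hires].
    """
    rng = random.Random(seed)
    counts = {(p, q): [0, 0] for p in (True, False) for q in (True, False)}
    for _ in range(n):
        skill = rng.gauss(0.0, 1.0)
        # Assumption: more skilled people are more likely to have done a PhD.
        p_phd = 1.0 / (1.0 + math.exp(-(2.0 * skill - 1.0)))
        has_phd = rng.random() < p_phd
        # The role requirement (e.g. passing a coding test) depends only on skill.
        meets = skill > threshold
        # Qualified applicants get offers at a flat rate, ignoring PhD status.
        hired = meets and rng.random() < offer_rate
        cell = counts[(has_phd, meets)]
        cell[0] += 1
        cell[1] += int(hired)
    return counts


def hire_rate(*cells):
    """Hires divided by applicants, pooled over the given cells."""
    return sum(c[1] for c in cells) / sum(c[0] for c in cells)


counts = simulate_hiring()
phd_share_of_hires = counts[(True, True)][1] / (
    counts[(True, True)][1] + counts[(False, True)][1]
)
print("share of hires with a PhD:", round(phd_share_of_hires, 3))
print("P(hired | PhD):", round(hire_rate(counts[(True, True)], counts[(True, False)]), 3))
print("P(hired | no PhD):", round(hire_rate(counts[(False, True)], counts[(False, False)]), 3))
print("P(hired | PhD, meets reqs):", round(hire_rate(counts[(True, True)]), 3))
print("P(hired | no PhD, meets reqs):", round(hire_rate(counts[(False, True)]), 3))
```

Reporting only the first three figures would invite the inference that a PhD matters. Only the conditional rates show that it doesn't. In a real hiring round, the analogous conditioning variables would be much harder to identify than a single simulated `skill`.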

- ^

Though in EA Survey data, there is no significant gender difference in likelihood of having an EA job. In fact, a slightly larger proportion of women tend to have EA jobs.

- ^

None of these reflect real numbers from any actual hiring rounds, though they do reflect general disparities observed in the wider community.

- ^

Of course, you could describe a situation where having read Harry Potter fan fic actually serves as a useful indicator of some relevant trait like involvement in the EA community. But, again, I'm not referring to cases like this. Even in cases where involvement in the EA community is of no relevance to the role at all (e.g. all you need to do to be hired is to perform some technical, testable skill, like coding very well), applicants are likely to be disproportionately interested in EA, and successful applicants may be yet further disproportionately interested in EA, even if it has nothing to do with selection.

This can happen if, for example, 50% of the applications are basically spam (e.g. from applicants on a large job site who have barely read the job advert, lack any relevant skills, and apply for everything they can click on). In such cases, the subset of applications that are even vaguely relevant will disproportionately come from people with an interest in EA, people with degrees, etc.

- ^

In some countries there may be a norm of releasing information about certain characteristics, in which case this consideration doesn't apply for those characteristics, but would for others.

- ^

And that is not taking into account the important question of whether all applicants would actually update on such information completely rationally, or whether many would be irrationally inclined to be pessimistic about their chances and simply conclude that they aren't good enough to apply if they don't have a PhD from a fancy institution.

I broadly agree that such a statement, taken completely literally, would not be misleading in itself. But it raises the questions:

- What useful information is being conveyed by such a statement?

  - If interpreted correctly, I don't think the applicant should take anything actionable away from the statement. But I suspect many will conclude that they probably shouldn't apply if they don't have a PhD, even if they meet all the requirements.

- What is pragmatically implied by such a statement?

  - People don't typically go out of their way to state things which they don't think are relevant (without some reason). So if employers go out of their way to state ">50% of successful applicants had a PhD...", even with the caveat, people are reasonably going to wonder "Why are they telling me this?", and a natural interpretation is "They want to communicate that if I don't have a PhD, I'm probably not suited to the role, even if I meet all the requirements", which is exactly what employers don't want to communicate (and is not true) in the cases I'm describing.[1]

- ^

I think there are roles where, unless you have a PhD, you are unlikely to meet the requirements of the role. In such cases, communicating that would be useful. But the cases I'm describing are not like that: in these cases, PhDs are really not relevant to the roles, but applicants will very commonly have undertaken PhDs. I imagine that part of the motivation for wanting to see this information is that people think things are really like the former case, not the latter.

Whether this information is good to include depends crucially on the causal relationships though.

In the simple test score case, academic ability causes both test scores and admission success, and we assume no other causal relationships complicate matters. Here test scores serve as a strong proxy for academic ability, and are a relatively innocent indicator of likelihood of admission (i.e. they are a pretty good guide to whether one is likely to succeed).

But telling people about something that was strongly associated with success, but not causally connected in the right way to the factors that determine success, would be misleading.

In a more complex (but perhaps more realistic) case, where completing a PhD is causally related to a bunch of other factors, saying that most successful applicants have PhDs risks being misleading, both about one's chances of success / suitability for the role and about the practical utility of getting a PhD for success.
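For contrast, the simple test score case can be sketched as a toy model. All parameters are hypothetical: admission depends only on latent ability, and the test score is ability plus a little noise. Because the score closely tracks the very thing selection is based on, knowing your score tells you a lot about your chances. That is what makes it a relatively innocent indicator.

```python
import random


def admission_rate_by_score(n=100_000, noise_sd=0.3, bar=1.0, seed=1):
    """Toy model of the 'simple test score case'.

    Assumptions (illustrative only): admission depends solely on latent
    ability, and the test score is ability plus a small amount of noise.
    Returns the admission rate among applicants scoring above vs. at or
    below the bar.
    """
    rng = random.Random(seed)
    tallies = {True: [0, 0], False: [0, 0]}  # scored_above_bar -> [applicants, admits]
    for _ in range(n):
        ability = rng.gauss(0.0, 1.0)
        score = ability + rng.gauss(0.0, noise_sd)  # a strong proxy for ability
        admitted = ability > bar  # selection looks only at ability
        tally = tallies[score > bar]
        tally[0] += 1
        tally[1] += int(admitted)
    return {above: admits / applicants for above, (applicants, admits) in tallies.items()}


rates = admission_rate_by_score()
print("P(admitted | high score):", round(rates[True], 3))
print("P(admitted | low score):", round(rates[False], 3))
```

In the PhD case, by contrast, the association with success runs through other traits. Applicants can often check those traits directly, for example whether they can complete the coding test.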

Sometimes EA Orgs will say something like “we have no degree requirements”... but in reality will mostly hire people with PhDs. I appreciate your open-mindedness regarding degree and experience requirements, but saying something like "we expect most successful applicants to have X"... helps applicants assess whether the opportunity is worth spending time on.

I would advise against this specific policy, since I think it risks being misleading. My impression is that many EA employers genuinely assign ~0 weight to an applicant having a PhD, but nevertheless find themselves hiring disproportionately PhDs, simply because the pool of people who have the traits they are interested in (e.g. interested in autonomously completing very high-level research) disproportionately choose to complete PhDs.[1] If an org simply reports ">50% of successful applicants have PhDs", this will likely mislead applicants into thinking that having a PhD is important to success, even though it's assigned no weight (one could imagine similar dynamics by reporting other characteristics which play no role in selection, but which are heavily over-represented in successful applicants).

To be clear, if employers actually do assign significant weight to a characteristic, but are open to considering exceptions, then I think it's good to be transparent about both sides of that.

- ^

The EA community itself is very disproportionately skewed towards people with graduate degrees, which probably contributes to this. When we last checked, in 2019, >45% of respondents had a graduate degree, and I would expect this number to have only increased since then, as many of the remainder were students, who will themselves likely go on to complete further degrees at quite high rates.

only 10% of non-student respondents worked at an Effective Altruism organization...

Donations are an amazing opportunity, and I think they are underemphasized

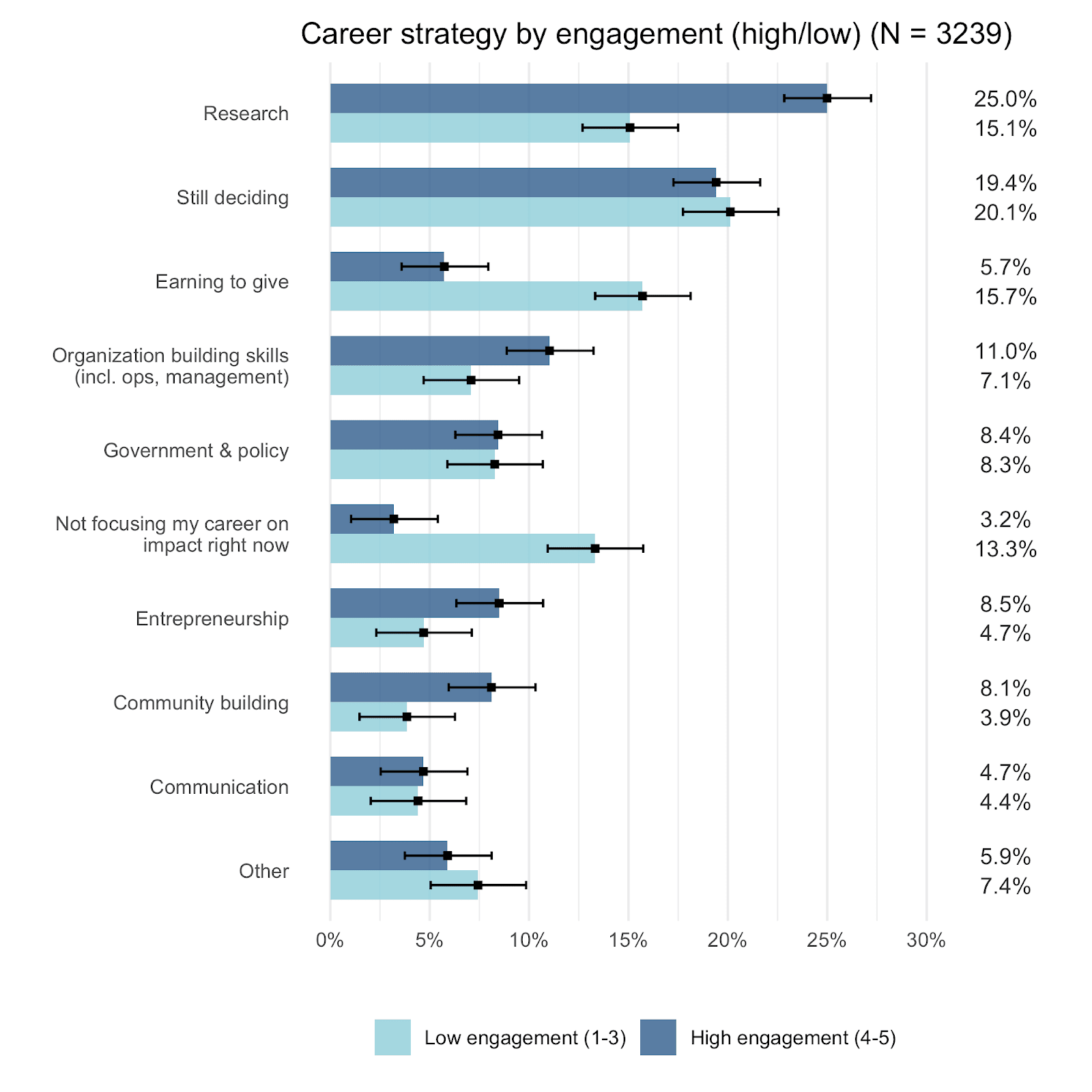

Our 2022 survey offers further illustration of this. Only 10% of respondents have earning to give as their career plan, and that figure masks a stark divide: fewer than 6% of highly engaged EAs plan to pursue earning to give, compared to closer to 16% of less engaged EAs.

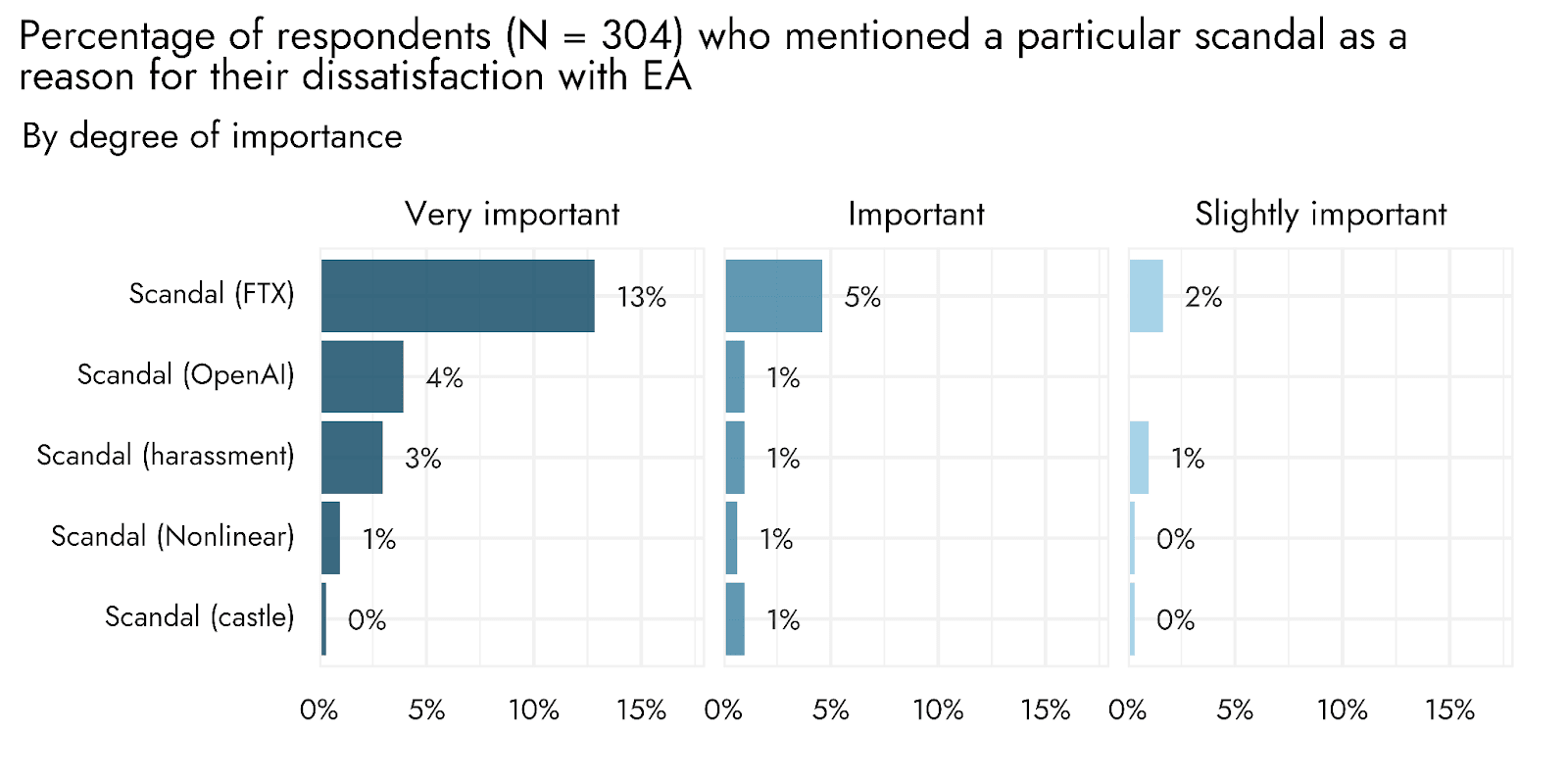
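The overall figure is, roughly, a weighted average of the two engagement groups. As a back-of-envelope check, treat the quoted shares as exact and assume the two groups cover all respondents (both are simplifications). If w is the share of respondents who are highly engaged, then:

```latex
0.10 \approx 0.06\,w + 0.16\,(1 - w)
\quad\Longrightarrow\quad
w \approx \frac{0.16 - 0.10}{0.16 - 0.06} = 0.6
```

On these assumptions, the 10% headline is consistent with a majority of respondents being in the highly engaged group, whose earning-to-give rate is well below the average. This is an implication of the rounded figures, not a separately reported survey result.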

Our data suggests that the highest-impact scandals are several times more impactful than other scandals (bear in mind that this data probably doesn't capture the large number of smaller scandals).

The relatively high frequency of people with high satisfaction temporarily stopping promoting EA (and the general flatness of this curve)

Agreed. I think people temporarily stopping promoting EA is compatible with people who are still completely on board with EA deciding that it's strategically unwise to promote it publicly at a time when there's lots of negative discussion of it in the media. The same goes for continuing to promote EA but no longer referring to it as "EA", which also showed high levels across the board.

I think the prevalence of these behaviours points to the importance of more empirical research on the EA brand and how it compares to alternative brands or to just referring to individual causes or projects (see our proposal here). I think it's entirely possible that the term "EA" itself has been tarnished and that people would do better to promote ideas and projects without explicitly branding them as EA. But there's a real cost to promoting things piecemeal or using alternative terms (e.g. "Have you heard of 'high impact careers' / 'existential security'?") rather than referring to a unified, established brand. So it's not clear a priori whether this is a positive move.

I was surprised that for the cohort that changed their behavior, “scandal” was just one of many reasons for dissatisfaction and didn’t really stand out. The data you provide looks quite consistent with Luke Freeman’s observation: “My impression is that that there was a confluence of things that peaking around the FTX-collapse..."

Agreed. One possible explanation, other than a mere coincidence of factors, is that the FTX crisis and subsequent revelations dented faith in EA leadership and made people more receptive to other concerns. (I think that historically, much of the community has been extremely deferential to core EA orgs and ~assumed they know what they're doing come what may.)

Certainly it's true that many of the other factors e.g. dissatisfaction with cause prioritisation, diversity, and elitism had been cited for a while. It's also true that even before FTX (though it still holds for 2022), people who had been in the community longer tended to be less satisfied with the community, even though higher engagement was associated with higher satisfaction.[1] While the implications of this for the average satisfaction level of the community depend on how many newer vs older EAs we have at a given time, this is compatible with a story where EAs generally become less satisfied with the community over time.

- ^

Note that this is the opposite direction to what you'd see if less satisfied people drop out, leaving more satisfied people remaining in earlier cohorts. That said, the linked analyses (for individual years) can't rule out the possibility that earlier cohorts have just always been distinctively less satisfied, which would require a comparison across years.
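The dropout mechanism in this footnote can be illustrated with a toy simulation. All assumptions here are hypothetical: every cohort starts from the same satisfaction distribution, nobody's satisfaction changes, and each year members with below-zero satisfaction leave with some fixed probability. Under these assumptions, the members who remain in an older cohort are more satisfied on average than a fresh cohort. Selective dropout alone therefore pushes toward the opposite of the observed pattern. The sketch does not address the separate possibility that earlier cohorts were always less satisfied.

```python
import random
from statistics import fmean


def surviving_mean_satisfaction(n=50_000, years=5, drop_prob=0.2, seed=2):
    """Mean satisfaction of one cohort's remaining members, year by year.

    Illustrative assumptions: everyone starts from the same satisfaction
    distribution, satisfaction never changes, and each year members with
    below-zero satisfaction leave with probability drop_prob.
    """
    rng = random.Random(seed)
    members = [rng.gauss(0.0, 1.0) for _ in range(n)]
    means = [fmean(members)]
    for _ in range(years):
        # Satisfied members always stay; dissatisfied ones sometimes leave.
        members = [s for s in members if s >= 0 or rng.random() >= drop_prob]
        means.append(fmean(members))
    return means


means = surviving_mean_satisfaction()
for year, m in enumerate(means):
    print(f"after {year} year(s): mean satisfaction {m:+.3f}")
```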

Sounds broadly like the belief vs alief distinction.