From reading EA material, one might get the impression that the Importance, Tractability and Neglectedness (ITN) framework is the (1) only, or (2) best way to prioritise causes. For example, in the two EA Concepts entries on cause prioritisation, the ITN framework is put forward as the only or leading way to prioritise causes. Will MacAskill’s recent TED talk leaned heavily on the ITN framework as the way to make cause prioritisation decisions. The Open Philanthropy Project explicitly prioritises causes using an informal version of the ITN framework.

In this post, I argue that:

- Extant versions of the ITN framework are subject to conceptual problems.

- A new version of the ITN framework, developed here, is preferable to extant versions.

- Non-ITN cost-effectiveness analysis is, when workable, superior to ITN analysis for the purposes of cause prioritisation.

- This is because:

  - Marginal cost-effectiveness is what we ultimately care about.

  - If we can estimate the marginal cost-effectiveness of work on a cause without estimating the total scale of a problem or its neglectedness, then we should do that, in order to save time.

  - Marginal cost-effectiveness analysis does not require the assumption of diminishing marginal returns, which may not characterise all problems.

- ITN analysis may be useful when it is difficult to produce intuitions about the marginal cost-effectiveness of work on a problem. In that case, we can make progress by zooming out and carrying out an ITN analysis.

- In difficult high stakes cause prioritisation decisions, we have to get into the weeds and consider in-depth the arguments for and against different problems being cost-effective to work on. We cannot bypass this process through simple mechanistic scoring and aggregation of the three ITN factors.

- For this reason, the EA movement has thus far significantly over-relied on the ITN framework as a way to prioritise causes. For high stakes cause prioritisation decisions, we should move towards in-depth analysis of marginal cost-effectiveness.

[update - my footnotes didn't transfer from the googledoc, so I am adding them now]

1. Outlining the ITN framework

Importance, tractability and neglectedness are three factors which are widely held to be correlated with cost-effectiveness; if one cause is more important, tractable and neglected than another, then it is likely to be more cost-effective to work on, on the margin. ITN analyses are meant to be useful when it is difficult to estimate directly the cost-effectiveness of work on different causes.

Informal and formal versions of the ITN framework tend to define importance and neglectedness in the same way. As we will see below, they differ on how to define tractability.

Importance or scale = the overall badness of a problem, or correspondingly, how good it would be to solve it. So for example, the importance of malaria is given by the total health burden it imposes, which you could measure in terms of a health or welfare metric like DALYs.

Neglectedness = the total amount of resources or attention a problem currently receives. So for example, a good proxy for the neglectedness of malaria is the total amount of money that currently goes towards dealing with the disease.[^1]

Extant informal definitions of tractability

Tractability is harder to define and harder to quantify than importance and neglectedness. In informal versions of the framework, tractability is sometimes defined in terms of cost-effectiveness. However, this makes little sense because, as mentioned, the ITN framework is meant to be most useful when it is difficult to estimate the marginal cost-effectiveness of work on a particular cause. If we already knew tractability, thus defined, there would be no reason to calculate neglectedness.

Other informal versions of the ITN framework often use intuitive definitions such as “tractable causes are those in which it is easy to make progress”. This definition seems to suggest that tractability is how much of a problem you can solve with a given amount of funding. However, if you knew this, there would be no point in calculating neglectedness, since with importance and tractability alone you could calculate the marginal cost-effectiveness of work on a problem, which is ultimately what we care about. This definition renders the neglectedness part of the analysis unnecessary, or at least suggests that we would calculate neglectedness only as one factor that bears on tractability, rather than as one of three distinct quantities that can be scored and aggregated.

Thus, extant informal versions of the ITN framework have some conceptual difficulties.

Extant formal definitions of tractability

80,000 Hours develops a more formal version of the ITN framework which advances a different definition of tractability:

“% of problem solved / % increase in resources”[^2]

The terms in the 80k ITN definitions cancel out as follows:

- Importance = good done / % of problem solved

- Tractability = % of problem solved / % increase in resources

- Neglectedness = % increase in resources / extra $

Thus, once we have information on importance, tractability and neglectedness (thus defined), then we can produce an estimate of marginal cost-effectiveness.
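To make the cancellation concrete, here is a minimal sketch with invented numbers (they are not 80,000 Hours' figures). Importance is measured in DALYs averted per 1% of the problem solved, tractability in % of the problem solved per % increase in resources, and neglectedness in % increase in resources per extra dollar. Multiplying the three leaves DALYs per extra dollar, which is a marginal cost-effectiveness estimate.

```python
def marginal_cost_effectiveness(importance, tractability, neglectedness):
    """Multiply the three ITN factors as defined by 80,000 Hours.

    importance:    good done per % of the problem solved
    tractability:  % of the problem solved per % increase in resources
    neglectedness: % increase in resources per extra dollar
    The intermediate units cancel, leaving good done per extra dollar.
    """
    return importance * tractability * neglectedness


# Hypothetical inputs, chosen only to show the arithmetic.
current_resources = 50_000_000            # $ currently spent on the problem
importance = 1_000                        # DALYs averted per 1% of the problem solved
tractability = 2 / 100                    # doubling resources (+100%) solves 2% of the problem
neglectedness = 100 / current_resources   # each extra $ is a 2e-6 % increase in resources

dalys_per_dollar = marginal_cost_effectiveness(importance, tractability, neglectedness)
print(f"{dalys_per_dollar * 1_000_000:.0f} DALYs per extra $1m")  # prints "40 DALYs per extra $1m"
```

Whatever numbers are plugged in, the output is already a cost-effectiveness figure, which is the point of the paragraph that follows.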

The problem with this is: if we can do this, then why would we calculate these three terms separately in the first place? The ITN is supposed to be useful as a heuristic when we lack information on cost-effectiveness, but on these definitions, we must already have information on cost-effectiveness. On these definitions, there is no reason to calculate neglectedness.

To be as clear as possible: on the 80k framework, if we know the ITN estimates, then we know the difference that an additional $1m (say) will make to solving a problem. So we do not necessarily have to calculate the neglectedness of a problem in order to prioritise causes.

It is important to bear in mind, but easy to forget, that cause prioritisation in terms of the ITN criteria thus defined involves judgements about cost-effectiveness. For example, all of 80,000 Hours’ cause prioritisation rests on judgements about all ITN factors thus defined, and so we must be able to deduce from them marginal cost-effectiveness estimates for work on AI, biorisk, nuclear security, climate change and so on.

An alternative ITN framework

Existing versions of the ITN framework seem to have some conceptual problems. Nevertheless, the ITN framework in some form often seems a useful heuristic. The question therefore is: how should we define tractability in a conceptually coherent way such that the ITN framework remains useful?

The research team at Founders Pledge has developed a framework which attempts to meet these criteria. We define tractability in the following way:

Tractability = % of problem solved per marginal resource.

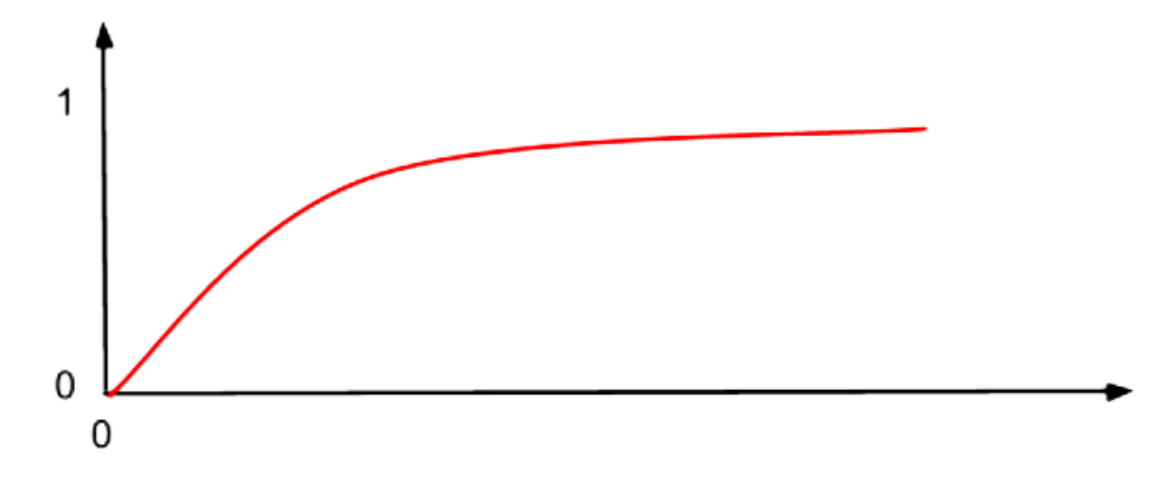

On this definition, neglectedness is just one among many determinants of tractability. Importance and neglectedness can be quantified quite easily, but the other factors, aside from neglectedness, that bear on tractability are harder to quantify. Assuming diminishing returns, the conceptual relationship between the factors can be represented as follows:

[I can't get the textboxes to show here - they are labels of 'good done' for the y-axis, and 'resources' for the x-axis.]

The scale, or importance, of a problem is the maximum height the curve reaches on the y-axis: the higher up the y-axis you can go, the better it is to solve the problem. Neglectedness tells you where you are on the x-axis at present. The other factors that bear on tractability determine the overall shape of the curve. Intractable problems will have flatter curves, such that moving along the x-axis (putting in more resources) doesn’t take you far up the y-axis (doesn't solve much of the problem). Correspondingly, easily solvable problems will have steep curves.
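The picture can be sketched in code under one assumed functional form, `importance * (1 - exp(-resources / difficulty))`. The form and all numbers are hypothetical, picked only because the curve has a ceiling (importance), a current position on the x-axis (neglectedness) and a shape parameter (`difficulty`, standing in for the other tractability factors). The marginal value of extra resources is the slope at the current position.

```python
import math


def good_done(resources: float, importance: float, difficulty: float) -> float:
    """Hypothetical diminishing-returns curve.

    importance: the ceiling the curve approaches on the y-axis (scale of the problem)
    difficulty: resources at which ~63% of the problem is solved; a larger value
                gives a flatter curve, i.e. a less tractable problem
    """
    return importance * (1 - math.exp(-resources / difficulty))


def marginal_value(current_resources: float, importance: float, difficulty: float) -> float:
    """Slope of good_done at the current point on the x-axis (set by neglectedness)."""
    return importance * math.exp(-current_resources / difficulty) / difficulty


# Two hypothetical problems with the same importance.
# A is more crowded but has a steep curve; B is ten times more neglected but much flatter.
a = marginal_value(current_resources=1e8, importance=1e6, difficulty=1e9)
b = marginal_value(current_resources=1e7, importance=1e6, difficulty=1e10)
print(f"A: {a:.2e} good per $, B: {b:.2e} good per $")
```

Here the more neglected problem B loses because its curve is flatter, which is exactly the kind of case where neglectedness alone can mislead.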

When we are initially evaluating a problem, it is often difficult to know the shape of the returns to resources curve, but easy to calculate how big a problem is and how neglected it is. This is why ITN analysis comes into its own when it is difficult to gather information about cost-effectiveness. Thus, when we are carrying out ITN analysis in this new format, the process would be:

1. We quantify importance-to-neglectedness ratios for different problems.

2. We evaluate the other factors (aside from neglectedness) that bear on the tractability of a problem.

3. We make a judgement about whether the differences in tractability could be sufficient to overcome the initial importance/neglectedness ranking.

For step 1, problems with higher importance/neglectedness ratios should be a higher priority, other things equal. That is, other things equal, we should prefer to work on huge but neglected problems rather than on small, crowded ones.

For step 2, we would have to find a way to abstract from the current neglectedness of different problems.[^3] One way to do this would be to try to evaluate the average tractability of two different problems. Another way would be to evaluate the two problems imagining that they were at the same level of neglectedness. When we are assessing tractability, controlling for neglectedness, we would consider factors such as:

- The level of opposition to working on a problem

- The strength of the political or economic incentives to solve a problem

- The coordination required to solve a problem

For step 3, once we have information on the other factors (aside from neglectedness) bearing on tractability, we have to decide how these affect our initial step 1 ranking. One option would be to give problems very rough tractability scores, perhaps using a checklist of the factors above. Some problems will dominate others on all three ITN criteria, and prioritisation will then be straightforward. In more difficult cases, some problems will be highly neglected but much less tractable than others (eg in climate change, nuclear power is much more neglected than renewables but also arguably more unpopular at all levels of neglectedness), or the tractability of work on a problem will be very unclear. In these cases, we have to make judgement calls about whether the differences in the other factors bearing on tractability are sufficient to change our initial step 1 ranking. That is, we have to make rough assumptions about the shape of the returns curve for different problems.
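One way to frame the step 3 judgement call is to ask how large a tractability difference would be needed to overturn the step 1 ranking. The sketch below assumes, purely for illustration, that tractability (controlling for neglectedness) acts as a simple multiplier on the importance/neglectedness ratio. The problems and numbers are hypothetical. The code does not replace the judgement; it only states the threshold the judgement has to clear.

```python
from dataclasses import dataclass


@dataclass
class Problem:
    name: str
    importance: float         # hypothetical units, e.g. DALYs lost per year
    current_resources: float  # hypothetical $ currently spent per year

    @property
    def scale_per_dollar_spent(self) -> float:
        """Step 1 quantity: importance / current resources."""
        return self.importance / self.current_resources


def step1_ranking(problems):
    """Rank by importance-to-neglectedness ratio, highest first."""
    return sorted(problems, key=lambda p: p.scale_per_dollar_spent, reverse=True)


def breakeven_tractability_ratio(leader: Problem, challenger: Problem) -> float:
    """How many times more tractable (holding neglectedness fixed) the challenger
    would need to be to overturn the step 1 ranking, assuming tractability acts
    as a simple multiplier."""
    return leader.scale_per_dollar_spent / challenger.scale_per_dollar_spent


problems = [
    Problem("Problem X", importance=2_000_000, current_resources=1e9),
    Problem("Problem Y", importance=500_000, current_resources=1e7),
]
leader, challenger = step1_ranking(problems)
ratio = breakeven_tractability_ratio(leader, challenger)
print(f"{challenger.name} must be ~{ratio:.0f}x more tractable to beat {leader.name}")
```

The remaining question is then whether it is plausible that X's returns curve is that much steeper than Y's, which requires looking at the factors listed under step 2.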

On this version of the framework, it is not possible to mechanistically aggregate ITN scores between problems to produce an overall cause ranking. This version of the ITN framework produces rankings between problems that are quite low resolution: it will often be difficult to know the overall ranking of different causes, analysed in this way. This is what we should expect from the ITN framework. The ITN framework is useful precisely when it is difficult to have intuitions about cost-effectiveness.

The advantage of this version of the framework is that it is more conceptually coherent than extant versions of the framework.

The disadvantages of this version of the framework are:

- It relies on the assumption of diminishing returns, which may not characterise all problems.

- ITN analysis is in some cases inferior to cost-effectiveness analysis as a cause prioritisation tool.

To these two points, I now turn.

2. Cost-effectiveness analysis without ITN analysis

We have seen that on some versions of the framework, ITN analyses necessarily give us the information for a marginal cost-effectiveness estimate. However, it is possible to calculate the marginal cost-effectiveness of work on a cause without carrying out an ITN analysis. There are two main ways in which cost-effectiveness analysis could differ from an ITN analysis:

- Calculating the size of the whole problem

ITN analysis involves estimating the size of a whole problem. For example, when estimating the importance of malaria, one would quantify the total scale of the problem of malaria in DALYs. But if you are doing cost-effectiveness analysis, it would not always be necessary to quantify the total scale of the whole problem. Rather, you could estimate directly how good it is to solve part of a problem with a given amount of resources.

- Calculating neglectedness

Cost-effectiveness analyses do not necessarily have to calculate the neglectedness of a problem. It is sometimes possible to directly calculate how much good an extra x resources will do, which does not necessarily require you to assess how many resources a problem currently receives in total. This is because neglectedness is just one determinant of tractability (understood as % of problem solved/$) among others, and it may be possible to estimate how tractable a problem is without estimating any one determinant of tractability, whether that be neglectedness, level of political opposition, degree of coordination required, or whatever.

Non-ITN cost-effectiveness estimates have two main advantages over ITN analyses.

- Marginal cost-effectiveness is what we ultimately care about. If we can produce an estimate of that without having to go through the extra steps of quantifying the whole problem or calculating neglectedness, then we should do that, purely to save time.

- Avoiding theoretical reliance on calculating neglectedness avoids reliance on the assumption of diminishing marginal returns, which may not characterise every problem.[^4]

To illustrate the possibility, and advantages, of non-ITN cost-effectiveness analysis, examples follow.

Giving What We Can on global health

Giving What We Can argued that donating to the best global health charities is better than donating domestically.

“The UK’s National Health Service considers it cost-effective to spend up to £20,000 (about $25,000) for a single year of healthy life added.

By contrast, because of their poverty many developing countries are still plagued by diseases which would cost the developed world comparatively tiny sums to control. For example, GiveWell estimates that the cost per child life saved through an LLIN distribution funded by the Against Malaria Foundation is about $7,500. The NHS would spend this amount to add about four months of healthy life to a patient.”

This argument uses a cost-effectiveness estimate to argue for focusing on the best global health interventions rather than donating in a high-income country.

It is true that Giving What We Can appeals here to neglectedness to explain why the cost-effectiveness of health spending differs between the UK and the best global poverty charities. But the direct cost-effectiveness estimate alone is sufficient to reach the conclusion: if health spending were actually more cost-effective in the UK than in poor countries, the point about neglectedness would be moot. This illustrates how neglectedness relates to cost-effectiveness analysis, and why neglectedness analysis is not always necessary for cause comparisons.
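The arithmetic behind the quoted comparison can be checked directly, using only the two figures in the quote (the NHS threshold of about $25,000 per healthy life-year and GiveWell's estimate of about $7,500 per child life saved).

```python
nhs_threshold_usd = 25_000      # NHS: up to ~£20,000 (~$25,000) per year of healthy life
amf_cost_per_life_usd = 7_500   # GiveWell estimate per child life saved via AMF bednets

# How much healthy life the NHS would be willing to buy for the same $7,500.
months_of_healthy_life = amf_cost_per_life_usd / nhs_threshold_usd * 12
print(f"${amf_cost_per_life_usd:,} buys about {months_of_healthy_life:.1f} months at the NHS threshold")
```

This gives about 3.6 months, consistent with the quote's "about four months", against a whole child's life saved.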

Lant Pritchett on economic growth

In his paper ‘Alleviating Global Poverty: Labor Mobility, Direct Assistance, and Economic Growth’, Lant Pritchett argues that research on, and advocacy for, economic growth is a better bet than direct ‘evidence-based development’ (eg, distributing bednets, cash transfers and deworming pills).

Here he lays out the potential benefits of one form of evidence-based development, the ‘Graduation approach’:

“Suppose the impact of the Graduation program in Ethiopia was what it was on average for the five countries and generated $1,720 in NPV for each $1000 invested.” (p25)

Thus, one gets a 1.7x return from the Graduation approach. Here he lays out the benefits of research and advocacy for growth:

“The membership of the American Economics Association is about 20,000 and suppose the global total number of economists is twice that and the inclusive cost to someone of an economist per year is $150,000 on average. Then the cost of all economists in the world is about 6 billion dollars. Suppose this was constant for 50 years and hence cost 300 billion to sustain the economics profession from 1960 to 2010. Suppose the only impact of all economists in all these 50 years was to be even a modest part of the many factors that persuaded the Chinese leadership to switch economic strategy and produce 14 trillion dollars in cumulative additional output.” (p24)

Even if the total impact of all economists in the world over 50 years were only to raise the probability of China's change of policy course by 4 percentage points, it would still have greater expected value than directly funding the Graduation approach. Since development economists likely did much more than this, research and advocacy for growth-friendly policies is better than evidence-based development. Pritchett continues:

“For instance, the World Bank’s internal expenditures (BB budget) on all of Development Economics (of which research is just a portion) in FY2016 was about 50 million dollars. The gains in NPV of GDP from just the Indian 2002 growth acceleration of 2.5 trillion are 50,000 times larger. The losses in NPV from Brazil’s 1980 growth deceleration are 150,000 times larger. So even if by doing decades of research on what accelerates growth (or avoids losses) and even if that only as a small chance of success in changing policies this still could have just enormous returns—because the policy or other changes that create growth induces country-wide gains in A (which are, economically, free) and induces voluntary investments that have no direct fiscal cost (or conversely causes those to disappear).” (p25)

This, again, is a way of placing a lower bound on a cost-effectiveness estimate of research and advocacy for growth, as against direct interventions.
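The lower-bound reasoning can be reproduced from the figures in the two quotes. The sketch assumes, as the argument above does, that the only benefit credited to economists is a share of China's $14 trillion of additional output, and that the benchmark is the Graduation program's $1,720 NPV per $1,000 invested.

```python
# Figures from the Pritchett quotes above (pp. 24-25).
graduation_return = 1_720 / 1_000       # NPV per $ invested in the Graduation program

economists = 2 * 20_000                 # AEA membership (~20,000), doubled for the world
cost_per_economist = 150_000            # inclusive $ cost per economist per year
annual_cost = economists * cost_per_economist   # about $6 billion a year
total_cost = annual_cost * 50                   # about $300 billion for 1960-2010
china_gain = 14e12                              # cumulative additional Chinese output, $

# Smallest rise in the probability of China's policy switch, credited to economists,
# at which their expected return per $ matches the Graduation program's 1.72.
breakeven_probability = graduation_return * total_cost / china_gain

# Expected return per $ if economists raised that probability by 4 percentage points.
return_at_4_points = 0.04 * china_gain / total_cost

# World Bank development economics budget (FY2016) vs India's 2002 growth acceleration.
india_multiple = 2.5e12 / 50e6

print(f"break-even probability: {breakeven_probability:.1%}")
print(f"return per $ at +4 points: {return_at_4_points:.2f} vs {graduation_return:.2f}")
print(f"India gain / World Bank budget: {india_multiple:,.0f}")
```

The break-even probability comes out at about 3.7%, which is why a 4 percentage point contribution is enough for the economics profession to beat the Graduation approach.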

Trying to bend the reasoning here into an ITN analysis would add unnecessary complexity to Pritchett’s argument. This illustrates the advantage of non-ITN cost-effectiveness analysis:

- Calculating the scale of the benefits of economic growth

What is the total scale of the problem that economic growth is trying to solve? Economic growth can arguably produce arbitrarily large benefits, so should we use a discount rate of some sort? Which one should we use? Etc. We can avoid these questions by focusing, à la Pritchett, on the limited benefits of particular growth episodes.

- Calculating the neglectedness of economics research

At no point does Pritchett appeal to the neglectedness of research and advocacy for growth relative to evidence-based development. Doing so is unnecessary to get to his conclusion.

Bostrom on existential risk

In his paper, ‘Existential Risk Prevention as Global Priority’, Nick Bostrom defends the view that reducing existential risk should be a top priority for our civilisation, and argues:

“Even if we give this allegedly lower bound on the cumulative output potential of a technologically mature civilization a mere 1% chance of being correct, we find that the expected value of reducing existential risk by a mere one billionth of one billionth of one percentage point is worth a hundred billion times as much as a billion human lives.

One might consequently argue that even the tiniest reduction of existential risk has an expected value greater than that of the definite provision of any "ordinary" good, such as the direct benefit of saving 1 billion lives.”

This is an argument for focusing on existential risk that provides a lower-bound cost-effectiveness estimate for existential risk reduction. The argument is: plausible work on existential risk is likely to make at least very small reductions in existential risk, which will have greater expected value than any action one could plausibly take to improve (eg) global poverty or health. Estimating the total neglectedness of the problem of existential risk is unnecessary here. You just have to know the lower bound of how big an effect a state could expect to have on existential risk. That is, you have to know the lower bound of tractability, understood as ‘% of problem solved / $’. Neglectedness is one determinant of tractability (thus defined), and it is not necessary to evaluate every determinant of tractability when evaluating tractability.
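The structure of a lower-bound argument like this can be sketched as a single threshold: the minimum fraction of existential risk a dollar must remove to match a benchmark intervention. The benchmark below uses the GiveWell figure of about $7,500 per child life saved quoted earlier in this post; the number of future lives at stake is a HYPOTHETICAL placeholder, not Bostrom's figure. Note that neglectedness does not appear anywhere.

```python
def breakeven_risk_reduction_per_dollar(benchmark_lives_per_dollar: float,
                                        value_of_future_in_lives: float) -> float:
    """Smallest fraction of existential risk that one dollar must remove for
    existential risk work to match the benchmark in expected lives saved.
    Only a lower bound on tractability is needed; neglectedness never enters."""
    return benchmark_lives_per_dollar / value_of_future_in_lives


# Benchmark: ~$7,500 per child life saved (GiveWell estimate quoted earlier).
benchmark = 1 / 7_500
# HYPOTHETICAL placeholder for the number of future lives at stake.
future_lives = 1e16

threshold = breakeven_risk_reduction_per_dollar(benchmark, future_lives)
print(f"Work beats the benchmark if each $ cuts risk by more than {threshold:.2e}")
```

If one is confident that plausible work clears such a tiny threshold, the comparison is settled without any estimate of how many resources existential risk currently receives.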

Matheny on existential risk and asteroid protection

In his ‘Reducing the Risk of Human Extinction’, Jason Matheny argues that existential risk reduction has very high expected value, and uses the example of asteroid protection to illustrate this. He concludes that an asteroid detect and deflect system costing $20 billion would produce benefits equivalent to saving a life for $2.50.[^5]

Since this cost per life is much lower than almost all global poverty and health interventions can achieve, asteroid protection is better than global poverty and health. One might add that since most experts think that work on other problems such as AI, biosecurity and nuclear security is much more cost-effective than asteroid protection (from a long-termist point of view), working on these problems must (per epistemic modesty) a fortiori be even better than global poverty and health. If there were reliable conversions between the best animal welfare interventions and global poverty and health interventions, this would also enable us to choose between the causes of existential risk, global poverty and animal welfare.

In this case, one does not need to estimate neglectedness because we can already quantify what a particular amount of resources will achieve in reducing the problem of existential risk.
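Putting Matheny's figure next to the GiveWell estimate quoted in the Giving What We Can section shows the size of the gap; both numbers come from this post.

```python
matheny_cost_per_life = 2.50        # asteroid detect-and-deflect system, per Matheny
givewell_amf_cost_per_life = 7_500  # GiveWell estimate per child life saved (AMF)

ratio = givewell_amf_cost_per_life / matheny_cost_per_life
print(f"Asteroid protection is ~{ratio:,.0f}x cheaper per life saved")  # prints "~3,000x"
```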

3. Conclusions

In this post, I have argued that:

- Extant versions of the ITN framework are subject to conceptual problems.

- A new version of the ITN framework, developed here, is preferable to extant versions.

- Non-ITN cost-effectiveness analysis is, when workable, superior to ITN analysis for the purposes of cause prioritisation.

- This is because:

  - Marginal cost-effectiveness is what we ultimately care about.

  - If we can estimate the marginal cost-effectiveness of work on a cause without estimating the total scale of a problem or its neglectedness, then we should do that, in order to save time.

  - Marginal cost-effectiveness analysis does not require the assumption of diminishing marginal returns, which may not characterise all problems.

The ITN framework may be preferable to cost-effectiveness analysis when:

- At current levels of information, it is difficult to produce intuitions about the effect that a marginal amount of resources will have on a problem. In that case, it may be easier to zoom out and get some lower resolution information on the total scale of a problem and on its neglectedness, and then to try to weigh up the other factors (aside from neglectedness) bearing on tractability. This can be a good way to economise time on cause prioritisation decisions.

As we have seen, this will often leave us uncertain about which cause is best. This is what we should expect from ITN analysis. We should not expect ITN analysis to resolve all of our difficult cause selection decisions. We can resolve this uncertainty by gathering more information about the factors bearing on the cost-effectiveness of working on a problem. This is difficult work that must go far beyond a simple mechanistic process of quantifying and aggregating three scores.

For example, suppose we are deciding how to prioritise global poverty and climate change. This is a high stakes decision for the EA community, as it could affect the allocation of tens of millions of dollars. While it may be relatively easy to bound the importance and neglectedness of these problems, that still leaves out a lot of information on the other multitudinous factors that bear on how cost-effective these causes are to work on. To really be confident in our choice between these causes, we would have to consider factors including:

- The best way to make progress on global poverty. Should we focus on health or on growth? How do we increase growth? Etc etc

- What are the indirect effects of growth? Does it make people more tolerant and liberal? To what extent does it increase the discovery of civilisation-threatening technologies? Etc etc.

- Plausible estimates of the social cost of carbon. How should we quantify the potential mass migration that could result from extreme climate change? How should we discount future benefits? How rich will people be in 100 years? Etc etc.

- Ways to convert global poverty reduction benefits into climate change reduction benefits.

- What are the best levers to pull on in climate change? How good would a carbon tax be and how tractable is it? Can renewables take over the electricity supply? Is innovation the way forward? What are the prospects of success for nuclear innovation and enhanced geothermal? Do any non-profits stand a chance of affecting innovation? Does increasing nuclear power lead to weapons proliferation? Etc etc.

These are all difficult questions, and we need to answer them in order to make reasonable judgements about cause prioritisation. We should not expect a simple three factor aggregation process to solve difficult cause prioritisation decisions such as these. The more we look in detail at particular causes, the further we get from low resolution ITN analysis, and the closer we get to producing a direct marginal cost-effectiveness estimate of work on these problems.

To have confidence in our high stakes cause prioritisation decisions, the EA community should move away from ITN analysis, and move towards in-depth marginal cost-effectiveness analysis.

Thanks to Stefan Schubert and Martijn Kaag for helpful comments and suggestions.

[^1] Plausibly, we should actually consider the total all-time resources that will go to a problem over time, but that is the subject for another post.

[^2] For mathematical ease, we can make the denominator 1 here and so calculate the good produced by a doubling of resources from the current level

[^3] Rob Wiblin ‘The Important/Neglected/Tractable framework needs to be applied with care’ (2016)

[^4] On this, see Arepo (Sasha Cooper), ‘Against neglectedness’, EA Forum (Nov 2017); sbehmer, ‘Is Neglectedness a Strong Predictor of Marginal Impact?’, EA Forum (Nov 2018). See also Owen Cotton-Barratt, ‘The law of diminishing returns’, FHI (2014).

[^5] For similar, see Piers Millett and Andrew Snyder-Beattie, ‘Existential Risk and Cost-Effective Biosecurity’, Health Security 15, no. 4 (1 August 2017): 373–83, https://doi.org/10.1089/hs.2017.0028.

I found this article useful, and I could imagine sending it to other people in the EA community. Thanks for writing it!

There’s a range of posts critiquing ITN from different angles, including many of the ones you specify. I was working on a literature review of these critiques, but stopped in the middle. It seemed to me that organizations that use ITN do so in part because it’s an easy-to-read communication framework. It boils down an intuitive synthesis of a lot of personal research into something that feels like a metric.

When GiveWell analyzes a charity, they have a carefully specified framework they use to derive a precise cost effectiveness estimate. By contrast, I don’t believe that 80k or OpenPhil have anything comparable for the ITN rankings they assign. Instead, I believe that their scores reflect a deeply researched and well-considered, but essentially intuitive personal opinion.

I should just flag that I've put a post on this topic on the Forum too, albeit one that doesn't directly reply to John but addresses many of the points raised in the OP and in the comments.

I will make a direct reply to John on one issue. He suggests we should:

(That's supposed to be in quotation marks, but they seem not to be working.)

I don't think this is a useful heuristic, and I don't see why problems with a higher scale:neglectedness ratio should be a higher priority. There are two issues with this. One is that problems with no resources going towards them will score infinitely highly on this schema. Another is that delineating one 'problem' from another is arbitrary anyway.

Let's illustrate what happens when we put these together. Suppose we’re talking about the cause of reducing poverty and, suppose further, it happens to be the case that it’s just as cost-effective to help one poor person as another. As a cause, quite a lot of money goes to poverty, and let’s assume poverty scores badly (relative to our other causes) on this scale/neglectedness rating. I pick out person P, who is currently not receiving any aid, and declare that ‘cause P’ (helping person P) is entirely neglected. Cause P now has an infinite score on scale/neglectedness and suddenly looks very promising via this heuristic. This is perverse as, by stipulation, helping P is just as cost-effective as helping any other person in poverty.

Hello Michael. This feels like going too far in an anti-ITN direction. On the scores going to infinity point, this feels like an edge case where things break down rather than something which renders the framework useless. Price elasticities also have this feature for example, but still seem useful.

On defining a problem, there have to be restrictions on what you are comparing in order to make the framework useful. Nevertheless, it does seem that there is a meaningful sense in which we can compare eg malaria and diabetes in terms of scale and neglectedness, and that this could be a useful comparison to make.

Overall, I do think the ITN framework can be useful sometimes. If you knew nothing else about two problems aside from their importance and neglectedness and one was very important and neglected and one was not, then that would indeed be a reason to favour the former. Sometimes, problems will dominate others in terms of the three criteria considered at low resolution, and there the framework will again be useful.

Where I have my doubts is in it being used to make decisions in the hard high stakes cases. There, we need to use the best available arguments on marginal cost-effectiveness, not this very zoomed out perspective. eg we need to discuss whether technical AI safety research can indeed make progress.

So here's a framing that I found useful, maybe someone else will too.

Given some problem area, let's say $I$ is the importance of the problem, defined as the total value we gain from solving the whole thing, and write $p(r) \in [0,1]$ for the proportion of the problem solved depending on the total resources $r$ invested (this is the graph in the post).

Now let's say $R$ is the amount of resources that are currently being used to combat the problem. We want to estimate the current marginal value of additional resources, which is given by $I \cdot \frac{dp}{dr}(R)$.

The ITN framework splits the second factor into tractability and neglectedness. If we write $r' = r/R$ for resources normalized by the current investment $R$, then

$$I \cdot \frac{dp}{dr}(R) = I \cdot \left.\frac{dp}{dr'}\right|_{r'=1} \cdot \frac{dr'}{dr} = I \cdot \left.\frac{dp}{dr'}\right|_{r'=1} \cdot \frac{1}{R}.$$

The factors on the right-hand side represent tractability $T = \frac{dp}{dr'}$ (evaluated at $r' = 1$) and neglectedness $N = \frac{1}{R}$. So we've recovered the familiar $I \cdot T \cdot N$ = marginal value of additional resources.

But this feels like a kinda clumsy way to do it: it's not clear what we gain from introducing $r'$. Instead, we should just try to estimate $\frac{dp}{dr}(R)$ directly (this is the main argument I think OP is making).
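A quick numerical check of the identity above, assuming a hypothetical curve $p(r) = 1 - e^{-r/10^9}$ and made-up values for $I$ and $R$. It estimates the marginal value once directly, as $I \cdot \frac{dp}{dr}(R)$, and once via the normalized-resources route $I \cdot T \cdot N$; the two agree, which supports the point that the detour through $r'$ adds nothing.

```python
import math


def p(r: float) -> float:
    """Hypothetical proportion of the problem solved with total resources r."""
    return 1 - math.exp(-r / 1e9)


def derivative(f, x: float, h: float) -> float:
    """Central finite-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


importance = 1e6          # I: hypothetical value of solving the whole problem
current_resources = 2e8   # R: hypothetical resources currently invested

# Direct route: I * dp/dr evaluated at r = R.
direct = importance * derivative(p, current_resources, h=1e3)

# ITN route: T = dp/dr' at r' = 1 (where r = r' * R), and N = 1 / R.
T = derivative(lambda r_norm: p(r_norm * current_resources), 1.0, h=1e-5)
N = 1 / current_resources
itn = importance * T * N

print(f"direct: {direct:.6e}, I*T*N: {itn:.6e}")
```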

I think this is on the right track, though as you say it's a bit clumsy. There is a similar formalism called the 'Kaya identity' (see Google; it's well known) with the same issues. I'm trying to develop a slightly different and possibly more useful formalism or formula (but I may not succeed).

>in-depth marginal cost-effectiveness analysis.

I'd recommend finding an easy to remember name for the proposal.

Marginal Efficiency Gain Analysis? (MEGA)

something metaphorical? (what well known existing thing does something similar?)

Also some worked examples will both help cement the idea and show possible areas of improvement.

I think this is the core of describing the issue and why we don't need to talk about neglectedness as a separate factor from tractability! I have found this a useful and understandable visual interpretation of the ITN framework.

One thing I worry about with the ITN framework is that it seems to assume smooth curves: it seems to assume that returns diminish as more (homogeneous) resources are invested. I think this is much more applicable to funding decisions than to career decisions. Dollars are more easily comparable than workers, and problems need a portfolio of skills. If I want to assess the value I could have by working on a particular problem, I'd do better to ask whether I can fill a gap in that area than to ask what the overall tractability of general, homogeneous human resources is.

Thanks for this, Halstead. Thoughtful article.

I have one push-back, and one question about your preferred process for applying the ITN framework.

1. After explaining the 80K formalisation of ITN you say

I think the answer is that in some contexts it's easier to calculate each term separately and then combine them in a later step, than to calculate the cost-effectiveness directly. It's also easier to sanity check that each term looks sensible separately, as our intuitions are often more reliable for the separate terms than for the marginal cost effectiveness.

Take technical AI safety research as an example. I'd have trouble directly estimating "How much good would we do by spending $1000 in this area", or sanity checking the result. I'd also have trouble with "What % of this problem would we solve by spending another $100?" (your preferred definition of tractability). I'd feel at least somewhat more confident making and eye-balling estimates for

I do think the tractability estimate is the hardest to construct and assess in this case, but I think it's better than the alternatives. And if we assume diminishing marginal returns we can make the tractability estimate easier by replacing it with "How many resources would be needed to completely solve this problem?"

So I think the 80K formalisation is useful in at least some contexts, e.g. AI safety.

2. In the alternative ITN framework of the Founders Pledge, neglectedness is just one input to tractability. But then you score each cause on i) the ratio importance/neglectedness, and ii) all the factors bearing on tractability except neglectedness. To me, it feels like (ii) would be quite hard to score, as you have to pretend you don't know things that you do know (neglectedness).

Wouldn't it be easier to simply score each cause on importance and tractability, using neglectedness as one input to the tractability score? This has the added benefit of not assuming diminishing marginal returns, as you can weight neglectedness less strongly when you don't think there are DMR.
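To make point 1 concrete, here is a minimal Python sketch of the three-factor product as this thread describes 80K's formalisation: importance as good done per % of the problem solved, tractability as % of the problem solved per % increase in resources, and neglectedness as % increase in resources per extra dollar. Every number below is a hypothetical placeholder, not an estimate for AI safety or anything else; the sketch only shows that each term can be estimated separately and that the units cancel to good done per extra dollar.

```python
# Hypothetical inputs (placeholders, not estimates from the post or from 80K).
good_per_pct_solved = 1_000_000.0          # importance: units of good per 1% of problem solved
pct_solved_per_pct_more_resources = 0.01   # tractability: % solved per 1% increase in resources
current_resources_usd = 10_000_000.0       # resources already devoted to the problem

# Neglectedness: % increase in resources bought by one extra dollar.
pct_more_resources_per_usd = 100.0 / current_resources_usd

# Units: (good / %solved) * (%solved / %resources) * (%resources / $) = good / $
good_per_usd = (good_per_pct_solved
                * pct_solved_per_pct_more_resources
                * pct_more_resources_per_usd)

print(f"marginal good per extra dollar, approx: {good_per_usd:.4g}")
```

Each input can be eyeballed on its own, which is the claimed advantage over guessing `good_per_usd` in one step.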

Hmmm. I don't really see how this is any harder than, or different from, your proposed method, which is to figure out how much of the problem would be solved by increasing spend by 10%. In both cases you've got to do something like working out how much money it would take to 'solve' AI safety. Then you play with that number.

I don't see how you get this from the 80k article. On my reading, their definition of importance is just the amount of good done (rather than good done per % of problem solved), and their definition of neglectedness is just the level of resources (rather than the percentage change per dollar). You should be clear that you're giving an interpretation of their model, and not just copying it.

Oops, I was wrong. I had skipped the intro section and was looking at the definitions later in the article.

I think your critique of the ITN framework might be flawed (though I haven't read section 2 yet). I assume some of my critique must be wrong, as I still feel a bit confused about it, but I really need to get back to work...

One point that I think is a bit confusing is that you use the term marginal cost-effectiveness. To my knowledge this is not an acknowledged term in economics or elsewhere. What I think you mean instead is the average benefit given a certain amount of money.

Cost-effectiveness is (according to Wikipedia at least) generally expressed as something like 100 USD/QALY. This is done by looking at how much a program costs and how many QALYs it created. So we get the average benefit of each $100 for the program by doing this. However, we gain no insight into what happened inside the program. Maybe the first 100 USD did all the work and the rest ended up being fluff; we don't know. More likely, the money had diminishing marginal returns.
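To illustrate how a single average figure can hide diminishing returns, here is a hypothetical Python sketch. The program's QALY output is assumed to follow a square-root curve of spending, chosen purely for illustration; `qalys`, `budget` and `chunk` are made-up names and values.

```python
import math


def qalys(spend_usd: float) -> float:
    """Assumed concave benefit curve: QALYs produced by total spending (illustrative)."""
    return math.sqrt(spend_usd)


budget = 10_000.0
chunk = 100.0

# Average cost-effectiveness: total cost divided by total QALYs (USD per QALY).
average_usd_per_qaly = budget / qalys(budget)

# Cost-effectiveness of the first and the last $100 chunk of the same program.
first_chunk_usd_per_qaly = chunk / (qalys(chunk) - qalys(0.0))
last_chunk_usd_per_qaly = chunk / (qalys(budget) - qalys(budget - chunk))

print(f"average:    {average_usd_per_qaly:.1f} USD/QALY")
print(f"first $100: {first_chunk_usd_per_qaly:.1f} USD/QALY")
print(f"last $100:  {last_chunk_usd_per_qaly:.1f} USD/QALY")
```

Under this assumed curve the program averages 100 USD/QALY, but the first $100 costs about 10 USD/QALY and the last about 200 USD/QALY, so the average alone says little about the next dollar.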

When talking about tractability you say:

You would know cost-effectiveness if you knew amount spent so far / amount of good done. You know the amount spent from neglectedness, but you don't know the amount of good already done with the money spent. I guess marginal cost-effectiveness = average benefit from X more dollars. Let's say that this means doubling the amount spent so far. I don't think we can construe this as marginal, though, as doubling the money is not an 'at the margin' change. I think, then, that tractability gives you the average benefit from X more dollars (so there's no need for scale).

We still need neglectedness and scale though to do a proper analysis.

Scale because if something wasn’t a big problem, why solve it? And to look at neglectedness let's use some made-up numbers:

Say that we as humanity have already spent 1 trillion USD on climate change (we use this to measure neglectedness) and got a 1% reduction in the risk of an extinction event (we use this to calculate the amount of good = 0.01 × present value of all future lives). That gives us cost-effectiveness (cost/good done). We DON'T know, however, what happens at the margin (if we put more money in). We just have an average. Assuming constant returns may seem (almost) reasonable for an intervention like bed net distribution, but it seems less reasonable when we've already spent 1 trillion USD on a problem. Then what we really need to know is the benefit of, say, another 1 trillion USD. This, I think, is what 80k's tractability measure is trying to get at: the average benefit (or cost-effectiveness) of another hunk of money/resources.

So defending neglectedness a bit. If we think that the marginal benefit to more money is not constant (which seems eminently reasonable) then it makes sense to try to find out where we are on the curve. Neglectedness helps to show us where we might be on the curve, even though we have little idea what the curve looks like (though I would generally find it safe to assume decreasing marginal returns). If we're on the flat bit of the diminishing marginal returns curve then we sure as hell want to know, or at least find evidence which would indicate that to be likely.

So then neglectedness is trying to find where we are on the curve, which will help us understand the marginal return to one more person/dollar entering (the true margin). This might mean that even if a problem is unsolvable, there could be easy gains to be had in terms of reducing risk on the margin. For something that is neglected but not tractable, we might get huge benefits by throwing a few people/dollars in (e.g. x-risk reductions), but that might peter out really quickly, making it intractable. It would then be less attractive overall, because putting a lot of people in would not be worth it.

Tractability says: if we were to dump lots more money in, what are the average returns going to look like? If we are now on the flat part of the curve, average returns might be FAR lower than they were in a cost-effectiveness analysis of what we already spent (average returns of past spending).
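The three quantities in this comment (average returns of past spending, the return at the true margin, and the average return of another large chunk) can be separated on any concave curve. Here is a hypothetical Python sketch reusing the made-up figure of 1% risk reduction for $1 trillion; the logarithmic curve and the curvature parameter `C` are assumptions for illustration, not estimates of climate spending.

```python
import math

# Hypothetical calibration (made-up, echoing the comment's made-up numbers):
# $1 trillion spent so far has bought a 1% (0.01) reduction in risk.
SPENT_SO_FAR = 1.0   # trillions of USD
C = 0.1              # assumed curvature parameter, trillions of USD
A = 0.01 / math.log(1.0 + SPENT_SO_FAR / C)  # chosen so that p(SPENT_SO_FAR) = 0.01


def p(r: float) -> float:
    """Assumed concave curve: risk reduction achieved with r trillion USD."""
    return A * math.log(1.0 + r / C)


def marginal(r: float) -> float:
    """Derivative dp/dr of the assumed curve at spending level r."""
    return A / (C + r)


# What a cost-effectiveness study of past spending sees.
past_average = p(SPENT_SO_FAR) / SPENT_SO_FAR
# Return on the next dollar, i.e. where neglectedness locates us on the curve.
true_margin = marginal(SPENT_SO_FAR)
# Average return over another $1T, the commenter's reading of tractability.
next_chunk_average = (p(2 * SPENT_SO_FAR) - p(SPENT_SO_FAR)) / SPENT_SO_FAR

print(f"past average:      {past_average:.5f} per $1T")
print(f"marginal at $1T:   {true_margin:.5f} per $1T")
print(f"next $1T, average: {next_chunk_average:.5f} per $1T")
```

On any concave curve the ordering is the same (past average, then true margin, then next-chunk average, from highest to lowest); the assumed curve only fixes how large the gaps are.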

Maybe new intuitions for these:

Neglectedness: How much bang for the buck do we get for one more person/dollar?

Tractability: Is it worth dumping lots of resources into this problem?

I think you identified the same problem I saw. If you have a small problem, then there's no reason to call it 'neglected' if you put enough resources into solving that small problem. You have to put all problems into context: there's no reason to spend a lot of resources to 100% solve a small problem when you put no resources into trying to solve a big problem. This is like spending a lot of money on sandwiches to solve a temporary hunger problem for a few people, while 'neglecting' the entire issue of global hunger or food scarcity.

Some of the images in the post need updating; they don't show up.

This is an interesting (or 'thought-provoking') article, and it linked to other articles (mostly on 80,000 Hours) which in turn linked to others (e.g. B. Tomasik's blog), as well as the TED talk by MacAskill. I had skimmed some of the 80,000 Hours articles before, but skimming them again clarified some issues, and I realized I had missed some points in them before.

(One point I had missed was the article by Tomasik on why many charities may not differ all that much in their effectiveness. I think some cases can be distinguished, for example a 'corrupt charity' (which spends most of its money on salaries and fundraising; there have been many in my area, and they make up data about how much good they have done), or charities that do research on interventions 'way out of the scientific mainstream', which are often not peer reviewed by other scientists even when they claim to be scientific. There are also cases of large charities which spend a lot of money on 'disaster relief', often in foreign countries (I'm in the US), but on review one cannot find out how most of the money was spent.

This is why my limited charitable giving mostly goes to small projects I'm somewhat familiar with. Also, big charities often have a lot more resources and ways to generate revenue, so even if they do reasonable work and could use more resources, I spread my donations, partly because of my study of ecology.)

So far, I don't see a really succinct formula which can capture the complexity of these issues (apart from the very basic ones in the paper, i.e. using the INT framework you end up with Effectiveness = good done/$ spent).

I often hear ideas like: it's better to spend a lot of money on issues like malaria or deworming because these appear to have 'good' returns in terms of QALYs, and also because by saving the lives of a lot of people you may save some 'potential Einsteins', 'Newtons' or 'Hawkings', or some other famous genius who may help save a lot of future humanity. But then others say it would be better to spend the same amount of money funding someone on the planet right now to research how to stop an asteroid from causing the extinction of all humanity.

It might cost as much to fund 5 people doing research on asteroid hazards as it would to fund 1000s of people (i.e. 'giving as consumption') to avoid death in infancy, so it's hard to calculate scale and neglectedness, as well as tractability.

(E.g. the TED talk, while discussing impressive gains over time in human development indicators, sort of glosses over some 'complicated issues', such as the fact that while 'democratization' has increased, many democracies and semi-democracies (places with elections), such as Venezuela, Brazil, even the USA, the UK and the rest of the EU, Israel, Kenya, South Africa, India, Russia, Syria, Egypt, Turkey, etc., seem far from ideal. Also, while life expectancy (and populations) have gone up, these also cause demographic and environmental problems, including ethnic conflict, biodiversity loss, etc.)

So there appears to be some more research to be done on this problem. I'm even working on my own model (and related research is being done by many people), though I don't know if this is 'effective' (though at least it's relatively low cost; some very powerful ideas were produced on a shoestring budget).

Thank you for this! I think this sort of challenge and thinking is really valuable and exactly what the EA community needs.

For comparison, the SoGive method of analysing charities advises focusing on marginal cost-effectiveness rather than on the ITN framework. I absolutely welcome this thoughtful analysis of the framework.

(Full disclosure: I developed the SoGive method of analysing charities; it's essentially inspired by EA thinking, but not particularly focused on the ITN framework)

This is how I think about the ITN framework:

What we ultimately care about is marginal utility per dollar, MU/$ (or marginal cost-effectiveness). ITN is a way of proxying MU/$ when we can't easily estimate it directly.

Definitions:

Note that tractability can be a function of neglectedness: the amount of the problem solved per dollar will likely vary depending on how many resources are already allocated. This is to capture diminishing returns, as we expect the first dollar spent on a problem to be more effective in solving it than the millionth dollar.

Then to get MU/$ as a function of neglectedness, we multiply importance and tractability: MU/$ = utility(total problem) * % solved/$ (=f(resources)). Now we have MU/$ as a function of resources, so to figure out where we are on the MU/$ curve, we plug in the value of resources (neglectedness).

Here's an example without diminishing returns: suppose solving an entire problem increases utility by 100 utils, so importance = 100 utils. And suppose tractability is 1% of the problem solved per dollar. Note that this doesn't vary with resources spent, so there aren't diminishing returns. Then MU/$ = 100 utils * 0.01/$ = 1 util/$. Here, neglectedness (defined as resources spent) doesn't matter, except when spending hits $100 and the problem is fully solved.

Now let's introduce diminishing returns. Let's denote resources spent by x. As before, importance = 100 utils. But now, suppose tractability is (1/x)% of the problem solved per dollar. Now we have diminishing returns: the first dollar solves 1% of the problem, but the tenth dollar solves 0.1%. Here MU/$ = 100 utils * (1/x)%/$ = 1/x utils/$. To evaluate the MU/$ of this problem, we need to know how neglected it is, captured by how many resources, x, have already been spent.

Hence, importance and tractability define MU/$ as a function of neglectedness, and neglectedness determines the specific value of MU/$.
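The two worked examples above can be written as functions of resources already spent, `x`, which makes the role of neglectedness explicit. This is a minimal Python sketch; the function names are hypothetical, and `x` is counted so that the first dollar corresponds to `x = 1`, matching the examples.

```python
IMPORTANCE = 100.0  # utils gained by solving the whole problem


def mu_per_dollar_constant(x: float) -> float:
    """No diminishing returns: 1% of the problem solved per dollar, regardless of
    resources already spent, until the problem is fully solved at $100."""
    tractability = 0.01 if x < 100 else 0.0  # fraction of the problem solved per dollar
    return IMPORTANCE * tractability


def mu_per_dollar_diminishing(x: float) -> float:
    """Diminishing returns: (1/x)% of the problem solved per dollar,
    where x (>= 1) is the resources already spent."""
    tractability = (1.0 / x) / 100.0  # (1/x)% expressed as a fraction
    return IMPORTANCE * tractability


# Neglectedness (x) picks out a point on each MU/$ curve.
for x in (1, 10, 50):
    print(x, mu_per_dollar_constant(x), mu_per_dollar_diminishing(x))
```

In the first function `x` only matters once the problem is fully solved; in the second, MU/$ cannot be evaluated at all without it.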

The examples given above all have scale; however, scale/importance should not be ignored. If X has great marginal cost-effectiveness but needs only a million dollars to be solved, it is a waste of time for the EA community to think about and discuss it. That kind of money can easily be raised, and once the problem is solved it can be forgotten.

On economic growth, it is not clear that investment in economists was the reason for policy change in India or China. It is/was obvious to everyone that the Western world was the most powerful, and everybody around the world was looking for lessons from the West. In fact, most of the developing world tried to industrialize quickly (via various paths, some successful, some not). Also, Lant's analysis ignores other factors that may have contributed to economic growth.

Thanks for completing this analysis on the advantages and disadvantages of the INT framework! I particularly like you clearly enumerating your points.

I think there are some important points not adequately covered in the alternative INT framework and discussion of cost-effectiveness estimates. Namely:

(1) To a significant extent cause prioritization involves estimating long-term counterfactual impacts

(2) Neglectedness could be instrumental to estimating long-term counterfactual impacts, because the more neglected a cause, the greater its potential to translate into far-future trajectory changes, as opposed to accelerating proximate changes