Summary: This is an FAQ on the AI Safety GiveWiki at ai.givewiki.org. It’s open to anyone, and we’re particularly trying to attract s/x-risk projects at the moment! Some of the questions, though, apply to impact markets more generally. This document will give you an overview of what it is that we’re building and where we’re hoping to go with it.

But before we jump into the FAQ, a quick announcement:

We are looking for new projects and expressions of interest from donors!

- If you’re a donor who doesn’t want to spend a lot of time researching your donations, you’ll be able to follow sophisticated donors who have skin in the game. Our impact market doubles as a crowdsourced charity evaluator for all the small, speculative, potentially-spectacular projects across all cause areas. You’ll be able to tap into the wisdom of our top donors to boost the impact of your donations. Please indicate your interest!

- If you’re a donor who has insider knowledge of some space of nonprofit work or likes to thoroughly research your donations, you can use the platform to signal-boost the best projects. You get a “donor score” based on your track record of impact, and the higher your score, the greater your boost to the project. This lets you leverage your expertise for follow-on donations, getting the project funded faster. Please indicate your interest!

- Are you fundraising for some project, as an individual or an organization? Please post it to our platform. There are no requirements when it comes to the format or scope, so you can copy-paste or link whatever proposals you already have. We want to make it easier for lesser-known projects to find donors. We score donors by their track record of finding new high-impact projects, which signal-boosts the projects that they support. Attention from top donors helps you be discovered by more donors, which can snowball into greater and greater fundraising success.

- If you are a philanthropic funder, we want to make all the local information accessible to you that is distributed across thousands of sophisticated donors and helps them find exceptional funding gaps. We signal-boost that knowledge and make it legible. You can use cash or regranting prizes to incentivize these donors, or you can mine their findings for any funding gaps that you want to fill.

Please let me know if you have any further questions, below or in a call.

General questions

What problems does it solve?

Donors and grant applicants face the following three problems at the moment:

- Charity entrepreneurs are known to waste a lot of time on redundant grant applications – each tailored a bit to the questions of the respective funder but otherwise virtually identical in content.

- Donors, especially if they are “earning to give,” often don’t have the time to do a lot of vetting. Funds and donor lotteries address this, but a team of fund managers needs to win their trust first, which is not a given, and maybe they don’t want to take months off work in case they win the lottery.

- Larger funders generally don’t want to invest much more time and money into vetting a project than it would cost them to fund it. Hence funders are forced to ignore projects that are too small.

This is our solution:

- We promote givewiki.org as the one platform where grant applicants can publish their project proposals. No particular format: There’s a Q & A system though for funders/donors to ask further questions as needed. Questions and answers are public too. Funders can subscribe to notifications of new, popular projects in their cause areas.

- When a donor supports a project, they can record that. When a project claims to have succeeded, some experts evaluate it. Eventually early donors to successful projects (“top donors”) will stand out as having unusual foresight, especially if they can repeat this feat several times. Donors who don’t have the time to do as much research can follow the top donors to inform their own donations.

- Larger funders can (1) also follow the implicit recommendations of top donors, (2) encourage top donors with cash or regranting prizes, and (3) recruit grantmakers from the set of top donors.

There are a host of other benefits in various specific scenarios. You can read about them on our blog.

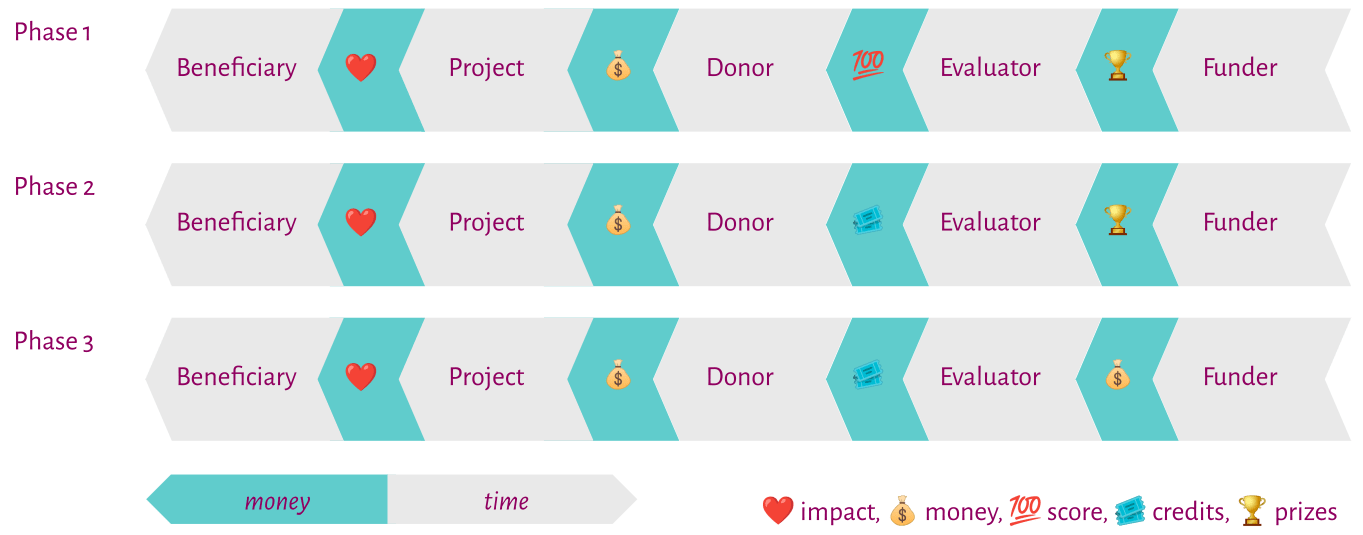

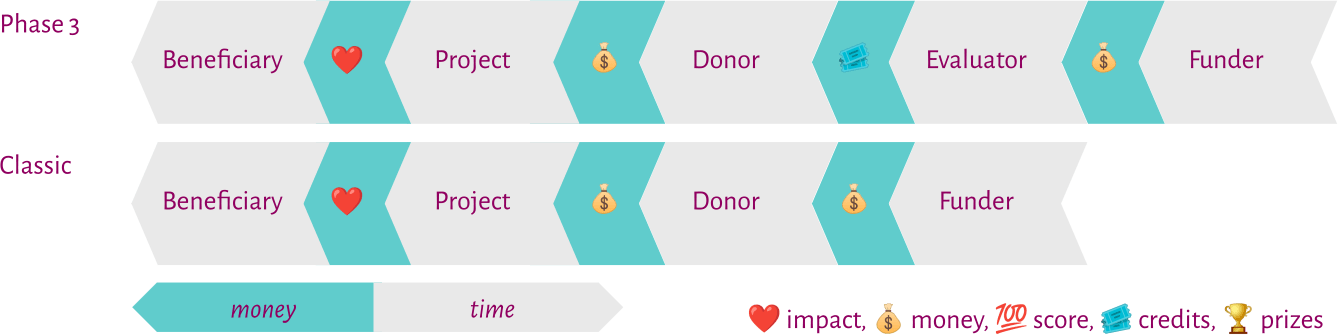

Eventually we want to grow this into an ecosystem akin to the voluntary carbon credit market (phase 3). But for now only phase 1 is relevant.

What is a project?

What we call a project is some set of actions that creators or charity entrepreneurs plan to carry out within some time frame. Good examples are blog articles, scientific papers, campaigns, courses, etc.

Whole charities (like the whole of the Center on Long-Term Risk rather than any one piece of research) are a bit of an awkward contingency because they don’t have any obvious “completion date,” but they qualify too. We’re considering methods for how we can evaluate them too.

What is a creator or charity entrepreneur?

We call a creator or charity entrepreneur someone who publishes a project on our website to fundraise for it. They are usually researchers, founders, entrepreneurs, etc. Founder would be another obvious choice, but the term creator is more general and harder to confuse with funder than founder.

What is a specialist donor; what is a generalist donor?

Both of them give money to projects, but their ambitions are different.

Generalist donors either don’t have the time or the specialized knowledge to evaluate projects. They want to use the impact market like a black box, a charity evaluator that makes recommendations to them.

Specialist donors have the time or the special knowledge to form a first-hand opinion on projects – be it because they are experts in a relevant field, because they are experts in startup picking, or simply because they are friends with the people who run a particular project. They use the impact market to make recommendations and thereby leverage the donations of the donors who rely on them. They may also be after the prizes that funders might provide!

What is a funder?

Funders are basically large donors. They can behave exactly like other donors, but they can also provide prizes to incentivize other donors.

Questions about the platform

What is the donor score?

The score is computed in three steps:

- The contribution of each donor to a given project is calculated as a fraction that is greater if the donor contributed to a project earlier. Earliness here is not about calendar time but about the order of the donations, so it doesn’t matter whether there’s a day or a year between the first and the second donation. The standard score also takes the size of the donation into account.

- Eventually many projects will complete and then get evaluated. The result is a score that expresses the evaluators’ aggregate opinion on the relative impact of the project.

- Finally the per-project contributions and the per-project scores are multiplied and summed up for each donor. This results in the scores that form the donor ranking.
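The three steps above can be sketched in a few lines of Python. This is only an illustration, not the platform's actual formula: the weighting `amount / position`, the normalization to fractions that sum to 1, the plain average over evaluator scores, and all function and field names are assumptions made for this example.

```python
from collections import defaultdict


def contribution_fractions(donations):
    """Step 1: donations is a list of (donor, amount) in the order they were made.

    ASSUMPTION: the k-th donation is weighted by amount / k, so earlier and
    larger donations count for more. Only the order matters, not the dates.
    """
    weights = defaultdict(float)
    for position, (donor, amount) in enumerate(donations, start=1):
        weights[donor] += amount / position
    total = sum(weights.values())
    if total == 0:
        return {}
    return {donor: weight / total for donor, weight in weights.items()}


def donor_scores(projects):
    """Steps 2 and 3: multiply per-project fractions by the project score and sum per donor.

    ASSUMPTION: the project score is the plain mean of the evaluators' scores.
    """
    scores = defaultdict(float)
    for project in projects:
        evaluator_scores = project["evaluator_scores"]
        project_score = sum(evaluator_scores) / len(evaluator_scores)
        for donor, fraction in contribution_fractions(project["donations"]).items():
            scores[donor] += fraction * project_score
    return dict(scores)


# Hypothetical data: two equal donations, the first one counts double.
example_projects = [
    {"donations": [("alice", 100), ("bob", 100)], "evaluator_scores": [30.0, 60.0]},
]
example_scores = donor_scores(example_projects)
ranking = sorted(example_scores, key=example_scores.get, reverse=True)
```

In this toy case both donors gave the same amount, but the earlier donor ends up with twice the score of the later one.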

When and how are projects evaluated?

Each project has an end date. The project creator can edit this date in case things take longer than planned, but at some point the date will be in the past and the project will really be complete. At this point it can apply to be evaluated.

We want to get a number of evaluators on board to consider the project artifacts and pass judgment on them.

The focus here will not be to make great contributions to priorities research but rather, if the project is (say) a book, to establish whether the book got written at all and whether it looks like someone has put effort into it. Ethical value judgments will be embedded in these assessments but we can hopefully find multiple evaluators so that, in controversial cases, the scores can average out.

The guideline for the calibration of the scores will be something along these lines: Suppose this book/paper/etc. didn’t exist, and I wanted to make it happen. How much would I have to pay? Or conversely for harmful projects: If there were a fairy who lets me undo this book/paper/etc., how much would I pay to have it undone?

What are your long-term plans?

There have been changes to the funding landscape in 2022. Such vicissitudes keep our long-term plans in flux. But at the moment we’re aiming to create a market that is similar to the voluntary market for carbon credits. (These are also called “carbon offsets,” but the term “offset” would be confusing in our context.)

- In the first phase we want to work with just the score that is explained above. Any prizes will be attached to such a score.

- In the second phase, we want to introduce a play money currency that we might call “impact credit” or “impact mark.” The idea is to reward people with high scores with something that they can transfer within the platform so that incentives for donors will be controlled less and less by the people with the prize money and increasingly by the top donors who have proved their mettle and received impact credits as a result.

- Eventually, and this brings us to the third phase, we want to understand the legal landscape well enough to allow trade of impact credits against dollars or other currencies. We would like for impact credits to enjoy the same status that carbon credits already have. They should function like generalized carbon credits.

Last time I checked you were doing something with impact certificates, though?

What we’ve been calling a “project” is something that can issue one or more impact certificates. Our platform still lists the existing certificates, but that’s merely an archive at this point. There is a chance we might return to this format, especially if we choose to found a nonprofit branch of our organization, but for the moment we have no such plans.

We’ve encountered three problems with impact certificates:

- Charity entrepreneurs are hesitant to issue them because they need to define what their plans are and who their contributors are in some detail and commit to never issuing overlapping certificates. That requires some thought and coordination, and without a fairly strong promise of funding, few charity entrepreneurs are ready to put in the time and effort. That was compounded by the problem that hardly any funders were interested in using our or any impact market, so that there never was any such “strong promise of funding.”

- Trading impact certificates is only legal between accredited investors in the US. Trade between accredited investors internationally (esp. US, Canada, EU, UK, and India) is sufficiently recondite of a problem that we haven’t found any experts on it yet. Additionally, we would not be allowed to help these investors coordinate either without running afoul of broker-dealer regulations. Figuring this out just for the US and accredited investors is something that can easily cost upward of $100k in lawyer fees and might even then just fail. The combination of costs, risks, and limitation to one country and only rich people was too much for us, at least at this level of scale.

- Funders were very hard to find. We concentrated on outreach to funders for several months, had talks at several Effective Altruism Globals and other conferences, but in the end only got two funders interested (among them Scott Alexander though!), who promptly lost most of their funding because it was tied to the Future Fund. The funding situation changed to become even more unfavorable, so that we were no longer optimistic that it might still become easier to find funders.

Finally – and this hasn’t become a problem but would have – a lot of interesting financial instruments, such as perpetual futures, will remain inapplicable to impact certificates because each one of them is doomed to have very little liquidity. Most projects on the GiveWiki will require some $10–100k in seed funding to get off the ground. The fully diluted market cap of even the most successful projects will probably almost never exceed $1–10m. The circulating supply will be much less still. Such assets are a good fit for bonding curve or English auctions but it would be useless to try to set up order books, indices, and futures markets for them. We previously hoped to bucket them to alleviate this problem. Impact credits will hopefully one day serve this purpose.

Hence, we’ve removed impact certificates from our plans and introduced projects instead, which are perfectly laissez faire about their definition. We’ve also opted to allow no trade of anything that can be turned into dollars. We might reboot markets for impact certs when the overall conditions change.

You can think of the donor score as analogous to the total value that you would hold in retired certificate shares if we still had those. (“Retired,” a.k.a. “consumed” or “burned,” shares are ones that cannot be sold anymore.) But it’s probably just confusing to think of it that way.

The only monetary rewards that donors may receive are prizes if they make it to the top of our donor ranking.

Questions from charity entrepreneurs

What does this platform do for me?

That hinges on how much promise your project has.

Let’s say it has a lot.

- Do you have some donors who already trust you? Convince them to donate, then bring them on the platform to register their donations. As early donors they’ll be rewarded richly by the scoring.

- Do you know any of the top donors? If the #1 donor is an AI specialist but your project is in animal rights, getting in touch with a top donor who is a specialist in your field may pay off even if they’re only donor #8 because animal rights donors will tend to follow the recommendations of other animal rights donors.

- Now your project has gotten a donation from a top donor? That’ll wash it way up in our list of projects so that donors will see it even if they’re not yet following that exact donor!

To wit: As soon as you have any fundraising success, you can leverage it to build greater success. The platform even does it for you!

What sorts of projects can I post?

Basically anything goes… so long as it’s legal and not super risky!

We review every project and eliminate any that seem to us like they might be harmful. But please also make sure yourself that you don’t include any classified information or info hazards in the description because all projects are public. (Would you like to make your project only accessible to logged-in users? Send us a message through the Intercom button in the bottom right to indicate your interest in this feature!)

The ideal project is something finite that produces artifacts. Our evaluators will have an easy time with projects that fundraise for books, articles, or papers because they can read them to assess them. They’ll have a hard time with projects that are about whole organizations because an organization typically does a lot and they also can’t look into the future to know what great things the organization might still accomplish. Expect organizations to be undervalued, not because they suck but because so much of what they do is shrouded by the future and closed office doors.

How long does it take to submit a project?

There’s no required format. So if you already have a funding application lying around because you already applied for funding from some foundation, then just copy-paste or link it.

Other than that, you just need to enter a title and someplace where people can send you their donations, such as a PayPal or Stripe page. You can add some tags to make it easier for your project to be found. All in all this should take no longer than 5 minutes.

If you have no application written up yet, it’ll take longer. It’s up to you how comprehensive you want to make it. One thing I like to do is to write down just the essentials (if it’s short, it’s more likely to get read too), and then to include a link to a site where people can book a call with me to learn more.

Alternatively they also have the option to ask questions in the Q & A section. No need to anticipate them all in your description when you can just respond to the ones that actually come up.

Amber Dawn might also help you with the writing.

Questions from specialist donors

What does this platform do for me?

Have you supported any charities early on that later made it big?

I, for one, would love to know what fledgling organizations you support today so I can get in early too. And for you that means that suddenly your donations count for more!

Some of my friends donate up to $100,000 per year. They don’t have much time to research their donations, so they, too, would love to know about that fledgling organization that you support. Even if you just donate $100 to the organization, your $100 might leverage $100,000 from the donors who trust your judgment!

That’s one thing that the platform can do for you.

Another is that we’re hoping for larger funders to come in and to reward our top donors. They might opt for cash prizes or for regranting prizes. Either way you’ll have a lot more money to give away if you unlock any of those prizes!

Can I make money with this?

My hope is that eventually a substantial number of people can turn donating into their full-time job. They make small but really smart donations, earn high scores as a result, and then make it all back several times over from the prizes that they win.

As of early 2023 we’re not there yet, but you might as well start building up your score already.

Project X doesn’t make sense if it receives less than $10k. I love it, but I only have $1k. What do I do?

A Kickstarter type of system would solve this, right? We can’t easily implement such “assurance contracts” ourselves, but we can help you coordinate: We could offer a way for donors to pledge that they want to donate $x if all donors together pledge to donate $X. Then once the sum of all pledges reaches $X, you’ll all get notified and can dispatch your donations.
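The coordination step described above could look roughly like the following sketch. It only tracks pledges and reports when the threshold $X is reached; it moves no money. The function name, the data layout, and the example donors are hypothetical and not part of the platform.

```python
def pledge_status(pledges, threshold):
    """Summarize a pledge drive.

    pledges: dict mapping donor name -> pledged amount in dollars ($x each).
    threshold: total that must be pledged before anyone donates ($X).
    Returns the running total, whether the threshold is reached, and which
    donors should be notified to dispatch their donations.
    """
    total = sum(pledges.values())
    reached = total >= threshold
    return {
        "total": total,
        "reached": reached,
        "notify": sorted(pledges) if reached else [],
    }


# Hypothetical drive for a project that needs $10k.
pledges = {"ana": 1_000, "ben": 4_000}
before = pledge_status(pledges, threshold=10_000)

pledges["chloe"] = 5_000
after = pledge_status(pledges, threshold=10_000)
```

Nobody is asked to donate while the total is below the threshold, so a $1k donor runs no risk of funding a project that stays under its minimum viable budget.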

Does that sound interesting? Please let us know, e.g., through the Intercom button in the lower right. We’ll prioritize the feature more highly.

Questions from generalist donors

What does this platform do for me?

You want to donate but maybe you don’t have time to do a lot of research or you want to donate in a field where you don’t have the requisite background knowledge. Hence you’re dependent on friends, funds, or charity evaluators to suggest good giving opportunities.

But all of these have limitations: Your friends probably know of many of the same giving opportunities, so you might be overlooking even better ones. The same is true of funds, though they receive applications, which alleviates the problem. Conversely, you may know and trust them less than some of your friends. The track record of retrospective self-evaluation at funds is thin. Finally charity evaluators have a wholly different set of limitations: They put a lot of effort into their evaluations, so that they can’t evaluate projects whose funding gaps are so small that they don’t warrant the evaluators’ efforts. Plus charity evaluators don’t exist for many cause areas.

We want to solve that for you. All you need to do when you want to donate is to turn to our platform. You can:

- View our top donor ranking, pick out top donors who share your values, and then follow their recommendations, or

- Filter projects according to your values (using the tags) and pick out the ones that have received most top donor support.

Today we’re just getting started, but over the coming months we want to establish a new, bottom-up, grassroots type of funding allocation mechanism that scales down to the smallest projects, is fully meritocratic, and doesn’t know geographic limits.

How do I know that the donors I’m following aren’t just good forecasters but also have good ethics?

Our plan is to hand off power to top donors gradually. First all their forecasting will bottom out at the judgments of impact evaluators that we will hire. That’ll ensure that they’ll be sophisticated altruists, but it will not immediately steel us against our own biases. Later we want to recruit impact evaluators from our top donors, increasing the organic, bottom-up meritocracy of the platform.

But then we want to transition to phase 2 of our rollout. Phase 2 will gradually put top donors on the same footing as evaluators until most evaluation is done by top donors. But even then our evaluators will still be around to steer the platform as needed to make sure it is not usurped by any amoral top donors.

How do I know that a project still has room for more funding?

We ask projects to publish their fundraising goals and stretch goals. If they have not done so, please ask them for that information in the Q & A section.

Questions from philanthropic funders

What does this platform do for me?

You can use the platform like any other donor to find great, new funding opportunities.

But we also have a special function for you: You can basically rent our top donors by offering regranting budgets to them. Those serve the dual purpose that (1) you’ll get top grantmaker talent for free, maybe even top grantmaker talent whose networks are relatively uncorrelated with yours, and (2) by announcing such a prize, you create an incentive for prospective top donors to show up and try to prove their mettle.

If that sounds interesting to you, please get in touch, e.g., through the Intercom button to the lower right or via hi@givewiki.org.

What if I’m unhappy with the scoring?

Are you? If so, we can easily build a custom score for you. You score the projects, and we aggregate all of your project scores into your own custom donor ranking. Please get in touch if that sounds interesting to you, e.g., through the Intercom button to the lower right or via hi@givewiki.org.

What if there are funders who defect against me by idly waiting for me to post the same prizes they want to see posted?

We’ve termed this problem the “Retrofunder’s Dilemma.” It’s easy to imagine a world in which there are several funders – just too many for them all to be really chummy with each other – who all insist on extremely niche scoring rules to make sure that they don’t reward any donations to good deeds that anyone else might reward too. But that would leave exactly the most uncontroversially good deeds unrewarded.

We’re far from this being a problem for our rewarding, alias retrofunding, at all and even farther from it becoming a greater problem for retrofunding than it is already for prospective funding. But if it becomes a problem, the abovelinked article lists three remedies that funders can implement and four that charity entrepreneurs can implement. Or that we can implement for them to establish coordination.

Questions about impact markets

Is the goal to replace the current funding mechanisms?

Not really, sort of in the way that airplanes didn’t replace bikes. We think that impact markets will be best suited for funding the long-tail of small, young speculative startup charity projects. But they will be rather uninteresting for projects with strong track records or otherwise safe, reliable success. They will also be uninteresting for projects that require a lot of funding from the get-go.

You can read more about the math behind these considerations on our blog.

The basic idea is that projects that are > 90% likely to succeed (according to some metric of success that the funder uses) don’t leave much room for an investor to make a profit while reducing the risk further for the funder.

Additionally, a risk-neutral funder is only interested in a risk reduction if it moves an investment from the space of negative expected value to the space of positive expected value. If a project is already 90% likely to succeed, it would have to be very expensive before it could become negative EV for a funder. Such an expensive project is then easily worth the time of the funder to evaluate prospectively rather than retrospectively.
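One way to make this argument concrete is the following sketch. It is a simplified reading of the reasoning above, not the model from the blog: it assumes a single project with a fixed cost, a fixed value to the funder on success, and zero value on failure. All function and parameter names are hypothetical.

```python
def prospective_ev(p_success, value, cost):
    """Funder pays the cost up front and gets the value only on success."""
    return p_success * value - cost


def retro_ev(p_success, value, prize):
    """Funder pays a prize only if the project has succeeded."""
    return p_success * (value - prize)


def market_helps(p_funder, p_investor, value, cost):
    """True if the funder would decline prospectively (negative EV by their
    own estimate) while an investor with estimate p_investor could still
    break even on a prize that is smaller than the value to the funder."""
    funder_declines = prospective_ev(p_funder, value, cost) < 0
    break_even_prize = cost / p_investor
    return funder_declines and break_even_prize < value


# Speculative project: the funder guesses 10%, a well-informed investor 50%.
speculative = market_helps(p_funder=0.1, p_investor=0.5, value=100_000, cost=20_000)

# Safe project: already positive EV for the funder, so nothing changes.
safe = market_helps(p_funder=0.9, p_investor=0.95, value=100_000, cost=20_000)

# A 90% project only turns negative EV once its cost exceeds 90% of its value.
break_even_cost_at_90 = 0.9 * 100_000
```

Under these assumptions the speculative project is unlocked by the market while the safe one is not, which matches the two cases discussed above.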

So impact markets (with risk-neutral funders) are most interesting for:

- Projects that seem very speculative to funders, e.g., because they are new and the funder doesn’t know the team behind the project,

- Projects that require little money to get started, so they’re not worth the time of the funder to review.

If highly risk-averse funders are involved, though, they may be happy to pay a disproportionate fee for a risk reduction from 10% to 0%! There are also funders who are limited by their by-laws to only invest in certain types of low-risk projects. In some cases impact markets may present a loophole for them to do good more effectively without incurring any illicit risks.

Is the goal to replace the current market mechanisms?

No. The financial markets have developed over the course of over a century and are accompanied by legislation that is usually phrased in such generic terms that it is nigh impossible to create a separate financial apparatus outside of it. Many cryptocurrency projects have tried to create market mechanisms beyond the reach of the law, but the law typically disagreed. More recently, there is instead a stronger push to welcome regulation and to reform the law to facilitate regulation.

We therefore consider it infeasible to try to replace the existing financial systems. Rather our goal is to create systems that reward the creation and maintenance of public, common, and network goods while interfacing with the existing financial systems in standard, regulated ways. (The closest parallel is the voluntary market for carbon credits.)

How good is it?

[This section has not been rewritten for the new “impact credits” approach. The differences are probably minor.]

We’ve been trying to get an idea of how good impact markets are by putting some rough estimates into a Guesstimate, but a lot of the factors are multiplicative and they are all hard to guess, so that the variance of the result is very wide.

Some key benefits are:

- There’ll be as much or more seed funding as there is today. The idea is that many investors will try many different things and try to think outside of the box. Often it’ll turn out that their calibration was off. They’ll invest into lots of projects and make less money back because they were wrong about how great all the projects will turn out. These investors will gradually select themselves out of the pool, but new ones will join. We don’t know how many ill-calibrated investors join for each that is well-calibrated, but we’ve seen some data that prize contests attract investments to the tune of up to 50x the prize money, so the average investor must be fairly ill-calibrated. Our model assumes that the value is probably around 1–20x.

- The allocational efficiency can be improved because investors can overcome language barriers. But much of the world speaks English, and the US dominates the world economy, so we’ve put this improvement at a factor of 1–3x.

- The allocational efficiency can be improved because investors are in different social circles. This factor seems more significant to us, and we’ve put it at 1–10x.

- The allocational efficiency can be improved because investors can draw on economies of scale. They can rent one server rack for all of their projects, or they can employ one HR person for all of them, etc. We’ve put this factor at 1–10x too.

- Charities can draw on much greater talent pools if they can use fractions of impact certificates to align incentives. (They can also pay bonuses tied to retro funding.) We think that the talent pool might grow by 1–5x.

- All of this frees up a lot of time for retro funders. Current prospective funders such as the Open Philanthropy Project have a lot of staff who would be excellent at a very practical brand of priorities research. When impact markets free up time for them, they can devote that time to research. Evidential cooperation in large worlds alone can serve as a likely existence proof that there is a lot more to know about global priorities. We very conservatively assume that improved understanding of priorities will boost the allocational efficiency by 1–10x.

The result of the model is that impact markets are unlikely to improve the current efficiency by less than 60x or by more than 11,000x.
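To show why multiplying several wide ranges produces such a wide result, here is a small Monte Carlo sketch. It is not the Guesstimate model itself. It assumes that each range listed above is a 90% interval of an independent lognormal distribution, which may differ from the original model, so its output need not reproduce the 60x to 11,000x figure. The factor labels are shorthand invented for this example.

```python
import math
import random

# Ranges taken from the list above; the labels are hypothetical shorthand.
FACTORS = {
    "seed_funding": (1, 20),
    "language_barriers": (1, 3),
    "social_circles": (1, 10),
    "economies_of_scale": (1, 10),
    "talent_pool": (1, 5),
    "priorities_research": (1, 10),
}

Z_90 = 1.645  # z-score bounding a central 90% interval of a normal distribution


def sample_factor(rng, low, high):
    """ASSUMPTION: (low, high) is the 90% interval of a lognormal distribution."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z_90)
    return rng.lognormvariate(mu, sigma)


def simulate(n_samples=20_000, seed=0):
    """Return sorted samples of the product of all factors."""
    rng = random.Random(seed)
    products = []
    for _ in range(n_samples):
        product = 1.0
        for low, high in FACTORS.values():
            product *= sample_factor(rng, low, high)
        products.append(product)
    return sorted(products)


def percentile(sorted_samples, q):
    """Nearest-rank percentile of already sorted samples, with q in [0, 100]."""
    index = min(len(sorted_samples) - 1, int(q / 100 * len(sorted_samples)))
    return sorted_samples[index]


samples = simulate()
p5, p50, p95 = (percentile(samples, q) for q in (5, 50, 95))
```

Because the factors multiply, their uncertainties add up on a log scale, so the gap between `p5` and `p95` spans orders of magnitude even though no single factor is wider than 1–20x.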

We think that this range is likely biased upward:

- Our model ignores black swan events that may occur with an unknown frequency and may be very harmful.

- It is well possible that some factor in the model actually turns out to be < 1x for some reason that we haven’t thought of.

- Finally, we’ve mentioned before that the multiplicativeness of the model makes it very easy for it to produce big numbers. This is the prime reason that we don’t greatly trust it.

You can find further discussion of the model in the comments on this post.

Can it go bad?

The biggest concern that we’ve had from the beginning in 2021 is that prize contests (such as impact markets) are general purpose: Anyone can use them – to incentivize awesome papers on AI safety or to incentivize terrorist attacks. In fact, promises of rewards in heaven could count as prizes. If we create tooling to make prize contests easier, there is the risk that said tooling will be used by unscrupulous actors too. The very concept of the prize contest could also count as an attention hazard.

Here is a summary of all of the risks that we’ve identified and our mitigation strategies.

A rich terrorism funder could, for example, copy our approach and build an analogous platform where they promise millions of dollars to donors who fund speculative approaches to terrorism, such as terror attacks that only work out in 1 in 10 attempts. We would not allow such projects on our platform or a scoring procedure with such goals, but that doesn’t keep terrorists from building their own clone of our platform.

This doesn’t need to be obviously ill-intentioned (though terrorists probably also consider themselves to be heroes). You could imagine someone cloning our platform to fund grassroots nuclear fusion research, which might lead to accidental nuclear chain reactions in the basements of hobby physicists in densely populated cities around the world.

The Impact Attribution Norm alleviates this problem to (roughly) the extent to which it is adopted (see the question about measurement above). Yet it is not obvious that it will reliably be applied the way we would like to see it applied. This article is a good summary of its limits. See also our comment. Consider for example:

- Someone might wrongly think that the impact evaluators will reward them for posting an article that contains some dangerous info hazards.

- This can also happen if they don’t think that the particular impact evaluators will reward them but just that at some point there will be impact evaluators that will reward them.

- Finally, it can happen that the impact evaluators are actually mistaken about the value of some impact and that their mistaken evaluation is predictable. This applies in particular (but not only) to actions that can turn out very well or very badly – might save lives or destroy civilization – but so happen to turn out well. The longer the duration between the launch of the project and the evaluation, the greater the risk that the prize committee will see only how well it turned out and ignore the other possible world where it did not.

These risks mostly seem like “black swan” risks to us – deleterious but highly unlikely risks. We’re also quite confident that we can prevent them from happening on our platform by carefully moderating all activity.

Finally, there is always the question of how easy it is already for unscrupulous actors to achieve their ends and why they are not doing it already. It is quite easy for an unscrupulous millionaire to promise a big reward for something like nuclear fusion simply by tweeting it. But this is not currently a major problem. So the legal safeguards (or some other mechanisms) that also apply to our solution must be working fairly well. That said, we’re not solely relying on them.

Isn’t it in the interest of funders to promise funding but then not pay up?

Seemingly the best outcome for funders is to incentivize excellent work with the promise of a prize, then not pay it out, and instead put the money into prospective funding of additional impactful work.

That is a shortsighted strategy as no one will trust a funder again if they’ve pulled this trick once. I would go further and suggest that donors should not rely on new funders to pay up unless they have a history of being trustworthy. For funders this means that it’s probably in their interest to gradually ramp up their prizes, so that they can build up trust more cheaply. Another option is escrow.

Eventually we hope to have tradable impact credits. Donors can then assume that if a funder suddenly vanishes, the price will drop to an unexpectedly low level, which other funders will immediately use to “buy the dip.”

Does uncertainty really decrease over time?

This article touches on this question. I don’t think it’s important whether there is always more evidence of impact at a later point. Impact markets will just be most interesting for projects for which that is true.

The second part of the answer is that we think that there is a substantial number of projects for which this is true.

An example: You can usually divide your uncertainty about how a project – say, a book – turns out into two multiplicative parts: the probability that the book gets written at all and the impact-over-probability distribution of the finished book if it gets written. Once you know whether the book got written or not, that product collapses into just the second factor (minus the “if …”).

This only goes through if you take uncertainty to mean something like the difference between the best and the worst outcomes, or between the 99th and the 1st percentile outcomes, which may be a bit unintuitive. If you think of uncertainty as variance, and your fully written book has either an extremely positive or an extremely negative impact, then added uncertainty over whether the book has really been written adds another cluster of neutral outcomes in the middle between the extremes. It does not reduce (or increase) the difference between the extremes, but it does reduce the variance.
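To make the difference between the two notions of uncertainty concrete, here is a minimal sketch with made-up numbers. It assumes a 50% chance that the book gets written and a finished book whose impact is either +100 or -100 with equal probability; an unwritten book counts as 0. None of these figures come from real projects, and `describe` is just a helper invented for this illustration.

```python
def describe(outcomes):
    """Return (spread, variance) for a list of (impact, probability) pairs."""
    mean = sum(x * p for x, p in outcomes)
    variance = sum(p * (x - mean) ** 2 for x, p in outcomes)
    possible = [x for x, p in outcomes if p > 0]
    spread = max(possible) - min(possible)  # best outcome minus worst outcome
    return spread, variance


P_WRITTEN = 0.5  # assumed probability that the book gets written at all

# Assumed impact distribution of the finished book: very good or very bad.
finished_book = [(+100.0, 0.5), (-100.0, 0.5)]

# Before we know whether the book exists, a cluster of neutral outcomes
# (impact 0, book never written) sits between the two extremes.
before = [(0.0, 1 - P_WRITTEN)] + [(x, p * P_WRITTEN) for x, p in finished_book]

spread_before, var_before = describe(before)
spread_after, var_after = describe(finished_book)

print("spread:  ", spread_before, "->", spread_after)
print("variance:", var_before, "->", var_after)
```

In this toy case the spread between the extremes is the same before and after we learn that the book was written, while the variance is lower before, because the neutral cluster pulls probability mass toward the middle.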

Do impact markets instead risk centralizing funding?

The whole point of impact markets is to decentralize funding – so might they perversely centralize it? The argument goes that the current scoring rule allows for truly exceptional donations: the first donation to a project as amazing as Evidential Cooperation in Large Worlds might’ve been a substantial one. Whoever the donor might be, they’d get an enormous score boost even though they were only right once. This boost might keep them at the top of our ranking for many years until enough other donors have gradually accrued comparable scores. That seems unlikely but also undesirable.

One variation that we’re trialing is a score that does not take the size of a donation into account but just the earliness. Every project has a first donation, so even the first donations to great projects could no longer be as remarkable as a substantial first donation to a great project could’ve been under the size-weighted scoring rule.

Another remedy is to have scores decay over time. One solution we’re trialing is to have a score that only takes into account donations from the past year.
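The sketch below illustrates how these variations can change a ranking. It is a toy model, not our actual scoring rule: the `Donation` type, the `donor_scores` function, the `1 / rank` earliness weight, the impact numbers, and the donors are all hypothetical and chosen only to make the effect visible.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Donation:
    donor: str
    project: str
    amount: float
    day: date


def donor_scores(donations, impact, today, size_weighted=True, window_days=None):
    """Toy score: project impact * earliness weight (* amount if size_weighted)."""
    scores = {}
    rank = {}  # number of donations each project has received so far
    for d in sorted(donations, key=lambda d: d.day):
        rank[d.project] = rank.get(d.project, 0) + 1
        if window_days is not None and (today - d.day).days > window_days:
            continue  # outside the window: the donation no longer counts
        weight = 1.0 / rank[d.project]  # hypothetical earliness weight
        if size_weighted:
            weight *= d.amount
        scores[d.donor] = scores.get(d.donor, 0.0) + impact[d.project] * weight
    return scores


today = date(2024, 1, 1)
impact = {"breakthrough": 10.0, "solid": 2.0}  # assumed evaluator verdicts
donations = [
    Donation("alice", "breakthrough", 10_000.0, date(2021, 1, 1)),
    Donation("bob", "breakthrough", 100.0, date(2021, 2, 1)),
    Donation("bob", "solid", 100.0, date(2023, 6, 1)),
    Donation("carol", "solid", 100.0, date(2023, 7, 1)),
]

sized = donor_scores(donations, impact, today)
flat = donor_scores(donations, impact, today, size_weighted=False)
recent = donor_scores(donations, impact, today, size_weighted=False, window_days=365)

print(sized)   # size-weighted: one large early donation dominates
print(flat)    # earliness only: the gap between donors shrinks
print(recent)  # past year only: old one-off successes drop out
```

Under the size-weighted rule, the single large first donation puts one donor far ahead of everyone else. Ignoring donation size narrows that lead, and restricting the score to the past year removes it entirely once the donation is old enough.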

We’ll keep monitoring this potential issue and react in case it does manifest.

This effort can end up popularizing a mechanism that incentivizes and funds risky, net-negative projects—in anthropogenic x-risk domains, using EA funding.

Conditional on this effort ending up being extremely impactful, do you really believe the downside risks are "highly unlikely"? (And do you think most of the EA community would agree)?