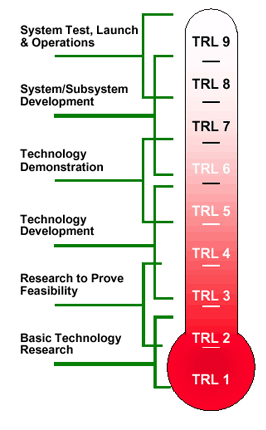

From some previous work on technology timelines, I know that NASA used technology readiness levels to gauge the maturity of technologies for the purposes of space exploration. The scale was later criticized as becoming much less useful when it was applied more widely and more holistically, particularly by the European Union. Here is a picture of NASA’s technology readiness levels:

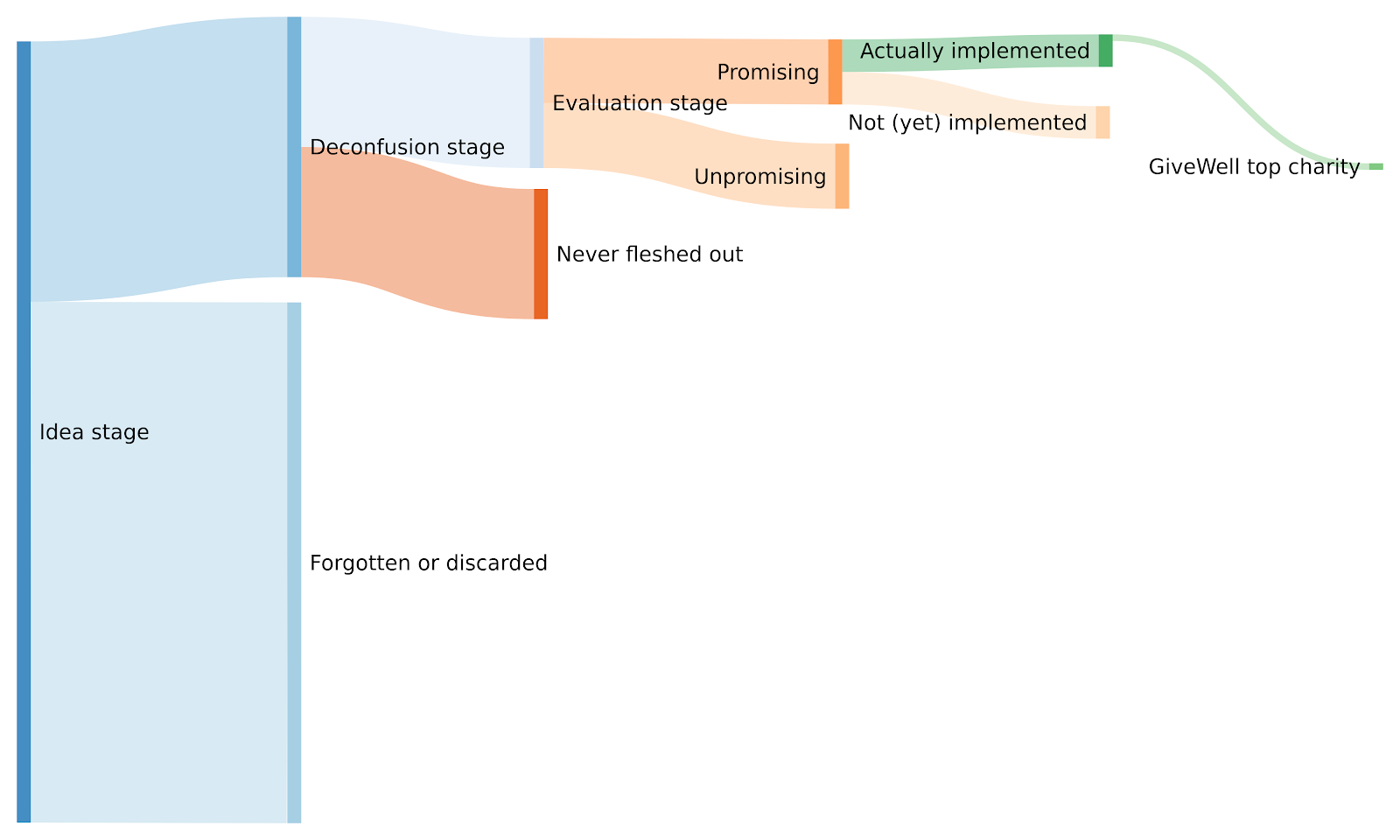

The general idea is an awareness that technology has a funnel, from theoretical proposal to actually being used in space, and that the drop-out rate —the rate at which technologies don't make it to the next stage— is pretty brutal. For Effective Altruism cause candidates, the funnel might look something like this (more on the meaning of each stage later):

So if one is trying to pick technologies for the next generation of rockets, as in the case of NASA, one would want to focus on ideas with a high level of maturity. Similarly, if one is an EA incubator who wants to create a charity which has a chance of becoming a, say, GiveWell top charity, or a valuable long-termist charity, one might also want to choose an idea to implement which has a very high level of maturity.

But one would also want to pay attention to the health of the funnel. That is, one would want to make sure that each stage has an adequate amount of resources, that each stage has enough survivors left for the next stage, and that there isn’t any particularly grievous bottleneck at any stage. For example, it could be the case that many ideas are suggested, but that they are mostly not fleshed out or followed up on, which means that the set of ideas for which there is a cost effectiveness analysis might be very small (and thus its best ideas not as promising as if they had been selected from a larger pool).
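As a rough illustration of what checking the health of the funnel could mean, here is a minimal sketch. The stage names follow the stages described in this post, but the counts are made-up numbers, and `survival_rates` and `tightest_transition` are hypothetical helpers rather than an existing tool.

```python
# Hypothetical counts of cause candidates that reached each stage (made-up numbers).
funnel = [
    ("ideation", 200),
    ("deconfusion", 40),
    ("evaluation", 10),
    ("implementation", 4),
]


def survival_rates(stages):
    """Return (from_stage, to_stage, fraction surviving) for each consecutive pair."""
    rates = []
    for (name_a, count_a), (name_b, count_b) in zip(stages, stages[1:]):
        rates.append((name_a, name_b, count_b / count_a))
    return rates


def tightest_transition(stages):
    """Return the transition with the lowest survival rate."""
    return min(survival_rates(stages), key=lambda r: r[2])


for a, b, rate in survival_rates(funnel):
    print(f"{a} -> {b}: {rate:.0%} survive")
print("tightest transition:", tightest_transition(funnel)[:2])
```

A low survival rate is not automatically a problem, since some filtering is the point of a funnel. The sketch only shows where one might look first for a stage that is starved of resources.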

This brings me to the question of what the actual stages are. I’m currently thinking in terms of the following stages:

- Ideation stage: A new idea which hasn’t been considered before, or which hasn’t been salient recently, is proposed.

- Deconfusion stage: The proposed idea is fleshed out, and concrete interventions are proposed or explored.

- There is a theory of change from intervention to impact, an understanding of how progress can be measured, etc.

- Evaluation: How does the evidence base for this kind of intervention look?

- What unit of benefit would an intervention produce and how many units would it produce per unit of resources? What does a cost-effectiveness analysis of proposed interventions reveal?

- Overall, is this cause competitive with GiveWell's/ACE's/That Long-Termist Charity Evaluator Organization Which Doesn't Exist But Probably Should's top charities?

- If the evidence base is unclear (for example, because the cause area under consideration is speculative), what specific evidence is there to think that the idea is worth it? Are there robust heuristics that allow us to conclude that working on the cause under consideration is very much likely to be worth it?

- Implementation stage: It has been determined that it's most likely a good idea to start object-level work on a specific cause/intervention (e.g., starting a charity, starting a new programme in an existing org or government, doing independent research and advising key decision-makers). Now the hard work begins of actually doing that.

To clarify, I’m not saying that all EA charities or projects need to be started after a quantitative cost-effectiveness analysis. But I’m guessing it helps, particularly for charities in global health and development, and particularly if someone wants to convince EAs to go along with a new cause. Other strong evaluation methods are of course also possible.

I’m holding this model somewhat lightly. For example, I’ve noticed that the “deconfusion” and the “evaluation” stages can sometimes go in either order. For instance, we can guess that an intervention which positively affects vast numbers of people in the long-term future of humanity is probably very cost-effective, even if we’re confused about what such an intervention looks like in practice. It can also be the case that some area seems particularly valuable to become less confused about, and so an organization could be created for that purpose. Further, ongoing interventions still undergo deconfusion and evaluation on later variations and specificities.

Despite holding that model lightly, and understanding that it doesn’t fully capture reality, I’ll be referring to it in subsequent posts, because I think it’s useful to be able to talk about the “readiness level” of a cause candidate. Thanks to Ozzie Gooen and Edo Arad for comments and discussion.

My shorter (and less strong) comment concerns this:

I don't believe that every cause area naturally lends itself to starting a charity. In fact, many don't. For example, if one wants to estimate the philanthropic discount rate more accurately, one probably doesn't need to start a charity to do so. Instead, one may want to do an Econ PhD.

So I think viewing the end goal as charity incubation may not be helpful, and in fact may be harmful if it results in EA dismissing particular cause areas that don't perform well within this lens, but may be high-impact through other lenses.

Great point.

I think my take is that evaluation and ranking often really makes sense for very specific goals. Otherwise you get the problem of evaluating an airplane using the metrics of a washing machine.

This post was rather short. I think if a funnel became more capacity, it would have to be clarified that it has a very particular goal in mind. In this case, the goal would be "identifying targets that could be entire nonprofits".

We've discussed organizing cause areas that could make sense for smaller projects, but one problem with that is that the number of possible candidates in that case goes up considerably. It becomes a much messier problem to organize the space of possible options for any kind of useful work. If you have good ideas for this, please do post!

OK, I think that's probably fine as long as you are very clear on the scope and the fact that some cause areas that you 'funnel out' may in fact still be very important through other lenses.

It sounds like you might be doing something quite similar to Charity Entrepreneurship so you may (or may not) want to collaborate with them in some way. At the very least they might be interested in the outcome of your research.

Speaking of CE, they are looking to incubate a non-profit that will work full-time on evaluating cause areas. I actually think it might be good if you have a somewhat narrow focus, because I'd imagine their organisation will inevitably end up taking quite a wide focus.

I agree with this.

I think one could address that simply by tweaking the quoted sentences to "It has been determined that it's most likely a good idea to start object-level work on a specific cause/intervention (e.g., starting a charity, starting a new programme in an existing org or government, doing independent research and advising key decision-makers). Now the hard work begins of actually doing that."

(I'm also not sure the implementation stage will always or typically be harder than the other three stages. I don't specifically believe the opposite; I just feel unsure, and imagine it varies.)

One minor quibble with your comment: I think "do an Econ PhD [and during this or afterwards try to estimate the philanthropic discount rate]" should probably not by itself be called "implementation". It's more object-level than doing prioritisation research to inform whether someone should do that, but by itself it doesn't yet connect to any "directly important" decisions. So I'd want to make some mention of later communicating findings to key decision-makers.

[Btw, thanks for this post, Nuño - I found it clear and useful, and liked the diagram.]

That's all fair. I would endorse that rewording (and potential change of approach)

Makes sense, thanks, changed.

Thanks for this. I have two initial thoughts which I'll separate into different comments (one long, one short - guess which one this is).

OK so firstly, I think in your evaluation phase things get really tricky, and more tricky than you've indicated. Basically, comparing a shorttermist cause area to a longtermist cause area in terms of scale seems to me to be insanely hard, and I don't think heuristics or CEAs are going to help much, if at all. I think it really depends on which side you fall on with regards to some tough, and often contentious, foundational questions that organisations such as GPI are trying to tackle. To give just a few examples:

Basically my point is, depending on answers to questions such as the above, you may think a longtermist cause area is WAY better than a shorttermist cause area, or vice versa, and we haven't even gone near a CEA (which I'm not sure would help matters). I can't emphasise that 'WAY' enough.

To some significant extent, I just think choice of cause area is quite personal. Some people are longtermists, some aren't. Some people think it's good to reduce x-risk, some don't etc. The question for you, if you're trying to apply a funnel to all cause areas, is how do you deal with this issue?

Most research organisations deal with this issue by not trying to apply a funnel to all cause areas in the first place. Instead they focus on a particular type of cause area and prioritise within that e.g. ACE focuses on near-term animal suffering, and GiveWell focuses on disease. Therefore, for example, GiveWell can make certain assumptions about those who are interested in their work - that they aren't worried by complex cluelessness, that they probably aren't (strong) longtermists etc. They can then proceed on this basis. A notable exception may be 80,000 Hours that has funnelled from all cause areas, landing on just longtermist ones and

So part of me thinks your project may be doomed from the start unless you're very clear about where you stand on these key foundational questions. Even in that case there's a difficulty, in that anyone who disagrees with your stance on these foundational questions would then have the right to throw out all of your funnelling work and just do their own. (EDIT: I no longer really endorse this paragraph, see comments below).

I would be interested to hear your thoughts on all of this.

I think this is true. I therefore also basically agree with your conclusions, but I think they're much less damning than I get the impression you think. Basically, I'd rephrase your second-last paragraph to:

"So it seems worth noting that this model is probably most/only useful for the project of generating and comparing cause candidates within a cause area. E.g., it could be useful for neartermist human-focused stuff, neartermist animal-focused stuff, or longtermist stuff, but probably separately for each.

But that alone shouldn't prevent this model from being very useful. That's because there are a huge number of ideas that could be generated, deconfused, evaluated, and implemented within each broad cause area, so a model that helps us do that for each cause area could be really handy, even if it doesn't simultaneously help us decide between broad cause areas."

Yes, I agree. I actually think this model could work well if we do multiple funnelling exercises, one for each type of cause area.

The only reason I was perhaps slightly forceful in my comment is because from this post and the previous post (Big List of Cause Candidates) I have got the impression that there is going to be a single funnelling exercise that aims to directly compare shorttermist vs longtermist areas including on their 'scale'.

Nuno - I don't want to give the impression that I fundamentally dislike your idea, because I don't. I just think some care has to be taken.

Yeah, so I (and others) have been exploring different things, but I don't know what I'll end up going with. That said, I think that there are gains to be had in optimizing the first two stages, not just the third evaluation stage.

Absolutely, every stage is important.

And reading back what I wrote, it was perhaps a little too strong. I would quite happily adopt MichaelA's suggested paragraph in place of my penultimate one!

[Tangent]

I think I might actually agree with what you actually mean, but not with a natural interpretation of this sentence. "quite personal" sounds to me like it means "subjective, with no better or worse answers, like how much someone likes How I Met Your Mother." But I think there may be "better or worse answers", or at least more or less consistent and thought-out answers. And I think that what's going on here is not simply subjectivity.

Instead, I'd say that choices of broad cause area seem to come down to a combination of factors like:

I think people can explicitly discuss and change their minds about all of these things.

But that seems (a lot?) harder than explicitly discussing and changing one's mind about priorities within a broad cause area. And I think this is partly because this between-cause-area stuff involves more differences in moral views, priors, etc. I think this is the sort of thing you might gesture at with "To some significant extent, I just think choice of cause area is quite personal"?

To return from my tangent to the post at hand: The activities involved in the stages of this model:

And I think that that's one way to explain why this model might not have much to say about between-cause-area decisions.

You're right. "Personal" wasn't the best choice of word, I'm going to blame my 11pm brain again.

I sort of think you've restated my position, but worded it somewhat better, so thanks for that.

A (nit-picky) point that feels somewhat related to your points:

I like that Nuño says "To clarify, I’m not saying that all EA charities need to be started after a quantitative cost-effectiveness analysis."

But personally, I still feel like the following phrasing implies an emphasis on empirical data and/or a quantitative cost-effectiveness analysis:

I think often it might make sense to also or instead emphasise things like theoretical arguments, judgemental forecasts, and expert opinions. (See also Charity Entrepreneurship's research method.) I think these things are technically evidence in a Bayesian sense, but aren't what people would usually think of when you say "evidence base".

So personally, I think I'd be inclined to rephrase the "Evaluation" step to something like "How does the evidence base and/or reasoning for this kind of intervention look? This could include looking at empirical data, making cost-effectiveness analyses, collecting forecasts or expert opinions, or further probing and fleshing out the theoretical arguments."

So suppose you have a cause candidate, and some axes like the ones you mention:

But also some others, like

For simplicity, I'm going to just use three axes, but the below applies to more. Right now, the topmost vectors represent my own perspective on the promisingness of a cause candidate, across three axes, but they could eventually represent some more robust measure (e.g., the aggregate of respected elders, or some other measure you like more). The vectors at the bottom are the perspectives of people who disagree with me across some axis.

For example, suppose that the red vector was "ratio of the value of a human to a standard animal", or "probability that a project in this cause area will successfully influence the long-term future".

Then person number 2 can say "well, no, humans are worth much more than animals". Or, "well, no, the probability of this project influencing the long-term future is much lower". And person number 3 can say something like "well, overall I agree with you, but I value animals a little bit more, so my red axis is somewhat higher", or "well, no, I think that the probability that this project has of influencing the long-term future is much higher".

Crucially, they wouldn't have to do this for every cause candidate. For example, if I value a given animal living a happier life the same as X humans, and someone else values that animal as 0.01X humans, or as 2X humans, they can just apply the transformation to my values.

Similarly, if someone is generally very pessimistic about the tractability of influencing the long-term future, they could transform my probabilities of that happening. They could divide my probabilities by 10 (or, actually, subtract some amount of probability in bits). Then the transformation might not be linear, but it would still be doable.
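Here is a minimal sketch of what applying such transformations to someone else's ratings could look like. All names and numbers are hypothetical, and reading "subtract some amount of probability in bits" as a shift in log-odds is my assumption about one reasonable way to do it, not the only one.

```python
import math

# Hypothetical ratings per cause candidate (made-up numbers):
#   animal_weight: value of the animals affected, relative to a human
#   p_longterm:    probability of successfully influencing the long-term future
my_ratings = {
    "cow welfare project": {"animal_weight": 0.5, "p_longterm": 0.01},
    "long-term future project": {"animal_weight": 0.0, "p_longterm": 0.2},
}


def rescale_animal_weight(ratings, factor):
    """Linear transformation: multiply every animal weight by the same factor."""
    return {
        name: {**r, "animal_weight": r["animal_weight"] * factor}
        for name, r in ratings.items()
    }


def shift_log_odds(p, bits):
    """Non-linear transformation: move a probability by `bits` in log-odds space."""
    if p <= 0.0 or p >= 1.0:
        return p  # probabilities of exactly 0 or 1 are left unchanged
    log_odds = math.log2(p / (1 - p)) + bits
    return 1 / (1 + 2 ** (-log_odds))


# Someone who values animals at 0.01 times my weight:
their_ratings = rescale_animal_weight(my_ratings, 0.01)

# Someone who is 3 bits more pessimistic about influencing the long-term future:
pessimist_p = {
    name: shift_log_odds(r["p_longterm"], -3) for name, r in my_ratings.items()
}

print(their_ratings)
print(pessimist_p)
```

The point of the sketch is that the other person supplies one factor (or one number of bits) and reuses the rest of the ratings, rather than re-rating every cause candidate from scratch.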

Then, knowing the various axes, one could combine them to find out the expected impact. For example, one could multiply the three axes to get the volume of the box, or add them as vectors and consider the length of the purple vector, or use some other transformation which isn't a toy example.
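The two toy aggregations mentioned here can be sketched as follows. The axis values are made up, and `volume` and `vector_length` are hypothetical helper names used only for illustration.

```python
import math


def volume(axes):
    """Multiply the axes: the volume of the box they span."""
    return math.prod(axes)


def vector_length(axes):
    """Add the axes as orthogonal vectors and take the length of the sum."""
    return math.sqrt(sum(a * a for a in axes))


mine = (2.0, 3.0, 6.0)     # my hypothetical scores on three axes
theirs = (0.02, 3.0, 6.0)  # same scores, first axis scaled down by 100

for label, axes in (("mine", mine), ("theirs", theirs)):
    print(label, "volume:", volume(axes), "length:", vector_length(axes))
```

Note that the two aggregations react very differently to a disagreement on one axis: shrinking a single axis by a factor of 100 shrinks the volume by the same factor, while the vector length barely moves. Which behaviour is appropriate depends on what the axes mean, which is part of why these are only toy examples.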

So, a difference in perspectives would be transformed into a change of basis

So:

doesn't strike me as true. Granted, I haven't done this yet, and I might never because other avenues might strike me as more interesting, but the possibility exists.

I think this comment is interesting. But I think you might be partially talking past jackmalde's point (at least if I'm interpreting both of you correctly, which I might not be).

If jackmalde meant "If someone disagrees with your stance on these foundational questions, it would then make sense for them to disagree with or place no major credence in all of the rest of your reasoning regarding certain ideas", then I think that would indeed be incorrect, for the reasons you suggest. Basically, as I think you suggest, caring more or less than you about nonhumans or creating new happy lives is not a reason why a person should disagree with your beliefs about various other factors that play into the promisingness of an idea (e.g., how much the intervention would cost, whether there's strong reasoning that it would work for its intended objective).

But I'm guessing jackmalde meant something more like "If someone sufficiently strongly disagrees with your stance on these foundational questions, it would then make sense for them to not pay any attention to all of the rest of your reasoning regarding certain ideas." That person might think something like:

In other words, there may be single factors that are (a) not really evaluated by the activities mentioned in your model, and (b) sufficient to rule an idea out as worthy of consideration for some people, but not others.

Maybe a condensed version of all of that is: If there's a class of ideas (e.g., things not at all focused on improving the long-term future) which one believes all have extremely small scores on one dimension relative to at least some members of another class of ideas (e.g., things focused on improving the long-term future), then it makes sense to not bother thinking about their scores on other dimensions, if any variation on those dimensions which one could reasonably expect would still be insufficient to make the total "volume" as high as some other class.
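That condensed version can be read as a simple bound check, sketched below under the "volume" toy model. The function name, the parameters, and all numbers are hypothetical.

```python
import math


def can_be_skipped(fixed_score, max_other_scores, best_rival_volume):
    """Return True if even the most optimistic scores on the other dimensions
    cannot lift this class of ideas above the best idea in a rival class."""
    best_case_volume = fixed_score * math.prod(max_other_scores)
    return best_case_volume < best_rival_volume


# Someone who scores a whole class at 0.0001 on one dimension, and thinks the
# other two dimensions could each be at most 10, compared to a rival idea
# whose total volume they put at 36:
print(can_be_skipped(0.0001, (10.0, 10.0), 36.0))

# Someone who scores the same class at 1.0 on that dimension:
print(can_be_skipped(1.0, (10.0, 10.0), 36.0))
```

In the first case the best conceivable volume is about 0.01, so looking at the detailed ratings for that class cannot change the ranking. In the second case it can, so the ratings are worth reading.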

I'm not sure how often extreme versions of this would/should come up, partly because I think it's relatively common and wise for people to be morally uncertain, decision-theoretically uncertain, uncertain about "what epistemologies they should have" (not sure that's the right phrase), etc. But I think moderate versions come up a lot. E.g., I personally don't usually engage deeply with reasoning for near-term human or near-term animal interventions anymore, due to my stance on relatively "foundational questions".

(None of this would mean people wouldn't pay attention to any results from the sort of process proposed in your model. It just might mean many people would only pay attention to the subset of results which are focused on the sort of ideas that pass each person's initial "screening" based on some foundational questions.)

So suppose that the intervention was about cows, and I (the vectors in "1" in the image) gave them some moderate weight X, the length of the red arrow. Then if someone gives them a weight of 0.0001X, their red arrow becomes much smaller (as in 2.), and the total volume enclosed by their cube becomes smaller. I'm thinking that the volume represents promisingness. But they can just apply that division X -> 0.0001X to all my ratings, and calculate their new volumes and ratings (which will be different from mine, because cause areas which only affect, say, humans, won't be affected).

In this case, the red arrow would go completely to 0, and that person would just focus on the area of the square in which the blue and green arrows lie, across all cause candidates. Because I am looking at volume and they are looking at areas, our ratings will again differ.
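A small sketch of why one drops the axis rather than setting it to zero, and of how the resulting ratings can differ. The candidates and scores are made up, and `score` is a hypothetical helper.

```python
import math

# Hypothetical scores on the three axes for two cause candidates.
ratings = {
    "candidate A": {"red": 3.0, "blue": 1.0, "green": 2.0},
    "candidate B": {"red": 0.5, "blue": 2.0, "green": 2.0},
}


def score(axes, ignore=()):
    """Product of all axes except the ignored ones (volume, or area of a face)."""
    return math.prod(v for k, v in axes.items() if k not in ignore)


# Setting red to 0 and still multiplying would make every volume 0, so the
# person who gives red no weight compares areas of the blue-green face instead.
for name, axes in ratings.items():
    print(name, "volume:", score(axes), "area:", score(axes, ignore=("red",)))
```

With these made-up numbers, candidate A has the larger volume but candidate B has the larger blue-green area, so the two people would rank the candidates in opposite orders.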

This cube approach is interesting, but my instinctive response is to agree with MichaelA: if someone doesn’t think influencing the long-run future is tractable, then they will probably just want to entirely filter out longtermist cause areas from the very start and focus on shorttermist areas. I’m not sure comparing areas/volumes between shorttermist and longtermist areas will be something they will be that interested in doing. My feeling is the cube approach may be overcomplicating things.

If I were doing this myself, or starting an ’exploratory altruism’ organisation similar to the one Charity Entrepreneurship is thinking about starting, I would probably take one of the following two approaches:

I think this decision is tough, but on balance I would probably go for option 1 and would focus on longtermist cause areas, in part because shorttermist areas have historically been given much more thought so there is probably less meaningful progress that can be made there.

Yeah, I agree with this.

What I'm saying is that, if they and you disagree sufficiently much on 1 factor in a way that they already know about before this process starts, they might justifiably be confident that this adjustment will mean any ideas in category A (e.g., things focused on helping cows) will be much less promising than some ideas in category B (e.g., things focused on helping humans in the near-term, or things focused on beings in the long-term).

And then they might justifiably be confident that your evaluations of ideas in category A won't be very useful to them (and thus aren't worth reading, aren't worth funding you to make, etc.)

I think this is basically broadly the same sort of reasoning that leads GiveWell to rule out many ideas (e.g., those that focus on benefitting developed-world populations) before even doing shallow reviews. Those ideas could vary substantially on many dimensions GiveWell cares about, but they still predict that almost all of the ideas that are best by their lights would be found in a different category that can be known already to typically be much higher on some other dimensions (I guess neglectedness, in this case).

(I haven't followed GiveWell's work very closely for a while, so I may be misrepresenting things.)

(All of this will not be the case if a person is more uncertain about e.g. which population group it's best to benefit or which epistemic approaches should be used. So, e.g., the ratings for near-term animal welfare focused ideas should still be of interest for some portion of longtermism-leaning people.)

I also agree with this. Again, I'd just say that some ideas might only warrant attention if we do care about the red arrow - we might be able to predict in advance that almost all of the ideas with the largest "areas" (rather than "volumes") would not be in that category. If so, then people might have reason to not pay attention to your other ratings for those ideas, because their time is limited and they should look elsewhere if they just want high-area ideas.

Another way to frame this would be in terms of crucial considerations: "a consideration such that if it were taken into account it would overturn the conclusions we would otherwise reach about how we should direct our efforts, or an idea or argument that might possibly reveal the need not just for some minor course adjustment in our practical endeavors but a major change of direction or priority."

A quick example: If Alice currently thinks that a 1 percentage point reduction in existential risk is many orders of magnitude more important than a 1 percentage point increase in the average welfare of people in developing nations*, then I think looking at ratings from this sort of system for ideas focused on improving welfare of people in developing nations is not a good use of Alice's time.

I think she'd use that time better by doing things like:

*I chose those proxies and numbers fairly randomly.

To be clear: I am not saying that your model, or the sort of work that's sort-of proposed by the model, wouldn't be valuable. I think it would be valuable. I'm just explaining why I think some portions of the work won't be particularly valuable to some portion of EAs. (Just as most of GiveWell's work or FHI's work isn't particularly valuable - at least on the object level - to some EAs.)

Makes sense, thanks

Nitpick: A change of basis might also be combined with a projection into a subspace. In the example, if one doesn't care about animals, or about the long term future at all, then instead of the volume of the cuboid they'd just consider the area of one of its faces.

Another nitpick: The ratio of humans to animals would depend on the specific animals. However, I sort of feel that the high level disagreements of the sort jackmalde is pointing to are probably in the ratio of the value between a happy human life and a happy cow life, not about the ratio of the life of a happy cow to a happy pig, chicken, insect, etc.

[Tangent]

"X-risk" is typically short for "existential risk", which includes extinction risk, but also includes risks of unrecoverable collapse, unrecoverable dystopia, and some (but not all) s-risks/suffering catastrophes. (See here.)

My understanding is that, if we condition on rejecting totalism:

(See here for some discussion relevant to those points.)

So maybe by "x-risk" you actually meant "extinction risk", rather than "existential risk"? (Using "x-risk"/"existential risk" as synonymous with just "extinction risk" is unfortunately common, but goes against Bostrom and Ord's definitions.)

Yeah absolutely, this was my tired 11pm brain. I meant to refer to extinction risk whenever I said x-risk. I'll edit.