Epistemic status

Written as a non-expert to develop my views and get feedback, rather than persuade.

Why read this post?

Read this post for

- a theory of change for AI safety movement building, including measuring key outcomes of contributors, contributions, and coordination to determine if we are succeeding

- activities to realise the promise of this theory of change, focused on (1) building shared understanding, (2) evaluating progress (3) coordinating workers and projects, (4) prioritising projects to support, and (5) changing how we do related movement building

- examples of how technical and non-technical skills are both needed for AI safety

- an explanation of a new idea, "fractional movement building", where most people working in AI safety spend some fraction of their time building the movement (i.e. by splitting time between a normal job and movement-building, or taking time off to do a "tour of service")

- ideas for how we might, evaluate, prioritise and scale AI Safety movement building.

I also ask for your help in providing feedback and answering questions I have about this approach. Your answers will help me and others make better career decisions about AI safety, including how I should proceed with this movement building approach. I am offering cash bounties of $20 for the most useful inputs (see section at the bottom of the post).

Summary

If we want to more effectively address problems of AI safety, alignment, and governance, we need better i) shared understanding of the challenges, better coordination of people and resources, and ii) prioritisation.

AI Safety Movement Building activities are currently mired in uncertainty and confusing. It's not clear who can or should be a contributor; it's not clear what contributions people in the community are making or value; and it's not clear how to best coordinate action at scale.

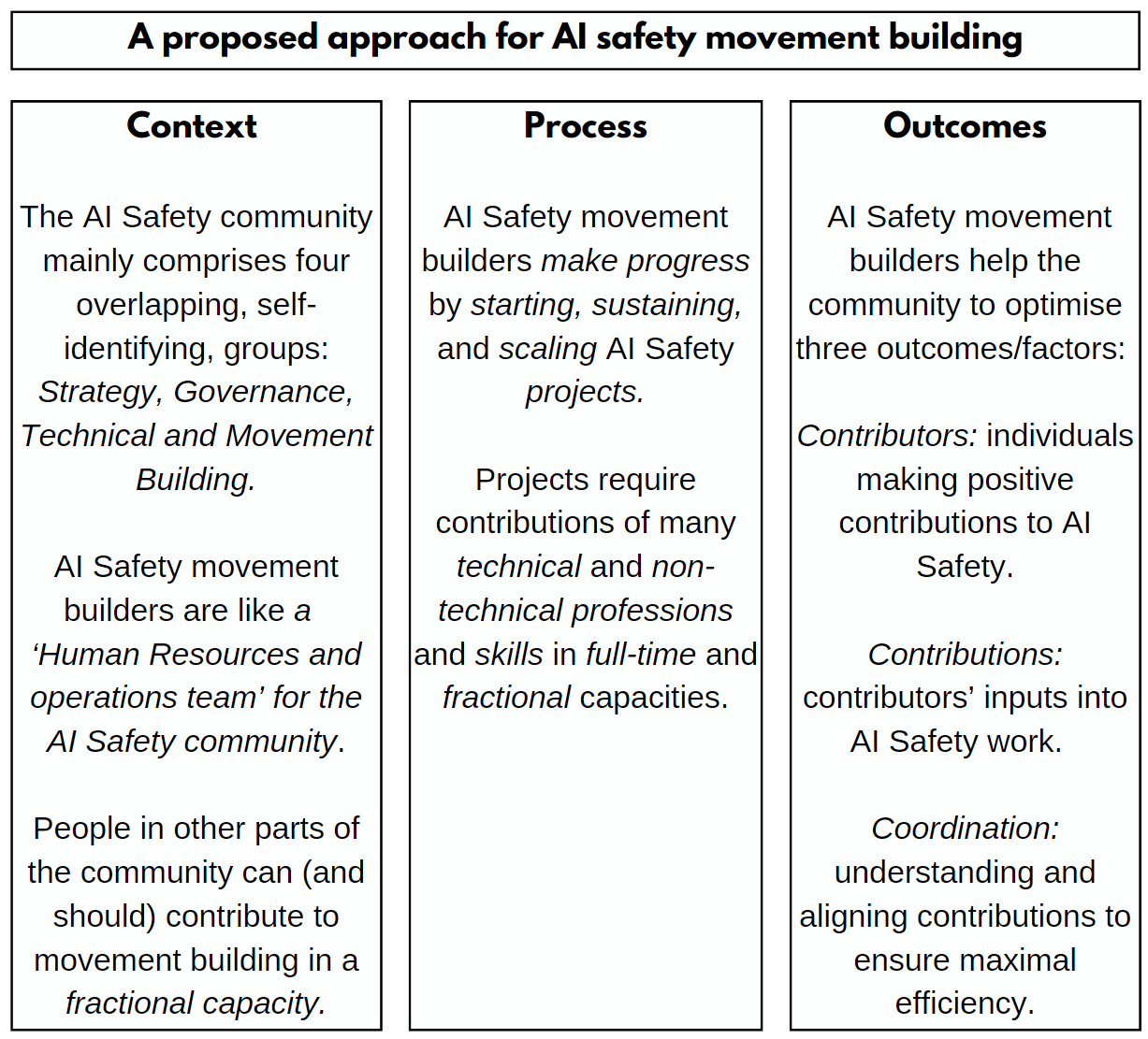

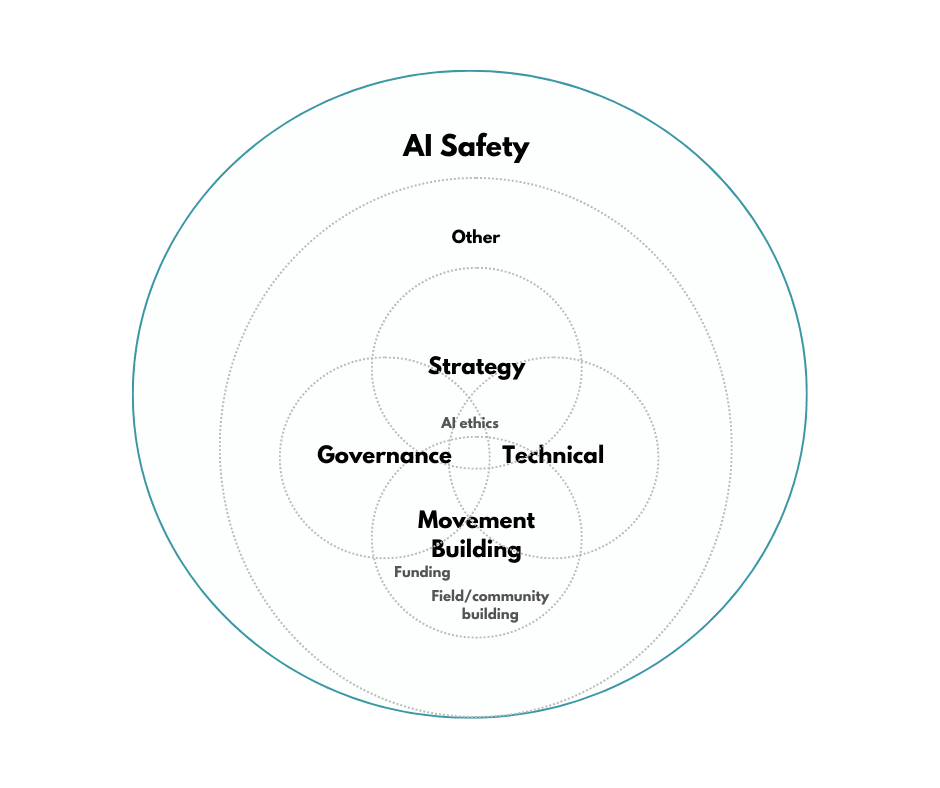

In response, this post is the third in a series that outlines a theory of change for AI Safety Movement Building. Part 1 introduced the context of the AI Safety community, and its four work groups: Strategy, Governance, Technical, and Movement Building.

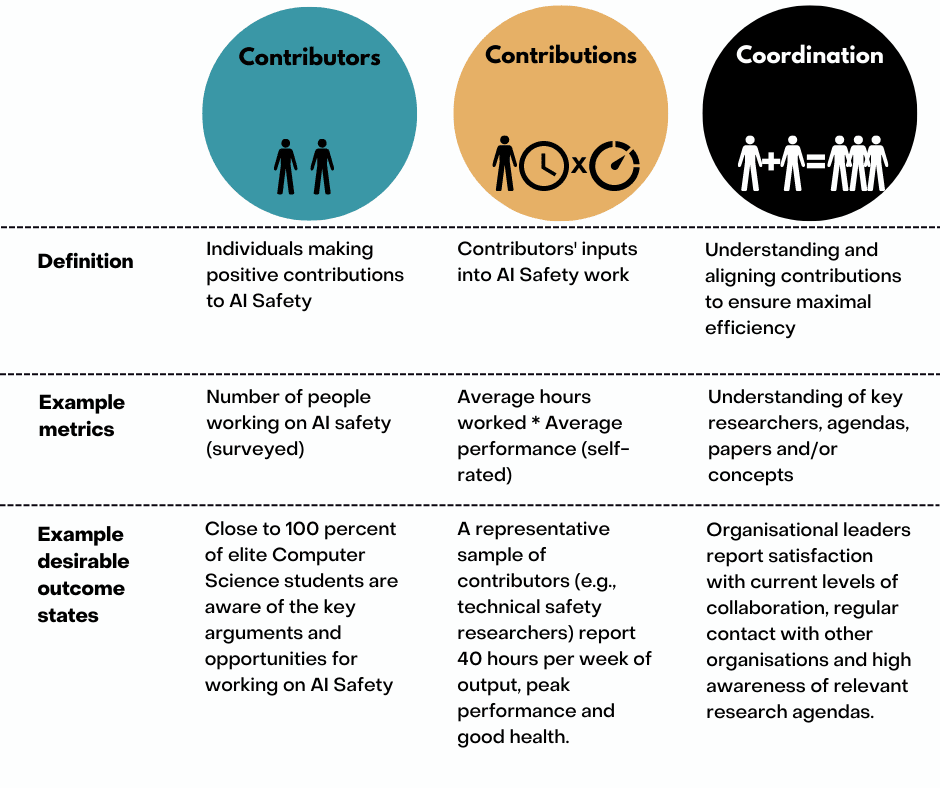

Part 2 proposed measurable outcomes that AI Safety Movement Building should try to improve: Contributors: individuals making positive contributions to AI Safety; Contributions: positive inputs into AI Safety work; and, Coordination: understanding and aligning contributions to ensure maximal efficiency.

In this post, I describe a process for AI Safety Movement Builders that can be implemented in this context, to produce these outcomes.

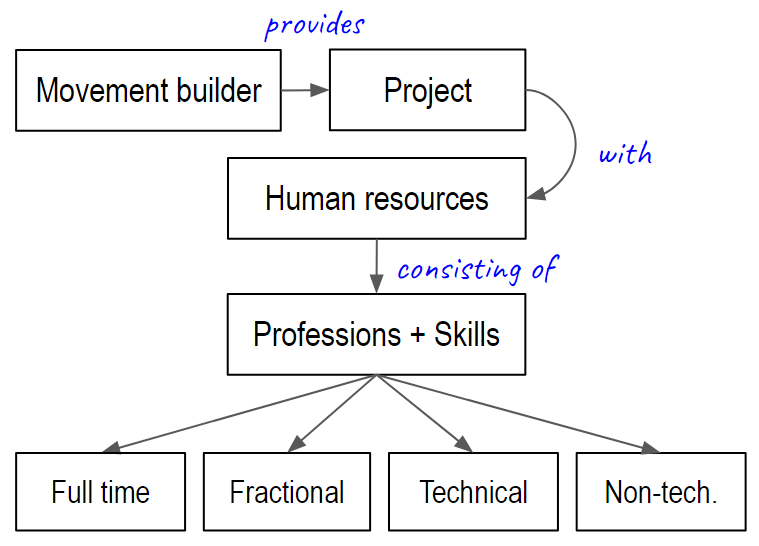

The process is thus: Technical and non-technical AI Safety movement builders, including those working in a fractional capacity, start, sustain or scale AI Safety projects. They mainly do this by providing access human resources (i.e., needed professions and skills). They exercise caution by validating their assumptions that a project will be useful as early as possible. These concepts and the process are elaborated on in the full post.

My key recommendations for people and organisations working on AI Safety and movement building are:

- Evaluate how well AI Safety movement building is achieving key outcomes such as increasing the number and quality of contributors, improving contributions, or helping coordination of work

- Support or lead work to improve shared understanding of the main challenges in AI Safety, to improve prioritisation, and speed of progress

- Consider a fractional movement building approach where everyone doing direct work has some of their time allocated to growing the community.

Why did I write this series of posts?

There is uncertainty about what AI Safety Movement Building is, and how to do it helpfully

AI Safety is a pre-paradigmatic area of research and practice which recently emerged to address an impending, exceptionally pressing, societal problem. This has several implications:

- The emergent community is relatively lacking in established traditions, norms, maturity and scientific consensus.

- Most community members' focus and effort have gone into understanding problems they are personally interested in rather than community wide considerations.

- Most knowledge is in the heads of community members and *relatively* (e.g., compared to an established research domain) little information has been made easily legible and accessible to new entrants to the community (e.g., see various posts calling for, and providing more of such information: 1, 2).

- Most people in and outside AI safety, have relatively poor information on, and understanding, of what other relevant people and groups think (e.g., 1)

As a result of these and other factors, there is considerable uncertainty about which outcomes are best to pursue, and which processes and priorities are best for achieving these outcomes.

This uncertainty is perhaps particularly pronounced in the smaller and newer sub-field of AI Safety Movement building (e.g., 1). This may be partially due to differences in perspective on Movement Building across the emerging AI Safety community. Most participants are from diverse backgrounds: technical and non-technical researchers, mathematicians, rationalists, effective altruists, philosophers, policymakers, entrepreneurs. They are not groups of people who share an innate conceptualisation of what ‘Movement Building’ is or what it should involve.

Regardless, the uncertainty about what AI Safety Movement Building is, and how to do it helpfully, creates several problems. Perhaps most significantly, it probably reduces the number of people who consider, and select, AI Safety Movement Building as a career option.

Uncertainty about AI Safety Movement Building reduces the number of potentially helpful movement builders

People who care about AI safety and want to help with movement building are aware of two conflicting facts: i) that the AI Safety community badly needs more human resources and ii) that there are significant risks from doing movement building badly (e.g., 1,2).

To determine if they should get involved, they ask questions like: Which movement building projects are generally considered good to pursue for someone like me and which are bad? What criteria should I evaluate projects on? What skills do I need to succeed? If I leave my job would I be able to get funding to work on (Movement Building Idea X)?

It’s often hard to get helpful answers to these questions. There is considerable variance in vocabulary, opinions, visions and priorities about AI Safety Movement Building within the AI Safety community. If you talk to experts or look online you will get very mixed opinions. Some credible people will claim that a particular movement building project is good, while others will claim the opposite.

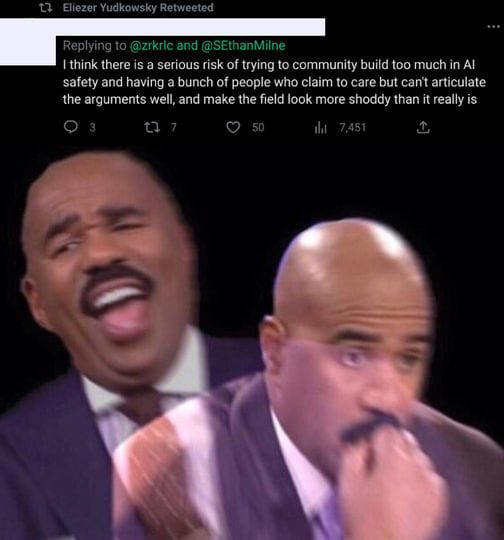

There is also a problem of fragmented and abstract discussion: Most discussions happen in informal contexts that are difficult to discover or synthesise, such as Facebook, Twitter, Slack, in-person conversations, or in the comments of three different forums(!). There is limited discussion of specifics, for instance, what specifically is good or bad movement building, or lowest or highest priority to do. Instead, discussions are vague or kept at a high level, such as the kinds of norms, constraints, or values that are (un)desirable in the AI Safety community. As an example, memes and sentiments like the image below are commonly seen. These don’t specify what they’re critiquing or provide a good example of what is acceptable or desirable in response. They serve as a sort of indefinite expression of concern that can’t easily be interpreted or mitigated.

An example of an indefinite expression of concern: Source

Because of the lack of coherence in discussions and abstract nature of concerns, it is very hard for movement builders to evaluate the risk and rewards of potential actions against the risks of inaction.

This creates uncertainty which is generally unpleasant and promotes inaction. The consequence of this uncertainty is that fewer potential AI Safety Movement Builders will choose to engage in AI Safety Movement Building over competing options with more clearly defined processes and outcomes (e.g., direct work, their current job, or other impact focused roles).

Having fewer movement builders probably reduces AI Safety contributors, contributions and collective coordination

Let’s explore some arguments for why movement builders are important for key outcomes for the AI Safety Community.

The lack of AI Safety movement builders probably reduces the number of CONTRIBUTORS to AI Safety by reducing awareness and ability to get involved. I believe that the vast majority of people who could work on AI safety don’t have a good understanding of the core arguments and opportunities. Of those who do, many don’t know how to get involved and therefore don’t even try. Many who know how to get involved, erroneously think that they can’t contribute. It’s much harder to get involved than it should be: the effort to understand whether / how to help is unreasonably high.

The lack of movement builders probably reduces CONTRIBUTIONS to AI Safety by making it harder for new and current contributors to collaborate on the most important research topics. Researchers don’t know what is best to research. For instance, Eli Tyre argues that most new researchers “are retreading old ideas, without realizing it”. Researchers don’t seem to know much about what other researchers are doing and why. When Nate Soares talks about how AI safety researchers don’t stack I wonder how much of this is due to a lack of shared language and understanding. Researchers don’t get much support. When Oliver Harbyka says: “being an independent AI alignment researcher is one of the [jobs] with, like, the worst depression rate in the world”, I wonder how much of that is due to insufficient support for mental and physical needs.

The lack of movement builders probably reduces coordination because it leads to a lower level of shared language and understanding. Without the support of movement builders there is no one in the AI Safety community responsible for creating shared language and understanding inside and outside the community: reviewing, synthesising and communicating AI Safety community member’s work, needs and values to relevant groups (within and outside the community). Without this, the different parts of AI Safety community and outside stakeholders are less well able to understand and communicate what they collectively do or agree/disagree on, and to make efficient progress towards their goals.

But what if current movement building is harmful? Some people in the community have concerns about current work on movement building (e.g., 1,2,3), many of which I share. However, these concerns do not strike me as compelling reasons to stop movement building. Instead, they strike me as reasons to improve how we do movement building by better understanding the needs and values of the wider community.

For instance, right now, to ensure we have movement builders who

- Survey the AI Safety community and communicate its collective values and concerns.

- Research how fast the community is growing and why and facilitate productive conversation around if/how that should change

- Synthesise different viewpoints, identify differences, and progress the resolution of debates

- Help the existing community to collaborate and communicate more effectively.

However, before we can understand how to do movement building better, we need to address an underlying bottleneck - a lack of shared language and understanding.

To address the uncertainty and related problems we need a better shared language and shared understanding

To address the uncertainty and related problems, the AI Safety (Movement Building) community appears to need a better shared language, and shared understanding.

By a shared language, I mean a shared set of concepts which are consistently used for relevant communication. These shared concepts are helpful categorisations for differentiating otherwise confusing and complex phenomena (e.g., key parts, process, or outcomes within the community). Some adjacent examples are the EA cause areas, the Importance, Tractability and Neglectedness (ITN) framework and the 80,000 Hours priority careers.

A shared language offers potential for a shared understanding. By a shared understanding, I mean a situation where the collective understanding of key variables (e.g., our context, processes, desired outcome, and priorities) is clear enough that it is very well understood ‘who needs to do what, when and why’. An adjacent example within the EA community, is the 80,000 Hours priority career paths list which provide a relatively clear understanding of which careers are the highest priority from EA perspective and why.

I argued earlier that variance in vocabulary, opinions, visions and priorities about AI Safety Movement Building contributed to uncertainty. A shared language can help address *some* of this uncertainty by standardising vocabulary and conceptual frameworks.

I argued earlier that uncertainty stems from limited discussion of specifics, for instance, what specifically is good or bad AI Safety Movement Building, or lowest or highest priority to do. Creating a shared understanding can help address *some* of this uncertainty and reduce the related problems by standardising i) the collective understanding of key variables (e.g., context, process, desired outcomes), and, later, ii) priorities.

The above has hopefully outlined a few of the reasons why I value shared language and understanding. I wanted them as a new entrant to the community and couldn’t find anything substantive. This is why I wrote this series of posts to outline and share the language and understanding that I have developed and plan to use if I engage in more direct work. I am happy for others to use or adapt it. If there are flaws then I’d encourage others to point them them out and/or develop something better.

What have I already written?

This is the third part of a series which outlines an approach/a theory of change for Artificial Intelligence (AI) Safety movement building. Part one gave context by conceptualising the AI Safety community.

Part two proposed outcomes: three factors for movement builders to make progress on.

I next explain the process used to achieve these outcomes in this context. In doing so, I aim to provide i) a basic conceptual framework/shared language for AI Safety Movement Building, and ii) show the breadth and depth of contributions required as part of movement building.

AI Safety movement builders contribute to AI Safety via projects

As used here a ‘project’ refers to a temporary effort to create a unique product, service, or outcome. Projects can be focused on technical research, governance, strategy (e.g. 1.2) or movement building specifically (e.g., 1,2). They range in size from very small (e.g., an hour a week to do X) to very large (e.g., a large organisation or group). They can encompass smaller subprojects (e.g., teams in an organisation).

AI Safety movement builders contribute by helping to start, sustain and scale AI Safety projects

Starting projects refers to cases where movement builders enable one or more people to provide a unique product, service, or outcome which contributes to AI safety. For instance, an AI Safety movement builder might encourage a newly graduated computer science student to start an independent research project and connect them with a mentor. They might join several people with diverse skills to start an AI Safety related training project in their university or organisation.

Sustaining project means keeping them going at their current level of impact/output. For instance, this might involve supporting a newly created independent research, or AI Safety related training project to keep it running over multiple years.

Scaling projects refers to cases where movement builders enable an AI safety project to have a greater positive impact on AI Safety contributors, contributions and coordination. For instance, an AI Safety movement builder might help a newly started organisation to find researchers for their research projects as a recruiter, or find them a recruiter. Or they might find several industry partners for an AI related training project which is seeking to give their best students’ industry experience before they graduate. They could also join a bigger organisation of AI governance researchers as a research communicator to help them reach a wider audience.

AI Safety Movement builders start, sustain and scale project by contributing resources (e.g., knowledge, attitudes, habits, professions, skills and ideas). Their main contribution is 'human resources': access to the professions and skills that the project needs to start or scale.

AI Safety movement builders start and scale AI Safety projects by contributing human resources: technical and non-technical professions and skills in full-time and fractional capacities.

A ‘profession’ refers to a broad role which people are often hired to do (e.g., a marketer or coach) and a ‘skill’ refers to a commonly used ability (e.g., using Google Ads or CBT). Professions are essentially ‘careers’. Skills are professionally relevant skills and experience. They are somewhat akin to ‘career capital’ - what you might learn and transfer in a career.

AI Safety movement builders contribute professions and skills to projects directly (e.g., via working for an organisation as founder or a recruiter) or indirectly (e.g., via finding founder or a recruiter for that organisation).

It seems useful to split contributions of professions and skills into those which are technical (i.e., involve knowledge of Machine Learning (ML)), non-technical (i.e., don’t involve knowledge of ML), full-time (i.e., 30+ hours a week) or fractional (e.g., 3 hours a month or 3 months a year). This conceptual framework is visualised below and referenced in the content that follows.

AI Safety Projects generally require many technical and non-technical professions and skills to start/scale

AI Safety Projects typically require a wide range of professions and skills. As an example, the below shows a typical AI Safety Project and some of the professional skills required to make it happen.

| Project type | Explanation | Professional skills involved |

| AI Safety training program | An eight-week course to train people in machine learning | Planning the course, providing the research training, facilitating the sessions, marketing the course, creating and maintaining the digital assets, communicating with stakeholders and attendees, measuring and evaluating impact, managing staff, participants, and finances. |

The professional skills involved include ‘technical’ contributions: research mentorships or and help to develop or deliver technical aspects of the course. They also involve non-technical professional skills, for instance, in the development of digital assets, marketing, project management and evaluation.

What professions and skills are relevant to current and future AI Safety projects?

To unpack this, I next outline professions and skills which I think can contribute to AI Safety Movement building. I start by offering some general thoughts on how these contributions might be made. I then give examples of how these can impact the three target outcomes of AI Safety Movement Builders: increasing contributors, contributions and coordination.

Because of how technical competence affects personal capacity to contribute to AI Safety Movement building, I discuss technical and non-technical contributions in separate sections.

In each case I am not presenting an exhaustive list - just a set of examples to make my overall theory of change somewhat clear. At the end of the section, I argue that we should properly explore the demand for specific professions and skills within the AI Safety community.

Technical contributions to AI Safety Movement Building are very valuable, but need to be balanced against trade-offs, and may best be made in a fractional capacity

AI emerged from specific types of Machine Learning. As such, anyone interested in interpreting and communicating the technical and theoretical risk from AI, and related AI Safety community needs and opportunities would ideally have a deep background in the relevant technologies and theories. This is why technical experts (e.g., Paul Christiano, Buck Schlegeris, and Rohin Shah) have privileged insight and understanding and are essential to the AI Safety movement building. But there is a problem…

There are relatively few technical experts within the AI Safety community and most are (rightly) focused on doing technical work

Relatively few people possess in-depth knowledge of ML in conjunction with a focus on safety. Relatively few of those who do are focused on movement building as most instead prefer to directly work on AI Safety theory or empirical work.

Many technical experts within the AI Safety should consider fractional movement building

It seems likely that technical experts can sometimes have a much higher indirect impact on expectation via doing movement building than their alternatives. For instance, they may encounter someone who they could work with, have a chance to influence a group of relevant experts at a networking event or talk, or accelerate the development of one or more projects or research labs in some advisory capacity. In each case they could have a valuable opportunity to increase coordination, contribution or cooperation.

To the extent that the above is true, it seems valuable for experts to consider assigning a portion of their time to movement building activities. As an example, they could aim to spend 10% of their time attending conferences, speaking with other researchers and engineers, mentoring other technical experts, and creating educational programs.

Technical novices within the AI Safety community should consider assigning a larger fraction of time to movement building

By technical novices, I mean people who have one or two years of experience in ML. For such people, there is a case for investing a relatively large fraction of time in movement building as opposed to research. For instance, early career researchers may stand to contribute a lot by helping more senior researchers to provide more presentations, training, research operations or research communication, particularly if they do not feel that they are likely to make progress on difficult research problems within their expected timelines for AI takeoff.

There are opportunities to accelerate the acquisition and development of exceptional talents. A researcher who believes they are good but not brilliant enough to make significant contributions to AI Safety research might be very well suited to engage and help people who are younger and less experienced, but more talented. They might also be able to make significant contributions to movement building in areas such as AI governance or strategy where the required technical knowledge for making significant contributions may be significantly lower.

To the extent that the above is true, it seems valuable for novice researchers to consider assigning some portion of their time to movement building activities. For instance, depending on aptitude and opportunity they could plan to spend 25% - 75% of their time communicating research, running conferences, supporting expert researchers, engineers and creating and running educational programs.

What do technical contributions look like in practice?

To help illustrate the above, the following table outlines professions (in this case with a technical specialisation) which can contribute to AI Safety Movement building and suggests potential impacts on contributors, contributions and coordination.

| Profession | Definition | Example of movement building impact |

| Research | Researching how to best develop and deploy artificial intelligence systems. | Attending technical AI conferences to network and increase the number of contributors. |

| Engineering | Providing technical expertise to research, develop and deploy AI systems. | Supervising new AI research projects to increase the number of contributors. |

| Policymaker | Providing technical expertise in policy settings. | Mentoring relevant civil servants to increase future contributors to policy research and enactment. |

| Entrepreneurship | Creating, launching, and managing ML related ventures. | Creating a new AI safety related research organisation which can then hire new contributors. |

| Consulting | Helping organisations solve technical problems or improve performance. | Helping to scale a new AI safety related research organisation which can then hire new contributors. |

| Management | Overseeing and directing technical operations. | Helping to manage engineers in an AI safety related research organisation to increase their contributions. |

| Recruiting | Finding, screening, and selecting qualified job candidates. | Recruiting and trialling engineers for an organisation to increase the number of contributors. |

| Education | Providing individuals with necessary knowledge and skills. | Offering technical training to provide important skills and increase the number of contributors. |

| Community manager | Building communities of researchers, engineers and related specialisations. | Connecting important researchers via research seminars to improve coordination. |

For illustration, the following table outlines skills relevant to those with technical expertise and examples of potential impacts on contributors, contributions and coordination.

| Skill | Definition | Example movement building impact |

|---|---|---|

| Mentorship | Providing guidance and support to new participants within the community. | Increasing contributors, contributions and coordination by mentoring new researchers. |

Ambassador/ advocate

| Becoming an effective representative or spokesperson for AI safety. | Communication and engagement with peers to increase awareness of AI safety issues and to attract new contributors to the community. |

| Machine Learning focused recruitment (ML) | Identifying, attracting, and hiring individuals with ML skills and qualifications. | Finding and testing individuals to help to increase the number of contributors. |

| AI Safety Curriculum design | Creating AI Safety educational programs and materials. | Increasing contributors, contributions and coordination by curating needed skills and bodies of knowledge into educational courses and materials. |

| Research dissemination | Effectively spreading research information to relevant groups. | Increasing contributors, contributions and coordination by creating simple and easily communicable summaries and syntheses. |

| Career coaching | Helping others with career choice. | Making progress on contributors, contributions and coordination by helping new and novice researchers to understand what they need to know and where to work. |

| Writing | Strong writing skills for creating clear and effective research and communication. | Writing blog posts, articles, or social media posts to share information and engage with followers, which can increase contributions and coordination within the AI safety movement. |

| Presenting | Presenting information persuasively to relevant audiences. | Giving presentations at conferences, workshops, or other events to share information and ideas about AI safety, increase contributions and coordination within the AI safety movement. |

| Networking | Building relationships and connections with others who share similar interests or goals. | Building partnerships and collaborations with/between other organisations or individuals who are also working on AI safety issues to increase contributions and coordination. |

| Social skills | Being able to effectively understand and collaborate with others | Communicating effectively, collaborating with others, and building trust and rapport with stakeholders to increase contributions and coordination. |

| Teaching skills | Educating others about the potential risks and challenges associated with advanced AI. | Providing training to help people develop the skills and knowledge needed to work on AI Safety and increase the number of coordinators. |

Non-technical contributions to AI Safety Movement Building can best support AI strategy and AI governance but can also support AI Safety technical work.

Non-technical people may be best suited to movement build for AI strategy and AI governance

AI Strategy and AI governance involve a lot of non-technical tasks including setting up and managing courses, creating community surveys, communicating research, writing up social media content or helping with general operation. AI Strategy and AI governance areas can therefore absorb many contributions of non-technical professions and skills in relatively obvious ways.

Non-technical people can also contribute to technical AI safety

Non-technical people appear at a major disadvantage when it comes to helping with the technical aspect of AI Safety Movement building. They cannot provide many of the important inputs of those offered by technical people. They also cannot easily understand some of the technical literature and related reasoning. Accordingly, it might seem that non-technical people can only make useful contributions to AI Safety via working in AI Strategy and AI policy.

In contrast, I think that non-technical people can also contribute to technical AI safety and are going to be increasingly important as it scales. Here are three reasons why.

Non-technical people are in greater supply than technical people, they have lower opportunity costs, and their complementary skills are needed to produce the optimal outcomes from technical contributors and contributions.

Non-technical people are in greater supply than technical people. I suspect that a much larger percentage of people interested in AI Safety, or open to working on it for a market rate salary, will have non-technical, than technical backgrounds.

Non-technical people have fewer opportunity costs than technical people because they don’t have a competing opportunity to do technical research instead of movement building.

Non-technical people can contribute to the AI Safety movement by providing complementary resources that save technical people time, or address gaps in their competency or interests. For instance, a technical person might be very happy to speak at a conference but not to spend the time looking for conferences, contacting organisers, or reworking their slides and planning their trip. However, a nontechnical person working as an operational staff member might however be able and willing to do all of this.

By working with the technical person the operation person can therefore greatly increase their collective impact on AI Safety outcomes. By working with a team of researchers, they may be able to dramatically improve the level of awareness of their work with compounding effects over time.

The above reasons may help explain why half or more of the staff at technical companies (e.g., focused on software engineering) are often non-technical: usually marketers, lawyers, recruiter, social media managers, managers and many other forms of support staff are required to maximise the value of technical work, particularly at scale.

Non-technical people who are able and willing to contribute to AI Safety should prioritise support technical AI Safety movement building when possible.

I believe:

- In AI strategy and governance there is a higher level of awareness, understanding and usage of nontechnical people in movement building roles than in technical AI safety.

- Technical research is the most bottlenecked group within the AI safety community (although one might argue that movement building is actually the issue as it is an upstream cause of this blocker)

I therefore believe that most non-technical AI safety movement builders will have maximal impact if they allocate as much of their time to technical AI Safety movement building as can be productively accommodated.

For instance, this could be via working at an organisation alongside technical workers in a nontechnical operations' role as discussed in the example above. In a more abstract sense, it could also be helping to recruit for people who might be more immediately productive. For instance, if you know an organisation really needs a technical recruiter then you could have an outsized impact by helping them find one (if working in a relevant context).

For some non-technical movement builders, it will make sense to work in a fractional role where only part of one’s time is spent on AI safety work

In some cases having more than one role may outperform the alternative of working full time as an ‘AI Safety Movement Builder’. For instance, a nontechnical social science researcher might have more impact from a fractional role where they teach and do research at a university for 50% of their time than from leaving their university to put 100% of their time into movement building.

In the fractional role, they may be able to do a range of important movement building activities: to interact with more people who are unaware of but potentially open to AI safety arguments, invite in speakers to talk to students, advocate for courses etc. All of these are things they cannot do if they leave their research role.

They are also potentially more likely to be able to engage with other researchers and experts more effectively as an employed lecturer interested in X, than as an employee of an AI safety organisation or “movement builder” with no affiliation.

Fractional roles may make sense where individuals believe that the AI safety community can only make productive use of a percentage of their time (e.g., one day a week they facilitate a course).

For some non-technical movement builders, it will make sense to work as a generalist across multiple parts of the AI safety community

In some cases a nontechnical AI safety movement builder (e.g., a recruiter or communicator) might be better off working across the AI strategy, governance and technical groups as a generalist than focusing on one area. For instance, this may give them more opportunities to make valuable connections.

Non-technical people working as experts in AI governance or strategy roles should consider being fractional movement builders

Non-technical people working as experts in governance or strategy roles (e.g., Allan Dafoe) should consider a fractional approach to AI Safety movement building. For instance, they might consider allocating 10% of their time to networking events, talks, or mentorship.

What do non-technical contributions look like in practice?

To help illustrate the above, the following table outlines non-technical professions which can contribute to AI Safety Movement building and explains how doing so could impact contributors, contributions and coordination.

| Profession | Definition | Example movement building impact |

| Marketing (and communications) | Promoting and selling products or services through various tactics and channels using various forms of communication. | Using marketing communication to increase awareness of AI safety research opportunities in relevant audiences to increase the number of contributors. |

| Management | Overseeing and directing the operations of a business, organisation, or group. | Joining a research organisation to improve how well researchers involved in the AI safety movement coordinate around the organisation's goals and research agendas. |

| Entrepreneurship | Creating, launching, and managing new ventures. | Creating a new AI safety related research training program which can then hire new contributors. |

| Research | Gathering and analysing information to gain knowledge about a relevant topic. | Applied research to make the values, needs and plans of the AI (safety) community more legible which can help increase contributors, contributions and coordination. |

| Coaching | Providing guidance, advice, and instruction to individuals or teams to improve performance. | Coaching novice individuals and organisations within the AI safety movement can help to increase their contributions. |

| Operations | Planning, organising, and directing the use of resources to efficiently and effectively meet goals. | Reducing administration or providing support services to help AI safety organisations make better contributions. |

| Recruiting | Finding, screening, and selecting qualified job candidates. | Recruiting top talent and expertise to organisations to increase the number of contributors. |

The following table outlines skills relevant to those with non-technical expertise and examples of potential impacts on contributors, contributions and coordination.

| Skill | Definition | Example movement building impact |

| Search Engine Optimisation (SEO) | Managing websites and content to increase visits from search engine traffic. | Optimising a website or online content to increase capture of AI career related search engine traffic and increase contributors. |

| Research lab management | Overseeing and directing the operations of a research laboratory. | Increasing contributions by creating and managing labs that amplify the research output of experienced AI safety researchers. |

| Commercial innovation | Creating and implementing new ideas, products, or business models. | Creating AI safety related ventures that increase the funding and/or research and therefore increase the number of contributors to AI Safety and/or their contributions or coordination. |

| Surveying | Measuring and collecting data about a specific area or population to gain understanding. | Surveying key audiences at key intervals to better understand the values, needs and plans of the AI safety community and improve contributors, contributions or coordination. |

| Therapy | Treating emotional or mental health conditions through various forms of treatment. | Increasing contributions by helping individuals within the AI safety movement to improve their mental health, well-being and long term productivity. |

| Project management | Planning, organising, and overseeing the completion of specific projects or tasks within an organisation. | Coordinating resources and timelines, setting goals and objectives, and monitoring progress towards achieving them to help researchers in the AI safety movement to make better contributions. |

| Job description creation | Developing adverts for a specific job within an organisation. | Creating clear, accurate, and compelling job descriptions that effectively communicate the responsibilities, qualifications, and expectations for a role and therefore increase the number of contributors. |

| Facilitation | Coordinating and leading meetings, workshops, and other events. | Bringing together people from different backgrounds and perspectives to discuss and work on AI Safety issues and increase coordination. |

| Design | Create attractive visuals and interactive tools. | Create engaging and compelling content that raise awareness and increase contributions. |

Mistakes to avoid in movement building

It shouldn’t be assumed that all AI Safety Movement building projects will be net positive or that everyone with these professions or skills would be useful within AI Safety.

It is particularly important to avoid issues such as causing reputational harm to the EA and AI Safety community, speeding up capabilities research or displacing others who might make more significant contributions if they took the role you fill.

Professions such as marketing, advocacy and entrepreneurship (and related skills) are therefore particularly risky to practice.

I recommend that anyone with a relevant profession or skill should consider using it to support or start a project and then seek to validate their belief that it may be useful. For now validation might involve talking with senior people in the community and/or doing some sort of trial, or seeking funding.

I think that it's important to have a strong model of possible downsides if you're considering doing a trial and that some things might not be easy to test in a trial.

In future, there will hopefully be a clearer sense of i) the collective need for specific professions and skills, ii) the thresholds for when on can be confident that they/a project will be provide value and iii) the process to be followed to validate that if a person/project was useful.

Summary

Across my three posts, I have argued:

- The AI Safety community mainly comprises four overlapping, self-identifying, groups: Strategy, Governance, Technical and Movement Building.

- AI Safety movement builders are like a ‘Human Resources and operations team’ for the AI Safety community.

- People in other parts of the community can (and should) contribute to movement building in a fractional capacity.

- AI Safety movement builders contribute to AI Safety by helping to start and scale AI Safety projects

- Projects require contributions of many technical and non-technical professions and skills in full-time and fractional capacities.

- AI Safety movement builders help the community to optimise three outcomes/factors:

- Contributors: individuals making positive contributions to AI Safety.

- Contributions: contributors’ inputs into AI Safety work.

- Coordination: understanding and aligning contributions to ensure maximal efficiency.

The summarised approach is illustrated below.

Implications and ideas for the future

I argued that a lack of shared language and understanding was the cause of many problems with AI Safety Movement Building. With that in mind, if the response to my approach is that it seems helpful (even if just as a stepping stone to a better approach), this suggests a range of implications and ideas for the future.

Use the three AI Safety Movement building outcome metrics suggested (Contributors, Contributions and Coordination), or something similar, to evaluate progress in AI Safety Movement Building

It may be useful to have some sort of benchmarking for progress in AI Safety Movement building. One way to do this would be to have some sort of regular evaluation of the three AI Safety Movement building outcome metrics I suggested (or similar).

These outcome metrics are Contributors: how many people are involved in AIS Safety (total and as a percentage of what seems viable), Contributions: how well they are functioning and performing and Coordination: How well all contributors understand and work with each other.

If the factors that I have suggested seem suboptimal, then I’d welcome critique and the suggestion of better alternatives. I am confident that some sort of tracking is very important but not yet confident that my suggested outcomes are optimal to use.

However, as they are the best option that I have right now, I will next use them to frame my weakly held perspective on how AI Safety Movement building is doing right now.

I think that AI Safety Movement building is doing well for a small community but has significant potential for improvement

I really appreciate all the current AI Safety movement building that I am seeing, and I think that there is a lot of great work. Where we are reflects very well on the small number of people working in this space. I also think that we probably have a huge amount of opportunity to do more. While I have low confidence in my intuitions for why we are under optimised I will now explain them for the sake of hopefully provoking discussion and increasing shared understanding.

All the evaluations that follow are fuzzy estimates and offered in the context of me finally being convinced that optimising the deployment of AI is our most important societal challenge and thinking about what I might expect if say, the US government, was as convinced of the severity of this issue as many of us are. From this perspective, where we are is far from where I think we should be if we believe that there is ~5%+ chance of AI wiping us out, and a much higher chance of AI creating major non-catastrophic problems (e.g, economic, geopolitical, and suffering focused risks).

I think that probably less than 5% of all potentially valuable contributors are currently working on AI safety. I base this on the belief that the vast majority of people who could work on AI safety don’t even know about the core arguments and opportunities. Of those that do know of these options many have a very high friction path to getting involved, especially if they are outside key hubs. For instance, there are lots of different research agendas to evaluate, good reasons to think that none of them work, limited resources beyond introductory content, a lack of mentors and a lack of credible/legible safe career options to take.

I think that probably less than 50% of maximal potential individual productivity has been achieved in the current group of contributors. I base this on conversations with people in the community and work I have done alongside AI safety researchers. Physical health, mental health and social issues seem very significant. Burnout rates seem extremely high. Some people seem to lack technical resources needed to do their research.

I think that probably less than 10% of optimal coordination has been achieved across all contributors. I base this on conversations with people in the community and work I have done alongside AI safety researchers. It seems that there is relatively little communication between many of the top researchers and labs and little clarity around which research agendas are the most well-accepted. There seems to be is even less coordination across areas within the community (.e.g, between governance and technical research)

Assume we regard the total output of AI Safety community as being approximated by the combination of contributors, contributions & coordination. This means that I think that we are at around 0.25% of our maximum impact (0.05*.5*.1*100).

I’d welcome critiques that endorse or challenge my reasoning here.

Determining clear priorities for AI Safety Movement Building is the most important bottleneck to address once we have a shared understanding

To varying extents, we appear to lack satisfactorily comprehensive answers to key questions like: Which AI Safety movement building projects or research projects are most valued? Which professions and skills are most needed by AI Safety organisations? Which books, papers and resources are most critical to read for new community members? Which AI organisations are best to donate to?

We also appear to lack comprehensive understanding of the drivers of differences in opinions within answers to these questions. All of this creates uncertainty, anxiety, inaction and inefficiency. It therefore seems very important to set up projects that explore and surface our priorities and the values and differences that underpin them.

Projects like these could dramatically reduce uncertainty and inefficiency by helping funders, community members, and potential community members to understand the views and predictions of the wider community, which in turn may provide better clarity and coordinated action. Here are three examples which illustrate the sorts of insights and reactions that could be provoked.

- If we find that 75 percent of a sample of community members are optimistic about project type Y (courses teaching skill x in location y) and only 20% are optimistic about project Z (talent search for skill y in location x), this could improve related funding allocation and communication and action from movement builders and new contributors.

- If we find that technical AI Safety organisations expect to hire 100 new engineers with a certain skill over the next year, then we can calibrate a funding and movement building response to create/run related groups and training programs in elite universities and meet that need.

- We find that 30% of people of a sample of community members see a risk that movement building project type Y might be net negative and explain why. We can use this information to iterate project type Y to add safeguards that mitigate the perceived risks. For instance, we might add a supervisory team or a pilot testing process. If successful, we might identify a smaller level of concern in a future survey.

Here are some other benefits from prioritisation.

- To help new entrants to the AI safety community to understand the foundational differences in opinion which have caused its current divisions, and shape their values around the organisations and people they trust most.

- To help Movement Builders to communicate such information to the people they are in contact with, raising the probability that these people act optimally (e.g., by working on the skills or projects that seem best in our collective expectation).

- To help current contributor to realise that they have overlooked or undervalued key projects or projects and align to work on those which are forecast to be more effective.

I am not confident of the best way to pursue this goal, but here are some ideas for consideration:

- We create panel groups for the different sectors of the AI Safety Community. Participants in the groups consent to be contacted about paid participation in surveys, interviews and focus groups relating to AI Safety Community matters.

- Groups run regular projects to aggregate community and expert opinions on key questions (e.g., see the questions above but note that we could also collect other key questions in these surveys)

- Where surveys or dialogue show important differences in prediction (e.g., in support for different research agendas or movement building projects), groups curate these differences and try to get to identify the causes for underlying differences

- Groups run debates and/or discussions between relevant research organisations and actors to explore and surface relevant differences in opinion.

- Groups try to explore key differences in intuitions between organisations and actors by collecting predictions and hypotheses and testing them.

What are some examples? This survey of intermediate goals in AI governance is an example of the sort of survey work that I have in mind. I see curation work as a precursor for surveys of this nature (because of the need for shared language).

I might be wrong to believe that determining priorities is such a problem or that related projects are so important, so please let me know if you agree or disagree.

Fractional movement building seems like the best way to grow the community once it knows if, and how, it wants to grow

I have argued that fractional movement building - allocating some, but not all, of my productive time to movement building- is a useful approach for many people to consider. Here is why I think it is probably the best approach for the AI Safety community to grow with.

It will usually be better for most people in the AI safety community to do fractional movement building than to entirely focus on direct work

It seems likely the average AI safety expert will get maximal impact from their time when they allocate some portion of that time for low frequency, but high impact, movement building work. This could be networking with intellectual peers in other areas and/or providing little known meta-knowledge (e.g., about what to read or do to make progress on problems), which can make it much easier for other and future researchers to make important contributions.

Why will the average AI safety expert will get maximal impact from their time when they allocate some portion of that time for low frequency, but high impact, movement building work? This is mainly because AI Safety is a pre-paradigmatic field of research and practice where there is no clear scientific consensus on what to do and with what assumptions. This has the following implications.

Many (probably orders of magnitude more) potentially good contributors are unaware, than aware, of the field of AI Safety. I suspect there are millions of very smart people who are not aware of, or engaged with, AI Safety Research. I am optimistic that some portion of these people can be persuaded to work on AI Safety if engaged by impressive and capable communicators over extended periods, which will require our best brains to communicate and engage with outside audiences (ideally with the help of others to coordinate and communicate on their behalf where this is helpful).

Most important information in AI Safety is in the heads of the expert early adopters. Because AI Safety is a pre-paradigmatic field of research and practice there is little certainty about what good content is, that bar is changing as the assumptions change with the result that there are few to no production systems for, and examples of, well synthesised explanatory content (e.g., a college level textbook). This means that even a very smart person seeking to educate themselves about the topic will lack access to the most key and current knowledge unless they have an expert guide.

It will be usually be better for AI safety movement building to focus on growing fractional movement building commitments than full-time movement building commitments

Fractional movement building can likely engage more people than full time movement building

Most people are more comfortable and capable of persuading other people to do something when they already do that thing or something similar. Someone with a PhD in X, researching X, is probably going to feel much more comfortable recommending other people to do a PhD in, or research on, X than if they have only a superficial understanding of this area and no professional experience.

Most people who are at all risk-adverse will generally feel more comfortable working on something superficially legitimate than on being a full time ‘movement builder’. They will be more comfortable being hired to research X at Y institution, which may involve doing movement building, than being a full-time movement builder for CEA. As a personal example, I feel it will look very strange for my career if I go from being a university based academic/consultant with a certain reputation built up over several years to now announcing that I am a freelance ‘movement builder’ or one working for CEA. I imagine that many of my professional contacts would find my new role to be very strange/cultish and that it would reduce the probability of getting future occupations if it didn’t work out as it’s not a very well understood or respected role outside of EA.

It is easier to get involved as a fractional role. Many more people can give 10% of their time to be a fractional movement builder and many more organisations can avail of small contributions than full time commitments.

Fractional movement building will often provide a better return on time and effort than full time movement building

Community builders with recognised roles at credible organisations will be seen as credible and trustworthy sources of information. If someone approached me as ‘climate change/socialism/veganism’ movement builder working for a centre I hadn’t heard of or named after that movement, I’d probably be more resistant to and sceptical of their message than if they worked for a more conventional and/or reputable organisation in a more conventional and/or reputable role. Here are two examples:

- Someone working as an average level researcher at an AI safety research organisation is likely to more trusted and knowledge able communicator about their research area and related research opportunities than someone who works full time as a movement builder.

- Someone who works in a university as a lecturer is going to be much better at credibly influencing research students and other faculty than someone who is outside the community and unaffiliated. As a lecturer focused on spending 20% of time on AI Safety Movement building this person can influence hundreds, maybe thousands, of students with courses. As a full-time movement builder they would lose this access and also associated credibility.

Most people who can currently contribute the most to movement building are better suited to fractional than full-time roles. As stated above, most experts, particularly technical experts, have unique, but rare opportunities to influence comparably talented peers, and/or provide them with important knowledge that can significantly speed up their research.

Fractional movement building is more funding efficient. Much movement building work by fractional movement builders (e.g., networking, presenting, or teaching etc) is partially or fully funded by their employer. It may displace less favourable work (e.g., a different lecturer who would not offer an AI Safety aligned perspective). This saves EA funders from having to fund such work, which is valuable while we are funding constrained.

Feedback

I have outlined a basic approach/theory of change - does it seem banal, or flawed? I will pay you for helpful feedback!

Supportive or critical feedback on this or my other posts would be helpful for calibrating my confidence in this approach and for deciding what to do or advocate for next.

For instance, I am interested to know:

- What, if anything, is confusing from the above?

- What, if anything, was novel, surprising, or useful / insightful about the proposed approach for AI safety movement building I outlined in my post?

- What, if anything, is the biggest uncertainty or disagreement that you have, and why?

If you leave feedback, please consider indicating how knowledgeable and confident you are to help me to update correctly.

To encourage feedback I am offering a bounty.

I will pay up to 200USD in Amazon vouchers, shared via email, to up to 10 people who give helpful feedback on this post or the two previous posts in the series. I will also consider rewarding anonymous feedback left here (but you will need to give me an email address). I will share anonymous feedback if it seems constructive and I think other people will benefit from seeing it.

I will also leave a few comments that people can agree/disagree vote on to provide quick input.

What next?

Once I have reviewed and responded to this feedback I will make a decision about my next steps. I will probably start working on a relevant fractional role unless I am subsequently convinced of a better idea. Please let me know if you are interested in any of the ideas proposed and I will explore if we could collaborate or I can help.

Acknowledgements

The following people helped review and improve this post: Alexander Saeri, Chris Leong, Bradley Tjandra and Ben Smith. All mistakes are my own.

This work was initially supported by a grant from the FTX Regranting Program to allow me to explore learning about and doing AI safety movement building work. I don’t know if I will use it now, but it got me started.

Feedback thread (agree/disagree vote)

Determining clear priorities for AI Safety Movement Building is an important bottleneck

Fractional movement building seems like something that we should do more of in AI Safety

There is too much uncertainty about what AI Safety Movement Building is, and how to do it helpfully

I think that it would be useful to regularly evaluate AI Safety Movement building progress

I generally agree with the process suggested for achieving those outcomes (AI Safety movement builders contribute to AI Safety by helping to start and scale AI Safety projects. Projects require contributions of many technical and non-technical professions and skills in full-time and fractional capacities.)

I generally agree with the suggested outcomes for AI Safety Movement Builders to focus on (contributors, contributions and coordination)

I generally agree with the claims and approach outlined in this post