Sequence contents

- Background on QALYs and DALYs

- The HALY+: Improving preference-based health metrics

- The sHALY: Developing subjective wellbeing-based health metrics

- The WELBY (i): Measuring states worse than dead

- The WELBY (ii): Establishing cardinality

- The WELBY (iii): Capturing spillover effects

- The WELBY (iv): Other measurement challenges

- Applications in effective altruism

- Applications outside effective altruism

- Conclusions

Key takeaways from Part 2

- As explained in Part 1, health-adjusted life-years (HALYs) combine length and quality of life on the same scale. This enables direct comparison of life-extending and life-improving interventions, and the quantification of the overall health of a population.

- The most widely-used HALYs are the quality-adjusted life-year (QALY) and disability-adjusted life-year (DALY). For reasons covered in Part 1, they tend to inaccurately estimate the overall wellbeing impact of many conditions, leading to serious misallocation of resources.

- The (hypothetical) HALY+ incorporates incremental improvements to the most common versions of the QALY and DALY, so that they more closely track subjective wellbeing (and perhaps other things people care about) while avoiding some potential problems with pure wellbeing measures. The extent to which they would resolve each of my core concerns with current HALYs is summarized in the conclusion.

- Key topics that must be addressed in order to develop and use the HALY+ include:

- How should health states be described? Narrow health-focused multi-attribute utility instruments like the EQ-5D could be replaced by ones that include more psychosocial dimensions, such as the E-QALY, and DALY health state descriptions could likewise be broadened.

- How should states be assigned weights? There is a lot of literature comparing the time tradeoff, standard gamble, visual analog scale, and discrete choice experiments. It may be worth taking another look at methods that have fallen out of favour, such as the person tradeoff, and recent innovations like direct elicitation of the social welfare function.

- How should we handle extremely negative (or positive) states? Current methods grossly underestimate the severity of some states, overestimate the severity of others, and ignore positive experiences beyond absence of health problems. There may be some relatively straightforward modifications to achieve some rapid improvement, but dealing with extreme states properly would be a challenging (though highly important and neglected) project.

- Who should provide the weights? Current value sets are normally derived from the stated preferences of the general public, most of whom haven’t experienced the relevant health conditions. Some have argued for the use of “experience-based” values (e.g., from the patient herself), but those are problematic for a variety of reasons, so it’s also worth thinking about how general population preferences can become better informed.

- How should we handle spillover effects? Consequences beyond the individual patient, e.g., on carers, are typically ignored, but can be valued as costs, HALYs, or a mixture. How to do this while avoiding double counting and limiting the scope of evaluations needs further research.

- How should HALYs be aggregated? HALYs are normally counted equally, but it’s possible to give extra weight to some HALYs in order to improve efficiency (e.g., to capture spillover effects) and/or to address distributional concerns (e.g., to favor severe states). Methods for deriving and implementing such weights are underdeveloped. While this is mostly an issue for broader evaluation methodology, it may also influence what kind of HALY we select or create in the first place.

- Which HALY should we focus on improving, the QALY or the DALY? The DALY is used in low-income settings with the highest burden of disease, and receives less attention from researchers; but the QALY is increasingly popular in middle- as well as high-income countries, and work on it is perhaps more tractable.

- How can we influence the development and use of HALYs? There’s no point creating a metric that won’t be used, so it’s crucial to think about the practicalities of effecting policy change. There are many relevant degree programs, academics, and public institutions, but there could also be a role for large non-profits.

- Work to develop the HALY+ seems generally more tractable than work on subjective wellbeing measures, such as those discussed in Parts 3–7, at least in terms of gaining acceptance among stakeholders outside the effective altruism movement. But the impact of successful reforms may be more limited, and some areas have already reached diminishing returns, so topics need to be selected carefully.

- Many of these projects would require strong quantitative skills, but there is also a need for qualitative research and philosophical analysis. In most cases, patience and a pragmatic attitude would be very beneficial.

Introduction to Part 2

This is the second in a series of posts on the measurement of health and wellbeing. Part 1 reviewed the structure, descriptive system, valuation methods, application, and interpretation of the most common health-adjusted life years (HALYs), namely the quality-adjusted life-year (QALY) and disability-adjusted life-year (DALY). It also highlighted five problems with them:

- They neglect non-health consequences of health interventions.

- They rely on poorly-informed judgements of the general public.

- They fail to acknowledge extreme suffering (and happiness).

- They are difficult to interpret, capturing some but not all spillover effects.

- They are of little use in prioritizing across sectors or cause areas.

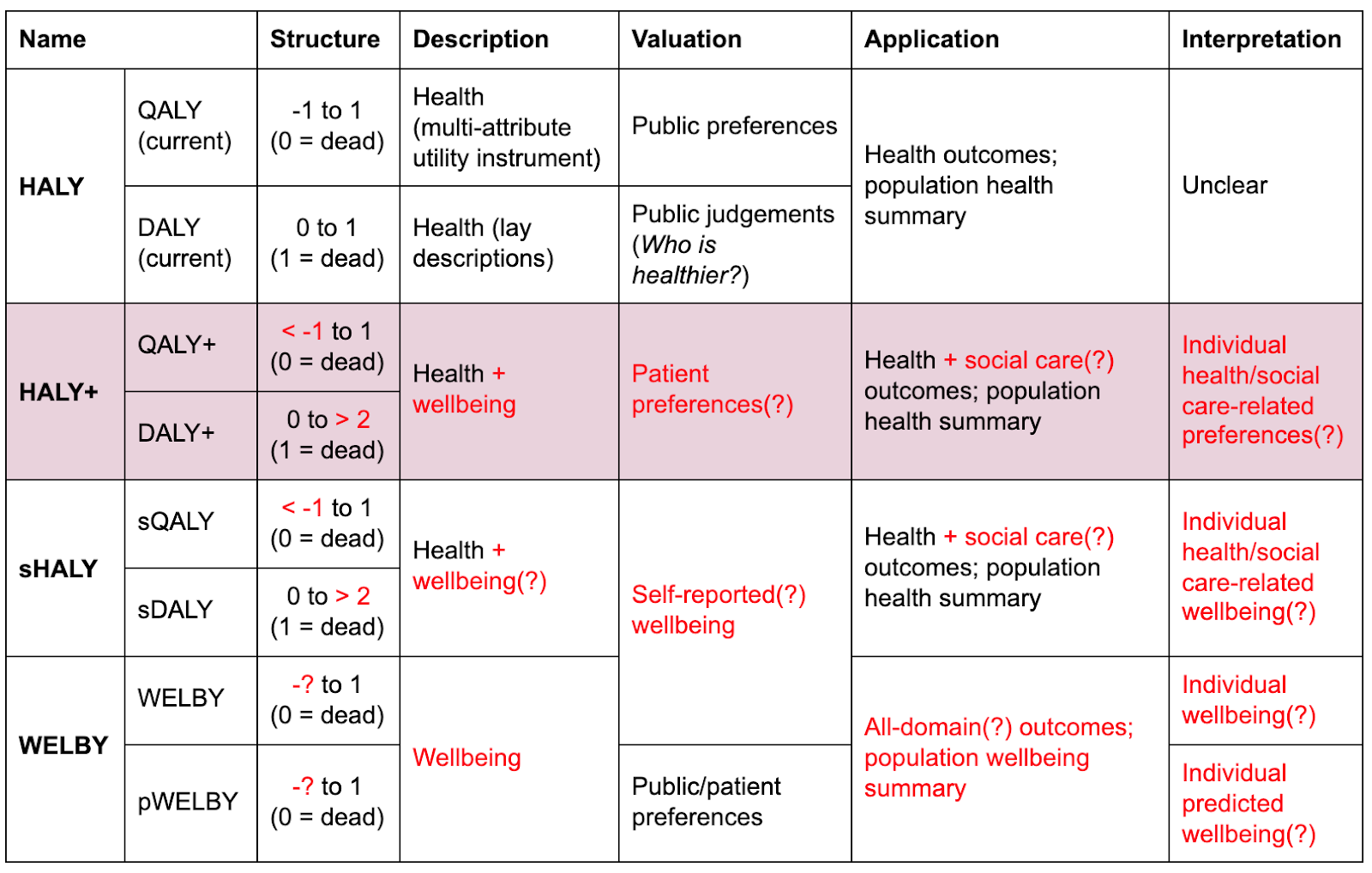

This series of posts focuses on the use of subjective wellbeing (SWB) to overcome these challenges. However, I first consider whether it’s worth trying to incrementally improve current outcome measures, and if so, what research will help us to do so. The result would be a hypothetical measure I called the HALY+: a variation of the QALY or DALY that retains preference-based methods of valuation but differs from the most widely-used existing versions in a few respects, as shown in the table below:

HALYs and their alternatives. Red text indicates departures from current practice. Question marks indicate optional or uncertain features.

The methods used to derive and apply HALYs, particularly QALYs, are an active area of research among academics and agencies such the UK’s National Institute for Health & Care Excellence (NICE) and the Institute for Health Metrics & Evaluation (IHME). Rather than providing detailed summaries of existing literature, I mostly give a basic outline of the issues (with links to further reading),[1] followed by a list of potential research topics. The main aims are to:

- Encourage proponents of a SWB approach to seriously consider alternatives, at least within domains such as health and social care. (I think the challenges of operationalizing SWB, and the advantages of some preference-based alternatives, are often underestimated.)

- Allow readers to appreciate previous work. (Even if SWB measures are ultimately preferred, I think their developers could learn much from preference-based efforts.)

- Draw particular attention to some areas that are important, somewhat tractable, and relatively neglected within health economics, such as ways of handling states worse than dead.

- Highlight opportunities for further research that could be carried out by students, academics, effective altruism organizations, and perhaps independent researchers.

I finish with a brief assessment using the importance, tractability, and neglectedness (ITN) framework commonly employed by the effective altruism community, and a summary of how well the HALY+ would address the five core problems listed above.

Why should we consider working on the HALY+?

It might reasonably be asked why we should bother trying to improve (rather than replace) traditional HALYs. Given their major flaws—discussed in Part 1 and listed in the introduction—shouldn’t we spend all our efforts working on distinct alternatives such as the wellbeing-adjusted life-year (WELBY), or at least a HALY valued using SWB (the sHALY, discussed in Part 3)?

It’s difficult to answer that question without addressing general critiques of a wellbeing approach to healthcare. Daniel Hausman (e.g., 2012; 2015) has perhaps provided the strongest arguments for evaluating health interventions and the burden of disease in terms of health itself (in some functional sense) rather than its effects on wellbeing.[2] Drawing partly on his work, I think there are three broad reasons we might want to continue work on preference-based measures of health as well as, or perhaps even instead of, developing a sHALY or WELBY.

- Wellbeing might be the wrong metric in principle.

- Most moral theories, and most members of the public, care about more than happiness, e.g., many people think health is intrinsically good, independently of its effects on wellbeing (Adler, Dolan, & Kavetsos, 2017; Brouwer et al., 2017). In particular, there is resistance to the idea that if someone adapts to a disability, such that it no longer affects their wellbeing, then preventing or curing their condition has no value.

- Even if they are wrong in some fundamental sense (e.g., because hedonic states are all that matter), the legitimacy of institutions may rest in part on their taking into account the views of the public they serve.

- While nobody would claim that health is all that matters in life, there is some intuitive appeal to the claim that the proper goal of health systems is to improve health—especially since other determinants of wellbeing can, to some extent, be addressed by other areas of public policy.

- Wellbeing might be hard to measure in practice.

- There are many different conceptions of wellbeing, and at least two components of subjective wellbeing alone, so it would be hard to reach agreement on which one(s) to measure. Even given a particular theory of wellbeing, how much various aspects of life (and health) influence it will vary so much among individuals and groups—and perhaps over time–that comparisons may be impractical. Defining and measuring health is not straightforward either, but there’s more of a consensus on what good health looks like.

- Some wellbeing measures, especially the common single-item ones (such as life satisfaction on a 0–10 scale), may lack validity or responsiveness in some conditions; that is, clinically important changes do not always show up in wellbeing data. When this is because they do not actually affect wellbeing, we may want to bite the bullet and say they don’t matter; but in some cases it’s likely to be due to response shift (your 5 may not be the same as my 5), the framing of the questions (e.g., questions about “happiness” may not fully capture physical pain or a sense of purpose), or simply because the data are noisy and the effect relatively small.

- There is a possibility that some preference-based measures of health better capture spillover effects. Absent other methods for accounting for such consequences, this could make them a better proxy for societal wellbeing than direct wellbeing measures. (See below and Part 6.)

- There may be resistance from stakeholders.

- Patients, clinicians, and decision-making bodies are generally reluctant to use wellbeing measures due to normative concerns mentioned above, perceived irrelevance to health conditions, or simply institutional inertia. So it is unlikely that the main “consumers” of QALYs and—even more so—DALYs will adopt measures based on wellbeing (especially subjective wellbeing) in the near future.

There are, of course, strong counter-arguments to the philosophical claims, and ways of overcoming at least some of the practical challenges. Moreover, some of these critiques may “prove too much,” in that they could also constitute reasons for opposing some “HALY+” improvements, many of which have strong support from stakeholders. But for now I will assume that there are grounds for some people to seriously consider working on the topics presented below.

How should states be described?

As noted in Part 1, there are four main ways to describe health:

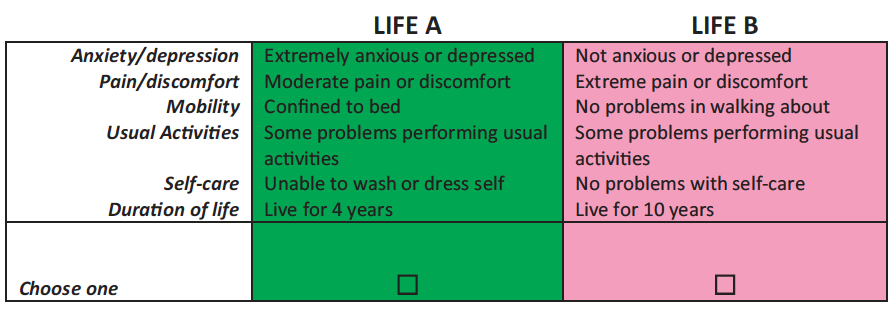

- Generic multi-attribute utility instruments (MAUIs) classify health states using severity levels on several dimensions of health. The EQ-5D-3L, for example, gives a score of 1–3 on five dimensions: mobility, self-care, usual activities, pain/discomfort, and anxiety/depression. When paired with preference-based valuation, they are called generic preference-based measures (GPBMs).

- Condition-specific measures only include dimensions relevant to the particular disease, such as cancer or dementia.

- Bolt-ons are dimensions added to generic MAUIs in order to capture “missing” aspects of a health condition, such as problems with vision, hearing, or tiredness.

- Vignettes are lay descriptions of what it’s like to live with the condition.

There are pros and cons to each of these, and there may be a place for all of them in health economic evaluation.[3] But since MAUIs are most widely used, and the various MAUIs provide non-trivially different values, choosing (or creating) an MAUI that correlates more strongly with subjective wellbeing is likely to have the greatest payoff. Moreover, about two thirds of the difference in values among GPBMs is due to the choice of MAUI rather than the valuation method (Richardson, Iezzi, et al., 2015), so it may be the single most important decision to make (though improving the handling of states worse than dead could be even more consequential).

Unfortunately, the EQ-5D, which is by far the most common MAUI,[4] is probably the worst of all. Of six MAUIs compared by Richardson, Chen, et al. (2015), the EQ-5D-5L explained the least variation in SWB scales—about 25% (i.e., R2 ~0.25). Other studies have drawn similar conclusions (e.g., Mukuria & Brazier, 2013). Potentially better options include:

- AQoL-8D (R2 ~0.4–0.6). In addition to three physical dimensions (independent living, pain, senses), it contains five “psychosocial” dimensions (happiness, mental health, coping, relationships, self-worth) that clearly overlap with aspects of wellbeing. Each of the 35 items on the questionnaire (3–7 per dimension) were thoroughly tested, and many were originally derived from existing mental health measures or focus groups of patients and carers, making the process more rigorous than for other MAUIs. However, its questionnaire takes about five minutes to complete (compared to under a minute for the EQ-5D) and it requires a complex, multi-stage valuation procedure (see references here). To my knowledge, it’s only used in Australia, and I’d be surprised if other countries/agencies would consider it practical enough to adopt, though I haven’t looked into the details. Note also that the minimum value on the current value set is -0.04, perhaps making it less suitable for extremely bad states, though revaluation is possible.

- SF-6D (R^2 ^ ~0.3–0.6). This comprises physical functioning, role participation (both physical and emotional), social functioning, bodily pain, mental health, and vitality. The new version (Mulhern et al., 2020) is derived from ten items (5–6 levels each) selected from the SF-36 version 2, one of the world’s most widely-used health status questionnaires. This makes it longer than the EQ-5D but still quite usable.

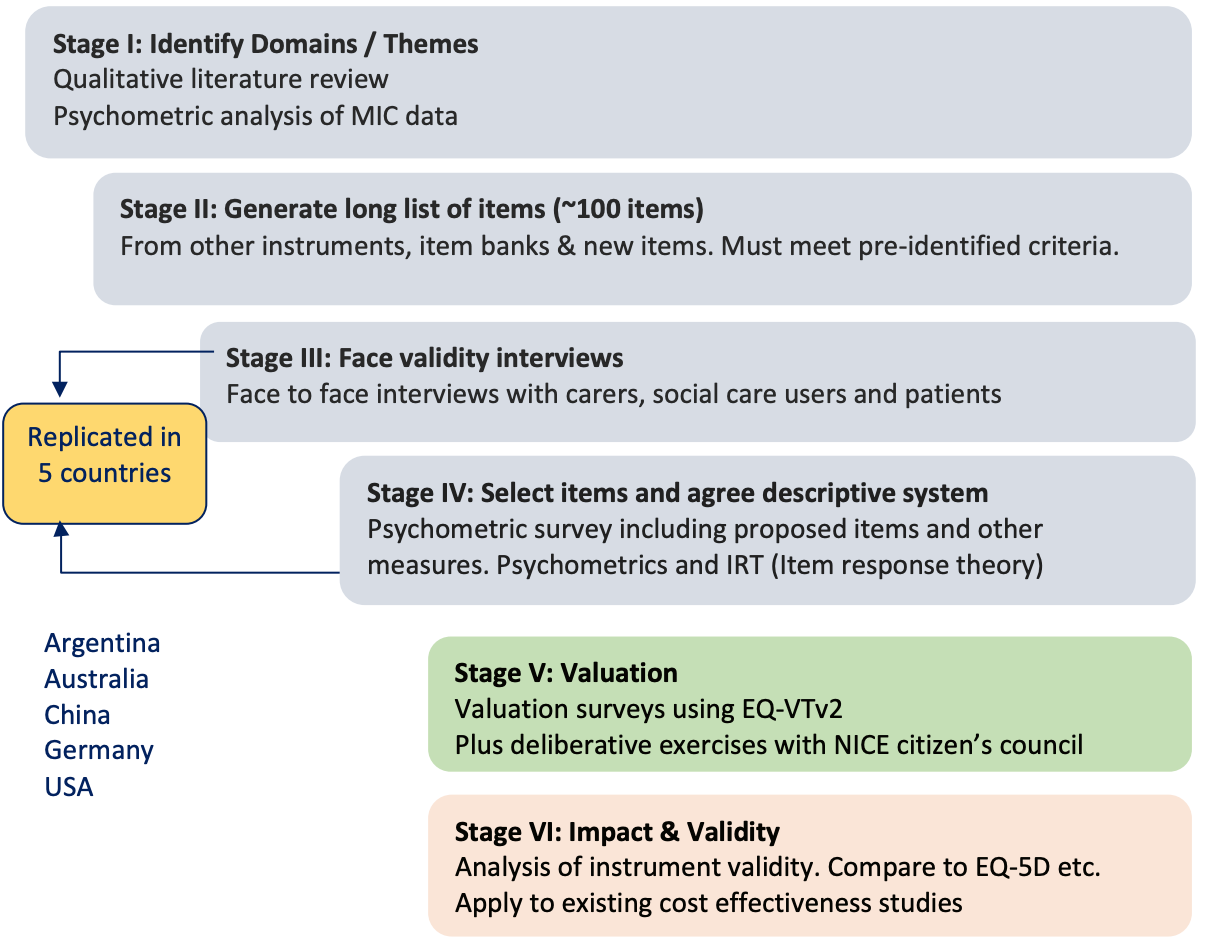

- E-QALY. In collaboration with NICE, the Extending the QALY project at Sheffield aims to develop a measure that can assess outcomes in health care, social care (e.g., providing living support to elderly people), and public health—including outcomes for carers—in order to guide resource allocation across those sectors. At the start of the six-stage process (see diagram below), seven domains of quality of life were identified from the literature—physical sensation, feelings & emotions, activity, self-worth, control & coping, relationships, and concentration & memory—each of which has 2–6 sub-domains. Potentially suitable items (questions), drawn from existing measures, were tested for face validity and psychometric performance in patients, social care users, carers, and the general public in six countries (the UK, USA, Germany, Australia, Argentina, and China) (Brazier et al., n.d.). The valuation of selected items by the general public in those countries is currently underway. The E-QALY is firmly rooted in the extra-welfarist[5] tradition (in that it values more than just “utility”), has more in common with objective list theories of wellbeing—especially the capabilities approach—than SWB, and will not be usable in all domains (Peasgood et al., n.d.). Nevertheless, I expect it to correlate more strongly with SWB and be more widely applicable than most of the alternatives—most importantly the EQ-5D. It’s still unclear whether it will be viable, or whether NICE and others will accept it, but it’s perhaps the most promising effort I’m aware of.

Stages of the development of the E-QALY (source). MIC = maximal information coefficient. EQ-VTv2 = EuroQuol Valuation Technology protocol, Version 2 (Oppe et al., 2014)

- Creating a new MAUI. Effective altruists (or anyone else) could in principle develop an entirely new MAUI that fits their desiderata. However, this typically takes several researchers a few years, and does not always result in a viable measure, so it should not be undertaken lightly. The basic steps in the process are described in Brazier et al. (2012). (See also Brazier et al., 2017, ch. 8; Brazier & Deverill, 1999; Young et al., 2010; Devlin et al., 2018; Yang et al., 2009.)

Just as for the QALY, it’s theoretically possible to nudge the DALY weights in a wellbeing direction by including more information about psychosocial aspects of the health states in the lay descriptions. More radically, they could be replaced with a QALY-style MAUI such as the EQ-5D or E-QALY. Neither of those seem likely given the developers’ stated aim to measure health in a functional sense, but the DALY has changed several times in the past following various objections (Chen et al., 2015), so it's not impossible they (or their successors) could be persuaded to make some reforms.

Some possible projects include:

- Updating reviews of MAUIs from a wellbeing perspective, to ensure I haven’t missed any more promising ones.

- Keeping an eye on the development of the E-QALY, and perhaps joining in the efforts (e.g., by offering to do a thesis related to it).

- Looking into the feasibility/cost/timeline of developing an even better one.

- Could a single metric be used to evaluate, say, anti-poverty as well as health and social care interventions, without losing validity in either?

- What would a system that took into account moral uncertainty look like?

- How would you decide which theories of wellbeing to include, which measures of each to use, and how much weight to give to each measure?

- Looking into the policy aspects of promoting a new form of QALY or DALY (see discussion below).

- Identifying “quick wins” for DALY improvements, e.g., cases where the descriptive system is clearly distorting results.

- The similarity of values for terminal cancer with (0.540) and without pain relief (0.569) described in Part 1 may be a good example.

How should states be valued?

The second major factor determining HALY weights (and therefore also its structure, applications, and interpretation) is the process for assigning values to health states. According to Richardson, Iezzi, et al. (2015), this accounts for about a third of the variation between GPBMs, making it an important choice.

This section offers a brief review of the five most common methods—visual analog scale, standard gamble, time tradeoff, and discrete choice experiments—and lists some rarer alternatives. (For longer reviews, see e.g., Brazier et al., 2017, ch. 4; Green, brazier, & Deverill, 2000.) Approaches to obtaining values considered “worse than dead” are left to the following section, as I think that topic merits special treatment.

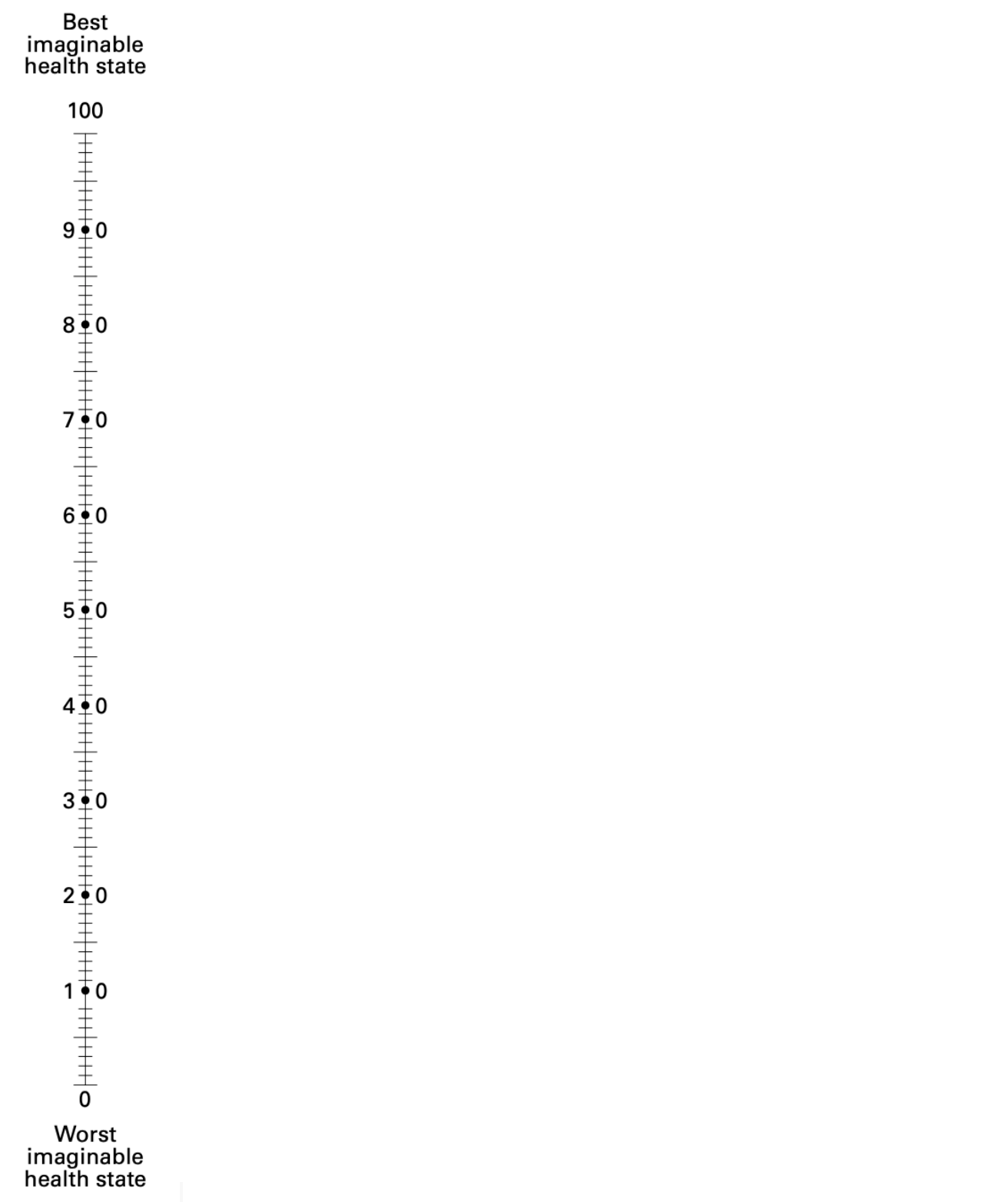

Visual analog scale (VAS)

Sometimes called a rating scale, the VAS is typically a thermometer-like scale, usually running from 0 (e.g., “the worst health you can imagine”) to 100 (e.g., “the best health you can imagine”). Respondents mark the point that they feel best represents the target health state.

The EQ-VAS, a form of visual analog scale used alongside the EQ-5D.

This is quick and easy to complete (resulting in high response rates), and is cheap to administer. The main criticism from health economists is that it violates expected utility theory (EUT). VAS responses are not strictly choice-based: they don’t require any consideration of the opportunity costs (e.g., lower life expectancy) of having a lower QALY weight for the target state. VAS is also vulnerable to end-aversion bias (people tend to avoid assigning extreme values), and the spreading effect (when valuing several states, people tend to be reluctant to place them close together). The latter results in significant context effects: the value for a given state depends to a considerable degree on which states are valued at the same time. Thus, some have claimed that responses are better understood as reflecting ordinal preferences (i.e., a ranking) rather than strength of preference (i.e., “utilities”). There has been some pushback on all of these points (see e.g., Devlin, 2006 and responses by Brazier & McCabe, 2006), but by the late 1990s it seems a near-consensus emerged among health economists that the VAS is unsuited to the generation of HALY weights (e.g., Green, Brazier, & Deverill, 2000; Brazier et al., 2003).

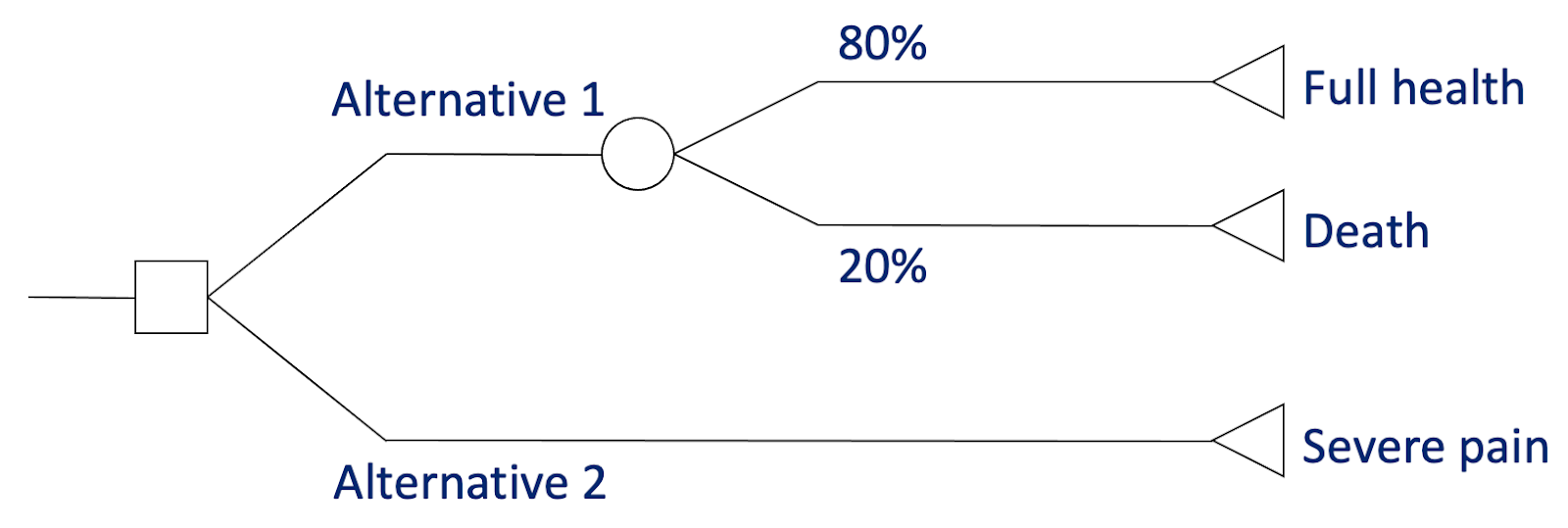

Standard gamble (SG)

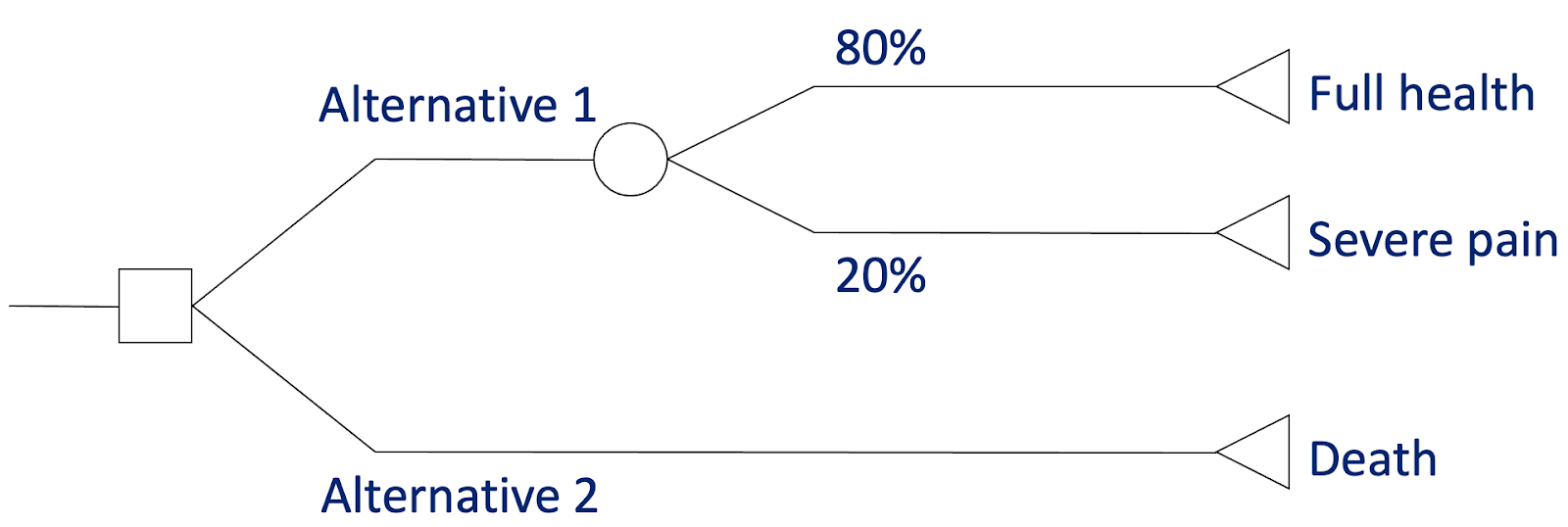

In the SG, respondents trade off quality of life and risk of death, by stating the highest probability of death they would accept to avoid a fixed period (e.g., 10 years) in the target health state.

The standard gamble for states considered better than dead. In this case, the respondent is willing to risk a 20% chance of death to avoid 10 years in severe pain, giving a QALY value of 0.8. (Image adapted from lecture slides by Tessa Peasgood.)

This was long favoured by health economists due to its supposed grounding in EUT. Unlike the other options here, it involves decisions under uncertainty; and unlike some (notably VAS), it requires choices that involve sacrifice, thereby addressing the tradeoff between quantity and quality of life. The resulting values are also on the interval scale required by QALYs: for someone in a state valued at 0.4, moving to 0.8 is twice as good as moving to 0.6, and so on.

However, it’s not clear that the kind of uncertainty involved is the same as in medical decision-making: most real-life choices are not between only two options, one of which is a known risk of instant death and the other a certain period in a chronic state. Moreover, the von Neumann–Morgenstern axioms on which EUT is based are not descriptively valid; for example, people are generally somewhat loss-averse, and tend to overstate small probabilities while understating large ones. Thus, while its psychometric performance is arguably acceptable, respondents often struggle with the task, and the resulting “utilities” may not represent quite what its proponents claim. (See, e.g., Richardson, 1994; Gafni, 1994). For some respondents, such as the terminally ill, it may also be considered inappropriate to ask questions about death.

Time tradeoff (TTO)

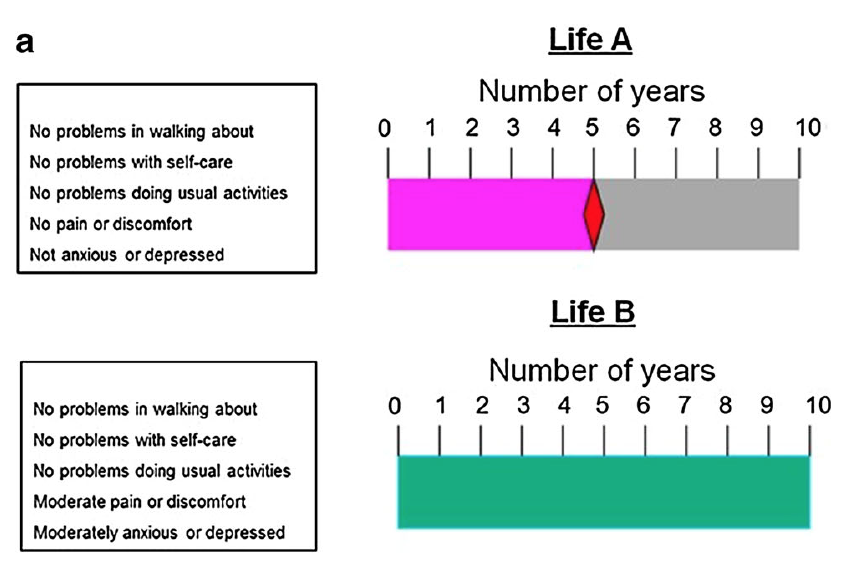

Respondents directly trade off duration and quality of life, by stating how much time in perfect health is equivalent to a fixed period in the target health state. For example, if they are indifferent between living 10 years with moderate pain and moderate depression or 8 years in perfect health, the weight for that state is 0.8.

Visual aid (“time board”) for better-than-dead states, used by the MVH TTO protocol. (From Oppe et al., 2016.)

This is arguably less cognitively challenging than the SG, while retaining many of its advantages: it’s choice-based, involves trading off quantity and quality of life, and produces values on an interval scale.

But it also suffers from many similar problems. It’s still quite hard for some respondents to grasp, especially when valuing states worse than dead (discussed in the next section), and the comparison with death—required even for “better than dead” states—may still be inappropriate in some cases. Just as the SG is affected by attitudes to risk, TTO responses often violate the assumption of constant proportional tradeoff: many people are willing to trade less proportional life expectancy to avoid a severe state when the hypothetical duration is shorter (e.g., 2 years out of 10 = QALY 0.8, but 20 years out of 40 = 0.5), sometimes switching to a preference for death when longer periods are used—it seems they feel they could “put up with it” for a while, perhaps until their children grow up, but would eventually find it intolerable (e.g., Dolan & Stalmeier, 2003). Relatedly, most people exhibit positive time preference: a year of healthy life is more valuable sooner than later. For mild illnesses, many are unwilling to trade any life expectancy, while others will not accept any amount of health life as “compensation” for enduring very severe states (see below).

So, while the TTO has dominated health state valuation so far this century (largely due to its place in EQ-5D protocols), some researchers have been developing alternatives, often based on ordinal methods.

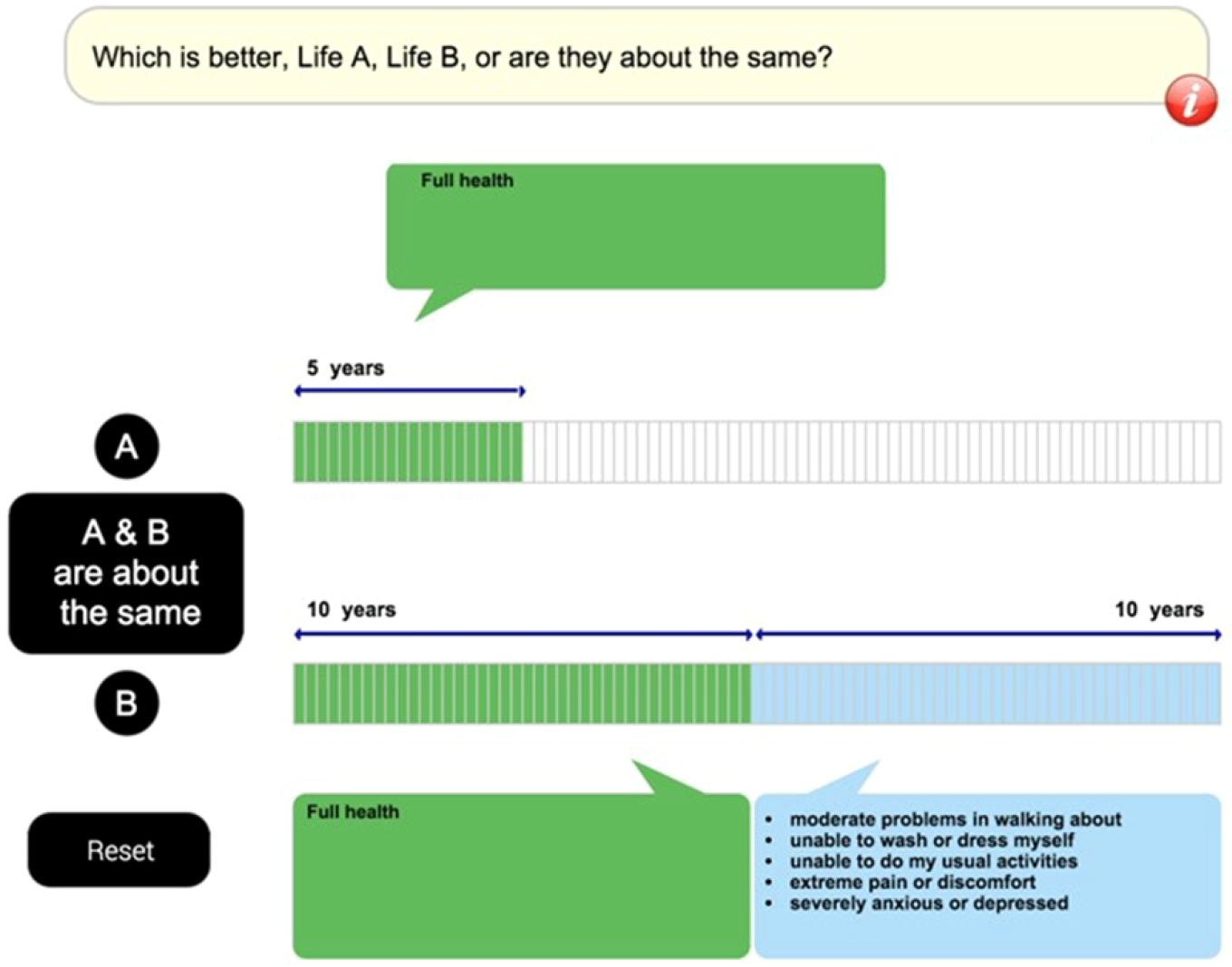

Discrete choice experiments (DCE)

Three closely related tasks have been used to obtain ordinal data for use in HALYs:

- Ranking: Placing several health states in order of preference.

- Best-worst scaling: Choosing the best and worst out of several options.

- Discrete choice experiments (DCE): Choosing one state out of two (or occasionally three) options, based on which seems “healthier” or which the respondent would prefer to live in for a specified duration.

DCE is currently the most popular of these for valuing QALYs, and DCE-like pairwise comparisons are the main approach for obtaining the DALY’s disability weights (Salomon et al., 2012; Salomon et al., 2015), so that will be the focus here. However, most of the following also applies to the analysis of other ordinal data.

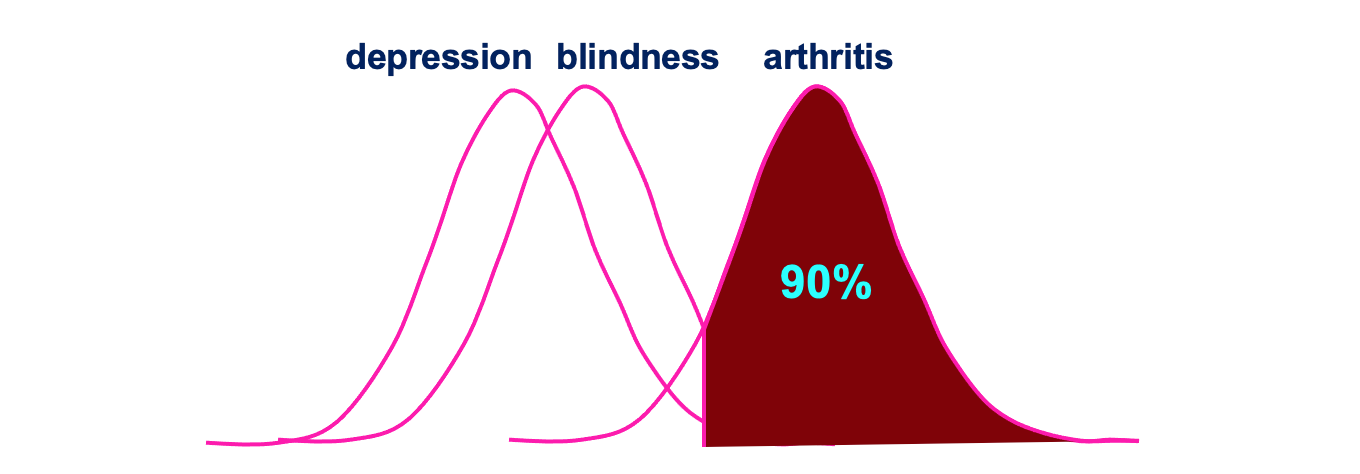

The roots of DCE lie in Thurstone’s (1927) law of comparative judgement, later developed into random utility theory (e.g., Bradley & Terry, 1952; McFadden, 1974). The key assumption is that “discriminal processes” (e.g., a choice of which of two objects is heavier, or which of two health states is better) are influenced both by systematic factors (e.g., the actual weight of the objects, or the mean value of a health state on the respondent’s latent utility function) and random error. On repeated sampling, the probability of choosing A over B reflects not just the order on the latent scale (weight, utility, health, etc.) but the distance between them. This allows each item to be placed on an interval scale. When applied to populations, each “sample” represents the choice of a different individual, and the results are on the latent utility scale of the population as a whole. (For a fuller explanation, see Brazier et al., 2017, ch. 6.)

An illustration of how ordinal responses can be placed on an interval scale. In this (hypothetical) case, 90% of respondents thought arthritis was better than blindness, and almost all thought arthritis was better than depression, but only 70% thought blindness was better than depression. (From lecture slides by Donna Rowen.)

As described, DCE has several advantages over the major alternatives outlined above:

- It’s less cognitively challenging than the TTO and SG, making it more suitable in vulnerable populations such as children and the elderly.

- It can usually be done online or by post, without an interviewer, making it cheaper to get large samples.

- It avoids some biases: attitudes to risk (SG); time preference and loss aversion (TTO); and end aversion and spreading (VAS).

- It uses the same tasks for better and worse than dead (see below for a discussion), making the values more comparable along the scale.

- It does not (necessarily) include explicit comparison with being dead, which both avoids potential biases this can introduce and makes it more appropriate for those who may become distressed by it.

- It can be combined with other aspects of a health state or its treatment, such as waiting period, being treated with respect, travelling time, and even cost. This can make it more relevant to real-life decision-making.

The key challenge, of course, is anchoring the interval data (showing the relative positions on the latent utility scale) to a ratio scale where 0 = dead and 1 = full health. Options for doing this are outlined in the next section on states worse than dead, but in brief, it seems like there are no solutions that maintain all of the putative advantages listed above, and no consensus on the best approach. This is an active area of research and I expect some progress in the coming years.

It’s also worth noting some more general criticisms:

- It may overstate the distance between some states, e.g., if almost all respondents think the EQ-5D-3L state 21111 (moderate mobility problems and no other problems) is worse than full health (11111), the former may get a much lower value than it ought to.

- It arguably lacks a theoretical basis in economics and EUT, e.g., choices are not made under uncertainty (though it can perhaps be modified to include risk: Robinson, Spencer, & Moffatt, 2015).

- It may not always be (much) less cognitively demanding than the TTO or SG: factoring in, say, all five levels of all five dimensions for two states on the EQ-5D-5L is not easy when one isn't obviously better than the other. Some anchoring methods increase the complexity.

- It’s unclear whether avoiding direct comparison to dead is an unalloyed benefit. If the resulting values are going to determine, say, whether extending a life is net negative or net positive, it may be desirable that respondents consider that implication explicitly.

- All methods for anchoring to the QALY scale require some states to be valued at 0 or below. That’s fine for generic instruments like the EQ-5D, but when used to value condition-specific measures for relatively mild problems (e.g., overactive bladder), there may be few such responses (Yang et al., 2009).

So, while the DCE is certainly promising, I’m not sure it’s yet been proven to be superior to the more established methods (beyond reducing costs).

Person tradeoff (PTO)

In the PTO (previously called equivalence of numbers), respondents trade off populations, in effect stating how many outcomes of one kind (e.g., curing depression) they consider as valuable as a fixed number of outcomes of another kind (e.g., saving the life of a young child).

In health economics, the PTO has fallen out of favour to the extent that it’s barely mentioned in recent reviews, perhaps because it’s no longer the primary method used to obtain disability weights for the DALY. Nevertheless, related methods have been employed by effective altruists in discussions of population ethics (e.g., Althaus, 2018), and by the Institute for Health Metrics & Evaluation to anchor disability weights to zero.

The Appendix contains a review of the PTO with a little more detail than provided above for the other methods. In brief:

- It's a cognitively quite different task from the others, e.g., because it involves consideration of others’ health (rather than one's own), and potentially distributional issues like equity. This could be considered a strength or a weakness, depending on the objective.

- The evidence base is relatively sparse and hard to interpret, partly because widely differing forms of task are used in the relevant studies. What does exist arguably suggests that it isn’t a very promising approach.

- But absence of evidence isn’t (always) evidence of absence, so further research could offer high value of information.

Other options

Much less common approaches include:

- Veil of ignorance: The respondent is presented with a description of two populations, and asked to which they would rather belong. One population contains a variable number of people in the target health state (e.g., severe asthma at age 40), and the other a fixed number in the reference health state (e.g., fatal disease at 40). The number in the target state is varied until the respondent is indifferent between the options. This may be considered a variant of the PTO or of the SG, depending on how the respondent thinks about the task. (Pinto‐Prades & Abellán‐Perpiñán, 2004; Shroufi et al., 2011)

- Magnitude estimation: The respondent states how many times worse one health state is than another (Torrance, 1986).

- Direct elicitation of the social welfare function: The respondent is directly asked about the relative importance of the dimensions and levels of an MAUI, including interactions between them (Devlin et al., 2019).

- Hybrid methods: Data from two or more methods are factored into the calculation of weights, e.g., some EQ-5D-5L value sets have combined DCE and TTO data using a common likelihood function and a Bayesian approach (Devlin et al., 2018).

- Consumption: The monetary value of health outcomes is estimated using a human capital approach (lost future earnings), revealed preferences (how much people in fact pay for the benefit in the market), or stated preferences (how much they are hypothetically willing to pay to avoid a problem). This is mainly used in cost-benefit (as distinct from cost-effectiveness) analysis (see my footnote in Part 1).

As with descriptive systems, there is already a lot of literature debating the merits and implications of the leading approaches (for reviews, see Brazier et al., 2017, ch. 4; Green, Brazier, & Deverill, 2000; Ryan et al., 2001; Drummond et al., 2015, chs. 5–6). However, it could be worth investigating some of the newer or currently unpopular methods in a bit more depth.

Possible research questions include:

- When, if ever, is the PTO the best option?

- Review of the literature since Green (2000).

- More empirical studies, perhaps working towards a more standardized format.

- Are other uncommon approaches worth considering?

- Again, a literature review and/or empirical work on magnitude estimation, veil of ignorance, direct elicitation, hybrid methods, monetary valuation, and anything else on the horizon could be illuminating.

- How should ordinal data be anchored to the HALY scale? (See discussion below.)

- This is less neglected, but potentially still impactful as the DCE becomes increasingly widespread.

- Is it worth reconsidering the VAS?

- How important is it for the task to be choice-based?

- This is relevant to wellbeing research, too (see later posts in this sequence).

- Is the theoretical basis and psychometric performance really that much worse than the alternatives, with all their flaws?

- How important is it for the task to be choice-based?

How should we handle extremely poor (and good) states?

As briefly discussed in Part 1, I think QALYs and DALYs do a particularly poor job of quantifying the disvalue from very poor health states, especially those that may be considered worse than being dead (WTD). Here I will outline the methods commonly used, the rationales typically provided, potential problems with them, and some possible avenues for improvement. More fundamental discussion about the possible asymmetry of positive and negative experiences will be left to Part 4.

The tasks used to elicit values for states worse than dead (SWTD) are naturally related to those for states better than dead (SBTD) described in the previous section, and likewise come in many variations. Below I outline just a few of the more common ones for VAS, SG, and TTO.

Visual analog scale

To ensure VAS values can be anchored to the 0–1 QALY scale, respondents are typically asked to place dead on the same scale as the state(s) being valued. This also allows the valuation of SWTD. The “raw” values (often on a 0–100 scale), whether better than dead (BTD) or WTD, are then transformed as follows:

- Adjusted value of h = Raw(h) – Raw(dead) / Raw(max) – Raw(dead)

where Raw(h) is the original rating (e.g., between 0 and 100) for the target health state, Raw(dead) is the original rating for being dead, and Raw(max) is the top of the scale (e.g., 100). For example, with a dead point of 30/100 and a rating for h of 20/100, the final value of h would be: (20 – 30) / (100 – 30) = -0.142. While technically unbounded below 0, this method should normally result in less extreme negative values: even where Raw(dead) = 70/100 and Raw(h) = 0/100, the “utility” of h would only be (0 – 70) / (100 – 70) = -2.33.

It’s unclear, however, whether this is an advantage or disadvantage. Presumably most people will place dead in the bottom half of the scale (in one study it was around 10/100 on average: Richardson & Hawthorne, 2001), which does not, in my view, leave enough room to capture the worst health states—particularly given the end-aversion phenomenon. This absence of a tradeoff may also be particularly problematic here: with a dead point of, say, 30/100, would they really be willing to endure a year at 0/100 (extreme pain, depression, etc.), to gain a year at 61/100? Pushing the other way: if faced with a year at 25/100 followed by death, would they really prefer immediate death? Whether “concealing” the implications of their decisions results in “better” values is a normative as well as a psychometric question. (This is also relevant to the use of wellbeing scales, discussed in Part 4.)

Time tradeoff

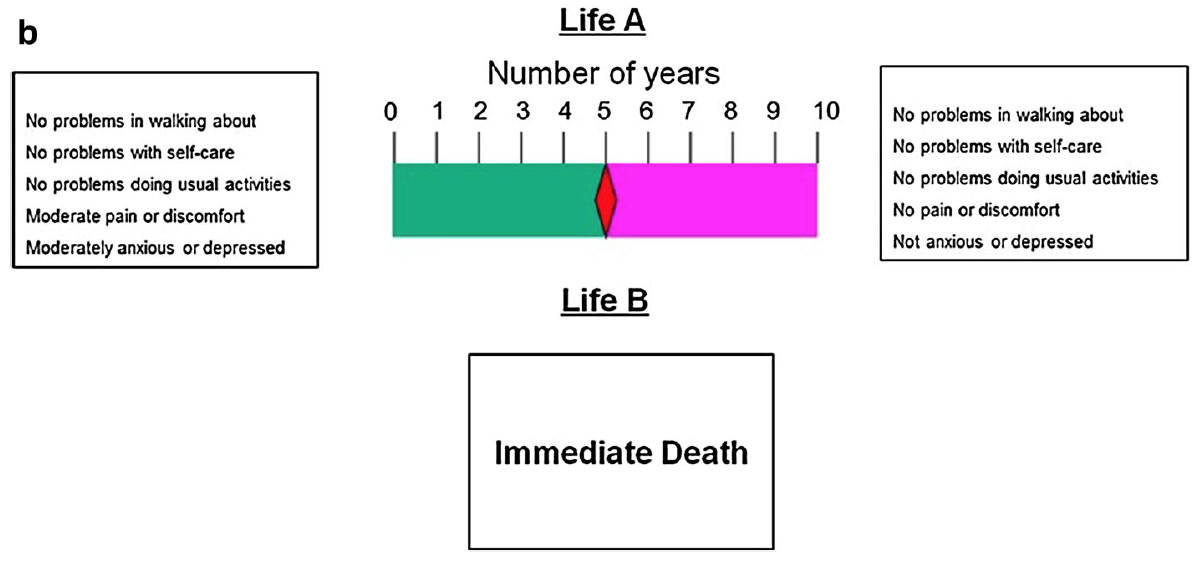

As described in Part 1, the Measuring and Valuing Health (MVH) protocol used for the UK EQ-5D-3L value set (and many others) uses a substantively different kind of time tradeoff for SWTD. Whereas the BTD task involves varying the duration in perfect health until it is equivalent to 10 years in the target health state h, the WTD task provides 10 years in total, to be shared between full health and h. The respondent must therefore simultaneously vary the duration in h and the duration in perfect health until she is indifferent between that life and immediate death.

Visual aid (“time board”) for worse-than-dead states, used by the MVH TTO protocol. From Oppe et al. (2016).

Because the tasks are so different, the validity of combining them on the same scale is in doubt. Moreover, the minimum value is determined by the fairly arbitrary choice of time increment: if 1 year, the minimum is -9; if 1 month, it’s -119.[6] (In the MVH protocol, it was three months, giving a lower bound of -39.)

To make matters worse, it became standard practice in the mid-1990s to rescale those responses to fit a lower bound of -1, using one of a few different methods that give considerably different results (Lamers, 2007). While acknowledging the lack of theoretical basis for this, analysts gave a number of justifications (see e.g., Tilling et al., 2010; Dolan, 1997; Lamers, 2007; Patrick et al., 1994):

- Convenience: It made it easier to carry out statistical modeling.

- Comprehension: Respondents may have misunderstood the task, assuming it was more like the BTD task. If so, transforming them may better reflect their true preferences, at least on aggregate.

- Plausibility:

- Some implied values (e.g., a lower bound of -39 for the UK EQ-5D-3L value set) were considered unrealistic. Richardson and Hawthorne (2001) even claimed that it was impossible to experience a state as bad as -1, and instead used a bound of -0.25 for the AQoL.[7]

- Unbounded negative values dominate calculations: a state can receive a negative mean value even when only a small minority of respondents consider it WTD, which doesn’t seem right. (One response is to use medians, but that brings its own problems.)

- Symmetry: The arbitrary figure of -1 was apparently “motivated by an equal range for positive and negative utilities” (Lamers, 2007), though it's unclear to me why this would be considered an advantage.

In response to these problems, more recent protocols, most notably the EuroQol Valuation Technology (EQ-VT), tend to use a variant of the “lead time” TTO (LT-TTO) (Robinson & Spencer, 2006; Devlin et al., 2010; Oppe et al., 2016; Oppe et al., 2014).[8] This presents a choice between Life A, a variable time in perfect health, and Life B, a fixed period in perfect health (the lead time) followed by a fixed period in h. The period in Life A is varied until the respondent is indifferent between the two lives. In effect, it’s asking: How much time in perfect health would you require as compensation for living the stated period in the target state?[9]

The visual aids used in the EQ-VT protocol for states worse than dead. From Oppe et al. (2016). In this example, the utility of the target state is (5 – 10) / (20 –10) = -0.5.

This approach is preferable because it produces utilities on the same scale as SBTD. However, it’s hard for some respondents to understand and is still subject to major framing effects. Most notably, results are sensitive to the duration of lead time: in a non-representative sample, Devlin et al. (2013) found a minimum value of about -1.5 when using a 2:1 ratio (e.g., 10 years lead time followed by 5 years in the target state), but about -4 when using a 5:1 ratio (though it did not affect the proportion of states deemed WTD). Around 3–5% of respondents exhausted all of their lead time, suggesting they would have given even lower values had it been possible. This is particularly concerning given that the EQ-VT (Oppe et al., 2014), which is often used to value the EQ-5D-5L, uses a 1:1 ratio (as shown in the image above).[10]

Moreover, nearly half of respondents reported taking into account the possibility that they would partially recover from the illness during the period in the target state (contrary to instructions). This would artificially raise their value for that state, because in effect they would be assessing the badness of a less severe condition.

Taken together, these findings suggests that the most common variants of the TTO for SWTD still generate minimum utilities that are far higher (healthier) than the actual preferences of the general public.

Standard gamble

The SG task for SWTD is identical to the task for SBTD, except that immediate death and the target health state h exchange places. That is, the possible outcomes of the gamble in Alternative 1 are (a) returning to full health for 10 years and (b) remaining in h for 10 years (rather than (a) full health versus (b) immediate death). Alternative 2 is certain immediate death (rather than certain 10 years in h). The probability p of being restored to full health is varied until the respondent is indifferent between the alternatives, and the utility is calculated as -p/(1 – p).

The standard gamble for states worse than dead. In this case, the respondent is willing to risk a 20% chance of 10 years in severe pain to avoid immediate death, giving a QALY value of -0.8/(1 – 0.8) = -4. (Image adapted from lecture slides by Tessa Peasgood.)[11]

However, this puts SG utilities on a scale of minus infinity to +1. As discussed above, some believe this results in implausible values, gives undue weight to negative values when calculating means, and presents difficulties for statistical analysis. Thus, as with the TTO, values are typically transformed to fit a lower bound of -1, often by simply using the negative of the indifference probability of the best outcome, i.e., -p (= -0.8 in the above example).

Discrete choice experiments

DCEs have some potential advantages when valuing SWTD. For instance, they tend to be less cognitively challenging than the TTO and SG (especially the variants for SWTD), can often be administered online without the support of an interviewer (thereby reducing costs), and can avoid “focusing effects” (e.g., instinctive aversion to death that increases values for poor states). They also use the same task for SWTD and SBTD, potentially providing better comparability across negative and positive values.

The key challenge with using DCEs (and other ordinal methods) for valuing HALYs is anchoring values to 0 and 1, which of course is also needed for handling SWTD. For generating QALYs, Rowen, Brazier, & Van Hout (2014) compared five methods to the TTO, to which I’ve added a sixth by Bansback et al. (2012):

1. Worst state = 0

The first is to assume the worst state valued (e.g., 55555 on the EQ-5D-3L) is equivalent to being dead (i.e., QALY = 0). This naturally makes SWTD impossible. While this may be compatible with some theoretical approaches to health (e.g., capability, or “functional efficiency”) there is no empirical support and it violates “common sense”: people clearly do consider some states WTD. So I don’t think this is a serious candidate.

2. Include “dead” in the DCE exercise

The second adds immediate death as one option in some choice pairs, and normalizes coefficients so that dead achieves the predicted value of zero. This is easier and cheaper to administer than most options below, but reintroduces potential focusing effects. This method has predicted SG health state values for the SF-6D and Health Utility Index pretty well (McCabe et al., 2006) but produced higher values than the TTO (Rowen, Brazier, & Van Hout, 2014). It also presents difficulties with interpreting responses from participants who do not value any states as WTD (Flynn et al., 2008): the location of their values on a HALY scale (i.e., in relation to 0) cannot easily be inferred.

3. Anchor to TTO for the worst state

The third is to normalize the coefficients using the TTO value for the worst state. In other words, the worst DCE value is anchored to the worst TTO value. This naturally requires carrying out the TTO alongside the DCE (albeit with a smaller sample), which makes it costly and time-consuming, and subject to all the usual problems with the TTO (time preference, cognitive complexity, etc.) that the DCE was designed to avoid. Due to its reliance on a single state, it produced values that were higher (healthier) and less dispersed (smaller range) than option 2 in Brazier et al. (2012), and higher than TTO in Rowen, Brazier, & Van Hout (2014).

4. Mapping DCE values to TTO

The fourth is to develop an algorithm to map (“crosswalk” in some literature) from mean DCE values to mean TTO (or SG, VAS, etc.) values for a small number of states (e.g., 10–20). This naturally assumes that the TTO values are “correct,” and the validity of the algorithm is assessed by extent to which the results match, so unsurprisingly the results are very similar. Obviously, this is not a good solution if, as I believe, there are serious problems with the TTO. The only advantage over just using TTO seems to be cost: a small TTO study plus large DCE is cheaper than a large TTO.

5. Hybrid models

TTO and DCE data can be combined using a common likelihood function and/or a Bayesian approach, which makes use of individual response data (rather than just mean values). This has been found to “perform well,” and was used in the valuation of the EQ-5D-5L (Devlin et al, 2018). But again, it presupposes the validity of the TTO responses, which I think we should be trying to avoid.

6. DCE with duration (DCETTO)

The final option, sometimes called the DCETTO, is like a normal DCE but with duration added as an attribute of the health state:

Discrete choice experiment using two EQ-5D-3L states plus duration. (From Bansback et al., 2012.)

Like in a TTO exercise, this information can be used to determine how much life expectancy the respondent is willing to give up so that the change in health state leaves the utility equal between the two options. In a study by Bansback et al. (2012) this generally performed well, e.g., most responses were logically consistent, but it lacks some key advantages of the regular DCE: it is about as cognitively challenging as the TTO, and uses a similar amount of resources due to the large sample required and complex study design and analysis. (See also Xie et al., 2020.) Overall, it produced values similar to TTO for mild states, but much lower for severe ones. Along with option 2 (including the state dead in choice pairs) the DCETTO is one of the few approaches that has the potential to address some (but not all) of the key problems with standard methods.

The differences between the values produced by the various kinds of TTO, SG, VAS and DCE are not trivial. However, the minimum value in every widely-used value set that I’m aware of is remarkably high, ranging from -0.59 for the UK EQ-5D-3L to +0.29 for the original SF-6D.[12] This implies that it would not be worth foregoing even one day in full health (which may not even be happy) to avoid a day of the most intense health-related suffering—confined to bed with unrelenting extreme pain, depression, and anxiety. For reasons I explain further in Part 4, I find this highly implausible and problematic, and it could lead to a grossly inefficient allocation of resources, particularly in countries with poor access to pain relief and mental health treatment (Knaul et al., 2017; Patel et al., 2018).

Suppose, for example, that the “correct” weight for the most severe cases of depression is -4 but the current weight for that state is 0.5. The value of bringing an extremely depressed person to 1 (full health) will be underestimated by a factor of ten—5 QALYs versus 0.5—which in an “efficient” health system based on cost-effectiveness would cause it to receive far too few resources. The overall burden of extreme depression would also be massively underestimated, which affects how much attention it gets from policy-makers, biomedical researchers, non-profit organizations, the media, the general public, and so on.

There may be two additional problems with current methods. First, some states may be wrongly classified as WTD. Because people tend to overestimate the badness (at least in terms of subjective wellbeing) of most physical illnesses and disabilities, particularly those affecting mobility, the values for some states are too low. As noted in Part 1, one study found that nearly half of respondents in states with negative EQ-5D-3L values reported themselves to be “quite satisfied,” “satisfied,” or even “very satisfied” with their lives overall (Bernfort et al., 2018). Yet extending their lives would be considered to have negative value based on some of the most popular health metrics.

Second, there may also be problems at the top of the scale. QALY 1 refers to full health, or maximum health-related quality of life, but is indifferent to improvements beyond the reduction of morbidity and mortality. There is surely some benefit in, say, being able to run a marathon rather than just walk around the park, so even from a “functional” perspective, common health utility instruments do not capture the full range of ability. Perhaps more importantly, there is also value in joy, pleasure, satisfaction, and other positive experiences that these metrics ignore.

A top priority, then, seems to be improving the way “extreme” states are handled. Some potential projects include:

- Promoting general improvements, such as those discussed above related to the selection of descriptive systems and valuation methods.

- Taking into account more psychosocial aspects of life, and incorporating the judgements of people with relevant experience, would likely lead to more accurate utilities on all parts of the scale, and extend the scale to describe a wider range of good and bad lives.

- A thorough review of common methods for handling SWTD in health economics. Surprisingly, I haven’t seen anything like this; the closest I’ve found is Tilling et al. (2010), an outdated review of TTO-based methods for SWTD, and a brief overview in the standard textbook (Brazier et al., 2017). It could address questions such as:

- How do results for SWTD differ across common methods (TTO, DCE, DG, etc.)

- What explains the differences?

- E.g. are they mostly due to the type of task/protocol or to statistical modeling decisions?

- Which seems most valid?

- Is the answer different for SWTD and SBTD?

- If so, it is feasible/reasonable to use different methods for either side of 0?

- How strong is the theoretical basis for each approach?

- To what extent are preferences “distorted” by (lack of) explicit comparison with being dead?

- What normative and psychometric questions have to be addressed to answer this?

- Is the answer different for SWTD and SBTD?

- What methodological developments are on the horizon?

- It might to good to focus on the DCE, ordinal methods in general, and hybrid models, as they are increasingly popular (and relevant to DALYs).

- What are the practical implications of the differences/reforms? How much would they actually change healthcare priorities?

- A review of less common methods, e.g.

- How are SWTD handled in person tradeoffs, magnitude estimation, and elicitation of the social welfare function?

- Is there any reason to (dis)prefer these over the more common ones?

- Can SWTD be addressed at the aggregation stage, e.g., by giving extra weight to QALYs gained by people in severe states (see below).

- An analysis of relevant policy issues, e.g.

- How and why were current methods chosen?

- Who are the key players in these decisions?

- How can the direction be influenced? (See below.)

- E.g. is it more tractable to focus on middle-income countries that are just developing their health technology assessment procedures, or on the “key players” in high-income countries?

- E.g. should we try to develop novel methods, or tweak more established processes like the EuroQol protocols?

- Advocating for specific changes, e.g.

- Adding more lead time to the LT-TTO may not require other major reforms, though it’s not without its challenges (Devlin et al., 2013).

- Further empirical research, e.g.

- Testing different amounts of lead time.

- It may even be worth experimenting with unlimited lead time, as well as with ratios such as 2:1, 5:1, 10:1 and 100:1

- Testing the effect of other methodological choices, such as the use of medians rather than means.

- Qualitative research on the plausibility/validity of extreme values.

- Do people “really mean it” when they give responses that imply values like -39 or minus infinity?

- Do they “really mean it” when they give quite high values for seemingly terrible states?

- Testing different amounts of lead time.

- Reflection on the appropriate purpose and scope of HALYs, e.g.

- Is it reasonable to expect them to capture the very worst/best (health-related) states, rather than a more “typical” range of experiences?

- E.g. if a QALY weight below -1 obtains for only, say, one in a thousand patients, and allowing lower values causes measurement problems in the rest of the scale, is it reasonable to “cap” values at -1? What if it’s one in a hundred, or one in a million?

- If not, are there ways of acknowledging more extreme cases—such as by using different outcome measures—and factoring those into decision-making?

- Is it reasonable to expect them to capture the very worst/best (health-related) states, rather than a more “typical” range of experiences?

Any work on this would also ideally consider broader issues around the (a)symmetry of valenced experience and the measurement of extreme suffering discussed in Part 4 of this sequence.

The above discussion has focused on the QALY. This is largely because, as noted in Part 1, the DALY does not even recognize SWTD—a conscious choice based, at least in part, on the desire to measure disability (defined in terms of loss of function, roughly speaking) rather than quality of life or “utility.” However, this could, in principle, change in future, either by allowing values to rise above 1 (= dead) or by switching the direction of the scale to match the QALY. In that case, the above research ideas would mostly be applicable to the DALY as well. Given the level of institutional inertia and active resistance to such changes, it may be good to start by looking into the policy aspects, e.g.

- How and why did the DALY come to be constructed this way?

- Who would need to be persuaded for there to be fundamental reforms?

- How could they be persuaded?

- How tractable are such changes? On what kind of time frame?

It’s also perhaps worth looking at how the “dead” anchor is established in the current DALY. To recap from Part 1: the main valuation procedure used pairwise comparisons (arguably a form of DCE), whereby respondents choose the “healthiest” of the two states. As with the DCE, the analysis of this ordinal data is rooted in random utility theory, which essentially claims that the frequency with which an item is chosen indicates its value relative to the alternative. To put these values on the DALY scale (which, like the QALY, arguably has ratio properties), some respondents were also asked “population health equivalence” questions (similar to PTO):

Imagine there were two different health programs.

The first program prevented 1000 people from getting an illness that causes rapid death.

The second program prevented [Number selected randomly from {1500, 2000, 3000, 5000, 10 000}] people from getting an illness that is not fatal but causes the following lifelong health problems: [Lay description for randomly selected health state inserted here, for example, “Some difficulty in moving around, and in using the hands for lifting and holding things, dressing and grooming.”].

Which program would you say produced the greater overall population health benefit?

These enabled inferences to be made about the badness of death versus living with various conditions.[13]

I don’t feel qualified to hold a strong opinion on the merits of this approach. Given that SWTD are excluded anyway, and that disability weights tend to be otherwise similar to the QALY equivalents, I suspect that developing and promoting another anchoring method should not be a high priority. However, having not looked into this in depth, I may well be mistaken.

Who should provide the values?

Whose preferences are used may matter even more than the choice of descriptive system and valuation methodology (Brazier et al., 2017, pp. 77–84; Brazier et al., 2018; Cubi-Molla, Shah, & Burström, 2018). In early versions of the DALY, disability weights were obtained from a panel of “experts,” mostly clinicians (Chen et al., 2015). In a few countries, such as Sweden and Germany (Leidl & Reitmeir, 2017), the assessment agencies require “experience-based” preferences for QALYs. These are typically from patients[14] valuing their current state using a standard method (TTO, SG, VAS, etc.), but other kinds of experience are possible (e.g., past, future, and vicarious: see Cubí-Mollá, Shah, & Burström, 2018 for a helpful framework). However, in recent years, both QALY and DALY weights have usually come from representative samples of the general public, most of whom have not experienced most of the states being valued; this is a requirement of most relevant agencies, including NICE.

The choice of “experienced” versus “hypothetical” preferences does seem to affect the resulting values. In general, experienced QALY weights are higher (healthier), especially for mobility-related dimensions (Cubí-Mollá, Shah, & Burström, 2018; Peeters & Stigglebout, 2010), although one meta-analysis found no difference when the standard gamble was used (Peeters & Stigglebout, 2010). However, the reverse seems to hold for at least some mental health conditions, with patients (and sometimes carers) providing lower values for dementia (Rowen et al., 2015), anxiety and depression (Pyne et al., 2009; Schaffer et al., 2002; Papageorgiou et al., 2015), and schizophrenia (Aceituno et al., 2020).

Three main reasons have been offered to explain the discrepancy between experienced and hypothetical values (Brazier et al., 2017, pp. 78–9):

- Inadequate descriptions (or inadequate imaginations): Any descriptive system, not just an MAUI like the EQ-5D, will only cover a few aspects of life with the condition. The (non-experienced) respondent will then focus on these (usually negative) elements, and “fill in the gaps” with their assumptions about other areas of life, which may not be accurate.

- Adaptation: In valuation tasks, people may focus on what it’s like to initially acquire the health condition, rather than to live with it for an extended period. In general, people don’t fully recover from a major adverse event (Cubí-Mollá, Jofre-Bonet, & Serra-Sastre, 2017; Howley & O’Neill, 2018; Lucas, 2007; Luhmann & Intelisano, 2018; Oswald & Powdthavee, 2008; Powdthavee, 2009), but they do tend to underpredict the extent to which they will adapt (Kahneman, 2000; Gilbert & Wilson, 2000; McTaggart-Cowan et al. 2011; Dolan, 2011; Karimi et al., 2017). This can involve both practical changes, such as learning to walk with a stick or taking up new hobbies, and psychological ones, such as lowering expectations, changing one’s view of what matters in life, or simply focusing less on the health problem. Note that this is not a measurement problem: adaptation causes the actual (not just perceived) wellbeing to improve relative to predictions, which may be seen as positive. However, as noted in Part 1, people tend to underpredict the severity of some mental disorders (e.g., Pyne et al., 2009; Schaffer et al., 2002; Papageorgiou et al., 2015): by their very nature, these conditions put distressing thoughts and feelings at front of mind, making them inherently resistant to adaptation.

- Response shift (also called scale recalibration): Patients may have lower internal standards for what counts as “healthy” or “good quality of life,” perhaps due to lowered expectations (Sprangers & Schwartz, 1999). For instance, someone with cystic fibrosis may compare themselves to others with the same condition, rather than to completely healthy individuals, whether or not they have adapted. That is, there could be a real difference in quality of life between people who give the same values, which is potentially a serious (and hard-to-quantify) measurement problem.

There has been considerable debate over the appropriate source of utilities. Below is a brief summary of the cases for and against public preferences and own-state (e.g., patient) values (adapted from: Brazier et al., 2017, p. 89; Helgesson et al., 2020; Brazier et al., 2018; Brazier et al., 2005).

Using preferences of the general population

For:

- The “veil of ignorance” argument was advocated by the Washington Panel on Cost-Effectiveness in Health and Medicine: a lack of “vested interest” was considered important (Gold et al., 1996).

- Public funding (in the form of taxation, for example) can essentially be seen as public insurance and so it may seem appropriate that ex ante public preferences determine health states. In other words, since the general population will be supplying the resources, they should be the ones determining how they are used. This has some support in the extra-welfarist tradition discussed in Part 1 (which often seeks to use “social” rather than individual values).

- Whatever the normative arguments, the use of population values may help generate public support for decisions based on cost-effectiveness analysis.

- It’s more straightforward to obtain large amounts of (by some measures) high quality data: it's easier to survey a simple random sample of adults than large numbers of patients with the relevant conditions.

Against:

- Members of the general population generally have little or no first-hand experience of the health states being valued, making them especially vulnerable to cognitive biases, misunderstandings, and other errors.

- While members of the public want to be involved in healthcare decision making, it’s not clear that they want to be asked to value health states specifically (Litva et al. 2002). There are other ways of involving the public, as noted below.

Using experience-based (own-state) values

For:

- Patients know their own health state better than anyone trying to imagine it, so in most cases it’s reasonable to assume that their values more accurately represent its severity.

- It’s the well-being of the patient that we’re interested in, since ultimately it’s (primarily) the patients who will be the losers and gainers from a public program. This is in keeping with welfare economics, discussed in Part 1, in which welfare is understood as the aggregation of individual (“consumer”) utilities (although many members of the public will also consume healthcare at some point, so it isn’t entirely clear welfarism implies the use of own-state values). It’s also consistent with some other “maximizing” theories, such as classical utilitarianism, given certain assumptions (e.g., that all relevant effects are on the patient rather than, say, relatives—or at least that the spillover effects correlate more strongly with patient than public preferences).

Against:

- Patients may behave strategically; for example, they may exaggerate the severity of their condition in order to obtain better treatment. Consciously or not, they do tend to rate conditions similar to their own as more severe (Álvarez & Rodríguez-Míguez, 2011).

- There are significant practical challenges. Some patients are unable (e.g., in severe dementia) or unwilling to provide values—or it may be unethical to ask, such as in terminal conditions when it may cause distress. This can lead to small and unrepresentative samples in poor health states (which are perhaps the ones that would benefit most from own-state valuation)—although this problem can be mitigated with careful methodological choices.

- Patients may be “penalized” for adapting to a health problem. Some non-utilitarians (e.g., Sen, 1997, pp. 45–46)[15] argue that some kinds of psychological adaptation, such as lowering expectations, should not count against the patient when it comes to resource allocation, even if it would lessen the (experienced) utility from treatment.

- Patients may be “penalized” for having a different subjective scale (e.g., due to response shift). For instance, if people rate their mobility in comparison to others of their age group, older people may consider themselves to have “moderate problems” and younger people “severe problems” for the same objective level of mobility. Interventions that alleviate mobility problems in older people may therefore seem relatively less cost-effective.

- Own-state valuation using some common preference elicitation tasks (TTO, SG, etc.) are not “experienced utility” in the Kahneman or Benthamite sense. They still involve imagining future health (e.g., staying in the current health state for 10 years), and thus may be vulnerable to many of the same “biases” as with valuation of hypothetical states, e.g., the patient may focus on the health state rather than other aspects of life, presume some change in severity over time, fail to grasp small probabilities, or have “irrational” time preferences—and, of course, they will still have to imagine the “full health” and “being dead” comparators. (VAS may avoid some of these issues, but has other problems, as noted above.) They could also be subject to additional distortions, such as the “negativity bias” associated with depression, so it is not impossible that some are less accurate than their hypothetical equivalents.

For these reasons, some advocate a “middle way” that better accounts for patient experience without relying entirely on own-state valuation. Options (adapted from Brazier et al., 2018) include:

- Improve the descriptive systems, e.g., include wellbeing dimensions that better reflect the impact on the lives of those experiencing the health states. This is discussed above. Of course, it’s also possible to combine a better descriptive system with patient preferences (something which I’d tentatively endorse for some purposes).

- Encourage more deliberation and reflection in the task, perhaps alongside more information. For example, in small-scale studies general population respondents have been given more detailed descriptions of life with the condition (audio, video, photographs), including information on adaptation; been told patients’ own-state TTO values; participated in simulations (such as goggles for vision problems); and been allowed to discuss potential responses in small groups. Results have been mixed, with some but not all leading to values closer to patients’ own (McTaggart-Cowen, 2011; Murphy et al., 2020). An alternative is using “citizens’ juries” or multi-criteria decision analysis (MCDA) to elicit utilities, which involve a small group of non-experts considering a particular policy issue in depth. It’s unclear how practical this is for generating whole value sets.

- Provide decision-makers with two incremental cost effectiveness ratios or net benefit values using (a) general population hypothetical values and (b) own health state values. This would increase the burden on analysts, and there would still be a need for some principled weighting of the two findings, so it does not seem like a sustainable solution.

- Use subjective well-being to reweight an existing health state classification system, such as the EQ-5D. Common criticisms include the fact it isn’t preference-based (and so may go against the wishes of the taxpayer and/or patients), and is not easily anchored to the 0–1 scale. This option is discussed in Part 3.

Overall, I’m moderately confident that experience-based values are, in many cases, preferable to the purely hypothetical values currently used for most value sets, but they have a lot more limitations than I realized at first. Given that most agencies are unlikely to accept experience-based utilities any time soon, it may be worth looking into ways of improving elicitations from the general population.

Some possible research questions include:

- How important is the value source relative to other factors (e.g., choice of MAUI and valuation task)?

- This may be available in, or deducible from, existing literature.

- How much more accurate are “experience-based” values? How does this vary by condition, type of experience (e.g., past, current, caring for a patient), and other factors? What is the “gold standard” for determining accuracy?

- What are the best ways of improving hypothetical preferences?

- Review of “informed preference” studies, i.e., an update of McTaggart-Cowen (2011).

- New empirical studies, or replications of previous ones.

- Would recent advances in virtual reality technology help?

- Is there some way of combining own-state and general public preferences, or using each more selectively?

- E.g. could we use own-state values only for conditions that are particularly hard for the general public to understand?

- E.g. could we use public values only when it is hard to get meaningful own-state values?

- E.g. could we take a weighted average of own-state and public preferences?

- How would we determine the weighting?

- Does the general public actually want to provide the values, if they are given evidence that they are misleading?

- What would it take to persuade institutions like NICE and IHME to use own-state values?

How should we handle spillover effects?

Becoming sick or disabled has implications beyond the individual patient. Most obviously, carers and family members can experience increased mental health problems, financial distress, and disruption to work, school, sleep, and social activities (for examples, see Peasgood, Foster, & Dolan, 2019, p. 39). They can also experience benefits, such as a sense of fulfilment (Cohen, Colantonio, & Vernich, 2000; Mackenzie & Greenwood, 2012) and even reduced mortality (Brown et al., 2003). Beyond the family, health problems can have implications for crime, antisocial behaviour, productivity, expenditure on a range of public services, and so on. If we take a consequentialist or (quasi-)welfarist perspective, which seeks to maximize the amount of “good” done with the available resources, it is important to capture these “indirect” effects in our economic assessments. Doing so is likely to have considerable impact on our priorities (Krol, Papenburg, & Exel, 2015).

Two broad, and not necessarily mutually exclusive, approaches to this have been taken so far. The first is to incorporate them into the numerator of the cost-effectiveness ratio; for example, by putting a dollar value on the time a relative spends caring for the patient, the income lost due to the condition, and the cost of future “unrelated” medical care (e.g., the cost of treatment for disease B that will only be incurred if treatment for disease A extends the patient’s life). This is often done in analyses that claim to be from a “societal” perspective (as opposed, for example, to a “payer” or “health service” perspective). It is often criticized on equity grounds; for instance, preventing morbidity and mortality among high-earners will look better than equivalent gains to the less “productive,” and counting future unrelated costs “biases” evaluations against the elderly and chronically ill. It also faces a number of practical measurement challenges, e.g., there are several competing approaches to measuring productivity loss, the value of informal care, and the value of intangibles such as travel time and leisure time; and there’s no consensus on which of these should be counted in the first place.

The second approach, then, is to add them to the denominator. The relatives, carers, etc., can be given the same questionnaire (e.g., EQ-5D) and their change in HALYs added to the patient’s. As with societal-perspective costing, this is not done consistently. But more fundamentally, it is clear that current health-focused metrics are not able to capture many of the most important indirect effects on either the patient or other members of society. This is one of the key motivations for the development of a wellbeing approach and “hybrid” health/wellbeing measures, most notably the E-QALY, which is explicitly designed for use with carers as well as patients.

However, there is a further concern that applies to any health or wellbeing measure, and to the translation of effects into costs, namely the risk of double counting. It seems to be widely assumed that respondents in valuation tasks consider only the impact of the health state on themselves, yet the limited available evidence suggests they often incorporate other-regarding factors as well. For example, in qualitative studies alongside time tradeoff and standard gamble exercises, participants considered a number of non-health consequences when valuing health states, including the potential for being a physical or psychological burden on others, ability to carry out parental duties, and the effect of bereavement on others (Baker & Robinson, 2004; Karimi, Brazier, & Paisley, 2017). At the same time, it seems reasonable to assume health state values do not fully reflect the consequences for the rest of society—something that would be impossible for most respondents to predict, even if they were wholly altruistic.

Thus, relying entirely on a valuation from the perspective of the patient is likely to underestimate the benefits of an intervention, whereas summing the HALYs of the patients and all others affected is likely to overestimate it. To give a simplified example: if having Disease A would cause a burden to one’s child (e.g., through caring responsibilities or disrupted education), one may be tempted to give up more time in the TTO (i.e., assign a lower QALY weight) to account for that—but this would not cover all negative effects on the child, let alone the rest of the family, carers, and the broader community. On the other hand, assessing the effect from the perspective of the child is likely to count not only the caring burden, but also the child’s preference for the parent to be better, which is already covered by the QALY weight for the patient. So counting just the parent’s valuation would underestimate the impact, but counting both the parent’s and the child’s would overestimate it. Similar issues are faced when dealing with costs, e.g., respondents may consider effects on income or leisure time when valuing the state, so subtracting them from the numerator as well could overestimate the benefits of treatment.[16]

This would seem to have major implications for the development, selection, and application of QALY and DALY instruments. For example, it seems possible (though perhaps unlikely) that health-focused measures based on judgements of the general public, like the DALY and most EQ-5D value sets, capture the total burden of an illness better than those that correlate more strongly with the subjective wellbeing of the patient—at least when they are not also administered to relatives, carers, etc. Blindness, for instance, seems to have surprisingly limited long-term consequences for the happiness of the blind person (Pinquart & Pfeiffer, 2017), but incurs significant costs to the rest of society through reduced productivity, caring requirements, etc. (Köberlein et al., 2013). If respondents take things like this into account when assigning values to conditions, or if by coincidence[17] conditions that cause the greatest overestimation of disutility for the individual tend to have the greatest indirect effects, narrowly health-focused measures could be superior to (quasi-)wellbeing measures from a wellbeing perspective in at least some scenarios.

Even within mental health it may substantially affect priorities. Schizophrenia and bipolar disorder, for instance, have much greater effects on families and communities than do depression and anxiety, so the overall disease burden and the cost-effectiveness of treatment may depend on how far these are taken into account.