Posts tagged community

Recent discussion

This is a cross-post, and you can see the original here, written in 2022. I am not the original author, but I thought it would be good for more EAs to know about this.

I am posting anonymously for obvious reasons, but I am a longstanding EA who is concerned about Torres's...

- SoGive works with major donors.

- As part of our work, we meet with several (10-30 per year) charities, generally ones recommended by evaluators we trust, or (occasionally) recommended by our own research.

- We learn a lot through these conversations. This suggests that we might want to publish our call notes so that others can also learn about the charities we speak with.

- Given that we take notes during the calls anyway, it might seem that it would be low cost for us to simply publish those. That impression is deceptive.

- There is a non-trivial time cost for us, partly because documents which are published are held to a higher standard than those which are purely internal, but mostly because of our relationship with the charities. We want them to feel confident that they can speak openly with us. This means not only an extra step in the process (i.e., sharing a draft with the organisation

Summary

- Where there’s overfishing, reducing fishing pressure or harvest rates — roughly the share of the population or biomass caught in a fishery per fishing period — actually allows more animals to be caught in the long run.

- Sustainable fishery management policies
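A minimal sketch of why the first summary point can hold, assuming the textbook logistic (Schaefer) surplus-production model rather than anything the post itself specifies. The growth rate `r`, carrying capacity `K`, and the harvest rates used below are hypothetical illustrative values, not data from the post.

```python
def equilibrium_biomass(r: float, K: float, h: float) -> float:
    """Steady-state biomass B* solving r*B*(1 - B/K) - h*B = 0.

    Assumes (hypothetically) logistic growth with intrinsic rate r and
    carrying capacity K, plus a constant harvest rate h, i.e. the fraction
    of the stock caught per fishing period.
    """
    if h >= r:
        return 0.0  # harvest outpaces regrowth, so the stock collapses
    return K * (1.0 - h / r)


def sustainable_yield(r: float, K: float, h: float) -> float:
    """Long-run catch per period at harvest rate h: Y = h * B*."""
    return h * equilibrium_biomass(r, K, h)


if __name__ == "__main__":
    r, K = 0.4, 1000.0  # hypothetical growth rate and carrying capacity
    for h in (0.35, 0.30, 0.20, 0.10):
        b = equilibrium_biomass(r, K, h)
        y = sustainable_yield(r, K, h)
        print(f"h={h:.2f}  B*={b:7.1f}  Y={y:6.1f}")
```

In this toy model, long-run yield Y = hK(1 - h/r) peaks at h = r/2 with Y = rK/4. Above that harvest rate (the overfished regime here), cutting h raises the long-run catch; below it, cutting h lowers the catch.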

The arguments in the summary alone are really solid.[1] And while I wasn't considering supporting sustainability in fishing anyway, I now believe it's more urgent to culturally/semiotically/associatively separate welfare from some strands of "environmentalism". Thanks!

Alas, I don't expect to work anywhere where this update becomes pivotal to my actions, but my practically relevant takeaway is: I will reproduce the arguments from this post (and/or link it) in contexts where people are discussing conjunctions/disjunctions between environmenta...

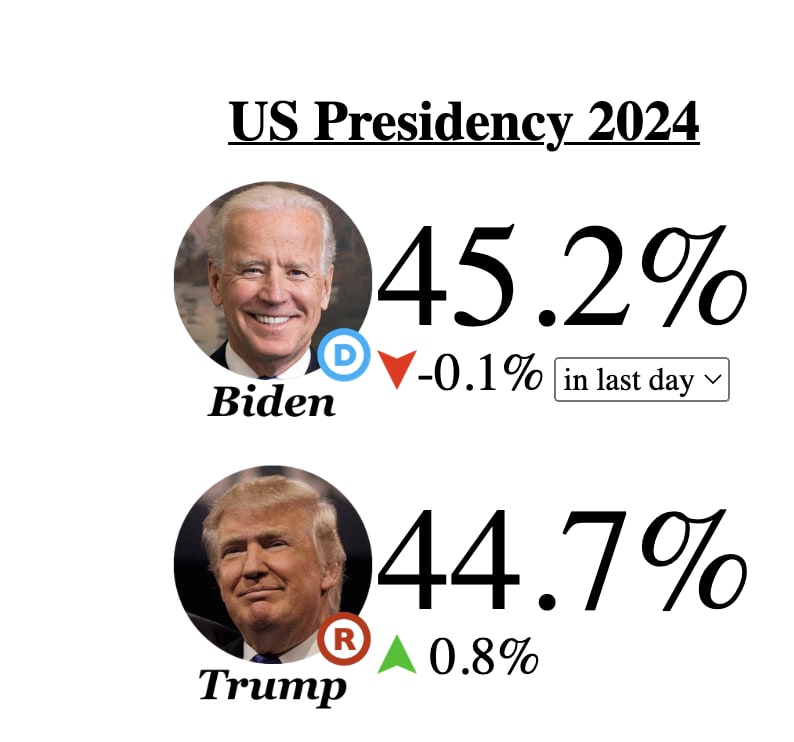

Trump recently said in an interview (https://time.com/6972973/biden-trump-bird-flu-covid/) that he would seek to disband the White House office for pandemic preparedness. Given that he usually doesn't give specifics on his policy positions, this seems like something he ...

The full quote suggests this is because he classifies Operation Warp Speed (reactive, targeted) as very different from the Office (wasteful, impossible to predict what you'll need, didn't work last time). I would classify this as a disagreement about means rather than ends.

One last question, Mr. President, because I know that your time is limited, and I appreciate your generosity. We have just reached the four-year anniversary of the COVID pandemic. One of your historic accomplishments was Operation Warp Speed. If we were to have another pandemic, would you take the same actions to manufacture and distribute a vaccine and get it in the arms of Americans as quickly as possible?

Trump: I did a phenomenal job. I appreciate the way you worded that question. So I have a very important Democrat friend, who probably votes for me, but I'm not 100% sure, because he's a serious Democrat, and he asked me about it. He said Operation Warp Speed was one of the greatest achievements in the history of government. What you did was incredible, the speed of it, and the, you know, it was supposed to take anywhere from five to 12 years, the whole thing. Not only that: the ventilators, the therapeutics, Regeneron and other things. I mean Regeneron was incredible. But therapeutics—everything. The overall—Operation Warp Speed, and you never talk about it. Democrats talk about it as if it’s the greatest achievement. So I don’t talk about it. I let others talk about it.

You know, you have strong opinions both ways on the vaccines. It's interesting. The Democrats love the vaccine. The Democrats. Only reason I don’t take credit for it. The Republicans, in many cases, don’t, although many of them got it, I can tell you. It’s very interesting. Some of the ones who talk the most. I said, “Well, you didn’t have it did you?” Well, actually he did, but you know, et cetera.

But Democrats think it’s an incredible, incredible achievement, and they wish they could take credit for it, and Republicans don’t. I don't bring it up. All I do is just, I do the right thing. And we've gotten actually a lot of credit for Operation Warp Speed. And the power and the speed was incredible. And don’t forget, when I said, nobody had any idea what this was. You know, we’re two and a half years, almost three years, nobody ever. Everybody thought of a pandemic as an ancient problem. No longer a modern problem, right? You know, you don't think of that? You hear about 1917 in Europe and all. You didn’t think that could happen. You learned if you could. But nobody saw that coming and we took over, and I’m not blaming the past administrations at all, because again, nobody saw it coming. But the cupboards were bare.

We had no gowns, we had no masks. We had no goggles, we had no medicines. We had no ventilators. We had nothing. The cupboards were totally bare. And I energized the country like nobody’s ever energized our country. A lot of people give us credit for that. Unfortunately, they’re mostly Democrats that give me the credit.

Well, sir, would you do the same thing again to get vaccines in the arms of Americans as quickly as possible, if it happened again in the next four years?

Trump: Well, there are the variations of it. I mean, you know, we also learned when that first came out, nobody had any idea what this was, this was something that nobody heard of. At that time, they didn’t call it Covid. They called it various names. Somehow they settled on Covid. It was the China virus, various other names.

But when this came along, nobody had any idea. All they knew was dust coming in from China. And there were bad things happening in China around Wuhan. You know, I predicted. I think you'd know this, but I was very strong on saying that this came from Wuhan. And it came from the Wuhan labs. And I said that from day one. Because I saw things that led me to believe that, very strongly led me to believe that. But I was right on that. A lot of people say that now that Trump really did get it right. A lot of people said, “Oh, it came from caves, or it came from other countries.” China was trying to convince people that it came from Italy and France, you know, first Italy, then France. I said, “No, it came from China, and it came from the Wuhan labs.” And that's where it ended up coming from. So you know, and I said that very early. I never said anything else actually. But I've been given a lot of credit for Operation Warp Speed. But most of that credit has come from Democrats. And I think a big portion of Republicans agree with it, too. But a lot of them don't want to say it. They don't want to talk about it.

So last follow-up: The Biden Administration created the Office of Pandemic Preparedness and Response Policy, a permanent office in the executive branch tasked with preparing for epidemics that have not yet emerged. You disbanded a similar office in 2018 that Obama had created. Would you disband Biden's office, too?

Trump: Well, he wants to spend a lot of money on something that you don't know if it's gonna be 100 years or 50 years or 25 years. And it's just a way of giving out pork. And, yeah, I probably would, because I think we've learned a lot and we can mobilize, you know, we can mobilize. A lot of the things that you do and a lot of the equipment that you buy is obsolete when you get hit with something. And as far as medicines, you know, these medicines are very different depending on what strains, depending on what type of flu or virus it may be. You know, things change so much. So, yeah, I think I would. It doesn't mean that we're not watching out for it all the time. But it's very hard to predict what's coming because there are a lot of variations of these pandemics. I mean, the variations are incredible, if you look at it. But we did a great job with the therapeutics. And, again, these therapeutics were specific to this, not for something else. So, no, I think it's just another—I think it sounds good politically, but I think it's a very expensive solution to something that won't work. You have to move quickly when you see it happening.

I'd say it's 50/50, but sure. And while politics is discouraged, I don't think your post is really what's being discouraged.

A crucial consideration in assessing the risks of advanced AI is the moral value we place on "unaligned" AIs—systems that do not share human preferences—which could emerge if we fail to make enough progress on technical alignment.

In this post I'll consider three potential...

Here are a few (long, but high-level) comments I have before responding to a few specific points that I still disagree with:

- I agree there are some weak reasons to think that humans are likely to be more utilitarian on average than unaligned AIs, for basically the reasons you talk about in your comment (I won't express individual agreement with all the points you gave that I agree with, but you should know that I agree with many of them).

However, I do not yet see any strong reasons supporting your view. (The main argument seems to be: AIs will be diff

This is a linkpost for Imitation Learning is Probably Existentially Safe by Michael Cohen and Marcus Hutter.

Abstract

...Concerns about extinction risk from AI vary among experts in the field. But AI encompasses a very broad category of algorithms. Perhaps some algorithms would

I agree, but I think very few people want to acquire e.g. $10T of resources without broad consent of others.

I think I simply disagree with the claim here. I think it's not true. I think many people would want to acquire $10T without the broad consent of others, if they had the ability to obtain such wealth (and they could actually spend it; here I'm assuming they actually control this quantity of resources and don't get penalized because of the fact it was acquired without the broad consent of others, because that would change the scenario). It may be tha...

This announcement was written by Toby Tremlett, but don’t worry, I won’t answer the questions for Lewis.

Lewis Bollard, Program Director of Farm Animal Welfare at Open Philanthropy, will be holding an AMA on Wednesday 8th of May. Put all your questions for him on this thread...

In your recent 80k podcast, almost all the work referenced seems to be targeted at the US and EU (except the Farm animal welfare in Asia section).

- What is the actual geographic target of the work that’s being funded?

- Is there work being done/planned to look at animal welfare funding opportunities more globally?

It’s happening. Our second international protest. The goal: convince the few powerful individuals (ministers) who will be visiting the next AI Safety Summit (the 22nd of May) to be the adults in the room. It’s up to us to make them understand that this shit is real, that they are the only ones who have the power to fix the problem. Join our discord (https://discord.gg/EFDQt6RBR7) to coordinate about the protests.

Check out the international protesting listing on our website for information about other locations: https://pauseai.info/2024-may

We just published an interview: Dean Spears on why babies are born small in Uttar Pradesh, and how to save their lives. Listen on Spotify or click through for other audio options, the transcript, and related links. Below are the episode summary and some key excerpts.

Episode summary

...I work in a place called Uttar Pradesh, which is a state in India with 240 million people. One in every 33 people in the whole world lives in Uttar Pradesh. It would be the fifth largest country if it were its own country. And if it were its own country, you’d probably know about its human development challenges, because it would have the highest neonatal mortality rate of any country except for South Sudan and Pakistan. Forty percent of children there are stunted. Only two-thirds of women are literate. So Uttar Pradesh is a place where there are lots of health challenges.

And then even within that, we’re working

Hi Mark,

I wonder if you'd be willing to do something along the lines of privately verifying that your identity is roughly as described in your post? I think this could be pretty straightforward, and might help a bunch in making things clear and low-drama. (At present you're stating that the claims about your identity are a fabrication, but there's no way for external parties to verify this.)

I think from something like a game-theoretic perspective, absent some verification it will be reasonable for observers to assume that Torres is correct that the anonymo...