Cross-posted on our website: https://www.convergenceanalysis.org/publications/ai-clarity-an-initial-research-agenda

Cross-posted on LessWrong: https://www.lesswrong.com/posts/324pQjqoHEHeF2vPs/ai-clarity-an-initial-research-agenda

Executive Summary

Transformative AI (TAI) has the potential to solve many of humanity's most pressing problems, but it may also pose an existential threat to our future. This potential warrants careful study of TAI's possible trajectories and their consequences. Scenarios in which TAI emerges within the next decade are likely among the most treacherous, since society will have little time to prepare for and adapt to advanced AI.

In response to this need, Convergence Analysis has developed a research program called AI Clarity, whose method centers on scenario planning. Scenario planning is an analytical tool used by policymakers, strategists, and academics to explore and prepare for the landscape of possible outcomes in domains defined by uncertainty. Though there is no single best method, scenario planning generally combines two activities: exploring possible scenarios and evaluating strategies across those scenarios. Accordingly, AI Clarity will map out possible AI scenarios and evaluate strategies against them.

In the first area of research, “exploring scenarios,” AI Clarity will (1) identify pathways to plausible existential hazards, (2) collect and review publicly proposed AI scenarios, (3) select key parameters along which AI scenarios vary, and (4) generate additional scenarios that arise from combinations of those parameters. In the second area of research, “evaluating strategies,” AI Clarity will (1) collect and review strategies for AI safety and governance, (2) evaluate how those strategies perform across AI scenarios, and (3) develop and recommend strategies that best mitigate existential risk across all plausible scenarios.

Over the coming year, we will publish a series of blog posts on these two research areas. Together, these posts will build a body of research aimed at clarifying important uncertainties about AI futures.

Motivation

The 21st century has witnessed a precipitous rise in the power and potential of artificial intelligence. Advances in semiconductor technology have raised computational limits, widespread use of the internet has expanded the availability of data, and algorithmic breakthroughs have allowed AI capabilities to scale with both. As a consequence, global investment in AI has soared, and AI systems have become widely adopted and integrated into society. Several leading AI labs, such as OpenAI and Google DeepMind, are explicitly pursuing Artificial General Intelligence (AGI): systems that match or surpass human performance across all cognitive domains.

The development and deployment of AGI, or similarly advanced systems, could constitute a transformation rivaling those of the agricultural and industrial revolutions. Transformative AI (TAI) could help solve many of humanity's most pressing problems, but it may also pose an existential threat to our future.

One set of possible trajectories involves “short timelines” to TAI, in which TAI emerges within, say, 10 years. This is not mere academic speculation: some leading AI experts and prediction markets expect TAI sooner rather than later. Scenarios in which TAI emerges within the next decade are likely among the most treacherous, since society will have little time to prepare for and adapt to advanced AI. For these reasons, the possibility of short timelines will be a key focus of AI Clarity’s research.

TAI governance is defined not only by its urgency but also by its lack of clarity. Key actors, researchers, and organizations disagree about (1) the magnitude of existential risk, (2) the share of that risk attributable to different threat models, and (3) which strategies might best mitigate it.

A natural response to uncertainty is forecasting. However, the unprecedented nature of TAI makes it resistant to forecasting methods. For example, in July 2023, the Forecasting Research Institute released the results of a long-run tournament forecasting existential risks. The results emphasized the importance of TAI governance: both ‘superforecasters’ and domain experts estimated that the probability of human extinction from AI was greater than that from any other cause. However, despite months of debate, the groups’ estimates failed to converge. The report observes that “[t]he most pressing practical question for future work is: why were superforecasters so unmoved by experts’ much higher estimates of AI extinction risk, and why were experts so unmoved by the superforecasters’ lower estimates?”

It’s possible that forecasting for TAI governance will become more tractable given more time or different methods, but we shouldn’t rely on it. Instead, AI Clarity will explore scenario planning as a complement to forecasting.

Scenario planning is an analytical tool used by policymakers, strategists, and academics to guide decision-making in domains dominated by irresolvable uncertainty. Though scenario planning is underrepresented in AI safety, there is growing awareness of its relevance to TAI governance and its value as a complement to forecasting.

As AI systems grow more capable and widespread, their consequences for social systems, economic systems, and global security may become both less predictable and more dramatic. In response to this uncertainty, AI scenario planning can provide a structured framework for exploring a wide range of potential AI futures. It may also help us identify critical intervention points that help avoid the worst futures across many scenarios.

This methodology enables us to explore and prepare for possibilities that more traditional, narrowly focused safety research often overlooks. It encourages a broader, more holistic view of AI's potential impacts, extending beyond the most immediate or obvious risks.

Put another way, AI risk involves many uncertainties, and the first step to solving any problem is understanding what the problem is. Studying AI scenarios is a direct attempt to understand what the problem of AI risk actually is.

Our Approach

Scenario planning

The main defining feature of AI Clarity’s research approach is our application of scenario planning to AI safety and AI governance.

Though there is no one standard methodology, scenario planning can be seen as combining two major activities:

- Exploring scenarios. Within a specified domain, the landscape of relevant pathways that the future might take is charted in detail. Key parameters which shape or differentiate these future outcomes are identified. The evidentiary status and consequences of parametric assumptions are analyzed.

- Evaluating strategies. Strategies are developed to mitigate the potential negative consequences which have surfaced through the study, steering towards positive future outcomes. Key interventions are identified and evaluated for effectiveness.

In the context of AI risk, we might call the first activity AI scenario research, and the second activity AI strategy research. Together, they provide a structure to envision and plan for a variety of potential futures. This makes scenario planning a particularly valuable decision-making tool in areas, such as AI risk, that are marked by significant uncertainty about the future.

Overview of our approach

AI Clarity intends to conduct high-quality AI scenario and strategy research.

Exploring AI scenarios

We take the term AI scenario to refer to a possible pathway of AI development and deployment, encompassing both technical and societal aspects of this evolution. An AI scenario may be specified very precisely or very broadly. A highly specific scenario could, for example, describe a particular pathway to transformative AI, detailing what happens each year and at every key junction of development. A more general scenario (or set of scenarios) could be “transformative AI is reached through comprehensive AI services”.

This exploration of AI scenarios will include:

- Collection of AI Scenarios: Thoroughly review existing AI safety literature to understand the landscape of publicly proposed AI scenarios and parameters of key interest. Examining a wide range of sources should give us a well-rounded understanding of the current state of AI safety considerations.

- Identification of Threat Models and Theories of Victory: Building on the literature review, map out a range of possible AI scenarios, identifying those with existential or catastrophic outcomes (which we call ‘threat models’) and those which successfully avert such outcomes (which we call ‘theories of victory’). Special focus will be given to exploring how threat models with short timelines might unfold.

- Identification of Key Parameters: Identify a relevant set of parameters that differentiate important AI scenarios or otherwise significantly shape the trajectory of AI development. These parameters may include technological advancements, ethical considerations, societal impacts, and governance mechanisms.

- Evaluation and Analysis: Evaluate the plausibility of different scenarios and examine the evidentiary basis for specific parametric assumptions. Further, describe and analyze the consequences associated with different pathways of AI development. Particular focus will be given to evaluating threat models with short timelines.
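To make the step from key parameters to generated scenarios concrete, here is a minimal Python sketch, assuming each scenario can be represented as one choice of value per parameter. The parameters (`timeline`, `takeoff`, `governance`), their values, and the `is_threat_model` rule are hypothetical placeholders invented for illustration, not AI Clarity's actual parameter set or classification criteria.

```python
from itertools import product

# Hypothetical key parameters and their possible values (illustrative only,
# not AI Clarity's actual parameter set).
PARAMETERS = {
    "timeline": ["short", "long"],
    "takeoff": ["fast", "slow"],
    "governance": ["coordinated", "fragmented"],
}


def generate_scenarios(parameters):
    """Return one scenario (a dict of parameter -> value) per combination of values."""
    names = list(parameters)
    return [dict(zip(names, values)) for values in product(*parameters.values())]


def is_threat_model(scenario):
    """Hypothetical rule: flag scenarios that combine short timelines with fragmented governance."""
    return scenario["timeline"] == "short" and scenario["governance"] == "fragmented"


scenarios = generate_scenarios(PARAMETERS)
short_timeline_threats = [s for s in scenarios if is_threat_model(s)]

print(len(scenarios))  # 8 combinations (2 x 2 x 2)
for s in short_timeline_threats:
    print(s)
```

In practice most of the work lies in choosing parameters and judging which combinations are plausible; the enumeration itself is the easy part, and a real analysis would likely prune implausible combinations rather than treat all of them as equally worth studying.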

Evaluating AI strategies

In addition to charting out the landscape of AI scenarios through the exploratory work detailed above, AI Clarity also seeks to describe how to positively influence outcomes of AI development. This is pursued through the following research activities:

- Collection of AI Strategies: Undertake a thorough review of existing AI safety and governance literature to understand the landscape of strategies and specific interventions that have already been proposed or implemented.

- Strategy Development and Mitigation: Develop strategies to mitigate the potential negative consequences associated with a range of important scenarios and parametric assumptions (as identified through the exploration of scenarios). This involves assessing the efficacy of different interventions and tailoring strategies to the specific characteristics of each scenario.

- Identification of Intervention Points: A crucial part of this work will be identifying high-impact intervention points: moments, events, or aspects of AI development where strategic actions can significantly shift the likelihood of threat models being realized. We hope this approach will result in scenario planning that is thorough and actionable, contributing significantly to positive outcomes in AI safety and governance.
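One way to picture "evaluating strategies across scenarios" is as a table of scores with one row per strategy and one column per scenario. The Python sketch below assumes such a table exists and applies a maximin rule, which picks the strategy with the best worst-case score. This is one common robustness criterion for decisions under deep uncertainty, not necessarily the method AI Clarity will use. The strategy names, scenario labels, and scores are all invented for illustration.

```python
# Hypothetical scores: how much each strategy reduces risk in each scenario
# (0 = no benefit, 1 = fully mitigates). All numbers are invented for illustration.
SCORES = {
    "compute_governance": {"short_fast": 0.6, "short_slow": 0.7, "long_slow": 0.5},
    "alignment_research": {"short_fast": 0.2, "short_slow": 0.5, "long_slow": 0.9},
    "international_treaty": {"short_fast": 0.1, "short_slow": 0.4, "long_slow": 0.8},
}


def worst_case(scores_by_scenario):
    """A strategy's score in its least favourable scenario."""
    return min(scores_by_scenario.values())


def most_robust_strategy(scores):
    """Maximin: pick the strategy whose worst-case score is highest."""
    return max(scores, key=lambda name: worst_case(scores[name]))


best = most_robust_strategy(SCORES)
print(best, worst_case(SCORES[best]))  # compute_governance 0.5
```

Maximin deliberately favours strategies that hold up in the worst scenarios, which suits a focus on avoiding catastrophe. Alternatives such as minimax regret or probability-weighted averages would trade some of that robustness for better expected performance, and could rank the same strategies differently.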

Major Research Activities for 2024 and Beyond

AI Clarity’s research output will feature a series of blog posts highlighting the results of our work. These outputs will coalesce under two major themes, exploring TAI scenarios and evaluating TAI strategies, corresponding to the two major activities of scenario planning described earlier. Our near-term research will give particular attention to threat models with short timelines to TAI. Publishing this series of blog posts will enable continuous interaction with the wider AI research and policy communities, creating regular opportunities for feedback.

Our progress (as of April 2024)

As of early 2024, we’ve begun publishing a series of blog posts to share our initial explorations into clarifying AI scenarios:

- Arguing for scenario planning for AI x-risk

- Defining and exploring transformative AI and scenario planning for AI x-risk

- Conducting an investigation into timelines to transformative AI

- Conducting an investigation into the role of agency in AI x-risk

Our team is currently working on two more blog posts in these areas. The first explores short-timeline TAI scenarios, examining how various parameters can combine to produce a world in which timelines to TAI are short. The second explores theories of victory: the conditions and combinations of parameters that lead to desirable states of the world.

Theory of Change

The motivation for AI Clarity and our approach to research is firmly rooted in Convergence’s organizational-level Theory of Change.

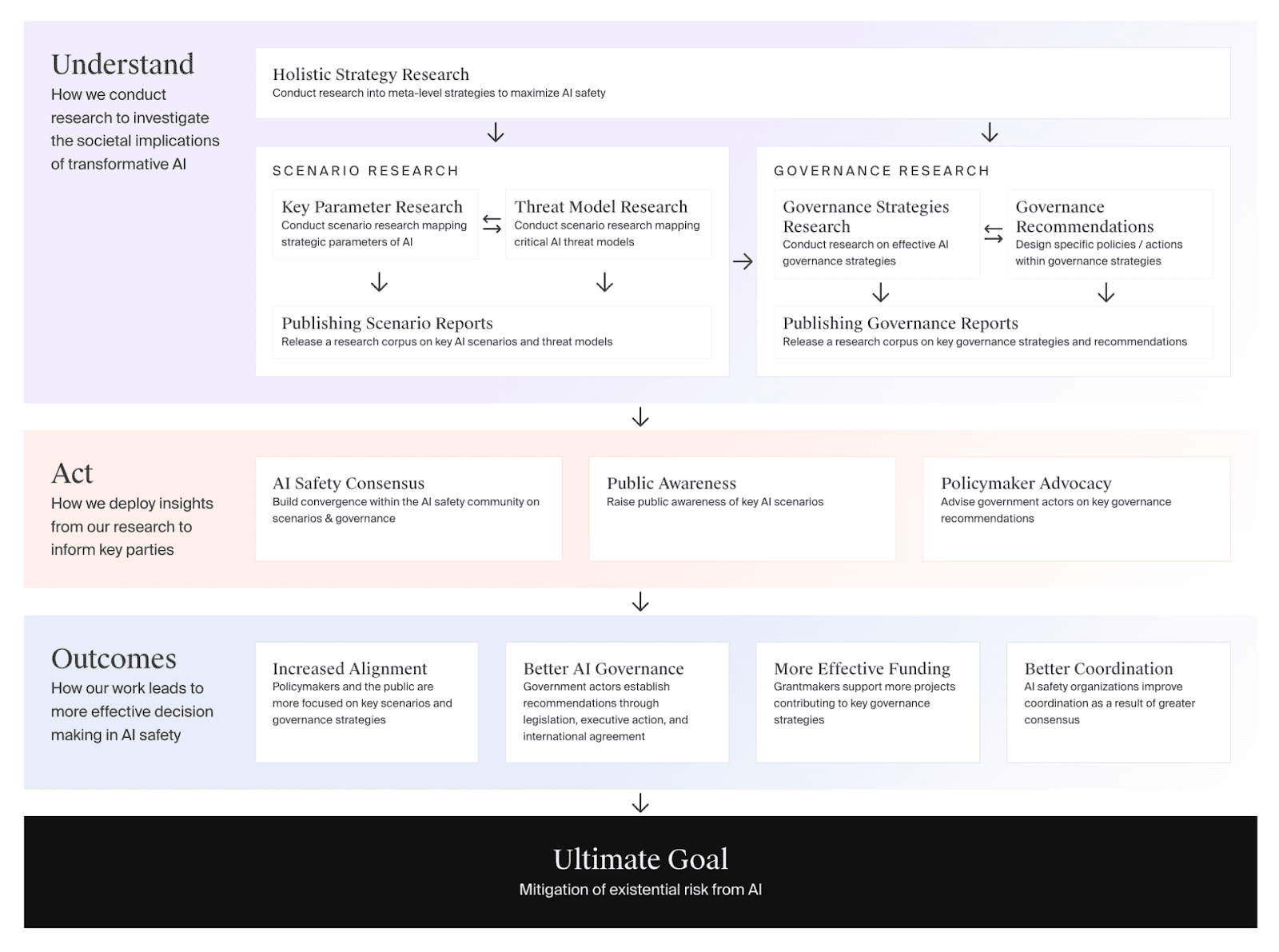

Figure 1. Visualization of the structure of Convergence Analysis’ organizational Theory of Change.

To mitigate existential threats from AI, Convergence seeks to improve decision-making for AI safety and governance. To do this, we must first understand the societal and technical implications of AI development and deployment. Scenario planning, the focus of AI Clarity, is a key part of our efforts to deepen this understanding; Convergence will also produce concrete guidance through our governance recommendations research. Second, we must inform key parties about critical insights from our research, supporting decision makers in pursuing effective strategies. This includes building consensus within the AI safety community, raising public awareness about the risks from AI, and advising relevant government actors.

We’re hoping to achieve these outcomes for decision making in AI safety:

- Increased alignment on key AI safety issues among researchers, policymakers, and the general public

- Better AI governance policies, based on a solid understanding of likely and important scenarios

- More effective project funding, supporting critical governance strategies

This improved decision making is aimed at steering the development of advanced AI away from existential risk, towards a safe and flourishing future for humanity.

Downside risks of this work

While AI Clarity's work is vital to this theory of change, it's important to recognize some potential downside risks:

- Accelerating Irresponsible AI Development:

  - Enhanced Pathways to Advanced AI: Detailed scenario analyses could inadvertently reveal more efficient pathways to developing advanced AI, thus shortening the timeline to TAI.

  - Unintended Information Dissemination: There's a risk that our research, especially its detailed technical aspects, could be misused by those aiming to accelerate AI development irresponsibly.

- Increased Focus on Dangerous Advanced AI:

  - Attracting More Investment: Highlighting advanced AI's capabilities might attract increased investment and attention from entities interested in pushing the boundaries of dangerous AI.

  - Public and Industry Perception: Our research could shift public and industry perception, inadvertently intensifying competition to develop dangerous advanced AI systems.

With these potential downside risks in mind, we intend to pursue the following mitigation strategies:

- Adaptive Approach:

  - Continuous Impact Assessment: Regularly assess the impact of our research and its reception, ready to modify our approach in response to new findings or concerns.

  - Flexibility in Research Focus: Remain agile in adjusting research priorities to minimize any harmful consequences that become apparent.

- Contextualizing Research Outputs:

  - Balanced Communication: Ensure that all outputs clearly communicate the potential risks alongside the benefits, promoting a balanced understanding of AI advancements.

  - Educational Outreach: Engage in educational initiatives to inform the public and stakeholders about the ethical and societal implications of AI.

- Restrict Dissemination of Sensitive Insights:

  - Controlled Release of Information: Implement prudent guidelines on what information is published, focusing on general insights rather than specific technical details.

  - Security Protocols: Establish robust security protocols to prevent unauthorized access to sensitive research data, if we’re ever in possession of such data.

Conclusion

This research agenda lays out an approach to reduce AI existential risk through rigorous scenario planning. This approach involves deeply exploring the landscape of AI scenarios and evaluating strategies across them. We will collect existing perspectives, analyze parameters, generate additional scenarios, and identify effective interventions.

Through 2024 and beyond, AI Clarity will share insights and foster collaboration through public blog posts. Based on our findings, we will offer actionable recommendations to stakeholders across domains such as technical safety research, corporate governance, national regulation, and international cooperation. This work seeks to improve strategic consensus, inform policies, and coordinate interventions to enable more positive outcomes with advanced AI.
