Posts tagged community

About a week ago, Spencer Greenberg and I were debating what proportion of Effective Altruists (EAs) believe enlightenment is real. Since he has a large audience on platform X, we thought a poll would be a good way to increase our confidence in our predictions.

Before I share my commentary, I think in hindsight it would have been better to ask the question like this: 'Do you believe that awakening/enlightenment (which frees a person from most or all suffering for extended periods, like weeks at a time) is a real phenomenon that some people achieve (e.g., through meditation)?' I'm sure there are even better ways to phrase the question.

Anyway, the results are below and I find them strange.

Here's why I find them strange:

- Many EAs believe enlightenment is real.

- Many EAs are highly focused on reducing suffering.

- Nobody is really talking

This is an interesting #OpenPhil grant. $230K for a cyber threat intelligence researcher to create a database that tracks instances of users attempting to misuse large language models.

https://www.openphilanthropy.org/grants/lee-foster-llm-misuse-database/

Will user data be shared only with the user's permission? How will an LLM determine a user's intent, differentiating purposefully harmful entries from user error, safety testing, independent red-teaming, playful entries, etc.? If a user is placed on the database, is she notified? How long do you stay in LLM prison?

I did send an email to OpenPhil asking about this grant, but so far I haven't heard anything back.

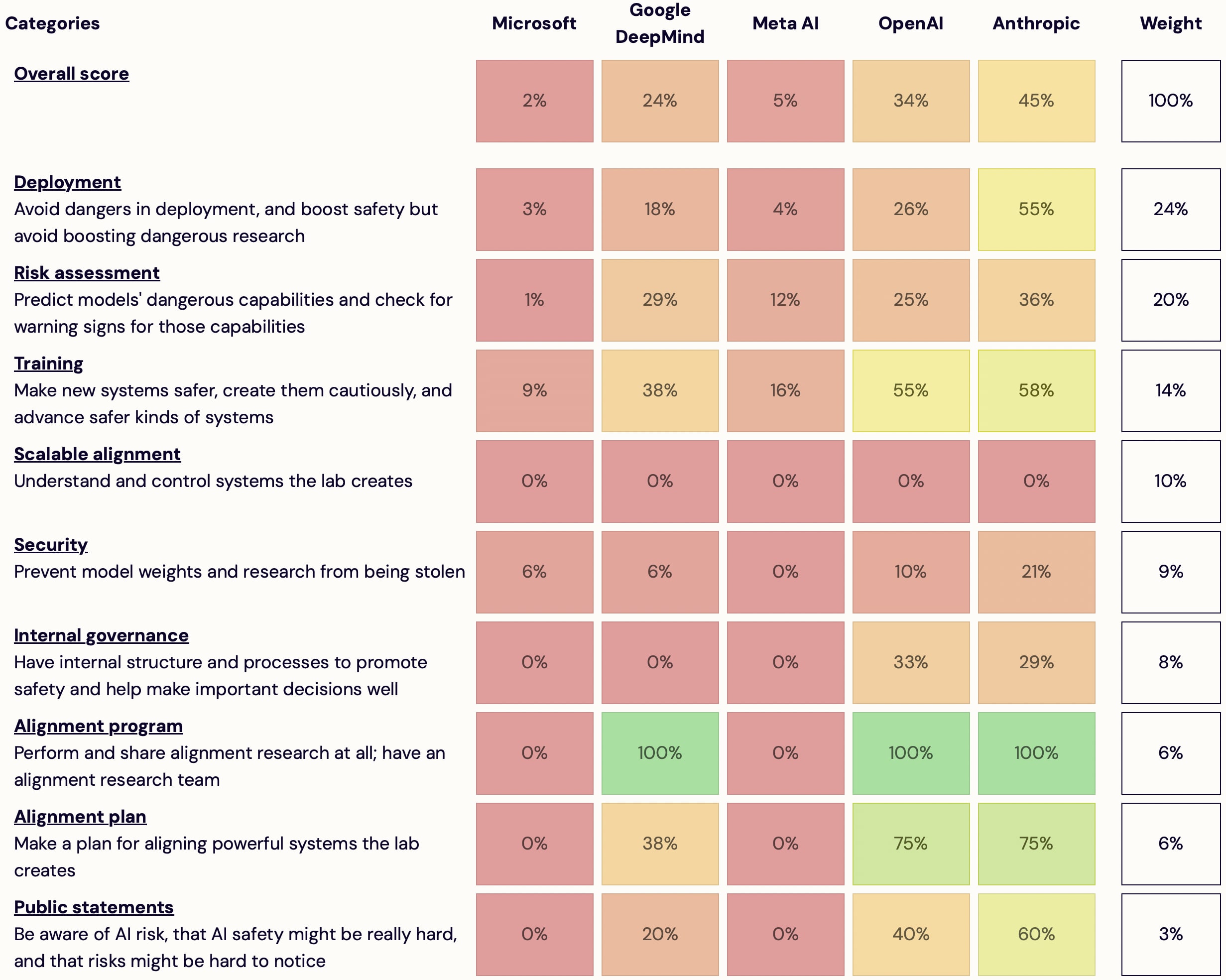

I'm launching AI Lab Watch. I collected actions for frontier AI labs to improve AI safety, then evaluated some frontier labs accordingly.

It's a collection of information on what labs should do and what labs are doing. It also has some adjacent resources, including a list...

Thanks for doing this! This is one of those ideas that I've heard discussed for a while but nobody was willing to go through the pain of actually making the site; kudos for doing so.

I’ve been working in animal advocacy for two years and have an amateur interest in AI. I’m writing this in a personal capacity, and am not representing the views of my employer.

Many thanks to everyone who provided feedback and ideas.

Introduction

In previous posts...

This is a very helpful post - thank you! I just wanted to make sure you've seen that the Bezos Earth Fund's $100 million AI grand challenge includes alternative proteins as one of three focus areas.

See here for details: https://www.bezosearthfund.org/news-and-insights/bezos-earth-fund-announces-100-million-ai-solutions-climate-change-nature-loss

GPT-5 training is probably starting around now. It seems very unlikely that GPT-5 will cause the end of the world. But it’s hard to be sure. I would guess that GPT-5 is more likely to kill me than an asteroid, a supervolcano, a plane crash or a brain tumor. We can predict...

What is the risk level above which you'd be OK with pausing AI?

My loose off-the-cuff response to this question is that I'd be OK with pausing if there was a greater than 1/3 chance of doom from AI, with the caveats that:

- I don't think p(doom) is necessarily the relevant quantity. What matters is the relative benefit of pausing vs. unpausing, rather than the absolute level of risk.

- "doom" lumps together a bunch of different types of risks, some of which I'm much more OK with compared to others. For example, if humans become a gradually weaker force in the wor

Just read this in the Guardian.

The title is: "‘Eugenics on steroids’: the toxic and contested legacy of Oxford’s Future of Humanity Institute"

The sub-headline states: "Nick Bostrom’s centre for studying existential risk warned about AI but also gave rise to cultish...

FYI this was already linked at https://forum.effectivealtruism.org/posts/KoLeR3q2bEWcL9FX8/the-guardian-calls-ea-cultish-and-accuses-the-late-fhi-of

Quick poll [✅ / ❌]: Do you feel like you don't have a good grasp of Shapley values, despite wanting to?

(Context for after voting: I'm trying to figure out if more explainers of this would be helpful. I still feel confused about some of its implications, despite having spent significant time trying to understand it)
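For anyone who wants a concrete anchor, here is a minimal sketch of the standard definition: a player's Shapley value is their marginal contribution averaged over every order in which the players could join. The two funders `A` and `B` and the payoff numbers are made up purely for illustration, and the brute-force loop over all orderings is only practical for a handful of players.

```python
from fractions import Fraction
from itertools import permutations


def shapley_values(players, value):
    """Average each player's marginal contribution over all join orders.

    `value` maps a frozenset of players to the payoff that coalition achieves.
    """
    totals = {p: Fraction(0) for p in players}
    orders = list(permutations(players))
    for order in orders:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal contribution of p when joining the current coalition.
            totals[p] += Fraction(value(with_p)) - Fraction(value(coalition))
            coalition = with_p
    return {p: total / len(orders) for p, total in totals.items()}


# Hypothetical toy game (numbers are assumptions, not from any real case):
# A alone achieves 10, B alone achieves 20, together they achieve 60.
payoffs = {
    frozenset(): 0,
    frozenset({"A"}): 10,
    frozenset({"B"}): 20,
    frozenset({"A", "B"}): 60,
}

result = shapley_values(["A", "B"], payoffs.__getitem__)
print(result)
```

In this toy game A joins first in one ordering (contributing 10) and second in the other (contributing 40), so A's share is 25 and B's is 35; the shares always sum to the full coalition's payoff.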

Trump recently said in an interview (https://time.com/6972973/biden-trump-bird-flu-covid/) that he would seek to disband the White House office for pandemic preparedness. Given that he usually doesn't give specifics on his policy positions, this seems like something he is particularly interested in.

I know politics is discouraged on the EA Forum, but I thought I would post this to say: EA should really be preparing for a Trump presidency. He's up in the polls and IMO has a >50% chance of winning the election. Right now politicians seem relatively receptive to EA ideas, but this may change under a Trump administration.