tobyj

Posts 4

Comments 22

Topic contributions 1

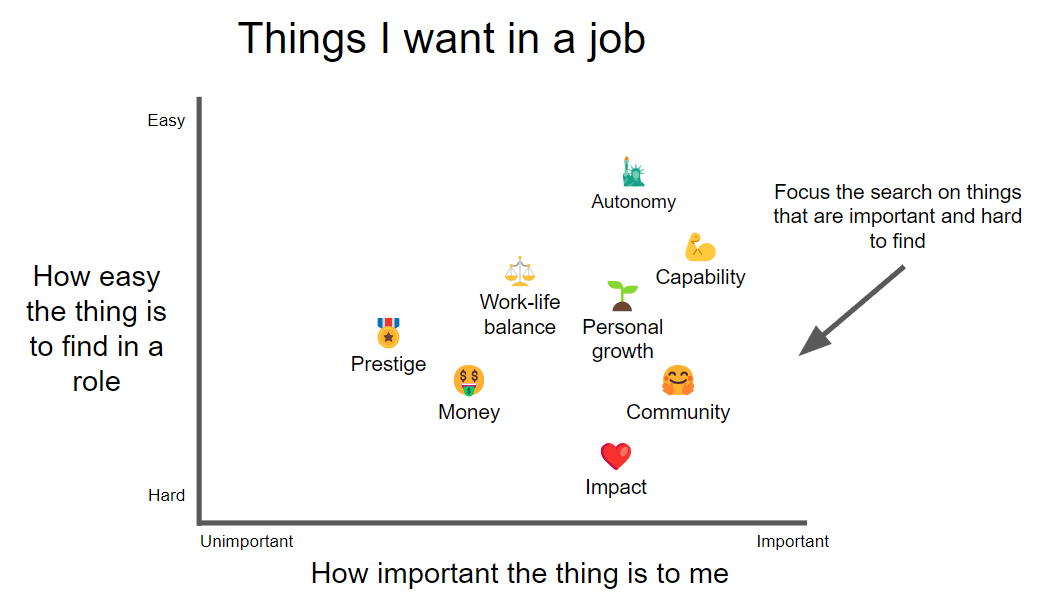

I wrote up my career review recently! Take a look

As a counter-opinion to the above, I would be fine with the use of GPT-4, or even paying a writer. The goal of most initial applications is to assess some of the skills and experience of the individual. As long as that information is accurate, then any system that turns that into a readable application (human or AI) seems fine, and more efficient seems better.

The information this loses is the way someone would communicate their skills and experience unassisted, but I'm skeptical that this is valuable in most jobs (and suspect it's better to test for these kinds of skills later in the process).

More generally I'm doubtful of the value of any norms that are very hard to enforce and disadvantage scrupulous people (e.g. "don't use GPT-4" or "only spend x hours on this application").

Thanks for this Akash. I like this post and like these examples.

One thing I have found helpful here is to stop talking and thinking about "status" so much and focus more on acknowledging my own emotional experience. For example, the bullets at the start of this post are all examples of someone feeling shame.

Shame can be a useful signal about how it would be best for you to act in any given situation, but often isn't particularly accurate in its predictions. Focusing on concepts like "status" instead of the emotions that are informing the concept has, at times, pushed me towards seeing status as way more real than it actually is.

This isn't to say that status is not a useful model in general. I'm just skeptical it's anywhere near as useful for understanding your own experience as it is for analyzing the behavior of groups more objectively.

Thanks Michael, I connect with the hope of this post a lot and EA still feels unusually high-trust to me.

But I suspect that a lot of my trust comes via personal interactions in some form. And it's unclear to me how much of the high level of trust in the EA community in general is due to private connections vs. public signs of trustworthiness.

If it's mostly the former, then I'd be more concerned. Friendship-based trust isn't particularly scalable, and reliance on it seems likely to maintain diversity issues. The EA community will need to increasingly pay bureaucratic costs to keep trust high as it grows further.

I'd be interested in any attempts at quantifying the costs to orgs of different governance interventions and their impact on trust/trustworthiness.

Thanks Jeff - this is helpful!

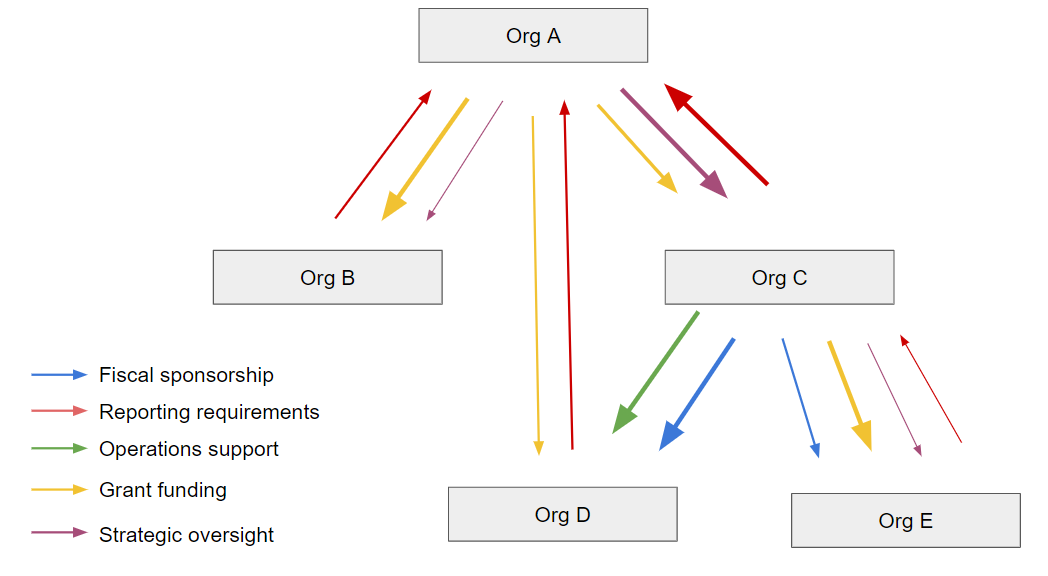

I don't know who would be best placed to do this, but I can imagine it would be really helpful to have more expansive versions of these diagrams, especially ones that make the specific nature of the relationships between orgs clear (i.e. going beyond fiscal sponsorship). A lot of the comments/discussion on this post seems to be speculation about the specific nature of these relationships.

Here is what I imagined:

I suspect doing something like this would end up being pretty subjective, would change over time, and would prompt disagreement between the people involved. For example, things like "strength of strategic oversight" are going to be pretty ambiguous. But the value in attempts at creating some common knowledge here seems high given the current level of confusion.

(Alongside increasing trustworthiness, this kind of transparency would also be valuable for people setting up new orgs in the ecosystem. Currently, if you want to figure out how your org can/should fit in, you have to try to build a picture like the above yourself.)

Thank you for this post! One thing I wanted to point out is that this post talks about governance failures by individual organizations. But EA orgs are unusually tightly coupled, so I suspect a lot more work needs to be done on governance at the ecosystem level.

I most recently worked for a government department. This single organisation was bigger, more complex, and less internally value-aligned than the ecosystem of EA orgs. EA has fuzzier boundaries, but for the most part, functions more cohesively than a single large organisation.

I haven't thought a tonne about how to do this in practice, but I read this report on "Constellation Collaboration" recently and found it compelling. I suspect there is a bunch more thinking that could be done at the ecosystem level.

I am really into writing at the moment and I’m keen to co-author forum posts with people who have similar interests.

I wrote a few brief summaries of things I'm interested in writing about (but very open to other ideas).

Also very open to:

- co-authoring posts where we disagree on an issue and try to create a steely version of the two sides!

- being told that the thing I want to write has already been written by someone else

Things I would love to find a collaborator to co-write:

- Comparing the Civil Service bureaucracy to the EA nebuleaucracy.

- I recently took a break from the Civil Service to work on an EA project full time. It’s much better, less bureaucratic and less hierarchical. There are still plenty of complex hierarchical structures in EA though. Some of these are explicit (e.g. the management chain of an EA org or funder/fundee relationships), but most aren’t as clear. I think the current illegibility of EA power structures is likely fairly harmful and want more consideration of solutions (that increase legibility).

- Semi-related thing I’ve already written: 11 mental models of bureaucracies

- What is the relationship between moral realism, obligation-mindset, and guilt/shame/burnout?

- Despite no longer buying moral realism philosophically, I deeply feel like there is an objective right and wrong. I used to buy moral realism and used this feeling of moral judgement to motivate myself a lot. I had a very bad time.

- People who reject moral realism philosophically (including me) still seem to be motivated by other, often more wholesome moral feelings, including towards EA-informed goals.

- Related thing I’ve already written: How I’m trying to be a less "good" person.

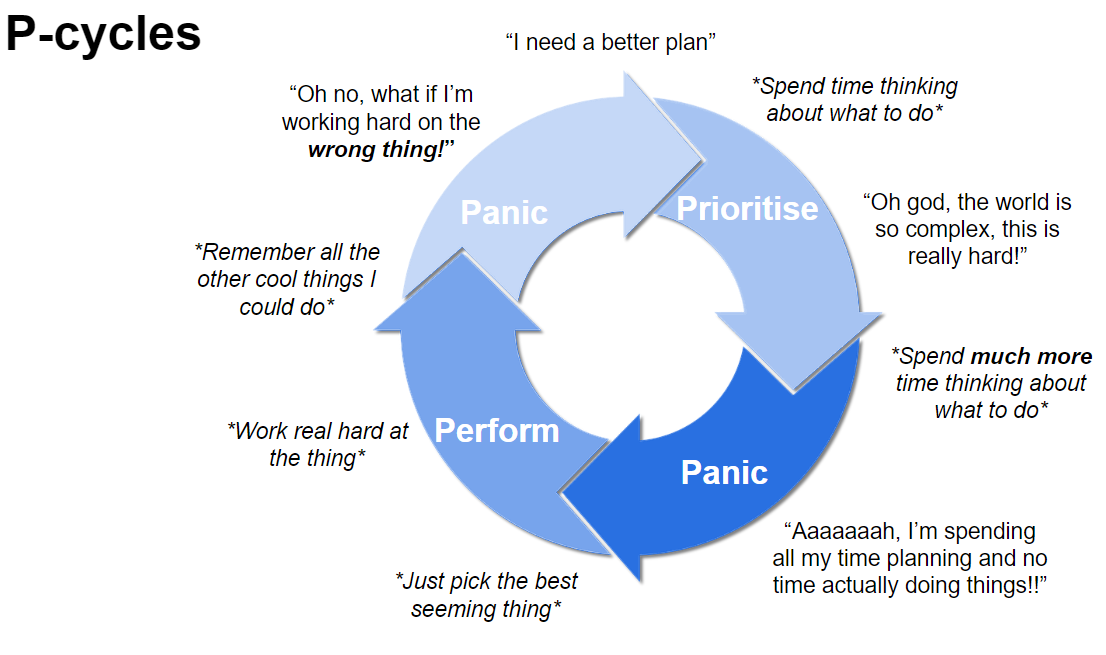

- Prioritisation panic + map territory terror

- These seem like the main EA-neuroses - the fears that drive many of us.

- I constantly feel like I’m sifting for gold in a stream, while there might be gold mines all around me. If I could just think a little harder, or learn faster, I could find them…

- The distribution in value of different possible options is huge, and prioritisation seems to work. But you have to start doing things at some point. The fear that I’m working on the wrong thing is painful and constant and the reason I am here…

- As with prioritisation, the fear that your beliefs are wrong is everywhere and is pretty all-consuming. False beliefs are deeply dangerous personally and catastrophic for helping others. I feel I really need to be obsessed with this.

- I want to explore more feeling-focussed solutions to these fears.

- When is it better to risk being too naive, or too cynical?

- Is the world super dog-eat-dog or are people mostly good? I’ve seen people all over the cynicism spectrum in EA. Going too far either way has its costs, but altruists might want to risk being too naive (and paying a personal cost) rather than too cynical (which has a greater external cost).

- To put this another way: if you are unsure how harsh the world is, lean toward acting like you’re living in a less harsh world - there is more value for EA to take there. (I could do with doing some explicit modelling on this one.)

- This is kinda the opposite of the precautionary principle that drives x-risk work - so is clearly very context specific.

- Related thing I’ve already written: How honest should you be about your cynicism?

I really enjoyed this and found it really clarifying. I really like the term deep atheism. I'd been referring to the thing you're describing as nihilism, but this is a much much better framing.