pmelchor

Bio

Pablo Melchor, co-founder and president at Ayuda Efectiva. Co-founded EA Spain.

Hi Miguel, Nicole,

I will be hosting this edition of the open forum. It looks like there is a mistake at the top of this page, since the actual date announced on the GWWC website is tomorrow: Thursday, November 17.

If you see this before 8 p.m. CET today, please let me know by replying to this comment. If I don't hear from you, I will log in today as well.

Sorry about this!

Two notes on existing resources:

- Jamie Gittins created this wiki listing EA-aligned orgs.

- The EA Operations Slack (mentioned here) is doing wonders in terms of resource sharing and mutual help for people working in ops roles.

I can only describe "It has been a good year" as a massive understatement. You are doing amazing work with minimal resources and Effektiv Spenden is an inspiration for all of us working to promote effective giving. I think supporting your growth should be a no-brainer.

Regarding the contribution of effective giving to the growth of effective altruism, our donor base at Ayuda Efectiva skews even more towards "no previous knowledge". I think effective giving can be one of the main gateways into effective altruism in high-income, non-English-speaking countries.

Some great points have been made in previous comments, but I think there is some important context missing in GiveDirectly's post and this discussion.

Disclosure and caveats: At Ayuda Efectiva we rely heavily on GiveWell's research and will soon incorporate GiveDirectly as a giving option for Spanish donors. We therefore have an ongoing relationship with GiveWell and a just-getting-started one with GiveDirectly. We talk to GiveWell regularly to get a better sense of their thinking but all I write here is my personal understanding (which could be wrong).

Even though GiveWell has been around for more than a decade, it is a fast-growing and fast-changing organization. It seems that one of the current key drivers of change is the success of their Maximum Impact Fund. More and more, donors seem to be choosing to let GiveWell pursue whichever opportunities they think are the most impactful at any given time.

The way I see it, what this means in practice is that GiveWell's role as a charity recommender is becoming less prominent while their role as a grantmaking organization is expanding. The 40% growth of their incubation grants in 2020 also seems to point in that direction.

Once you see GiveWell in that light, the arguments against rolling over part of the let-GiveWell-decide money raised in 2021 seem considerably weaker to me. If there is a strong argument, it should apply to any foundation (e.g. Gates, Open Phil, you name it) that is not giving away all of its available money in cash transfers now.

I do find one argument convincing: GiveWell is still widely seen and represented as just a charity recommender, and it does have an important influence on giving decisions (I would say mostly in the EA community and adjacent groups). Its communications can therefore have a big impact and potentially unintended consequences. I have two comments to make on this:

First, GiveWell's message to donors is not "do not give, hold your money":

We will continue to raise funds, and our top recommendation to donors continues to be our Maximum Impact Fund, which grants funds on a quarterly basis to the most cost-effective giving opportunities we’ve identified at the time grants are made.

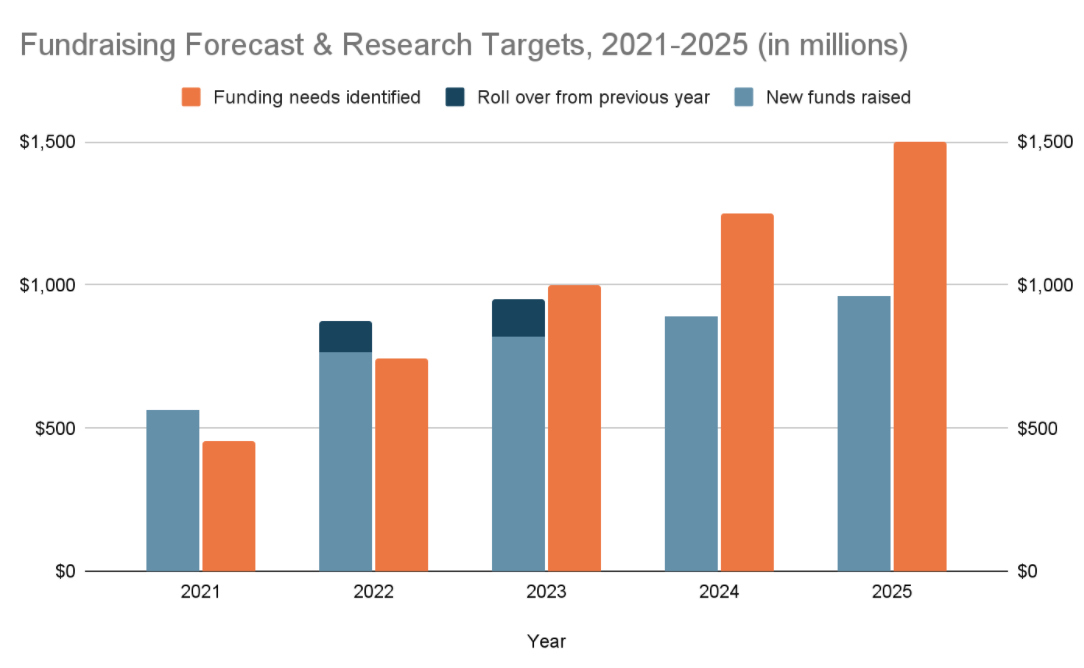

In fact, they expect to cover funding needs in 2022 equivalent to a very large percentage of the money they hope to raise:

Second, I find GiveDirectly's argument on the potential impact of GiveWell's announcement rather confusing:

But their decision here tries to optimize 0.1% of U.S. charitable giving in isolation from the other 99.9%; when, in reality, growing that 99.9% and allocating it better will mean a lot more for our world than asking those donors to hold out for possible silver bullets down the road.

One possible interpretation is the one in Jaime's comment. As I understood it, the argument does not seem very consistent. On the one hand, they say that GiveWell is trying to optimize 0.1% of U.S. charitable giving. I don't know what the exact percentage is, but it does seem clear that GiveWell's money moved and influence are very small in the scheme of things. On the other hand, the post seems to suggest that GiveWell is somehow telling the 99.9% of donors over whom it has no influence to hold their donations.

In any case, I think it is perfectly compatible for GiveWell to announce that it will hold some funds for some time in order to achieve maximum impact (since that is precisely what it set out to do) while other donors decide to give now because they prefer to address the immediate needs that GiveDirectly is focused on. GiveWell's announcement should not have a huge impact on that latter group, even if it is not the messaging that GiveDirectly will want to (and should) emphasize when addressing the 99.9% of donors out there.

There is no perfect calculation of all the effects of a program but I think GiveWell's effort is impressive (and, as far as I can tell, unmatched in terms of rigor). I think the highest value is in the ability to differentiate top programs from the rest, even if the figures are imperfect.

Hi Aaron,

I think it's great that you ask these questions. I wouldn't assume that the majority of the community already has a crystal-clear grasp of them since (1) they are not straightforward at all and (2) as far as I know, the answers are not really consolidated in some single post you can read in 5 minutes.

GiveWell's current estimate is $3,000-$5,000 per life saved. This is the range they communicate on their Top Charities page and which they explain here. They have not updated this messaging since November 2020, so that may change soon.

As for a rough overview of the calculation process, this example may help. It is a complex process that starts with the evidence of effectiveness for a particular intervention but then includes a host of factors for which GiveWell calculates and regularly updates its best estimates. Some mentioned in the example I just linked to are:

- Not every person who receives a certain treatment/intervention would have otherwise died of the targeted condition.

- Even when an intervention is effective, it probably does not prevent the condition 100% of the time.

- The effects may wane over time for lots of reasons.

- Treatments may not be consistently followed over time.

- Local mortality rates vary across regions.

- Other actors may deliver the intervention if you do not fund the particular program being considered.
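To make the direction of these adjustments concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that each factor above is a single fraction and that they simply multiply together; GiveWell's actual spreadsheets are far more detailed and do not necessarily structure things this way. The class name, field names and all numbers are hypothetical, not GiveWell's.

```python
from dataclasses import dataclass, fields


@dataclass
class Adjustments:
    """Hypothetical fractions, each between 0 and 1, mirroring the list above."""
    baseline_mortality: float  # chance a recipient would otherwise die of the targeted condition (varies by region)
    efficacy: float            # share of those deaths the intervention actually prevents
    persistence: float         # share of the effect left after it wanes over time
    adherence: float           # share of recipients who keep following the treatment
    counterfactual: float      # share of coverage that would NOT happen without this funding


def deaths_averted_per_person(adj: Adjustments) -> float:
    """Multiply the fractions together: every factor shrinks the raw effect."""
    result = 1.0
    for f in fields(adj):
        value = getattr(adj, f.name)
        if not 0.0 < value <= 1.0:
            raise ValueError(f"{f.name} must be in (0, 1], got {value}")
        result *= value
    return result


def cost_per_life_saved(cost_per_person: float, adj: Adjustments) -> float:
    """Dollars spent per death averted under the simplified model above."""
    return cost_per_person / deaths_averted_per_person(adj)


if __name__ == "__main__":
    # Illustrative numbers only, NOT GiveWell's estimates.
    example = Adjustments(
        baseline_mortality=0.01,
        efficacy=0.5,
        persistence=0.5,
        adherence=0.5,
        counterfactual=0.5,
    )
    print(f"${cost_per_life_saved(10.0, example):,.0f} per life saved")
```

The point of the sketch is only that the discounts compound: halving any one factor doubles the cost per life saved, which is why a cheap intervention with strong evidence can still end up at thousands of dollars per life.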

If you want to dig deeper, you can go over the cost-effectiveness spreadsheets on this page Michael shared in a previous answer or read this detailed guide.

Your second question was about what "saving a life" actually means. Holden (GiveWell co-founder, now Open Philanthropy's co-CEO) wrote this post about it in 2007. Some snippets:

Approximately 80% of children born in sub-Saharan Africa reach age 5; in the developed world, it’s 99%. [...]

Between ages 5 and 45, people in sub-Saharan Africa have relatively similar mortaility[sic] rates to those in the developed world except for the influence of HIV/AIDS, TB, and mothers dying in childbirth. Around age 45, a lot of the same diseases that kill people under 5 start killing again (maybe due to weakened immune systems). [...]

So what’s a life saved? If you save someone right as they exit infancy (5 years old), you’ve saved someone who probably has around a 50% chance of making it to age 60 … another way of putting this is that if you save two lives [...], you’ve in expectation given one person a full life that they wouldn’t have had.

I will find out whether GiveWell has revisited that more recently.

I see this tag is largely unused, while "impact assessment" seems to have been chosen for many posts that could fit here (e.g. https://forum.effectivealtruism.org/posts/ZeFcfCAncT3jPAeht/clean-technology-innovation-as-the-most-cost-effective).

Two questions:

- How do you think about when to keep tags separate or when to merge them?

- How important is it for you that following a tag will have the expected results? In other words: if you have to choose, do you prioritise precision (e.g. two terms are not exactly the same >> let's create two separate tags) or content discovery (e.g. this is going to be relevant for people who click on the tag >> let's make sure it shows up)?

My experience in business matches two of the points that Catherine makes above:

My guess is that it is probably better to have a not-perfect name that everyone uses, than a whole variety of different names.

and

Another cost is that there are people who hear "effective altruism" several times in several places before deciding to learn more/ get involved, so each exposure of that name (as long as it is positive!) helps.

My current view is that:

- Consistent usage can be much more relevant to a brand's success than its intrinsic characteristics. I can imagine the team at early-days Google discussing whether they should rebrand to something easier to spell for speakers of language X and more understandable for the average user.

- It is easy to overestimate the potential of an imaginary, shiny new brand and underestimate the value of your current imperfect brand. This may be one of those things that you only notice when it is no longer there (e.g. people leave a company believing that it was "just an empty shell" and that they were what made it valuable... only to find out that it is much harder to get clients when that well-known logo is no longer on their slides).

If anything, I would say that one of the weaknesses of Effective Altruism (purely from a branding perspective) is that its brand landscape is already super-diverse (e.g. there is GiveWell, ACE, Open Phil, Founders Pledge, GWWC, 80,000 Hours, etc., etc. each pushing their own brand). This does make sense since each of the organizations I mention is applying effective altruism to a particular space or situation. However, when it comes to local groups, I tend to think that the EA movement as a whole has much more to gain from consistency.

Ben mentions in his comment how independent brands can reduce brand risk for EA, which is true. However, I think they can also reduce brand potential for EA (this is more of a side note, but I think that whenever we consider minimizing reputational risks we should also consider the opportunity costs of not doing something or doing it in the cautious-but-probably-less-impactful version).

I think that if we want to make the EA brand better (more meaningful, attractive, easily recognizable, etc.), simply using it consistently will go a long way.

Fantastic work! Strongly upvoted.