Ozzie Gooen

Bio

I'm currently researching forecasting and epistemics as part of the Quantified Uncertainty Research Institute.


(This is a draft I wrote in December 2021. I didn't finish+publish it then, in part because I was nervous it could be too spicy. At this point, with the discussion post-ChatGPT, it seems far more boring, and someone recommended I post it somewhere.)

Thoughts on the OpenAI Strategy

OpenAI has one of the most audacious plans out there and I'm surprised at how little attention it's gotten.

First, they say flat out that they're going for AGI.

Then, when they raised money in 2019, they included a clause saying that investor returns would be capped at 100x their investment.

"Economic returns for investors and employees are capped... Any excess returns go to OpenAI Nonprofit... Returns for our first round of investors are capped at 100x their investment (commensurate with the risks in front of us), and we expect this multiple to be lower for future rounds as we make further progress."[1]
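A minimal sketch of the cap arithmetic described in the quote, assuming a single investor and a flat 100x multiple. The function name, the dollar figures, and the simple "everything above the cap goes to the nonprofit" rule are illustrative assumptions, not OpenAI's actual terms, which are likely more complicated.

```python
def split_capped_return(investment: float, gross_return: float,
                        cap_multiple: float = 100.0) -> tuple[float, float]:
    """Split a gross return between an investor and the nonprofit.

    Hypothetical simplification: the investor keeps returns up to
    cap_multiple * investment, and any excess goes to the nonprofit.
    """
    if investment < 0 or gross_return < 0:
        raise ValueError("investment and gross_return must be non-negative")
    cap = investment * cap_multiple
    to_investor = min(gross_return, cap)
    to_nonprofit = gross_return - to_investor
    return to_investor, to_nonprofit


# Hypothetical numbers: a $10M investment whose stake eventually returns $5B.
investor_share, nonprofit_share = split_capped_return(10e6, 5e9)
print(f"investor: ${investor_share:,.0f}, nonprofit: ${nonprofit_share:,.0f}")
```

Under these assumed numbers the investor's share stops at $1B (100x of $10M) and the remaining $4B flows to the nonprofit. Below the cap, the nonprofit's share is zero.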

On Hacker News, one of their employees says,

"We believe that if we do create AGI, we'll create orders of magnitude more value than any existing company." [2]

You can read more about this mission in their charter:

"We commit to use any influence we obtain over AGI’s deployment to ensure it is used for the benefit of all, and to avoid enabling uses of AI or AGI that harm humanity or unduly concentrate power.

Our primary fiduciary duty is to humanity. We anticipate needing to marshal substantial resources to fulfill our mission, but will always diligently act to minimize conflicts of interest among our employees and stakeholders that could compromise broad benefit."[3]

This is my [incredibly rough and speculative, based on the above posts] impression of the plan they are proposing:

1. Make AGI

2. Turn AGI into huge profits

3. Give 100x returns to investors

4. Dominate much (most?) of the economy, have all profits go to the OpenAI Nonprofit

5. Use AGI for "the benefit of all"?

I'm really curious what step 5 is supposed to look like exactly. I’m also very curious, of course, what they expect step 4 to look like.

Keep in mind that making AGI is a really big deal. If you're the one company that has an AGI, and if you have a significant lead over anyone else that does, the world is sort of your oyster.[4] If you have a massive lead, you could outwit legal systems, governments, militaries.

I imagine that the 100x return cap means that the excess earnings would go to the nonprofit, which essentially means Sam Altman, senior leadership at OpenAI, and perhaps the board of directors (if legal authorities have any influence post-AGI).

This would be a massive power gain for a small subset of people.

If DeepMind makes AGI I assume the money would go to investors, which would mean it would be distributed to all of the Google shareholders. But if OpenAI makes AGI, the money will go to the leadership of OpenAI, on paper to fulfill the mission of OpenAI.

On the plus side, I expect that this subset is much more like the people reading this post than most other AGI competitors would be (the Chinese government, for example). I know some people at OpenAI, and my hunch is that the people there are very smart and pretty altruistic. It might well be about the best we could expect from a tech company.

And, to be clear, it’s probably incredibly unlikely that OpenAI will actually create AGI, and even more unlikely they will do so with a decisive edge over competitors.

But, I'm sort of surprised so few other people seem at least a bit concerned and curious about the proposal? My impression is that most press outlets haven't thought much at all about what AGI would actually mean, and most companies and governments just assume that OpenAI is dramatically overconfident in themselves.

(Aside on the details of Step 5)

I would love more information on Step 5, but I don’t blame OpenAI for not providing it.

- Any precise description of how a nonprofit would spend “a large portion of the entire economy” would upset a bunch of powerful people.

- Arguably, OpenAI doesn’t really need to figure out Step 5 unless their odds of actually having a decisive AGI advantage seem more plausible.

- I assume it’s really hard to actually put together any reasonable plan now for Step 5.

My guess is that we really could use some great nonprofit and academic work to help outline what a positive and globally acceptable (wouldn’t upset any group too much if they were to understand it) Step 5 would look like. There’s been previous academic work on a “windfall clause”[5] (their 100x cap would basically count), so doing better work on Step 5 seems like a very obvious next step.

[1] https://openai.com/blog/openai-lp/

[2] https://news.ycombinator.com/item?id=19360709

[3] https://openai.com/charter/

[4] This is called a “decisive strategic advantage” in the book Superintelligence by Nick Bostrom.

Also, see:

https://www.cnbc.com/2021/03/17/openais-altman-ai-will-make-wealth-to-pay-all-adults-13500-a-year.html

Artificial intelligence will create so much wealth that every adult in the United States could be paid $13,500 per year from its windfall as soon as 10 years from now.

https://www.reddit.com/r/artificial/comments/m7cpyn/openais_sam_altman_artificial_intelligence_will/

Yea, this is what I was assuming the action/alternative would be. This strategy is very tried-and-true.

Of course! In general I'm happy for people to make quick best-guess evaluations openly - in part, that helps others here correct things when there might be some obvious mistakes. :)

Thanks for the replies! Some quick responses.

First, again, overall, I think we generally agree on most of this stuff.

Perhaps, but I think you gain a ton of info from actually trying to do stuff and iterating. I think prioritization work can sometimes seem more intuitively great than it ends up being, relative to the iteration strategy.

I agree to an extent. But I think there are some very profound prioritization questions that haven't been researched much, and that I don't expect experimentation to give us much insight into over the next few years. I'd still like us to do experimentation (if I were in charge of a $50M fund, I'd start spending it soon, just not as quickly as I would otherwise). For example:

- How promising is it to improve the wisdom/intelligence of EAs vs. others?

- How promising are brain-computer-interfaces vs. rationality training vs. forecasting?

- What is a good strategy to encourage epistemic-helping AI, where philanthropists could have the most impact?

- What kinds of benefits can we generically expect from forecasting/epistemics? How much should we aim for EAs to spend here?

I would love for this to be true! Am open to changing mind based on a compelling analysis.

We might be disagreeing a bit on what the bar for "valuable for EA decision-making" is. I see a lot of forecasting as being like accounting: it rarely leads to a clear and large decision, but it's good to do, and it steers organizations in better directions. I personally rely heavily on prediction markets for key understandings of EA topics, and see that people like Scott Alexander and Zvi seem to as well. I know less about the inner workings of OP, but the fact that they continue to pay for predictions that are specifically on their own questions seems like a sign. All that said, I think that ~95%+ of Manifold and a lot of Metaculus is not useful at all.

I think you might be understating how fungible OpenPhil's efforts are between AI safety (particularly governance team) and forecasting

I'm not sure how much to focus on OP's narrow choices here. I found it surprising that Javier went from governance to forecasting, and that previously it was the (very small) governance team that did forecasting. It's possible that if I evaluated the situation, and had control of the situation, I'd recommend that OP move marginal resources to governance from forecasting. But I'm a lot less interested in this question than I am in, "is forecasting competitive with some EA activities, and how can we do it well?"

Seems unclear what should count as internal research for EA, e.g. are you counting OP worldview diversification team / AI strategy research in general?

Yep, I'd count these.

Quick notes on your QURI section:

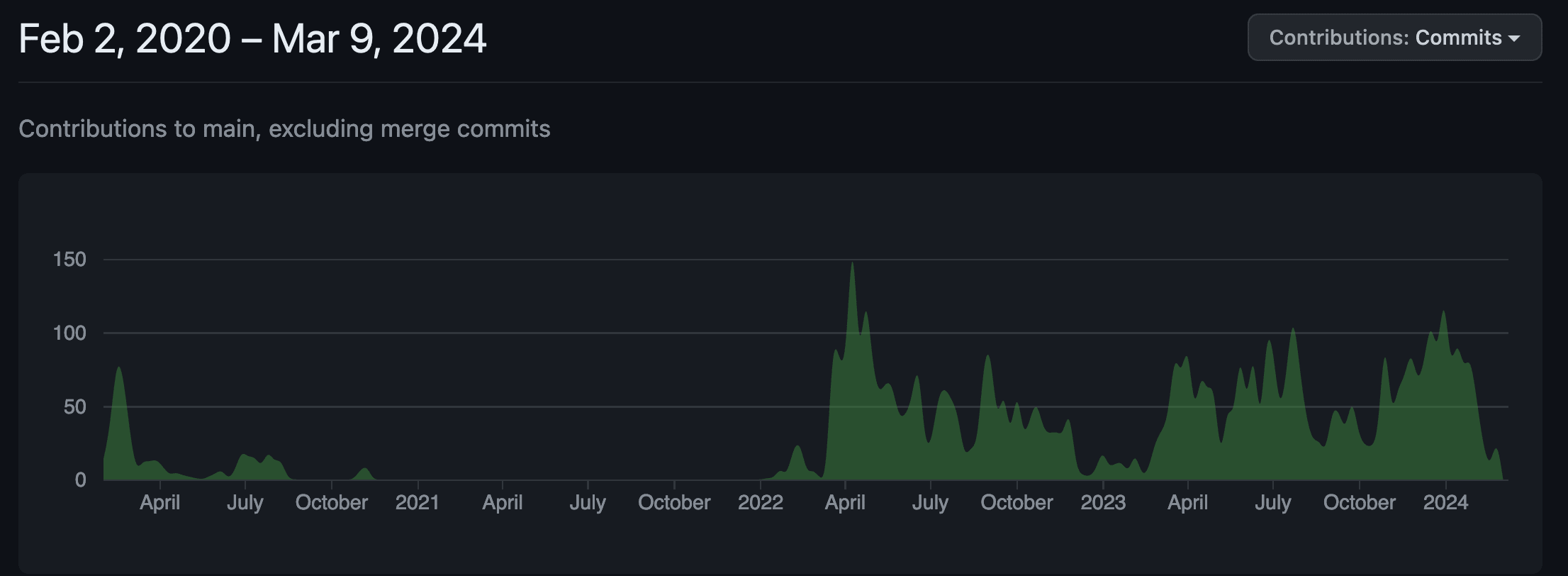

"after four years they don't seem to have a lot of users" -> I think it's more fair to say this has been about 2 years. If you look at the commit history you can see that there was very little development for the first two years of that time.

https://github.com/quantified-uncertainty/squiggle/graphs/contributors

We've spent a lot of time on blog posts / research and other projects, as well as Squiggle Hub. (Though in the last year especially, we've focused on Squiggle.)

Regarding users, I'd agree it's not as many as I would have liked, but I think we do have some. If you look through the Squiggle Tag, you'll see several EA groups who have used Squiggle.

We've been working with a few EA organizations on Squiggle setups that are mostly private.

I like that it's for-profit.

I think for-profits have their space, but I also think that nonprofits and open-source/open organizations have a lot of benefits.

Obvious point that it would be neat for someone to write forecasting questions for each one, if there can be some easy way of doing so.

I feel like I need to reply here, as I'm working in the industry and tend to defend it more.

First, to be clear, I generally agree a lot with Eli on this. But I'm more bullish on epistemic infrastructure than he is.

Here are some quick things I'd flag. I might write a longer post on this issue later.

- I'm similarly unsure about a lot of existing forecasting grants and research. In general, I'm not very excited about most academic-style forecasting research at the moment, and I don't think there are many technical groups at all (maybe ~30 full time equivalents in the field, in organizations that I could see EAs funding, right now?).

- I think that for further funding in this field to be exciting, funders should really work on designing/developing this field to emphasize the very best parts. The current median doesn't seem great to me, but I think the potential has promise, and think that smart funding can really triple-down on the good stuff. I think it's sort of unfair to compare forecasting funding (2024) to AI Safety funding (2024), as the latter has had much more time to become mature. This includes having better ideas for impact and attracting better people. I think that if funders just "funded the median projects", then I'd expect the field to wind up in a similar place to where it is now - but if funders can really optimize, then I'd expect them to be taking a decent-EV risk. (Decent chance of failure, but some chance at us having a much more exciting field in 3-10 years).

- I'd prefer funders focus on "increasing wisdom and intelligence" or "epistemic infrastructure" rather than on "forecasting specifically". I think that the focus on forecasting is over-limiting. That said, I could see an argument for starting from a forecasting angle, as other interventions in "wisdom and intelligence / epistemic infrastructure" are more speculative.

- If I were deploying $50M here, I'd probably start out by heavily prioritizing prioritization work itself - work to better understand this area and what is exciting within it. (I explain more of this in the wisdom/intelligence post above). I generally think that there's been way too little good investigation and prioritization work in this area.

- Like Eli, I'm much more optimistic about "epistemic work to help EAs" than I am "epistemic work to help all of society", at very least in the short-term. Epistemics/forecasting work requires a lot of marginal costs to help any given population, and I believe that "helping N EAs" is often much more impactful than helping N people from most other groups. (This is almost true by definition, for people of any certain background).

- I'd like to flag that I think that Metaculus/Manifold/Samotsvety/etc forecasting has been valuable for EA decision-making. I'd hate to give this up or de-prioritize this sort of strategy.

- I don't particularly trust EA decision-making right now. It's not that I think I could personally do better, but rather that we are making decisions about really big things, and I think we have a lot of reason for humility. When choosing between "trying to better figure out how to think and what to do" vs. "trying to maximize the global intervention that we currently think is highest-EV," I'm nervous about us ignoring the former and going all-in on the latter. That said, some of the crux might be that I'm less certain about our current marginal AI Safety interventions than I think Eli is.

- Personally, around forecasting, I'm most excited about ambitious, software-heavy proposals. I imagine that AI will be a major part of any compelling story here.

- I'd also quickly flag that around AI Safety - I agree that in some ways AI safety is very promising right now. There seems to have been a ton of great talent brought in recently, so there are some excellent people (at very least) to give funding to. I think it's very unfortunate how small the technical AI safety grantmaking team is at OP. Personally I'd hope that this team could quickly get to 5-30 full time equivalents. However, I don't think this needs to come at the expense of (much) forecasting/epistemics grantmaking capacity.

- I think you can think of a lot of "EA epistemic/evaluation/forecasting work" as "internal tools/research for EA". As such, I'd expect that it could make a lot of sense for us to allocate ~5-30% of our resources to it. Maybe 20% of that would go to the "R&D" for this part - perhaps more if you think this part is unusually exciting due to AI advancements. I personally am very interested in this latter part, but recognize it's a fraction of a fraction of the full EA resources.
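To make the "fraction of a fraction" in the last point concrete, here is a quick sketch of the arithmetic, using only the rough figures given there (~5-30% of resources for internal tools/research, and maybe 20% of that for R&D). The function name is hypothetical and the numbers are the loose estimates from the comment, not a budget proposal.

```python
def rd_share_of_total(internal_low: float = 0.05,
                      internal_high: float = 0.30,
                      rd_fraction: float = 0.20) -> tuple[float, float]:
    """Return the (low, high) share of total resources going to R&D.

    internal_low / internal_high: assumed share of all resources spent on
    internal tools and research. rd_fraction: assumed share of that spent on R&D.
    """
    return internal_low * rd_fraction, internal_high * rd_fraction


low, high = rd_share_of_total()
print(f"R&D share of total resources: {low:.0%} to {high:.0%}")
```

With those inputs the R&D piece comes out to roughly 1% to 6% of total resources.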

Reading closer, I would separately note that I think there is some semantic ambiguity in how you and others describe extreme optimizers.

I think that an agent that's "intensely maximizing for a goal that can be put into numbers in order to show that it's optimal" can still be incredibly humble and reserved.

Holden writes, "Can we avoid these pitfalls by “just maximizing correctly?” and basically answers no, but his alternative proposal is to "apply a broad sense of pluralism and moderation to much of what they do".

I think that very arguably, Holden is basically saying, "The utility we'd get from executing [pluralism and moderation] strategy is greater than what we'd get from executing [naive narrow optimization] strategy, so we should pursue the former". To me, this can easily be understood as a form of "utility optimization over utility optimization strategies." So Holden's resulting strategy can still be considered utility optimization, in my opinion.

Good question! As I tried describing in this post (though I really didn't get into the details of specific QURI plans), there are many sorts of utility functions you can use, and many ways of optimizing over them.

Using some of my terminology above, I think a lot of people here think of advanced AIs as applying a highly prescriptive, deliberation-extrapolated utility function, with a great deal of optimization power, particularly in situations where there's very little ability to account for utility-function uncertainty. I agree that this is scary and a bad idea, especially in situations where we have little experience in learning to optimize for any sort of explicit utility function.

But again, utility functions and their optimization can be far more harmless than this.

Very simple examples of (partial) utility functions include:

- For each animal/being, how much should EA donors value one of their life-years?

- For each of [set of EA projects], how valuable did it seem to be?

- For each of [a long list of potential life hacks], what is the expected value?

I believe these lists can satisfy the core tenets of Von Neumann–Morgenstern utility functions, but I don't think that many people here would consider "trying to make these lists using reasonable measures, and generally then taking decisions based on them" to be particularly scary or controversial.
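As a rough sketch of how harmless this can look in practice: a partial utility function can be just a lookup table of values, with options compared by expected utility. Everything below (the outcome names, the numbers, the function names) is a made-up placeholder for illustration, not an actual valuation or QURI tooling.

```python
from typing import Mapping, Sequence

# A lottery is a list of (probability, outcome name) pairs.
Lottery = Sequence[tuple[float, str]]


def expected_utility(lottery: Lottery, utilities: Mapping[str, float]) -> float:
    """Probability-weighted average utility of a lottery's outcomes."""
    total_p = sum(p for p, _ in lottery)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("lottery probabilities must sum to 1")
    return sum(p * utilities[outcome] for p, outcome in lottery)


def rank_options(options: Mapping[str, Lottery],
                 utilities: Mapping[str, float]) -> list[str]:
    """Sort option names from highest to lowest expected utility."""
    return sorted(options,
                  key=lambda name: expected_utility(options[name], utilities),
                  reverse=True)


# Hypothetical placeholder values, in arbitrary units.
utilities = {"project_a_succeeds": 10.0, "project_b_succeeds": 4.0, "nothing": 0.0}
options = {
    "fund_a_risky": [(0.5, "project_a_succeeds"), (0.5, "nothing")],
    "fund_b_safe": [(1.0, "project_b_succeeds")],
}
print(rank_options(options, utilities))
```

Here the table of values plays the role of the list, and the ranking is only an input to a decision rather than something being optimized with a lot of force.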

In the limit, I could imagine people saying, "I think that a prescriptive, deliberation-extrapolated utility function with a great deal of optimization power is scary, so we should never optimize or improve anything that's technically any sort of utility function. Therefore, no more cost-benefit analysis, no more rankings of things, etc." I think this mistake would be highly unfortunate, and attempted to clarify some aspects of it in this post.

Audio/podcast is here:

https://forum.effectivealtruism.org/posts/fsnMDpLHr78XgfWE8/podcast-is-forecasting-a-promising-ea-cause-area