jackva

Even if one is skeptical of the detailed numbers of a cost-effectiveness analysis like this (as I am), I think it is nonetheless pretty clear that this 1M was a great bet:

- When I talked to ITIF in 2020, they were very clear about how transformative the Let's Fund campaign had been for their fundraising.

- Given the amount of innovation-related decision-making that occurred in the run-up to and early days of the Biden administration (what became the IIJA, CHIPS, and the IRA, probably the largest expansion of energy innovation activity in decades), significantly strengthening one of the most respected voices on energy innovation seemed clearly very good.

- ITIF literally co-authored the most detailed blueprint for the Biden energy innovation agenda (Energizing America) and had clear ties into the White House. So, conditional on them being funding-constrained (which they perceived themselves to be, see the first point), it is hard to believe there wasn't a useful way to spend this additional funding.

- Even if one thinks ITIF shifted zero dollars towards innovation (from other areas), just marginally improving a single decision would quickly make this a great investment.

- We have lots of evidence from other areas that this kind of philanthropy works and often has large impacts via legislative subsidy and other mechanisms.

- The 2,000 smallish donors would not have spent their money better otherwise, given how most small climate donors allocate their funds (Big Green, etc.).

I think there's a failure mode where one looks at a cost-effectiveness model like this, rightly thinks "this is really crude and unbelievable!", and then wrongly concludes that this wasn't a great bet. In this case it was, even if that is hard to capture in a credible BOTEC.

I am also only beginning to think about this, but here are some initial thoughts:

- Path dependency from self-amplifying processes: thinking about model generations as forks at which significant changes in trajectory become possible (e.g. crowding in a lot more investment, as happened with ChatGPT/GPT-4, but also, as has also happened, a shifted Overton window). Overall, I think this makes the extremes of the scenario space more likely, because social dynamics such as a strong increase in investment or, on the other side, stricter regulation after a warning shot are self-amplifying. As the sums get larger and the public and policymakers pay much more attention, I think the development process will become a lot more contingent (my sense is that you are already thinking about these things at Convergence).

- Modeling domestic politics and geopolitics: e.g. Biden and Trump AI policies would probably look quite different, as would the outlook for race dynamics (essentially all mentions of artificial intelligence in Project 2025, a Heritage-backed effort to define priorities for an incoming Republican administration, are about scientific dominance and/or competition with China; there is no discussion of safety at all).

- Modeling more direct feedback loops (AI progress → AI politics → AI policy → AI progress), i.e. based on what we know from past examples or theory: what kind of labor displacement would one need to see to expect serious backlash? What kind of warning shots would likely lead to serious regulation? And similar questions.

I agree with you that the 2018 report should not have been used as primary evidence for CATF's cost-effectiveness in WWOTF (and, IIRC, I advised against it and recommended an argument based more on landscaping considerations, with leverage from advocacy and induced technological change). But, as we have discussed before, this comment is quite misleading with regard to FP's work:

- I am not quite sure what is meant by "referencing it", but this comment from 2022, responding to one of your earlier claims, already notes that we (FP) have not used that estimate for anything since at least 2020. This was also discussed elsewhere before 2022.

- As discussed in my comment on the Rethink Priorities report you cite, correcting the mistakes in the REDD+ analysis was one of the first things I did when joining FP in 2019, and we stopped recommending REDD+-based interventions in 2020. Indeed, I have been publicly arguing against treating REDD+ as cost-effective ever since, and the thrust of my comment on the RP report is that they were still too optimistic.

It seems like this number will increase by 50% once FLI (Foundation) fully comes online as a grantmaker (assuming it spends 10% per year of its USD 500M+ gift):

https://www.politico.com/news/2024/03/25/a-665m-crypto-war-chest-roils-ai-safety-fight-00148621
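The 50% figure can be reconstructed as a quick back-of-the-envelope check. This is a minimal sketch, assuming the gift is exactly USD 500M (the comment says 500M+) and a 10%/year payout; $B$ is a hypothetical label for the current annual total referred to as "this number", and its implied value is an inference from the two stated figures, not something stated above:

$$
\text{FLI annual grantmaking} \approx 0.10 \times \text{USD}\,500\text{M} = \text{USD}\,50\text{M per year}
$$

$$
\frac{\text{USD}\,50\text{M}}{B} = 0.5 \;\Rightarrow\; B \approx \text{USD}\,100\text{M per year}
$$

So a 50% increase corresponds to a current baseline of roughly USD 100M per year. With a gift above USD 500M, the same payout rate would yield a proportionally larger increase.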

Interesting, thanks for clarifying!

Just to make sure I fully understand: where does that intuition come from? Is it that high impact has a common structure (e.g. if you think APs are good for animals, you might also think they are good for the climate, because some of the value comes from the evidence that modular, scalable technologies get cheap and gain market share)?

I don't think these examples illustrate that "bewaring of suspicious convergence" is wrong.

For the two examples I can evaluate (the climate ones), there are co-benefits, but there isn't full convergence with regard to optimality.

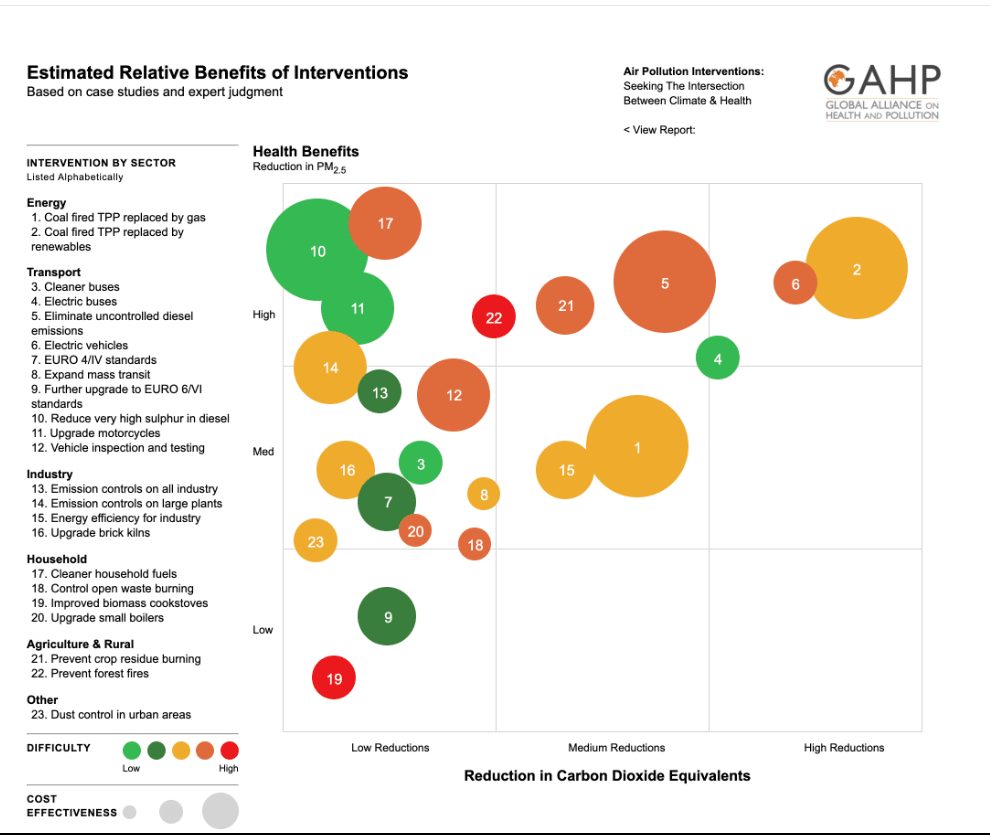

On air pollution, the most effective intervention for climate are not the most effective intervention for air pollution even though decarbonization is good for both.

See e.g. here (where the best intervention for air pollution would be one that has low climate benefits, reducing sulfur in diesel; and I think if that chart were fully scope-sensitive and not made with the intention to showcase co-benefits, the distinctions would probably be larger, e.g. moving from coal to gas is a 15x improvement on air pollution while only a 2x on emissions):

The reason is that different target metrics (carbon emissions, reduced air pollution mortality) are correlated but do not map onto each other perfectly, so optimizing for one does not maximize the other.

The same holds for alternative proteins: a strategy focused on reducing animal suffering would likely (depending on moral weights) prioritize APs for chicken, whereas a climate-focused strategy would clearly prioritize APs for beef.

(There is a separate question of whether alternative proteins are an optimal climate strategy, which I think is not really established.)

I think what these examples show is that we often have interventions with correlated benefits, and that it is worth asking whether one should optimize for both metrics jointly. Given that there isn't really an inherent reason to separate, say, lives lost from climate from lives lost from air pollution, it could make sense to prioritize interventions that do not optimize either dimension alone. But if one decides to optimize for a single dimension, the advice not to expect to accidentally optimize the other dimension as well (i.e. "bewaring of suspicious convergence") remains good (or, more narrowly, is at least not discredited by these examples).

Fascinating stuff!

I am curious how you think about integrating social and political feedback loops into timeline forecasts.

Roughly speaking, if (a) we remain in the paradigm of relatively predictable progress enabled by scaling laws (in terms of the amount of progress, not specific capabilities), (b) we put significant probability on being fairly close to TAI, e.g. within 10 years, and (c) model progress remains clearly observable by the broader public, then it seems that social and political factors might drive a large share of the variance in expected timelines (by affecting your (E2)).

E.g. things like (i) Sam Altman seeking to discontinuously increase chip supply; (ii) how the next jump in capabilities will be perceived, e.g. if GPT-5 is another jump similar in size to the one from GPT-3 to GPT-4, what policy and investment responses will it cause?; and (iii) whether there will be a pre-TAI warning shot that leads to a significant shift in the Overton window and more serious regulation.

It seems to me that these dynamics should account for a larger share of the variance the closer we get, so I am curious how you think about this and whether you will include it in your upcoming work on short timelines.

> but that a priori we should assume diminishing returns in the overall spending, otherwise the government would fund the philanthropic interventions.

I think this is fundamentally the crux: many of the most valuable philanthropic actions in domains with large government spending will likely involve challenging, advising, or informationally lobbying the government in ways that governments cannot self-fund.

Indeed, when additional government funding does not reduce risk (i.e. does not reduce the importance of the problem) but can be influenced, there are probably cases where you should get more excited about philanthropic funding as public funding increases, because there is more public money to leverage.

My sense is that it is not a big priority.

However, I would also caution against the view that expected climate risk has increased over the past few years.

Even if impacts are arriving faster than predicted, most GCR-relevant climate risk probably does not come from developments in the 2020s, but from emissions paths over this century.

And the big story there is that expected cumulative emissions have decreased substantially (see e.g. here).

As far as I know, no one has done the math on this, but I would expect the decreased likelihood of high-warming futures to dominate the somewhat higher-than-anticipated warming at lower levels of emissions.