Dan_Keys

Comments (57)

It looks like the 3 articles are in the appendix of the dissertation, on pages 65 (fear, Study A), 72 (hope, Study B), and 73 (mixed, Study C).

The effect of health insurance on health, such as the old RAND study, the Oregon Medicaid expansion, the India study from a couple years ago, or whatever else is out there.

Robin Hanson likes to cite these studies as showing that more medicine doesn't improve health, but I'm skeptical of the inference from 'not statistically significant' to 'no effect' (I'm in the comments there as "Unnamed"). I would like to see them re-analyzed based on effect size (e.g. a probability distribution or confidence interval for DALY per $).

I'd guess that this is because an x-risk intervention might have on the order of a 1/100,000 chance of averting extinction. So if you run 150k simulations, you might get 0 or 1 or 2 or 3 simulations in which the intervention does anything. Then there's another part of the model for estimating the value of averting extinction, but you're only taking 0 or 1 or 2 or 3 draws that matter from that part of the model because in the vast majority of the 150k simulations that part of the model is just multiplied by zero.

And if the intervention sometimes increases extinction risk instead of reducing it, then the few draws where the intervention matters will include some where its effect is very negative rather than very positive.

One way around this is to factor the model, and do 150k Monte Carlo simulations for the 'value of avoiding extinction' part of the model only. The part of the model that deals with how the intervention affects the probability of extinction could be solved analytically, or solved with a separate set of simulations, and then combined analytically with the simulated distribution of value of avoiding extinction. Or perhaps there's some other way of factoring the model, e.g. factoring out the cases where the intervention has no effect and then running simulations on the effect of the intervention conditional on it having an effect.
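Here is a minimal sketch of the factoring idea, under made-up assumptions: `P_AVERT` is a fixed, known 1/100,000 chance that the intervention averts extinction, and `draw_future_value` is a hypothetical lognormal stand-in for the "value of averting extinction" part of the model. Neither comes from the actual model being discussed.

```python
import random

random.seed(0)

N_SIMS = 150_000
P_AVERT = 1e-5  # assumed chance that the intervention averts extinction


def draw_future_value():
    """Hypothetical stand-in for the 'value of averting extinction' sub-model."""
    return random.lognormvariate(0.0, 1.0)


# Naive approach: the value draw only matters in the rare simulations
# where the intervention actually averts extinction.
naive_hits = 0
naive_total = 0.0
for _ in range(N_SIMS):
    if random.random() < P_AVERT:
        naive_hits += 1
        naive_total += draw_future_value()
naive_estimate = naive_total / N_SIMS

# Factored approach: spend every simulation on the value sub-model,
# then combine analytically with the probability of averting extinction.
value_draws = [draw_future_value() for _ in range(N_SIMS)]
mean_value = sum(value_draws) / N_SIMS
factored_estimate = P_AVERT * mean_value

print("simulations where the intervention mattered:", naive_hits)
print("naive estimate of expected value:   ", naive_estimate)
print("factored estimate of expected value:", factored_estimate)
```

The naive estimate rests on only a handful of value draws (often none), while the factored estimate uses all 150k. In a real model the probability of averting extinction would itself be uncertain, so that part would need its own analytic treatment or its own set of simulations.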

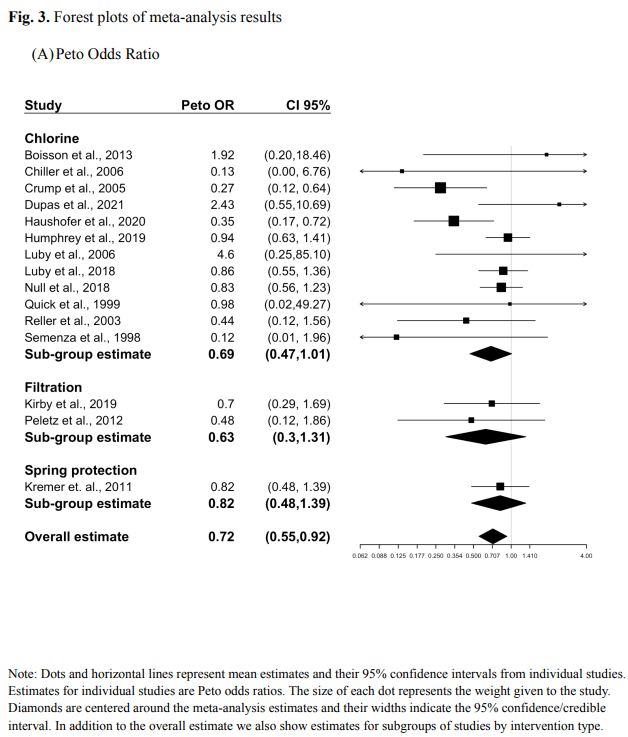

I believe the paper you're referring to is "Water Treatment And Child Mortality: A Meta-Analysis And Cost-effectiveness Analysis" by Kremer, Luby, Maertens, Tan, & Więcek (2023).

The abstract of this version of the paper (which I found online) says:

We estimated a mean cross-study reduction in the odds of all-cause under-5 mortality of about 30% (Peto odds ratio, OR, 0.72; 95% CI 0.55 to 0.92; Bayes OR 0.70; 95% CrI 0.49 to 0.93). The results were qualitatively similar under alternative modeling and data inclusion choices. Taking into account heterogeneity across studies, the expected reduction in a new implementation is 25%.

That's a point estimate of a 25-30% reduction in mortality (across 3 methods of estimating that number), with a confidence/credible interval that has a lower bound of a 7-8% reduction in mortality. So, it's a fairly noisy estimate, due to some combination of the noisiness of individual studies and the heterogeneity across different studies.

That interval for the reduction in mortality just barely overlaps with your number that "Sub-saharan Africa diarrhoea causes 5-10% of child mortality." (The overlap might be larger if that rate was higher than 5-10% in the years & locations where the studies were conducted.)

So it could be that the clean water interventions prevent most children's deaths from diarrhoea and few other deaths, if the mortality reduction is near the bottom of the range that Kremer & colleagues estimate. Or they might prevent a decent chunk of other deaths, but not nearly as many as your Part 4 chart & list suggest, if the true mortality reduction is something like 15%.
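The arithmetic behind those percentages can be sketched as follows. It uses only the odds ratios quoted from the abstract and the 5-10% diarrhoea share quoted from the post, and it treats a reduction in odds as roughly equal to a reduction in mortality, which is an approximation that works when mortality rates are low.

```python
# (label, point estimate, lower bound, upper bound), as quoted from the abstract
estimates = [
    ("Peto OR", 0.72, 0.55, 0.92),
    ("Bayes OR", 0.70, 0.49, 0.93),
]

# Share of child deaths attributed to diarrhoea, in percent (figure quoted from the post)
DIARRHOEA_SHARE = (5, 10)


def pct_reduction(odds_ratio):
    """Percent reduction implied by an odds ratio, rounded to a whole percent."""
    return round((1 - odds_ratio) * 100)


for label, point, low, high in estimates:
    smallest = pct_reduction(high)  # the largest OR gives the smallest reduction
    largest = pct_reduction(low)    # the smallest OR gives the largest reduction
    overlaps = smallest <= DIARRHOEA_SHARE[1]
    print(f"{label}: {pct_reduction(point)}% reduction "
          f"(interval {smallest}% to {largest}%), "
          f"overlaps diarrhoea share: {overlaps}")
```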

There is generally also a possibility of a meta-analysis giving inflated results, due to factors like publication bias affecting which studies they include or other methodological issues in the original studies, which could mean that the true effect is smaller than the lower bound of their interval. I don't know how likely that is in this case.

Here's a more detailed look at their meta-analysis results:

More from Existential Risk Observatory (@XRobservatory) on Twitter:

It was a landmark speech by @RishiSunak: the first real recognition of existential risk by a world leader. But even better are the press questions at the end:

@itvnews: "If the risks are as big as you say, shouldn't we at the very least slow down AI development, at least long enough to understand and control the risks."

@SkyNews: "Is it fair to say we know enough already to call for a moratorium on artificial general intelligence? Would you back a moratorium on AGI?"

Sky again: "Given the harms and the risk you pointed out in this report, and some of those are profound, surely there must be some red lines we can draw at this point. Which ones are yours?"

@TheSun: "You say we shouldn't be losing sleep over this stuff. If not, why not?"

@theipaper: "You haven't really talked about whether your government is actually going to regulate. Will there be an AI Bill or similar in the King's Speech?"

iNews again: "On the details of that regulation: does the government remain committed to this idea of responsible scaling, whereby you sort of test models after they're being developed, or is it time to start thinking about how you intervene to stop the most dangerous models being developed at all?"

Who would have thought one year ago? The public debate about AI xrisk so far outdoes everyone's expectations. Next step: convincing answers.

https://www.youtube.com/watch?v=hSup6mgNhzQ

One way to build risk decay into a model is to assume that the risk is unknown within some range, and to update on survival.

A very simple version of this is to assume an unknown constant per-century extinction risk, and to start with a uniform distribution on the size of that risk. Then the probability of going extinct in the first century is 1/2 (by symmetry), and the probability of going extinct in the second century conditional on surviving the first is smaller than that (since the higher-risk worlds have disproportionately already gone extinct) - with these assumptions it is exactly 1/3. In fact these very simple assumptions match Laplace's law of succession, and so the probability of going extinct in the nth century conditional on surviving the first n-1 is 1/(n+1), and the unconditional probability of surviving at least n centuries is also 1/(n+1).
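These fractions can be checked exactly. The sketch below assumes the same setup (constant per-century risk r, uniform prior on r) and computes the survival probability as the integral of (1-r)^n over [0, 1], by expanding the polynomial and integrating term by term. The function names are my own.

```python
from fractions import Fraction
from math import comb


def survival(n):
    """P(surviving at least n centuries) = integral of (1 - r)^n dr over [0, 1].

    Expands (1 - r)^n with the binomial theorem and integrates each term exactly.
    """
    return sum(Fraction((-1) ** k * comb(n, k), k + 1) for k in range(n + 1))


def conditional_extinction(n):
    """P(extinct in century n | survived the first n - 1 centuries)."""
    return 1 - survival(n) / survival(n - 1)


for n in range(1, 6):
    print(n, conditional_extinction(n), survival(n))
```

Both columns come out as 1/(n+1), so the per-century risk, as estimated by someone updating on survival, decays over time even though each possible world has a constant risk.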

More realistic versions could put more thought into the prior, instead of just picking something that's mathematically convenient.

Why are these expected values finite even in the limit?

It looks like this model is assuming that there is some floor risk level that the risk never drops below, which creates an upper bound for survival probability through n time periods based on exponential decay at that floor risk level. With the time of perils model, there is a large jolt of extinction risk during the time of perils, and then exponential decay of survival probability from there at the rate given by this risk floor.

The Jupyter notebook has this value as r_low=0.0001 per time period. If a time period is a year, that means a 1/10,000 chance of extinction each year after the time of perils is over. This implies a 10^-43 chance of surviving an additional million years after the time of perils is over (and a 10^-434 chance of surviving 10 million years, and a 10^-4343 chance of surviving 100 million years, ...). This basically amounts to assuming that long-lived technologically advanced civilization is impossible. It's why you didn't have to run this model past the 140,000 year mark.
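Those exponents follow directly from compounding the floor risk. A quick check, assuming that one time period is one year and working in log10 to avoid floating-point underflow:

```python
import math

r_low = 0.0001  # per-period floor risk from the notebook, read here as per-year


def log10_survival(years, risk=r_low):
    """log10 of the probability of surviving `years` periods at constant risk."""
    return years * math.log10(1.0 - risk)


for years in (1_000_000, 10_000_000, 100_000_000):
    print(f"{years:>11,} years: survival probability ~ 10^{log10_survival(years):.0f}")
```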

This constant r_low also gives implausible conditional probabilities. For example, one might intuitively think that a technologically advanced civilization that has survived for 2 million years after making it through its time of perils has a pretty decent chance of making it to the 3 million year mark. But this model assumes that it still has a 1/10,000 chance of going extinct next year, and a 10^-43 chance of making it through another million years to the 3 million year mark.

This seems like a problem for any model which doesn't involve decaying risk. If per-time-period risk is 1/n, then the model becomes wildly implausible if you extend it too far beyond n time periods, and it may have subtler problems before that. Perhaps you could (e.g.) build a time of perils model on top of a decaying r_low.

You can get a sense for these sorts of numbers just by looking at a binomial distribution.

For example, suppose that there are n events which each independently have a 45% chance of happening, and a noisy/biased/inaccurate forecaster assigns 55% to each of them.

Then the noisy forecaster will look more accurate than an accurate forecaster (who always says 45%) if >50% of the events happen, and you can use the binomial distribution to see how likely that is to happen for different values of n. For example, according to this binomial calculator, with n=51 there is a 24% chance that at least 26/51 of the p=.45 events will resolve as True, and with n=201 there is an 8% chance (I'm picking odd numbers for n so that there aren't ties).
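If you'd rather not rely on an online calculator, the same tail probabilities can be computed directly. This is a small sketch with a function name of my own choosing, and it assumes n is odd so that there are no ties:

```python
from math import comb


def prob_majority(n, p):
    """P(more than half of n independent events happen), each with probability p."""
    k_min = n // 2 + 1  # smallest count that is a strict majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


for n in (51, 201):
    print(f"n={n}: P(majority of p=0.45 events happen) = {prob_majority(n, 0.45):.3f}")
```

Swapping in other values of `n` and `p` shows how quickly the chance of the noisy forecaster looking better shrinks as the number of questions grows.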

With slightly more complicated math you can look at statistical significance, and you can repeat for values other than trueprob=45% & forecast=55%.

Seems like a question where the answer has to be "it depends".

There are some questions which have a decomposition that helps with estimating them (e.g. Fermi questions like estimating the mass of the Earth), and there are some decompositions that don't help (for one thing, decompositions always stop somewhere, with components that aren't further decomposed).

Research could help add texture to "it depends", sketching out some generalizations about which sorts of decompositions are helpful, but it wouldn't show that decomposition is just generally good or just generally bad or useless.

Does your model without log(GNI per capita) basically just include a proxy for log(GNI per capita), by including other predictor variables that, in combination, are highly predictive of log(GNI per capita)?

With a pool of 1058 potential predictor variables, many of which have some relationship to economic development or material standards of living, it wouldn't be surprising if you could build a model to predict log(GNI per capita) with a very good fit. If that is possible with this pool of variables, and if log(GNI per capita) is linearly predictive of life satisfaction, then if you build a model predicting life satisfaction which can't include log(GNI per capita), it can instead account for that variance by including the variables that predict log(GNI per capita).

And if you transform log(GNI per capita) into a form whose relationship with life satisfaction is sufficiently non-linear, and build a model which can only account for the linear portion of the relationship between that transformed variable and life satisfaction, then within that linear model those proxy variables might do a much better job than transformed log(GNI per capita) of accounting for the variance in life satisfaction.
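To illustrate the basic proxy mechanism (before any non-linear transformation), here is a toy simulation on entirely synthetic data. All the numbers are made up: standardized log(GNI per capita) drives life satisfaction, and 20 hypothetical predictor variables are each a noisy reflection of log(GNI per capita). For simplicity it averages the proxies into one index rather than fitting a multiple regression, so it is a sketch of the idea, not a reproduction of your model.

```python
import random

random.seed(1)

N_COUNTRIES = 2000  # hypothetical sample size, far larger than a real country panel
N_PROXIES = 20      # hypothetical number of development-related predictors


def corr(xs, ys):
    """Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


# Synthetic standardized log(GNI per capita) and life satisfaction driven by it.
log_gni = [random.gauss(0, 1) for _ in range(N_COUNTRIES)]
life_sat = [g + random.gauss(0, 0.5) for g in log_gni]

# Each proxy is log GNI plus independent noise; the index averages the proxies.
proxy_index = [
    sum(g + random.gauss(0, 1) for _ in range(N_PROXIES)) / N_PROXIES
    for g in log_gni
]

# With a single predictor, R^2 is the squared correlation.
r2_gni = corr(log_gni, life_sat) ** 2
r2_proxy = corr(proxy_index, life_sat) ** 2
r2_index_gni = corr(proxy_index, log_gni) ** 2

print(f"R^2, life satisfaction on log GNI:     {r2_gni:.2f}")
print(f"R^2, life satisfaction on proxy index: {r2_proxy:.2f}")
print(f"R^2, proxy index on log GNI:           {r2_index_gni:.2f}")
```

In this setup the proxy index recovers most of the variance that log GNI explains, even though log GNI itself never enters the second model.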