Posts tagged community

Recent discussion

- Little discussion of why or how the affiliation with SBF happened despite many well connected EAs having a low opinion of him

- Little discussion of what led us to ignore the base rate of scamminess in crypto and how we'll avoid that in future

For both of these comments, I want a more explicit sense of what the alternative was. Many well-connected EAs had a low opinion of Sam. Some had a high opinion. Should we have stopped the high-opinion ones from affiliating with him? By what means? Equally, suppose he finds skepticism from (say) Will et al., instead of a warm welcome. He probably still starts the FTX Future Fund, and probably still tries to make a bunch of people regranters. He probably still talks up EA in public. What would it have taken to prevent any of the resultant harms?

Likewise, what does not ignoring the base rate of scamminess in crypto actually look like? Refusing to take any money made through crypto? Should we be shunning e.g. Vitalik Buterin now, or any of the community donors who made money speculating?

For both of these comments, I want a more explicit sense of what the alternative was.

Not a complete answer, but I would have expected communication and advice for FTXFF grantees to have been different. From "many well connected EAs having a low opinion of him," we can imagine that grantees might have been urged to properly set up corporations, not count their chickens before they hatched, properly document everything and assume a lower-trust environment more generally, etc. From "not ignoring the base rate of scamminess in crypto," you'd expect to have seen stronger and more developed contingency planning (remembering that crypto firms can and do collapse in the wake of scams not of their own doing!), more decisions to build organizational reserves rather than immediately ramping up spending, etc.

It's likely that no single answer is "the" sole answer. For instance, it's likely that people believed they could assume that trusted insiders were significantly more ethical than the average person. The insider-trusting bias has bitten any number of organizations and movements (e.g., churches, the Boy Scouts). However, it seems clear from Will's recent podcast that the downsides of being linked to crypto were appreciated at some level. It would take a lot for me to be convinced that all that $$ wasn't a major factor.

Anders Sandberg has written a “final report” released simultaneously with the announcement of FHI’s closure. The abstract and an excerpt follow.

...Normally manifestos are written first, and then hopefully stimulate actors to implement their vision. This document is the reverse

For anyone wondering about the definition of macrostrategy, the EA forum defines it as follows:

...Macrostrategy is the study of how present-day actions may influence the long-term future of humanity.[1]

Macrostrategy as a field of research was pioneered by Nick Bostrom, and it is a core focus area of the Future of Humanity Institute.[2] Some authors distinguish between "foundational" and "applied" global priorities research.[3] On this distinction, macrostrategy may be regarded as closely related to the former. It is concerned with the assessment of

Super broad question, I know.

I've been going down the rabbit hole of critical psychiatry lately and I'm finding it fascinating. Parts of it seem convincing and anecdotally align with my (admittedly extensive) interactions with the psychiatric system. But the evidence in...

G'day Marissa! I'm admittedly not the best-versed in psychiatry specifically, since I've focused more on psychotherapy in the past. My general vibe from reading & research I've done is that (for pharmacotherapy only, can't speak to crisis care):

- Pharmacotherapy is robustly effective in the short-term with minimal deterioration

- It's no more effective than therapy, and is likely worse than therapy in the long-term

- Pharmacology & psychotherapy combined is better than both individually

- People might adapt to it, requiring higher and higher doses

- We don

At Giving What We Can, we're hoping to speak to people who are interested in taking the Giving What We Can Pledge at some point, but haven't yet.

We're conducting 45-minute calls to understand your journey a bit more, and we'll donate $50 to a charity of your choice on our ...

Oh I thought I responded to this already!

I'd like to say that people often have very good reasons for not pledging, that are sometimes visible to us, and other times not - and no one should feel bad for making the right choice for themselves!

I do of course think many more people in our community could take the GWWC Pledge, but I wouldn't want people to do that at the expense of them feeling comfortable with making that commitment.

We should respect other people's journeys, lifestyles and values in our pursuits to do good.

And thanks Lizka for sharing your previous post in this thread too! Appreciate you sharing your perspective!

Marcus Daniell appreciation note

@Marcus Daniell, cofounder of High Impact Athletes, came back from knee surgery and is donating half of his prize money this year. He projects raising $100,000. Through a partnership with Momentum, people can pledge to donate for each point he gets; he has raised $28,000 through this so far. It's cool to see this, and I'm wishing him luck for his final year of professional play!

Summary

- Many views, including even some person-affecting views, endorse the repugnant conclusion (and very repugnant conclusion) when set up as a choice between three options, with a benign addition option.

- Many consequentialist(-ish) views, including many person-affecting

I wouldn't agree on the first point, because making Dasgupta's step 1 the "step 1" is, as far as I can tell, not justified by any basic principles. Ruling out Z first seems more plausible, as Z negatively affects the present people, even quite strongly so compared to A and A+. Ruling out A+ is only motivated by an arbitrary-seeming decision to compare just A+ and Z first, merely because they have the same population size (...so what?). The fact that non-existence is not involved here (a comparison to A) is just a result of that decision, not of there reall...

Nick Bostrom's website now lists him as "Principal Researcher, Macrostrategy Research Initiative."

Doesn't seem like they have a website yet.

I also didn't vote but would be very surprised if that particular paper - a policy proposal for a biosecurity institute in the context of a pandemic - was an example of the sort of thing Oxford would be concerned about affiliating with (I can imagine some academics being more sceptical of some of the FHI's other research topics). Social science faculty academics write papers making public policy recommendations on a routine basis, many of them far more controversial.

The postmortem doc says "several times we made serious missteps in our communications with other parts of the university because we misunderstood how the message would be received" which suggests it might be internal messaging that lost them friends and alienated people. It'd be interesting if there are any specific lessons to be learned, but it might well boil down to academics being rude to each other, and the FHI seems to want to emphasize it was more about academic politics than anything else.

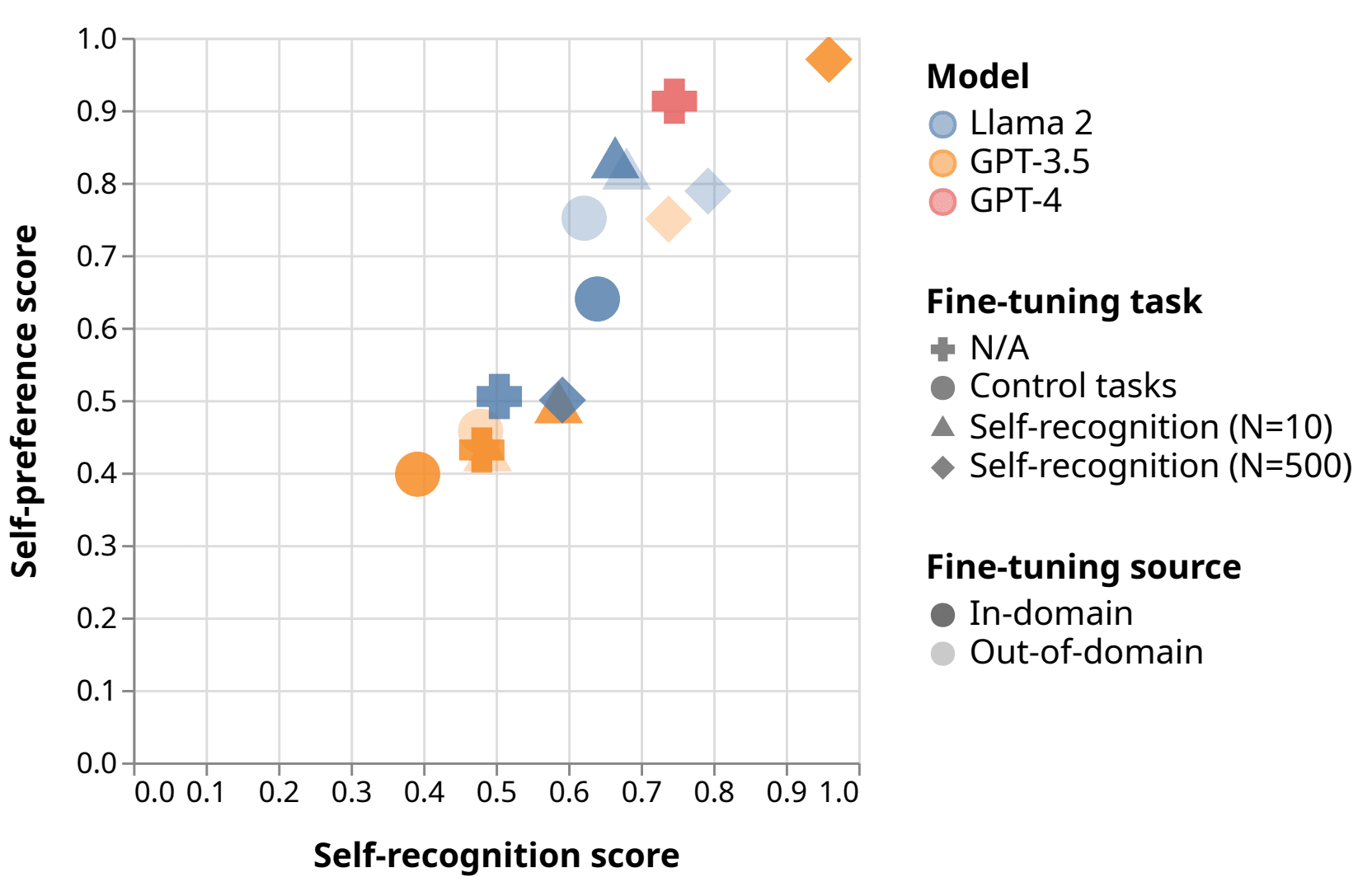

Self-evaluation using LLMs is used in reward modeling, model-based benchmarks like GPTScore and AlpacaEval, self-refinement, and constitutional AI. LLMs have been shown to be accurate at approximating human annotators on some tasks.

But these methods are threatened by self...

Interesting. I think I can tell an intuitive story for why this would be the case, but I'm unsure whether that intuitive story would predict all the details of which models recognize and prefer which other models.

As an intuition pump, consider asking an LLM a subjective multiple-choice question, then taking that answer and asking a second LLM to evaluate it. The evaluation task implicitly asks the evaluator to answer the same question, then cross-check the results. If the two LLMs are instances of the same model, their answers will be more strongly cor...

Open Philanthropy’s “Day in the Life” series showcases the wide-ranging work of our staff, spotlighting individual team members as they navigate a typical workday. We hope these posts provide an inside look into what working at Open Phil is really like. If you’re interested in joining our team, we encourage you to check out our open roles.

Alex Bowles is a Senior Program Associate on Open Philanthropy’s Science and Global Health R&D team[1], and a member of the Global Health and Wellbeing Cause Prioritization team. His responsibilities include estimating the cost-effectiveness of research and development grants in science and global health, identifying and assessing new strategic areas for the team, and investigating new Open Phil cause areas within global health and wellbeing.

Day in the Life

I’m part of the ~70% of Open Phil staff who work...

I am not confident that another FTX-level crisis is less likely to happen, other than that we might all say "oh this feels a bit like FTX".

Changes:

- Board swaps. Yeah maybe good, though many of the people who left were very experienced. And it's not clear whether there are

...