Vasco Grilo

Bio

Participation 4

How others can help me

You can give me feedback here (anonymous or not). You are welcome to answer any of the following:

- Do you have any thoughts on the value (or lack thereof) of my posts?

- Do you have any ideas for posts you think I would like to write?

- Are there any opportunities you think would be a good fit for me which are either not listed on 80,000 Hours' job board, or are listed there but which you guess I might be underrating?

How I can help others

Feel free to check my posts, and see if we can collaborate to contribute to a better world. I am open to part-time volunteering, and part-time or full-time paid work. For paid work, I typically ask for 20 $/h, which is roughly equal to 2 times the global real GDP per capita.

Posts 109

Comments 1287

Topic contributions 25

Thanks for sharing, Garrison. I have read Yoshua's How Rogue AIs may Arise and FAQ on Catastrophic AI Risks, but I still think the annual extinction risk over the next 10 years is less than 10^-6. Do you know Yoshua's thoughts on the possibility of AI risk being quite low due to the continuity of potential harms? If deaths in an AI catastrophe follow a Pareto distribution (power law), which is a common assumption for tail risk, there is less than a 10 % chance of such a catastrophe becoming 10 times as deadly, and this severely limits the probability of extreme outcomes. I also believe the tail distribution would decay faster than that of a Pareto distribution for very severe catastrophes, which makes my point stronger.
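The Pareto claim can be checked with a short sketch. For a Pareto (power-law) tail with index α, the chance that a catastrophe which is at least x deadly turns out at least k·x deadly is k^(-α), whatever x is. So, for k = 10, it is below 10 % whenever α > 1, and each further factor of 10 multiplies the probability by another 10^(-α). The α values below are illustrative assumptions, not estimates, and the function names are hypothetical.

```python
def pareto_exceedance(k: float, alpha: float) -> float:
    """P(X > k*x | X > x) for a Pareto tail with index alpha; independent of x."""
    if k < 1 or alpha <= 0:
        raise ValueError("need k >= 1 and alpha > 0")
    return k ** (-alpha)


def prob_orders_of_magnitude_worse(n: int, alpha: float) -> float:
    # Being 10**n times as deadly compounds n factors of 10: 10 ** (-alpha * n)
    return pareto_exceedance(10.0 ** n, alpha)


# Illustrative tail indices (assumptions, not estimates)
for alpha in (1.0, 1.5, 2.0):
    print(
        f"alpha = {alpha}: "
        f"10x as deadly {pareto_exceedance(10, alpha):.3g}, "
        f"1000x as deadly {prob_orders_of_magnitude_worse(3, alpha):.3g}"
    )
```

A tail decaying faster than a power law for very severe catastrophes would make the 1000x figures smaller still.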

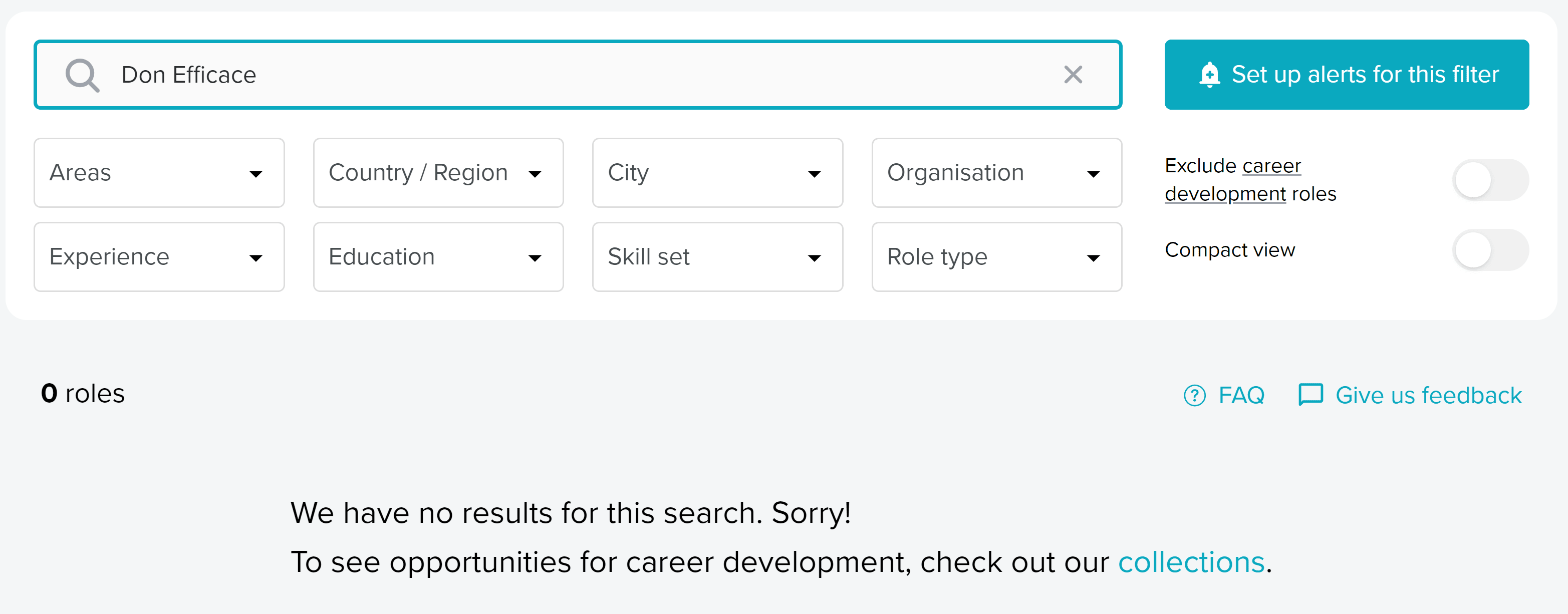

Thanks for sharing. It looks like this position is not on 80,000 Hours' job board.

I have asked them whether they had considered adding your position to the board (by clicking on "Give us feedback"), but you may want to reach out to them too (in case you have not done so yet).

@Don Efficace, they replied that your position will go up this week.

Thanks, Michael! For readers' reference, I have also estimated the scale of the welfare of various animal populations[1]:

| Population | Intensity of the mean experience as a fraction of the median welfare range | Median welfare range | Intensity of the mean experience as a fraction of that of humans | Population size | Absolute value of ETHU as a fraction of that of humans |
|---|---|---|---|---|---|
| Farmed insects raised for food and feed | 12.9 μ | 2.00 m | 3.87 m | 8.65E10 | 0.0423 |
| Farmed pigs | 12.9 μ | 0.515 | 1.00 | 9.86E8 | 0.124 |
| Farmed crayfish, crabs and lobsters | 12.9 μ | 0.0305 | 0.0590 | 2.21E10 | 0.165 |
| Humans | 6.67 μ | 1.00 | 1.00 | 7.91E9 | 1.00 |
| Farmed shrimps and prawns | 12.9 μ | 0.0310 | 0.0599 | 1.39E11 | 1.05 |
| Farmed fish | 12.9 μ | 0.0560 | 0.108 | 1.11E11 | 1.52 |
| Farmed chickens | 12.9 μ | 0.332 | 0.642 | 2.14E10 | 1.74 |
| Farmed animals analysed here | 12.9 μ | 0.0362 | 0.0700 | 1.36E12 | 4.64 |
| Wild mammals | 6.67 μ | 0.515 | 0.515 | 6.75E11 | 43.9 |
| Wild fish | 6.67 μ | 0.0560 | 0.0560 | 6.20E14 | 4.39 k |
| Wild terrestrial arthropods | 6.67 μ | 2.00 m | 2.00 m | 1.00E18 | 253 k |
| Wild marine arthropods | 6.67 μ | 2.00 m | 2.00 m | 1.00E20 | 25.3 M |
| Nematodes | 6.67 μ | 0.200 m | 0.200 m | 1.00E21 | 25.3 M |
| Wild animals analysed here | 6.67 μ | 0.365 m | 0.365 m | 1.10E21 | 50.8 M |

I think it makes sense that Open Philanthropy prioritises chickens, fish and shrimp, as these are the 3 populations of farmed animals with the most suffering according to the above (1.74, 1.52 and 1.05 times as much suffering as the happiness of all humans).
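A minimal sketch of how the last 2 columns of the table follow from the first 3 for individual populations, assuming (as the table's numbers imply) that both ratios are taken relative to the human row: intensity relative to humans = intensity fraction × welfare range / (6.67 μ × 1.00), and the absolute value of ETHU (expected total hedonistic utility) relative to humans = that ratio × population / 7.91E9. Only a few rows are included, with values copied from the table; the function names are hypothetical.

```python
HUMAN_INTENSITY = 6.67e-6    # mean human experience as a fraction of the human welfare range
HUMAN_WELFARE_RANGE = 1.0
HUMAN_POPULATION = 7.91e9


def intensity_vs_humans(intensity_fraction: float, welfare_range: float) -> float:
    # Intensity of the mean experience relative to that of humans
    return intensity_fraction * welfare_range / (HUMAN_INTENSITY * HUMAN_WELFARE_RANGE)


def ethu_vs_humans(intensity_fraction: float, welfare_range: float, population: float) -> float:
    # Absolute value of ETHU relative to that of all humans
    return intensity_vs_humans(intensity_fraction, welfare_range) * population / HUMAN_POPULATION


# (intensity fraction, median welfare range, population size), copied from the table
rows = {
    "Farmed chickens": (12.9e-6, 0.332, 2.14e10),
    "Farmed fish": (12.9e-6, 0.0560, 1.11e11),
    "Farmed shrimps and prawns": (12.9e-6, 0.0310, 1.39e11),
    "Wild mammals": (6.67e-6, 0.515, 6.75e11),
}

for name, (intensity, welfare_range, population) in rows.items():
    print(
        f"{name}: intensity {intensity_vs_humans(intensity, welfare_range):.3g}, "
        f"|ETHU| {ethu_vs_humans(intensity, welfare_range, population):.3g}"
    )
```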

[1] ETHU in the header of the last column means expected total hedonistic utility.

> nuclear security is getting almost no funding from the community

For reference, I collected some data on this:

Supposedly cause-neutral grantmakers aligned with effective altruism have influenced 15.3 M$[17] (= 0.03 + 5*10^-4 + 2.70 + 3.56 + 0.0488 + 0.087 + 5.98 + 2.88) towards efforts aiming to decrease nuclear risk[18]:

- ACX Grants supported Morgan Rivers via a grant of 30 k$ in 2021 “to help ALLFED improve modeling of food security during global catastrophes” (the public write-up is 1 paragraph).
- Founders Pledge’s Global Catastrophic Risks Fund advised on 2.70 M$ (= 0.2 + 2.50), supporting:
  - The Pacific Forum via a recommended grant of 200 k$ in 2023 (1 sentence).
  - The Carnegie Endowment for International Peace via a recommended grant of 2.50 M$ in 2024 (1 sentence).
- The Future of Life Institute (FLI) supported nuclear war research via 10 grants in 2022 totalling 3.56 M$ (1 paragraph each), of which 1 M$ was to support Alan Robock’s and Brian Toon’s research.
- The Long-Term Future Fund (LTFF) directed 48.8 k$ (= 3.6 + 5 + 40.2), supporting:
  - ALLFED via a grant of 3.6 k$ in 2021 for “researching plans to allow humanity to meet nutritional needs after a nuclear war that limits conventional agriculture” (1 sentence).
  - Isabel Johnson via an “exploratory grant” of 5 k$ in 2022 for “preliminary research into the civilizational dangers of a contemporary nuclear strike” (1 sentence).
  - Will Aldred via a grant of 40.2 k$ in 2022 to “1) Carry out independent research into risks from nuclear weapons, [and] 2) Upskill in AI strategy” (1 sentence).
- Longview Philanthropy’s Emerging Challenges Fund directed 87 k$ (= 15 + 52 + 20), supporting:
  - The Council on Strategic Risks via a grant of 15 k$ in 2022 (2 paragraphs).
  - The Carnegie Endowment for International Peace via a grant of 52 k$ in 2023 (3 paragraphs).
  - Decision Research via a grant of 20 k$ in 2023 (6 paragraphs).
- Longview Philanthropy’s Nuclear Weapons Policy Fund has supported the Council on Strategic Risks, the Nuclear Information Project, and the Carnegie Endowment for International Peace (1 paragraph each).
  - For transparency, I encourage Longview to share on their website at least the date and size of the grants this fund made[19].
- Open Philanthropy has supported Alan Robock’s and Brian Toon’s research on nuclear winter via grants totalling 5.98 M$ (= 2.98 + 3): 2.98 M$ in 2017 and 3 M$ in 2020[20] (2 paragraphs each).
- The Survival and Flourishing Fund (SFF) has supported ALLFED via grants totalling 2.88 M$ (= 0.01 + 0.13 + 0.175 + 0.979 + 0.427 + 1.16): 10 k$ and 130 k$ in 2019, 175 k$ and 979 k$ in 2021, 427 k$ in 2022, and 1.16 M$ in 2023 (1 sentence each).
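The total and the per-funder subtotals above can be checked with a short sketch. The amounts are copied from the text and converted to M$; the 5*10^-4 M$ term is taken from the total as written, and the dictionary keys are just labels.

```python
# Terms of the 15.3 M$ total, in M$, in the order they appear in the text
total_terms_musd = [0.03, 5e-4, 2.70, 3.56, 0.0488, 0.087, 5.98, 2.88]

# Per-funder breakdowns given in the bullets: (grant amounts in M$, stated subtotal in M$)
breakdowns_musd = {
    "Founders Pledge GCR Fund": ([0.2, 2.50], 2.70),
    "LTFF": ([3.6e-3, 5e-3, 40.2e-3], 0.0488),
    "Longview Emerging Challenges Fund": ([15e-3, 52e-3, 20e-3], 0.087),
    "Open Philanthropy": ([2.98, 3.0], 5.98),
    "SFF": ([0.01, 0.13, 0.175, 0.979, 0.427, 1.16], 2.88),
}

for funder, (grants, stated) in breakdowns_musd.items():
    print(f"{funder}: {sum(grants):.4g} M$ (stated: {stated} M$)")

print(f"Total: {sum(total_terms_musd):.3g} M$")
```

The SFF grants add up to 2.881 M$, which the text rounds to 2.88 M$.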

Thanks for the context, Toby!

> For what it's worth, my working assumption for many risks (e.g. nuclear, supervolcanic eruption) was that their contribution to existential risk via 'direct' extinction was of a similar level to their contribution via civilisation collapse

I had assumed you agreed that the direct extinction risk from nuclear war and volcanoes was astronomically low, so I am very surprised by the above. I think it implies your annual extinction risk from:

- Nuclear war is around 5*10^-6 (= 0.5*10^-3/100), which is 843 k (= 5*10^-6/(5.93*10^-12)) times mine.

- Volcanoes is around 5*10^-7 (= 0.5*10^-4/100), which is 14.8 M (= 5*10^-7/(3.38*10^-14)) times mine.
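The arithmetic in the 2 bullets above can be reproduced as follows. It spreads the per-century risk evenly over 100 years, which is how the bullets get the annual figures and is a close approximation for risks this small. The per-century values and my estimates are copied from the text; the names are hypothetical.

```python
def annual_from_century(risk_per_century: float, years: int = 100) -> float:
    # Linear approximation used in the text; for small risks it is very close to
    # the exact 1 - (1 - risk_per_century) ** (1 / years)
    return risk_per_century / years


toby_nuclear = annual_from_century(0.5e-3)  # half of the nuclear risk, via direct extinction
toby_volcano = annual_from_century(0.5e-4)  # half of the volcanic risk, via direct extinction
my_nuclear = 5.93e-12  # my annual extinction risk from nuclear war (from the text)
my_volcano = 3.38e-14  # my annual extinction risk from volcanoes (from the text)

print(f"Nuclear war: {toby_nuclear:.1e}/year, {toby_nuclear / my_nuclear:.3g} times mine")
print(f"Volcanoes: {toby_volcano:.1e}/year, {toby_volcano / my_volcano:.3g} times mine")
```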

I would be curious to know your thoughts on my estimates. Feel free to follow up in the comments on the respective posts (which I had also emailed to you around 3 and 2 months ago). In general, I think it would be great if you could explain how you got all the existential risk estimates shared in The Precipice (e.g. by decomposing them into various factors, as I did in my analyses, if that is how you got them).

Your comment above seems to imply that direct extinction would be an existential risk, but I actually think human extinction would be very unlikely to be an existential catastrophe if it was caused by nuclear war or volcanoes. For example, I think there would only be a 0.0513 % (= e^(-10^9/(132*10^6))) chance of a repetition of the last mass extinction 66 M years ago, the Cretaceous–Paleogene extinction event, being existential. I got my estimate assuming:

- An exponential distribution with a mean of 132 M years (= 66*10^6*2) represents the time between i) human extinction in such a catastrophe and ii) the evolution of an intelligent sentient species after such a catastrophe. I supposed this on the basis that:
  - An exponential distribution with a mean of 66 M years describes the time between:
    - 2 consecutive such catastrophes.
    - i) and ii) if there are no such catastrophes.
  - Given the above, ii) and the next such catastrophe are equally likely to happen first. So the probability of an intelligent sentient species evolving after human extinction in such a catastrophe, before another one, is 50 % (= 1/2).
  - Consequently, one should expect the time between i) and ii) to be 2 times (= 1/0.50) as long as it would be if there were no such catastrophes.
- An intelligent sentient species has 1 billion years to evolve before the Earth becomes uninhabitable.
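The 0.0513 % figure follows from the survival function of an exponential distribution, P(T > t) = e^(-t/mean): it is the chance that no intelligent sentient species evolves within the 1 billion years available. A minimal sketch under the assumptions listed above (the constant names are hypothetical):

```python
import math

CATASTROPHE_MEAN_YEARS = 66e6  # mean time between such mass extinctions (exponential)
P_EVOLVE_BEFORE_NEXT = 0.5     # both events have the same 66 M year mean
# Mean time between human extinction and a new intelligent sentient species: 132 M years
MEAN_TIME_TO_NEW_SPECIES = CATASTROPHE_MEAN_YEARS / P_EVOLVE_BEFORE_NEXT
HABITABLE_WINDOW_YEARS = 1e9   # time left before the Earth becomes uninhabitable

# Chance that no intelligent sentient species evolves within the window,
# i.e. that the extinction turns out to be existential
p_existential = math.exp(-HABITABLE_WINDOW_YEARS / MEAN_TIME_TO_NEW_SPECIES)
print(f"{p_existential:.4%}")
```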

Thanks for clarifying, Ben!

> I'd add that if there's almost no EA-inspired funding in a space, there's likely to be some promising gaps by someone applying that mindset.

Agreed, although my understanding is that you think the gains are often exaggerated. You said:

> Overall, my guess is that, in an at least somewhat data-rich area, using data to identify the best interventions can perhaps boost your impact in the area by 3–10 times compared to picking randomly, depending on the quality of your data.

Again, if the gain is just a factor of 3 to 10, then it makes perfect sense to me to focus on cost-effectiveness analyses rather than on funding levels.

> In general, it's a useful approximation to think of neglectedness as a single number, but the ultimate goal is to find good grants, and to do that it's also useful to break down neglectedness into different types of resources, and consider related heuristics (e.g. that there was a recent drop).

Agreed. However, deciding how much weight to give a relative drop in one source of funding (e.g. philanthropic funding) requires understanding its cost-effectiveness relative to other sources of funding. In this case, it seems more helpful to assess the cost-effectiveness of, for example, doubling philanthropic spending on nuclear risk reduction, instead of just quantifying that spending.

> Causes vs. interventions more broadly is a big topic. The very short version is that I agree doing cost-effectiveness estimates of specific interventions is a useful input into cause selection. However, I also think the INT framework is very useful. One reason is it seems more robust.

The product of the 3 factors in the importance, neglectedness and tractability framework is the cost-effectiveness of the area, so I think the increased robustness comes from considering many interventions. However, one could also (qualitatively or quantitatively) aggregate the cost-effectiveness of multiple (decently scalable) representative promising interventions to estimate the overall marginal cost-effectiveness (promisingness) of the area.
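The claim that the product of the 3 factors is the cost-effectiveness of the area can be made concrete if each factor is defined so that the units cancel. This is one common way of defining them: importance as good done per % of the problem solved, tractability as % solved per % increase in resources, and neglectedness as % increase in resources per extra $. The aggregation over representative interventions is sketched as a funding-weighted mean of their marginal cost-effectiveness. That weighting, the hypothetical `Intervention` type and all numbers are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Intervention:
    # Hypothetical representative intervention within an area
    name: str
    cost_effectiveness: float  # marginal good done per $ (arbitrary units)
    room_for_funding: float    # $ it could absorb at roughly that cost-effectiveness


def int_product(importance: float, tractability: float, neglectedness: float) -> float:
    """(good / % solved) * (% solved / % more resources) * (% more resources / $) = good / $."""
    return importance * tractability * neglectedness


def area_cost_effectiveness(interventions: list[Intervention]) -> float:
    """Funding-weighted mean marginal cost-effectiveness of representative interventions
    (one possible aggregation; the weighting is an assumption)."""
    total_funding = sum(i.room_for_funding for i in interventions)
    return sum(i.cost_effectiveness * i.room_for_funding for i in interventions) / total_funding


# Hypothetical numbers, for illustration only
area = [Intervention("A", 10.0, 1e6), Intervention("B", 2.0, 3e6)]
print(area_cost_effectiveness(area))  # 4.0
```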

> Another reason is that in many practical planning situations that involve accumulating expertise over years (e.g. choosing a career, building a large grantmaking programme) it seems better to focus on a broad cluster of related interventions.

I agree, but I did not mean to argue for deemphasising the concept of cause area. I just think the promisingness of areas is better assessed by doing cost-effectiveness analyses of representative (decently scalable) promising interventions.

> E.g. you could do a cost-effectiveness estimate of corporate campaigns and determine ending factory farming is most cost-effective.

To clarify, the estimate for the cost-effectiveness of corporate campaigns I shared above refers to marginal cost-effectiveness, so it does not directly apply to ending factory farming (which is far from a marginal intervention).

> But once you've spent 5 years building career capital in factory farming, the available interventions or your calculations about them will likely be very different.

My guess would be that the acquired career capital would still be quite useful in the context of the new top interventions, especially considering that welfare reforms have been top interventions for more than 5 years[1]. In addition, if Open Philanthropy is managing their funds well, (all things considered) marginal cost-effectiveness should not vary much across time. If the top interventions in 5 years were expected to be less cost-effective than the current top interventions, it would make sense to direct funds from the worst/later to the best/earlier years until marginal cost-effectiveness is equalised (in the same way that it makes sense to direct funds from the worst to best interventions in any given year).
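The idea of directing funds from worse (later) to better (earlier) years until marginal cost-effectiveness is equalised can be sketched as a greedy allocation: each chunk of money goes to whichever year's next $ does the most good. The diminishing-returns curve, the 2 years and all numbers are hypothetical, and the sketch ignores discounting and investment returns.

```python
import heapq


def marginal_ce(base: float, spent: float, scale: float) -> float:
    # Hypothetical diminishing returns: marginal good per $ falls as a year's spending grows
    return base / (1.0 + spent / scale)


def allocate(budget: float, chunk: float, years: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Give each chunk of money to the year whose next $ does the most good.

    years maps a label to (base, scale) for marginal_ce. Repeating this greedy step
    drives the years' marginal cost-effectiveness towards a common value.
    """
    spent = {year: 0.0 for year in years}
    # heapq is a min-heap, so store negated marginal cost-effectiveness
    heap = [(-marginal_ce(base, 0.0, scale), year) for year, (base, scale) in years.items()]
    heapq.heapify(heap)
    for _ in range(int(budget // chunk)):
        _, year = heapq.heappop(heap)
        spent[year] += chunk
        base, scale = years[year]
        heapq.heappush(heap, (-marginal_ce(base, spent[year], scale), year))
    return spent


# Hypothetical: interventions available now start out more cost-effective than those in 5 years
years = {"now": (10.0, 100.0), "in 5 years": (6.0, 100.0)}
spent = allocate(1000.0, 1.0, years)
for year, (base, scale) in years.items():
    print(year, spent[year], round(marginal_ce(base, spent[year], scale), 3))
```

With these made-up curves, more money goes to the earlier year, but not all of it: spending stops shifting once both years' marginal cost-effectiveness is about the same.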

Thanks for the comment, Zach. I upvoted it.

I fully endorse expected total hedonistic utilitarianism[1], but this does not imply any reduction in extinction risk is way more valuable than a reduction in nearterm suffering. I guess you would make this case with a comparison like the following:

- If extinction risk is reduced in absolute terms by 10^-10, and the value of the future is 10^50 lives, then one would save 10^40 (= 10^(50 - 10)) lives.

- However, animal welfare or global health and development interventions have an astronomically low impact compared with the above.

I do not think the above comparison makes sense because it relies on 2 different methodologies. As they are constructed, the 2nd caps the impact of life-saving interventions at the global population of around 10^10, so it is bound to result in a lower impact than the 1st even if both describe the exact same intervention. Interventions which aim to decrease the probability of a given population loss[2] achieve this via saving lives, so one could weight lives saved at lower population sizes more heavily, but still estimate their cost-effectiveness in terms of lives saved per $. I tried this, and, with my assumptions, interventions to save lives in normal times look more cost-effective than ones which save lives in severe catastrophes.
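The 1st methodology in the comparison is an expected-value product over the whole future, while the 2nd is bounded by the number of people alive. A minimal sketch of that contrast, using the round numbers from the text (the function and constant names are hypothetical):

```python
import math


def lives_saved_via_extinction_risk(risk_reduction: float, future_lives: float) -> float:
    # 1st methodology: absolute reduction in extinction risk times the value of the future
    return risk_reduction * future_lives


GLOBAL_POPULATION = 1e10  # order of magnitude used in the text

ev_1st = lives_saved_via_extinction_risk(1e-10, 1e50)
cap_2nd = GLOBAL_POPULATION  # 2nd methodology: lives saved cannot exceed the current population

print(f"1st methodology: 10^{math.log10(ev_1st):.0f} lives")
print(f"2nd methodology: at most 10^{math.log10(cap_2nd):.0f} lives")
```

The gap of 30 orders of magnitude comes from the structure of the 2 methods, not from any difference in the interventions being assessed.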

Less theoretically, decreasing measurable (nearterm) suffering (e.g. as assessed in standard cost-benefit analyses with estimates in DALY/$) has been a great heuristic to improve the welfare of the beings whose welfare is being considered both nearterm and longterm[3]. So I think it makes sense to a priori expect interventions which very cost-effectively decrease measurable suffering to be great from a longtermist perspective too.

[1] In principle, I am very happy to say that a 10^-100 chance of saving 10^100 lives is exactly as valuable as a 100 % chance of saving 1 life.

[2] For example, decreasing the probability of population dropping below 1 k for extinction, or dropping below 1 billion for global catastrophic risk.

[3] Animal suffering has been increasing, but animals have been neglected. There are efforts to account for animals in cost-benefit analyses.

Nice post, Keyvan!

I have Fermi estimated the scale of the suffering of the various populations of farmed animals, assuming the intensity of suffering relative to the welfare range matches that of broilers in reformed scenarios for all populations. In agreement with Open Philanthropy's prioritisation, I calculated that farmed chickens and fish are the 2 populations with the most suffering, with a scale equal to 1.74 and 1.52 times that of the happiness of all humans (which highlights the meat eater problem). Farmed shrimps and prawns came 3rd, with suffering whose scale is 1.05 times that of the happiness of all humans.

Thanks for the update, Ben!

I think nuclear war becoming an existential catastrophe is an extremely remote possibility: